Kubernetes: an amazingly affordable solution for personal projects

Hello colleagues!

In January, we finally have a long-awaited book on Kubernetes. Speech on the "Mastering Kubernetes 2nd Edition" Gigi Sayfana:

We did not dare to publish a book on Kubernetes about a year ago, since at that time the technology definitely looked like a dreadnought for supercorporations. However, the situation is changing, in confirmation of what we suggest reading a great article by Caleb Doxsey, who, by the way, wrote a book about the Go language. Mr. Doxy's arguments are very interesting, and we hope that after reading them, you really want to try Kubernetes in practice.

A few months earlier this year I spent on in-depth study of Kubernetes: I needed it for one project at work. Kubernetes is a complex technology for infrastructure management, “everything is included, even batteries”. Kubernetes solves a number of problems that you are destined to face when developing for large enterprises. However, there is a widespread belief that Kubernetes is an overly complicated technology that is relevant only when driving a large cluster of machines. Allegedly, the operational load when working with Kubernetes is so great that using it for small infrastructures, where the machines are not counted in dozens, this is shooting from a cannon on sparrows.

Beg not agree with this. Kubernetes is good for small projects, and today you can already afford your own Kubernetes cluster for less than $ 5 a month.

')

Kubernetes Defense Word

Below, I will show you how to set up your own Kubernetes cluster, but first I will try to explain why Kubernetes should be used in small projects:

Kubernetes is thorough

Yes, at first glance Kubernetes seems to be a somewhat redundant solution. It seems, is not it easier to take and acquire a virtual machine and not configure your own application as a service, why not? Having chosen this path, you will have to decide on some decisions, in particular:

Kubernetes solves all these problems. Naturally, all of them can be solved in other ways, among which there are better options Kubernetes; however, how much better is it not to think about all this at all and concentrate on developing the application.

Kubernetes is reliable

A single server is bound to collapse. Yes, it happens rarely, maybe once a year, but after such an event a real headache begins: how to get everything back into working condition. This is especially true if you have manually configured the entire configuration. Remember all the commands that ran last time? Do you even remember what worked on the server? I recall one quote from the bashorga:

Kubernetes uses a descriptive format, so you always know what things were supposed to run when and where; In addition, all components of the system you have deployed are much clearer. Moreover, in the control plane, the failure of nodes is handled carefully, and the hearths are automatically redistributed. When working with a stateless service, for example, with a web application, you can probably forget about failures altogether.

Kubernetes is as easy to learn as alternatives are.

Kubernetes does not follow the Unix model. It does not fit into the ecosystem of tools. He is not one of those decisions that "do only one thing and do it well." Kubernetes is a comprehensive solution for many problems, it can replace a variety of techniques and tools that developers have already got used to.

Kubernetes has its own terminology, its own toolkit, its own paradigm of dealing with servers, which differs significantly from the traditional unix approach. When you navigate these systems, many of the characteristic features of Kubernetes may seem random and overcomplicated, perhaps even cruel. I suppose there are compelling reasons why such complexity arose, but here I don’t say that Kubernetes is simple and elementary to understand; I say that Kubernetes knowledge is enough to create and support any infrastructure.

It is impossible to say that any sysadmin has sufficient unix background. For example, after graduating from college, I worked for 5 years in the Windows ecosystem. I can say that my first job at a startup, where it took to deal with linux, required a difficult transformation. I did not know the memory by command, I was not used to using the command line for almost all occasions. It took me some time to learn how to work with the new platform (although, by that time I already had some programming experience), but I clearly remember how much I had suffered then.

With Kubernetes, you can start all the work literally from scratch. In Kubernetes, you can easily allocate services, even without an SSH connection to the server. You do not have to learn systemd; it is not necessary to understand the launch levels or to know which command was used:

Is this really:

Much harder this one?

And this is a relatively successful case. If you manage the infrastructure 100% remotely, you will not be able to provide server support manually. For this you need some tool: ansible, salt, chef, puppet, etc. Naturally, to master Kubernetes and work effectively with it, you need to learn a lot, but this is no more difficult than dealing with alternatives.

Kubernetes open source

In an era of huge popularity of serverless technologies, Kubernetes is notable for its independence from specific suppliers. There are at least 3 popular and easy-to-manage Kubernetes providers (Google, Amazon, Microsoft) that will not disappear in the foreseeable future. There are also many companies that successfully cope with their own clusters of Kubernetes, and every day the number of such companies is multiplying. Today, working with Kubernetes from the first day is an obvious solution for most startups.

Kubernetes, being an open-source project, is well documented, stable and popular, and any problems can be as detailed as possible on stackoverflow. Of course, Kubernetes has its own bugs and technical challenges, but I assure you: there are guys in the world who sharpen Kubernetes with inconceivable skill. Their work is your dividend; In the next few years, this technology will only be improved.

Kubernetes scaled

One of the challenges associated with supporting the infrastructure is the following: techniques that are useful when deploying small systems are rarely successfully reproduced in larger systems. It is definitely convenient to SCP-noob a binary file to the server, kill the process and restart it if you have only one server. But when you need to support multiple servers and simultaneously monitor them, this task can be overwhelmingly complex. That's why when managing such an infrastructure you can't do without tools like chef or puppet.

However, if you choose the wrong tool, over time this can get you into a corner. Suddenly, it turns out that the lead chef server is not coping with the 1000-server load, the blue-green deployment does not fit into your model, and it takes hours to complete the capistrano tasks. When the infrastructure reaches a certain size, you will be forced to tear down everything that has already been done and start over. How great would it be if you managed to break out of this eternal squirrel wheel with infrastructure and switch to technology that will scale according to your needs?

Kubernetes is a lot like a SQL database. SQL is the product of many years of hard lessons about data storage and efficient query. Probably, you will never need a tenth of the possibilities that are provided in a suitable SQL database. You might even be able to design a more efficient system based on your own database. But in the absolute majority of situations, the SQL database will not only satisfy all your needs, but will also drastically expand your ability to quickly issue ready-made solutions. SQL schemas and indexing are much easier to use than your own file-based data structures, since your own data structures will almost certainly become obsolete as your product grows and evolves over time. But the SQL database is likely to survive any inevitable refactoring.

Kubernetes will survive too. Perhaps your side project will never grow to such a scale where its problems can be solved only by means of Kubernetes, but in Kubernetes there are absolutely all the tools for dealing with any problems, and the skills that you will get when handling this toolkit can turn out to be invaluable in future projects.

Build your own cluster Kubernetes

So, I believe that it is advisable to use Kubernetes in small projects, but only if setting up the cluster is easy and inexpensive. It turns out that both are achievable. There are managed Kubernetes providers that independently resolve all confusion with the support of the control plane of the Kubernetes master node. And the recent dumping wars in the cloud infrastructure environment have led to a striking cheapening of such services.

We will analyze the next case using the example of Google's Kubernetes engine (GKE), however, you can also look at offers from Amazon (EKS) or Microsoft (AKS), if Google is not satisfied with you. To build our own Kubernetes cluster, we need:

For additional savings, we will try to do without the Google input controller. Instead, we will use Nginx on each node as a daemon and create our own operator, which will provide us with synchronization of the external IP addresses of the working node with Cloudflare.

Google configuration

First, go to console.cloud.google.com and create a project, if you have not already done so. You will also need to create a billing account. Then through the hamburger menu we go to the Kubernetes page and create a new cluster. Here's what to do next:

Putting all these options, you can proceed to the next step: create a cluster. Here's how to save it:

So we set up a cluster of Kubernetes of 3 nodes, it cost us the same price as the only Digital Ocean machine.

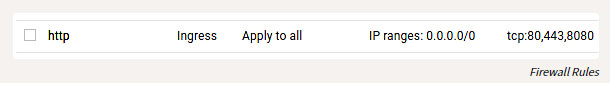

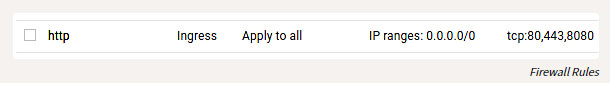

Above the GKE settings, you also need to configure a couple of firewall rules so that the HTTP ports of our sites can be reached from the outside world. Find the VPC Network entry in the hamburger menu, then go to Firewall Rules and add rules for TCP ports 80 and 443, with the IP address range 0.0.0.0/0.

Firewall rules

Local setting

So, we raised and launched a cluster, and now let's configure it. Install the

Of course, you still have to install the docker, and then bind it to the GCR, so that you can send containers:

You can also install and configure

Simplified:

By the way, just a fairy tale that all this toolkit works on Windows, OSX or Linux. As a person who sometimes did such things under Windows, I confess that this is a pleasant surprise.

Build a web application

You can write a web application in any programming language. The container allows you to abstract particulars. We need to create an HTTP application that listens to the port. I prefer the Go language for such purposes, but for a change, let's try crystal. Create the

We also need the Dockerfile:

To build and test our application, run:

And then go to the browser at localhost: 8080. Having adjusted this mechanism, we can send our application to the GCR by running:

Configuring Kubernetes

My configuration of Kubernetes is here .

For this example, we will have to create several files in yaml format, where our various services will be presented, and then run kubectl apply to configure them in a cluster. The Kubernetes configuration is descriptive, and all these yaml files tell Kubernetes what state we want to get. In a broad sense, this is what we are going to do:

Web Application Configuration

First, let's configure our web application: (be sure to replace the

This is how Deployment (deployed configuration) is created, according to which Kubernetes should create a single container with a single container (our docker container will work there) and a service that we will use to discover services in our cluster. To apply this configuration, run (from the

You can test it like this:

We can also create a proxy API for access:

And then go: localhost : 8001 / api / v1 / namespaces / default / services / crystal-www-example / proxy /

NGINX configuration

As a rule, when working with HTTP services, Kubernetes uses an input controller. Unfortunately, the HTTP load balancer from Google is too expensive, so we will not use it, but use our own HTTP proxy and configure it manually (it sounds scary, but in reality everything is very simple).

To do this, use the Daemon Set and Config Map. Daemon Set is an application running on each node. Config Map is, in principle, a small file that we can mount in a container; This file will store the nginx configuration.

The yaml file looks like this:

This is how we mount the nginx.conf file of the configuration card in the nginx container. We also set values for two more fields:

Apply these expressions - and you can go to nginx using the public ip of your nodes.

Here's how to verify this:

So now our web application is accessible from the Internet. It remains to come up with a beautiful name for the application.

DNS connection

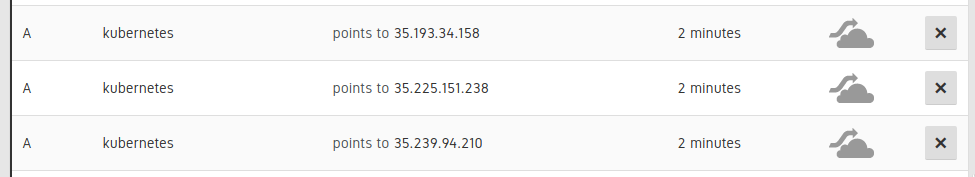

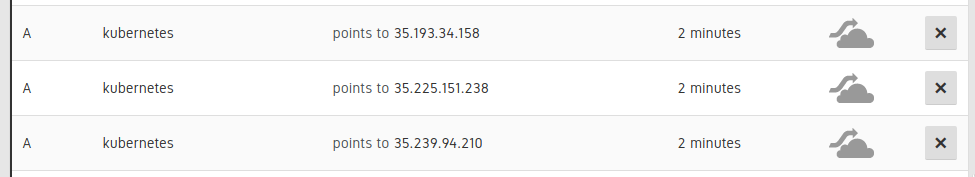

It is required to set 3 A DNS records for nodes of our cluster:

Entries in the UI Cloudflare

Then we add a CNAME record to point to these A-records. (eg www.example.com CNAME for kubernetes.example.com). This can be done manually, but better - automatically, so that if someday we have to scale or replace the nodes in the DNS records, this information is also updated automatically.

I think this example also illustrates well how Kubernetes can delegate part of your work, and not try to overcome it. Kubernetes understands scripts perfectly and has a powerful API, and you can fill in the existing spaces with your own components, which are not so difficult to write. For this, I made a small application on Go, available at this address: kubernetes-cloudflare-sync .

To start, created the informant

It will call my resynchronization function whenever a node changes. Then I synchronize the API using the Cloudflare API library, like this:

Then, as is the case with our web application, we run this application in Kubernetes as a Deployment:

We will need to create a secret Kubernetes, specifying the key

We will also need to create a service account (which opens our Deployment access to the Kubernetes API to retrieve nodes). First run (especially for GKE):

And then apply:

Working with RBAC is a bit of a chore, but I hope everything is clear. When the configuration is ready, and our application works with Cloudflare, this application can be updated with any changes to any of the nodes.

Conclusion

Kubernetes is destined to become the flagship technology for managing large systems. , Kubernetes , Kubernetes , Kubernetes , Kubernetes .

, Kubernetes : , . – !

In January we are finally publishing a long-awaited book on Kubernetes: "Mastering Kubernetes, 2nd Edition" by Gigi Sayfan.

A year or so ago we did not dare publish a book on Kubernetes, because at the time the technology looked like a dreadnought built for megacorporations. The situation is changing, though. As evidence, we suggest reading this excellent article by Caleb Doxsey, who, incidentally, also wrote a book on Go. Mr. Doxsey's arguments are compelling, and we hope that after reading them you will want to try Kubernetes in practice.

Earlier this year I spent several months studying Kubernetes in depth for a project at work. Kubernetes is a comprehensive, "batteries included" technology for managing infrastructure, and it solves many problems you are bound to face when building software for large enterprises. However, there is a widespread belief that Kubernetes is overly complicated and only makes sense when you run a large cluster of machines. Supposedly the operational overhead is so high that using it for a small infrastructure, where the machines don't number in the dozens, is like using a cannon to shoot sparrows.

I beg to differ. Kubernetes is a good fit for small projects, and today you can run your own Kubernetes cluster for about $5 a month.


In defense of Kubernetes

Below I'll show you how to set up your own Kubernetes cluster, but first let me explain why Kubernetes is worth using for small projects:

Kubernetes is comprehensive

Yes, at first glance Kubernetes looks like overkill. Wouldn't it be simpler to just get a virtual machine and set up your application as a service? Perhaps, but if you go that route you will have to make a number of decisions, in particular:

- How do you deploy the application? Just rsync it to the server?

- What about dependencies? If you work with Python or Ruby, they have to be installed on the server. Are you going to run those commands by hand?

- How are you going to run the app? Just start the binary in the background with nohup? That's probably not a great idea, so if you run the application as a service, will you have to learn systemd?

- How will you run several applications that all have different domain names or HTTP paths? (You'll probably need to configure haproxy or nginx for that.)

- Say you've updated your application. How do you roll out the changes? Stop the service, deploy the code, restart the service? How do you avoid downtime?

- What if you botch the deployment? Can you roll back? (Symlink a directory...? This simple script is starting to look not so simple.)

- Does your application use other services, such as redis? How do you configure all of them?

Kubernetes solves all of these problems. Of course, each of them can be solved in other ways, some of them better than Kubernetes, but how much nicer it is not to think about any of this and focus on developing the application instead.

Kubernetes is reliable

A single server will eventually fail. It happens rarely, maybe once a year, but when it does, the real headache begins: getting everything working again. This is especially true if you configured everything by hand. Do you remember every command you ran last time? Do you even remember what was running on that server? I'm reminded of a quote from bash.org:

erno: Hmm. I've lost a machine... literally lost it. It responds to ping, it works fine, I just can't figure out where in my apartment it is.

bash.org/?5273

Exactly the same thing happened to me recently with my own blog. I only needed to update a link, but I had completely forgotten how the blog was deployed. Suddenly a ten-minute fix turned into a whole weekend of work.

Kubernetes uses a declarative format, so you always know what was supposed to run and where, and every component of the system you've deployed is much more visible. Moreover, the control plane handles node failures gracefully and automatically reschedules pods. With a stateless service such as a web application, you can probably stop worrying about failures altogether.

Kubernetes is as easy to learn as the alternatives

Kubernetes does not follow the Unix model. It does not fit into an ecosystem of small tools, and it is not one of those tools that "do one thing and do it well." Kubernetes is a comprehensive solution to many problems, and it can replace many of the techniques and tools developers are used to.

Kubernetes has its own terminology, its own tooling, and its own paradigm for managing servers, which differs significantly from the traditional Unix approach. If you are used to those systems, many Kubernetes conventions may seem arbitrary and overcomplicated, perhaps even cruel. I suspect there are good reasons for that complexity, but my point is not that Kubernetes is simple and easy to understand. My point is that knowing Kubernetes is enough to build and maintain any infrastructure.

Not everyone has a solid Unix background. For example, after graduating from college I spent 5 years working in the Windows ecosystem. My first job at a startup, where I had to work with Linux, required a difficult transition. I didn't know the commands by heart, and I wasn't used to doing almost everything from the command line. It took me a while to learn the new platform (even though I already had some programming experience by then), and I clearly remember how much I struggled.

With Kubernetes you can start literally from scratch. You can deploy services without ever opening an SSH connection to a server. You don't need to learn systemd, you don't need to understand runlevels or know whether the command is groupadd or addgroup, and you don't need to learn ps or, God forbid, vim. All of that is useful and important knowledge, and none of it is going away. I have great respect for system administrators who can find their way around any Unix environment. But wouldn't it be great if developers could be productive with all these resources without digging into such administrative subtleties? Is this:

```
[Unit]
Description=The NGINX HTTP and reverse proxy server
After=syslog.target network.target remote-fs.target nss-lookup.target

[Service]
Type=forking
PIDFile=/run/nginx.pid
ExecStartPre=/usr/sbin/nginx -t
ExecStart=/usr/sbin/nginx
ExecReload=/usr/sbin/nginx -s reload
ExecStop=/bin/kill -s QUIT $MAINPID
PrivateTmp=true

[Install]
WantedBy=multi-user.target
```

really that much harder than this?

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      run: my-nginx
  replicas: 1
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        ports:
        - containerPort: 80
```

And that's the favorable case. If you manage your infrastructure 100% remotely, you can't maintain the servers by hand; you need a tool such as ansible, salt, chef, or puppet. Of course, there is a lot to learn before you can use Kubernetes effectively, but it's no harder than learning the alternatives.

Kubernetes is open source

In an era when serverless technologies are hugely popular, Kubernetes stands out for its independence from any single vendor. There are at least three popular, easy-to-use managed Kubernetes providers (Google, Amazon, Microsoft) that won't disappear in the foreseeable future. Many companies also run their own Kubernetes clusters successfully, and their number grows every day. Today, adopting Kubernetes from day one is an obvious choice for most startups.

As an open-source project, Kubernetes is well documented, stable, and popular, and most problems you run into have already been discussed in detail on Stack Overflow. Of course, Kubernetes has its own bugs and technical challenges, but I assure you there are people out there improving it with incredible skill. Their work is your dividend: over the next few years the technology will only get better.

Kubernetes scales

One challenge of maintaining infrastructure is that techniques that work well for small systems rarely carry over to larger ones. SCPing a binary to the server, killing the process, and restarting it is certainly convenient when you have a single server. But once you have to maintain and monitor several servers, the same job can become overwhelmingly complex. That's why, at that scale, you can hardly do without tools like chef or puppet.

But choose the wrong tool and, over time, it can paint you into a corner. Suddenly your chef server can't keep up with 1,000 servers, blue-green deployments don't fit your model, and capistrano tasks take hours to finish. Once the infrastructure reaches a certain size, you are forced to tear down everything you've built and start over. Wouldn't it be great to escape this endless treadmill and switch to a technology that scales with your needs?

Kubernetes is a lot like a SQL database. SQL is the product of many years of hard-won lessons about storing and efficiently querying data. You will probably never need even a tenth of the features a decent SQL database provides. You might even be able to design a more efficient storage system of your own. But in the vast majority of cases, a SQL database will not only meet all your needs but also greatly speed up your ability to ship working solutions. SQL schemas and indexes are much easier to use than your own file-based data structures, which will almost certainly become obsolete as your product grows and evolves. A SQL database, on the other hand, is likely to survive any inevitable refactoring.

Kubernetes will survive too. Your side project may never grow to the point where only Kubernetes can solve its problems, but Kubernetes has tools for almost any problem you'll encounter, and the skills you gain with them may prove invaluable in future projects.

Building your own Kubernetes cluster

So I believe Kubernetes makes sense for small projects, but only if setting up a cluster is easy and cheap. It turns out both are achievable. Managed Kubernetes providers take care of running the control plane (the Kubernetes master nodes) for you, and recent price wars among cloud providers have made these services strikingly cheap.

We'll walk through the setup using Google Kubernetes Engine (GKE), but you can also look at the offerings from Amazon (EKS) or Microsoft (AKS) if Google doesn't suit you. To build our own Kubernetes cluster we need:

- A domain name (~$10/year, depending on the domain)

- Cloudflare DNS hosting (free)

- A three-node GKE Kubernetes cluster (~$5/month)

- A web application pushed as a Docker container to Google Container Registry (GCR) (free)

- A few yaml files for the Kubernetes configuration

For extra savings, we'll do without Google's ingress controller. Instead, we'll run nginx on every node as a DaemonSet and write our own operator that keeps the worker nodes' external IP addresses in sync with Cloudflare.

Google configuration

First, go to console.cloud.google.com and create a project if you haven't already. You'll also need to set up a billing account. Then open the Kubernetes page from the hamburger menu and create a new cluster. Here's what to do next:

- Select Zonal as the location type.

- I chose us-central1-a as my zone.

- Choose your Kubernetes version.

- Create a pool of 3 nodes using the cheapest machine type (f1-micro).

- For this node pool, on the "Advanced" screen, set the boot disk size to 10GB, enable preemptible nodes (they're cheaper), and turn on auto-upgrade and auto-repair.

- Below the node pool you'll find some additional options. Disable HTTP load balancing (load balancing on GCP is expensive) and all the StackDriver features (they can also be expensive and, in my experience, aren't very reliable). Also turn off the Kubernetes dashboard.

With all these options set, you can go ahead and create the cluster. Here's how the costs break down:

- Kubernetes control plane: free, because Google doesn't charge for the master nodes.

- Kubernetes worker nodes: $5.04/month. Three micro nodes would normally cost $11.65/month; making them preemptible brings that down to $7.67/month, and the "Always Free" tier brings it to $5.04.

- Storage: free. We get 30GB of persistent disk space for free, which is why we chose a 10GB boot disk above: three 10GB disks fit exactly within the allowance.

- Load balancing: free. We disabled HTTP load balancing, which alone would cost us $18/month. Instead, we'll run our own HTTP proxy on each node and point DNS at the nodes' public IPs.

- Networking: free. Egress is free up to 1GB per month (after that, each additional gigabyte costs 8 cents).

So we set up a cluster of Kubernetes of 3 nodes, it cost us the same price as the only Digital Ocean machine.

In addition to the GKE settings, you need to add a couple of firewall rules so that our sites' HTTP ports are reachable from the outside world. Find VPC Network in the hamburger menu, go to Firewall Rules, and add rules for TCP ports 80 and 443 with the source IP range 0.0.0.0/0.

Firewall rules

Local setup

Now that the cluster is up and running, let's configure it. Install the gcloud tool by following the instructions at cloud.google.com/sdk/docs. Once it's installed, set it up by running:

```
gcloud auth login
```

You will also need to install Docker and connect it to GCR so that you can push containers:

```
gcloud auth configure-docker
```

You can also install and configure kubectl by following the instructions outlined here. In short:

```
gcloud components install kubectl
gcloud config set project PROJECT_ID
gcloud config set compute/zone COMPUTE_ZONE
gcloud container clusters get-credentials CLUSTER_NAME
```

By the way, it's simply wonderful that all of this tooling works on Windows, OS X, and Linux. As someone who has occasionally done this kind of work on Windows, I admit it was a pleasant surprise.

Build a web application

You can write the web application in any programming language, because the container abstracts away the details. All we need is an HTTP application that listens on a port. I prefer Go for this kind of thing, but for a change let's try Crystal. Create the main.cr file:

```crystal
# crystal-www-example/main.cr
require "http/server"

Signal::INT.trap do
  exit
end

server = HTTP::Server.new do |context|
  context.response.content_type = "text/plain"
  context.response.print "Hello world from crystal-www-example! The time is #{Time.now}"
end

server.bind_tcp("0.0.0.0", 8080)
puts "Listening on http://0.0.0.0:8080"
server.listen
```

We also need a Dockerfile:

```dockerfile
# crystal-www-example/Dockerfile
FROM crystallang/crystal:0.26.1 as builder

COPY main.cr main.cr

RUN crystal build -o /bin/crystal-www-example main.cr --release

ENTRYPOINT [ "/bin/crystal-www-example" ]
```

To build and test our application, run:

```
docker build -t gcr.io/PROJECT_ID/crystal-www-example:latest .
docker run -p 8080:8080 gcr.io/PROJECT_ID/crystal-www-example:latest
```

Then open localhost:8080 in your browser. Once that works, push the application to GCR by running:

```
docker push gcr.io/PROJECT_ID/crystal-www-example:latest
```

Configuring Kubernetes

My Kubernetes configuration is here.

For this example we'll create several yaml files describing our services, then run kubectl apply to configure them in the cluster. Kubernetes configuration is declarative: the yaml files tell Kubernetes what state we want to reach. Broadly, here's what we're going to do:

- Create a Deployment and a Service for our crystal-www-example web application.

- Create a DaemonSet and a ConfigMap for nginx.

- Run our own application that syncs the nodes' IPs with Cloudflare DNS.

Web Application Configuration

First, let's configure our web application (be sure to replace PROJECT_ID with the ID of your project):

```yaml
# kubernetes-config/crystal-www-example.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: crystal-www-example
  labels:
    app: crystal-www-example
spec:
  replicas: 1
  selector:
    matchLabels:
      app: crystal-www-example
  template:
    metadata:
      labels:
        app: crystal-www-example
    spec:
      containers:
      - name: crystal-www-example
        image: gcr.io/PROJECT_ID/crystal-www-example:latest
        ports:
        - containerPort: 8080
---
kind: Service
apiVersion: v1
metadata:
  name: crystal-www-example
spec:
  selector:
    app: crystal-www-example
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
```

This creates a Deployment, which tells Kubernetes to run a single pod with a single container (our Docker container), and a Service, which we will use for service discovery within the cluster. To apply this configuration, run (from the kubernetes-config directory):

```shell
kubectl apply -f .
```

You can test it like this:

```shell
kubectl get pod
# should output something like:
# crystal-www-example-698bbb44c5-l9hj9   1/1   Running   0   5m
```

We can also start an API proxy to access it:

```shell
kubectl proxy
```

And then visit: http://localhost:8001/api/v1/namespaces/default/services/crystal-www-example/proxy/
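If you want to script this check instead of using a browser, the same URL works from any HTTP client. Below is a minimal Go sketch, assuming kubectl proxy is running on its default port (8001); the helpers serviceProxyURL and fetch are hypothetical names, not part of any Kubernetes library. The path follows the pattern of the URL above.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/url"
)

// serviceProxyURL builds an API server proxy URL for a service, following the
// pattern /api/v1/namespaces/<namespace>/services/<service>/proxy/<path>.
func serviceProxyURL(base, namespace, service, path string) string {
	return fmt.Sprintf("%s/api/v1/namespaces/%s/services/%s/proxy/%s",
		base, url.PathEscape(namespace), url.PathEscape(service), path)
}

// fetch GETs the service root through the proxy and returns the response body.
func fetch(base, namespace, service string) (string, error) {
	resp, err := http.Get(serviceProxyURL(base, namespace, service, ""))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("unexpected status: %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	// Assumes `kubectl proxy` is listening on localhost:8001.
	body, err := fetch("http://localhost:8001", "default", "crystal-www-example")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(body)
}
```

If everything is wired up, this should print the "Hello world from crystal-www-example!" greeting served by the Crystal app.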

NGINX configuration

When working with HTTP services, Kubernetes usually relies on an Ingress controller. Unfortunately, Google's HTTP load balancer is too expensive, so instead of using it we'll run our own HTTP proxy and configure it manually (it sounds scary, but it's actually very simple).

For this we'll use a DaemonSet and a ConfigMap. A DaemonSet runs a copy of a pod on every node. A ConfigMap is essentially a small file that we can mount into a container; here it will hold the nginx configuration.

The yaml file looks like this:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - image: nginx:1.15.3-alpine
        name: nginx
        ports:
        - name: http
          containerPort: 80
          hostPort: 80
        volumeMounts:
        - name: "config"
          mountPath: "/etc/nginx"
      volumes:
      - name: config
        configMap:
          name: nginx-conf
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-conf
data:
  nginx.conf: |
    worker_processes 1;
    error_log /dev/stdout info;

    events {
      worker_connections 10;
    }

    http {
      access_log /dev/stdout;

      server {
        listen 80;
        location / {
          proxy_pass http://crystal-www-example.default.svc.cluster.local:8080;
        }
      }
    }
```

This is how we mount the nginx.conf file from the ConfigMap into the nginx container. We also set two additional fields:

- hostNetwork: true, so that nginx can bind to a port on the host and be reachable from the outside;
- dnsPolicy: ClusterFirstWithHostNet, so that nginx can still resolve services inside the cluster.

Apart from that, it is a completely standard configuration. Apply these manifests, and you can reach nginx via the public IP of any of your nodes.
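For comparison, the nginx.conf above boils down to a single reverse-proxy rule. A hypothetical Go equivalent using the standard library's httputil.NewSingleHostReverseProxy is sketched below; it is not what this setup deploys (nginx stays in place), just an illustration of what the proxy does. The newProxy helper is an assumed name.

```go
package main

import (
	"log"
	"net/http"
	"net/http/httputil"
	"net/url"
)

// newProxy returns a handler that forwards every request to target, roughly
// what the `location / { proxy_pass ... }` block in nginx.conf does.
func newProxy(target string) (http.Handler, error) {
	u, err := url.Parse(target)
	if err != nil {
		return nil, err
	}
	return httputil.NewSingleHostReverseProxy(u), nil
}

func main() {
	// The *.svc.cluster.local name only resolves inside the cluster, which is
	// why the DaemonSet sets dnsPolicy: ClusterFirstWithHostNet.
	proxy, err := newProxy("http://crystal-www-example.default.svc.cluster.local:8080")
	if err != nil {
		log.Fatal(err)
	}
	// Listening on port 80 of the host corresponds to hostNetwork: true.
	log.Fatal(http.ListenAndServe(":80", proxy))
}
```

One difference worth knowing: NewSingleHostReverseProxy does not rewrite the Host header, whereas nginx's proxy_pass sends the upstream host name by default.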

You can find the public IPs of your nodes like this:

```shell
kubectl get node -o yaml
# look for:
# - address: ...
#   type: ExternalIP
```

Now our web application is reachable from the Internet. All that's left is to give it a nice name.
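If you'd rather extract the external IPs programmatically than scan the YAML by eye, the same data is available as JSON via kubectl get node -o json. A minimal Go sketch that reads that output from stdin, assuming only the status.addresses fields of each node (the nodeList type and externalIPs helper are hypothetical, and this avoids client-go entirely):

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"os"
	"sort"
)

// nodeList mirrors only the fields we need from `kubectl get node -o json`.
type nodeList struct {
	Items []struct {
		Status struct {
			Addresses []struct {
				Type    string `json:"type"`
				Address string `json:"address"`
			} `json:"addresses"`
		} `json:"status"`
	} `json:"items"`
}

// externalIPs returns the ExternalIP addresses of all nodes, sorted.
func externalIPs(data []byte) ([]string, error) {
	var nodes nodeList
	if err := json.Unmarshal(data, &nodes); err != nil {
		return nil, err
	}
	var ips []string
	for _, node := range nodes.Items {
		for _, addr := range node.Status.Addresses {
			if addr.Type == "ExternalIP" {
				ips = append(ips, addr.Address)
			}
		}
	}
	sort.Strings(ips)
	return ips, nil
}

func main() {
	// Usage: kubectl get node -o json | go run main.go
	data, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	ips, err := externalIPs(data)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	for _, ip := range ips {
		fmt.Println(ip)
	}
}
```

These are the addresses the DNS A records below need to point at.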

Hooking up DNS

We need to create 3 DNS A records for the nodes of our cluster:

(Screenshot: the A records in the Cloudflare UI)

Then we add a CNAME record pointing at these A records (e.g. www.example.com CNAME kubernetes.example.com). This could be done manually, but it's better to automate it, so that if we ever scale or replace the nodes, the DNS records are updated automatically as well.

I think this example also shows nicely how you can make Kubernetes work for you rather than fight it. Kubernetes is fully scriptable and has a powerful API, so you can fill in the gaps with your own components, which are not that hard to write. For this, I wrote a small Go application, available here: kubernetes-cloudflare-sync.

First, I created an informer:

```go
factory := informers.NewSharedInformerFactory(client, time.Minute)
// The lister serves nodes from the informer's local cache.
lister := factory.Core().V1().Nodes().Lister()
informer := factory.Core().V1().Nodes().Informer()
informer.AddEventHandler(cache.ResourceEventHandlerFuncs{
	AddFunc: func(obj interface{}) {
		resync()
	},
	UpdateFunc: func(oldObj, newObj interface{}) {
		resync()
	},
	DeleteFunc: func(obj interface{}) {
		resync()
	},
})
informer.Run(stop)
```

It calls my resync function whenever a node changes. Then I sync the DNS records using the Cloudflare API library, like this:

```go
// Collect the external IP of every node.
var ips []string
for _, node := range nodes {
	for _, addr := range node.Status.Addresses {
		if addr.Type == core_v1.NodeExternalIP {
			ips = append(ips, addr.Address)
		}
	}
}
sort.Strings(ips)

// Create one A record per IP (error handling omitted in this excerpt).
for _, ip := range ips {
	api.CreateDNSRecord(zoneID, cloudflare.DNSRecord{
		Type:    "A",
		Name:    options.DNSName,
		Content: ip,
		TTL:     120,
		Proxied: false,
	})
}
```

Then, just as with our web application, we run this application in Kubernetes as a Deployment:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-cloudflare-sync
  labels:
    app: kubernetes-cloudflare-sync
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kubernetes-cloudflare-sync
  template:
    metadata:
      labels:
        app: kubernetes-cloudflare-sync
    spec:
      serviceAccountName: kubernetes-cloudflare-sync
      containers:
      - name: kubernetes-cloudflare-sync
        image: gcr.io/PROJECT_ID/kubernetes-cloudflare-sync
        args:
        - --dns-name=kubernetes.example.com
        env:
        - name: CF_API_KEY
          valueFrom:
            secretKeyRef:
              name: cloudflare
              key: api-key
        - name: CF_API_EMAIL
          valueFrom:
            secretKeyRef:
              name: cloudflare
              key: email
```

We'll need to create a Kubernetes secret containing the Cloudflare API key and email address:

```shell
kubectl create secret generic cloudflare --from-literal=email='EMAIL' --from-literal=api-key='API_KEY'
```

We'll also need to create a service account (which gives our Deployment access to the Kubernetes API so it can list nodes). First run (this step is specific to GKE):

```shell
kubectl create clusterrolebinding cluster-admin-binding --clusterrole cluster-admin --user YOUR_EMAIL_ADDRESS_HERE
```

And then apply:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kubernetes-cloudflare-sync
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kubernetes-cloudflare-sync
rules:
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-cloudflare-sync-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-cloudflare-sync
subjects:
- kind: ServiceAccount
  name: kubernetes-cloudflare-sync
  namespace: default
```

RBAC is a bit tedious, but hopefully this is clear. With the configuration in place and the application running against Cloudflare, the DNS records will be updated whenever any of the nodes change.
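One thing to note about the sync loop shown earlier: as excerpted, it only calls CreateDNSRecord, so on its own it would not remove the A record of a node that has gone away, and a repeated resync could try to create records that already exist. The excerpt does not show how the full kubernetes-cloudflare-sync handles this. A reconciling version would first list the zone's existing A records for the DNS name and then compute the difference. Here is a minimal sketch of just that diff step, with a hypothetical diffRecords helper and made-up example IPs; listing and deleting records through the Cloudflare API is left out.

```go
package main

import (
	"fmt"
	"sort"
)

// diffRecords compares the IPs currently published as A records (existing)
// with the nodes' current external IPs (desired) and returns the IPs that
// need a new record and the IPs whose record should be deleted.
func diffRecords(existing, desired []string) (toCreate, toDelete []string) {
	have := make(map[string]bool, len(existing))
	for _, ip := range existing {
		have[ip] = true
	}
	want := make(map[string]bool, len(desired))
	for _, ip := range desired {
		if want[ip] {
			continue // skip duplicate node IPs
		}
		want[ip] = true
		if !have[ip] {
			toCreate = append(toCreate, ip)
		}
	}
	for ip := range have {
		if !want[ip] {
			toDelete = append(toDelete, ip)
		}
	}
	// Sort for deterministic output (map iteration order is random).
	sort.Strings(toCreate)
	sort.Strings(toDelete)
	return toCreate, toDelete
}

func main() {
	existing := []string{"35.1.2.3", "35.1.2.4"} // records currently in the zone
	desired := []string{"35.1.2.3", "35.1.2.5"}  // ExternalIPs of the current nodes
	create, del := diffRecords(existing, desired)
	fmt.Println("create:", create) // create: [35.1.2.5]
	fmt.Println("delete:", del)    // delete: [35.1.2.4]
}
```

Because the function only looks at sets of IPs, running it twice against an already-synced zone yields empty lists, which keeps the resync idempotent.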

Conclusion

Kubernetes is set to become the leading technology for managing large systems.

Source: https://habr.com/ru/post/433192/
