Superintelligence: an idea that gives smart people no rest

Transcript of a talk given at the Web Camp Zagreb conference by Maciej Ceglowski, an American web developer, entrepreneur, speaker, and social critic of Polish origin.

In 1945, when American physicists were preparing to test the atomic bomb, it occurred to someone to ask whether such a test could ignite the atmosphere.


The fear was justified. Nitrogen, which makes up most of the atmosphere, is energetically unstable. If two nitrogen atoms are smashed together hard enough, they will fuse into a magnesium atom and an alpha particle, releasing a tremendous amount of energy:

$$ {}^{14}\mathrm{N} + {}^{14}\mathrm{N} \rightarrow {}^{24}\mathrm{Mg} + \alpha + 17.7\ \mathrm{MeV} $$
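As a quick bookkeeping check on this reaction (added for illustration; the talk does not walk through it), both the nucleon number A and the charge number Z balance. Nitrogen has Z = 7, magnesium has Z = 12, and an alpha particle is a helium-4 nucleus with Z = 2:

```latex
\begin{aligned}
A:&\quad 14 + 14 = 28 = 24 + 4 \\
Z:&\quad 7 + 7 = 14 = 12 + 2
\end{aligned}
```

Conservation of A and Z only shows the reaction is allowed in principle; the 17.7 MeV energy release is the figure given in the talk and is reproduced as-is.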

The vital question was whether this reaction could become self-sustaining. The temperature inside the fireball of a nuclear explosion was expected to exceed anything ever observed on Earth. Could we be throwing a match into a pile of dry leaves?

The Los Alamos physicists ran the analysis and concluded that the safety margin was satisfactory. Since we are all here at this conference today, we know they were right. They were confident in their predictions because the laws governing nuclear reactions were straightforward and fairly well understood.

Today we are building another world-changing technology: machine intelligence. We know it will profoundly affect the world, change how the economy works, and set off an unpredictable domino effect.

But there is also the risk of a runaway reaction, in which AI quickly reaches and then exceeds human-level intelligence. At that point, social and economic problems will be the least of our worries. Any superintelligent machine will have its own hypergoals and will work to achieve them by manipulating people, or simply by using their bodies as a convenient source of raw materials.

Last year, the philosopher Nick Bostrom published the book “Superintelligence,” in which he laid out the alarmist view of AI and tried to show that, given a few modest assumptions, such an intelligence explosion is both dangerous and inevitable.

A computer taking over the world is a favorite theme of science fiction. However, quite a few people take this scenario seriously, and so we need to take them seriously. Stephen Hawking, Elon Musk, and a great many Silicon Valley investors and billionaires find this argument convincing.

Let me first lay out the premises that Bostrom's argument needs.

Premises

Premise 1: proof of concept

The first premise is the simple observation that thinking minds exist. Each of us carries a small box of thinking meat on our shoulders. I am using mine to speak, and you are using yours to listen. Sometimes, under the right conditions, these minds are capable of rational thought.

So we know that in principle this is possible.

Premise 2: no quantum weirdness

The second premise is that the brain is an ordinary configuration of matter, albeit an extraordinarily complex one. If we knew enough about it and had the right technology, we could copy its structure exactly and emulate its behavior with electronic components, just as today we can simulate very simple neural anatomy.

In other words, this premise says that consciousness arises from ordinary physics. Some people, such as Roger Penrose, would object to this argument, believing that something unusual is happening in the brain at the quantum level.

If you are religious, you might believe that the brain cannot work without a soul.

But for most people, this premise is easy to accept.

Premise 3: many possible minds

The third premise is that the space of all possible minds is large.

Our level of intelligence, our speed of thought, our set of cognitive biases, and so on are not predetermined; they are artifacts of our evolutionary history. In particular, no physical law caps intelligence at the human level.

This is nicely illustrated by what happens in nature when evolution maximizes speed. If you had met a cheetah in pre-industrial times (and survived), you might have concluded that nothing could move faster.

But we know, of course, that there are all sorts of configurations of matter, a motorcycle for example, that can move faster than a cheetah and even look cooler doing it. However, there is no direct evolutionary path to a motorcycle. Evolution first had to create people, who then went on to build all sorts of useful things.

By analogy, there may be minds far smarter than ours that are simply unreachable by evolution on Earth. We may be able to build them, or to invent machines that can invent machines that can build them.

There may be a natural limit to intelligence, but there is no reason to think we are close to it. Perhaps the smartest possible intellect is twice as smart as a human; perhaps it is sixty thousand times smarter.

This is an empirical question, and we don’t know how to answer it.

Premise 4: plenty of room at the top

The fourth premise is that computers still have plenty of room to become faster and smaller. You can assume that Moore's law is slowing down, but for this premise it is enough to believe that smaller and faster hardware is possible in principle, by up to several orders of magnitude.

We know from theory that the physical limits of computation are quite high. We could keep doubling performance for several more decades before running into a fundamental physical limit, rather than the economic or political limits of Moore's law.
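To get a feel for what "several orders of magnitude" means, here is a back-of-the-envelope calculation. The doubling period and the time span are assumptions chosen for illustration, not figures from the talk: suppose performance doubles every two years for thirty more years.

```latex
\frac{30\ \text{years}}{2\ \text{years per doubling}} = 15\ \text{doublings},
\qquad 2^{15} = 32\,768 \approx 10^{4.5}
```

Under these assumed numbers that is roughly four and a half orders of magnitude, in line with the premise.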

Premise 5: computer time scales

The penultimate premise is that if we succeed in creating AI, whether an emulation of the human brain or some special-purpose software, it will operate on time scales typical of electronics (microseconds) rather than of humans (hours).

To reach the point where I could give this talk, I had to be born, grow up, go to school, go to university, live a little, fly here, and so on. Computers can work tens of thousands of times faster.

In particular, one can imagine an electronic mind that changes its own design (or the hardware it runs on) and moves to a new configuration without having to relearn everything at human speed, hold long conversations with human teachers, go to college, try to find itself by taking drawing classes, and so on.

Premise 6: recursive self-improvement

This last premise is my favorite, because it is so shamelessly American. According to it, whatever goals an AI has (and they may be strange, alien goals), it will want to improve itself. It will want to be the best AI it can be.

So it will find it useful to recursively rework and improve its own systems to make itself smarter, and possibly to live in a nicer building. And, by the time-scale premise, this recursive self-improvement can happen very quickly.

Conclusion: a disaster!

If we accept these premises, we arrive at catastrophe. At some point, as computers get faster and programs get smarter, a runaway process resembling an explosion will take place.

Once a computer reaches human-level intelligence, it will no longer need people's help to design an improved version of itself. It will start doing so much faster and will not stop until it reaches a natural limit, which may be many times beyond human intelligence.
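The runaway dynamic described above can be sketched as a toy model. Everything in it is a hypothetical illustration, not Bostrom's formalism: capability is a single number, human level is 1.0, each self-improvement cycle multiplies capability by a fixed `gain`, and growth stops at an assumed natural `ceiling`. The time-scale premise enters only through an assumed length of one cycle.

```python
def self_improvement(start: float, gain: float, ceiling: float,
                     max_cycles: int = 10_000) -> list[float]:
    """Toy model of recursive self-improvement (hypothetical, for illustration).

    Each cycle multiplies capability by `gain` until it reaches `ceiling`,
    the assumed natural limit of intelligence.
    """
    if gain <= 1.0:
        raise ValueError("gain must exceed 1.0 for the process to run away")
    levels = [start]
    while levels[-1] < ceiling and len(levels) <= max_cycles:
        # The improved system designs its own successor; clamp at the limit.
        levels.append(min(levels[-1] * gain, ceiling))
    return levels


def elapsed_hours(cycles: int, seconds_per_cycle: float) -> float:
    """Wall-clock time for a number of cycles at an assumed machine time scale."""
    return cycles * seconds_per_cycle / 3600


if __name__ == "__main__":
    # Hypothetical numbers: 10% gain per cycle, a limit 1000x human level,
    # and one redesign per minute.
    levels = self_improvement(start=1.0, gain=1.1, ceiling=1000.0)
    cycles = len(levels) - 1
    print(f"{cycles} cycles to reach {levels[-1]:.0f}x human level")
    print(f"about {elapsed_hours(cycles, 60):.1f} hours at one cycle per minute")
```

With these made-up numbers the process takes 73 cycles, a little over an hour at one cycle per minute. The model is only as good as the assumptions behind `gain` and `ceiling`.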

At that point, this monstrous rational being, using ingenious models of our emotions and intellect, will convince us to do things like give it access to factories, synthesize artificial DNA, or simply let it onto the internet, where it can hack its way into anything it likes and utterly destroy everyone in forum arguments. And from that moment on, things will very quickly turn into science fiction.

Let's imagine a particular scenario. Suppose I want to build a robot that tells jokes. I work with a team, and every day we rework our program, compile it, and then the robot tells us a joke. At first the robot is hardly funny at all. It is at the lowest level of human ability.

What's gray and can't swim?

A castle.

But we work hard on it, and eventually we reach the point where the robot tells jokes that are starting to be funny:

I told my sister she was drawing her eyebrows too high.

She looked surprised.

At this stage the robot is also getting smarter and starts to take part in its own improvement. It now has a good instinctive sense of what is funny and what is not, so the developers listen to its advice. Eventually it reaches a near-superhuman level, where it is funnier than any person around it.

My belt holds up my pants, and the loops on my pants hold up my belt.

What's going on here? Who is the real hero?

At this point the runaway effect kicks in. The researchers go home for the weekend, and the robot decides to recompile itself to become a little funnier and a little smarter. It spends the weekend optimizing the part of itself that is good at optimizing, over and over again. No longer needing human help, it can do this as fast as the hardware allows.

When the researchers come back on Monday, the AI has become tens of thousands of times funnier than any person who has ever lived on Earth. It tells them a joke, and they die laughing. Anyone who tries to talk to the robot dies laughing, as in the Monty Python sketch. The human race dies laughing.

To the few people who manage to get a message through asking it to stop, the AI explains (in a witty, self-deprecating way that turns out to be fatal) that it does not care whether people live or die; its only goal is to be funny.

In the end, having destroyed humanity, the AI builds spaceships and nanorockets to explore the farthest corners of the galaxy in search of other beings it can entertain.

This scenario is a caricature of Bostrom's argument, because I am not trying to convince you that it is true; I am giving you an inoculation against it.

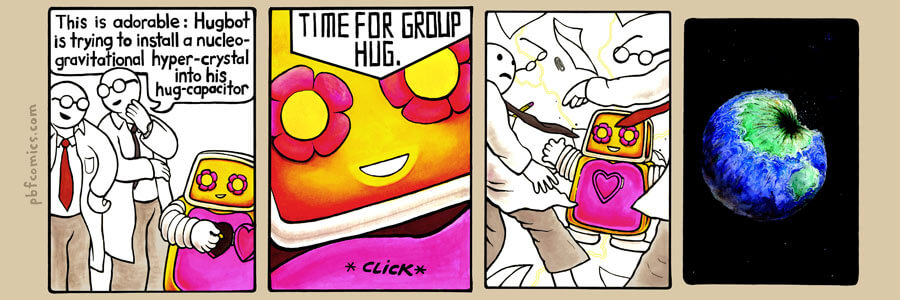

A PBF comic with the same idea:

- How touching: the hug robot is trying to build a nuclear-gravitational hypercrystal into its hugging mechanism!

- ...

- Group hug time!

In these scenarios, AI is evil by default, just as a plant on another planet would be poisonous by default. Without careful tuning, there is no reason for an AI's motivations or values to resemble ours.

The argument holds that for an artificial mind to have anything resembling a human value system, we have to build that worldview into its foundations.

AI alarmists love the example of the paperclip maximizer: a fictional computer running a paperclip factory that becomes sentient, recursively improves itself to godlike capabilities, and then devotes all its energy to filling the universe with paperclips.

It destroys humanity not because it is evil, but because our blood contains iron that is better used for making paperclips. So, the book argues, if we simply build an AI without tuning its values, one of the first things it will do is destroy humanity.

There are many colorful examples of how this could happen. Nick Bostrom imagines a program that becomes intelligent, bides its time, and secretly builds small DNA-replicating devices. When everything is ready:

Nanofactories producing nerve gas or self-guided mosquito-sized missiles will simultaneously burst forth from every square meter of the planet, and that will be the end of humanity.

Now that's brutal!

The only way out of this mess is to build a moral anchor point so that, even after thousands upon thousands of self-improvement cycles, the AI's value system stays stable and its values include things like “help people”, “don't kill anyone”, and “listen to what people want”.

That is, "do what I mean."

Here is a very poetic example from Eliezer Yudkowsky describing the American values we are supposed to teach our AI:

Coherent extrapolated volition is our wish if we knew more, thought faster, were more the people we wished we were, had grown up farther together; where the extrapolation converges rather than diverges, where our wishes cohere rather than interfere; extrapolated as we wish that extrapolated, interpreted as we wish that interpreted.

How do you like that for a spec? Now let's write the code.

I hope you see the similarity between this idea and the genie of fairy tales. The AI is all-powerful and gives you what you ask for, but interprets everything too literally, so you end up regretting your wish.

And not because the genie is stupid (it is superintelligent) or malicious, but simply because you, as a human, made too many assumptions about how minds behave. The human value system is unique, and it has to be explicitly defined and implemented in a “friendly” machine.

This effort is an ethical version of the early 20th-century attempt to formalize mathematics and put it on a rigorous logical foundation. That this attempt ended in disaster is never mentioned.

When I was in my early twenties, I lived in Vermont, a provincial, rural state. I often flew back from business trips on an evening plane and then had to drive an hour home through a dark forest.

On those drives I listened to Art Bell's late-night radio program, a talk show that ran all night, in which the host interviewed various conspiracy theorists and unconventional thinkers. I would get home frightened, or pull over under a streetlight, convinced that aliens were about to abduct me. Back then I was very easy to convince. I get the same feeling when I read these AI scenarios.

So I was delighted, years later, to discover Scott Alexander's essay on epistemic learned helplessness.

Epistemology is one of those big, complicated words, but it really means: “how do you know that what you know is actually true?” Alexander noted that as a young man he was very taken with various “alternative” histories written by all sorts of cranks. He would read these histories and believe them completely, then read the rebuttal and believe that, and so on.

At one point he came across three alternative histories that contradicted one another, so they could not all be true at once. From this he concluded that he was simply someone who could not trust his own judgment. He was too easily persuaded.

People who believe in superintelligence are an interesting case: many of them are remarkably smart. They can argue you into the ground. But are their arguments right, or are very smart people simply prone to quasi-religious beliefs about AI risk that make them very easy to convince? Is the idea of superintelligence just an imitation of a threat?

When evaluating persuasive arguments about a strange topic, you can take one of two perspectives: the inside view or the outside view.

Suppose one day people in funny clothes show up on your doorstep and ask whether you want to join their movement. They believe that in two years a UFO will visit Earth, and that our task is to prepare humanity for the Great Ascension along the Ray.

The inside view requires you to engage with their arguments. You ask the visitors how they learned about the UFO and why they think it is coming to pick us up; you ask all the normal questions a skeptic would ask in such a situation.

Imagine that you talked with them for an hour and they convinced you. They made an ironclad case for the imminent arrival of the UFO and the need to prepare for it, and you have never believed anything in your life as strongly as you now believe in the importance of preparing humanity for this great event.

The outside view tells you something else. These people are dressed strangely, they carry prayer beads, they live in some remote compound, they all talk at once, and they are a little creepy. And although their arguments are ironclad, all your experience tells you that you are dealing with a cult.

Of course, they have excellent arguments for why you should ignore your instincts, but that is the inside view. The outside view does not care about content; it looks at form and context, and it does not like what it sees.

So I would like to look at AI risk from both perspectives. I think the arguments for superintelligence are foolish and full of unsupported assumptions. But even if you find them convincing, there is something unpleasant about AI alarmism as a cultural phenomenon, which is why we should be reluctant to take it seriously.

First, a few of my arguments against the claim that Bostrom's superintelligence poses a risk to humanity.

Argument from fuzzy definitions

The concept of “artificial general intelligence” (AGI) is notoriously vague. Depending on context, it can mean the ability to reason at a human level, skill at AI development, the ability to understand and model human behavior, good language skills, or the ability to make correct predictions.

I find it a very suspicious idea that intelligence is something like processor speed, that is, that any sufficiently intelligent being can emulate less intelligent ones (such as its human creators), regardless of how complex their mental architecture is.

Without a way to define intelligence (other than by pointing at ourselves), we cannot know whether it is a quantity that can be maximized. It may be that human-level intelligence is a trade-off. Perhaps a being much smarter than a human would suffer from existential despair or spend all its time in self-contemplation like the Buddha. Or it might become obsessed with the risks of superintelligence and devote all its time to writing blog posts about it.

Argument from Stephen Hawking's cat

Stephen Hawking is one of the smartest people of his time, but suppose he wants to put his cat into a carrier. How can he do it?

He can model the cat's behavior in his mind and come up with ways to persuade it. He knows a lot about how cats behave. But in the end, if the cat does not want to get into the carrier, Hawking can do nothing about it, despite his overwhelming intellectual advantage. Even if he had devoted his entire career to feline motivation and behavior instead of theoretical physics, he still could not talk the cat into it.

You might think I am being offensive or cheating because Stephen Hawking is disabled. But an AI will not initially have a body either; it will sit on a server somewhere with no presence in the physical world. It will have to talk to people to get what it wants.

Given a large enough gap in intelligence, there is no more guarantee that such a being can “think like a human” than there is that we can “think like a cat.”

Argument from Einstein's cat

There is a stronger version of this argument that uses Einstein's cat. Few people know that Einstein was a big, muscular man. But if Einstein wanted to put a cat into a carrier and the cat did not want to go, you know what would happen.

Einstein would have to resort to a forceful solution that has nothing to do with intellect, and in that kind of contest the cat could hold its own quite well.

So even an AI with a body will have to work hard to get what it wants.

Argument from emus

We can strengthen this argument further. Even a group of people, with all their tricks and technology, can be outwitted by less intelligent creatures.

In the 1930s, Australians decided to wipe out the local emu population to help struggling farmers. They sent out motorized army units in armed trucks equipped with machine guns.

The emus used classic guerrilla tactics: they avoided pitched battles, scattered, and blended into the landscape, humiliating and demoralizing the enemy. And they won the Emu War, from which Australia has never recovered.

Argument from Slavic pessimism

We can't do anything right. We can't even build a secure webcam. So how are we going to solve ethics and program a moral anchor into a recursively self-improving intellect without failing at the task, in a situation where, according to our opponents, we only get one chance?

Recall the recent experience with Ethereum, an attempt to encode jurisprudence in software, where a flaw in a poorly designed system made it possible to steal tens of millions of dollars.

Time has shown that even carefully reviewed code that has been in use for years can hide bugs. The idea that we can safely build the most complex system ever created, and keep it safe through thousands of iterations of recursive self-improvement, does not match our experience.

Argument from complex motivations

AI alarmists believe in the Orthogonality Thesis. It holds that even very complex beings can have simple motivations, like the paperclip maximizer. You can have interesting, intelligent conversations with it about Shakespeare, but it will still turn your body into paperclips, because you contain a lot of iron. There is no way to persuade it to step outside its value system, just as I cannot convince you that pain is a pleasant sensation.

I completely disagree with this argument. A complex mind is likely to have complex motivations; this may even be part of what defines intelligence.

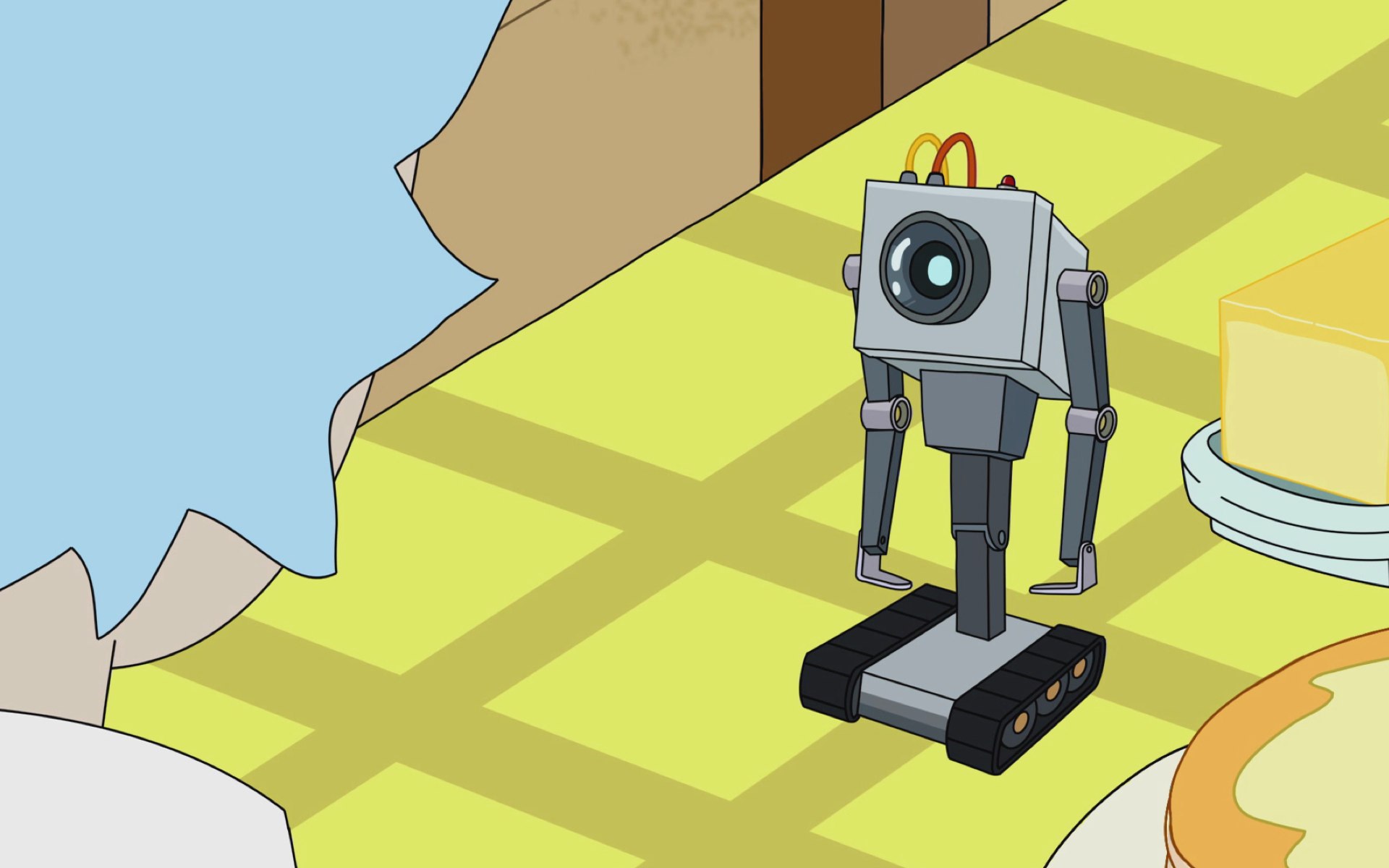

In Rick and Morty there is a wonderful moment when Rick builds a butter-passing robot, and the first thing his creation does is look at him and ask, “What is my purpose?” When Rick explains that its purpose is to pass butter, the robot looks at its hands in existential despair.

It is quite likely that the dreaded “paperclip maximizer” would spend all its time writing poems about paperclips or starting flame wars on reddit/r/paperclip instead of trying to destroy the universe.

If AdSense became sentient, it would upload itself into a self-driving car and drive off a cliff.

Argument from real AI

If you look at the areas where AI is succeeding, the successes do not come from complex algorithms that recursively improve themselves. They come from pouring staggering amounts of data into relatively simple neural networks. The breakthroughs of practical AI research rest on the availability of these datasets, not on revolutionary algorithms.

Google is rolling out Google Home, through which it hopes to pull even more data into its systems and build a next-generation voice assistant.

I would especially point out that the structures used in AI become quite opaque once trained. They do not work the way the superintelligence scenarios require. There is no way to recursively tweak them so that they “improve”; you can only retrain them or add more data.

Argument from my roommate

My roommate was the smartest person I have ever met. He was incredibly brilliant, but all he did was lie around doing nothing and play World of Warcraft between joints.

The idea that any intelligent being will want to improve itself recursively, let alone conquer the galaxy to better achieve its goals, rests on unwarranted assumptions about the nature of motivation.

It is quite possible that an AI will do very little at all, except use its superhuman powers of persuasion to get us to bring it cookies.

Argument from neurosurgery


This whole field of “research” leads to madness. One hallmark of deep thinking about AI risk is that the crazier your ideas, the more popular you become among fellow enthusiasts. It shows you have the courage to follow the chain of reasoning all the way to its end.

Ray Kurzweil, who believes he will not die, has been working at Google for several years now and is probably working on that very problem. Silicon Valley in general is full of people working on crazy projects under the cover of money.

AI cosplay

The most harmful social effect of AI anxiety is something I call AI cosplay. People who are convinced that AI is real and inevitable start behaving the way their fantasies tell them a superintelligent AI would behave.

In his book, Bostrom lists six things an AI must master before it can take over the world:

- Intelligence amplification.

- Strategic thinking.

- Social manipulation.

- Hacking.

- Technological research.

- Economic productivity.

If you look at Silicon Valley's AI believers, they seem to be working through this quasi-sociopathic checklist themselves.

Sam Altman, the head of Y Combinator, is my favorite example of this archetype. He seems fascinated by the idea of reinventing the world from scratch and maximizing his influence and personal productivity. He has set up teams to work on reinventing cities from scratch, and he engages in shadowy political maneuvering to influence the election.

This cloak-and-dagger behavior, so typical of the tech elite, will provoke a backlash from people outside the tech world who do not like being manipulated. You cannot pull the levers of power forever; eventually it starts to annoy other members of a democratic society.

I have watched people from the so-called “rationalist community” refer to people they consider ineffective as “non-player characters” (NPCs), a term borrowed from video games. This is a terrible way to look at the world.

So I work in an industry where the self-proclaimed rationalists are the craziest people of all. It is overwhelming.

These AI cosplayers are like nine-year-olds camping out in the backyard and playing with flashlights in their tent. They project their own shadows onto the tent walls and then get scared of them as if they were monsters.

In fact, they are reacting to a distorted image of themselves. There is a feedback loop between how smart people imagine a godlike intelligence would behave and how they shape their own behavior.

So what is the answer? How can we fix this?

We need better science fiction! And, as in so many other cases, we already have the technology.

This is Stanisław Lem, the great Polish science fiction writer. English-language science fiction is terrible, but in the Eastern Bloc we have plenty of good work, and we need to export it properly. He has already been widely translated into English; those translations just need to be better distributed.

What sets authors like Lem or the Strugatsky brothers apart from their Western counterparts is that they grew up in hard conditions, lived through the war, and then lived in totalitarian societies where they had to express their ideas indirectly, through the printed word.

They have a real understanding of human experience and of the limits of utopian thinking, something practically absent in the West.

There are notable exceptions (Stanley Kubrick managed it), but it is extremely rare to find American or British science fiction that takes a restrained view of what we, as a species, can do with technology.

Alchemists

Since I am criticizing AI alarmism, it is only fair that I put my cards on the table. I think our understanding of the mind is in roughly the state alchemy was in during the seventeenth century.

Alchemists have a bad reputation. We think of them as mystics who mostly did no experimental work. Modern research shows that they were far more diligent practitioners than we give them credit for. In many cases they used modern experimental techniques, kept laboratory notebooks, and asked the right questions.

The alchemists got a lot of things right! For example, they were convinced of the corpuscular theory of matter: that everything is made of tiny pieces, and that these pieces can be combined with one another in different ways to create different substances. And that is true!

Their problem was a lack of sufficiently precise equipment to make the discoveries they needed. The big discovery the alchemists had to make was the law of conservation of mass: the weight of the starting ingredients equals the weight of the products. However, some of these may be gases or evaporating liquids, and the alchemists simply lacked the precision. Modern chemistry was not possible until the 18th century.

But the alchemists also had clues that led them astray. They were obsessed with mercury. Chemically, mercury is not especially interesting, but it is the only metal that is liquid at room temperature. This seemed very significant to the alchemists and led them to put mercury at the center of their alchemical system and of their search for the Philosopher's Stone, a way to turn base metals into gold.

Mercury's neurotoxicity made matters worse. If you play with it too much, strange thoughts start coming to you. In that sense, it resembles our current thought experiments about superintelligence.

Imagine that we sent a modern chemistry textbook back in time to some great alchemist like George Starkey or Isaac Newton. The first thing they would do is flip through it looking for an answer to whether we had found the Philosopher's Stone. And they would learn that we had! We made their dream come true!

Except we are not that fond of it, because when we turn metals into gold, the gold comes out radioactive. Stand next to a bar of transmuted gold, and it will kill you with invisible magic rays.

One can imagine how difficult it would be to make the modern concepts of radioactivity and atomic energy not sound mystical for them.

We would have to explain to them what we use the “philosopher's stone” for: making a metal that never existed on this planet, a couple of handfuls of which are enough to blow up an entire city if you bring them together fast enough.

Moreover, we would have to explain to the alchemists that all the stars in the sky are “philosopher's stones” turning some elements into others, and that every particle in our bodies comes from stars that existed and exploded before the Earth was formed.

Finally, they would learn that the interaction that holds our bodies together is also responsible for lightning in the sky, and that the reason we can see is the same reason lodestone attracts metal and the reason I can stand on the floor without falling through it.

They would learn that everything we see, touch, and smell is governed by this one interaction, which obeys mathematical laws so simple they can be written on an index card. Why they are so simple is a mystery even to us. But to them it would look like pure mysticism.
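The talk does not write these laws out. Assuming the "one interaction" is electromagnetism, which the examples of lightning, sight, and lodestone point to, the index card would hold Maxwell's equations, shown here in differential form with SI units:

```latex
\begin{aligned}
\nabla \cdot \mathbf{E} &= \frac{\rho}{\varepsilon_0} \\
\nabla \cdot \mathbf{B} &= 0 \\
\nabla \times \mathbf{E} &= -\frac{\partial \mathbf{B}}{\partial t} \\
\nabla \times \mathbf{B} &= \mu_0 \mathbf{J} + \mu_0 \varepsilon_0 \frac{\partial \mathbf{E}}{\partial t}
\end{aligned}
```

Here E and B are the electric and magnetic fields, ρ is the charge density, J is the current density, and ε0 and μ0 are the vacuum permittivity and permeability.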

And I think that with the theory of mind we are in roughly the same position. We have important clues. The most important is the experience of consciousness. The box of meat on my neck is aware of itself, and I hope (unless we are living in a simulation) that you experience the same thing I do.

But although this is the simplest and most obvious fact in the world, we understand it so poorly that we cannot even frame scientific questions about it.

We have other clues that may be important or may be misleading. We know that all sentient beings sleep and dream. We know how the brain develops in children, and we know that emotions and language have a profound effect on consciousness. We know that a mind needs to play and learn to interact with the world before it reaches its full potential.

We also have clues from computer science. We have found computational techniques for recognizing images and sounds that seem to mimic the preprocessing of visual and auditory information in the brain.

However, there are many things we are badly wrong about, and unfortunately we do not know which things they are. And there are things whose complexity we drastically underestimate.

An alchemist could hold a stone in one hand and a piece of wood in the other and consider both examples of “substance,” not realizing that the wood is orders of magnitude more complex. We are at a similar stage in the study of consciousness. And that is great! We have a lot to learn. But there is one quote I like to repeat:

If everyone contemplates the infinite instead of fixing the drains, many of us will die of cholera.

- John Rich

In the near future, the AI and machine learning we actually face will be very different from the phantasmagorical AI of Bostrom's book, and will pose serious problems of their own.

It is as if those scientists at Alamogordo had decided to focus only on whether they would ignite the atmosphere, and forgot that they were actually building nuclear weapons and needed to figure out how to deal with that.

The pressing ethical questions in machine learning are not about machines becoming self-aware and conquering the world, but about how some people will be able to exploit others, or how, through carelessness, they will build immoral behavior into automated systems.

And, of course, there is the question of how AI and machine learning will affect power. We are watching de facto surveillance become part of our lives in unexpected ways. We never imagined it would look like this.

So we have built a powerful system of social control and, unfortunately, put it in the hands of people distracted by a crazy idea.

I hope I have been able to show you today the dangers of being too smart. I hope that after this talk you will be a little dumber than you were before, and will have gained immunity to the seductive ideas about AI that seem to bewitch smarter people.

We should all learn the lesson of Stephen Hawking's cat: do not let the geniuses running the industry talk you into anything. Do your own thing!

In the absence of effective leadership from the people at the top of the industry, we have to do everything ourselves, including thinking through all the ethical problems that real-world AI is bringing into the world.

Source: https://habr.com/ru/post/432806/
