Player misconceptions in assessing risk. Controlling the random number generator in development

By its nature, the human brain is very poor at estimating the likelihood of random events from a numerical estimate, and fairly good at it from qualitative ones. This is because a person mentally converts numerical probabilities into qualitative estimates, and does so very subjectively:

The article will address the following questions:

Over the course of evolution, the human brain became very good at working with qualitative assessments of events and with images from its own experience. Numbers, and abstract numerical estimates of the probability of "random" events, were invented relatively recently [1]. Even so, people still convert these numbers into qualitative notions: "a tiny bit", "a little", "a lot", "dangerous", "safe", "enough". Such assessments always depend on the context and on one's subjective attitude towards it, and this is the main source of erroneous numerical assumptions.

Let us take the turn-based game Disciples 2 [2] as an example. This part of the article does not examine the quality of random number generation in this particular game; generation itself is covered in enough detail in the second part of the article.

Disciples 2: a typical miss [2]

A standard melee attack has an 80% chance to hit. The player usually perceives this as "almost always", and the risk of a miss as "occasionally, now and then". The chance of missing twice in a row over two attacks is 0.2 × 0.2 = 4%, which the player perceives as "almost never / this will happen very rarely". And when it does happen, players are indignant about the "broken random" [3], [4].

But when two misses in a row are judged to be a 4% risk, the player's quick estimate rests on several erroneous assumptions:

1. 4% is not "almost never". It is an event that happens, on average, once in every 25 independent trials.

2. The estimate is made over the wrong interval. The unit of measurement for 80% attacks should not be a pair of attacks, but all attacks of the same class (~80%) that stay within the player's "active" memory. Usually that means a long gaming session or even the whole playthrough; it depends on the type of game, the player's memory and the preferences of whoever is counting.

Since I have no real usage statistics for this game, I will assume that a long session of Disciples 2 lasts 3 hours and that about 250 attacks with ~80% accuracy are made during it. Enemy misses should be ignored: they do not anger the player, who rarely remembers them.

So the event to evaluate is: "Over all 250 attacks, two misses in a row never happen."

To calculate the probability of this event, I used the recurrence P1(n) = 0.8 * P1(n-1) + 0.16 * P1(n-2). I derived this formula with help from the community; the quoted calculation, with its details and result, is given below.

Rather than solving the recurrence relation analytically, I wrote a small JavaScript snippet for the browser console and computed the result numerically.

The result is 0.0001666846271670716, or about 0.0167%.

This agrees, to about ten significant digits, with the answer of another author, Kojiec9: 0.000166684627177505 [6]. He used his own method based on a base-5 numeral system and computed the result with his own formula in Pascal. The small difference is apparently due to floating-point rounding.

So over 3 hours of Disciples 2, the chance that a player with 80% accuracy avoids a double miss is just 0.0167%. That really is very unlikely; correspondingly, the probability of at least one double miss is 99.9833%.

Probability of at least one double miss as the number of trials grows
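The recurrence from the text extends to any hit chance h: since P2(n) = (1 - h) * P1(n-1) and P1(n) = h * P(n-1), the probability of no double miss satisfies P(n) = h * P(n-1) + h * (1 - h) * P(n-2), which for h = 0.8 is exactly 0.8 * P(n-1) + 0.16 * P(n-2). The JavaScript below is a minimal sketch assuming independent attacks; the function names and the mulberry32 generator (a small, widely used seeded PRNG, chosen only so the Monte Carlo check is reproducible) are our own, not the author's code.

```javascript
// mulberry32: small seeded PRNG returning floats in [0, 1).
// Our choice for reproducibility; not the generator of any particular game.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Exact: P(n) = hit * P(n-1) + hit * (1 - hit) * P(n-2), with P(0) = P(1) = 1.
// For hit = 0.8 this is 0.8 * P(n-1) + 0.16 * P(n-2), the recurrence from the text.
function noDoubleMissExact(n, hit) {
  let prev = 1; // P(i-2)
  let curr = 1; // P(i-1)
  for (let i = 2; i <= n; i++) {
    const next = hit * curr + hit * (1 - hit) * prev;
    prev = curr;
    curr = next;
  }
  return curr;
}

// Monte Carlo: simulate `sessions` runs of n attacks, count runs with no double miss.
function noDoubleMissSimulated(n, hit, sessions, seed) {
  const rand = mulberry32(seed);
  let clean = 0;
  for (let s = 0; s < sessions; s++) {
    let previousMissed = false;
    let doubleMiss = false;
    for (let i = 0; i < n && !doubleMiss; i++) {
      const missed = rand() >= hit; // miss with probability 1 - hit
      doubleMiss = previousMissed && missed;
      previousMissed = missed;
    }
    if (!doubleMiss) clean++;
  }
  return clean / sessions;
}

// Chance of at least one double miss as the session gets longer.
for (const n of [2, 10, 50, 100, 250]) {
  console.log(n + " attacks: " + (1 - noDoubleMissExact(n, 0.8)).toFixed(6));
}
// Exact vs simulated for a short 10-attack session.
console.log(noDoubleMissExact(10, 0.8), noDoubleMissSimulated(10, 0.8, 100000, 42));
```

The exact function reproduces the 250-attack result within floating-point rounding. The simulation uses a short 10-attack session because a probability as small as 0.0167% cannot be estimated reliably from 100,000 simulated sessions.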

Incidentally, there are entire studies and books on the imperfections and distortions of human memory: "Illusions of the Brain: Cognitive Distortions Caused by Information Overload" [7]; "Don't Trust Your Brain" [8]. If you are interested in the topic, I recommend starting with these articles.

3. The third erroneous assumption is expecting dependence between mutually independent trials.

That is, after the first miss the player thinks:

"So the character missed with 80% accuracy. Now it will definitely hit, because the risk of a double miss is only 4%."

Not so. After the first miss, the risk of another miss is still 20%: the previous attack has already happened and does not affect the next one. Moreover, before the first attack the probability of a double miss was 4%, but once the first miss has happened it becomes 20%; so relative to the player's expectations, the risk actually goes up.
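A quick way to convince yourself is to simulate it. The sketch below, assuming independent attacks with a fixed 80% hit chance, counts how often an attack misses right after a miss; the function name and the mulberry32 generator are illustrative choices, not part of any game.

```javascript
// mulberry32: small seeded PRNG (illustrative choice) returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Share of misses among attacks made immediately after a miss.
function missAfterMissRate(attacks, hit, seed) {
  const rand = mulberry32(seed);
  let previousMissed = false;
  let attacksAfterMiss = 0; // attacks made right after a miss
  let missesAfterMiss = 0; // of those, how many also missed
  for (let i = 0; i < attacks; i++) {
    const missed = rand() >= hit;
    if (previousMissed) {
      attacksAfterMiss++;
      if (missed) missesAfterMiss++;
    }
    previousMissed = missed;
  }
  return missesAfterMiss / attacksAfterMiss;
}

// One million independent attacks at 80% accuracy.
console.log(missAfterMissRate(1000000, 0.8, 7));
```

The rate comes out close to 0.2, not 0.04: once the first miss has happened, only the second roll is still uncertain.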

This quirk of the human brain is especially attractive to casinos and similar businesses for manipulating gamblers (for more on how gaming machines work, see a manufacturer's account [9]).

More interesting examples of the brain's misconceptions can be found in Ian Schreiber's game balance course, in the lesson "Level 5: Probability and Randomness Gone Horribly Wrong" [10].

In games, the term "Random Number Generator" (RNG) almost always means a "Pseudo-Random Number Generator" [11]. The main property of such a generator is reproducibility: given the same initial value (the seed), it always produces the same sequence of random (pseudo-random) numbers. In some games this shows up as an unchanged sequence of hits and misses after reloading; in others the sequence changes.

The difference lies in how the generator is used and which design goals the developer pursues.
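Reproducibility is easy to demonstrate with any seeded generator. In this sketch the mulberry32 PRNG stands in for whatever generator a game actually uses (an assumption), and `rollHits` is a hypothetical helper that turns its output into hits and misses:

```javascript
// mulberry32: small seeded PRNG standing in for a game's generator (assumption).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical helper: turn the generator's output into a list of hits and misses.
function rollHits(seed, count, hitChance) {
  const rand = mulberry32(seed);
  const results = [];
  for (let i = 0; i < count; i++) {
    results.push(rand() < hitChance ? "hit" : "miss");
  }
  return results;
}

const firstRun = rollHits(12345, 10, 0.8);
const afterReload = rollHits(12345, 10, 0.8); // same seed restored after a reload
console.log(firstRun.join(" "));
console.log(afterReload.join(" ")); // prints the same line as above
```

Restoring the same seed on reload gives the behaviour of approach 1 below; seeding from the system time (for example `Date.now()`) on every load instead yields a different sequence each time, as in approach 2.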

To better understand how a pseudo-random number generator works, consider how it was implemented in early classic games, when computer (and console) resources were especially limited. (For an in-depth description of these examples and their details, see the article "How classic games make smart use of random number generation" [12].)

How classic games make smart use of random number generation [12]

Final Fantasy I used several tables of predefined fixed numbers, with 256 values in each table:

Final Fantasy I uses the index of the current value in the table as the seed. That is, knowing the position from which the next random numbers will be taken, you can predict exactly which number will come out even 1000 checks later. In more complex pseudo-random algorithms the seed is not as easy to exploit, but the basic principle and effect are the same.
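A simplified model of such a table-driven generator: the whole state is one index into a 256-entry table, so knowing the index predicts every future value. The table contents here are a hypothetical permutation of 0 to 255, not Final Fantasy I's real data, and `makeTableRng`/`predict` are illustrative names:

```javascript
// Simplified table-driven generator in the style described for Final Fantasy I.
// HYPOTHETICAL table: multiplying by an odd number modulo 256 is a permutation,
// so this is some fixed shuffle of 0..255, not the game's actual values.
const TABLE = Array.from({ length: 256 }, (_, i) => (i * 167 + 13) & 0xff);

function makeTableRng(table, startIndex) {
  let index = startIndex & 0xff; // the index is the entire state, i.e. the seed
  return {
    next() {
      index = (index + 1) & 0xff; // advance by one, wrapping after 256 values
      return table[index];
    },
    get index() {
      return index;
    },
  };
}

// Whoever knows the current index can predict the value `callsAhead` calls later.
function predict(table, index, callsAhead) {
  return table[(index + callsAhead) & 0xff];
}

const rng = makeTableRng(TABLE, 40);
const predicted = predict(TABLE, rng.index, 1000);
let value;
for (let i = 0; i < 1000; i++) value = rng.next();
console.log(predicted === value); // true
```

The battle table described in the article also advanced every 2 frames while the player was idle; calling `next()` from the frame loop as well as on every roll would model that.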

Today, pseudo-random numbers are mostly generated with the standard library of whatever programming language is used, and the current system time is most often the source of entropy. For the needs of the game industry the properties of standard libraries are usually sufficient. Players very rarely exploit weaknesses of the generator or of the entropy source, because calculating and manipulating them is hard. That usually remains the domain of speedrunners (for example, hacking the RNG logic of Pokemon Colosseum [13]), so developers are usually wise to ignore such details.

1. The seed is fixed at the start of a mission or campaign.

Implications for the player: reloading will not change the fact that a character (let's call him Crazy Fidel) misses, even if his chance to hit is 99%. However, before attacking, the player can perform some other action that consumes a random number, for example, move another character, Bald Mick. The unlucky random number is then used up by Bald Mick, and the next number in the sequence goes to Crazy Fidel, who will then probably hit.

How a player can abuse it: if an attack with 50% accuracy misses, the player can reload and try an attack with slightly higher accuracy (say 55%), repeating until it hits. After a hit, save and repeat the trick with the next rolls.
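Both effects follow from a single shared stream restored from a fixed seed. A minimal sketch, assuming rolls from 0 to 99 and a hit when the roll is below the accuracy; `playTurn` and the action format are hypothetical, and the character names are the ones from the text:

```javascript
// mulberry32: small seeded PRNG (illustrative choice) returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical turn resolver: every action draws the next number from one stream
// seeded at mission start, so reloading replays exactly the same numbers.
function playTurn(missionSeed, actions) {
  const rand = mulberry32(missionSeed);
  return actions.map(({ who, accuracy }) => {
    const roll = Math.floor(rand() * 100); // assumed roll: integer 0..99
    return { who, roll, hit: roll < accuracy }; // assumed rule: hit if roll < accuracy
  });
}

const SEED = 2024;
// Fidel acts first and gets the first number of the stream.
const fidelFirst = playTurn(SEED, [{ who: "Crazy Fidel", accuracy: 99 }]);
// Mick acts first, uses up that number, and Fidel gets the next one.
const mickFirst = playTurn(SEED, [
  { who: "Bald Mick", accuracy: 70 },
  { who: "Crazy Fidel", accuracy: 99 },
]);
console.log(fidelFirst, mickFirst);
```

Because the first roll is the same after every reload, any attack whose accuracy exceeds that roll will hit, which is exactly the reload-and-raise-accuracy trick described above.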

Positive implications for the developer:

1) The game can be replayed step by step if you store only the initial state, the seed and the sequence of actions. This makes replays possible and allows very compact save files, which greatly saves disk space and memory.

2) In-game gambling is protected: save/load cannot be used to bankrupt every NPC opponent the player meets.
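The replay idea can be sketched as a deterministic simulation that takes only a seed and a command list. The rules below (an attack deals 1 to 6 damage, a heal restores 3, the enemy answers with 0 to 3 damage) are hypothetical, and mulberry32 is an assumed generator; this is not how any particular game, Brogue included, is implemented:

```javascript
// mulberry32: small seeded PRNG (illustrative choice) returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical rules: "attack" deals 1-6 damage, "heal" restores 3 HP,
// and after every command the enemy hits back for 0-3 damage.
function simulate(seed, commands) {
  const rand = mulberry32(seed);
  const state = { enemyHp: 30, playerHp: 20, log: [] };
  for (const cmd of commands) {
    if (cmd === "attack") {
      const damage = 1 + Math.floor(rand() * 6);
      state.enemyHp -= damage;
      state.log.push("hit for " + damage);
    } else if (cmd === "heal") {
      state.playerHp += 3;
      state.log.push("healed 3");
    }
    state.playerHp -= Math.floor(rand() * 4); // enemy retaliation, same stream
  }
  return state;
}

// The save file holds only the seed and the command list, never the state.
const save = { seed: 99, commands: ["attack", "attack", "heal", "attack"] };
const live = simulate(save.seed, save.commands);
const replayed = simulate(save.seed, save.commands); // "loading" means replaying
console.log(JSON.stringify(save));
console.log(JSON.stringify(live) === JSON.stringify(replayed)); // true
```

Replaying a prefix of the command list, for example `save.commands.slice(0, 2)`, reconstructs the state after that step, which is what makes step-by-step replays possible.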

Example:

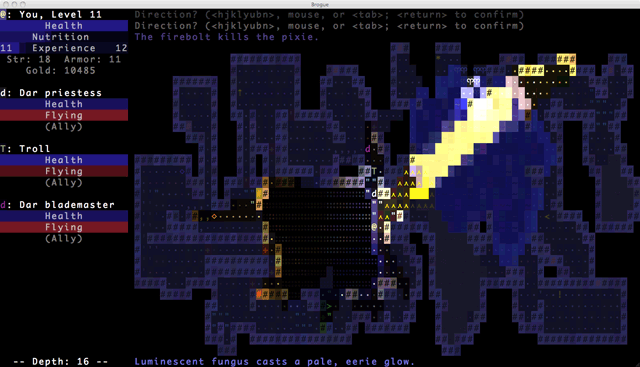

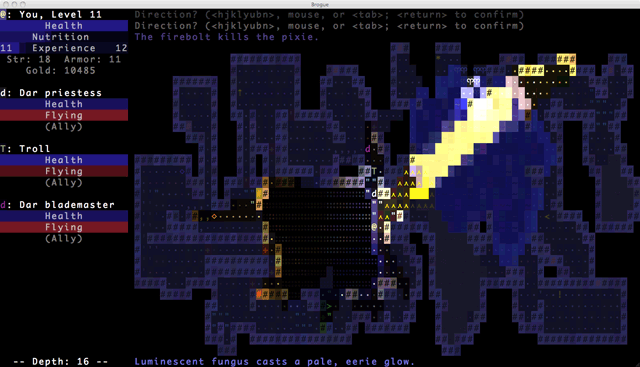

The roguelike Brogue [14] uses this method for everything from generating the game world to resolving every player action. As a result, the save file stores only the seed and the sequence of game commands. An additional bonus is that you can start a game with a chosen seed after picking the most interesting world from the tables of generated Brogue worlds [15].

Brogue roguelike: official site [14]

2. The seed is not fixed, or is renewed after every reload.

Consequences for the player: any reload re-rolls every hit check.

How a player can abuse this: very simply; a few reloads, and even the most unlikely hit scenario can come true.

Positive implications for the developer:

1) Players perceive such a game as more honest, with "real" chance, simply because they do not know its internal mechanics.

2) Players get an unofficial easy mode that can greatly ease difficult sections if they want it.

3) The developer can close this loophole in various ways: a single auto-save slot (i.e., the save is overwritten and death is permanent) or a ban on saving during a mission (in various forms). The most sensitive parts (gambling mini-games) can be computed from a separate, unchanging seed, although this is technically much harder.
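One possible way to give a mini-game its own unchanging seed, sketched under our own assumptions: combat uses a stream seeded anew on every load, while the gambling stream is rebuilt from a seed stored in the save and fast-forwarded by the number of draws already made. `loadGame`, `gambleSeed` and `gambleDraws` are hypothetical names:

```javascript
// mulberry32: small seeded PRNG (illustrative choice) returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical loader: combat gets a fresh seed on every load, while the
// gambling stream is rebuilt from the seed stored in the save and
// fast-forwarded past the draws already made.
function loadGame(save, loadSeed) {
  const combatRand = mulberry32(loadSeed); // e.g. Date.now() in a real game
  const gambleRand = mulberry32(save.gambleSeed);
  for (let i = 0; i < save.gambleDraws; i++) gambleRand();
  return { combatRand, gambleRand };
}

const save = { gambleSeed: 777, gambleDraws: 5 };
const load1 = loadGame(save, 1);
const load2 = loadGame(save, 2);
// Reloading re-seeds combat but leaves the outcome of the next bet unchanged.
console.log(load1.gambleRand() === load2.gambleRand()); // true
```

For this to work, the game must also increment `gambleDraws` in the save whenever a bet is resolved; keeping that bookkeeping consistent is part of why the approach is harder in practice.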

Example:

The Battle for Wesnoth [16] uses a non-fixed seed with deliberately fair randomness. Fairness here means that quite unlikely streaks of misses sometimes happen and the engine does not correct them. The result is periodic angry posts from annoyed players addressed to the developers.

Reddit: an incredibly evasive mermaid in Wesnoth [17]

Before an attack, the game also shows detailed probabilities for each possible outcome: damage dealt, damage taken and the chance that one of the combatants dies. Displaying these probabilities only makes the "unlucky" angrier: after being assured of good odds, it is hard to accept an outcome that was rated 1 in 1000.
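Figures such as "1 in 1000" come from multiplying independent strike probabilities. The sketch below builds the distribution of the number of hits for one attacker step by step (a binomial distribution). It is a simplification under our own assumptions: a real forecast such as Wesnoth's also accounts for both combatants, hit points and strike order, and `hitDistribution` is a hypothetical name:

```javascript
// Hypothetical helper: distribution of the number of hits when one unit makes
// `strikes` independent strikes, each hitting with probability `chance`.
function hitDistribution(strikes, chance) {
  let dist = [1]; // dist[k] = probability of exactly k hits so far
  for (let s = 0; s < strikes; s++) {
    const next = new Array(dist.length + 1).fill(0);
    for (let k = 0; k < dist.length; k++) {
      next[k] += dist[k] * (1 - chance); // this strike misses
      next[k + 1] += dist[k] * chance; // this strike hits
    }
    dist = next;
  }
  return dist;
}

// Example: three strikes at 90%. Missing all three is 0.1 ** 3, i.e. 1 in 1000.
const d = hitDistribution(3, 0.9);
d.forEach((p, k) => console.log(k + " hits: " + (p * 100).toFixed(1) + "%"));
```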

3. The seed is not fixed, and the results themselves are additionally manipulated.

By manipulation I mean dynamic adjustments that strengthen the feeling of "correct" (fair) randomness at the cost of losing genuine pseudo-randomness in the resulting numbers.

Implications for the player: as with a non-fixed seed, reloading re-rolls the results.

How a player can abuse this: save-scumming lets the player pick an advantageous combination of attacks, although how well this works depends heavily on the implementation and on the developer's protective mechanisms.

Positive consequences for the developer: the developer can control how rare undesirable outcomes are, create the appearance of "fair random", and raise or lower the game's difficulty. If, when calculating hit chances, the developer stores and separately tracks the successful rolls for each team, save-scumming can be greatly reduced, because the player receives roughly the average share of damage either way.

Examples:

Developer Carsten Germer uses controlled randomness for rare events, among others [18]. For example, to guarantee that a particularly rare bonus with a 1 in 10,000 chance eventually drops, he raises the odds after each "miss": 1 in 9,900, then 1 in 9,800, 1 in 9,700 and so on, until the event occurs. And to guarantee that rare events do not occur too often, he added an extra variable that completely blocks triggering for the next 10 checks after the last trigger.
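The scheme can be sketched as a small stateful checker. This is our reading of the description, not Carsten Germer's code: the odds start at 1 in 10,000, improve by 100 after each failed check (clamped at 1 in 1, so a drop is eventually guaranteed), and a successful drop blocks further drops for the next 10 checks. `makeRareDrop` and its options are hypothetical, and `rand` is injectable so the behaviour can be tested:

```javascript
// Hypothetical implementation of the scheme as described in the text:
// odds start at 1 in baseOdds, improve by `step` after each failed check
// (never better than 1 in 1), and a drop blocks further drops for `lockout` checks.
function makeRareDrop({ baseOdds = 10000, step = 100, lockout = 10, rand = Math.random } = {}) {
  let misses = 0; // failed checks since the last drop
  let blockedFor = 0; // checks left during which a drop is impossible
  return function check() {
    if (blockedFor > 0) {
      blockedFor--;
      return false;
    }
    const odds = Math.max(1, baseOdds - step * misses); // "1 in odds"
    if (rand() < 1 / odds) {
      misses = 0;
      blockedFor = lockout;
      return true;
    }
    misses++;
    return false;
  };
}

// Worst case: a generator that never rolls low enough.
const alwaysHigh = makeRareDrop({ rand: () => 0.999999 });
let checks = 1;
while (!alwaysHigh()) checks++;
console.log("drop guaranteed by check", checks); // 101
```

With these parameters the odds reach 1 in 100 after 99 failures and 1 in 1 after 100, so even an unlucky player gets the drop on the 101st check at the latest.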

In my roguelike Grue the Monster [19], I also manipulated randomness. When hunting victims, the player's character usually has to lurk out of sight, waiting for a victim to step backwards into its clutches. Normally the chance of that is 1/6 in open space (1/2 in corridors and 1/1 in dead ends), but to soften particularly unlucky situations, before each check of the victim's movement direction there was a 15% chance that the victim was guaranteed to walk towards Grue.

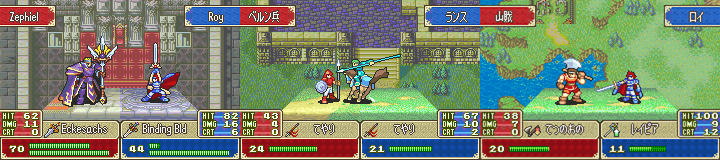

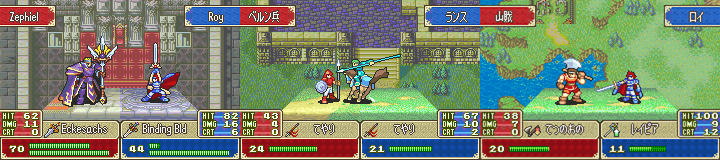
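The Grue adjustment amounts to mixing a guaranteed outcome into the ordinary check, which raises the effective chance to 0.15 + 0.85 × p. A sketch under our assumptions (the base chances and the 15% come from the text; the function names and the injectable `rand` are illustrative):

```javascript
// Base chance that a victim steps back toward Grue, by terrain (from the text).
const BASE_CHANCE = { open: 1 / 6, corridor: 1 / 2, deadEnd: 1 };
const FORCED_STEP = 0.15; // share of checks where the step toward Grue is guaranteed

// Hypothetical check: first the 15% override, then the ordinary random check.
function victimStepsTowardGrue(terrain, rand = Math.random) {
  if (rand() < FORCED_STEP) return true;
  return rand() < BASE_CHANCE[terrain];
}

// Resulting chance per check: 0.15 + 0.85 * base.
function effectiveChance(terrain) {
  return FORCED_STEP + (1 - FORCED_STEP) * BASE_CHANCE[terrain];
}

for (const terrain of Object.keys(BASE_CHANCE)) {
  console.log(terrain, effectiveChance(terrain).toFixed(3));
}
```

In open space this raises the chance from about 16.7% to about 29.2%, and in corridors from 50% to 57.5%.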

The most interesting case is Fire Emblem: The Binding Blade [20], which implemented a hidden hit-resolution mechanic [21]. As is traditional for the series, the game shows the hit chance as a percentage from 0 to 100%. In earlier games of the series, a hit was decided by a single random number from 1 to 100: if the rolled number (for example, 61) was less than or equal to the hit chance (for example, 75), the attack hit; if greater, it missed.

Fire Emblem: The Binding Blade [20]

This installment introduced a more forgiving system: instead of one random number, the average of two random numbers is taken and compared with the hit chance. The average of two random numbers tends toward 50, which distorts the linear relationship: units with a displayed hit chance above 50% hit more often than shown, and those below 50% hit much less often than shown. And since the great majority of the player's characters have high accuracy while most enemies have low accuracy, the player gets a very significant hidden advantage [21]. The graph below shows this effect: the blue line is the hit frequency in the old system and the red line the hit frequency in the new one, depending on the attacker's displayed accuracy. For example, a displayed hit chance of 90% is actually 98.1%, 80% is actually 92.2%, and 10% is only 1.9%!

Distortion of the hit chance. Y axis: actual probability; X axis: probability shown to the player.
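The effect of averaging two rolls can be checked by enumerating every pair of rolls. Assumptions not stated in the text: rolls are equally likely integers from 0 to 99, and an attack hits when the roll (or the average of the two rolls) is strictly below the displayed hit chance; with one roll this is equivalent to the 1-to-100, less-than-or-equal rule described above. Function names are illustrative:

```javascript
// One roll: integer 0..99, hit if roll < shown (same odds as 1..100 with <=).
function oneRollHitChance(shown) {
  let hits = 0;
  for (let a = 0; a < 100; a++) if (a < shown) hits++;
  return hits / 100;
}

// Two rolls: hit if the average of two independent 0..99 rolls is below shown.
// All 10,000 equally likely pairs are enumerated, so the result is exact.
function twoRollHitChance(shown) {
  let hits = 0;
  for (let a = 0; a < 100; a++) {
    for (let b = 0; b < 100; b++) {
      if ((a + b) / 2 < shown) hits++;
    }
  }
  return hits / 10000;
}

for (const shown of [10, 50, 80, 90]) {
  console.log(shown + "% shown:", oneRollHitChance(shown), twoRollHitChance(shown));
}
```

Under these assumptions the 90% and 80% cases give 98.1% and 92.2%, matching the figures above, while the 10% case gives 2.1% (210 of the 10,000 pairs); the exact low-end value depends on the game's actual rolling convention.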

These are certainly not the only examples of manipulating random numbers and balance, but such examples are very hard to find, so help from the community would be especially valuable here.

I should note that I do not consider procedural generation as such to be manipulated chance. Example: a roguelike generates a random level with a random set of enemies, traps, walls and dead ends. If it produces a level that cannot be completed, that is a sign of sloppy development, insufficiently thought-out design or weak testing. The developer must procedurally check for at least the basic problems of random generation:

Such interference with random results is not manipulation but simply a matter of minimally competent development. These checks are needed whatever method of generating pseudo-random numbers is used.
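A typical basic check of this kind is verifying that the exit is reachable at all, and regenerating the level if it is not (rejection sampling). This is a sketch under our own assumptions: the grid format ('#' wall, '.' floor, 'S' start, 'E' exit, rows of equal length) is hypothetical, and `generateLevel` stands for whatever generator the game uses:

```javascript
// Breadth-first search from 'S': is 'E' reachable through non-wall cells?
// Assumed format: array of equal-length strings, '#' = wall.
function isExitReachable(grid) {
  const rows = grid.length;
  const cols = grid[0].length;
  let start = null;
  for (let r = 0; r < rows; r++) {
    const c = grid[r].indexOf("S");
    if (c !== -1) start = [r, c];
  }
  if (!start) return false;
  const seen = new Set([start.join(",")]);
  const queue = [start];
  while (queue.length > 0) {
    const [r, c] = queue.shift();
    if (grid[r][c] === "E") return true;
    for (const [dr, dc] of [[1, 0], [-1, 0], [0, 1], [0, -1]]) {
      const nr = r + dr;
      const nc = c + dc;
      if (nr < 0 || nc < 0 || nr >= rows || nc >= cols) continue;
      const key = nr + "," + nc;
      if (grid[nr][nc] === "#" || seen.has(key)) continue;
      seen.add(key);
      queue.push([nr, nc]);
    }
  }
  return false;
}

// Regenerate until the level passes the check (generateLevel is hypothetical).
function generateValidLevel(generateLevel, maxAttempts = 100) {
  for (let i = 0; i < maxAttempts; i++) {
    const candidate = generateLevel();
    if (isExitReachable(candidate)) return candidate;
  }
  throw new Error("no valid level generated");
}

console.log(isExitReachable(["S.#", "#.#", "#.E"])); // true
```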

4. Remove chance from the game mechanics altogether.

That is, every attack always hits (a 100% chance) for fixed damage, and additional effects trigger by fixed rules. Randomness is used only for cosmetic purposes: occasional idle animations of waiting characters, scattering damage numbers, collision and explosion effects. For these it makes little difference how the random numbers are generated or how uniform their distribution is.

Even so, the first three approaches can still be used in AI calculations, where enemy characters may choose attack targets somewhat randomly or move as a group in random order. This is less noticeable to the player and produces far fewer annoying situations.

Consequences for the player: reloading either does not affect the results at all or has little effect.

How a player can abuse this: the player can work out, or look up online, a stable dominant strategy and use only that. Note that for some groups of players this is the main appeal of the game.

Positive consequences for the developer: such a game is much easier to balance. On the downside, any dominant strategies that emerge are stable, so bad luck can never cause the player's defeat; but surprises disappear, which leads to boredom and to the game being uninstalled. Skilled players will almost always beat weaker ones; depending on the type of game, this can be an advantage or a disadvantage.

Example:

Any chess game with classic rules.

Puzzle roguelikes also belong here. For example, this approach is implemented very well in Desktop Dungeons Alpha [22].

Desktop Dungeons Alpha [22]

Here the results of a sequence of attacks are always the same and can be calculated in advance. However, thanks to random (procedural) dungeon generation and the fog of war, the game gains the replay value of the best roguelikes.

Thus, the article has covered two sub-topics of randomness in games:

- an 80% chance to hit in a game: well, that is practically a guaranteed hit;

- an 80% chance that your friend will ever pay back a loan: no, no, no, that won't do, the risk is far too high;

- a 5% chance that an enemy NPC lands a critical hit: unlikely, the risk can be ignored;

- a 1% risk of being hit by a falling icicle when walking under a roof hung with dripping, metre-long icicles: that is another matter, better to walk on the other side of the street;

- a 51% chance of winning a gambling mini-game in an RPG: I can count on winning a little after 20 bets, or at least breaking even... After 20 bets... how did I manage to lose half of all my gold? The random number generator is clearly broken!

- erroneous assumptions in estimating probabilities;

- specific examples of player misconceptions and the actual probabilities of "rare" events;

- the random number generator (usually pseudo-random);

- early simple pseudo-random number generators, using Final Fantasy I as an example;

- approaches to implementing random events with and without reproducibility;

- examples of successful implementations of different approaches, and the manipulations in Fire Emblem.

I. Erroneous assumptions in estimating probabilities

Quote from user Serbbit's solution to the problem [6]:

You can try induction. Let us introduce some notation. Denote by P(n) the probability of keeping the paw after the n-th outing, and represent it as the sum of two probabilities:

P(n) = P1(n) + P2(n), where P1(n) is the probability that the last, n-th outing was successful, and P2(n) that it was unsuccessful.

It may seem that P1 and P2 are proportional to 0.8 and 0.2, but this is not the case, because we are not considering all possible outcomes, only those in which the raccoon kept its paw.

Now let's derive the recurrence:

P1(n) = 0.8 * (P1(n-1) + P2(n-1))

P2(n) = 0.2 * P1(n-1)

Substituting P2 into the formula for P1, we get

P1(n) = 0.8 * P1(n-1) + 0.16 * P1(n-2)

What remains is to solve the recurrence relation [5].

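```javascript
// Probability of no two misses in a row over n attacks at 80% accuracy.
// The total probability P(n) = P1(n) + P2(n) obeys the same recurrence as P1,
// so starting from P1[0] = P1[1] = 1 the array holds that total probability.
var P1 = [];
P1[0] = 1;
P1[1] = 1;
for (var n = 2; n <= 250; n++) {
  P1[n] = 0.8 * P1[n - 1] + 0.16 * P1[n - 2];
  console.log(n + ') ' + P1[n]);
}
// Last line printed (as reported): 250) 0.0001666846271670716
```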

II. Random number generator

- for random encounters, the algorithm moved through the table with every step on the world map, advancing the index by 1 each time and so gradually cycling through all the values; both whether an encounter occurred and which group of enemies appeared depended on it;

- battle results used a similar table of 256 fixed values, but the index advanced not only each time a pseudo-random number was used but also every 2 frames. That is, even while the game was idle, the algorithm kept scrolling through the table every 2 frames, reducing the risk of predictable identical sequences. The source of entropy here was the uncertainty of how long the player would take to choose the next command.

III. Different approaches to using the generator

1. Grain is fixed at the time of the start of the mission or game campaign.

Implications for the player : restarting the game will not change the fact of a miss by the character (let's call him crazy Fidel ), even if the probability of his getting is 99%. However, before trying to get a player can perform any other action that uses a random number, for example, is like another character - bald Mick . As a result of this, an unlucky random number will be used by a bald Mick, and the next random number in the sequence will be used on the crazy Fidel - then he will probably fall.

As a player can abuse it : if a throw with an accuracy of 50% leads to a miss, then you can reboot and try an attack with an accuracy slightly higher (55%) until it hits. After hitting, save and repeat it with other throws.

Positive implications for the developer :

1) The game can be played step by step, if you store only the initial state, the grain and the sequence of actions. Thanks to this, it is possible to show repetitions and even very compactly store save files. The method allows you to greatly save disk space / memory.

2) Protection of internal gambling so that saving / loading does not allow the player to bankrupt all encountered NPC opponents.

Example :

The game roguelike Brogue [ 14 ] uses this method, starting with the generation of the game world and ending with the miscalculation of all player actions. As a result, only the seed and the sequence of game commands are stored in the save file. An additional bonus of this effect is that you can start the game with the selected grain number, after selecting the most interesting world in the tables of the generated Brogue worlds [ 15 ].

2. Grain is not fixed or updated every time after a reboot.

Consequences for the player : any reload changes all calculations in the chances of hits.

How a player can abuse this : very simply - a few reboots and the most unlikely hit scenario can become a reality.

Positive implications for the developer :

1) Players perceive such a game as more honest with real chance, simply because of the lack of knowledge of internal mechanics.

2) Players get an unofficial easy mode, which, if desired, allows you to greatly ease difficult areas.

3) The developer can cover the freebie with different methods: one game auto-save (i.e., overwriting the save and permanent death) or prohibiting the save during the mission (in different variations). And the most sensitive areas (gambling mini-games) can be calculated on the basis of a separate unchanging grain, although technically it is much more difficult.

Example :

The Battle for Wesnoth [ 16 ] game uses non-fixed grain with a fundamentally fair coincidence. Honesty lies in the fact that sometimes quite unlikely sequences of failures are possible, and the game engine does not correct them. The result of this is periodic angry posts from annoyed players to game developers.

Also before the attack, the game provides detailed calculations of the probabilities of each of the possible outcomes of the attack: the damage done, the damage taken and the probability of the death of one of the opponents. The derivation of these probabilities only aggravates the anger of the “unsuccessful ones”, since having been assured of good chances during an attack, it is difficult to come to terms with the result, which had a rating of 1 in 1000.

3. The seed is not fixed, and the results themselves are subject to additional manipulation.

By manipulation I mean dynamic adjustments that strengthen the feeling of correct ("fair") randomness at the cost of genuine pseudo-randomness in the resulting numbers.

Consequences for the player: the same as in games with a non-fixed seed; reloading rerolls the results.

How a player can abuse this: save-scumming lets the player pick an advantageous combination of attack results, but how well this works depends largely on the implementation and on any protective mechanisms the developer has added.

Positive consequences for the developer: the developer can control how rare undesirable outcomes are, create the appearance of "fair randomness", and raise or lower the game's difficulty. If, when calculating hit chances, the developer stores and separately tracks the outcomes for each team, save-scumming can be greatly curbed, because the player receives their average share of damage anyway.
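One possible way to track outcomes per team is a "shuffle bag", a well-known technique swapped in here for illustration, not necessarily what any game in this article uses. For each team and hit chance there is a bag containing exactly the expected proportion of hits (8 hits and 2 misses for 80%), drawn without replacement. Class and method names are hypothetical, and the sketch assumes hit chances in steps of 10%.

```python
import random


class ShuffleBag:
    """Draw outcomes without replacement from a bag matching the hit chance."""

    def __init__(self, hit_chance_pct: int, rng: random.Random, size: int = 10):
        # Assumes hit chances are multiples of 100 / size percent (10% by default).
        self.hits = round(hit_chance_pct * size / 100)
        self.size = size
        self.rng = rng
        self.items: list[bool] = []

    def draw(self) -> bool:
        if not self.items:  # refill and shuffle when the bag runs out
            self.items = [True] * self.hits + [False] * (self.size - self.hits)
            self.rng.shuffle(self.items)
        return self.items.pop()


class TeamLuck:
    """One bag per (team, hit chance); the bags would be stored in the save."""

    def __init__(self, rng: random.Random):
        self.rng = rng
        self.bags: dict[tuple[str, int], ShuffleBag] = {}

    def attack_hits(self, team: str, hit_chance_pct: int) -> bool:
        key = (team, hit_chance_pct)
        if key not in self.bags:
            self.bags[key] = ShuffleBag(hit_chance_pct, self.rng)
        return self.bags[key].draw()


luck = TeamLuck(random.Random(3))
results = [luck.attack_hits("player", 80) for _ in range(10)]
```

As long as the bags are part of the saved state, reloading cannot change how many hits remain in the current bag: every 10 attacks at 80% produce exactly 8 hits.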

Examples:

Developer Carsten Germer uses controlled randomness for rare events, among others [ 18 ]. For example, to guarantee that a particularly rare bonus with a 1 in 10,000 chance does drop occasionally, after each "miss" he raises the odds step by step: 1 in 9,900; 1 in 9,800; 1 in 9,700; and so on until the event fires. And to guarantee that rare events do not happen too often, he added an extra variable that blocks triggering completely for the next 10 checks after the last trigger.
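The ramp-plus-cooldown scheme described above, as a minimal sketch. The class name and parameter names are hypothetical; the numbers follow the text (start at 1 in 10,000, subtract 100 from the denominator after each miss, block 10 checks after a trigger). Germer's article [ 18 ] describes the idea; this is not his code.

```python
import random


class RampingRareEvent:
    """Rare event whose odds improve after every miss, with a cooldown after a hit."""

    def __init__(self, start_odds=10_000, step=100, cooldown=10, rng=None):
        self.start_odds = start_odds
        self.step = step
        self.cooldown = cooldown
        self.rng = rng or random.Random()
        self.odds = start_odds  # current chance is 1 in self.odds
        self.blocked = 0        # remaining checks during which nothing can trigger

    def check(self) -> bool:
        if self.blocked > 0:
            self.blocked -= 1
            return False
        if self.rng.randrange(self.odds) == 0:  # probability 1 / self.odds
            self.odds = self.start_odds
            self.blocked = self.cooldown
            return True
        # Miss: improve the odds; at 1 in 1 the event is guaranteed.
        self.odds = max(1, self.odds - self.step)
        return False


event = RampingRareEvent(rng=random.Random(1))
misses = 0
while not event.check():
    misses += 1
```

With these parameters the event is guaranteed to fire within 101 checks (after 100 misses the odds reach 1 in 1), and it can never fire twice within 11 checks.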

In my roguelike Grue the Monster [ 19 ], I also manipulated randomness. Normally, when hunting victims, the player's character has to stay behind them, waiting for them to step backwards into his hands. This chance is usually 1/6 in open space (1/2 in corridors and 1/1 in dead ends), but to reduce the irritation of especially unlucky situations, before each check of the victim's movement direction there was a 15% chance that she was forced to walk toward Grue.
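What the 15% forced step does to the effective odds, assuming (as the text describes) that the forced step is checked first and the ordinary direction roll applies only when it does not fire. The function and constant names are hypothetical.

```python
from fractions import Fraction

FORCED = Fraction(15, 100)  # chance the victim is forced to step toward Grue


def step_toward_grue_chance(base: Fraction, forced: Fraction = FORCED) -> Fraction:
    # Forced step first; otherwise the ordinary random direction check applies.
    return forced + (1 - forced) * base


for terrain, base in [("open space", Fraction(1, 6)),
                      ("corridor", Fraction(1, 2)),
                      ("dead end", Fraction(1))]:
    p = step_toward_grue_chance(base)
    print(f"{terrain}: {float(base):.1%} -> {float(p):.1%}")
```

In open space the chance rises from 1/6 (16.7%) to 7/24 (about 29.2%), in corridors from 50% to 57.5%; dead ends are unaffected.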

The most interesting case is Fire Emblem: The Binding Blade [ 20 ], which implemented a hidden hit-resolution mechanic [ 21 ]. As is traditional for the series, the game shows the hit chance as a percentage from 0 to 100%. In earlier games of the series, a hit was decided by a single random number from 1 to 100: if the rolled number (for example, 61) was less than or equal to the hit chance (for example, 75), the attack hit; if it was greater, the attack missed.

This game introduced a more forgiving system: instead of one random number, the average of two random numbers is taken and compared with the hit chance. The average of two random numbers clusters around 50 instead of being spread evenly. This distorts the linear relationship between the displayed and the actual chance: units with displayed accuracy above 50% hit more often than shown, and units below 50% hit much less often. And since the overwhelming majority of player characters in the game have high accuracy while most enemies have low accuracy, the player gets a very serious hidden advantage [ 21 ]. Below is a graph of this effect: the blue lines show the hit frequency under the old system and the red lines under the new one, as a function of the attacker's displayed accuracy. For example, a displayed hit chance of 90% is actually 98.1%, 80% is actually 92.2%, and 10% is only 1.9%!
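The two-number rule can be checked exactly by enumerating all 10,000 pairs of random numbers, with no simulation. The sketch assumes random numbers from 0 to 99 and a strict "less than" comparison; under these assumptions it reproduces the 98.1% (for 90%) and 92.2% (for 80%) figures. The low end is sensitive to the boundary convention: with numbers from 1 to 100 and "less than or equal", a displayed 10% comes out at the 1.9% quoted above, while the default settings give 2.1%. The function name and parameters are hypothetical.

```python
from itertools import product


def true_hit_chance(displayed: int, rn_values=range(100), inclusive=False) -> float:
    """Exact hit chance when the average of two random numbers is compared to `displayed`."""
    hits = 0
    for a, b in product(rn_values, repeat=2):
        # (a + b) / 2 < displayed  <=>  a + b < 2 * displayed (stays in integers)
        total = a + b
        if total < 2 * displayed or (inclusive and total == 2 * displayed):
            hits += 1
    return hits / len(rn_values) ** 2


for shown in (10, 50, 80, 90):
    print(shown, f"{true_hit_chance(shown):.1%}")
```

With a single random number the actual chance would equal the displayed one; averaging two numbers bends the curve into an S shape around 50%.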

These are certainly not the only examples of manipulating random numbers and balance, but such cases are very hard to find, so help from the community would be especially valuable here.

I want to note that I do not regard procedural generation as such as manipulated chance. Example: a roguelike generates a random level with a random set of enemies, traps, walls and dead ends. If it produces a level that cannot be completed, that is a sign of sloppy development, insufficiently thought-out design, or weak testing. The developer must procedurally check at least the basic problems of random generation:

- there must always be at least one path to the exit. Any pathfinding algorithm helps here;

- it must be possible to bypass a series of traps, and if too many traps are created in one place, the algorithm must calculate their density and remove the excess;

- for enemies that are too strong, at least one way of "getting past" them must be available: brute force; scrolls and potions; special artifacts; or simply the ability to run away.

Such interference with random results is not manipulation; it is simply a basic rule of minimally competent development. These checks apply regardless of the method used to generate pseudo-random numbers.
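The first two checks in the list can be sketched with a breadth-first search on a grid. The level format is a hypothetical character map ('S' start, 'E' exit, '#' wall, '^' trap), and the start cell is assumed to exist. Running the search once with only walls blocked checks that the exit is reachable at all; running it again with traps also blocked checks that the traps can be bypassed. A real generator would then remove traps or regenerate the level when a check fails.

```python
from collections import deque


def exit_reachable(level: list[str], blocked: str = "#") -> bool:
    """Breadth-first search from 'S' to 'E'; cells whose char is in `blocked` are impassable."""
    rows, cols = len(level), len(level[0])
    start = next((r, c) for r in range(rows) for c in range(cols) if level[r][c] == "S")
    queue, seen = deque([start]), {start}
    while queue:
        r, c = queue.popleft()
        if level[r][c] == "E":
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in seen
                    and level[nr][nc] not in blocked):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


level = [
    "S.^.#",
    "#.#.#",
    "#.^.E",
]
print(exit_reachable(level))               # True: the exit can be reached
print(exit_reachable(level, blocked="#^"))  # False: every route crosses a trap
```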

4. Remove the element of chance from the game mechanics altogether.

That is, every attack always hits (a 100% chance) and deals fixed damage, and additional effects trigger by constant rules. Randomness can instead be used for cosmetic purposes: idle animations of waiting characters; flying damage numbers; collision and explosion effects. In that case it makes no difference how the random numbers are generated or how uniform their distribution is.

Even so, the first three methods can still be used in AI calculations, where enemy characters choose attack targets with some degree of randomness or move in a random order. This is less noticeable to the player, and annoying situations occur much less often.

Consequences for the player: reloading either does not affect the results at all or has little effect.

How a player can abuse this: the player can work out a stable dominant strategy, or find one online, and use only it. It is worth noting that for certain groups of players this is the main appeal of the game.

Positive consequences for the developer: such a game is much easier to balance. On the downside, any dominant strategies that emerge become stable: bad luck can no longer lead the player to defeat, but surprises disappear, which leads to boredom and players abandoning the game. Stronger players will almost always beat weaker ones; depending on the type of game, this can be either an advantage or a disadvantage.

Example:

Chess with classic rules.

Puzzle roguelikes also belong here. For example, this method is implemented very well in Desktop Dungeons Alpha [ 22 ].

There, the outcome of a sequence of attacks is always the same and can be calculated in advance. However, thanks to random (procedural) dungeon generation and fog of war, the game has the replay value of the best roguelikes.
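Why a fight without randomness "can be calculated in advance": a minimal sketch assuming a generic melee exchange in which the hero always strikes first, the sides alternate, and damage is fixed. Desktop Dungeons' own rules are richer than this; the function name and parameters are hypothetical.

```python
def predict_fight(hero_hp: int, hero_dmg: int, foe_hp: int, foe_dmg: int) -> tuple[bool, int]:
    """Return (hero_wins, hero_hp_left) for a fixed-damage exchange where the hero strikes first."""
    hits_to_kill = -(-foe_hp // hero_dmg)        # ceiling division
    damage_taken = (hits_to_kill - 1) * foe_dmg  # the foe answers every non-lethal hit
    if damage_taken < hero_hp:
        return True, hero_hp - damage_taken
    return False, 0  # the hero falls before landing the last hit


print(predict_fight(hero_hp=10, hero_dmg=5, foe_hp=12, foe_dmg=4))  # (True, 2)
print(predict_fight(hero_hp=8, hero_dmg=5, foe_hp=12, foe_dmg=4))   # (False, 0)
```

Because the answer is a closed-form expression, the game can show it to the player before the attack, and the challenge moves from managing luck to planning.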

Conclusion

This article has discussed two subtopics of randomness in games:

- Erroneous assumptions in estimating probabilities. It described the intuitive assumptions players make, which are often wrong because of their subjectivity. The main conclusion: fair randomness not only does not guarantee that users will be satisfied, but can even have the opposite effect.

- Pseudorandom number generation. It described different approaches to using randomness. Successful implementations show that, whatever approach is chosen, a game can be interesting, unpredictable and highly replayable.

Deliberate, consistent use of the chosen approach lets developers emphasize its strengths and minimize its weaknesses.

Sources and literature

1. History of mathematics: probability theory - Wikipedia.

2. Disciples 2 - GOG Gold.

3. Disciples 2 - comment about "crooked" randomness, no. 1.

4. Disciples 2 - comment about "crooked" randomness, no. 2.

5. Solving recurrence relations.

6. Quote from the solution to the problem by user Serbbit.

7. Illusions of the brain: cognitive biases caused by information overload.

8. Don't trust your brain.

9. The slot machine inside and out: a review from the manufacturer.

10. Level 5: Probability and Randomness Gone Horribly Wrong.

11. Pseudorandom number generator - Wikipedia.

12. How classic games make smart use of random number generation.

13. Controlling luck in video games - Pokemon Colosseum and XD.

14. Brogue roguelike - official site.

15. The Brogue Seed Scummer.

16. The Battle for Wesnoth.

17. Super Dexterous Mermaid in Wesnoth.

18. "Not So Random Randomness" in Game Design and Programming.

19. Grue the Monster roguelike.

20. Fire Emblem: The Binding Blade.

21. Random Number Generator in Fire Emblem.

22. Desktop Dungeons Alpha roguelike.

Source: https://habr.com/ru/post/432080/