Setting up an IDS/NMS: Mikrotik and Suricata with a web interface

Apparently it's my karma: whenever I set out to deploy some service on open-source software, I find a pile of manuals, none of which works in my particular case. The ready-made solution won't start, or I won't like it, or something else indigestible comes up, and in the end I have to make my own way to the result.

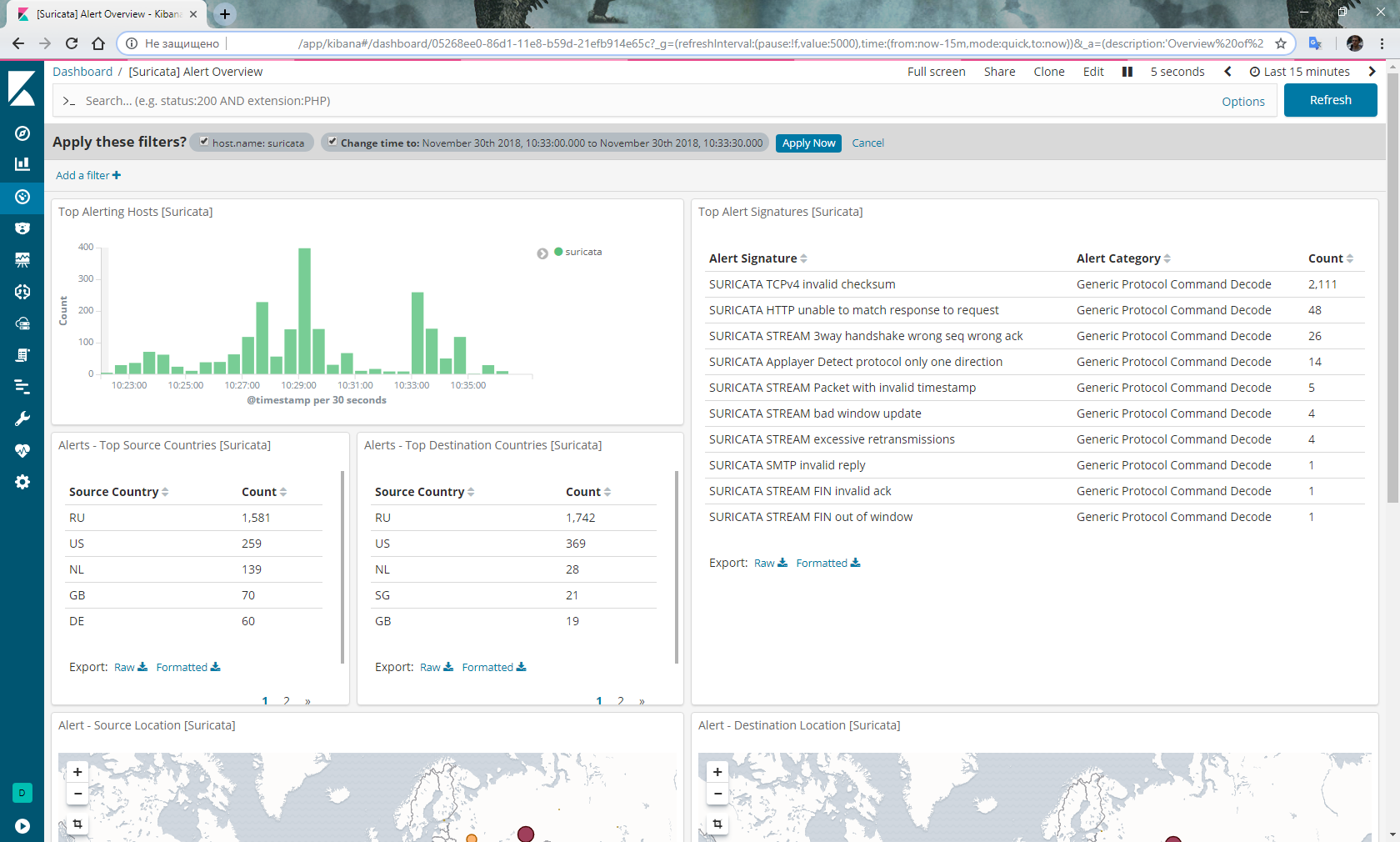

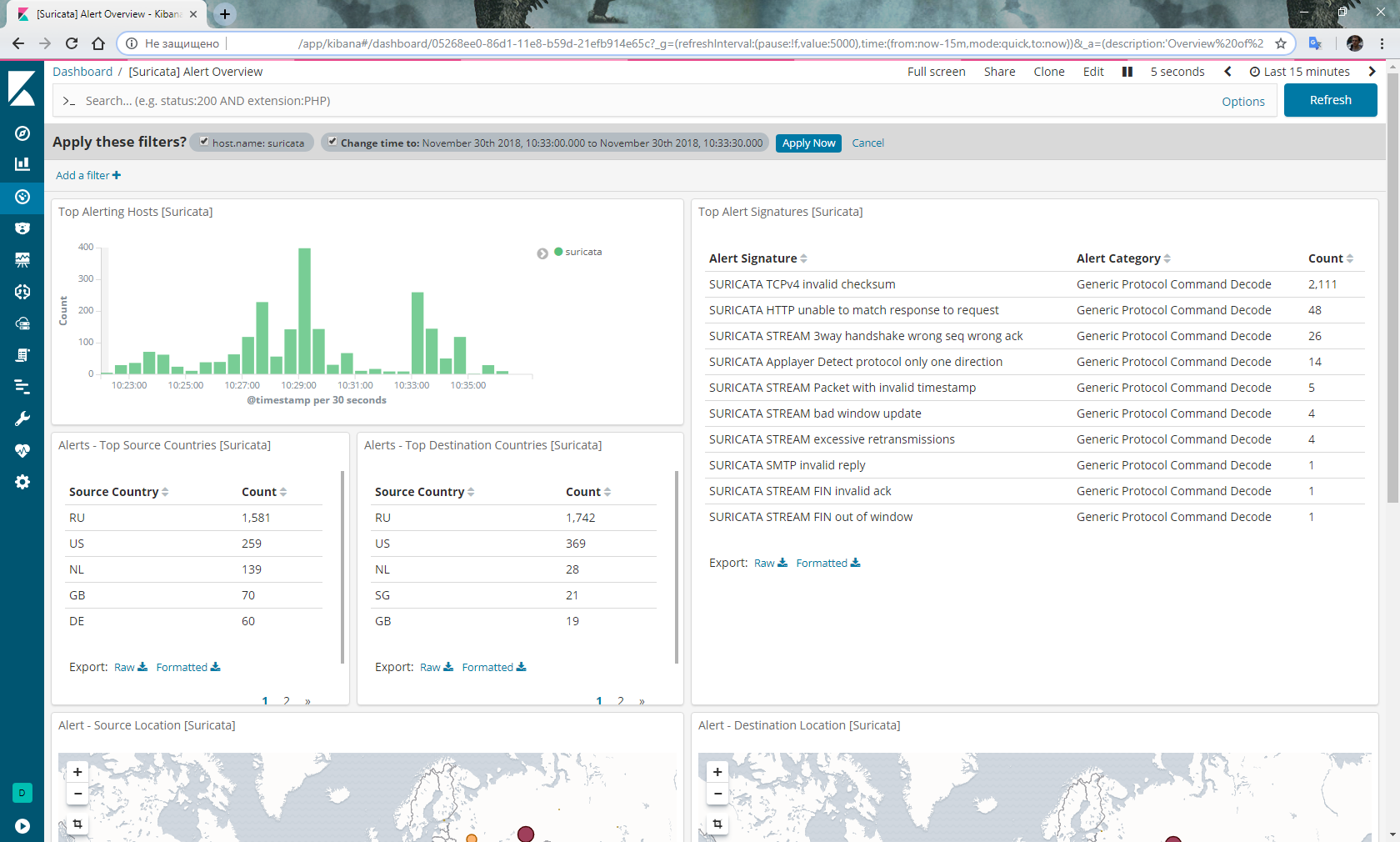

This time every manual I found was for ELK 5 or even older, and I didn't much want to install outdated versions. I wanted software with the longest support horizon: preferably the freshest stable release.

So, to be able to repeat the feat later without repeating all the torment, I end up writing step-by-step cheat sheets like this one, which I'm sharing with you.


So, on today's menu: Mikrotik (RouterOS), Suricata 4.1, and Elasticsearch + Filebeat + Kibana 6.5.

In lieu of an introduction

Starting conditions:

- Mikrotik on i386 in a virtual machine on host A. All Mikrotik interfaces are spread across VLANs; the host has a single physical network interface.

- Spare resources for IDS/IPS/NMS on host B, which also has a single physical network interface.

- A 20-megabyte outbound channel.

- A desire to get analytics on the traffic passing through the Mikrotik interface.

- A budget of zero rubles and zero kopeks.

- Some free time to spend on the hassle.

I won't explain here what IDS/IPS/NMS is, why you need it, or what kinds exist. Everyone knows that without me, and whoever doesn't can google it.

Nor will I justify choosing Suricata over Snort. It's a matter of taste.

But I will briefly explain how it all works:

Suricata has to get traffic somehow. There are three options: a) pass the traffic through itself in inline mode, b) receive a copy of the traffic from a switch port, or c) analyze traffic dumps. Suricata analyzes the traffic it receives and reports what it found there.

Suricata can emit its findings as JSON. Structured data like that can be fed to any system for processing, organizing, analysis, and visualization.

For analysis and visualization, as far as I can tell (I'm no expert in this field), the ELK stack is a great fit. It originally consisted of Elasticsearch, Logstash, and Kibana. Beats have since been added: a family of lightweight shippers that sit between the data source and Logstash or Elasticsearch. Looking ahead, I'll say that I did without Logstash, because Filebeat hands the data directly to Elasticsearch just fine, and Elasticsearch digests it just fine. Kibana, the web interface for the whole stack, reads the data from Elasticsearch and, using the templates loaded into it by Filebeat, shows the user visualizations of the data, the so-called dashboards. Since Elasticsearch, Logstash, Beats, and Kibana all come from one vendor, the whole setup fits together well, and the process of wiring it up is well documented (by open-source standards, of course).

So the task boils down to this: get a copy of the traffic from the router port, hand it to Suricata, get JSON-formatted data out of Suricata, and pass it to Filebeat, which in turn sends it to Elasticsearch and helps Kibana build the visualizations.

Mikrotik RouterOS

If I had a hardware Mikrotik router, port mirroring would not be an issue at all: I would simply mirror the traffic passing through the external interface to any free port on the Mikrotik itself. If the Mikrotik had no free port, I could enable port mirroring on the switch. But in my case the Mikrotik had no physical ports at all, and the switch port carried the traffic of the entire host, which ran several other virtual machines besides the Mikrotik.

And here I once again said to myself: "Thank you, Mikrotik!" Thank you for the sniffer built into RouterOS. As usual, we'll do without screenshots and stick to console commands.

Open a terminal in WinBox and turn on the sniffer:

/tool sniffer set filter-interface=if-out filter-stream=yes streaming-enabled=yes streaming-server=192.168.1.253

/tool sniffer start

Replace if-out with the name of the interface whose traffic you want to intercept, and replace 192.168.1.253 with the IP address of the machine that will receive the intercepted traffic over the TZSP protocol.

That's all for the Mikrotik.
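Before moving on, it may be worth confirming on the receiving machine that the TZSP stream actually arrives. What follows is a minimal sketch and not part of the original setup: it assumes tcpdump is installed and that the sniffer streams to the default TZSP port, UDP 37008. The function name tzsp_check is made up for illustration.

```shell
# Hypothetical helper: show the first few TZSP datagrams sent by the RouterOS sniffer.
# Assumption: the stream arrives on UDP port 37008 (the default TZSP port).
TZSP_PORT=37008

tzsp_check() {
    iface="${1:-any}"   # interface to listen on
    count="${2:-5}"     # stop after this many packets
    tcpdump -c "$count" -ni "$iface" udp port "$TZSP_PORT"
}
```

Run as root, something like `tzsp_check eth0 5` (eth0 is a placeholder for your interface name). If nothing shows up, check that the sniffer is running and that streaming-server points at this machine.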

Suricata

In general I'm not much of a Linux person, so I prefer the popular distros. Well, except that I like the more ascetic Debian better, so that's where I started. And, not being a Linux person, I naturally wanted to install binaries from a repository; I'm always too lazy to build from source. So: if you have the option of choosing Debian, don't. I no longer remember exactly where I got stuck installing the whole setup under Debian, but I did. So the rest of the story is about installing everything under Ubuntu.

I created a virtual machine with 4 cores and 4 GB of RAM, then downloaded and installed Ubuntu Server 18.04.1 LTS (x64) on it.

Convention: all further commands are run as the superuser, so either log in as root or prefix each command with sudo.

Because I took snapshots at every stage and then rolled back to them repeatedly, I ended up with some nasty glitches caused by the virtual machine's clock drifting out of sync with real time.

So set the correct time zone and enable NTP synchronization right away:

systemctl start systemd-timesyncd

systemctl status systemd-timesyncd

dpkg-reconfigure tzdata

To avoid dependency problems while installing Suricata, add the universe repositories to /etc/apt/sources.list:

nano /etc/apt/sources.list

...

deb http://archive.ubuntu.com/ubuntu bionic main universe

deb http://archive.ubuntu.com/ubuntu bionic-security main universe

deb http://archive.ubuntu.com/ubuntu bionic-updates main universe

Also add the repository that Suricata will come from:

add-apt-repository ppa:oisf/suricata-stable

Update the package database:

apt-get update

Install Suricata:

apt-get install -y suricata

The next step is to set up Suricata's rules and a way to update them:

apt-get install -y python-pip

pip install pyyaml

pip install https://github.com/OISF/suricata-update/archive/master.zip

Update suricata-update itself:

pip install --pre --upgrade suricata-update

Running it without any additional configuration gives us the Emerging Threats Open ruleset:

suricata-update

To view the list of available rule sources, run:

suricata-update list-sources

Update the list of rule sources:

suricata-update update-sources

To see what changed in the sources, run the listing again:

suricata-update list-sources

Enable all the free sources:

suricata-update enable-source ptresearch/attackdetection

suricata-update enable-source oisf/trafficid

suricata-update enable-source sslbl/ssl-fp-blacklist

And update the rules once more:

suricata-update

Suricata is installed.
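To see what enabling the extra sources actually changed, one option is to count the enabled rules in the merged rules file before and after. This is only a sketch: it assumes suricata-update wrote its output to its default location, /var/lib/suricata/rules/suricata.rules, and count_rules is a hypothetical helper name.

```shell
# Hypothetical helper: count enabled rules per action in a merged rules file.
# Assumption: suricata-update wrote to its default output path.
count_rules() {
    rules_file="${1:-/var/lib/suricata/rules/suricata.rules}"
    # Enabled rules start with an action keyword; disabled rules are commented out with '#'.
    awk '$1 ~ /^(alert|drop|reject|pass)$/ { n[$1]++ }
         END { for (a in n) print a, n[a] }' "$rules_file" | sort
}
```

Calling `count_rules` with no arguments reads the default file; pass another path as the first argument if your rules live elsewhere.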

Now we need to get traffic to it.

Trafr

Trafr is an application written by Mikrotik that converts TZSP traffic to pcap. It is a 32-bit application, so to run it you need to enable support for 32-bit applications on 64-bit Ubuntu:

dpkg --add-architecture i386

apt-get update && apt-get install -y libc6:i386

Download and unpack trafr:

wget http://www.mikrotik.com/download/trafr.tgz

tar xzf trafr.tgz

Check that traffic is being caught:

./trafr -s

After I ran it this way, the text output in the virtual machine's graphical console got garbled and I had to reboot. Over a remote ssh connection from PuTTY there were no problems.

If you see random garbage flickering across the screen, the traffic is arriving and trafr is catching it. In that case, move trafr to its permanent home and start it, piping the captured traffic straight into Suricata:

mv trafr /usr/local/bin/

/usr/local/bin/trafr -s | suricata -c /etc/suricata/suricata.yaml -r /dev/stdin

Now check that the traffic reaches Suricata. In another terminal, run:

tail -f /var/log/suricata/fast.log

You should see meaningful text scrolling briskly by: the meerkat's log for the traffic it is receiving.

It is also worth making sure that Suricata not only receives traffic but also analyzes it:

tail -f /var/log/suricata/eve.json

This is the very same event output from Suricata in JSON format that we will feed to Filebeat.
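Watching raw JSON scroll by says little about what Suricata is actually producing. As a rough sketch, the events can be tallied by their event_type field with standard tools only, so jq is not required. The helper name count_event_types is hypothetical, and the pattern assumes each event is a single JSON line with a top-level event_type key, which is how eve.json is normally written.

```shell
# Hypothetical helper: tally Suricata events in an EVE JSON log by event_type.
# Uses only grep/cut/sort/uniq/awk, so it works without jq.
count_event_types() {
    eve_file="${1:-/var/log/suricata/eve.json}"
    grep -o '"event_type" *: *"[^"]*"' "$eve_file" \
        | cut -d'"' -f4 \
        | sort | uniq -c | sort -rn \
        | awk '{ print $1, $2 }'
}
```

Each output line is a count followed by an event type, most frequent first. If alert never appears in the list, Suricata is seeing traffic but no rule has fired yet.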

Elasticsearch + Filebeat + Kibana 6.5

Install the PGP key required for the Elastic repository, add the repository, and install the necessary dependencies:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

echo "deb https://artifacts.elastic.co/packages/6.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-6.x.list

apt-get update && apt-get install -y openjdk-8-jre apt-transport-https wget nginx

Note that this is Java version 8. Nothing newer than 8 is supported. So if you have already managed to install a more recent Java, remove it and install 8.

Make sure that Java is installed properly:

java -version

The output should look roughly like this:

java version "1.8.0_191"

Java(TM) SE Runtime Environment (build 1.8.0_191-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode)

Create a username and password for access to Kibana. Replace admin with whatever you prefer:

echo "admin:`openssl passwd -apr1`" | sudo tee -a /etc/nginx/htpasswd.users

Since ELK will listen only on localhost, configure a reverse proxy in nginx:

nano /etc/nginx/sites-available/kibana

server {
    listen 80;
    server_name suricata.server;
    auth_basic "Restricted Access";
    auth_basic_user_file /etc/nginx/htpasswd.users;
    location / {
        proxy_pass http://localhost:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }
}

rm /etc/nginx/sites-enabled/default

ln -s /etc/nginx/sites-available/kibana /etc/nginx/sites-enabled/kibana

Restart nginx:

systemctl restart nginx

Install Elasticsearch:

apt-get install -y elasticsearch

Enable autostart at boot:

systemctl daemon-reload

systemctl enable elasticsearch.service

Start it:

systemctl start elasticsearch.service

Check that it responds:

curl -X GET "localhost:9200/"

Depending on the performance of your hardware, ES can take a while to start. If you get connection refused, simply repeat the request until you get a response like this:

{
  "name": "lcZuxxm",
  "cluster_name": "elasticsearch",
  "cluster_uuid": "kmJHqJnlQe2Rk7F-CRi4EA",
  "version": {
    "number": "6.5.1",
    "build_flavor": "default",
    "build_type": "deb",
    "build_hash": "8c58350",
    "build_date": "2018-11-16T02:22:42.182257Z",
    "build_snapshot": false,
    "lucene_version": "7.5.0",
    "minimum_wire_compatibility_version": "5.6.0",
    "minimum_index_compatibility_version": "5.0.0"
  },
  "tagline": "You Know, for Search"
}
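Repeating the request by hand gets tedious on slow hardware. The waiting can be scripted with a small retry loop; the sketch below is one way to do it and is not taken from the original setup. The names retry and wait_for_elasticsearch, the default of 30 attempts, and the 5-second pause are all assumptions chosen for illustration.

```shell
# retry MAX DELAY CMD...: run CMD until it succeeds, at most MAX times,
# sleeping DELAY seconds between attempts. Returns 1 if every attempt failed.
retry() {
    max="$1"; delay="$2"; shift 2
    attempt=1
    until "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 0
}

# Hypothetical wrapper: wait until Elasticsearch answers on its HTTP port.
# Arguments (all optional): URL, maximum attempts, delay in seconds.
wait_for_elasticsearch() {
    retry "${2:-30}" "${3:-5}" curl -fsS -o /dev/null "${1:-http://localhost:9200/}"
}
```

A call such as `wait_for_elasticsearch && curl -X GET "localhost:9200/"` then prints the response only once the service is up.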

Install Kibana:

apt-get install -y kibana

Enable autostart at boot:

systemctl daemon-reload

systemctl enable kibana.service

Start it:

systemctl start kibana.service

Now you can browse to 192.168.1.253 (naturally, use the IP address that was assigned to your meerkat machine). The Kibana start page should open.

Install Filebeat:

apt-get install -y filebeat

Enable autostart at boot:

systemctl daemon-reload

systemctl enable filebeat

Enable the Suricata module that ships with Filebeat:

filebeat modules enable suricata

Install the Elasticsearch plugins that the Suricata module needs:

/usr/share/elasticsearch/bin/elasticsearch-plugin install ingest-geoip

/usr/share/elasticsearch/bin/elasticsearch-plugin install ingest-user-agent

Restart Elasticsearch:

systemctl restart elasticsearch.service

Run the initial Filebeat setup, which also loads the templates into Kibana:

filebeat setup -e

Check that Filebeat has found /var/log/suricata/eve.json and is processing it. To do so, run Filebeat in the foreground with debug output for the publish selector:

filebeat -e -d "publish"

First comes Filebeat's own JSON-formatted output, then the plain-text output of its logs, and only after a while the output from Suricata, so wait and make sure everything works. Then interrupt Filebeat and return to bash.

Enable autostart at boot:

systemctl daemon-reload

systemctl enable filebeat.service

Start Filebeat:

systemctl start filebeat.service

Go to Kibana, select Dashboard in the menu on the left, and select the filebeat-* index. Select Dashboard again, choose [Suricata] Alert Overview from the list, and you should see a dashboard showing Suricata's alerts.

Optional

Don't forget logrotate, otherwise Suricata will fill up the hard drive very quickly, however large it is:

nano /etc/logrotate.d/suricata

/var/log/suricata/*.log /var/log/suricata/*.json
{
    weekly
    rotate 3
    missingok
    nocompress
    create
    sharedscripts
    postrotate
        /bin/kill -HUP `cat /var/run/suricata.pid 2>/dev/null` 2>/dev/null || true
    endscript
}

Also, there have been rumors that for some people the Mikrotik sniffer periodically keeps showing the running status yet stops sending traffic. In that case, write a script that restarts the sniffer and run it on a schedule:

/tool sniffer stop

:delay 30s

/tool sniffer start

Conclusion

Frankly, I'm not entirely happy with the stability of this setup. Namely: reboot it, and miracles begin. One time it stopped processing all rules except a couple, and I had to reinstall everything. Another time Elasticsearch stopped receiving data from Filebeat altogether, and I had to roll back to the snapshot taken before the reboot.

I haven't solved these problems yet.

Beyond that, I plan to implement IPS by passing the IP addresses of the villains Suricata identifies over to the Mikrotik.

UPD: The accusations of instability are withdrawn. My conclusion that rule processing had stopped was wrong. In fact, the dashboard is empty after a reboot because Filebeat and Elasticsearch take quite a long time to parse the multi-gigabyte JSON file from the meerkat. If you open the dashboard for a time period that includes the creation date of eve.json, you can watch the bars of the chart grow as the file is processed. As events are processed, alerts also appear in the corresponding dashboard. Also, the sniffer in RouterOS on x86 never hung even once.
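To tell "still parsing" apart from "actually broken" without staring at the dashboard, one rough indicator is to compare the number of documents Elasticsearch has indexed with the number of lines in eve.json. This is a sketch under several assumptions: Elasticsearch listens on localhost:9200, Filebeat writes to filebeat-* indices, and each line of eve.json becomes one document. Other Filebeat modules or a rotated log would skew the numbers. The helper names parse_count and ingest_progress are hypothetical.

```shell
# Hypothetical helper: extract the "count" field from an Elasticsearch _count response on stdin.
parse_count() {
    sed -n 's/.*"count"[ ]*:[ ]*\([0-9][0-9]*\).*/\1/p'
}

# Hypothetical helper: print "<indexed documents> / <lines in eve.json>".
# Assumptions: Elasticsearch on localhost:9200, Filebeat indices named filebeat-*.
ingest_progress() {
    eve_file="${1:-/var/log/suricata/eve.json}"
    indexed=$(curl -s "http://localhost:9200/filebeat-*/_count" | parse_count)
    total=$(wc -l < "$eve_file" | tr -d ' ')
    echo "${indexed:-?} / $total"
}
```

Running `ingest_progress` a few times a minute apart should show the first number climbing toward the second while Filebeat catches up; a question mark in place of the first number means Elasticsearch did not answer.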

Source: https://habr.com/ru/post/431600/
