node.js server side: working on the bugs. Part 1

Good day.

This article is aimed at developers who are already familiar with node.js.

Recently I was preparing material for our office on node.js facts that are useful for developers to know. Our projects are API services that use the node.js express module as a web server. The material is based on real cases in which the code worked incorrectly, its logic was carefully hidden, or it provoked errors when the code was extended. Based on this material, we held an in-house seminar for staff development.


So I decided to share it here. This is only the first part, about 30% of the material. If there is interest, it will be continued!

I tried to make the material quick to skim, so examples, arguments and comments are hidden in spoilers. If a statement is obvious to you, the "filler" can be skipped. That said, the "rakes" in the spoilers (the pitfalls we stepped on ourselves) may still be interesting.

At the seminar, a colleague asked me why we should talk about all this if everything is already in some documentation or other. My answer was this: he is right, everything really is in the documentation, and yet we still make annoying mistakes caused by not understanding or not knowing basic things.

Let's get started!

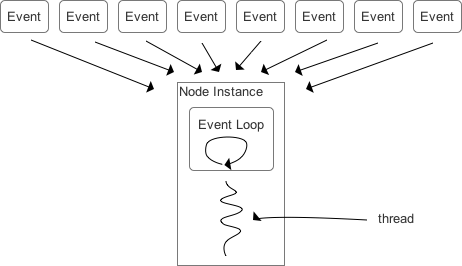

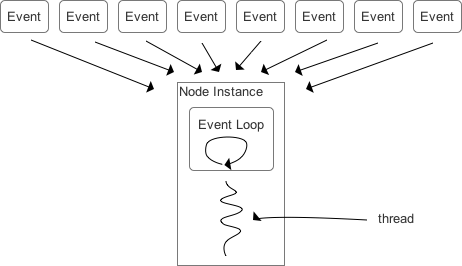


Node.js virtual machine

Single-threading

Unlike the Java VM, the node.js VM (nodejs-vm) is single-threaded**.

Source


There is, however, a pool of auxiliary threads that the virtual machine itself uses, for example for I/O. But all user code runs in only one, "main" thread.

This greatly simplifies life, because there is no concurrent execution. Code cannot be interrupted at an arbitrary point so that some other code continues in its place. Code simply runs until it has to wait for something, for example for data to become ready when reading a file. While it waits, another handler can run, either until it finishes or until it, too, has to wait for something.

That is, if you have an in-memory data structure, you do not need to worry about synchronizing access to it!
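A minimal sketch of what this means in practice, assuming a plain node.js script (the `counters` map and the `handleRequest` function are hypothetical): a thousand "requests" update a shared map without any locks, and no update is lost. The comment inside `handleRequest` marks the one place where other handlers can step in, namely while the code is waiting.

```javascript
// In-memory state shared by every handler running in this process.
const counters = new Map();

// Read-modify-write without any lock: safe, because no other JavaScript
// code can run between these two lines on the single main thread.
function increment(key) {
  const current = counters.get(key) || 0;
  counters.set(key, current + 1);
}

// A hypothetical request handler that has to wait for something halfway through.
async function handleRequest(key) {
  increment(key);
  // Waiting hands control back to the event loop: other handlers may run now.
  await new Promise((resolve) => setImmediate(resolve));
  increment(key);
}

async function main() {
  const pending = [];
  for (let i = 0; i < 1000; i++) {
    pending.push(handleRequest('hits'));
  }
  await Promise.all(pending);
  console.log(counters.get('hits')); // 2000: no update was lost
}

main();
```

The flip side: an invariant that must hold across an `await` (or across a callback boundary) is not protected, because other handlers may run during the wait.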

What if the "main" thread cannot keep up with processing the data?


Scaling is done by starting another node.js process or, if the server's resources are running out, another server.

Consequences and our "rakes"

Here, too, everything is clear. You must always be prepared for the fact that there may be (and most likely will be) more than one node.js process. And sometimes there may be several servers as well.

"Rakes" we found in our code

Parallel lines intersect at infinity. It cannot be proven, but I have seen it.

Jean Effel, "The novel of Adam and Eve."

There was an attempt to guarantee the uniqueness of entity instances in the database by means of the application alone. In general, this looks bad even out of context, and in this situation all the more so. Without involving a third-party service, I do not think this task can be solved.

A colleague who worked on this very, very much wanted to implement it without involving the database itself. In the end, after several attempts, it was done... by involving SharePoint.
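A sketch of why an in-process check cannot guarantee uniqueness once there is more than one process. The "registration" logic and the e-mail address are hypothetical; the two service instances are simulated by starting the same code twice as separate node.js processes with `child_process.execFileSync`. Each process has its own `Set`, so both accept the same e-mail.

```javascript
const { execFileSync } = require('child_process');

// Code of one hypothetical service instance: it enforces a "unique e-mail"
// rule with an in-memory Set, i.e. by means of the application alone.
const instanceSource = `
  const registered = new Set(); // exists only inside this process
  function register(email) {
    if (registered.has(email)) return 'duplicate';
    registered.add(email);
    return 'accepted';
  }
  // Each instance receives one registration request for the same e-mail.
  process.stdout.write(register('alice@example.com'));
`;

// Start the same code as a separate node.js process, as a process manager
// or a load balancer in front of several instances would.
function runInstance() {
  return execFileSync(process.execPath, ['-e', instanceSource], { encoding: 'utf8' });
}

const results = [runInstance(), runInstance()];
console.log(results); // [ 'accepted', 'accepted' ]: the "unique" e-mail got in twice
```

Here the processes run one after another; real instances run concurrently, which only makes things worse. The usual remedy is to let the database enforce the rule, for example with a unique index or constraint, which is exactly the kind of third-party service mentioned above.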

** Multithreading, or "if you really want to"

Starting with version 10.5.0, node.js has experimental support for multithreading.

Source

But the paradigm remained the same.


- For each new worker thread, a separate, isolated instance of the node.js virtual machine environment is created.

- Worker threads do not share mutable data. (There are a couple of caveats, but basically the statement holds.)

- Communication happens via messages and SharedArrayBuffer.

Therefore, existing code will keep working when worker threads are used.

You can read more here.
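A minimal sketch of the worker-thread model described in the list, assuming node.js 12 or later (where `worker_threads` no longer needs a flag and `globalThis` exists). To keep it in one file, the worker's code is passed as a string with the `eval: true` option; the `sumInWorker` helper and the `mainThreadFlag` global are made up for illustration. The worker receives a copy of its input through `workerData`, replies with a message, and cannot see the main thread's globals.

```javascript
const { Worker } = require('worker_threads');

// The worker's code, passed as a string (eval: true) to keep the sketch in one file.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  parentPort.postMessage({
    sum: workerData.numbers.reduce((a, b) => a + b, 0),
    // The worker runs in its own isolated VM instance, so globals set by
    // the main thread are not visible here.
    seesMainGlobal: typeof globalThis.mainThreadFlag !== 'undefined',
  });
`;

// Exists only in the main thread.
globalThis.mainThreadFlag = true;

// Hypothetical helper: runs the sum in a worker thread and resolves with its reply.
function sumInWorker(numbers) {
  return new Promise((resolve, reject) => {
    // workerData is copied into the worker, not shared with it.
    const worker = new Worker(workerSource, { eval: true, workerData: { numbers } });
    worker.once('message', resolve);
    worker.once('error', reject);
  });
}

sumInWorker([1, 2, 3, 4]).then((result) => {
  console.log(result); // { sum: 10, seesMainGlobal: false }
});
```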

Application life cycle

The heart of the node.js VM is the event loop. When code execution has to be suspended, or when the code seems to have finished, control passes to the event loop.


In the event loop, the VM checks whether any events have occurred for which we have registered handlers. If they have, the handlers are called. If not, it checks whether there are any event "generators" for which we have registered handlers; an open TCP connection or a timer can be such a generator. If none can be found, the program exits. Otherwise, the VM waits for one of these events, calls its handlers, and everything repeats.
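A sketch of this exit rule using the built-in `net` module: a listening TCP server is an event "generator", so the process keeps running; once the server is closed, the event loop has nothing left to wait for and the process exits on its own. The 500 ms delay and the log messages are arbitrary choices for the illustration.

```javascript
const net = require('net');

// A listening TCP server is an event "generator": while it is open,
// the event loop has something to wait for, so the process does not exit.
const server = net.createServer((socket) => socket.end('hello\n'));

server.listen(0, () => {
  // Port 0 asks the OS for any free port.
  console.log('listening on port ' + server.address().port);

  // After 500 ms, close the server: the last generator disappears...
  setTimeout(() => {
    server.close(() => console.log('server closed'));
  }, 500);
});

// ...so the event loop finds nothing left to wait for, and the process exits.
process.on('exit', () => console.log('no more events to wait for, exiting'));
```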


A consequence of this behavior is that the node.js VM does not exit even when the code seems to be finished, for example because we registered a timer handler that must be called after some time.

This is shown in the following example.

```javascript
console.log('registering timer callbacks');

// The pending timer is an event "generator": the process stays alive until it fires.
setTimeout(function () {
  console.log('Timer Event 1');
}, 1000);

console.log('Is it the end?');
```

Result:

```
registering timer callbacks
Is it the end?
Timer Event 1
```

You can read more here.

One more "rake" in our code

Anyone can govern the state!

A flag indicating whether the user is an administrator was stored in a global variable. This variable was initialized to false when the program started. Later, when an administrator logged in, the variable was set to true.

As a result, once an administrator had visited the system, every user who accessed that instance of the service was treated as an administrator.

It took me some effort to show my colleague that there was a logic error. He was sure that a completely new environment was created for every http request.
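A stripped-down reproduction of this "rake" with no express involved; the `req.user` field is a hypothetical stand-in for an already authenticated user (in a real application it would typically be set by authentication middleware). The module is loaded once per process, so a module-level variable lives as long as the process does and is shared by all the requests it serves.

```javascript
// BUG, as in the story above: a module-level variable is created once per process
// and is therefore shared by every request this process serves.
let isAdmin = false;

function buggyRole(req) {
  if (req.user === 'admin') isAdmin = true; // "remember" that the admin has logged in
  return isAdmin ? 'admin' : 'user';
}

// FIX: compute the flag from the current request only.
function fixedRole(req) {
  return req.user === 'admin' ? 'admin' : 'user';
}

// Simulated requests: first the administrator, then an ordinary user.
const requests = [{ user: 'admin' }, { user: 'bob' }];
console.log(requests.map(buggyRole)); // [ 'admin', 'admin' ]  <- bob became an admin
console.log(requests.map(fixedRole)); // [ 'admin', 'user' ]
```

In express, per-request data usually belongs on the `req` object or in `res.locals`; anything that must survive between requests belongs in a session or a database, not in a module-level variable.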

package.json - fields to fill out

package.json is our package description file. Here we are talking about our application itself, not about its dependencies. Below are the fields and the reasons why they are worth filling in.


name

As long as we do not publish the package to the registry, this field can be ignored. Still, it is useful, for example, for naming the installation file or for displaying the product name on its web page. In short, "whatever you name the yacht, that's how it will sail."

version

The main idea is not to forget to increase the version number when adding functionality, fixing bugs, and so on. Unfortunately, in our office you can still find products that are all at version 0.0.0. And then go and guess which functionality the client is actually running...
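One way to make the version actually useful, sketched under assumptions: the service reads its own `name` and `version` from package.json, for example to return them from a diagnostic endpoint. The `readBuildInfo` helper is hypothetical. The bump itself can be done with `npm version patch` (or `minor` / `major`), which updates the field and, inside a git repository, also creates a commit and a tag.

```javascript
const fs = require('fs');

// Hypothetical helper: reads the application's own name and version from package.json,
// e.g. to report them from a diagnostic endpoint so it is clear which build a client runs.
function readBuildInfo(packageJsonPath) {
  const pkg = JSON.parse(fs.readFileSync(packageJsonPath, 'utf8'));
  if (!pkg.version || pkg.version === '0.0.0') {
    // A missing or never-bumped version is exactly the situation described above.
    console.warn(`warning: ${packageJsonPath} has version "${pkg.version}"`);
  }
  return { name: pkg.name, version: pkg.version };
}
```

In the application it would be called with the path to its own package.json, for example `path.join(__dirname, 'package.json')` from a file in the package root.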

main

This field specifies the package's entry point: the file that is loaded when another application imports our package as a dependency. The path is relative to the directory containing `package.json`. The same file is run by `node .` in that directory. Note, however, that `npm start` does not use this field: if there is no "start" script, npm runs `node server.js`.

In addition, if we use, for example, VS Code, the file specified in this field is launched when the debugger is started or when the "run" command is executed.

The ".js" extension may be omitted. This follows from how the field is used (it is resolved like a `require` path) rather than being spelled out directly in the documentation.
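A small experiment that shows both points, assuming nothing beyond node.js itself: it creates a throwaway package in the system temp directory whose "main" is `lib/entry` (no extension), and then `require`s the package directory. The package name and file contents are made up.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Build a throwaway package whose "main" points to "lib/entry" (no ".js" extension).
function createDemoPackage() {
  const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'main-field-demo-'));
  fs.mkdirSync(path.join(dir, 'lib'));
  fs.writeFileSync(
    path.join(dir, 'package.json'),
    JSON.stringify({ name: 'main-field-demo', version: '1.0.0', main: 'lib/entry' })
  );
  fs.writeFileSync(path.join(dir, 'lib', 'entry.js'), "module.exports = 'loaded from lib/entry.js';\n");
  return dir;
}

const demoDir = createDemoPackage();

// Requiring the directory makes node read package.json, follow "main"
// (relative to the package directory) and try the ".js" extension itself.
console.log(require(demoDir)); // loaded from lib/entry.js
```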

engines

This field contains an object: {"node": version, "npm": version, ...}.

I know of the "node" and "npm" fields. They specify the node.js and npm versions that our application needs. The versions are checked when "npm install" is run. Keep in mind that npm treats this field as advisory: a mismatch normally produces only a warning, and installation is refused only when the `engine-strict` config option is set to true.

The standard syntax for dependency versions is supported: no prefix (an exact version), the "~" prefix (the first two numbers of the version must match) and the "^" prefix (only the first number of the version must match). With a prefix, the version must also be greater than or equal to the one specified in the field. Ranges such as ">=8.0.0" are supported as well.
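To make the "~" and "^" rules above concrete, here is a simplified model of them. It is only a sketch: it assumes plain "X.Y.Z" versions, at least "major.minor" in "~" ranges, no pre-release tags and no ">=" style ranges, and it ignores the special handling of "0.x" versions. For real checks, the npm `semver` package and its `semver.satisfies()` function implement the full rules.

```javascript
// Simplified model of the "~" and "^" rules (see the assumptions above).
function parseVersion(text) {
  const parts = text.split('.').map(Number);
  while (parts.length < 3) parts.push(0); // "~8.11" is read as "~8.11.0"
  return parts;
}

// Negative if a < b, zero if equal, positive if a > b.
function compareVersions(a, b) {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

function satisfies(version, range) {
  const v = parseVersion(version);
  const prefix = range[0];
  if (prefix !== '~' && prefix !== '^') {
    // No prefix: exactly this version.
    return compareVersions(v, parseVersion(range)) === 0;
  }
  const min = parseVersion(range.slice(1));
  // "~": the first two numbers must match; "^": only the first one.
  const mustMatch = prefix === '~' ? 2 : 1;
  for (let i = 0; i < mustMatch; i++) {
    if (v[i] !== min[i]) return false;
  }
  // And the version must not be lower than the one specified.
  return compareVersions(v, min) >= 0;
}

console.log(satisfies('8.11.4', '~8.11')); // true
console.log(satisfies('8.12.0', '~8.11')); // false
console.log(satisfies('6.4.1', '^6.0.1')); // true
console.log(satisfies('7.0.0', '^6.0.1')); // false
```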

Some hosting platforms state, at least in their documentation, that the matching versions will be used by default. Here I mean Azure.

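Example:

```json
"engines": {
  "node": "~8.11",
  "npm": "^6.0.1"
},
```

Here "~8.11" requires node version 8.11.*, starting from 8.11.0, and "^6.0.1" requires npm version 6.*, starting from 6.0.1. (JSON does not allow comments, so these explanations cannot be placed inside package.json itself.)

Next "rake"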

But the emperor has no clothes!

We had repeatedly agreed with the client that the required `node.js` version was at least 8. When the first versions of the application were delivered, everything worked. Then "one fine day", after a new version was delivered, the application stopped starting on the client's side. In our tests everything worked.

The problem was that in this version we had started using functionality that node.js supports only since version 8. The "engines" field was not filled in, so nobody had noticed earlier that the client had an ancient version of node.js installed (the Azure web services default).
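Besides filling in "engines", the application can also refuse to start on an unsupported node.js version. Below is a hypothetical startup guard, sketched under the assumption that the minimum is major version 8, as in the story. It is deliberately written in old-style JavaScript (`var`, string concatenation) so that the check itself still parses on an ancient node.js, and the entry file should run it before `require`-ing the rest of the code, since a file that uses newer syntax fails to parse before any check inside it can run.

```javascript
'use strict';

// Minimum major version agreed with the client in the story above.
var MIN_NODE_MAJOR = 8;

// "8.11.3" -> 8 (process.versions.node has no leading "v").
function nodeMajor(version) {
  return parseInt(version.split('.')[0], 10);
}

function checkNodeVersion(version, minMajor) {
  if (nodeMajor(version) < minMajor) {
    throw new Error('node.js >= ' + minMajor + ' is required, but this is ' + version);
  }
}

// Run this first thing at startup, before the rest of the application is loaded.
checkNodeVersion(process.versions.node, MIN_NODE_MAJOR);
```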

scripts

The field contains an object of the form {"script1": script1, "script2": script2, ...}.

There are standard scripts that npm runs in specific situations. For example, the "install" script runs after "npm install". This is very convenient, for example, for checking that the programs the application needs are available, or for compressing all the static files served by our web service so that they do not have to be compressed on the fly.

You are not limited to the standard names. To run an arbitrary script, use "npm run script-name".

It is convenient to keep all the scripts you use in one place.
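A sketch of such an "install" script that checks whether the programs the application needs are available. The `REQUIRED_PROGRAMS` list and its single entry are hypothetical: node itself is used only so the sketch runs anywhere, while a real list would name the external tools the application calls. Saved, for example, as `scripts/install-extras.js` and referenced from the "install" script, it would run automatically after `npm install`.

```javascript
'use strict';
const { spawnSync } = require('child_process');

// Hypothetical list of external programs the application needs.
// "node" is used here only so that the sketch runs anywhere.
const REQUIRED_PROGRAMS = [
  { name: 'node', command: process.execPath, args: ['--version'] },
];

// A program counts as available if it can be started and exits with code 0.
function isAvailable(command, args) {
  const result = spawnSync(command, args, { stdio: 'ignore' });
  // result.error is set (e.g. ENOENT) when the program cannot be started at all.
  return !result.error && result.status === 0;
}

const missing = REQUIRED_PROGRAMS.filter((p) => !isAvailable(p.command, p.args));
if (missing.length > 0) {
  console.error('Missing required programs: ' + missing.map((p) => p.name).join(', '));
  // A non-zero exit code makes the "install" script, and thus "npm install", fail.
  process.exitCode = 1;
} else {
  console.log('All required programs are available.');
}
```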

Example:

```json
"scripts": {
  "install": "node scripts/install-extras",
  "start": "node src/well/hidden/main/server extra_param_1 extra_param_2",
  "another-script": "node scripts/another-script"
}
```

P.S. The ".js" extension can be omitted in most cases.

package-lock.json helps install specific versions of dependencies rather than the "freshest" ones


To git or not to git?..

This file appeared in npm relatively recently. Its purpose is to make builds repeatable.

And one more "rake"

But I haven't changed anything in my program! It worked yesterday!

On a colleague's machine the application worked fine. On another computer with an identical environment, after the application was checked out from git into a new directory and 'npm install' and 'npm start' were run, never-before-seen errors appeared.

The problem was that the 'package-lock.json' file was missing from the git repository. Therefore, when the packages were installed, all dependencies of the second and deeper levels (which, of course, are not listed in package.json) were installed in the freshest versions available. On the colleague's computer everything was fine, but on the test computer an incompatible set of versions was selected.

package-lock.json - to git!

Back from the lyrical digression. The 'package-lock.json' file contains the list of all modules installed locally for our application. With this file you can recreate exactly the same set of module versions.

Summary: do not forget to commit it to git and to include it in the application's delivery (installation) package!

Useful: if 'package-lock.json' is missing but there is a 'node_modules' directory with all the necessary modules, 'package-lock.json' can be recreated:

```
npm shrinkwrap
rename npm-shrinkwrap.json package-lock.json
```

This is a good place to stop. What did not make it into this part is the set of code simplification techniques our team uses.

If you find any errors, I will try to fix them quickly!

Source: https://habr.com/ru/post/430972/
