While Mom Sleeps: Building OpenCV for Raspbian

The last couple of weeks have been tough for our team. OpenCV 4 was released, and alongside it we were preparing Intel's OpenVINO toolkit R4, which includes OpenCV. You think you'll take a short break and browse the OpenCV forums and user comments as usual, and there it is: it has become fashionable to say that OpenCV is not for IoT, that there isn't enough solder in the world to build it for a Raspberry Pi, and that if you start make -j2 overnight, it will be ready by morning if you're lucky.

So I propose we join forces and see how to build the OpenCV library for a 32-bit operating system running on an ARM processor, using the resources of a machine with a 64-bit OS and a powerful CPU of a different architecture. The witchcraft is called cross-compilation, there is no other way!

Problem statement

Compiling directly on the board (usually called native compilation) is really time-consuming, so here we'll look at a way to build the project that lets more powerful computing devices (let's call them hosts) prepare binaries for their small relatives. Moreover, the two machines may have different CPU architectures. This is cross-compiling.
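As a tiny illustration of the host/target split, the sketch below decides whether a build on the current machine would be a cross build for the Raspberry Pi's armhf userland. The helpers debian_arch and is_cross_build are hypothetical, and the architecture table is a simplified assumption rather than an exhaustive mapping.

```python
import platform

# Simplified, assumed mapping from `uname -m` machine names to Debian/Ubuntu
# architecture names. Real systems have exceptions (armv7l may run armel,
# a 64-bit kernel may host a 32-bit userland).
MACHINE_TO_DEBIAN_ARCH = {
    "x86_64": "amd64",
    "i686": "i386",
    "aarch64": "arm64",
    "armv7l": "armhf",
}


def debian_arch(machine):
    """Map a machine name as returned by platform.machine() to a Debian arch name."""
    return MACHINE_TO_DEBIAN_ARCH.get(machine, machine)


def is_cross_build(host_machine, target_arch):
    """A build is a cross build when the host's arch differs from the target's."""
    return debian_arch(host_machine) != target_arch


if __name__ == "__main__":
    host = platform.machine()
    print("host:", host, "->", debian_arch(host))
    print("cross build needed for armhf:", is_cross_build(host, "armhf"))
```

On a typical x86_64 desktop this reports amd64 and says a cross build is needed; on a Raspberry Pi 2 running Raspbian, platform.machine() usually reports armv7l.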

So, to prepare a Raspberry Pi stuffed with OpenCV, we need:

- A bare-bones Docker image of Ubuntu 16.04

- A host machine more powerful than the Raspberry Pi (otherwise, what's the point?)

- A cross-compiler for armhf, as well as libraries for the corresponding architecture

The entire OpenCV build happens on the host machine. At home I use Ubuntu; reproducing the steps on another Linux distribution should not cause problems. To Windows users: my sincere wishes not to give up and to figure it out.

Docker installation

I got acquainted with Docker only about a week ago, so connoisseurs should add salt and syntactic sugar to taste. Three ingredients are enough for us: the Dockerfile, the concept of an image, and the concept of a container.

Docker itself is a tool for creating and reproducing an operating system configuration with the required set of components. A Dockerfile is a set of shell commands like those you would normally run on the host machine, except that here they all apply to the so-called Docker image.

To get Docker, let's take the easiest route and order the package through the apt-get delivery service:

```bash
sudo apt-get install -y docker.io
```

Give the Docker daemon everything it asks for (membership in the docker group for your user), then log out of the system and log back in so that the change takes effect:

```bash
sudo usermod -a -G docker $USER
```

Preparing the workspace

The Raspberry Pi (in my case an RPi 2 Model B) in its most common setup is an ARMv7 CPU running the Raspbian (Debian-based) operating system. We will create a Docker image based on Ubuntu 16.04, install the cross-compiler and ARM libraries into it, and build OpenCV there.

Create a folder where our Dockerfile will live:

```bash
mkdir ubuntu16_armhf_opencv && cd ubuntu16_armhf_opencv
touch Dockerfile
```

Add information about the base OS and the armhf architecture for the apt-get package installer:

```dockerfile
FROM ubuntu:16.04
USER root
RUN dpkg --add-architecture armhf
RUN apt-get update
```

Note that instructions such as FROM ... and RUN ... are Docker syntax and go into the Dockerfile text file we just created.

Let's go back to the parent directory of ubuntu16_armhf_opencv and try to build our Docker image:

```bash
docker image build ubuntu16_armhf_opencv
```

While apt-get update runs, you should see errors of the form "Err:[] [url] xenial[-] armhf Packages":

```
Ign:30 http://archive.ubuntu.com/ubuntu xenial-backports/main armhf Packages
Ign:32 http://archive.ubuntu.com/ubuntu xenial-backports/universe armhf Packages
Err:7 http://archive.ubuntu.com/ubuntu xenial/main armhf Packages 404 Not Found
Ign:9 http://archive.ubuntu.com/ubuntu xenial/restricted armhf Packages
Ign:18 http://archive.ubuntu.com/ubuntu xenial/universe armhf Packages
Ign:20 http://archive.ubuntu.com/ubuntu xenial/multiverse armhf Packages
Err:22 http://archive.ubuntu.com/ubuntu xenial-updates/main armhf Packages 404 Not Found
Ign:24 http://archive.ubuntu.com/ubuntu xenial-updates/restricted armhf Packages
Ign:26 http://archive.ubuntu.com/ubuntu xenial-updates/universe armhf Packages
Ign:28 http://archive.ubuntu.com/ubuntu xenial-updates/multiverse armhf Packages
Err:30 http://archive.ubuntu.com/ubuntu xenial-backports/main armhf Packages 404 Not Found
Ign:32 http://archive.ubuntu.com/ubuntu xenial-backports/universe armhf Packages
```

If you dig into the /etc/apt/sources.list file, you'll find that each such error corresponds to a line, for example:

Error:

```
Err:22 http://archive.ubuntu.com/ubuntu xenial-updates/main armhf Packages 404 Not Found
```

Line in /etc/apt/sources.list:

```
deb http://archive.ubuntu.com/ubuntu/ xenial-updates main restricted
```

Solution:

Split it into two:

```
deb [arch=amd64] http://archive.ubuntu.com/ubuntu/ xenial-updates main restricted
deb [arch=armhf] http://ports.ubuntu.com/ubuntu-ports/ xenial-updates main restricted
```

The reason is that archive.ubuntu.com only serves the x86 architectures, while armhf packages for Ubuntu live on ports.ubuntu.com, hence the 404 errors. So several package sources need to be replaced. In our Dockerfile, we'll replace them all with a single command:

```dockerfile
RUN sed -i -E 's|^deb ([^ ]+) (.*)$|deb [arch=amd64] \1 \2\ndeb [arch=armhf] http://ports.ubuntu.com/ubuntu-ports/ \2|' /etc/apt/sources.list
```

This line has to come before RUN apt-get update, as in the final Dockerfile. Now apt-get update should work without errors.
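The sed expression is dense, so here is the same transformation written in Python, purely as an illustration (split_sources is a hypothetical helper; the Dockerfile itself uses sed). It uses the same pattern: each line starting with "deb " becomes an amd64 line that keeps the original mirror plus an armhf line pointing at ports.ubuntu.com, while comments and deb-src lines pass through untouched.

```python
import re

PORTS_MIRROR = "http://ports.ubuntu.com/ubuntu-ports/"

# Same pattern as the sed expression: "deb <url> <suite and components>".
_DEB_LINE = re.compile(r"^deb ([^ ]+) (.*)$")


def split_sources(text):
    """Rewrite every plain 'deb' line into an amd64 line and an armhf line."""
    out = []
    for line in text.splitlines():
        match = _DEB_LINE.match(line)
        if match:
            url, rest = match.group(1), match.group(2)
            out.append("deb [arch=amd64] %s %s" % (url, rest))
            out.append("deb [arch=armhf] %s %s" % (PORTS_MIRROR, rest))
        else:
            # Comments, deb-src lines and blank lines are kept as they are.
            out.append(line)
    return "\n".join(out)


if __name__ == "__main__":
    print(split_sources("deb http://archive.ubuntu.com/ubuntu/ xenial-updates main restricted"))
```

For the sample line it prints exactly the two lines from the "Split it into two" step.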

Installing the necessary packages

We need to install host packages such as git, python-pip, cmake and pkg-config, as well as crossbuild-essential-armhf, which provides the gcc/g++ cross-compilers (arm-linux-gnueabihf-gcc and arm-linux-gnueabihf-g++) and system libraries for the corresponding architecture:

```dockerfile
RUN apt-get install -y git python-pip cmake pkg-config crossbuild-essential-armhf
```

Now the unusual part: we also install the development packages for GTK (used for drawing windows in the highgui module), GStreamer and Python, but with the foreign architecture specified explicitly:

```dockerfile
RUN apt-get install -y --no-install-recommends \
    libgtk2.0-dev:armhf \
    libpython-dev:armhf \
    libgstreamer1.0-dev:armhf \
    libgstreamer-plugins-base1.0-dev:armhf \
    libgstreamer-plugins-good1.0-dev:armhf \
    libgstreamer-plugins-bad1.0-dev:armhf
```

Next, we clone OpenCV and configure it with the necessary flags:

```dockerfile
# NumPy headers are needed for the python2 bindings (same pin as in the final Dockerfile)
RUN pip install numpy==1.12.1
RUN git clone https://github.com/opencv/opencv --depth 1
RUN mkdir opencv/build && cd opencv/build && \
    export PKG_CONFIG_PATH=/usr/lib/arm-linux-gnueabihf/pkgconfig && \
    cmake -DCMAKE_BUILD_TYPE=Release \
          -DOPENCV_CONFIG_INSTALL_PATH="cmake" \
          -DCMAKE_TOOLCHAIN_FILE="../opencv/platforms/linux/arm-gnueabi.toolchain.cmake" \
          -DWITH_IPP=OFF \
          -DBUILD_TESTS=OFF \
          -DBUILD_PERF_TESTS=OFF \
          -DOPENCV_ENABLE_PKG_CONFIG=ON \
          -DPYTHON2_INCLUDE_PATH="/usr/include/python2.7" \
          -DPYTHON2_NUMPY_INCLUDE_DIRS="/usr/local/lib/python2.7/dist-packages/numpy/core/include" \
          -DENABLE_NEON=ON \
          -DCPU_BASELINE="NEON" ..
```

Here:

- CMAKE_TOOLCHAIN_FILE: the path to the CMake file that defines the cross-compilation process (it sets the required compiler and restricts the use of host libraries).

- WITH_IPP=OFF: disables heavy dependencies.

- BUILD_TESTS=OFF, BUILD_PERF_TESTS=OFF: disable building the tests.

- OPENCV_ENABLE_PKG_CONFIG=ON: lets pkg-config find dependencies such as GTK.

- PKG_CONFIG_PATH: the correct path where pkg-config will look for libraries.

- PYTHON2_INCLUDE_PATH, PYTHON2_NUMPY_INCLUDE_DIRS: paths needed to cross-compile the Python 2 wrappers.

- ENABLE_NEON=ON, CPU_BASELINE="NEON": enable NEON optimizations.

- OPENCV_CONFIG_INSTALL_PATH: controls where the config files are placed inside the install directory.
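The flag list is long enough that it can be easier to keep as data. A minimal sketch, assuming you drive the configure step from a Python script instead of a shell RUN line; cmake_command and pkg_config_env are hypothetical helpers, not part of OpenCV. They turn a dict of the same options into an argv list and an environment for subprocess.

```python
import shlex

# The same -D options as in the cmake call above, kept as data.
OPENCV_FLAGS = {
    "CMAKE_BUILD_TYPE": "Release",
    "OPENCV_CONFIG_INSTALL_PATH": "cmake",
    "CMAKE_TOOLCHAIN_FILE": "../opencv/platforms/linux/arm-gnueabi.toolchain.cmake",
    "WITH_IPP": "OFF",
    "BUILD_TESTS": "OFF",
    "BUILD_PERF_TESTS": "OFF",
    "OPENCV_ENABLE_PKG_CONFIG": "ON",
    "PYTHON2_INCLUDE_PATH": "/usr/include/python2.7",
    "PYTHON2_NUMPY_INCLUDE_DIRS": "/usr/local/lib/python2.7/dist-packages/numpy/core/include",
    "ENABLE_NEON": "ON",
    "CPU_BASELINE": "NEON",
}


def cmake_command(flags, source_dir=".."):
    """Build the cmake argv: one -DNAME=VALUE entry per flag, then the source dir."""
    # No shell is involved, so values need no quotes (the shell would strip
    # the quotes from -DCPU_BASELINE="NEON" anyway).
    defines = ["-D%s=%s" % (name, value) for name, value in flags.items()]
    return ["cmake"] + defines + [source_dir]


def pkg_config_env(base_env):
    """Return a copy of base_env with PKG_CONFIG_PATH pointing at the armhf .pc files."""
    env = dict(base_env)
    env["PKG_CONFIG_PATH"] = "/usr/lib/arm-linux-gnueabihf/pkgconfig"
    return env


if __name__ == "__main__":
    # Print the command in shell form to compare it with the Dockerfile line.
    print(shlex.join(cmake_command(OPENCV_FLAGS)))
```

Inside the container, from opencv/build, the call would then be subprocess.run(cmake_command(OPENCV_FLAGS), env=pkg_config_env(os.environ), check=True). Passing PKG_CONFIG_PATH through env plays the role of the export in the RUN line.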

The main thing to check after cmake finishes is that all the required modules will be built (python2, for example):

```
--   OpenCV modules:
--     To be built:                 calib3d core dnn features2d flann gapi highgui imgcodecs imgproc java_bindings_generator ml objdetect photo python2 python_bindings_generator stitching ts video videoio
--     Disabled:                    world
--     Disabled by dependency:      -
--     Unavailable:                 java js python3
--     Applications:                tests perf_tests apps
--     Documentation:               NO
--     Non-free algorithms:         NO
```

and that the necessary dependencies, such as GTK, were found:

```
--   GUI:
--     GTK+:                        YES (ver 2.24.30)
--       GThread :                  YES (ver 2.48.2)
--       GtkGlExt:                  NO
--
--   Video I/O:
--     GStreamer:
--       base:                      YES (ver 1.8.3)
--       video:                     YES (ver 1.8.3)
--       app:                       YES (ver 1.8.3)
--       riff:                      YES (ver 1.8.3)
--       pbutils:                   YES (ver 1.8.3)
--     v4l/v4l2:                    linux/videodev2.h
```

All that remains is to run make and make install and wait for the build to finish. In the Dockerfile, this means appending && make -j4 && make install to the same RUN command, as in the final version at the end of the article.
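Rather than reading the summary by eye, one could check it with a few lines of Python. A minimal sketch, assuming the cmake output has been captured into a string (for example with subprocess and capture_output=True); modules_to_build, dependency_found and check_summary are hypothetical helpers, and the regular expressions only target the summary layout shown above.

```python
import re


def modules_to_build(summary):
    """Return the module names listed on the 'To be built:' line of the summary."""
    match = re.search(r"To be built:[ \t]*(.+)", summary)
    return set(match.group(1).split()) if match else set()


def dependency_found(summary, name):
    """True if a summary line such as '--     GTK+:   YES (ver 2.24.30)' reports YES."""
    pattern = r"(?m)^[-\s]*" + re.escape(name) + r"\s*:\s*YES\b"
    return re.search(pattern, summary) is not None


def check_summary(summary, modules=("python2",), deps=("GTK+",)):
    """Return a list of problems; an empty list means the configuration looks fine."""
    built = modules_to_build(summary)
    problems = ["module %s will not be built" % m for m in modules if m not in built]
    problems += ["dependency %s not found" % d for d in deps
                 if not dependency_found(summary, d)]
    return problems
```

A non-empty result is a good reason to fix the configuration before spending time on make.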

The last line of the docker build output contains the ID of the new image:

```
Successfully built 4dae6b1a7d32
```

Use this image ID to tag the image and create a container:

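```bash
docker tag 4dae6b1a7d32 ubuntu16_armhf_opencv:latest
docker run ubuntu16_armhf_opencv
```

The container exits right away (bash has no terminal attached), which is fine: we only need its file system. All that remains is to pull the built OpenCV out of the container. First, look up the ID of the container we just created: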

```
$ docker container ls --all
CONTAINER ID   IMAGE                   COMMAND       CREATED         STATUS                     PORTS   NAMES
e94667fe60d2   ubuntu16_armhf_opencv   "/bin/bash"   6 seconds ago   Exited (0) 5 seconds ago           clever_yalow
```

Then copy out the install directory containing the installed OpenCV:

```bash
docker cp e94667fe60d2:/opencv/build/install/ ./
mv install ocv_install
```

Setting the table

Copy ocv_install to the Raspberry Pi, set up the paths, and try running OpenCV from Python.

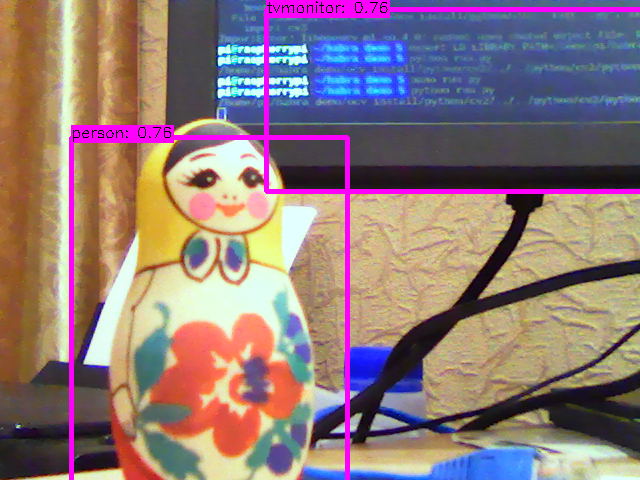

```bash
export LD_LIBRARY_PATH=/path/to/ocv_install/lib/:$LD_LIBRARY_PATH
export PYTHONPATH=/path/to/ocv_install/python/:$PYTHONPATH
```

Let's run the detection example using the MobileNet-SSD neural network from https://github.com/chuanqi305/MobileNet-SSD:

```python
import cv2 as cv

print(cv.__file__)  # check that the cross-compiled module is the one being imported

# Class labels of the MobileNet-SSD model (index 0 is the background)
classes = ['background', 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car',
           'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike', 'person',
           'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']

cap = cv.VideoCapture(0)
net = cv.dnn.readNet('MobileNetSSD_deploy.caffemodel', 'MobileNetSSD_deploy.prototxt')
cv.namedWindow('Object detection', cv.WINDOW_NORMAL)

# Process frames until Esc (key code 27) is pressed
while cv.waitKey(1) != 27:
    hasFrame, frame = cap.read()
    if not hasFrame:
        break
    frame_height, frame_width = frame.shape[0], frame.shape[1]

    # Resize to 300x300 and map pixel values to roughly [-1, 1]
    blob = cv.dnn.blobFromImage(frame, scalefactor=0.007843, size=(300, 300),
                                mean=(127.5, 127.5, 127.5))
    net.setInput(blob)
    out = net.forward()

    # Each detection: [image_id, class_id, confidence, xmin, ymin, xmax, ymax]
    for detection in out.reshape(-1, 7):
        classId = int(detection[1])
        confidence = float(detection[2])
        xmin = int(detection[3] * frame_width)
        ymin = int(detection[4] * frame_height)
        xmax = int(detection[5] * frame_width)
        ymax = int(detection[6] * frame_height)

        if confidence > 0.5:
            cv.rectangle(frame, (xmin, ymin), (xmax, ymax), color=(255, 0, 255), thickness=3)

            # Draw the label on a filled background at the top of the box
            label = '%s: %.2f' % (classes[classId], confidence)
            labelSize, baseLine = cv.getTextSize(label, cv.FONT_HERSHEY_SIMPLEX, 0.5, 1)
            ymin = max(ymin, labelSize[1])
            cv.rectangle(frame, (xmin, ymin - labelSize[1]),
                         (xmin + labelSize[0], ymin + baseLine), (255, 0, 255), cv.FILLED)
            cv.putText(frame, label, (xmin, ymin), cv.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0))

    cv.imshow('Object detection', frame)
```
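The arithmetic inside that loop is easy to test without a camera or the network. A minimal sketch, separated from cv2 for illustration (normalize and decode_detections are hypothetical helpers): blobFromImage subtracts the mean and then multiplies by scalefactor, so 0.007843 (about 1/127.5) maps pixel values 0..255 to roughly -1..1, and each output row of the SSD holds [image_id, class_id, confidence, x_min, y_min, x_max, y_max] with coordinates relative to the frame size.

```python
# Class names from the script above; index 0 is the background class.
CLASSES = ['background', 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle',
           'bus', 'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse',
           'motorbike', 'person', 'pottedplant', 'sheep', 'sofa', 'train',
           'tvmonitor']


def normalize(value, scalefactor=0.007843, mean=127.5):
    """Per-value preprocessing done by blobFromImage: (value - mean) * scalefactor."""
    return (value - mean) * scalefactor


def decode_detections(rows, frame_width, frame_height, threshold=0.5):
    """Turn SSD output rows into (label, confidence, xmin, ymin, xmax, ymax) tuples.

    Each row is [image_id, class_id, confidence, x_min, y_min, x_max, y_max],
    with coordinates given as fractions of the frame size.
    """
    boxes = []
    for row in rows:
        class_id, confidence = int(row[1]), float(row[2])
        if confidence <= threshold:
            continue  # same cut-off as "if confidence > 0.5" in the script
        boxes.append((CLASSES[class_id], confidence,
                      int(row[3] * frame_width), int(row[4] * frame_height),
                      int(row[5] * frame_width), int(row[6] * frame_height)))
    return boxes


if __name__ == "__main__":
    rows = [[0, 15, 0.9, 0.25, 0.5, 0.75, 1.0], [0, 7, 0.3, 0.0, 0.0, 1.0, 1.0]]
    print(decode_detections(rows, 400, 200))  # only the confident "person" row survives
```

Keeping this logic in plain functions also makes it easy to check on the host before copying anything to the board.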

That's all: the complete build takes no more than 20 minutes. The final version of the Dockerfile is below. I'll also take this opportunity to offer a short survey from the OpenCV team for anyone who has ever worked with the library: https://opencv.org/survey-2018.html.

And yes, congratulations on OpenCV 4! This is not just the work of a single team, it is the work of the entire community: OpenCV 4 you.

```dockerfile
FROM ubuntu:16.04

USER root

RUN dpkg --add-architecture armhf
RUN sed -i -E 's|^deb ([^ ]+) (.*)$|deb [arch=amd64] \1 \2\ndeb [arch=armhf] http://ports.ubuntu.com/ubuntu-ports/ \2|' /etc/apt/sources.list
RUN apt-get update && \
    apt-get install -y --no-install-recommends \
        cmake \
        pkg-config \
        crossbuild-essential-armhf \
        git \
        python-pip \
        libgtk2.0-dev:armhf \
        libpython-dev:armhf \
        libgstreamer1.0-dev:armhf \
        libgstreamer-plugins-base1.0-dev:armhf \
        libgstreamer-plugins-good1.0-dev:armhf \
        libgstreamer-plugins-bad1.0-dev:armhf

RUN pip install numpy==1.12.1

RUN git clone https://github.com/opencv/opencv --depth 1

RUN mkdir opencv/build && cd opencv/build && \
    export PKG_CONFIG_PATH=/usr/lib/arm-linux-gnueabihf/pkgconfig && \
    cmake -DCMAKE_BUILD_TYPE=Release \
          -DOPENCV_CONFIG_INSTALL_PATH="cmake" \
          -DCMAKE_TOOLCHAIN_FILE="../opencv/platforms/linux/arm-gnueabi.toolchain.cmake" \
          -DWITH_IPP=OFF \
          -DBUILD_TESTS=OFF \
          -DBUILD_PERF_TESTS=OFF \
          -DOPENCV_ENABLE_PKG_CONFIG=ON \
          -DPYTHON2_INCLUDE_PATH="/usr/include/python2.7" \
          -DPYTHON2_NUMPY_INCLUDE_DIRS="/usr/local/lib/python2.7/dist-packages/numpy/core/include" \
          -DENABLE_NEON=ON \
          -DCPU_BASELINE="NEON" .. && \
    make -j4 && \
    make install
```

Source: https://habr.com/ru/post/430906/
