MIT course "Computer Systems Security". Lecture 18: "Private Internet Browsing", part 3

Massachusetts Institute of Technology. Lecture course # 6.858. "Security of computer systems". Nikolai Zeldovich, James Mykens. year 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: "Introduction: threat models" Part 1 / Part 2 / Part 3

Lecture 2: "Control of hacker attacks" Part 1 / Part 2 / Part 3

Lecture 3: "Buffer overflow: exploits and protection" Part 1 / Part 2 / Part 3

Lecture 4: "Separation of privileges" Part 1 / Part 2 / Part 3

Lecture 5: "Where Security Errors Come From" Part 1 / Part 2

Lecture 6: "Opportunities" Part 1 / Part 2 / Part 3

Lecture 7: "Sandbox Native Client" Part 1 / Part 2 / Part 3

Lecture 8: "Model of network security" Part 1 / Part 2 / Part 3

Lecture 9: "Web Application Security" Part 1 / Part 2 / Part 3

Lecture 10: "Symbolic execution" Part 1 / Part 2 / Part 3

Lecture 11: "Ur / Web programming language" Part 1 / Part 2 / Part 3

Lecture 12: "Network Security" Part 1 / Part 2 / Part 3

Lecture 13: "Network Protocols" Part 1 / Part 2 / Part 3

Lecture 14: "SSL and HTTPS" Part 1 / Part 2 / Part 3

Lecture 15: "Medical Software" Part 1 / Part 2 / Part 3

Lecture 16: "Attacks through the side channel" Part 1 / Part 2 / Part 3

Lecture 17: "User Authentication" Part 1 / Part 2 / Part 3

Lecture 18: "Private Internet browsing" Part 1 / Part 2 / Part 3

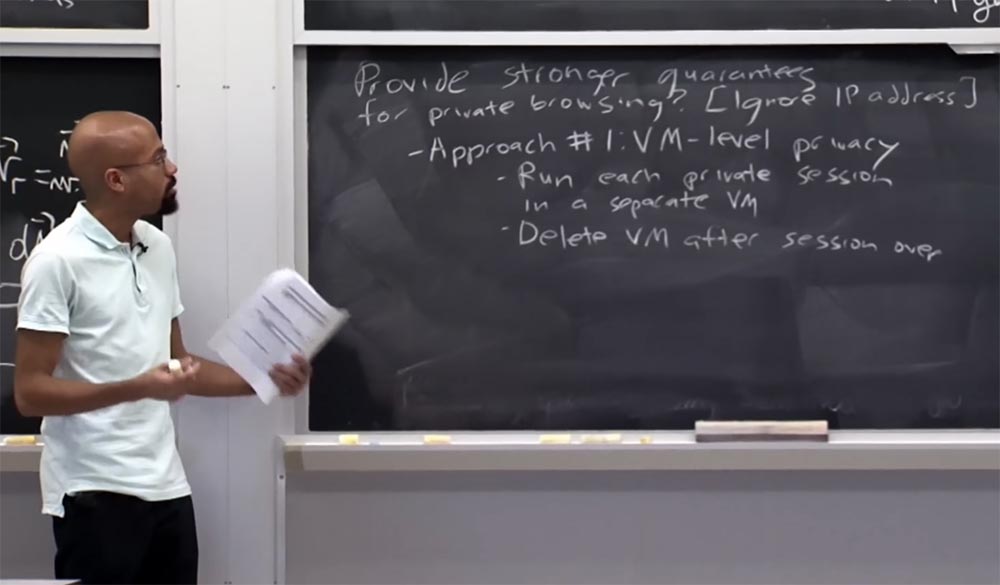

So, the first approach will be to use virtual machines as a way to enhance the guarantee of private browsing, that is, we consider privacy at the virtual machine level.

')

The basic idea is that each private session should run in a separate virtual machine. Then, when the user finishes a private browsing session, the virtual machine is deleted. So what is the advantage of this idea?

It’s probably because you’ve got stronger privacy guarantees that you can provide to the user because, presumably, the virtual machine has a fairly clean data input / output interface. It can be assumed that you combine these virtual machines into, say, some kind of secure solution for a swap, for example, using Open BSD along with disk encryption.

So, we have a very clear separation of the VM here, above, and all I / O operations that occur below. This provides you with stronger guarantees than those that you get from a browser that was not designed from scratch to take very careful care of all the information input / output ways and what secrets can leak when this information resides in the data store. .

So yes, it provides stronger guarantees. And besides, it does not require any changes in your applications, that is, in the browser. You take a browser, put it in one of these virtual machines - and everything magically gets better without any changes in the application.

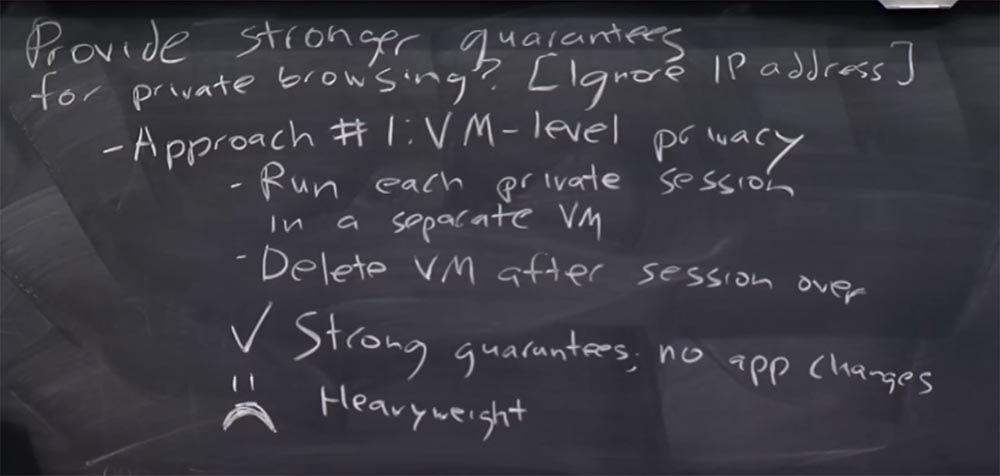

What's wrong with that - I’ll draw a sad smiley on the blackboard - it’s cumbersome. By cumbersomeness, I mean that when you want to start one of the private browsing sessions, you have to start the whole virtual machine. And it can be quite painful, because users will be upset because it will take them a long time to launch their private browsing sessions.

Other problems are that this solution is impractical. And the reason for the impracticality is not that it’s really difficult for users to do such things as transfer files that they saved in private browsing mode to a computer, transfer all the bookmarks they generated in this mode - all this can be done by But here there are many inconveniences associated with laziness.

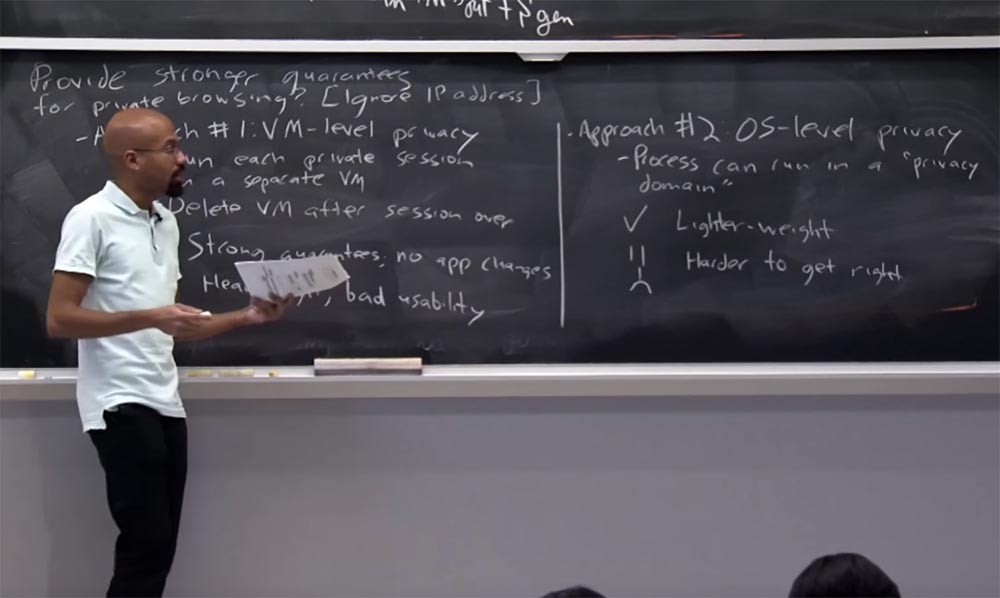

The second approach to the problem is similar to the first, but we actually implement it inside the OS itself, and not in the virtual machine. Here the basic idea is that each process can potentially be started in a private domain. A private domain is a kind of collection of shared resources of the OS that the process uses, and the OS keeps track of all such things. And as soon as the process dies, the OS looks at everything that is in the private domain, and completely releases all these resources for new use.

The advantage of this approach compared to using VM is lighter weight, because, if you think about it, a virtual machine is essentially agnostic with the state of the OS and the state of all applications being launched. Thus, using a VM creates more work than an OS does, because the operating system presumably knows all the points at which a private browser will come into contact with data input / output, “talk” to the network, and the like. Perhaps the OS even knows how to selectively clear the DNS cache.

Thus, you can imagine that it is much easier to “unleash” these privacy domains, so that you can simply “pull them down”. However, the disadvantage of this solution, at least with respect to running the VM, is that it is much more difficult to execute in the right way. Therefore, I just described the approach using VM as progressive, because the virtual machine is essentially agnostic to everything that works inside the OS container.

The nice thing is that the VM approach only allows you to focus on a few low-level interfaces. For example, the interface used by the virtual machine to write to disk causes a higher degree of trust because it contains everything that is needed. While using the OS, this is much more complicated, because you expect it to use individual files with a system interface, for example, with an individual network interface. So if you do all this at the OS level, the possibility of data leakage is much greater.

So, these were the two main approaches to enhancing privacy guarantees when using the private browsing mode, which can be implemented now.

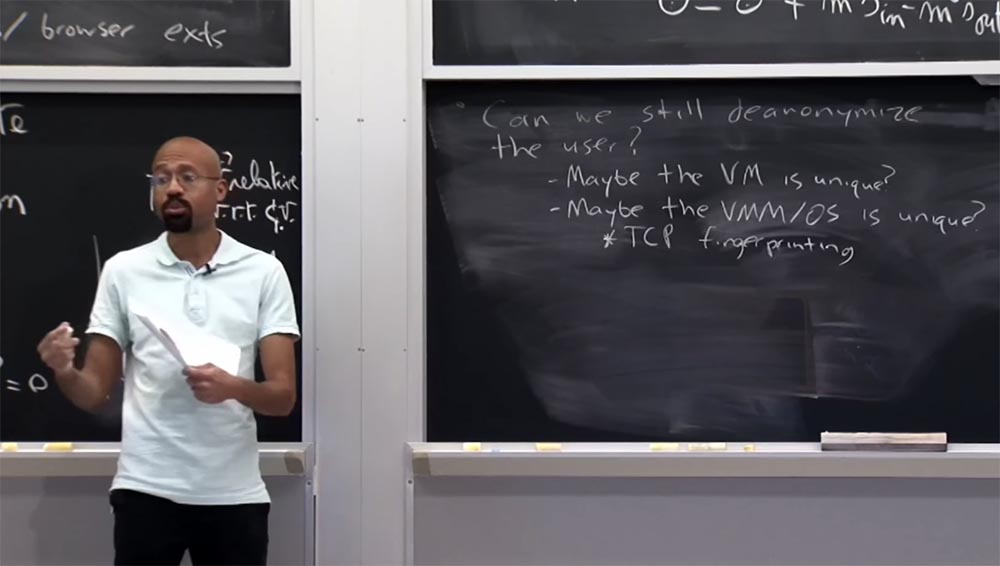

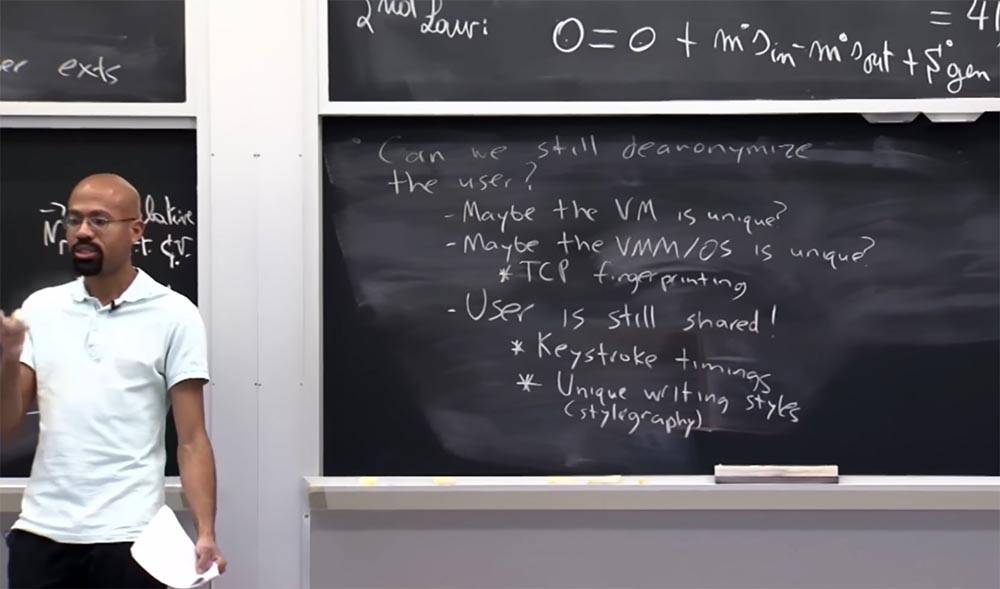

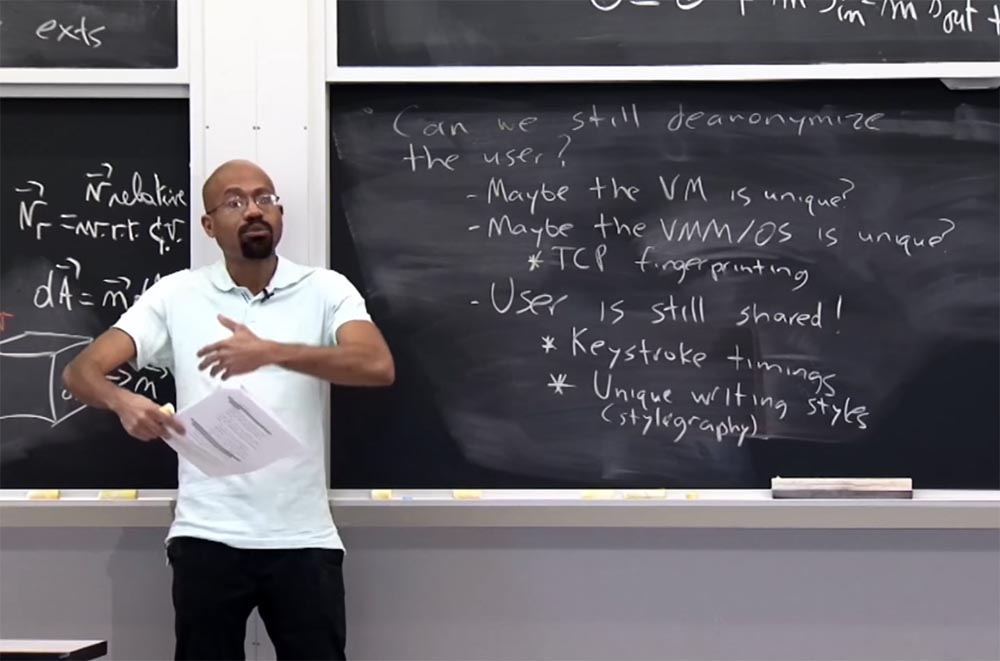

You may ask, can we still reveal the user's identity if he applies one of these more powerful security solutions - browsing the Internet using a virtual machine or privacy domains in the OS? Can we deprive the user of anonymity? The answer to this question will be - yes, we can!

User deanonymization is possible because the virtual machine is unique for some reason. This is similar to how we were able to fingerprint the browser using the Panopticlick website. There is probably something unique about how a virtual machine will be configured, which allows you to take its fingerprints. It is also possible that the VM monitor or the OS itself is in some ways unique. And this allows a network attacker to reveal the user's identity.

A typical example is TCP fingerprinting. The idea is that the specification of the TCP protocol actually allows the installation of some protocol parameters during the implementation of this protocol. For example, TCP allows executors to select the initial size of packets that are sent in the first part of establishing a TCP connection, which allows executors to select such things as the initial lifetime of these packets.

In this way, you can get off-the-shelf tools, such as InMap, which can tell with high probability which operating system you are running by simply sending you carefully processed packages. They will see such things as the fact that TTL is represented here, and here - the size of the distribution package, and here was the serial number of the TTP. Thus, they create a database of fingerprints. They say: “if the returned package has this, this and this characteristic, then according to the table, you are working on Solaris, you are using a Mac, and you are Windows”, or something else. Therefore, even using one of these approaches to enhance privacy during private browsing using a virtual machine or OS, an attacker is still able to launch one of these fingerprint detection attacks via TCP and learn a lot about a particular user.

It will be interesting to know that even if the user is protected by one of these more powerful methods, the user is still divided between both viewing modes, public and private, he still physically uses the computer. This is interesting because you yourself in the process of your handling of a computer can contribute to the leakage of your personal information.

For example, as it turns out, each user has a unique keystroke speed. So if I ask you to start typing the phrase “quick brown fox” or any such nonsense at the same time, the observation will show that each of you has a unique keystroke time that could potentially be used for fingerprinting.

It is also interesting that users have unique writing styles. There is a security industry called styling.

The idea behind stylography is that an attacker can figure out who you are by just looking at the samples of your letter. Imagine that for some reason you hang out at 4chan and I want to find out if you really were hanging out there. I can look at a bunch of different messages on 4chan and group them into comment sets that look stylistically the same. Then I will try to find public samples of the style of your writing, for example, in homework, the author of which you are. After that, I match the style samples in the 4chan comment sets with your homework, and if I find a match, I can write to your parents to explain to you the harm of hanging on the 4chan forums. That is the reason that I decided to draw your attention to the stylography. This is actually quite interesting.

So, we discussed how you can use VMs or modified operating systems to provide support for private browsing. Therefore, you may wonder why, then, browsers do not require users to do one of these things - run a virtual machine or modify the OS? Why do browsers take on the implementation of all this?

The main reason is deployability. Browser manufacturers usually do not want to force their users to do something special to use the browser, except for installing the browser itself. This is similar to the motivation of the Native Client, when Google is going to add these cool features to end-user computers, but does not want to force users to install a special version of Windows or Linux or do something else. Thus, Google says that "we will take care of this ourselves."

Another reason is usability. Many of these private browsing solutions at the virtual machine and OS level, as we have already discussed, make it difficult for users to save things received during a private browsing session — downloaded files, bookmarks, and the like.

Basically, browser makers say that if they themselves implement private browsing modes, they will be able to allow users to receive files downloaded in private browsing mode and save them on a computer. At first it sounds good. But note that this approach allows users to export a certain kind of private state, which opens up many security vulnerabilities and makes it very difficult to analyze the security properties used to implement the private browsing mode.

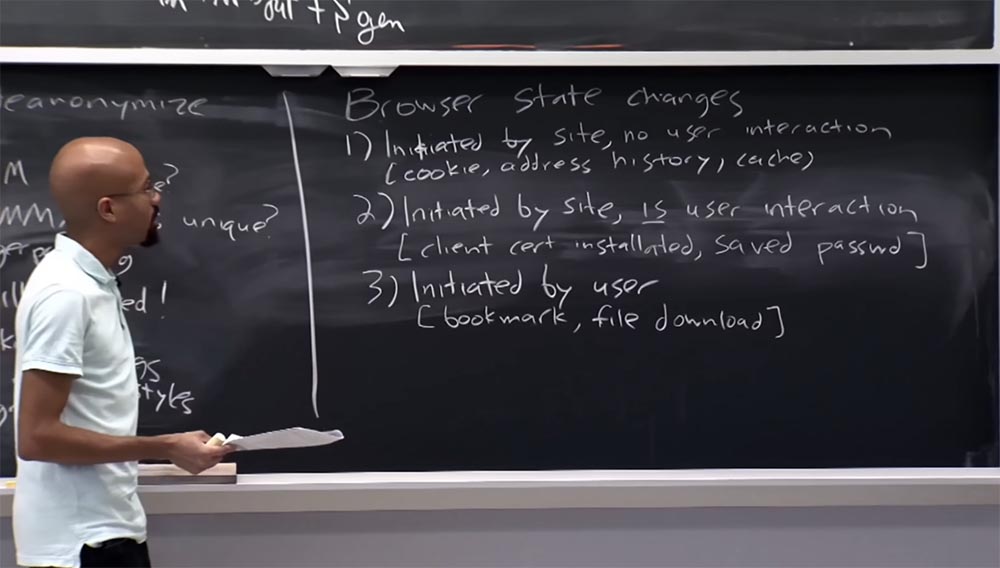

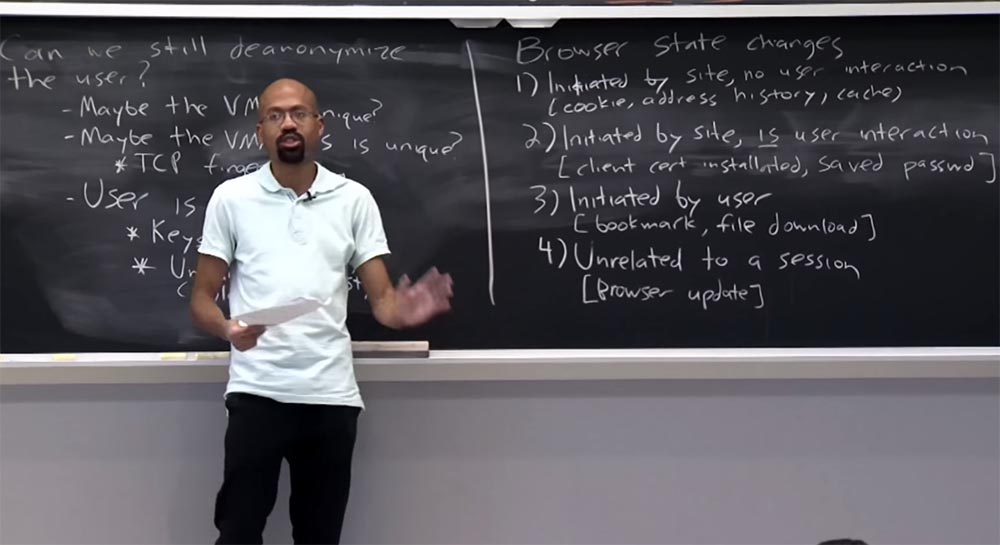

Therefore, the authors of the article are trying to characterize various types of browser states that can be modified, and consider how the current private viewing modes can modify them.

The article classifies browser status changes. There are four types of changes in this classification. The first type is when state changes are initiated by the site itself without user intervention. Examples of this type of state change are getting cookies, adding something to the browser’s address history, and possibly updating the browser’s cache. Therefore, basically this state is preserved throughout the entire mode of private viewing, but is destroyed after its completion.

It can be assumed that since the user does not interact with the browser during the formation of this state, it is assumed that the user himself would not like to participate in it.

The second type of browser state change is also initiated by the website, but there is some interaction with the user who visited this website. For example, a user installs a client certificate or uses a password for authorization on the site, that is, tries to go somewhere. At the same time, the browser very helpfully says: “Do you want to save this password?”. If the user answers yes, then such things as stored passwords can be used outside of the private viewing mode. Therefore, in principle, it is not clear what the privacy policy should be in this case. In practice, it turns out that browsers allow the existence of things that have arisen in the private viewing mode, outside of it, assuming that the user himself will choose the best option for him, saying “yes” or “no”. If the user is smart enough, then he will not save the password for some dubious site, because someone else can then use it. So there may be a user error, not a browser that could lead to loss of privacy.

Therefore, it is unclear which policy is the best, but in practice this type of state change is allowed to be kept outside the private viewing mode.

The third type of state change is fully initiated by the user. These are things like saving bookmarks or downloading files. This state is similar to the previous one, because the user directly participates in its creation. In this case, the private viewing mode agrees that state changes of this type are saved for further use outside the private viewing.

Further, there are some kinds of states that are not associated at all with any particular session. For example, this is the update state of the browser itself, that is, changing the file that represents the browser. Browser developers believe that this state change is part of a global state that is available for both public and private viewing.

In the end, if you look at it carefully, you will notice that there are quite a few conditions under which data can be leaked out of the limits of the private viewing mode, especially if there is user interaction. I wonder if this is the best compromise between security and privacy?

The article says that it is difficult to prevent the possibility that a local attacker can determine whether you are using private browsing or not. This article says a little vague. The fact is that the very nature of information leaks can tell in what mode of viewing - private or public - they occur. For example, in Firefox and Chrome, when you create a bookmark in private browsing mode, this tab has a bunch of metadata associated with it, such as time to visit a site and the like. In many cases, this metadata will be zero or close to some null value if this tab was created in the private viewing mode. Then whoever controls your computer later will be able to view your bookmark information. If he sees that this metadata is zero, he will conclude that this bookmark was probably created in private browsing mode.

When we talk about browser security, we think about what exactly people do with JavaScript, HTML or CSS, what can they do with plugins or extensions? In the context of private browsing, plug-ins and extensions are quite interesting, because in most cases they are not limited to the same origin policy, for example, they can limit the use of things like JavaScript. Interestingly, these extensions and plugins usually work with very high privileges. Roughly speaking, you can think of them as kernel modules. They have a high authority, allowing for the implementation of new features directly within the browsers themselves. Therefore, this is a bit problematic, since these plugins and extensions are often developed by someone who is not the actual developer of the browser. This means that someone is trying to do something good and give your browser useful properties by adding a plugin or extension to it. But this third-party developer may not fully understand the security context in which its extension is performed, so this extension may not provide the semantics of the private viewing mode or provide it in the wrong way.

, , , . , , . , , , HTML5 , , . , Java Flash. , 2D 3D-, - Java Flash. , Web GL , , , .

, IE , , - , HTML5. , YouTube, , , - HTML5-, . . , . , , , , .

, , , , 2010 , , ?

, . , , , HTML5.

, , . , . - , .

, 2014 Firefox, pdf.js, PDF-, HTML5-. , , .

, - PDF-, . PDF- , pdf.js , . . , , . , — , .

. , , , , , , , .

, : , , .

Firefox , 2011 . . , , , about:memory, , , , URL- , . , , , about:memory. , Firefox. , Firefox . , , about:memory , .

, URL-, , , . , . .

Bugzilla, , . , , , , , . , , . , . : « , , , .» : « , ».

, — HTML5 — , , . , , , .

, . , , . , .

, Magnet, -, . , , , URL- . - .

, Tor.

.

, . ? ? , 30% entry-level , : VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps $20 ? ( RAID1 RAID10, 24 40GB DDR4).

VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps , .

Dell R730xd 2 ? 2 Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 $249 ! . c Dell R730xd 5-2650 v4 9000 ?

Source: https://habr.com/ru/post/430208/

All Articles