MIT course "Computer Systems Security". Lecture 18: "Private Internet Browsing", part 2

Massachusetts Institute of Technology. Lecture course #6.858 "Computer Systems Security". Nickolai Zeldovich, James Mickens. 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: "Introduction: threat models" Part 1 / Part 2 / Part 3

Lecture 2: "Control hijacking attacks" Part 1 / Part 2 / Part 3

Lecture 3: "Buffer overflow: exploits and protection" Part 1 / Part 2 / Part 3

Lecture 4: "Privilege separation" Part 1 / Part 2 / Part 3

Lecture 5: "Where Security Errors Come From" Part 1 / Part 2

Lecture 6: "Capabilities" Part 1 / Part 2 / Part 3

Lecture 7: "Native Client sandbox" Part 1 / Part 2 / Part 3

Lecture 8: "Web security model" Part 1 / Part 2 / Part 3

Lecture 9: "Web Application Security" Part 1 / Part 2 / Part 3

Lecture 10: "Symbolic execution" Part 1 / Part 2 / Part 3

Lecture 11: "Ur/Web programming language" Part 1 / Part 2 / Part 3

Lecture 12: "Network Security" Part 1 / Part 2 / Part 3

Lecture 13: "Network Protocols" Part 1 / Part 2 / Part 3

Lecture 14: "SSL and HTTPS" Part 1 / Part 2 / Part 3

Lecture 15: "Medical Software" Part 1 / Part 2 / Part 3

Lecture 16: "Side-channel attacks" Part 1 / Part 2 / Part 3

Lecture 17: "User Authentication" Part 1 / Part 2 / Part 3

Lecture 18: "Private Internet browsing" Part 1 / Part 2 / Part 3

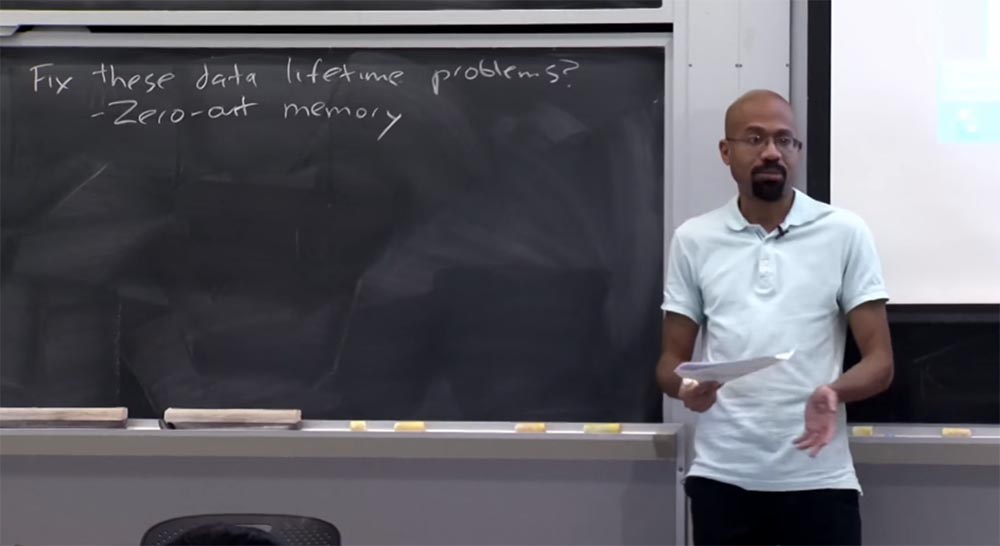

Does anyone see a potential problem with this? The first problem is that people always complain about performance when it comes to security. If you zero out memory and your program is I/O-bound, this is not a problem, because you are waiting on the mechanical parts of the hard disk (or something similar) anyway.


But imagine that your program is CPU-bound and perhaps works very intensively with memory, allocating and freeing data all the time. In that case, zeroing memory can seriously hurt performance, and that is a price for security you may not want to pay. Usually this is not a problem in practice. But as we all know, people really value performance, so the option of zeroing memory will certainly meet objections.

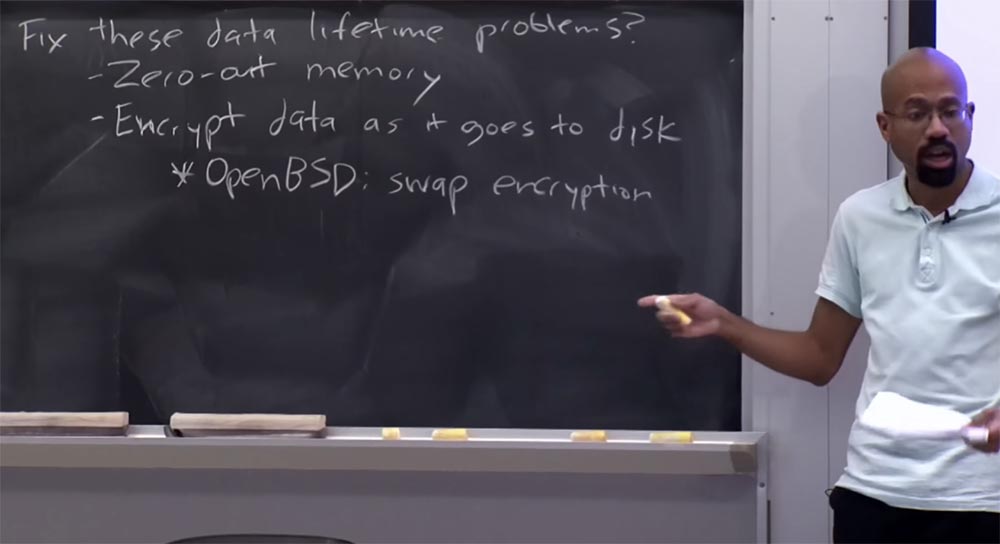

An alternative to zeroing memory is to encrypt data as it goes to stable storage. That is, the data is encrypted before it is written to the SSD or HDD. Then, when the program reads the data back from stable storage, it is decrypted on the fly before being placed in RAM. The interesting thing about this approach is that if you throw away the key used to encrypt and decrypt the data, an attacker will not be able to recover that data from the disk. This assumes, of course, that you fully trust the cryptography.

This is very, very nice, because you no longer have to keep track of every place where you wrote this data. You might ask: why throw away the keys? Because then we can simply treat all of the encrypted data as free space that can be allocated again.

Consider, for example, the OpenBSD operating system, which has a swap encryption option. It associates keys with different sections of the swap space, which is exactly what I just described. Every time you boot the computer, the OS generates a bunch of new keys. When the computer is shut down or rebooted, it forgets all the keys it used to encrypt the swap space. The swap space is then free for reuse, and since the keys are forgotten, we can assume an attacker will not be able to get at what was stored there.
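A minimal sketch of the idea, assuming a random per-boot key that lives only in RAM. A real implementation runs inside the kernel and uses a vetted block cipher. Here a toy SHA-256 counter-mode keystream stands in so the example runs on the Python standard library alone. The class and method names (`EncryptedSwap`, `swap_out`, `swap_in`, `reboot`) are hypothetical.

```python
import hashlib
import os

def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256(key || nonce || counter) blocks, concatenated.
    Illustration only; real swap encryption uses a vetted cipher."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def _xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))

class EncryptedSwap:
    """Hypothetical swap area whose key exists only in RAM for one boot."""

    def __init__(self) -> None:
        self.key = os.urandom(32)  # fresh random key generated at "boot"
        self.disk = {}             # slot -> (nonce, ciphertext): all a disk thief sees

    def swap_out(self, slot: int, page: bytes) -> None:
        nonce = os.urandom(16)     # new nonce per write, so keystreams never repeat
        self.disk[slot] = (nonce, _xor(page, _keystream(self.key, nonce, len(page))))

    def swap_in(self, slot: int) -> bytes:
        nonce, ciphertext = self.disk[slot]
        return _xor(ciphertext, _keystream(self.key, nonce, len(ciphertext)))

    def reboot(self) -> None:
        # Forget the old key. Ciphertext left on disk is now unreadable,
        # so those slots can simply be treated as free space.
        self.key = os.urandom(32)

swap = EncryptedSwap()
secret = b"https://example.com visited in a private window"
swap.swap_out(7, secret)
assert swap.swap_in(7) == secret
swap.reboot()
assert swap.swap_in(7) != secret  # old data decrypts to noise with the new key
```

The design choice mirrors the lecture: nothing needs to track where data was written, because discarding one key makes every old slot unreadable at once.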

Student: Where does the entropy for these keys come from, and could they be guessed?

Professor: That's a good question. I don't know which entropy sources are used here. OpenBSD is famously paranoid about security, so I would guess it uses something like an entropy pool fed by keyboard input and similar sources. I don't know exactly how this OS manages its keys. But you are absolutely right that if the entropy sources are predictable, the effective key space shrinks, which makes the keys more vulnerable.
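To make this point concrete: a key is only as unpredictable as the randomness it was derived from. The sketch below uses a hypothetical `derive_key` function that stretches a 16-bit seed into a 256-bit key with SHA-256. The key looks long, but an attacker who knows the scheme only has to try 2**16 seeds. The 16-bit seed size is an arbitrary assumption chosen so the brute force finishes instantly.

```python
import hashlib
import secrets

SEED_BITS = 16  # assumed size of the predictable entropy source

def derive_key(seed: int) -> bytes:
    """Hypothetical weak scheme: hash a small seed into a 32-byte key."""
    return hashlib.sha256(seed.to_bytes(8, "big")).digest()

def brute_force(target: bytes, seed_bits: int):
    """Try every possible seed; the cost is 2**seed_bits hashes, not 2**256."""
    for guess in range(2 ** seed_bits):
        if derive_key(guess) == target:
            return guess
    return None

victim_seed = secrets.randbelow(2 ** SEED_BITS)
victim_key = derive_key(victim_seed)
recovered = brute_force(victim_key, SEED_BITS)
assert recovered == victim_seed

# A key drawn directly from the OS CSPRNG has no such shortcut.
strong_key = secrets.token_bytes(32)
```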

Note that this scheme only encrypts swap. It assumes that the RAM pages holding the keys are never swapped out themselves, which is easy to do in the OS: you just pin those pages in memory. It also does nothing against someone who can snoop on the memory bus, read kernel memory pages, or the like.
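On POSIX systems, user space can pin pages with `mlock()`. The sketch below uses a hypothetical `PinnedKey` helper that calls libc through `ctypes`. It keeps a key in a buffer the kernel is asked not to swap out and zeroes the key when done. It assumes Linux or another POSIX system. `mlock` can fail under a low `RLIMIT_MEMLOCK`, and in that case the helper just records that the lock was not obtained. As noted above, none of this helps against someone reading the memory bus or kernel memory.

```python
import ctypes
import ctypes.util
import os

# POSIX only: load the C library to reach mlock()/munlock().
_libc = ctypes.CDLL(ctypes.util.find_library("c") or None, use_errno=True)
_libc.mlock.argtypes = [ctypes.c_void_p, ctypes.c_size_t]
_libc.mlock.restype = ctypes.c_int
_libc.munlock.argtypes = [ctypes.c_void_p, ctypes.c_size_t]
_libc.munlock.restype = ctypes.c_int

KEY_LEN = 32

class PinnedKey:
    """Holds a key in a buffer that mlock() asks the kernel never to swap out."""

    def __init__(self) -> None:
        self.buf = ctypes.create_string_buffer(KEY_LEN)
        addr, size = ctypes.addressof(self.buf), ctypes.sizeof(self.buf)
        # mlock() returns 0 on success (the lock covers whole pages).
        self.locked = _libc.mlock(addr, size) == 0
        # Caveat: os.urandom() returns a temporary bytes object that Python may
        # leave in unpinned heap memory; kernel code avoids such copies.
        ctypes.memmove(self.buf, os.urandom(KEY_LEN), KEY_LEN)

    def wipe(self) -> None:
        """Overwrite the key with zeros, then release the lock on its page."""
        ctypes.memset(self.buf, 0, KEY_LEN)
        if self.locked:
            _libc.munlock(ctypes.addressof(self.buf), ctypes.sizeof(self.buf))
            self.locked = False

key = PinnedKey()
print("page locked:", key.locked)
key.wipe()
```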

Student: For private browsing, this helps against an attacker who shows up after the private session ends, because once the key is thrown away, it no longer remains in memory.

Professor: Exactly. The nice thing is that swap encryption does not require any changes to applications.

Student: Going back a bit: if the data is decrypted before it goes into RAM, aren't there memory artifacts left over?

Professor: If I understand your question correctly, you are worried that although the data is encrypted on disk, it sits in RAM as plaintext. Coming back to swap encryption: writing data to disk in encrypted form does not protect against an attacker who can inspect RAM in real time. So if you only care about an attacker who arrives after the private browsing session and cannot inspect RAM in real time, this works fine. But you are definitely right that it does not provide what we might call, for lack of a better term, RAM encryption. There are some research systems that try to do something like this. It is trickier, because at some point, when you touch the hardware, the processor has to operate on real data. For example, to execute an add instruction, the processor needs plaintext operands.

There are also some interesting research systems that try to perform computations directly on encrypted data. It's as mind-bending as the movie "The Matrix." But suffice it to say that data in RAM is usually much less protected than data on stable storage. Any more questions?
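The lecture does not name particular systems, but one well-known family of techniques for computing on encrypted data is homomorphic encryption. As a toy illustration tied to the add-instruction example above, the sketch below implements textbook Paillier encryption, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. The tiny primes are chosen only for speed and provide no security.

```python
import math
import secrets

# Toy parameters: both primes are far too small for real use.
P, Q = 1009, 1013
N = P * Q
N2 = N * N
G = N + 1                                          # standard simple generator choice
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p-1, q-1)
MU = pow(LAM, -1, N)                               # modular inverse (Python 3.8+)

def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N using fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2

def decrypt(c: int) -> int:
    l_value = (pow(c, LAM, N2) - 1) // N           # the Paillier L function
    return (l_value * MU) % N

def add_encrypted(c1: int, c2: int) -> int:
    """Homomorphic addition: whoever runs this never sees the plaintexts."""
    return (c1 * c2) % N2

total = add_encrypted(encrypt(20), encrypt(22))
assert decrypt(total) == 42
```

Only additions are cheap here. Supporting arbitrary computation needs fully homomorphic schemes, which are far more expensive. That cost is part of why protecting data in RAM remains hard.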

Student: We talked about an attacker who acts after the private browsing session ends. But suppose your browser has a public-mode tab and a private-mode tab open, and after you close the private tab, the public one stays open. Can an attacker get at memory artifacts through it?

Professor: That's an interesting question, and at the end of the lecture we will discuss a similar attack. The fact is that most threat models for private browsing do not consider a real-time attacker at all. In other words, they assume that while you are browsing privately, no one else is concurrently using a public tab or anything like that. But you are right that private browsing, as it is often implemented, is not well protected against this.

Suppose you open a private tab, close it after a while, and run off for a cup of coffee. Firefox, for example, keeps statistics such as memory allocation. So if the memory of your private tab has not yet been garbage-collected by the browser, I can see the URLs you visited in that tab, and so on. But, in short, most threat models do not assume an attacker who is active at the same time you are browsing privately.

So swap encryption is useful: it gives you some pretty interesting security properties without changing the browser or any of the applications running on top of it. In practice, the CPU cost of encrypting swap is much smaller than the cost of the I/O itself, especially with a hard disk, whose performance is dominated by its mechanical parts. So swap encryption is not a big performance hit.
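Here is a back-of-envelope check of this argument. The throughput and latency figures are round numbers assumed for illustration, and actual values vary widely by hardware. What matters is the ratio, not the exact values.

```python
# All figures below are illustrative assumptions, not measurements.
PAGE_BYTES = 4096             # assumed page size
CIPHER_BYTES_PER_SEC = 1e9    # assumed cipher throughput (~1 GB/s)
HDD_ACCESS_SEC = 10e-3        # assumed hard-disk seek + rotation (~10 ms)
SSD_ACCESS_SEC = 100e-6       # assumed SSD access latency (~100 us)

def encrypt_time(page_bytes: int, throughput: float) -> float:
    """Seconds of CPU time to encrypt one page at the given throughput."""
    return page_bytes / throughput

cpu = encrypt_time(PAGE_BYTES, CIPHER_BYTES_PER_SEC)
hdd_ratio = HDD_ACCESS_SEC / cpu   # page encryptions that fit in one HDD access
ssd_ratio = SSD_ACCESS_SEC / cpu
print(f"encrypt one page: {cpu * 1e6:.1f} us")
print(f"HDD access ~{hdd_ratio:.0f}x longer; SSD access ~{ssd_ratio:.0f}x longer")
```

With these assumed figures, encrypting a page takes about 4 µs, while one hard-disk access is roughly 2,400 times longer. Even the SSD access is over 20 times longer, which is consistent with the point that the gap is largest for hard disks.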

The next type of attacker we'll look at is the web attacker I mentioned at the beginning of the lecture. Here we assume the attacker controls a website the user will visit in private browsing mode, but does not control the user's local computer. Against this attacker we have two security goals.

First, we don't want the attacker to be able to identify users. Identification here means the attacker can tell apart different users visiting their site.

Second, we don't want the attacker to be able to tell whether the user is in private browsing mode. According to the paper assigned for this lecture, defending against a web attacker is actually quite tricky.

So what does it mean to identify different users? As I said, ideally every user looks exactly the same as every other user visiting the site. A web attacker might want to do one of two specific things. The first is this: "I see that several people visited my site in private browsing mode, and you were the fifth, seventh, and eighth visitor." In other words, linking one user across several private browsing sessions.

The second thing an attacker might want is to link a user's public and private browsing sessions. Suppose I go to amazon.com once in public mode and a second time in private mode. Can the attacker figure out that the same user made both visits?

Student: But isn't all of this tied to IP addresses?

Professor: Yes, that's right. That's a great point, and here I'm assuming the user is not using Tor or anything like it. So yes, we can rely on the user's IP address, which makes identification easy. If the site is visited twice within a short period from the same IP address, it is very likely the same user.
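The naive linking heuristic described here can be sketched in a few lines. The `Visit` record, the `likely_same_user` function, and the 30-minute window are hypothetical choices for illustration. The IP addresses come from the reserved documentation ranges.

```python
from dataclasses import dataclass

@dataclass
class Visit:
    ip: str
    timestamp: float    # seconds since epoch
    private_mode: bool  # the server cannot actually see this; kept for the demo

LINK_WINDOW_SEC = 30 * 60  # assumed: visits within 30 minutes count as "close"

def likely_same_user(a: Visit, b: Visit, window: float = LINK_WINDOW_SEC) -> bool:
    """Naive web-attacker heuristic: same IP address and close in time."""
    return a.ip == b.ip and abs(a.timestamp - b.timestamp) <= window

public_visit = Visit("203.0.113.5", 1_000_000.0, private_mode=False)
private_visit = Visit("203.0.113.5", 1_000_600.0, private_mode=True)
other_user = Visit("198.51.100.9", 1_000_300.0, private_mode=False)

assert likely_same_user(public_visit, private_visit)  # linked despite private mode
assert not likely_same_user(public_visit, other_user)
```

Note that the `private_mode` flag plays no role in the decision. Private browsing changes nothing the heuristic looks at.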

In fact, this is the motivation for tools like Tor, which we will discuss in the next lecture. If you haven't heard of Tor, it is basically a tool that tries to hide things like your IP address. You can think of the browser as layered on top of Tor, with private browsing mode on top of that. This gives you benefits that private browsing mode alone cannot provide. Tor provides some degree of IP address anonymity, but it cannot really guarantee the secrecy of data, limit its lifetime, or the like. You can think of Tor as a necessary, but not sufficient, condition for fully private web browsing.

Interestingly, even if you use Tor, there are still ways for a web server to identify a user by looking at the unique characteristics of their browser.

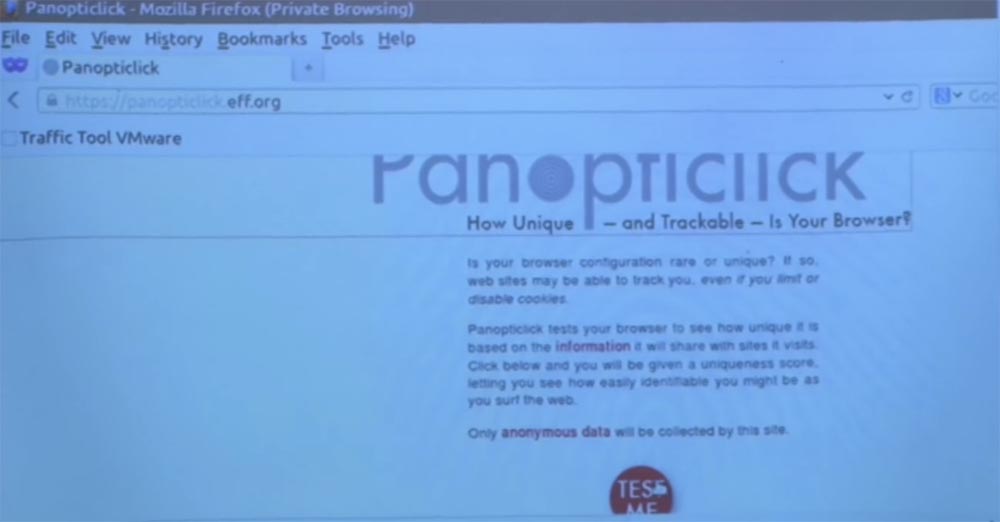

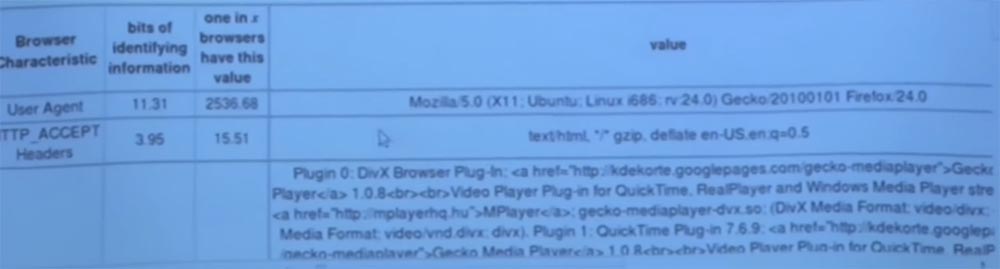

Now I'll show you the last demo for today, using a site called Panopticlick. Some of you may have heard of it. It is an online project run by the EFF, the Electronic Frontier Foundation. The main idea is that it tries to identify you as a user by analyzing various characteristics of your web browser. I'll show you exactly what I mean. We go to the site, you see the "Test me" button, and I click it.

It basically runs a bunch of JavaScript code, and maybe a few applets and a little Java.

The site is trying to fingerprint my browser and find out how much identifying information it reveals.

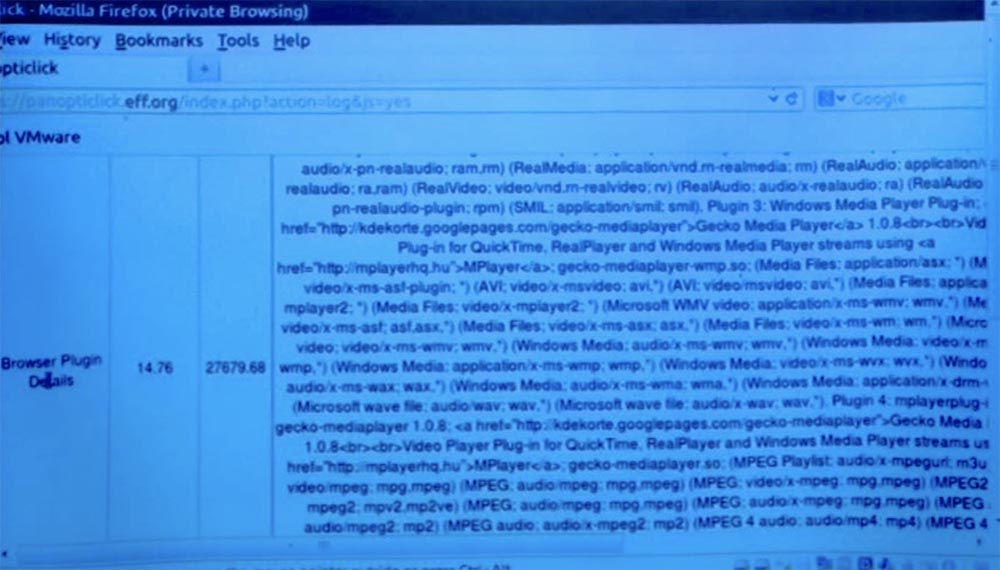

Let me increase the font. You can see that one of the things it wants to find out is which browser plug-ins I use. Basically, the test runs code that checks whether I have Flash installed and which version, whether Java is installed and which version, and so on.

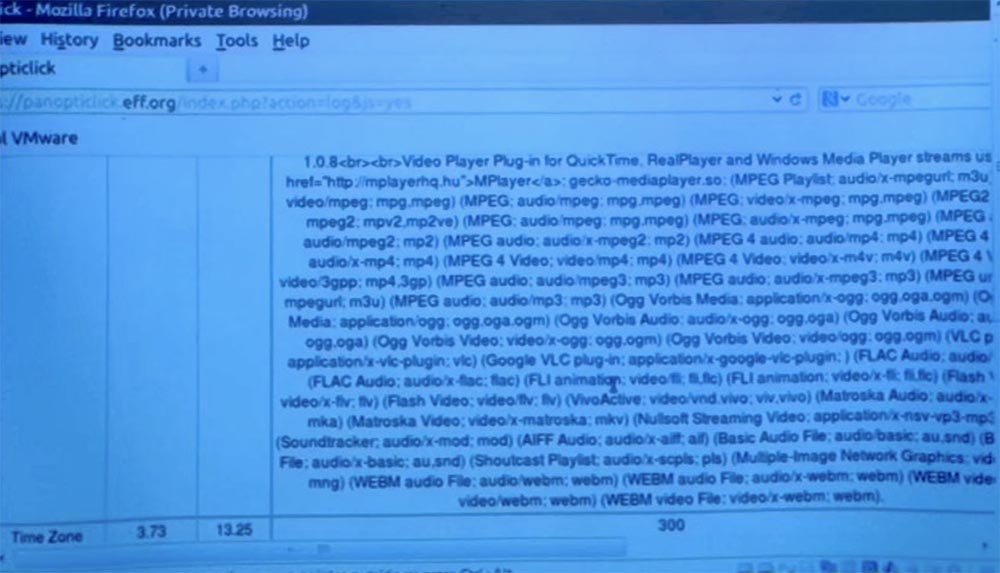

You can see that these plug-ins don't even fit on one screen. These are all the different plug-ins and file formats my browser supports. If you are a security person, this should worry you. If all of these things were actively in use right now, that would be a nightmare!

Ultimately, a web server, that is, a web attacker, can run code like this and figure out which plug-ins your browser has. Now, what are these two columns on the left?

The first column is the number of bits of identifying information. The next column is more interesting: it shows that only one in a given number of browsers has the same set of plug-ins, in this case 1 in 27679 browsers. So this is a fairly precise way to fingerprint me. The number means that very, very few people have a browser with exactly this set of plug-ins and file-format configurations.
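Panopticlick's two columns express the same quantity in two ways. If one in N browsers shares a value, that value carries log2(N) bits of identifying information, often called its surprisal. Below is a minimal sketch of the conversion. The helper names are hypothetical, and the inputs are the figures quoted in this demo.

```python
import math

def surprisal_bits(one_in: float) -> float:
    """Bits of identifying information for a value shared by 1 in `one_in` browsers."""
    return math.log2(one_in)

def one_in(bits: float) -> float:
    """Inverse: how rare a value carrying `bits` of information is."""
    return 2 ** bits

plugins_bits = surprisal_bits(27679.82)  # plug-in list from the demo
screen_bits = surprisal_bits(1.5e6)      # screen size and color depth
print(f"plug-ins: {plugins_bits:.2f} bits, screen: {screen_bits:.2f} bits")
```

With these numbers, the plug-in list carries roughly 14.8 bits and the screen figure roughly 20.5 bits.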

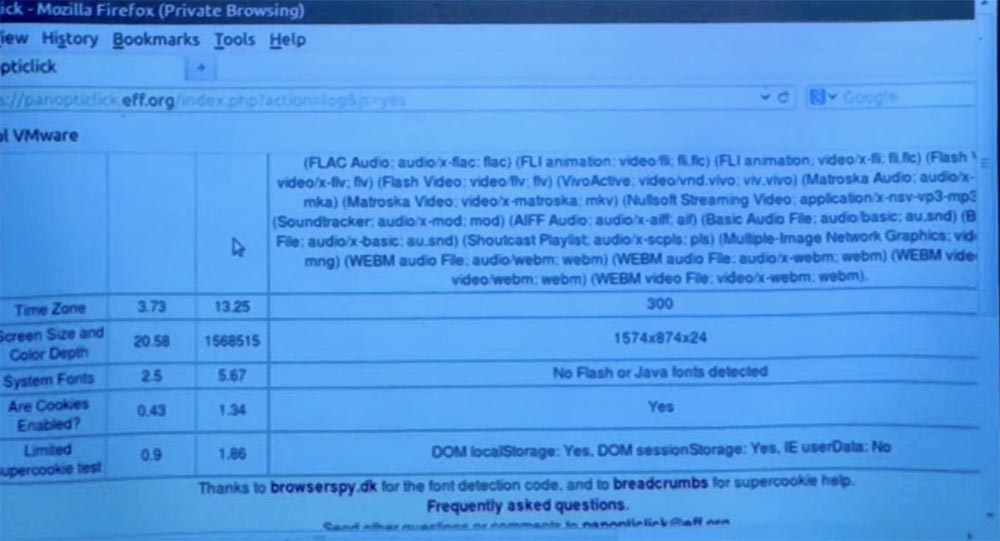

So, as it turns out, they are right: I am a completely unique person. But this creates a big security problem. Let's look at the rest of the test results.

Here are my laptop's screen resolution and color depth: one in 1.5 million computers. This is a pretty shocking finding, because it means only one user in a sample of one and a half million has these screen characteristics.

So these attributes are, in a sense, additive: the more of them an attacker collects, the easier it is to tell that it was you who visited the site. And note that all of this was done by the website alone. I just went to the page and pressed a button, and this is what it could learn. Just a second, I want to show one more thing. I visited Panopticlick in private browsing mode. Now I'll copy the address from the address bar, open a regular, public Firefox window, and run the test again.

Note again: now I'm in public browsing mode, whereas before I was in private mode. If you look at browser plug-ins, the fingerprint uniqueness figure is almost unchanged: one in 27679.82 versus one in 27679.68. The difference comes from a few plug-ins that may or may not be loaded, depending on how private mode is implemented. But look: fingerprinting is still easy. The screen size and color depth figures are identical in public and private modes.

So fingerprinting works about equally well in both modes. This is one reason it is so hard to defend against this kind of web attack: the browser's own configuration carries a lot of information that an attacker can use to identify you.
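The remark that these attributes are additive can be made concrete. If the attributes were statistically independent, their bits would simply add. Because log2(a) + log2(b) = log2(a·b), that is the same as multiplying the "one in N" figures. Independence is an assumption here. Real attributes are often correlated, and correlated attributes usually add less information than this estimate suggests. The figures are the ones read off the demo.

```python
import math

# Figures read off the demo; independence between attributes is ASSUMED.
ATTRIBUTE_ONE_IN = {
    "plugins": 27679.82,
    "screen_size_and_color_depth": 1.5e6,
}

def combined_bits(one_in_values) -> float:
    """Total surprisal, exact only if the attributes are independent."""
    return sum(math.log2(v) for v in one_in_values)

total_bits = combined_bits(ATTRIBUTE_ONE_IN.values())
anonymity_set = 2 ** total_bits  # roughly "one in this many browsers"
print(f"{total_bits:.1f} bits, about one in {anonymity_set:.3g} browsers")
```

Under this assumption, the two attributes together single out about one browser in 4.2 × 10^10, which is more than the number of people on Earth.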

Student: I'm curious about the screen size and color depth. How does that work? Why are they so unique? How many screen sizes and color depths can there be?

Professor: I think there is actually some magic hidden behind that label, which Panopticlick uses to figure this out. In general, how do many of these tests work? Some parts of your browser can be inspected with JavaScript alone. For example, JavaScript code can look at the properties of the window object, which is the global object in browser JavaScript. It can see how the page lays out this widget, the neighboring widget, the text, the plug-ins, and so on. Such pages also typically exploit the fact that Java applets and Flash objects can look up more interesting things, such as the fonts available on your computer. So my guess is that for screen size and color depth, the test launches an applet that queries your graphics card or Java's GUI layer and checks various aspects of how they render the page.

So I think this figure actually covers more than just screen size and color depth, and the label is simply shorthand.

That is how all these techniques work at a high level. First, you collect whatever information you can get by poking around with JavaScript. Then you run a bunch of plug-ins, which can usually access more things, and see what they find. Finally, you combine all of it into an overall picture.
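The final step of combining everything into an overall picture can be sketched as hashing all the collected attributes into one identifier. This is a minimal sketch that assumes a hypothetical `fingerprint` function on the attacker's server. The attribute names and values are invented for illustration and are not Panopticlick's actual schema. As the demo showed, private mode leaves these attributes unchanged, so the same identifier appears in both sessions.

```python
import hashlib
import json

def fingerprint(attributes: dict) -> str:
    """Hypothetical server-side step: canonicalize the collected attributes
    and hash them into a short, stable identifier."""
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]

# Invented example values; a real script would gather them via JavaScript/plugins.
private_session = {
    "user_agent": "Mozilla/5.0 (X11; Linux x86_64) Firefox/33.0",
    "screen": "1920x1080x24",
    "plugins": ["Shockwave Flash 15.0", "Java 1.7"],
    "timezone_offset_min": 300,
}
public_session = dict(private_session)  # same machine and browser, public mode

assert fingerprint(private_session) == fingerprint(public_session)  # sessions linked
```

Sorting the keys before hashing makes the identifier independent of the order in which attributes were collected. Any attribute that differs between two users, such as screen size, yields a different identifier.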

Is that clear?


54:00

MIT course "Computer Systems Security". Lecture 18: "Private Internet Browsing", part 3



Source: https://habr.com/ru/post/430206/
