Likelihood, P-values and the reproducibility crisis

Or: How switching from publishing P-values to publishing likelihood functions will help us cope with the reproducibility crisis. The personal opinion of Eliezer Yudkowsky.

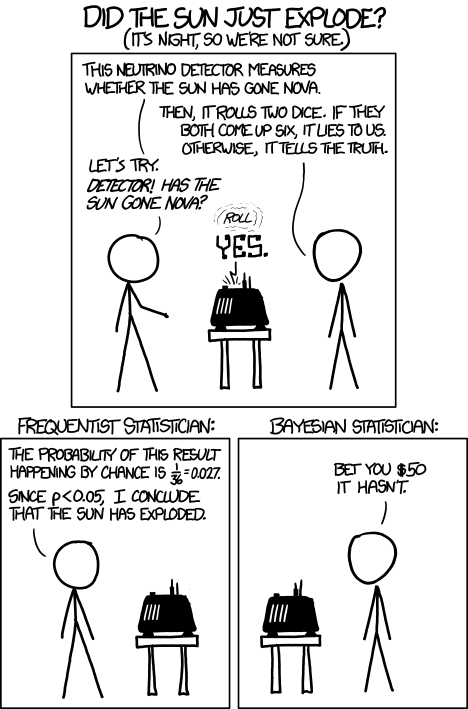

Translator's commentary: Yudkowsky, the author of HPMOR, the creator of LessWrong, and so on and so forth, has set out his position on the use of Bayesian statistics in the natural sciences in the form of a dialogue. It is a dialogue in the classic manner of antiquity or the Renaissance, with characters setting out ideas and exchanging barbs amid convoluted arguments, and with the inevitable slow-witted Simplicio. The dialogue is quite long, about twenty minutes of reading, but in my opinion it's worth it.


Moderator: Good evening. Today in our studio: a Scientist, a practicing specialist in the field of... chemical psychology or something like that; his opponent, a Bayesian, who intends to prove that the reproducibility crisis in science can somehow be overcome by replacing P-values with something from Bayesian statistics...

Student: Sorry, how do you spell it?

Moderator: ...and finally, to my right, a Student who doesn't understand anything.

Moderator: Bayesian, could you start by telling us the essence of your proposal?

Bayesian: Roughly speaking, the essence is this. Suppose we have a coin. We flip it six times and observe the sequence OOOOOR (translator's note: here and below, O stands for heads, from the Russian “orel”, and R for tails, from “reshka”). Should we suspect that something is wrong with the coin?

Scientist: No.

Bayesian: The coin is just an example. Suppose we offer volunteers a plate with two cookies: one with green sprinkles and one with red. The first five people take the green cookie, and the sixth takes the red one. Should we conclude that people prefer cookies with green sprinkles, or is it better to treat this result as random?

Student: I suppose one might suspect that people prefer green sprinkles. At least, that psychology students who volunteer for strange experiments like green sprinkles more. Even six observations are enough to raise that suspicion, although I suspect there's some kind of trick here.

Scientist: I don't think this is suspicious yet. Many hypotheses look promising at N = 6 but don't hold up at N = 60.

Bayesian: Personally, I would suspect that our volunteers don't prefer red sprinkles, or at least don't prefer them very much. But in general, I came up with these examples only to show how P-values are computed in modern scientific statistics and what is wrong with them from the Bayesian point of view.

Scientist: Can't you think of a more realistic example with 30 volunteers?

Bayesian: I could, but then the Student wouldn't understand anything.

Student: That's for sure.

Bayesian: So, dear experts: heads, heads, heads, heads, heads, tails. Attention, the question: would you call this result “statistically significant” or not?

Scientist: Mr. Moderator, it is not significant. Under the null hypothesis that the coin is fair (or the similar null hypothesis that sprinkle color does not affect which cookie is chosen), a result this extreme or more extreme occurs in 14 out of 64 cases.

Student: Let me check that I understand. We count outcomes like OOOOOR, OOOORO, ..., OOOOOO, and their mirror images RRRRRO, ..., RRRRRR as “equally or more extreme”. There are 14 of them in total, and there are 2^6 = 64 possible outcomes for 6 flips. 14/64 is about 22%, which is above 5%, so the result is not significant at the p < 0.05 level. Right?
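The Student's count can be checked by brute force. This is a minimal Python sketch. It assumes, as in the translator's note, that O is heads and R is tails. The function name `two_sided_extreme_count` is ours and is for illustration only. The same enumeration also gives the 2-out-of-64 figure that the Scientist mentions for six identical results.

```python
from itertools import product

def two_sided_extreme_count(n_flips, observed_majority):
    """Count length-n sequences whose larger side (heads or tails) has at
    least `observed_majority` flips, i.e. the 'equally or more extreme' class."""
    count = 0
    for seq in product("OR", repeat=n_flips):
        majority = max(seq.count("O"), seq.count("R"))
        if majority >= observed_majority:
            count += 1
    return count

total = 2 ** 6                                # 64 possible sequences of six flips
extreme = two_sided_extreme_count(6, 5)       # OOOOOR has five of one side
print(extreme, total, extreme / total)        # 14 64 0.21875
all_same = two_sided_extreme_count(6, 6)      # only OOOOOO or RRRRRR
print(all_same / total)                       # 0.03125
```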

Scientist: Right. I would also note that in practice, even with an outcome of OOOOOO, it would not be worth stopping the experiment and writing a paper claiming that the coin always comes up heads.

Bayesian: Besides, if you can stop flipping the coin at any time, you have to ask yourself: “How likely is it that I can find some moment to stop at which the number of heads looks significant?” In the P-value paradigm, that is a completely different story.

Scientist: I only meant that six trials aren't serious, even if all we're studying is cookie color. But yes, you're right too.

Student: Why does it matter at all whether I can stop flipping the coin or not?

Bayesian: What a wonderful question.

Scientist: The thing is, P-values are complicated. You can't just take the numbers, feed them into a program, and publish whatever it outputs. Suppose you decide in advance to flip a coin exactly six times and then stop regardless of the result. Then you will get OOOOOO or RRRRRR on average 2 times out of 64, or in 3.1% of cases. That is significant at p < 0.05. But suppose that you are actually a lying, shameless fraud. Or just an incompetent student who doesn't understand what he is doing. Instead of choosing the number of flips in advance, you keep flipping until you get a result that looks statistically significant. It would be statistically significant if you had decided in advance to flip exactly that many times. But in fact, you didn't decide in advance. You decided to stop only after you saw the results. You can't do that.

Student: Okay, I've read about this somewhere, but I never understood what was wrong with it. It's my research, and I should know better than anyone whether there's enough data or not.

Scientist: The whole point of P-values is to create a test that the null hypothesis cannot pass. In other words, to make sure that smoke without fire doesn't happen too often. To do this, the study must be organized so that it does not generate “statistically significant” discoveries when the phenomenon in question is absent. If you flip a coin exactly six times (and fix that number in advance), then the probability that a fair coin gives six heads or six tails is less than 5%. Now suppose you flip the coin as many times as you like, and after each flip you recompute the P-value, pretending the number of flips was fixed in advance. Then the chance of sooner or later getting p < 0.05 is much greater than 5%. So such an experiment detects smoke without fire much more often than 1 time in 20.
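The Scientist's claim about optional stopping can be checked with a small simulation. This is a sketch under stated assumptions. It uses an exact two-sided binomial p-value for a fair coin and re-checks it after every flip, up to a cap of 100 flips. The cap, the seed and the function names are our illustrative choices, not anything from the dialogue. Every simulated coin is fair, so every "significant" run is smoke without fire.

```python
import random
from math import comb

def two_sided_p(n, k):
    """Exact two-sided p-value for k heads in n flips of a fair coin,
    computed as if n had been fixed in advance."""
    d = abs(2 * k - n)
    tail = sum(comb(n, j) for j in range(n + 1) if abs(2 * j - n) >= d)
    return tail / 2 ** n

def false_alarm_rate(max_flips=100, alpha=0.05, runs=2000, seed=1):
    """Fraction of fair-coin runs in which a researcher who re-checks the
    p-value after every flip sees p < alpha at least once."""
    # Precompute which (n, k) pairs a fixed-n test would call 'significant'.
    significant = [[two_sided_p(n, k) < alpha for k in range(n + 1)]
                   for n in range(max_flips + 1)]
    rng = random.Random(seed)
    hits = 0
    for _ in range(runs):
        heads = 0
        for n in range(1, max_flips + 1):
            heads += rng.random() < 0.5      # one flip of a fair coin
            if significant[n][heads]:        # stop as soon as it 'looks significant'
                hits += 1
                break
    return hits / runs

print(two_sided_p(6, 6))     # 0.03125: fixed n = 6, all six flips the same
print(false_alarm_rate())    # well above 0.05
```

The precomputed table only keeps the simulation fast. The stopping rule is exactly the one the Scientist describes.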

Bayesian: Personally, I like to put the problem like this. Say you flip a coin and get OOOOOR. Suppose that in the depths of your heart, known only to Allah (for Allah is all-wise and all-knowing), you had fixed the number of flips in advance. Then the result is not significant: p = 0.22. Suppose instead that after three months of fasting you had vowed to Saint Francis to keep flipping the coin until tails came up. Then the same result is statistically significant with a quite respectable p = 0.03. That is because the chance that a fair coin makes you wait six or more flips for the first tails is 1/32.

Student: What?

Scientist: It's a parody, of course. In practice, no one flips a coin until the first tails and then stops. But in general the Bayesian is right: that is exactly how P-values work. Strictly speaking, we are trying to find out how rare the result is among all the results we could have gotten. A person who flips a coin until the first tails can get the results {R, OR, OOR, OOOR, OOOOR, OOOOOR, ...}. The class of results that take six or more flips is {OOOOOR, OOOOOOR, OOOOOOOR, ...}, whose total probability is 1/64 + 1/128 + 1/256 + ... = 1/32. A person who flips a coin exactly six times gets one of the results in the class {OOOOOO, OOOOOR, OOOORO, ..., RRRRRR}, which has 64 elements. For the purposes of that experiment, OOOOOR is equivalent to OOOORO, OOOROO, and the like. So yes, this is all pretty counterintuitive. If we had actually carried out the first experiment, OOOOOR would be a significant result, one that is unlikely with a fair coin. If we had carried out the second experiment, OOOOOR would not be significant, because even a fair coin produces something like it from time to time.
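The two p-values for the same data, OOOOOR, can be computed side by side. This is a minimal sketch using exact fractions. The two functions are hypothetical helpers, one per stopping rule. Only the stopping rule differs between them, and the observed flips are identical.

```python
from fractions import Fraction
from math import comb

def p_fixed_n(n, heads):
    """Plan: flip exactly n times. 'At least as extreme' means the majority
    side has at least as many flips as observed (two-sided)."""
    majority = max(heads, n - heads)
    count = sum(comb(n, j) for j in range(n + 1) if max(j, n - j) >= majority)
    return Fraction(count, 2 ** n)

def p_until_first_tails(n):
    """Plan: flip until the first tails. Seeing it on flip n puts us in the
    class {first tails on flip n, n+1, ...}: the first n-1 flips were heads.
    That has probability (1/2)**(n-1), the sum 1/2**n + 1/2**(n+1) + ..."""
    return Fraction(1, 2 ** (n - 1))

# Same data, OOOOOR: six flips, five heads, tails last.
print(p_fixed_n(6, 5))          # 7/32, about 0.22
print(p_until_first_tails(6))   # 1/32, about 0.03
```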

Bayesian: Doesn't it worry you at all that the results of the experiment depend on what you were thinking?

Scientist: It's a matter of conscience. Any research is worth little if you lie about its results, that is, literally about which side the coin landed on. If you lie about what kind of experiment was conducted, the effect is the same. So you just have to report honestly which rules you used for flipping. Of course, what is in a scientist's head is less visible than which side a coin landed on. So it is always possible to tweak the analysis parameters, not mention how the number of subjects was determined, or pick the statistical test that confirms your favorite hypothesis... You can come up with a lot of things if you want to, and it's easier than falsifying the raw data. In English this is called p-hacking. In practice, of course, people use much less obvious ways of creating smoke without fire than a crude null hypothesis invented after the fact. This is a serious problem, and the reproducibility crisis is connected with it to some extent, although it is not clear to what extent.

Student: That... sounds reasonable? I suppose this is one of those things where you have to work through lots of examples for a long time, and then it all becomes clear?

Bayesian: No.

Student: Meaning?

Bayesian: Meaning, “Student, you were right from the very beginning.” If what the experimenter thinks doesn't affect which side the coin lands on, then his thoughts shouldn't affect what the result of the flip tells us about the universe. My dear Student, the statistics you are taught is nothing more than a heap of crooked crutches that nobody even bothered to make internally consistent. For God's sake, it gives different answers depending on what's going on in your head! And that is a much more serious problem than the tendency of some scientists to fib a little in their “Materials and Methods”.

Scientist: That is... a serious statement, to say the least. But tell me, I beg you: what are we poor souls to do?

Bayesian: Analyze it like this. This particular result, OOOOOR, comes up in six flips of a perfectly balanced coin with probability 1/64, or about 1.6%. Suppose we already suspected that our coin was not perfectly balanced. And not just slightly off, but biased so that it comes up heads five times out of six on average. This is a wild simplification, of course, but I'll get to realistic hypotheses a bit later. This hypothetical cheater's coin produces the sequence OOOOOR with probability (5/6)^5 × (1/6)^1, which is about 6.7%. So we have two hypotheses: “The coin is perfectly ordinary” and “The coin comes up heads in 5/6 of cases.” This particular result is 4.3 times more likely under the second than under the first. Now take another hypothetical trick coin, one that comes up tails five times out of six. For it, the probability of the sequence OOOOOR is 0.01%. So if someone thought that this second coin was in front of us, we now have a good argument against his hypothesis. This particular result is 146 times more likely for a fair coin than for a coin that comes up heads only once in six flips. Similarly, our hypothetical lovers of red cookies would be much less likely to eat green ones.
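The Bayesian's numbers can be reproduced in a few lines. This is a sketch that treats each flip as independent with a fixed probability of heads, which is the simplification the Bayesian states. The helper name `likelihood` is illustrative. The point is that the computation uses only the exact sequence observed, with no class of "equally extreme" outcomes and no stopping rule.

```python
def likelihood(seq, p_heads):
    """Probability of this exact sequence if each flip independently comes up
    heads (O) with probability p_heads and tails (R) otherwise."""
    result = 1.0
    for flip in seq:
        result *= p_heads if flip == "O" else 1 - p_heads
    return result

data = "OOOOOR"
fair = likelihood(data, 1 / 2)            # 1/64, about 1.6%
loaded_heads = likelihood(data, 5 / 6)    # about 6.7%
loaded_tails = likelihood(data, 1 / 6)    # about 0.01%
print(loaded_heads / fair)                # ≈ 4.29
print(fair / loaded_tails)                # ≈ 145.8
```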

Student: Okay, I think I follow the math. But frankly, I don't get what it means.

Bayesian: I'll explain in a moment, but first note this: the results of my calculations do not depend on why the coin was flipped exactly six times. Maybe after the sixth flip you decided there was already enough data. Maybe, after a series of five flips, Namagiri Thayar appeared to you in a dream and advised you to flip the coin once more. The coin doesn't care. The fact remains: this particular sequence OOOOOR is four times less likely for a fair coin than for a coin that comes up heads five times out of six.

Scientist: I agree, your calculations have at least one useful property. What's next?

Bayesian: Then you publish the results in a journal, preferably together with the raw data, because then anyone can compute the likelihood of any hypothesis. Suppose someone unexpectedly becomes interested in the hypothesis “The coin comes up heads 9 times out of 10, not 5 times out of 6.” Under that hypothesis, the sequence OOOOOR has probability 5.9%. That is slightly less than under our five-heads-in-six hypothesis (6.7%), but 3.8 times more than under the hypothesis that the coin is perfectly balanced (1.6%). It is impossible to think up all possible hypotheses in advance, and it isn't necessary. It is enough to publish the complete data. Then anyone with a hypothesis can easily compute the likelihood they need. The Bayesian paradigm calls for publishing raw data, because the focus is on the specific result, not on a class of supposedly equivalent outcomes.
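Because only the raw counts matter, a reader with the published data can evaluate any hypothesis afterwards, including ones nobody thought of in advance. This is a minimal sketch. It again assumes independent flips with a fixed heads probability. The grid of 1001 candidate biases is an arbitrary illustrative choice.

```python
def likelihood(seq, p_heads):
    """Likelihood of the exact sequence: only the counts of O and R matter."""
    heads, tails = seq.count("O"), seq.count("R")
    return p_heads ** heads * (1 - p_heads) ** tails

data = "OOOOOR"
for p in (1 / 2, 5 / 6, 9 / 10):
    print(p, likelihood(data, p))       # ≈ 0.0156, 0.0670, 0.0590

# A hypothesis nobody planned for: 'heads 9 times out of 10' vs a fair coin.
print(likelihood(data, 0.9) / likelihood(data, 0.5))   # ≈ 3.78

# Scanning many hypotheses at once: the best-supported bias is near 5/6.
grid = [i / 1000 for i in range(1001)]
best = max(grid, key=lambda p: likelihood(data, p))
print(best)                             # 0.833
```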

Scientist: On this I agree with you: publishing complete data sets is one of the most important steps toward overcoming the reproducibility crisis. But personally, I don't understand what I'm supposed to do with all these “A is so many times more likely than B” statements.

Student: Me too.

Bayesian: It's not entirely trivial... Have you read our introduction to Bayes' rule?

Student: Great. Just what I was missing: another statistics textbook.

Bayesian: You really can read it in an hour. It's just that this is literally nontrivial, that is, it requires explanation. But fine, in the absence of the full introduction, I'll try to come up with something. It will probably sound reasonable, and the logic really is correct, but that doesn't make it self-evident. Here goes. There is a theorem that proves the following reasoning is correct:

(The Bayesian takes a deep breath)

Bayesian: Let's say Professor Plume and Miss Scarlet are suspected of murder. Having studied both of their biographies, we judge that the professor is a priori twice as likely to kill someone as Miss Scarlet. That's our starting point. It then turns out that the victim was poisoned. We know that if Professor Plume were to kill someone, he would use poison with a probability of 10% (and in 9 cases out of 10 he would prefer, say, a revolver). Miss Scarlet, if she decides to kill, uses poison with a probability of 60%. In other words, poison is six times less likely from the professor than from Miss Scarlet. Since we have new information, namely the method of murder, we must update our estimate: Plume is now three times less likely than Scarlet to be the killer, since 2 × 1/6 = 1/3.

Student: I'm not sure I understood that. What does the phrase “Professor Plume is three times less likely to be the murderer than Miss Scarlet” mean?

Bayesian: It means that if we have no other suspects, the probability that the victim was killed by Plume is 1/4, and the remaining 3/4 is the probability that the killer is Miss Scarlet. So the probability of the professor's guilt is three times lower than Miss Scarlet's.
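The update the Bayesian walks through is just "prior odds times likelihood ratio". This minimal sketch uses exact fractions and assumes, as the dialogue does, that Plume and Scarlet are the only suspects. The variable names are illustrative.

```python
from fractions import Fraction

prior_odds = Fraction(2, 1)          # Plume : Scarlet, before the method is known
p_poison_plume = Fraction(1, 10)     # P(poison | Plume is the killer)
p_poison_scarlet = Fraction(6, 10)   # P(poison | Scarlet is the killer)

likelihood_ratio = p_poison_plume / p_poison_scarlet   # 1/6
posterior_odds = prior_odds * likelihood_ratio         # 1/3, i.e. 1:3
p_plume = posterior_odds / (1 + posterior_odds)        # valid only with two suspects
print(posterior_odds, p_plume, 1 - p_plume)            # 1/3 1/4 3/4
```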

Scientist: And now I'd like to know what you mean by “probability of guilt.” Plume either committed the murder or he didn't. We can't take a sample of murders and find that Plume is guilty of a quarter of them.

Bayesian: I was hoping not to get into this, but oh well. My good Scientist, I mean the following. If you offered me a bet at 1:1 odds on whether or not Plume killed the victim, I would bet that he didn't. But suppose the terms were that I pay you $1 if he is innocent and you pay me $5 if he is guilty. Then I would gladly bet on his guilt. The 2012 presidential election was held only once, and “the probability of Obama winning” is the same conceptually vague thing as “the probability of Plume's guilt.” But if on November 7 you had been offered a $10 bet on Obama that paid $1000 if he won, you would hardly have refused it. In general, when prediction markets and large, liquid betting pools price an event at 6:4, that event happens about 60% of the time. Markets and pools are well calibrated for probabilities in this range. Suppose they were poorly calibrated, that is, events priced at 6:4 happened 80% of the time. Then someone would notice and get rich betting on them, and in doing so would push the price up until the market became well calibrated. And since events that the market prices at 70% do happen about 7 times out of 10, I don't see why anyone should insist that such a probability is meaningless.

Student: I admit, that sounds convincing. But I'm sure it only seems that way to me, and in fact there's a whole pile of tricky arguments for and against.

Bayesian: There really is a pile of arguments, but the general conclusion is that your intuitive picture is pretty close to the truth.

Scientist: Well, we'll come back to this. And what if there are two agents, both in your terms “well calibrated”, but one of them claims “60%” and the other says “70%”?

Bayesian: Suppose I flip a coin and don't see which side it landed on. My ignorance is then not information about the coin; it is information about me. It exists in my head, not in the world, just as blank spots on a map don't mean there is no territory there. If you looked at the coin and I didn't, it's quite reasonable for you and me to be in different states of uncertainty about it. Since I'm not one hundred percent certain, it makes sense for me to express my uncertainty as a probability. There are some three hundred theorems to the effect that if someone's way of expressing uncertainty is not in fact a probability distribution, so much the worse for him. For some reason it always turns out the same way. If an agent's reasoning under uncertainty violates any of the standard axioms of probability theory, the earth opens up, water turns to blood, and dominated strategies and sure-loss bets rain down from the heavens.

Scientist: All right, I may have gone too far there. We'll come back to this too, but first answer my question: what should we do with the likelihoods once we have them?

Bayesian: According to the laws of probability theory, these likelihoods are evidence. They are what force us to shift our odds from 2:1 in favor of Plume to 3:1 in favor of Scarlet. If I have two hypotheses and the likelihood of the data under each, I must update my beliefs in the way described above. If I update them any other way, the heavens open, dominated strategies pour down, and so on. Bayes' theorem is not just a statistical method, it is a LAW.

Student: I'm sorry, but I still don't understand. Suppose we are conducting an experiment. Let's say the results we got are six times more likely if Herr Troupe was killed by Professor Plume than if Miss Scarlet was the killer (translator's note: the Student has evidently mixed up the two suspects' poison-use likelihoods). Should we arrest the professor or not?

Scientist: I think we first need to come up with a more or less realistic prior probability, for example, “a priori, I believe the probability that Plume killed Herr Troupe is 20%.” A probability of 20% corresponds to odds of 1:4. Multiplying by the likelihood ratio of 6:1 gives a posteriori odds of 6:4, that is, 3:2, that Plume did kill Herr Troupe. Then we can say that Plume is guilty with a probability of 60%, and let the prosecutor's office sort it out.
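The Scientist's arithmetic hinges on converting between probabilities and odds. This sketch shows the two conversions with exact fractions. The function names are illustrative. It checks only the arithmetic, not whether such a posterior belongs in a paper.

```python
from fractions import Fraction

def prob_to_odds(p):
    """Convert a probability p into odds p : (1 - p), returned as one number."""
    return p / (1 - p)

def odds_to_prob(odds):
    """Convert odds (for : against, as one number) back into a probability."""
    return odds / (1 + odds)

prior_odds = prob_to_odds(Fraction(1, 5))     # 20% -> 1/4, i.e. 1:4
posterior_odds = prior_odds * 6               # likelihood ratio 6:1 -> 6/4 = 3/2
print(posterior_odds, odds_to_prob(posterior_odds))   # 3/2 3/5
```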

Bayesian: No! For heaven's sake! Do you really think that's how Bayesian statistics works?

Scientist: Doesn't it? I have always believed that its main advantage is that it gives us a posteriori probabilities, which P-values don't really give. Its main drawback is that it requires a priori probabilities. Since those have to be pulled more or less out of thin air, the correctness of the a posteriori probabilities can be challenged until the end of time.

Bayesian: Papers should publish likelihoods. More precisely, they should publish the raw data and compute a few likelihoods of interest from it. But certainly not a posteriori probabilities.

Student: I'm confused again. What are a posteriori probabilities?

Bayesian: An a posteriori probability is a statement like “Herr Troupe was killed by Professor Plume with a probability of 60%.” As my colleague has already noted, such statements do not follow from P-values. And in my opinion, they have no place in experimental papers, because they are not the results of an experiment.

Student: But... okay, Scientist, a question for you. Let's say we got results with p < 0.01. That is, results with a probability of less than 1% under the null hypothesis “Professor Plume did not kill Herr Troupe.” Should we arrest him or not?

Scientist: First, that is not a realistic null hypothesis. The null hypothesis would more likely be something like “Nobody killed Herr Troupe” or “All suspects are equally guilty.” But suppose your null hypothesis did work, and we could reject Plume's innocence at p < 0.01. It would still be impossible to say that Plume is guilty with a probability of 99%. P-values don't tell us that.

Student: And what do they report then?

Scientist: They tell us that the observed data belong to a certain class of possible outcomes, and that results in this class occur less than 1% of the time if the null hypothesis is true. Beyond that, the p-value means nothing. You can't just go from p < 0.01 to “Professor Plume is guilty with a probability of 99%.” The Bayesian can probably explain why better than I can. In general, in science you can't interpret one thing as something else. Numbers mean exactly what they mean, no more and no less.

Student: Just great. At first I didn't understand what to do with likelihoods, and now I still don't understand what to do with P-values. What experiment would it take to finally send Plume to prison?

Scientist: In practice? If another pair of experiments in other laboratories confirms his guilt with p <0.01, then most likely he is really guilty.

Bayesian: A “reproducibility crisis” is when the case is later reopened and it turns out he didn't commit the murder.

Scientist: In general, yes.

Student: That's rather unpleasant.

Scientist: Life is generally an unpleasant thing.

Student: So... Bayesian, you probably have a similar answer? Something like: if the likelihood ratio is large enough, say 100:1, then in practice we can assume the corresponding hypothesis is true?

Bayesian: Yes, but it's somewhat more complicated. Suppose I flip a coin 20 times and get OOOROOOROROROROOOOOO. The catch is the hypothesis “The coin is guaranteed to produce the sequence OOOROOOROROROROOOOOO.” Its likelihood is about a million times higher than the likelihood of the hypothesis “The coin comes up heads or tails with equal probability.” In practice, unless you handed me that hypothesis in a sealed envelope before the experiment began, I will consider it badly overfitted. I will have to give it a complexity penalty of at least 2^20 : 1, because just describing the sequence takes 20 bits. In other words, I lower its prior probability enough to at least cancel out its likelihood advantage. And that's not the only pitfall. Still, if you understand how and why Bayes' rule works, you can figure things out case by case. Suppose the likelihood ratio for Plume versus any other suspect is 1000:1, and there are only six suspects in all. Then we can assume that the prior odds were hardly much worse than 10:1 against his being the murderer. If so, the a posteriori odds are about 100:1, and we can assume that he is guilty with a probability of 99%.
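Both calculations in this answer are plain odds arithmetic. This sketch uses exact fractions. It assumes the penalty rule the Bayesian states, a factor of 2 per bit needed to write the hypothesis down, and the rough 1:10 prior for Plume. Neither is a general recipe.

```python
from fractions import Fraction

n_flips = 20
# Likelihood of one specific 20-flip sequence under each hypothesis.
lik_fair = Fraction(1, 2 ** n_flips)   # fair coin
lik_exact = Fraction(1)                # 'the coin always produces exactly this sequence'
lr = lik_exact / lik_fair              # 2**20 = 1,048,576, 'about a million'

# Complexity penalty: one factor of 2 per bit needed to describe the sequence.
prior_odds = Fraction(1, 2 ** n_flips)
posterior_odds = prior_odds * lr       # 1: the penalty exactly cancels the advantage
print(lr, posterior_odds)              # 1048576 1

# Plume: prior odds about 1:10 against, likelihood ratio 1000:1 in favor of guilt.
plume_posterior_odds = Fraction(1, 10) * 1000           # 100:1
p_guilty = plume_posterior_odds / (1 + plume_posterior_odds)
print(p_guilty, float(p_guilty))                        # 100/101, about 0.99
```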

Scientist: But you still shouldn't write that in the paper?

Bayesian: Right. How to put it... The key condition of Bayesian analysis is that it takes all the relevant information into account. You can't exclude data from the analysis just because you don't like it. That is actually a key condition of science as such, regardless of which statistics are used. There are plenty of papers whose conclusions came out the way they did only because some factor wasn't taken into account or the sample was unrepresentative in some respect. What am I getting at? How do I, as an experimenter, know what “all the relevant information” is? Who am I to compute a posteriori probabilities? Maybe someone has published a paper with additional data and additional likelihoods that I should have taken into account, and I just haven't read it yet. So I just publish my data and my likelihood functions, and that's it! I can't claim that I've considered all the arguments and can now offer reliable a posteriori probabilities. And even if I could, another paper may come out in a week and make those probabilities obsolete.

Student: So, roughly speaking, the experimenter just publishes his data, computes some likelihoods for it, and that's all? And someone else decides what to do with them?

Bayesian: Someone will have to choose a priori probabilities: equal ones, maximum-entropy ones, ones with complexity penalties, or something else. Then they have to gather all the available data, compute the likelihoods, make sure the result isn't crazy, and so on and so forth. And they'll have to redo it if a new paper comes out in a week.

Student: That sounds pretty laborious.

Bayesian: It would be much worse if we had to do a meta-analysis of P-values. Updating Bayesian probabilities is much easier: you just multiply the old a posteriori probabilities by the new likelihood functions and normalize. That's it. If experiment 1 gives a likelihood ratio of 4:1 for hypotheses A and B, and experiment 2 gives a likelihood ratio of 9:1 for them, then together they give a ratio of 36:1. That's all.
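The "multiply and normalize" step can be written as one small function. This is a sketch with hypothetical hypotheses A and B and a 1:1 prior. The absolute likelihood values 4, 9 and 1 are stand-ins, because only their ratios affect the result. Applying the two experiments in either order gives the same answer.

```python
from fractions import Fraction

def posterior(prior, likelihoods):
    """Multiply each hypothesis's prior by its likelihood, then renormalize."""
    unnormalized = {h: prior[h] * likelihoods[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: w / total for h, w in unnormalized.items()}

prior = {"A": Fraction(1, 2), "B": Fraction(1, 2)}
exp1 = {"A": Fraction(4), "B": Fraction(1)}    # likelihood ratio 4:1
exp2 = {"A": Fraction(9), "B": Fraction(1)}    # likelihood ratio 9:1

after_both = posterior(posterior(prior, exp1), exp2)
print(after_both["A"] / after_both["B"])       # 36
print(after_both["A"])                         # 36/37
```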

Student: And you can't do that with P-values? One experiment with p = 0.05 and another with p = 0.01 doesn't mean that overall p < 0.0005?

Scientist: No.

Bayesian: Dear viewers, please pay attention to my arrogant smile.
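Why the Student's product fails can be shown directly. This sketch idealizes the setup. It assumes both experiments test a true null with continuous test statistics, so each p-value is uniform on [0, 1]; coin-flip p-values are discrete, so this is only an approximation. Under that assumption, P(p1 · p2 ≤ c) = c(1 − ln c). That is the same number Fisher's method for combining two p-values would report. The Monte Carlo check and its seed are illustrative.

```python
import math
import random

def prob_product_below(c):
    """P(p1 * p2 <= c) for independent p-values uniform on [0, 1]."""
    return c * (1 - math.log(c))

c = 0.05 * 0.01
exact = prob_product_below(c)
print(exact)                          # ≈ 0.0043, not 0.0005

# Monte Carlo check of the same quantity under the null.
rng = random.Random(2)
trials = 200_000
hits = sum(rng.random() * rng.random() <= c for _ in range(trials))
print(hits / trials)                  # close to 0.0043
```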

Scientist: But I still worry about the need to invent a priori probabilities.

Bayesian: And why does that bother you more than the fact that everyone has agreed to treat one experiment plus two replications at p < 0.01 as the criterion of Truth?

Scientist: You mean that choosing a priori values is no more subjective than interpreting P-values? Hm. I was going to say that a requirement like p < 0.001 guarantees objectivity. But then you'll answer that the threshold 0.001 (rather than 0.1 or 1e-10) is pulled out of thin air too.

Bayesian: And I'd add that demanding an arbitrary P-value threshold is less effective than pulling a prior probability out of that same thin air. One of the first theorems threatening violators of the probability axioms with the plagues of Egypt was proved by Abraham Wald in 1947. He set out to describe all admissible strategies, where a strategy is some way of reacting to what you observe. Of course, different strategies can be more or less profitable under different circumstances. Wald called a strategy admissible if no other strategy dominates it. That is, no other strategy does at least as well under all possible conditions and strictly better under some. And Wald found that the class of admissible strategies coincides with a particular class of strategies. Those strategies hold a probability distribution, update it on observations according to Bayes' rule, and optimize a utility function.

Student: Sorry, could you say that in plain English?

Bayesian: Suppose you take actions based on what you observe, and get more or less money, say, depending on what the real world is like. Then one of two things is true. Either your strategy in some sense contains a probability distribution and updates it according to Bayes' rule, or there is some other strategy that is never worse than yours and is sometimes better. For example, suppose you say: “I won't quit smoking until I see a paper demonstrating a link between smoking and cancer at p < 0.0001.” At least in theory, there is an alternative way of putting it: “I think the link between smoking and cancer exists with a probability of 0.01%. What are your likelihoods?” That alternative does no worse than your first formulation, whatever the truth about that link turns out to be.

Scientist: Really?

Bayesian: Yes. The Bayesian revolution began with this theorem, and it has been slowly gaining momentum ever since. Note that Wald proved his theorem a couple of decades after P-values were invented. This, in my opinion, explains how all of modern science got locked into demonstrably inefficient statistics.

Scientist: So you propose to throw out P-values and instead publish only likelihood ratios?

Bayesian: In short, yes.

Scientist: I don't really believe in ideal solutions that work under all conditions. I suspect, and please don't take this as an insult, that you are an idealist. In my experience, different situations call for different tools, and it would be unwise to throw out all but one.

Bayesian: Well, let me explain in what respects I'm an idealist and in what respects I'm not. Likelihood functions alone will not resolve the reproducibility crisis. It can't be fully resolved just by ordering everyone to use more efficient statistics. The popularity of open-access journals doesn't depend on the choice between likelihoods and P-values, and neither do the problems with peer review.

Scientist: So everything else does depend on it?

Bayesian: Not everything, but likelihoods can help with a lot of it. Let's count.

Bayesian: First. Likelihood functions do not force us to draw a line between “statistically significant” and “insignificant” results. An experiment cannot have a “positive” or “negative” outcome. What used to be called the null hypothesis is now just one hypothesis among others, not fundamentally different from the rest. Say you flip a coin and get OORRRROOOO. You can't say that the experiment “failed to reject the null hypothesis at p < 0.05” or “failed to reproduce the previously obtained result.” It simply added data that favor the fair-coin hypothesis over the 5/6-heads hypothesis with a likelihood ratio of 3.78:1. So with widespread adoption of Bayesian statistics, the results of such experiments will less often end up in the file drawer. Not never, because journal editors find unexpected results more interesting than fair coins, and that has to be dealt with directly. But P-values don't merely fail to fight this attitude, they encourage it! It is because of that attitude that p-hacking exists at all. So the switch to likelihoods won't bring happiness for everyone, for free, but it will definitely help.
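The 3.78:1 figure can be reproduced from the counts alone, because the order of the flips does not matter. A ratio of 3.78:1 corresponds to 6 heads and 4 tails in 10 flips, for example the sequence OORRRROOOO. This minimal sketch assumes independent flips, and the function name is illustrative.

```python
def likelihood_ratio_fair_vs_loaded(heads, tails, p_loaded=5 / 6):
    """Likelihood of the data under a fair coin divided by its likelihood
    under a coin that comes up heads with probability p_loaded."""
    fair = 0.5 ** (heads + tails)
    loaded = p_loaded ** heads * (1 - p_loaded) ** tails
    return fair / loaded

print(likelihood_ratio_fair_vs_loaded(6, 4))   # ≈ 3.78, i.e. 3**10 / 5**6
```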

Bayesian: Second. The likelihood approach puts much more emphasis on the raw data and will encourage publishing it wherever possible, because Bayesian analysis rests on how likely these particular results are under a particular model. The P-value approach, on the contrary, makes the researcher treat the data as just one member of a class of “equally extreme” results. Some scientists like to keep all their precious data to themselves, and that isn't only the fault of statistics. But P-values encourage this too, because what matters for the paper is not the data itself but whether it belongs to a particular class. Once that is established, all the information in the data effectively collapses into a single bit: “significant” or “not significant.”

Bayesian: Third. From the point of view of probability theory, that is, from the Bayesian point of view, different effect sizes are different hypotheses. This makes sense, because they correspond to different likelihood functions and hence different probabilities of the observed data. Suppose one experiment found an effect of 0.4, and another found a “statistically significant” value of 0.1 for the same effect. Then the result did not replicate, and we don't know what the effect really is. This avoids the fairly common situation where the size of a “statistically significant” effect keeps shrinking as sample sizes grow.

Bayesian: Fourthly, likelihood functions greatly simplify combining data and meta-analysis. They may even help us notice that data were collected under heterogeneous conditions, or that none of the hypotheses we are considering is true. In that case, either all the likelihood functions will be close to zero for every possible parameter value, or the best hypothesis will assign the combined data a much lower likelihood than it itself predicts. This stricter approach to reproducibility lets you quickly tell whether a given experiment can be considered a replication of another.
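Here is a sketch of combining independent experiments. The joint likelihood function is the pointwise product of the individual ones. Everything in it is our own illustration: the grid of candidate biases, the two experiments' counts, and the "fit" heuristic at the end. None of it comes from the dialogue. The heuristic compares one shared bias against letting each experiment have its own best bias. Values near 1 suggest one coin explains both; very small values hint that the experiments were not really repeats of each other.

```python
from fractions import Fraction

GRID = [Fraction(k, 20) for k in range(1, 20)]   # candidate P(heads): 1/20 ... 19/20

def likelihood_function(heads, tails):
    """Likelihood of one particular sequence with this many heads and tails,
    for every candidate bias on GRID."""
    return {p: p**heads * (1 - p)**tails for p in GRID}

def combine(*functions):
    """Independent experiments: the joint likelihood is the pointwise product."""
    combined = {p: Fraction(1) for p in GRID}
    for f in functions:
        for p in GRID:
            combined[p] *= f[p]
    return combined

exp_a = likelihood_function(heads=5, tails=1)   # hypothetical experiment A
exp_b = likelihood_function(heads=7, tails=3)   # hypothetical experiment B
pooled = combine(exp_a, exp_b)
best = max(pooled, key=pooled.get)
print("best shared bias on the grid:", best)    # best shared bias on the grid: 3/4

# Rough heterogeneity check (our own heuristic): one shared bias versus
# each experiment's own best bias. The ratio is always <= 1.
fit = pooled[best] / (max(exp_a.values()) * max(exp_b.values()))
print(f"shared fit / separate fits: {float(fit):.2f}")
```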

Bayesian: Fifthly, likelihood functions do not depend on what anyone thinks about them. They are objective statements about the data. If you publish likelihoods, there is only one way to deceive the reader: falsify the data itself. P-hacking will not work.

Scientist: That I strongly doubt. Suppose I decide to convince you that a coin comes up heads more often, although it is actually fair. I take the coin and flip it until, by chance, I get a few extra heads, and then I stop. What then?

Bayesian: Go ahead. If you don't falsify the data, you won't deceive me.

Scientist: My question was what would happen if I checked the likelihood ratio after every flip and stopped as soon as it supported my favorite theory.

Bayesian: As an idealist seduced by the deceptive beauty of probability theory, I answer you: as long as you give me honest raw data, I can and must do only one thing, namely multiply according to Bayes' rule.

Scientist: Really?

Bayesian: Seriously.

Scientist: So you don't care that I can keep checking the likelihood ratio until I like what I see?

Bayesian: Go ahead.

Scientist: Okay. Then I'll write a Python script that simulates up to, say, 300 flips of a fair coin, and see how often I manage to reach a 20 : 1 ratio in favor of “the coin comes up heads 55% of the time”... What?

Bayesian: Just a funny coincidence. When I first learned about this and doubted that the likelihood ratio really could not be fooled in some tricky way, I wrote the same program in Python. Later, a friend of mine also learned about likelihood ratios and also wrote the same program, also, for some reason, in Python. He ran it and found that the 20 : 1 ratio for the “55% heads” hypothesis was reached at least once in 1.4% of runs. If you require, say, 30 : 1 or 50 : 1, the frequency drops even faster.
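Below is a minimal sketch of the script both characters describe. It assumes a fair coin, the alternative "heads 55% of the time", at most 300 flips, and a check after every flip. The seed and the number of runs are our own arbitrary choices, so the printed percentages will not match the friend's 1.4% exactly.

One guarantee holds regardless of these choices. Under a fair coin, the running likelihood ratio is a martingale with expected value 1. By Ville's inequality, the chance that it ever reaches 20 : 1 is therefore at most 1/20.

```python
import math
import random

def peak_log_lr(rng, p_alt=0.55, max_flips=300):
    """Flip a fair coin up to max_flips times; return the highest value reached by
    log[P(data | heads with prob p_alt) / P(data | fair coin)] along the way."""
    step_heads = math.log(p_alt / 0.5)        # log-ratio change after a head
    step_tails = math.log((1 - p_alt) / 0.5)  # log-ratio change after a tail
    log_lr = peak = 0.0                       # before any flip the ratio is 1 : 1
    for _ in range(max_flips):
        log_lr += step_heads if rng.random() < 0.5 else step_tails
        if log_lr > peak:
            peak = log_lr
    return peak

def hit_rate(target, peaks):
    """Fraction of runs in which the ratio ever reached target : 1, i.e. in
    which a stop-as-soon-as-convinced experimenter would have stopped."""
    threshold = math.log(target)
    return sum(peak >= threshold for peak in peaks) / len(peaks)

rng = random.Random(2024)                     # arbitrary seed
peaks = [peak_log_lr(rng) for _ in range(5_000)]
for target in (20, 30, 50):
    print(f"{target}:1 reached at least once in {hit_rate(target, peaks):.2%} of runs")
```

Recording each run's peak, rather than stopping at the first hit, lets one set of simulated runs answer the question for every threshold at once.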

Scientist: If you treat your one and a half percent as a P-value, it looks good. But this is a very crude way to fool the analysis; perhaps there are subtler and more effective ones?

Bayesian: I was... about five years old, probably, if not younger, when I first learned about addition. It's one of my earliest memories. I sat there, added 3 to 5, and tried to think of some way not to get 8. Which, of course, is very cute, and generally an important step toward understanding what addition (and mathematics in general) is. But now it is only cute, because we are adults and understand that 5 plus 3 inevitably equals 8. The script that keeps checking the likelihood ratio is doing the same thing I did as a child. Once I understood the theory, I realized that attempts to cheat Bayes' rule are obviously doomed. It's like trying to cleverly split 3 into 2 and 1 and add them to 5 separately, or adding 1 first and only then 2. Neither way gives 7 or 9. The result of addition is a theorem, and it does not matter in what order we perform the operations. If the sequence really is equivalent to adding 3 to 5, nothing can come out except 8. Theorems of probability theory are theorems too. If the script really could work, it would mean a contradiction in probability theory, and hence a contradiction in Peano arithmetic, on which probability theory over rational numbers is built. What you and I tried to do is exactly as hard as adding 3 and 5 under the standard axioms of arithmetic and getting 7.

Student: Uh, why?

Scientist: I did not understand either.

Bayesian: Let e denote an observation, H a hypothesis, !X (also written ¬X) “not X”, P(H) the probability of the hypothesis, and P(X | Y) the conditional probability of X given that Y is true. There is a theorem showing that

$$P(H) = P(H \mid e)\,P(e) + P(H \mid \neg e)\,P(\neg e)$$

Therefore, for likelihood functions there is no analogue of p-hacking, however elaborate, short of falsifying the data, because no procedure known to a Bayesian agent can make it update its prior probabilities in a direction chosen in advance. For every change we can expect from observing e, there is an opposite change we can expect from observing !e, and the prior is exactly the probability-weighted average of the two posteriors.
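The identity above is often called conservation of expected evidence. Here is a quick numerical check with exact fractions. The prior and the two likelihoods are made-up illustrative numbers, not taken from the dialogue.

```python
from fractions import Fraction

def posteriors(prior_h, p_e_given_h, p_e_given_not_h):
    """Return P(e), P(H | e) and P(H | not e) via Bayes' rule."""
    p_e = prior_h * p_e_given_h + (1 - prior_h) * p_e_given_not_h
    post_if_e = prior_h * p_e_given_h / p_e
    post_if_not_e = prior_h * (1 - p_e_given_h) / (1 - p_e)
    return p_e, post_if_e, post_if_not_e

prior = Fraction(1, 3)                           # illustrative numbers only
p_e, up, down = posteriors(prior, Fraction(9, 10), Fraction(3, 10))
expected_posterior = up * p_e + down * (1 - p_e)
print(up, down, expected_posterior)              # 3/5 1/15 1/3
```

Seeing e would raise the probability of H from 1/3 to 3/5, and seeing !e would lower it to 1/15. Weighted by how likely each observation is, the two moves cancel out exactly, back to the prior.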

Student: What?

Scientist: I did not understand either.

Bayesian: Okay, let's set the math aside and get back to... yes, the reproducibility crisis. The Scientist said he is suspicious of ideal universal solutions. But in my opinion, switching to likelihood functions really should solve many problems at once. Suppose... let me come up with something. Suppose a certain corporation has major accounting problems. These problems stem from the fact that all its accounting uses floating-point numbers; and that would be only half the trouble, but three different implementations are in use (each in roughly a third of the corporation), so the results are God knows what. Someone, for example, takes 1.0, adds 0.0001 a thousand times, then subtracts 0.1 and gets 0.999999999999989. Then he goes to another floor, repeats the calculation on their computers and gets 1.000000000000004. And everyone thinks this is just how things are. And suppose the error really is HUGE: all three implementations are the fruit of an unnatural union of cave paintings and Roman numerals, so the differences between them can produce quite tangible differences in the results. Of course, everyone fudges the sales figures so that their quarterly reports add up. So it is considered a good result if a department's budget at least does not contradict itself, and the cognitive priming department probably went bankrupt 20 years ago. And then I show up, dressed all in white, and say: “Good afternoon. What if, instead of your three implementations, you used this cool thing, which cannot be manipulated in a similar way and which will solve half of your problems?”

(Bayesian, in the Scientist's voice): “I am suspicious of such universal solutions,” the chief accountant replies. “No offense, but you, my friend, are an idealist. In my experience, different floating-point representations are well suited to different operations, so you shouldn't throw out every tool but one right away.”

Bayesian: To which I answer him: “It may sound too bold, but I am going to show you a perfect representation of fractions, in which the results depend neither on the order in which you add numbers nor on whose computer the calculation runs. Maybe in 1920, when your system was created, it required too much memory. But it isn't 1920 anymore; you can afford not to skimp on computing resources. Especially since you have, what, 30 million bank accounts? That's really nothing. Yes, my representation has its flaws. For example, square roots are much harder to take. But honestly, how often do you need to take the square root of someone's salary? For most real-world tasks this system is no worse than yours, and besides, it cannot be fooled without faking the input values.” Then I explain to them how to represent an integer of arbitrary length in memory and how to represent a rational number as the ratio of two integers. That is what we would now call the obvious way of representing true rational numbers in computer memory: the one and only system of theorems about rational numbers, of which floating-point numbers are just an approximation. And if you handle a measly 30 million accounts; if in practice your approximations agree neither with each other nor with themselves; if, on top of that, they let everyone steal your money; if, finally, it isn't 1920 and you can afford proper computers, then the need to move accounting to true rational numbers is pretty obvious. Similarly, Bayes' rule and its corollaries are the only system of theorems about probabilities that follows from the axioms and is rigorously proved. And that is why p-hacking does not work in it.
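The Bayesian's "perfect representation of fractions" is essentially what Python's standard `fractions.Fraction` does: it stores an arbitrary-precision integer numerator and denominator. The sketch below contrasts it with ordinary IEEE 754 doubles on the accountant's calculation. The exact float output will not match the story's figures, which describe fictional implementations.

```python
from fractions import Fraction

def drift_demo(number_type):
    """1.0, plus 0.0001 a thousand times, minus 0.1: the exact answer is 1."""
    total = number_type("1.0")
    step = number_type("0.0001")
    for _ in range(1000):
        total += step
    return total - number_type("0.1")

print(repr(drift_demo(float)))   # close to 1, but rounding errors accumulate
print(drift_demo(Fraction))      # 1 (exact)

# Order of addition: floats can disagree with themselves, rationals cannot.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))   # False
a, b, c = Fraction("0.1"), Fraction("0.2"), Fraction("0.3")
print((a + b) + c == a + (b + c))               # True
```

As in the dialogue, the price is speed and memory, and square roots do not stay rational. Addition, subtraction, multiplication and division are exact.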

Scientist: That's... bold. Even if everything you say is true, practical difficulties remain. The statistics we use now took decades to develop; it has proved its worth. How has your shining Bayesian path proved itself in practice?

Bayesian: It is almost never used in the natural sciences. In machine learning, where, to put it modestly, it is quite easy to see that a model is wrong, because the AI built on it does not work, I last saw the frequentist approach to probability ten years ago. And I can't recall a single paper in which an AI computed the P-value of some hypothesis. If probability appears in a study at all, it is almost certainly Bayesian. If something is classified using one-hot codes, then cross-entropy is minimized, not... I don't even know what the analogue of P-values in AI would be. I would venture to suggest that this is the whole point. Statistics in machine learning either works or it doesn't, and that is immediately obvious: the AI either does what it should or acts dumb. In the natural sciences, what everyone needs first and foremost is publications. Since it so happens that reporting P-values in papers is customary, and nobody is punished for non-reproducible results, we have what we have.

Scientist: So you are a mathematician or a programmer rather than a natural scientist? Somehow that does not surprise me. I don't doubt that a better statistical apparatus may exist, but experience with P-values is worth something too. Yes, they are often twisted one way or another these days, but we know how it's done and are beginning to understand how to deal with it. The pitfalls of P-values are at least known. Any new system will have pitfalls too. But what exactly they are, we will find out only over decades. Perhaps they will be even more dangerous than the current ones.

Bayesian: Yes, thieving accountants will probably come up with some exciting new manipulations of rational numbers. Especially where exact operations still turn out to be too computationally expensive and have to be approximated somehow. But I still believe that if experimental psychology, say, is being shaken by a reproducibility crisis right now, and if this crisis is clearly linked to the use of P-values, which, frankly, are nothing more than a heap of mutually contradictory crutches, then it is worth at least trying a more rational method. That said, I am not calling for tearing everything down and rebuilding from scratch. In practice, you could start abandoning P-values in a single field (psychology, say) and see what happens.

Scientist: And how are you going to persuade psychologists to try such an experiment?

Bayesian: I have no idea. Frankly, I don't really expect anyone to change anything. Most likely, people will simply keep using P-values until the end of time. So it goes. But there is a chance that the idea will catch on. I was pleasantly surprised by how quickly Open Access took root. I was pleasantly surprised that the reproducibility crisis was noticed at all, and that people actually care about it. It is possible that P-values will yet be dragged out into the town square and toppled by a large crowd (translator's note: at least one psychology journal banned null hypothesis significance testing in 2015). If so, I will be pleasantly surprised. In that case, it will turn out that my work popularizing Bayes' rule and likelihoods was not in vain.

Scientist: It may also turn out that nobody in experimental science likes likelihoods, and that everyone considers P-values convenient and useful.

Bayesian: If the university statistics course was so monstrous that people shudder at the mere thought of probability theory, then yes, the change will have to come from outside. Personally, I hope that our dear Student will read a short and rather fascinating introduction to Bayesian probability theory, compare it with his dreadful statistics textbook, and spend the next six months begging you: “Well, please, can I just calculate the likelihood and be done with it, please, just let me.”

Student: Uh... well, let me read it first, okay?

Bayesian: Dear Student, think carefully about your choice. Some changes in science happen only because students grow up surrounded by different ideas and choose the right ones. This is Max Planck's famous aphorism, and Max Planck would not talk nonsense. Ergo, science's ability to tell bad ideas from good ones depends solely on the students' intelligence.

Scientist: Well, now that's going a bit...

Moderator: And on that note, we end our broadcast. Thank you for your attention!

Disclaimers

If you are not familiar with Bayes' rule, there is a detailed introduction on Arbital.

- This dialogue was written by a proponent of the Bayesian approach. The Scientist's lines in the dialogue below may not even pass an ideological Turing test for frequentism, and they may not do justice to the arguments and counterarguments of advocates of the frequentist approach to probability.

- The author does not expect the proposals described below to be adopted by the wider scientific community within the next ten years. Still, they were worth writing down.

Moderator: Good evening. Today in our studio: a Scientist, a practicing specialist in the field of... chemical psychology or something like that; and his opponent, a Bayesian, who intends to prove that the reproducibility crisis in science can somehow be overcome by replacing P-values with something from Bayesian statistics...

Student: Sorry, how do you spell it?

Moderator: ... and, finally, to my right, the Student, who doesn't understand anything.

Moderator: Bayesian, could you start by telling us what the essence of your proposal is?

Bayesian: Roughly speaking, the essence is this. Suppose we have a coin. We flip it six times and observe the sequence OOOOOR (translator's note: here and below, O stands for orel, heads, and R for reshka, tails). Should we suspect that something is wrong with the coin?

Scientist: No.

Bayesian: The coin is just an example. Suppose we offer a sample of volunteers a plate with two cookies: one with green sprinkles and one with red. The first five people take the green cookies, and the sixth takes the red one. Is it true that people prefer cookies with green sprinkles, or is it better to regard such a result as random?

Student: Probably, one might suspect that people prefer green sprinkles. At least psychology students, who tend to volunteer for strange experiments, like green sprinkles more. Even after six observations one might suspect as much, although I suspect there is some kind of trick here.

Scientist: I don't think this is suspicious yet. Many hypotheses look promising at N = 6 but are not confirmed at N = 60.

Bayesian: Personally, I would suspect that our volunteers do not prefer red sprinkles, or at least do not prefer them very strongly. But in general, I came up with these examples only to show how P-values are calculated in modern scientific statistics and what is wrong with them from the Bayesian point of view.

Scientist: Can't you come up with a more realistic example, with 30 volunteers?

Bayesian: I could, but then the Student won't understand anything.

Student: That's for sure.

Bayesian: So, dear experts: heads, heads, heads, heads, heads, tails. Attention, question: would you call this result “statistically significant” or not?

Scientist: Mr. Moderator, it is not significant. Under the null hypothesis that the coin is fair (or the analogous null hypothesis that sprinkle color does not affect the choice of cookie), a result this extreme or more so occurs in 14 out of 64 cases.

Student: Yeah. Do I understand correctly: this is because we count every outcome with at least five heads or at least five tails (OOOOOR, OOOOOO, RRRRRO and so on) as “the same or more extreme”; there are 14 of them in total, and the total number of possible outcomes for 6 flips is 2^6 = 64. 14/64 is 22%, which is above 5%, so the result is not considered significant at the level of p < 0.05. Right?
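The Student's count can be checked by brute force. This sketch enumerates all 64 sequences and keeps the two-sided "at least as extreme" ones, meaning five or more of either side.

```python
from itertools import product

FLIPS = 6
outcomes = list(product("OR", repeat=FLIPS))      # all 2**6 = 64 sequences

def at_least_as_extreme(seq, threshold=5):
    """Two-sided: at least `threshold` heads or at least `threshold` tails."""
    heads = seq.count("O")
    return heads >= threshold or FLIPS - heads >= threshold

extreme = [seq for seq in outcomes if at_least_as_extreme(seq)]
print(len(extreme), "of", len(outcomes))           # 14 of 64
print(f"p = {len(extreme) / len(outcomes):.3f}")   # p = 0.219
```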

Scientist: Right. I would also note that in practice, even with the outcome OOOOOO, it is not worth stopping the experiment and writing a paper claiming that the coin always comes up heads.

Bayesian: The thing is, if you can stop flipping the coin at any moment, you have to ask yourself: “How likely is it that I will find a moment to stop the experiment at which the number of heads looks publishable?” And in the P-value paradigm, that is a completely different story.

Scientist: I only meant that six trials are not serious, even if we are studying cookie colors. But yes, you are right too.

Student: Why does it matter at all whether I can stop flipping the coin or not?

Bayesian: What a wonderful question.

Scientist: The thing is, P-values are complicated. You can't just take the numbers, feed them into a program and publish whatever it outputs. If you decide in advance to flip a coin exactly six times and then stop regardless of the result, then OOOOOO or RRRRRR will come up on average 2 times out of 64, or in 3.1% of cases. This is significant at p < 0.05. But suppose that in reality you are a dishonest, shameless forger. Or just an incompetent student who doesn't understand what he is doing. Instead of choosing the number of flips in advance, you flip and flip the coin until you get a result that looks statistically significant. It would be statistically significant if you had decided in advance to flip the coin exactly that many times. But in fact you didn't decide in advance. You decided to stop only after you saw the results. You can't do that.

Student: Okay, I've read about this somewhere, but I didn't understand what was wrong with it. This is my research, and I should know best whether there is enough data or not.

Scientist: The whole point of P-values is to create a test that the null hypothesis cannot pass. In other words, to make sure that smoke without fire does not appear too often. To do this, the study has to be organized so that it does not generate “statistically significant” findings when the phenomenon in question is absent. If you flip a coin exactly six times (and decide on this number in advance), then the probability of a fair coin giving six heads or six tails is less than 5%. If you flip the coin as many times as you like, and after each flip recalculate the P-value (pretending that the number of flips was fixed in advance), then the chance of sooner or later getting p < 0.05 is much more than 5%. So such an experiment finds smoke without fire much more often than in 1 case out of 20.
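Here is a sketch of the peeking problem the Scientist describes. It makes several assumptions of our own:

- an exact two-sided binomial test against a fair coin;
- a check after every flip, up to 100 flips;
- a stop at the first p < 0.05.

The pre-registered rate is computed exactly. The peeking rate is simulated with an arbitrary seed, so treat its printed value as approximate.

```python
import random
from functools import lru_cache
from math import comb

@lru_cache(maxsize=None)
def two_sided_p(n, heads):
    """Exact two-sided binomial p-value under a fair coin: the probability of a
    head count at least as far from n/2 as the one observed."""
    distance = abs(heads - n / 2)
    extreme = sum(comb(n, k) for k in range(n + 1) if abs(k - n / 2) >= distance)
    return extreme / 2**n

def fixed_n_false_positive_rate(n=100, alpha=0.05):
    """Exact chance that one test after a pre-registered n flips gives p < alpha."""
    return sum(comb(n, k) for k in range(n + 1) if two_sided_p(n, k) < alpha) / 2**n

def peeking_false_positive_rate(max_flips=100, alpha=0.05, trials=2_000, seed=1):
    """Fair coin; recompute the p-value after every flip, stop at the first p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        heads = 0
        for n in range(1, max_flips + 1):
            heads += 1 if rng.random() < 0.5 else 0
            if two_sided_p(n, heads) < alpha:
                hits += 1
                break
    return hits / trials

print(f"pre-registered 100 flips: {fixed_n_false_positive_rate():.1%}")
print(f"peeking after every flip: {peeking_false_positive_rate():.1%}")
```

The same fair coin gets past a 5% test far more often once the experimenter may stop whenever p first dips below 0.05.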

Bayesian: Personally, I like to put this problem as follows: say you flip a coin and get OOOOOR. If, in the depths of your heart, known only to Allah (for Allah is wise and all-knowing), you fixed the number of flips in advance, then the result is not significant; p = 0.22. If, after three months of fasting, you made a vow to Saint Francis to flip the coin until tails came up, then the same result is statistically significant, with a quite respectable p = 0.03. Because the chance that, at odds of 1 : 1, you will have to wait six or more flips for tails is 1/32.

Student: What?

Scientist: It's more of a parody, of course. In practice, no one flips a coin until a single tails comes up and then stops. But in general, the Bayesian is right; that is exactly how P-values work. Strictly speaking, we are trying to find out how rare the result is among those we could have obtained. A person who flips a coin until the first tails can get the results {R, OR, OOR, OOOR, OOOOR, OOOOOR, ...} and so on. The class of results in which six or more flips are made is {OOOOOR, OOOOOOR, OOOOOOOR, ...}, whose total probability is 1/64 + 1/128 + 1/256 + ... = 1/32. A person flipping a coin exactly six times gets one of the results of the class {OOOOOO, OOOOOR, OOOORO, OOOORR, ...}, which has 64 elements. For the purposes of that experiment, OOOOOR is equivalent to OOOORO, OOOROO and the like. So yes, this is all pretty counterintuitive. If we really carried out the first experiment, OOOOOR would be a significant result, one that is unlikely for a fair coin. And if we carried out the second experiment, OOOOOR would not be significant, because even a fair coin produces something like it from time to time.
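Both P-values for the same OOOOOR can be computed directly from the two definitions of "as extreme" just described. Note that 14/64 reduces to 7/32. The variable names are our own.

```python
from fractions import Fraction
from math import comb

half = Fraction(1, 2)

# Design A: exactly 6 flips, fixed in advance; "as extreme" = at least 5 of one side.
p_fixed_six = sum(Fraction(comb(6, k)) * half**6 for k in (0, 1, 5, 6))

# Design B: flip until the first tails; "as extreme" = needing 6 or more flips,
# which happens exactly when the first 5 flips are all heads.
p_until_tails = half**5

print(p_fixed_six, float(p_fixed_six))       # 7/32 0.21875
print(p_until_tails, float(p_until_tails))   # 1/32 0.03125

# The likelihood of OOOOOR under any bias p is p**5 * (1 - p) in both designs,
# so likelihood ratios between hypotheses ignore the stopping rule.
```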

Bayesian: Doesn't it worry you, by any chance, that the results of the experiment depend on what you are thinking?

Scientist: This is a matter of conscience. Any study is worth little if you lie about its results, that is, literally about which side the coin landed on. If you lie about what kind of experiment was conducted, the effect will be the same. So you simply have to report honestly what rules were used for flipping. Of course, the contents of a scientist's head are less visible than which side a coin is lying on. Therefore, it is always possible to tweak the analysis parameters, not mention how the number of subjects was determined, choose the statistical test that confirms your favorite hypothesis... You can come up with a lot of things if you want to. And it will be easier than falsifying the raw data. This is called p-hacking. And in practice, of course, much subtler ways of creating smoke without fire are used than a silly null hypothesis invented after the fact. This is a serious problem, and the reproducibility crisis is connected with it to some extent, although it is not clear to what extent.

Student: That... sounds reasonable? Probably this is one of those things you have to work through with a bunch of examples for a long time, and then everything becomes clear?

Bayesian: No.

Student: Meaning?

Bayesian: Meaning “Student, you were right from the very beginning.” If what the experimenter thinks does not affect which side the coin lands on, then his thoughts should not affect what the results of the flips tell us about the universe. My dear Student, the statistics you are being taught is nothing more than a heap of crooked crutches that no one even bothered to make internally consistent. For God's sake, it gives different wrong answers depending on what is going on in your head! And this is a much more serious problem than the tendency of some scientists to fib a little in the “Materials and Methods” section.

Scientist: That is... a serious statement, to say the least. But tell me, I beg you: what are we poor souls to do?

Bayesian: Analyze it as follows. This particular result, OOOOOR, can be obtained from six flips of a perfectly balanced coin with probability 1/64, or about 1.6%. Suppose we already suspected that our coin was not perfectly balanced. And not just imperfectly, but in such a way that it comes up heads on average five times out of six. This, of course, is a wild simplification, but I'll get to realistic hypotheses a bit later. So this hypothetical trick coin produces the sequence OOOOOR with probability (5/6)^5 × (1/6)^1, which is about 6.7%. So we have two hypotheses: “The coin is perfectly ordinary” and “The coin comes up heads in 5/6 of cases.” This particular result is 4.3 times more likely under the second hypothesis than under the first. The probability of OOOOOR for another hypothetical trick coin, one that comes up tails in 5 cases out of six, is 0.01%. So if someone happened to think that this second coin was in front of us, we now have a good argument against their hypothesis. This particular result is 146 times more likely for a fair coin than for a coin that comes up heads only once out of six. Similarly, our hypothetical lovers of red sprinkles would have been much less likely to take the green cookies.
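Each of these likelihoods is just a product of per-flip probabilities. Here is a minimal sketch with exact fractions. The hypothesis labels and helper names are ours.

```python
from fractions import Fraction

data = "OOOOOR"

def likelihood(sequence, p_heads):
    """P(this exact sequence | each flip is heads with probability p_heads)."""
    heads = sequence.count("O")
    tails = len(sequence) - heads
    return p_heads**heads * (1 - p_heads)**tails

hypotheses = {
    "fair coin": Fraction(1, 2),
    "heads 5/6 of the time": Fraction(5, 6),
    "heads 1/6 of the time": Fraction(1, 6),
}
lik = {name: likelihood(data, p) for name, p in hypotheses.items()}
for name, value in lik.items():
    print(f"{name:>22}: {float(value):.4%}")

favor_biased = lik["heads 5/6 of the time"] / lik["fair coin"]
favor_fair = lik["fair coin"] / lik["heads 1/6 of the time"]
print(f"5/6-heads vs fair: {float(favor_biased):.1f} : 1")   # 5/6-heads vs fair: 4.3 : 1
print(f"fair vs 1/6-heads: {float(favor_fair):.0f} : 1")     # fair vs 1/6-heads: 146 : 1
```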

Student: Okay, I think I understand the math. But frankly, I don't get what it means.

Bayesian: I'll explain in a moment, but first note the following: the results of my calculations do not depend on why the coin was flipped exactly six times. Maybe after the sixth flip you decided there was already enough data. Maybe, after a series of five flips, Namagiri Thayar appeared to you in a dream and advised you to flip the coin once more. The coin doesn't care. The fact remains: this particular sequence OOOOOR is about four times less likely for a fair coin than for a coin that comes up heads five times out of six.

Scientist: I agree, your calculations have at least one useful property. What next?

Bayesian: Then you publish the results in a journal, preferably together with the raw data, because then anyone can calculate the likelihood of any hypothesis. Suppose someone unexpectedly becomes interested in the hypothesis “The coin comes up heads 9 times out of 10, not 5 times out of 6.” In that case, the sequence OOOOOR has probability 5.9%, which is slightly less than under our five-heads-out-of-six hypothesis (6.7%), but 3.78 times more than under the hypothesis that the coin is perfectly balanced (1.6%). It is impossible to think up all possible hypotheses in advance, and it isn't necessary. It is enough to publish the complete data; then anyone with a hypothesis can easily calculate the likelihood they need. The Bayesian paradigm calls for publishing raw data, because the focus is on the specific result, not on a class of supposedly equivalent outcomes.
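Publishing the raw sequence is what lets any reader plug in their own hypothesis after the fact. In this sketch, the grid of 99 candidate biases is our own illustrative choice. The best grid point lands near 5/6, the bias under which OOOOOR is most likely.

```python
from fractions import Fraction

raw_data = "OOOOOR"     # the published raw sequence

def likelihood(sequence, p_heads):
    """P(this exact sequence | each flip is heads with probability p_heads)."""
    heads = sequence.count("O")
    return p_heads**heads * (1 - p_heads)**(len(sequence) - heads)

# A reader with a new hypothesis just plugs it in:
nine_tenths = likelihood(raw_data, Fraction(9, 10))
vs_fair = nine_tenths / likelihood(raw_data, Fraction(1, 2))
print(f"P(data | heads 9/10) = {float(nine_tenths):.1%}")   # P(data | heads 9/10) = 5.9%
print(f"vs fair coin: {float(vs_fair):.2f} : 1")            # vs fair coin: 3.78 : 1

# Or scan a whole family of hypotheses at once (a likelihood function):
grid = [Fraction(k, 100) for k in range(1, 100)]
best = max(grid, key=lambda p: likelihood(raw_data, p))
print("best-supported bias on the grid:", float(best))
```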

Scientist: On this I agree with you: publishing complete data sets is one of the most important steps toward overcoming the reproducibility crisis. But personally, I don't understand what I am supposed to do with all these “A is so many times more likely than B” statements.

Student: Me too.

Bayesian: It's not entirely trivial... have you read our introduction to Bayes' rule?

Student: Great. Just what I was missing: another statistics textbook.

Bayesian: You can actually read it in an hour. It's just that this is literally non-trivial, that is, it requires explanation. But okay, in the absence of a full introduction, I'll try to come up with something. Most likely it will sound reasonable (and the logic is indeed correct), but not necessarily self-evident. Here goes. There is a theorem that proves the following reasoning correct:

(The Bayesian takes a deep breath)

Bayesian: Let's say Professor Plume and Miss Scarlet are suspected of murder. After studying both of their backgrounds, we assume that the professor is twice as likely to kill someone as Miss Scarlet. We start from this assumption. It turns out, however, that the victim was poisoned. We know that if Professor Plume were to kill someone, he would use poison with probability 10% (and in 9 cases out of 10 he would prefer, say, a revolver). Miss Scarlet, if she decides to kill, uses poison with probability 60%. In other words, the professor's use of poison is six times less likely than Miss Scarlet's. Since we have new information, namely the method of murder, we must update our assumption and conclude that Plume is now three times less likely to be the killer: prior odds of 2 : 1 in favor of Plume, multiplied by the likelihood ratio of 1 : 6, give 2 : 6, that is, 1 : 3 (2 × 1/6 = 1/3).

Student: Not sure I understood that. What does the phrase “Professor Plume is three times less likely to be the murderer than Miss Scarlet” mean?

Bayesian: It means that if we have no other suspects, the probability that the victim was killed by Plume is 1/4. The remaining 3/4 is the probability that the killer is Miss Scarlet. So the probability of the professor's guilt is three times lower than that of Miss Scarlet.
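Here is the Plume and Scarlet update in odds form, as a sketch with exact fractions. Like the Bayesian, it assumes these two are the only suspects when converting odds to probabilities. The helper names are ours.

```python
from fractions import Fraction

def update_odds(prior_odds, likelihoods):
    """Posterior odds: multiply each suspect's prior weight by the likelihood
    of the evidence if that suspect is the killer."""
    return {s: prior_odds[s] * likelihoods[s] for s in prior_odds}

def to_probabilities(odds):
    """Normalize odds to probabilities, assuming these are the only suspects."""
    total = sum(odds.values())
    return {s: o / total for s, o in odds.items()}

prior = {"Plume": Fraction(2), "Scarlet": Fraction(1)}            # 2 : 1
poison = {"Plume": Fraction(1, 10), "Scarlet": Fraction(6, 10)}   # P(poison | killer)

posterior = update_odds(prior, poison)
print(posterior["Plume"] / posterior["Scarlet"])   # 1/3, i.e. odds of 1 : 3
print(to_probabilities(posterior))                 # Plume 1/4, Scarlet 3/4
```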

Scientist: And now I want to know what you mean by “probability of guilt.” Plume either committed the murder or he didn't. We can't take a sample of murders and find that Plume is indeed guilty of a quarter of them.

Bayesian: I was hoping not to get into this, but oh well. My good Scientist, I mean that if you offered me a bet at 1 : 1 odds on whether Plume killed the victim, I would bet that he did not. But if, under the terms of the bet, I paid you $1 if he is innocent and you paid me $5 if he is guilty, I would gladly bet on his guilt. The 2012 presidential election was held only once, and “Obama's probability of victory” is the same conceptually vague thing as “Plume's probability of guilt.” But if on November 7 you had been offered the chance to bet $10 on Obama with a promised $1000 if he won, you would hardly have refused such a bet. In general, when prediction markets and large liquid betting pools accept 6 : 4 bets on an event, that event happens in about 60% of cases. Markets and pools are well calibrated for probabilities in this range. If they were poorly calibrated, that is, if events bet on at 6 : 4 happened 80% of the time, someone would have noticed and gotten rich off such bets. In doing so, they would push the price up until the market became well calibrated. And since events with a market-estimated probability of 70% really do happen about 7 times out of 10, I don't understand why one should insist that such a probability is meaningless.
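The Bayesian's betting argument is an expected-value calculation. This sketch assumes the posterior of 1/4 from the poison example. Amounts are in dollars, and the helper name is ours.

```python
from fractions import Fraction

p_guilty = Fraction(1, 4)    # posterior from the poison example

def expected_gain(p_win, win, lose):
    """Expected profit of a bet that pays `win` with probability p_win
    and costs `lose` otherwise."""
    return p_win * win - (1 - p_win) * lose

even_on_guilt = expected_gain(p_guilty, win=1, lose=1)          # 1 : 1 that he did it
even_on_innocence = expected_gain(1 - p_guilty, win=1, lose=1)  # 1 : 1 that he didn't
five_to_one_on_guilt = expected_gain(p_guilty, win=5, lose=1)   # win $5 if guilty, pay $1 if not

print(even_on_guilt, even_on_innocence, five_to_one_on_guilt)   # -1/2 1/2 1/2
```

At even odds, only the bet on innocence is worth taking. At 5 : 1, the bet on guilt becomes favorable, which is exactly the Bayesian's pair of choices.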

Student: I admit, it sounds convincing. But surely it only seems that way to me, and in fact there is a whole pile of tricky arguments for and against.

Bayesian: There really is a pile of arguments, but the overall conclusion from them is that your intuitive picture is pretty close to the truth.

Scientist: Well, we'll come back to this. And what if there are two agents, both “well calibrated” in your terms, but one of them says “60%” and the other says “70%”?

Bayesian: Suppose I toss a coin and don't see which side it landed on. In that case, my ignorance is not information about the coin; it is information about me. It exists in my head, not in the surrounding world, just as blank spots on a map do not mean there is no territory there. If you looked at the coin and I didn't, it is quite reasonable that you and I are in different states of uncertainty about it. Given that I am not one hundred percent certain, it makes sense for me to express my uncertainty in terms of probability. There are three hundred theorems stating that if someone's expression of uncertainty is not in fact a probability distribution, then, generally speaking, it ought to be. For some reason, it always turns out that if an agent's reasoning under uncertainty violates any of the standard axioms of probability theory, the earth opens up, water turns into blood, and dominated strategies and guaranteed-loss bets rain down from the heavens.

Scientist: Well, I was wrong there. We'll come back to this too, but first answer my question: what should we do with the likelihoods once we have them?

Bayesian: According to the laws of probability theory, these likelihoods are the evidence. They are what forces us to shift our prior odds from 2:1 in favor of Plume to 3:1 in favor of Scarlet. If I have two hypotheses and the likelihood of the data under each of them, then I must update my beliefs exactly as described above. If I update in any other way, the heavens open, dominated strategies pour down, and so on. Bayes' theorem is not just a statistical method; it is a LAW.

Student: Sorry, but I still don't understand. Suppose we run an experiment, and, let's say, the results we get are six times more likely if Herr Troupe was killed by Professor Plume than if Miss Scarlet was the killer. (Translator's note: the Student has evidently mixed up the likelihoods of the two killers using poison.) Do we arrest the professor or not?

Scientist: I think we first need to come up with a more or less realistic prior probability, for example: "a priori, I believe the probability that Plume killed Herr Troupe is 20%", that is, prior odds of 1:4. Multiplying these by the likelihood ratio of 6:1 gives posterior odds of 3:2 that Plume did kill Herr Troupe. Then you can say that Plume is guilty with a probability of 60%, and let the prosecutor's office sort out the rest.
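The arithmetic here is the odds form of Bayes' rule: convert the prior to odds, multiply by the likelihood ratio, and convert back. The minimal sketch below uses Python's `fractions` module to keep the numbers exact. It also checks the Bayesian's earlier example, where moving from 2:1 for Plume to 3:1 for Scarlet corresponds to a likelihood ratio of 1:6 for Plume over Scarlet.

```python
from fractions import Fraction

def prob_to_odds(p):
    """Probability -> odds in favor, e.g. 1/5 -> 1/4 (that is, 1:4)."""
    return p / (1 - p)

def odds_to_prob(odds):
    """Odds in favor -> probability, e.g. 3/2 (that is, 3:2) -> 3/5."""
    return odds / (1 + odds)

# The Scientist's example: 20% prior on Plume, data 6 times likelier if Plume did it.
prior = Fraction(1, 5)
likelihood_ratio = Fraction(6, 1)
posterior_odds = prob_to_odds(prior) * likelihood_ratio
print(posterior_odds, odds_to_prob(posterior_odds))  # 3/2 3/5

# Prior odds 2:1 for Plume over Scarlet, likelihood ratio 1:6 for Plume over Scarlet.
plume_vs_scarlet = Fraction(2, 1) * Fraction(1, 6)
print(plume_vs_scarlet)  # 1/3, i.e. 3:1 in favor of Scarlet
```

As the Bayesian objects next, the point is not that this computation is wrong, but that the posterior it produces is not an experimental result.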

Bayesian: No! For heaven's sake! Do you really think that's how Bayesian statistics works?

Scientist: Doesn't it? I have always believed that its main advantage is that it gives us posterior probabilities, which P-values don't really give, and its main drawback is that it requires prior probabilities. Since those have to be pulled more or less out of thin air, the correctness of the posterior probabilities can be disputed until the end of time.

Bayesian: Papers should publish likelihoods. More precisely, they should publish the raw data and compute a few likelihoods of interest for them. But certainly not posterior probabilities.

Student: I'm confused again. What are posterior probabilities?

Bayesian: A posterior probability is a statement like "There is a 60% probability that Herr Troupe was killed by Professor Plume." As my colleague has already noted, such statements do not follow from P-values. And in my opinion they have no place in experimental papers, because they are not the results of an experiment.

Student: But... OK, Scientist, a question for you: let's say we got results with p < 0.01, that is, results with a probability of less than 1% under the null hypothesis "Professor Plume did not kill Herr Troupe". Do we arrest him or not?

Scientist: First, that is not a realistic null hypothesis. The null hypothesis would more likely be something like "Nobody killed Herr Troupe" or "all suspects are equally guilty". But even if your null hypothesis were usable, and even if we could reject Plume's innocence at p < 0.01, it would still be impossible to say that Plume is guilty with a probability of 99%. P-values don't tell us that.

Student: Then what do they tell us?

Scientist: They tell us that the observed data fall into a certain class of possible outcomes, and that outcomes in this class occur less than 1% of the time if the null hypothesis is true. Beyond that, a p-value means nothing. You can't just go from p < 0.01 to "Professor Plume is guilty with a probability of 99%." The Bayesian can probably explain why better than I can. In general, in science you can't interpret one thing as something else. Numbers mean exactly what they mean, no more and no less.
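To make "a certain class of possible outcomes" concrete, here is a minimal sketch of a one-sided p-value for a coin, using a hypothetical result of five eagles in six tosses. The p-value is the probability, under the null hypothesis of a fair coin, of the whole class of results at least as extreme as the observed one (five or six eagles), not the probability of the observed result alone.

```python
from fractions import Fraction
from math import comb

def binomial_p_value(eagles, tosses):
    """One-sided p-value for a fair coin: the probability, under the null hypothesis,
    of getting at least `eagles` eagles in `tosses` tosses."""
    extreme = sum(comb(tosses, k) for k in range(eagles, tosses + 1))
    return Fraction(extreme, 2 ** tosses)

p = binomial_p_value(5, 6)
print(p, float(p))  # 7/64 0.109375
```

Choosing a one-sided rather than two-sided class already changes the number (the two-sided version would be 14/64), which is part of why the class, not the data, ends up carrying the result.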

Student: Just great. At first I didn't understand what to do with likelihoods, and now I still don't understand what to do with P-values. What experiment does it take to finally send Plume to prison?

Scientist: In practice? If a couple more experiments in other labs confirm his guilt at p < 0.01, then he is most likely really guilty.

Bayesian: And a "reproducibility crisis" is when the case is later reopened and it turns out he didn't commit the murder after all.

Scientist: In general, yes.

Student: That's rather unpleasant.

Scientist: Life is generally an unpleasant thing.

Student: So... Bayesian, you probably have a similar answer? Something like: if the likelihood ratio is large enough, say 100:1, then in practice we can assume that the corresponding hypothesis is true?

Bayesian: Yes, but it's somewhat more complicated. Suppose I toss a coin 20 times and get OOOROOOROROROROOOOOOORROR. The catch is that the likelihood of the hypothesis "the coin is guaranteed to produce exactly this sequence" is about a million times higher than the likelihood of the hypothesis "the coin comes up eagles or tails with equal probability". In practice, unless you handed me that hypothesis in a sealed envelope before the experiment started, I will consider it badly overfitted. I will have to give it a complexity penalty of at least 2^20:1, because merely describing the sequence takes 20 bits. In other words, I lower its prior probability by enough to more than cancel out its likelihood advantage. And that is not the only pitfall. Still, if you understand how and why Bayes' rule works, you can work things out case by case as you go. If the likelihood ratio for Plume versus any other suspect is 1000:1, and there are only six suspects in total, then we can assume that the prior odds against his being the murderer were hardly much more than 10:1. If so, we can take it that he is guilty with a probability of about 99%.
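Both calculations in this reply are short enough to check with exact fractions. The sketch below takes the 20-toss setup as described: any one specific sequence has probability 2^-20 under a fair coin, and probability 1 under the hypothesis that the coin always produces it. It then applies prior odds of 1:10 to a likelihood ratio of 1000:1 for Plume.

```python
from fractions import Fraction

# One specific 20-toss sequence: fair coin vs "the coin always produces exactly this".
lik_fair = Fraction(1, 2 ** 20)
lik_exact = Fraction(1)
print(lik_exact / lik_fair)  # 1048576, i.e. "about a million"

# A complexity penalty of 2**20:1 in the prior odds cancels that advantage exactly.
penalty = Fraction(1, 2 ** 20)
print(penalty * (lik_exact / lik_fair))  # 1

# Plume: prior odds no worse than 1:10. A likelihood ratio of 1000:1 against each
# other suspect is also at least 1000:1 against "someone else did it".
posterior_odds = Fraction(1, 10) * 1000
print(posterior_odds, float(posterior_odds / (1 + posterior_odds)))
```

Posterior odds of 100:1 correspond to a probability of 100/101, which is where "about 99%" comes from.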

Scientist: But it still shouldn't go into the paper?

Bayesian: Right. How should I put it... The key requirement of Bayesian analysis is that all relevant information be taken into account. You can't exclude data from the analysis just because you don't like them. This is in fact a key requirement of science as such, regardless of which statistics are used. There are plenty of papers whose conclusions came about only because some factor was not taken into account or the sample was unrepresentative in some respect. What am I getting at? Besides, how am I, as the experimenter, supposed to know what "all relevant information" is? Who am I to calculate posterior probabilities? Maybe someone has published a paper with additional data and additional likelihoods that I should have taken into account, but I haven't read it yet. So I just publish my data and my likelihood functions, and that's it! I can't claim that I have considered every argument and can now offer reliable posterior probabilities. And even if I could, another paper might come out in a week and make those probabilities obsolete.

Student: So, roughly speaking, the experimenter just has to publish the data, compute some likelihoods for them, and that's it? And then someone else decides what to do with them?

Bayesian: Someone will have to choose prior probabilities (equal priors, maximum-entropy priors, complexity-penalized priors, or something else), then try to gather all the available data, compute the likelihoods, make sure the result isn't crazy, and so on. And they will still have to redo the calculation if a new paper comes out in a week.

Student: That sounds rather laborious.

Bayesian: It would be much worse if we had to do a meta-analysis of P-values. Updating Bayesian probabilities is much easier. You simply multiply the old posterior probabilities by the new likelihood functions and normalize. That's all. If experiment 1 gives a likelihood ratio of 4:1 between hypotheses A and B, and experiment 2 gives a likelihood ratio of 9:1 between them, then together they give 36:1. That's it.
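"Multiply and normalize" is literally a few lines of code. In the sketch below, the absolute likelihood values are hypothetical and were chosen only to produce the ratios from the text (4:1, then 9:1). Only those ratios affect the result.

```python
from fractions import Fraction

def update(prior, likelihood):
    """One Bayesian update: multiply each hypothesis's weight by the likelihood
    of the new data under it, then renormalize so the weights sum to 1."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: w / total for h, w in unnormalized.items()}

prior = {"A": Fraction(1, 2), "B": Fraction(1, 2)}
experiment_1 = {"A": Fraction(4, 10), "B": Fraction(1, 10)}  # ratio 4:1
experiment_2 = {"A": Fraction(9, 10), "B": Fraction(1, 10)}  # ratio 9:1

posterior = update(update(prior, experiment_1), experiment_2)
print(posterior["A"] / posterior["B"])  # 36
```

Applying the two experiments in the opposite order gives the same posterior, which is what makes pooling results from independent labs this simple.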

Student: And you can't do that with P-values? One experiment with p = 0.05 and another with p = 0.01 don't together mean that actually p < 0.0005?

Scientist: No.
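One way to see why the answer is "No" is that the product of two p-values is not itself a p-value. Under the null hypothesis, each p-value is uniformly distributed, and a product as small as 0.0005 turns up far more often than 0.05% of the time. A standard way to combine independent p-values is Fisher's method. The dialogue does not mention it; it is named here only for illustration. The sketch assumes the two experiments are independent and test the same null hypothesis.

```python
from math import log

def fisher_combined_p(p1, p2):
    """Fisher's method for two independent p-values. Under the null hypothesis each
    p-value is uniform on (0, 1), and P(U1 * U2 <= t) = t * (1 - ln t)."""
    t = p1 * p2
    return t * (1 - log(t))

naive = 0.05 * 0.01
combined = fisher_combined_p(0.05, 0.01)
print(naive, round(combined, 4))  # combined is about 0.0043, roughly 9x the naive product
```

Even done correctly, the combined p-value still only describes a class of outcomes under the null hypothesis, unlike the 36:1 product of likelihood ratios above.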

Bayesian: Dear viewers, please note my smug smile.

Scientist: But I'm still bothered by the need to make up prior probabilities.

Bayesian: And why does that bother you more than the fact that everyone has agreed to treat one experiment plus two replications at p < 0.01 as the criterion of Truth?

Scientist: You mean that choosing priors is no more subjective than interpreting P-values? Hmm. I was going to say that requiring, say, p < 0.001 ought to guarantee objectivity. But then you'll reply that the number 0.001 (rather than 0.1 or 1e-10) is also pulled out of thin air.

Bayesian: And I'll add that requiring an arbitrary P-value is less effective than pulling a prior probability out of the same thin air. One of the first theorems to threaten violators of the probability axioms with Egyptian plagues was proved by Abraham Wald in 1947. He tried to describe all admissible strategies, where by a strategy he meant a way of responding to what you observe. Naturally, different strategies can be more or less profitable under different circumstances. He called a strategy admissible if no other strategy dominates it, that is, does at least as well under all possible conditions and strictly better under some. Wald found that the class of admissible strategies coincides with the class of strategies that maintain a probability distribution, update it on observations according to Bayes' rule, and optimize a utility function.

Student: Excuse me, could you say that in plain language?

Bayesian: Suppose you act on what you observe and, say, gain or lose money depending on what the real world is like. Then one of two things is true. Either your strategy in some sense contains a probability distribution and updates it according to Bayes' rule, or there is some other strategy that is never worse than yours and sometimes better. For example, suppose you say: "I won't quit smoking until I see a paper proving a link between smoking and cancer at p < 0.0001." At least in theory, there is a way of saying "In my opinion, the link between smoking and cancer exists with a probability of 0.01%. What are your likelihoods?" that will do no worse than the first formulation, whatever the prior probability of such a link.

Scientist: Really?

Bayesian: Yep. The Bayesian revolution began with this theorem, and it has been slowly gaining momentum ever since. Note that Wald proved his theorem a couple of decades after P-values were invented. That, in my view, explains how all of modern science ended up tied to clearly inefficient statistics.

Scientist: So you propose to throw out P-values and instead publish only likelihood ratios?

Bayesian: In short, yes.

Scientist: I don't really believe in ideal solutions that fit every situation. I suspect (please don't take this as an insult) that you are an idealist. In my experience, different situations call for different tools, and it would be unwise to throw out all but one.

Bayesian: Well, I'm ready to explain where I am an idealist and where I'm not. Likelihood functions alone won't resolve the reproducibility crisis. It can't be fully resolved just by ordering everyone to use more efficient statistics. The popularity of open-access journals doesn't depend on the choice between likelihoods and P-values. Neither do the problems with peer review.

Scientist: So everything else does depend on it?

Bayesian: Not everything, but likelihoods can help with a lot of it. Let's count.

Bayesian: First. Likelihood functions don't force us to draw a line between "statistically significant" and "non-significant" results. An experiment can no longer have a "positive" or "negative" outcome. What used to be called the null hypothesis is now just one hypothesis among others, not fundamentally different from the rest. If you toss a coin and get OORRRROOOO, you can't say that the experiment "failed to reject the null hypothesis at p < 0.05" or "failed to replicate the earlier result". It merely added data favoring the fair-coin hypothesis over the 5/6-eagles hypothesis with a likelihood ratio of 3.78:1. So with widespread adoption of Bayesian statistics, the results of such experiments will less often end up in the file drawer. Not never, because journal editors find unexpected results more interesting than fair coins, and that has to be tackled directly. But P-values don't merely fail to fight this attitude, they encourage it! It is the very reason p-hacking exists. So switching to likelihoods won't bring happiness to everyone for free, but it will definitely help.
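The quoted ratio of 3.78:1 is what ten tosses with six eagles and four tails give when comparing a fair coin with a coin that lands eagle-up 5/6 of the time. The likelihood depends only on the counts, not on the order of the tosses, so the sketch below works from the counts alone.

```python
from fractions import Fraction

def likelihood(p_eagle, eagles, tails):
    """Probability of one particular toss sequence with these counts, for a coin
    that lands eagle-up with probability p_eagle (the order does not matter)."""
    return p_eagle ** eagles * (1 - p_eagle) ** tails

fair = likelihood(Fraction(1, 2), 6, 4)
biased = likelihood(Fraction(5, 6), 6, 4)
ratio = fair / biased
print(ratio, round(float(ratio), 2))  # 59049/15625 3.78
```

Nothing in this result is "positive" or "negative". It is simply evidence of a certain strength, and it can be multiplied into any later analysis.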

Bayesian: Second. The likelihood approach puts much more weight on the raw data and will encourage publishing them wherever possible, because Bayesian analysis rests on how likely these particular results are under a particular model. The P-value approach, by contrast, makes the researcher treat the data as just one member of a class of "equally extreme" results. Some scientists like to keep all their precious data to themselves, and that is not only a matter of statistics. But P-values encourage this too, because what matters for the paper is not the data themselves but whether they belong to a particular class. Once that is established, all the information they contain effectively collapses into a single bit: "significant" or "not significant".