"Revival of AI" - no more than expensive iron and advertising, thrown on the implementation of the old idea

There is no spirit in the car

In the past few years, the media has been flooded with exaggerated accounts of artificial intelligence (AI) and machine learning (ML) technologies. It seems that never in the field of computer science have so many ridiculous claims been made by so many people with so little understanding of what is going on. To anyone who worked actively with advanced computer hardware in the 1980s, what is happening seems strange.

In this month's issue of The Atlantic, Yuval Noah Harari, a high-profile intellectual and best-selling author of Sapiens: A Brief History of Humankind and Homo Deus: A Brief History of Tomorrow, describes the influence of AI on democracy. The most interesting aspect of the article is Harari's excessive faith in the capabilities of modern AI technologies. He describes the chess-playing program from DeepMind, Google's sister company, as "creative", "possessing imagination" and having "ingenious instincts."

In the BBC documentary The Joy of AI, Professor Jim Al-Khalili and DeepMind founder Demis Hassabis describe how an AI system "made a real discovery", "is capable of actually generating a new idea" and has developed "strategies designed independently".

When such a torrent of exaggeration and anthropomorphism is used to describe dumb, mechanistic systems, it is time for a reality check and a return to basics.


Discussion of computer technology often proceeds through myths, metaphors and human interpretations of what appears on the screen. Metaphors such as "intuition", "creativity" and, more recently, "strategies" are part of the emerging mythology. AI experts find patterns in an AI's game moves and call them "strategies", but the neural network has no idea what a strategy is. If there is any creativity here, it belongs to the DeepMind researchers who design and manage the systems' training processes.

Today's AI systems are trained through a huge amount of automated trial and error; at each stage, backpropagation is used to feed back information about errors and adjust the system so as to reduce future errors - and this gradually improves the AI's performance on a specific task, such as playing chess.
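To make the mechanism concrete, here is a minimal sketch of backpropagation on a toy problem. It is not DeepMind's code: the tiny network, the XOR task, the learning rate and every name in it (`make_net`, `train`, `XOR`) are assumptions chosen purely for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def make_net(n_in=2, n_hidden=3, seed=0):
    """Hypothetical toy network: one hidden layer, one output unit."""
    rng = random.Random(seed)
    return {
        "w_hidden": [[rng.uniform(-1, 1) for _ in range(n_in)]
                     for _ in range(n_hidden)],
        "b_hidden": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_out": rng.uniform(-1, 1),
    }

def forward(net, x):
    """Return the hidden activations and the output for one input vector."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(net["w_hidden"], net["b_hidden"])]
    out = sigmoid(sum(w * h for w, h in zip(net["w_out"], hidden))
                  + net["b_out"])
    return hidden, out

def mean_squared_error(net, data):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in data) / len(data)

def train(net, data, epochs=5000, lr=0.5):
    """Repeated trial and error: try, measure the error, adjust, repeat."""
    for _ in range(epochs):
        for x, target in data:
            hidden, out = forward(net, x)
            # Error signal at the output, scaled by the sigmoid's slope.
            delta_out = (out - target) * out * (1.0 - out)
            # Propagate the error signal backwards to each hidden unit
            # (computed before the output weights are changed).
            delta_hidden = [delta_out * w * h * (1.0 - h)
                            for w, h in zip(net["w_out"], hidden)]
            # Nudge every weight a little against its error gradient.
            for j, h in enumerate(hidden):
                net["w_out"][j] -= lr * delta_out * h
            net["b_out"] -= lr * delta_out
            for j, d in enumerate(delta_hidden):
                for i, xi in enumerate(x):
                    net["w_hidden"][j][i] -= lr * d * xi
                net["b_hidden"][j] -= lr * d
    return net

# Toy task (an assumption for the demo): learn XOR from four examples.
XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
```

Calling `train(make_net(), XOR)` and comparing `mean_squared_error` before and after shows the error shrinking. Nothing in the loop "understands" the task; it only repeats the same arithmetic adjustment many thousands of times.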

The ongoing improvement in the performance of AI, ML and so-called "deep learning" (DL) rests mostly on this backpropagation technique. It was first invented in the 1960s and applied to neural networks in the 1980s by Geoffrey Hinton. In other words, there has been no significant conceptual progress in AI for more than 30 years. Most AI research results and media coverage show what happens when mountains of expensive computing hardware and a clever PR campaign are thrown at an old idea.

This is not to say that DeepMind does no valuable work. Machines that assist in creating new strategies and ideas are interesting, especially when the machine's workings are hard to understand because of their complexity. In our secular culture, the magic and mystery of technology attracts people, and lending an air of mystery to a mostly dry and rational engineering field does no harm. But there is no ghost in the machine of Google's sister company.

Hardware vs software, analog vs digital, Thompson vs Hassabis

All the hype around DeepMind's machines reminds me of the excitement that arose a couple of decades ago around a completely different, and perhaps more profound, machine learning system.

In November 1997, Adrian Thompson, a researcher at the Centre for Computational Neuroscience and Robotics at the University of Sussex, made the cover of New Scientist magazine with the article "Creatures from primordial silicon - let Darwinism loose in an electronics lab and just watch what it creates. A lean, mean machine that nobody understands."

Thompson's work caused a minor sensation because he broke with convention and evolved his ML system in electronic hardware instead of taking the software approach like everyone else. He decided to do this because he realized that digital software is limited by the binary on/off nature of the switches that make up the signal-processing brain of any digital computer.

The neurons of the human brain, by contrast, have evolved to take part in a variety of subtle, sometimes inconceivably complex physical and biochemical processes. Thompson suggested that evolving computing hardware through an automated process of natural selection could exploit all the analog (infinitely variable) real-world physical properties of the silicon from which the simplest digital computer switches are made - which might lead to something resembling the efficient analog operation of the components of the human brain. And he was right.

In his laboratory, Thompson evolved the configuration of an FPGA (a type of digital silicon chip in which the connections between the digital switches can be reconfigured at will) to teach it to tell two different audio signals apart. Looking inside the chip to see how the evolutionary process had wired up the switches, he found an impressively efficient circuit - it used only 37 components.
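The general shape of such an evolutionary search can be sketched in a few lines. This is not Thompson's actual setup: the genome length, the mutation rate, the selection scheme and above all the `fitness` function are assumptions. In a hardware experiment the score would come from measuring the configured chip; here a hidden target bit pattern stands in for that measurement.

```python
import random

GENOME_BITS = 64  # hypothetical size; a real FPGA configuration is far larger

def fitness(genome, target):
    """Stand-in score: how many bits match a hidden target configuration.
    On real hardware this would be a measurement of the chip's behaviour."""
    return sum(g == t for g, t in zip(genome, target))

def mutate(genome, rng, rate=0.02):
    """Flip each bit independently with a small probability."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in genome]

def crossover(a, b, rng):
    """Splice two parent configurations at a random cut point."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def evolve(target, pop_size=50, generations=200, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(len(target))]
                  for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        # Rank the candidate configurations by their score.
        population.sort(key=lambda g: fitness(g, target), reverse=True)
        best_per_generation.append(fitness(population[0], target))
        # Keep the fittest fifth unchanged and breed the rest from them.
        survivors = population[: pop_size // 5]
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rng)
            for _ in range(pop_size - len(survivors))
        ]
        population = survivors + children
    population.sort(key=lambda g: fitness(g, target), reverse=True)
    return population[0], best_per_generation

# Hypothetical "ideal" configuration, used only to score candidates in this demo.
_rng = random.Random(1)
TARGET = [_rng.randint(0, 1) for _ in range(GENOME_BITS)]
```

Calling `evolve(TARGET)` returns the best configuration found and the best score of each generation. Because the fittest candidates are carried over unchanged, that score never gets worse from one generation to the next. The search only ever sees scores, never a design rationale, which is why its results can be hard for an engineer to explain.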

The evolved circuit was also incomprehensible to digital engineers. Some of the 37 components were not connected to the others, yet when they were removed from the circuit, the whole system stopped working. The only reasonable explanation for this strange situation was that the system was exploiting some mysterious electromagnetic coupling between its supposedly digital components. In other words, the evolutionary process had harnessed the real-world analog characteristics of the system's components and materials to carry out its "calculations".

It was mind-blowing. I was a young researcher in the 1990s with experience in both electronic hardware research and AI, and Thompson's work amazed me. The computer had not only managed to invent an entirely new kind of electronic circuit and surpass the abilities of electronic engineers but, more importantly, had pointed the way to developing infinitely more powerful computer systems and AI.

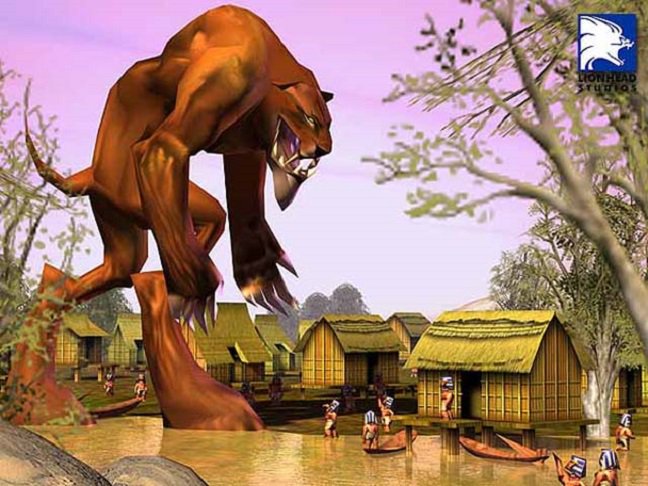

Hassabis started out as the lead AI programmer on the now-forgotten Lionhead Studios game Black & White.

So what happened? Why is Thompson practically forgotten, while Google's parent company, Alphabet, showers Hassabis with money and BBC documentaries sing his praises? For the most part, it comes down to luck. In the 1990s, AI was about as fashionable as grandma's bloomers. Today, AI carries the burden of having to lead us into the "fourth industrial revolution". Capital is chasing the "next big thing." And although DeepMind's digital AI systems are not well suited to modeling complex real-world analog systems such as the weather or the human brain, they are certainly well suited to crunching digital data from the simplest of digital worlds, the online one, in the form of links, clicks, likes, playlists and pixels.

DeepMind has also benefited from its knack for showmanship. It has promoted its technology and its leadership by cultivating technological mystique, but every demonstration of its work has come down to games with simple computable rules. The advantage of games is that they are easy to understand and visually appealing to the media and the public. In reality, most commercial applications of this technology will be mundane back-office business applications, such as optimizing the energy efficiency of the data centers where Google keeps its computers.

Ceci n'est pas une paddle *

* "This is not a paddle" - a reference to René Magritte's painting "The Treachery of Images"

What Thompson and Hassabis have in common, besides being British, is the experience and skill needed to train and evolve their systems effectively, but this dependence on human skill and creativity is clearly a weakness of any AI or ML system. Their technology was also very fragile. For example, Thompson's systems often stopped working at temperatures other than those at which they had evolved. Meanwhile, at DeepMind, simply changing the size of the paddle in one of the company's video games completely wiped out the AI's performance. This fragility arises because DeepMind's AI does not understand what a paddle is, or even what the video game itself is; its switches work only with binary numbers.

ML systems have indeed achieved a great deal lately, but this progress has come mostly from throwing huge amounts of standard computing hardware at problems rather than from radical innovation. At some point in the near future, it will no longer be possible to cram more tiny silicon switches onto a silicon chip. Circuit efficiency (more computation with less hardware) will then become commercially important, and at that point evolvable hardware may finally come into fashion.

Hybrid systems that combine the approaches of Thompson and Hassabis may also appear. But whatever happens, Harari will have to wait before he can get a "creative" AI system to write his next bestseller.

Source: https://habr.com/ru/post/430154/
