Environment generation based on sound and music in Unity3D

Abstract

Hello! This article is the first in a series on generating content from music and sound. Such generation is a fairly complex technical task, so this installment is introductory: it focuses on game design and a general understanding of the subject, and later articles will dive into the technical details.

Here we look at a particular genre of games in which content is generated from sound and music. I won't cover general sound theory, but you will find links to sources and short definitions of the terms we use. Although the material touches on sound theory and programming, it is aimed at beginners. I drew the illustrations myself, and since I am not an artist, please don't judge them too harshly: they are only there to make the material easier to follow. Enjoy!

Introduction

For me personally, music-based procedural generation in video games has always had an air of mystery about it. There is something remarkable about sound not just being visualized but directly shaping the gameplay. It all looks unusual, but it is damn fun, which is why these games have a small but loyal audience. Steam even added dedicated tags for them, "Music-Based Procedural Generation" and "Rhythm". About 200 projects currently carry these tags, although many more games use these mechanics.

Sound-based generation offers enormous room for gameplay ideas, but so far those ideas have stayed in indie projects: in AAA games (Guitar Hero aside), such experiments are considered too unpredictable and too niche.

Examples of good projects

Before getting to the heart of sound-based generation (not to be confused with sound generation!), here are some successful games that use similar mechanics. If you haven't played them yet, give them a try: you have probably never seen anything like them. Many of these projects have won awards from players and the press.

Strictly speaking, only Audiosurf uses generation in its pure form; the other games borrow some of its elements. Some are built mainly around tracking the beat, others around the track's volume, and so on.

Such projects are hard to judge from a screenshot or a description, so if you don't have time to play, at least watch a gameplay video on YouTube.

Audiosurf

For many players, Audiosurf was their first encounter with music-based content generation. It was created by Dylan Fitterer, an independent developer at Invisible Handlebar. When Audiosurf came out on Steam, the store was only starting to grow and was far from today's explosive pace. Audiosurf looked fresh and unusual; moreover, it was the first game on Steam (apart from Valve's own titles) to feature achievements!

Audiosurf's slogan, "Ride your music", describes the gameplay precisely: the game builds the road you ride from your music track. It takes into account the track's tempo, its dynamics (rises and falls in volume) and its beats. You can play music of any genre and combine it with different game modes. There is also a leaderboard for every track, so you may be surprised to learn that ten other people are listening to your favorite "underground death metal".

Unfortunately, Audiosurf doesn't come across in a still image; you have to play it.

Gameplay-wise, Audiosurf is excellent. If a track starts with a quiet intro, your ship crawls slowly uphill; when the arrangement gets denser and the sound fuller, you speed up and race downhill at full tilt. The so-called drop feels especially good: the sound "breaks off" mid-build and then comes back at its climax. A varied drum part can turn the road into hilly terrain and also drives the special effects. Finally, the blocks you collect along the generated road are placed on the beat.

Ten years after its release, the game is still very popular. Two installments have come out so far, and Dylan Fitterer went on to develop his ideas in Audioshield, a virtual reality game where you fend off objects flying at you on the beat.

Tracks we recommend trying:

- System of a Down - Chop Suey!

- Martin Garrix - Animals

- Skrillex - Bangarang

Share your favorite Audiosurf tracks in the comments!

The game is available on Steam.

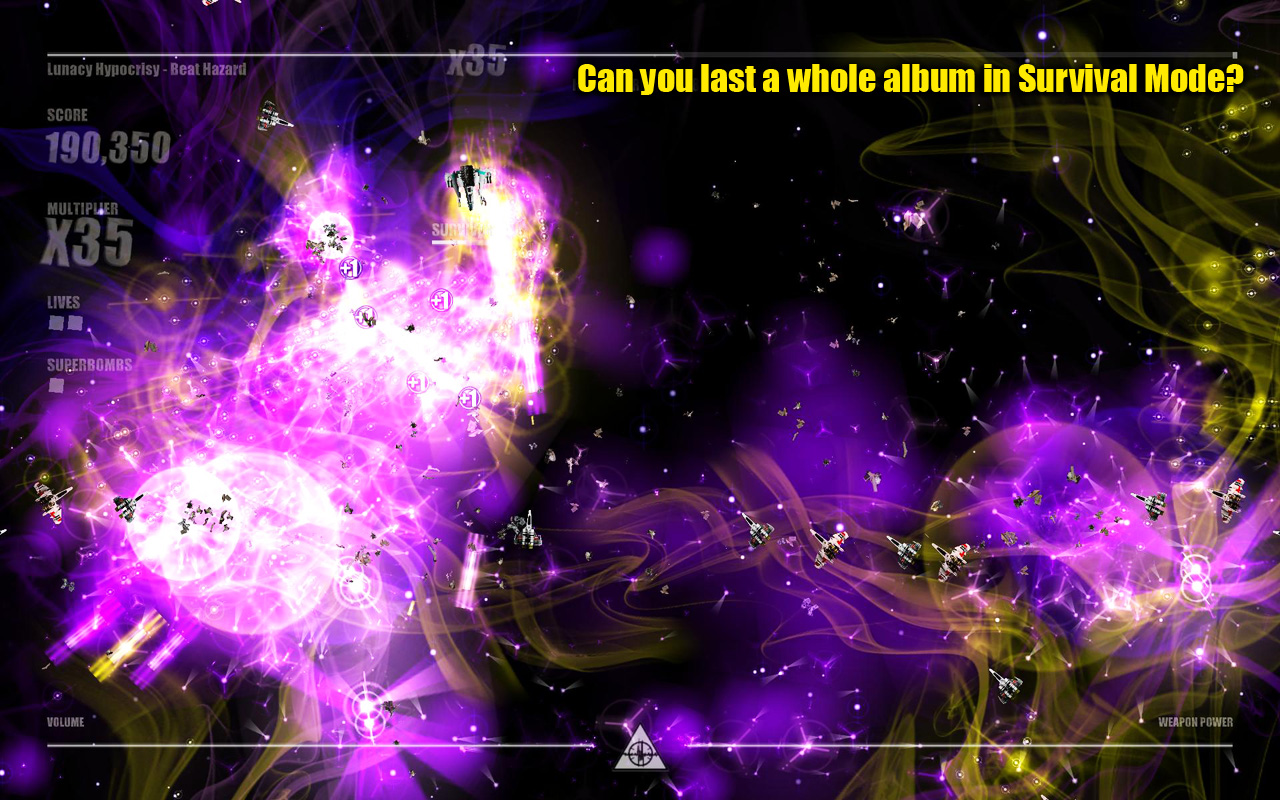

Beat Hazard

Here the developers took standard shoot 'em up mechanics and added analysis of your own music tracks, and the result is very cool. The music affects everything: your ship's firepower, the number of enemies, the overall game speed. The game is best experienced with music that jumps abruptly from silence to a wall of sound: your screen simply explodes with effects and your ship starts firing huge beams.

The game has fairly rich mechanics, several modes and huge bosses: everything you need to have fun!

Tracks we recommend trying:

- The Prodigy - Invaders Must Die!

- Infected Mushroom - The Legend of the Black Shawarma

- Daft Punk - Contact

Tell us in the comments which tracks you like blasting enemy ships to!

The game is available on Steam.

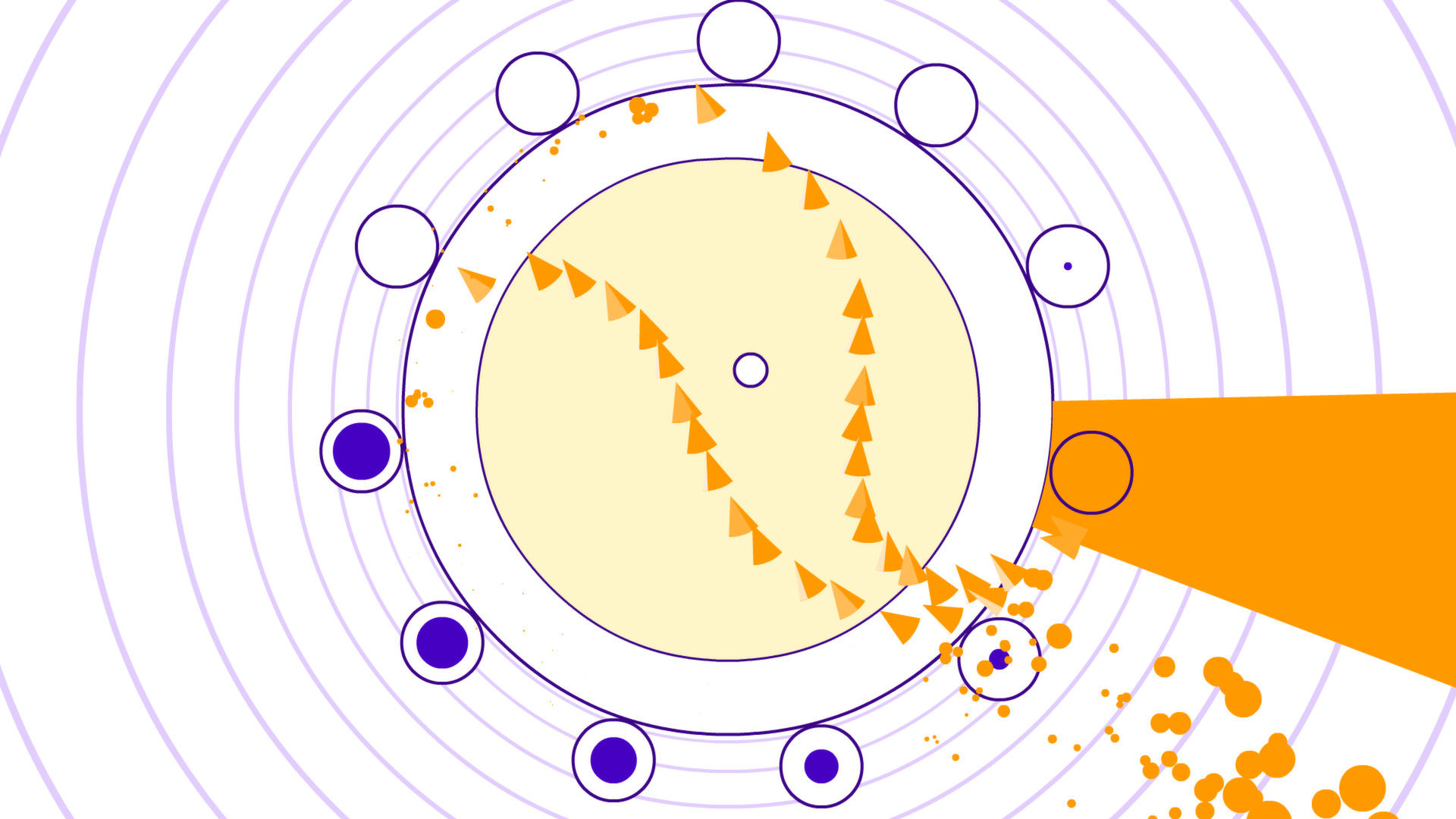

Soundodger+

The game is a cross between Beat Hazard and the popular Super Hexagon. You have to dodge projectiles whose activity depends on your music track. It is very hard and, for that very reason, extremely addictive.

The game is available on Steam.

Crypt of the NecroDancer

A game that conveys rhythm with uncanny precision and makes you dance with your gamepad. Crypt of the NecroDancer is a roguelike in which both you and the monsters must move strictly to the music. If you move off the beat, the game penalizes you. Meanwhile, the whole level pulses to the beat, which creates an unreal atmosphere. The soundtrack was written by the well-known Danny Baranowsky (Super Meat Boy, The Binding of Isaac). And yes, the game supports dance mats!

The game is available on Steam.
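A rhythm check like this can be sketched as comparing the moment of a button press with the nearest beat of a fixed tempo grid. This is not taken from the game itself: the function name `is_on_beat`, the 100 ms tolerance and the assumption of a constant BPM with a known time of the first beat are all illustrative.

```python
def is_on_beat(t_s: float, bpm: float, first_beat_s: float = 0.0,
               tolerance_s: float = 0.1) -> bool:
    """Return True if time t_s (seconds) lies within tolerance_s of a beat.

    Assumes a constant tempo: beats fall at first_beat_s + k * 60 / bpm.
    """
    period = 60.0 / bpm                      # seconds per beat
    phase = (t_s - first_beat_s) % period    # time elapsed since the previous beat
    distance = min(phase, period - phase)    # distance to the nearest beat
    return distance <= tolerance_s
```

At 120 BPM the beats are 0.5 s apart, so a press at 1.02 s counts as on the beat, while a press at 1.25 s (exactly between two beats) does not.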

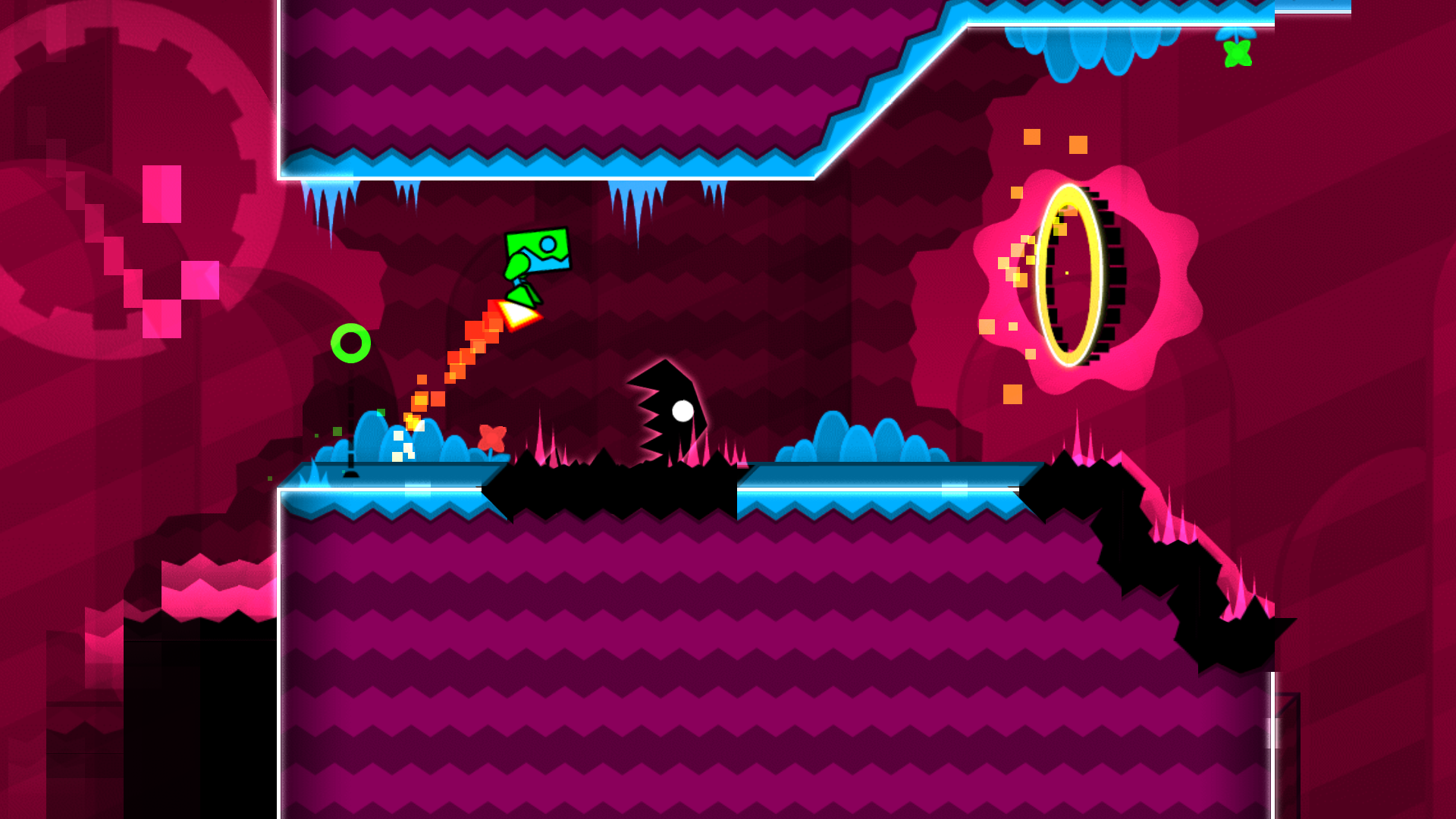

Geometry Dash

A very hardcore runner in which you rely not only on your reflexes but also on your sense of rhythm: all the obstacles are placed precisely on the beat, and by the end of the track (if you make it that far) you move on pure intuition. Strictly speaking, there is no generation here, but the developers analyzed the music tracks to build better levels.

Like almost every game in the genre, Geometry Dash is very colorful.

The game is available on Steam.

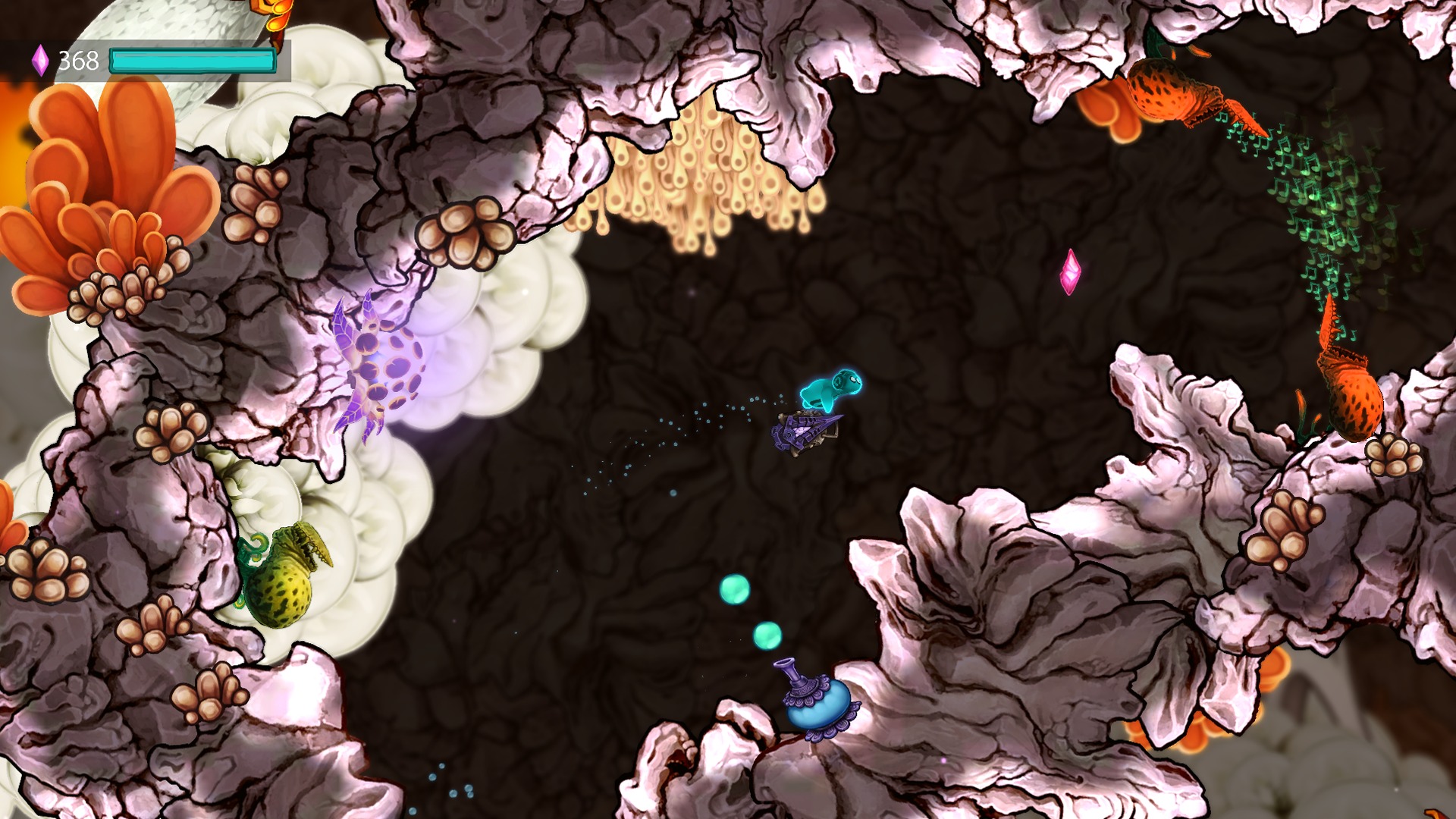

Beatbuddy: Tale of the Guardians

A gorgeous musical platformer set in an underwater world that lives to a bright electro swing beat. Every creature in the game makes its own sound: a little crab taps out a fast rhythm, the anemones provide the kick drum, and so on. The main character can interact with the inhabitants of this world, changing both the music and the gameplay itself. The game features tracks by artists such as Parov Stelar, Austin Wintory, Sabrepulse and La Rochelle Band.

The game is available on Steam.

Now that these excellent projects have inspired us, let's move on to the question of how it all works.

Basic sound parameters

Analog audio parameters

The topic is not simple and has many technical aspects, so rather than bloat the article with theory, I will just define the basic concepts. If you want to study sound theory in more depth, Habr has published many articles on it. You will also find useful links at the end of this article.

We will treat the following as the basic parameters of a sound:

- Frequency of the sound wave, perceived as pitch (Hz)

- Amplitude of the sound wave, perceived as loudness (dB)

- Attenuation coefficient: the rate at which the amplitude decreases over time

- Waveform: the overall shape of the sound wave. This parameter lets you sort sounds into groups (sharp, smooth, noisy, etc.)
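To make the first three parameters concrete, here is a minimal sketch of an idealized tone: a sine wave with a given frequency and amplitude whose envelope decays over time. Modeling attenuation as exponential decay, A(t) = A0 * exp(-alpha * t), is an assumption chosen for simplicity (real instruments have more complex envelopes), and the decibel conversion uses a reference amplitude of 1.0, which is also an assumption.

```python
import math

def envelope(amplitude: float, attenuation: float, t: float) -> float:
    """Amplitude at time t (s), assuming exponential decay A0 * exp(-attenuation * t)."""
    return amplitude * math.exp(-attenuation * t)

def tone(amplitude: float, freq_hz: float, attenuation: float, t: float) -> float:
    """Instantaneous value of a decaying sine wave at time t (s)."""
    return envelope(amplitude, attenuation, t) * math.sin(2 * math.pi * freq_hz * t)

def amplitude_to_db(amplitude: float, reference: float = 1.0) -> float:
    """Level in decibels relative to a reference amplitude: 20 * log10(A / Aref)."""
    return 20 * math.log10(amplitude / reference)
```

With an attenuation of ln 2 per second, the amplitude halves every second, which corresponds to a drop of about 6 dB per second.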

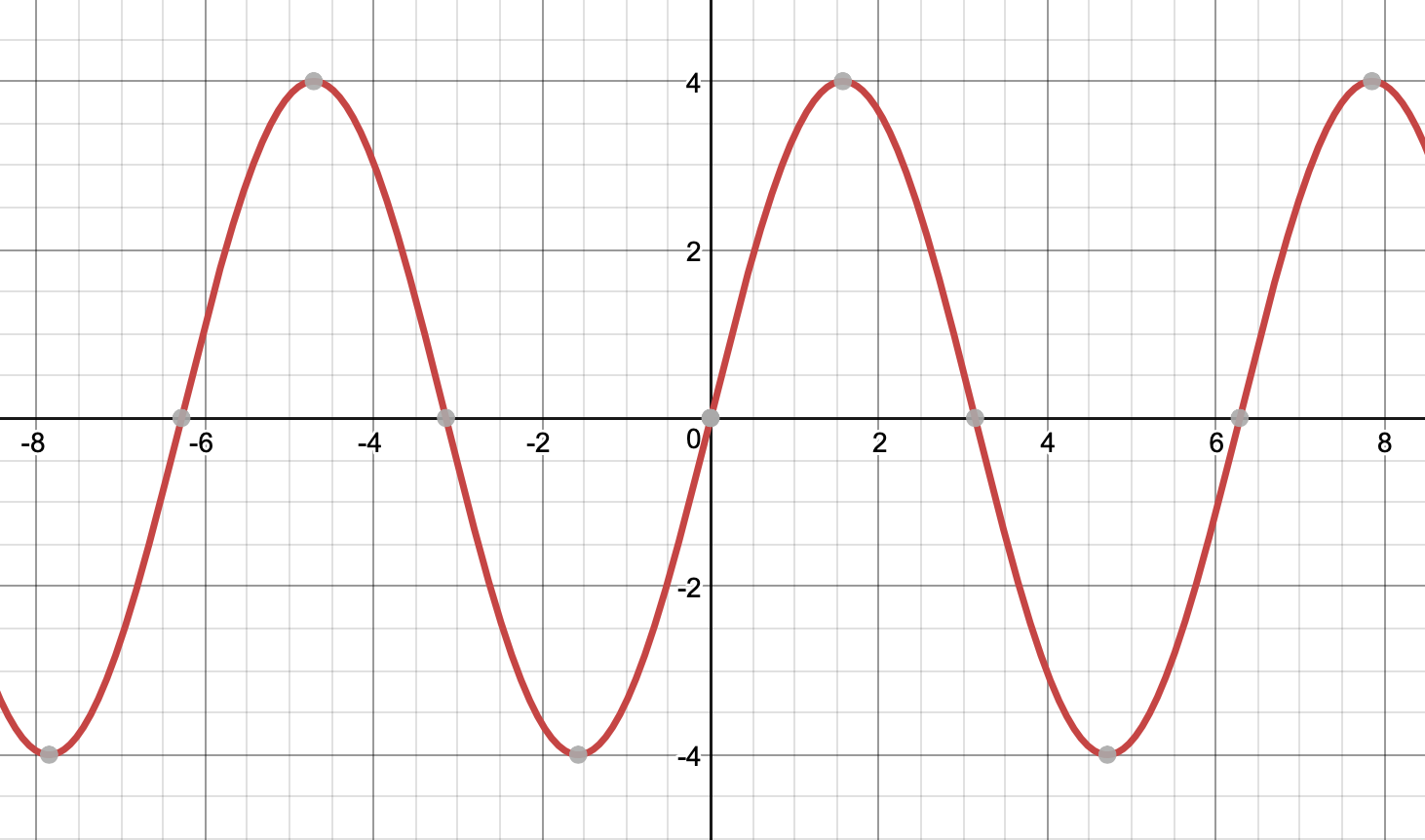

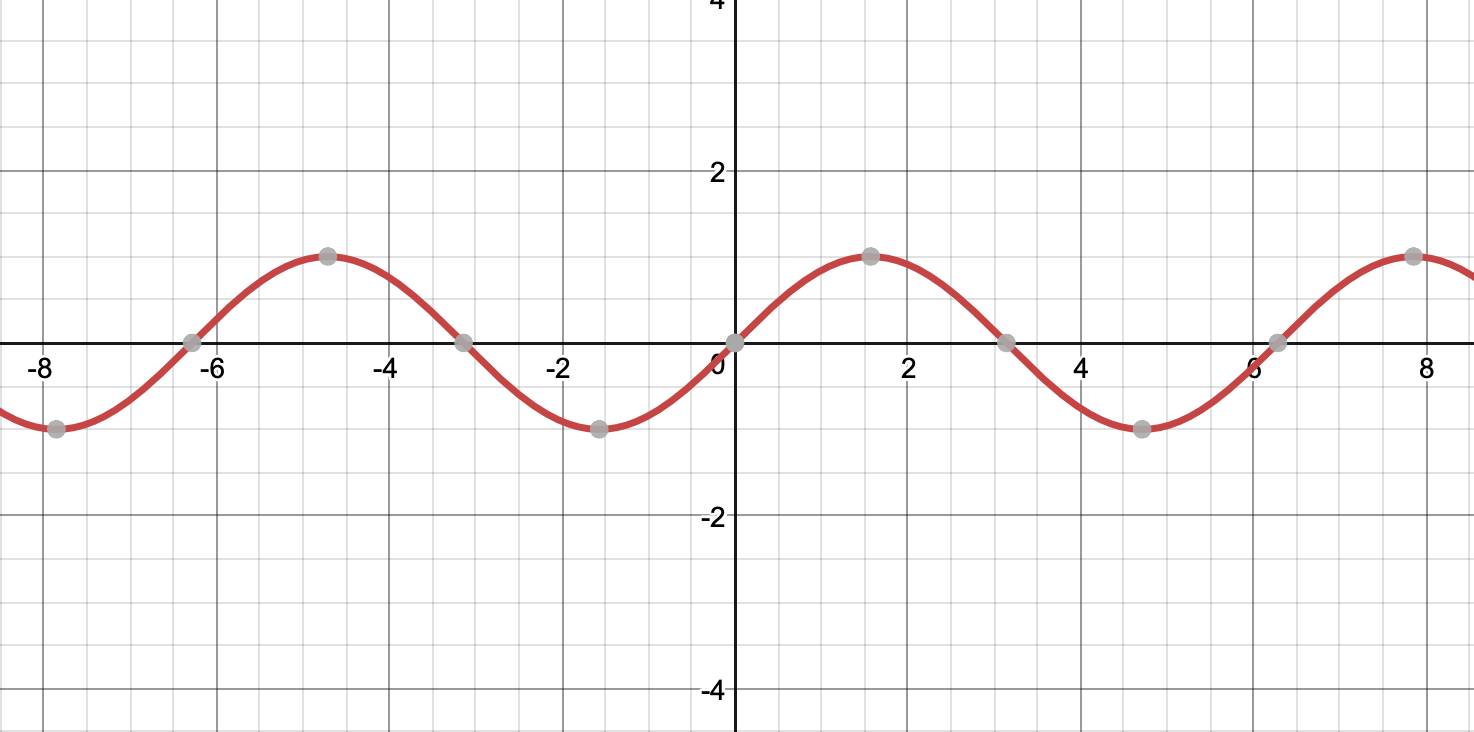

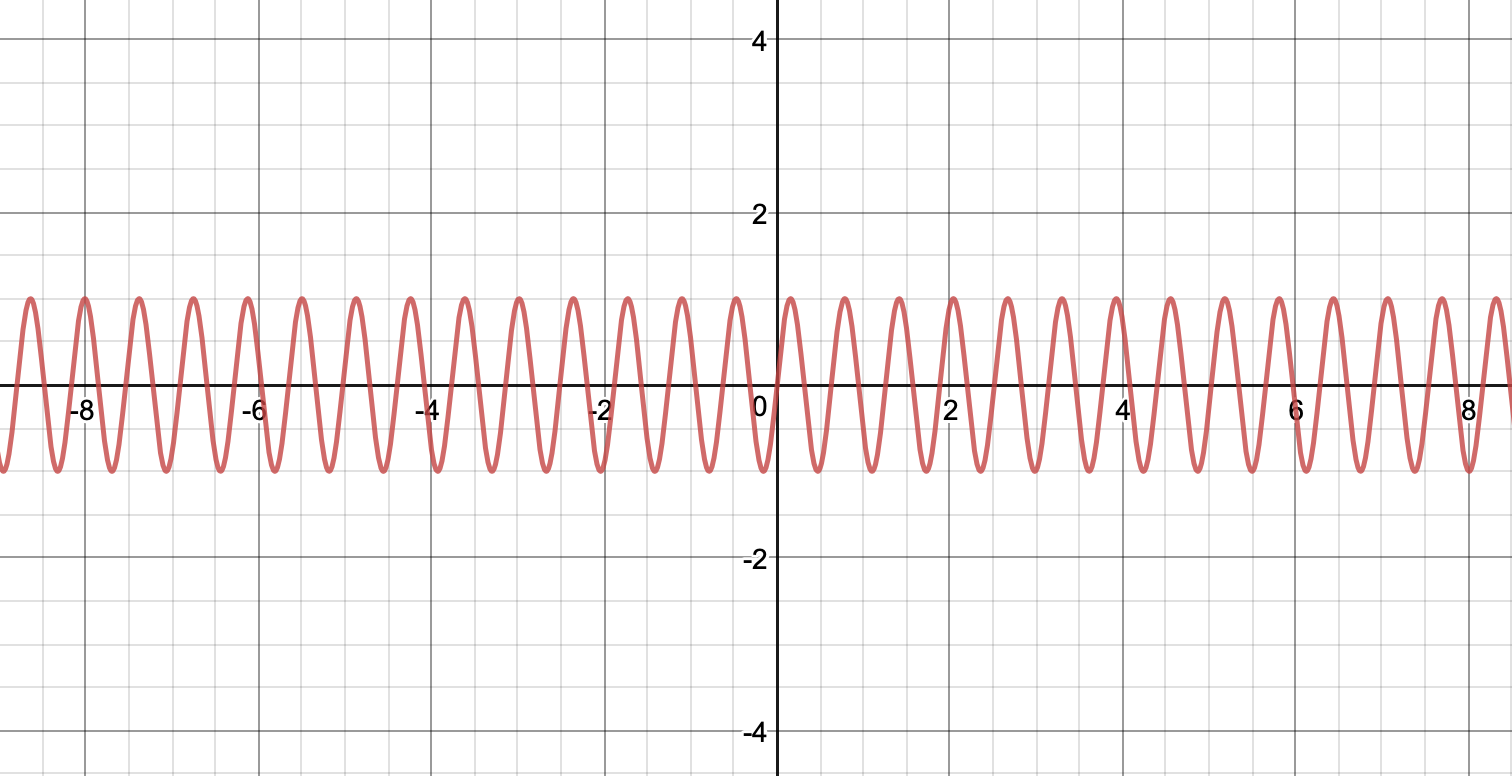

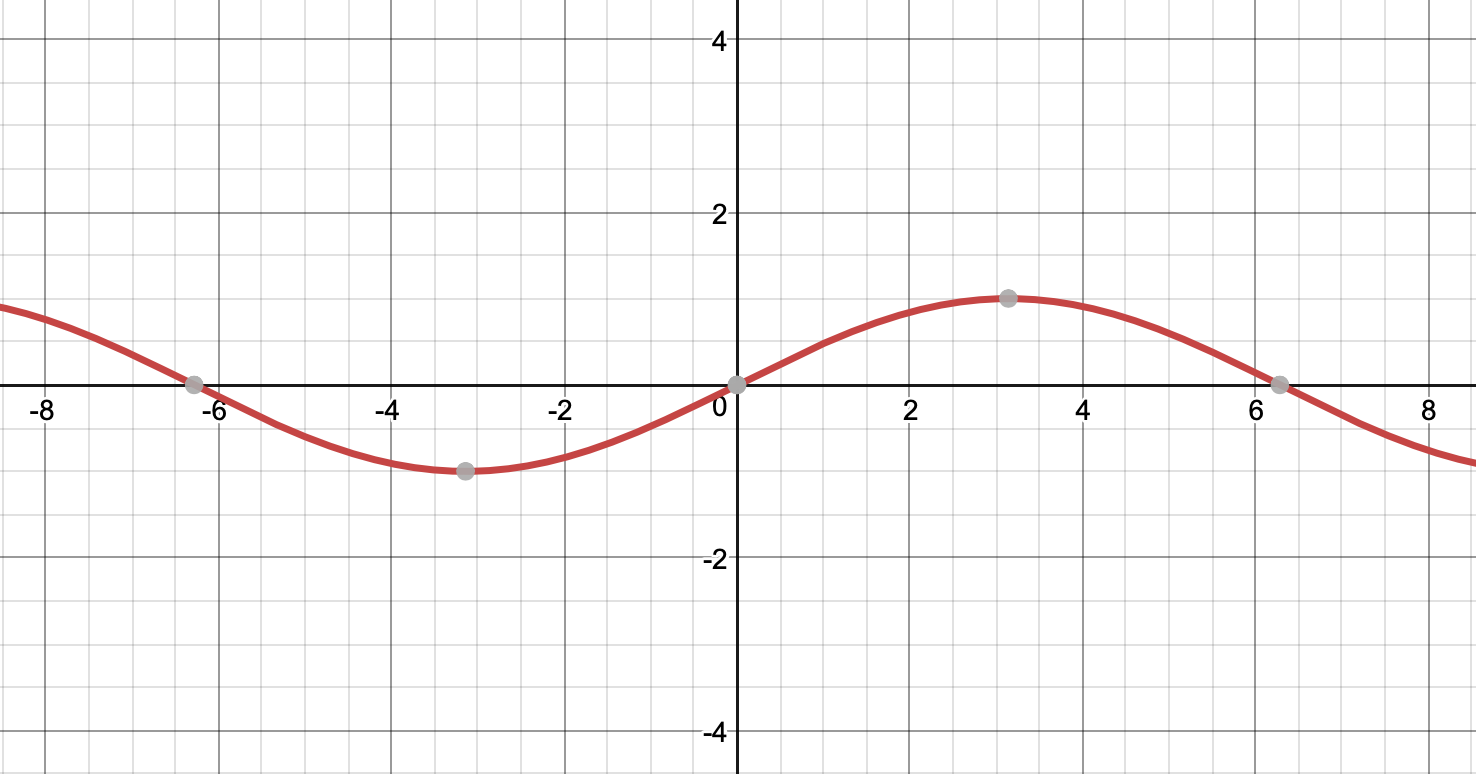

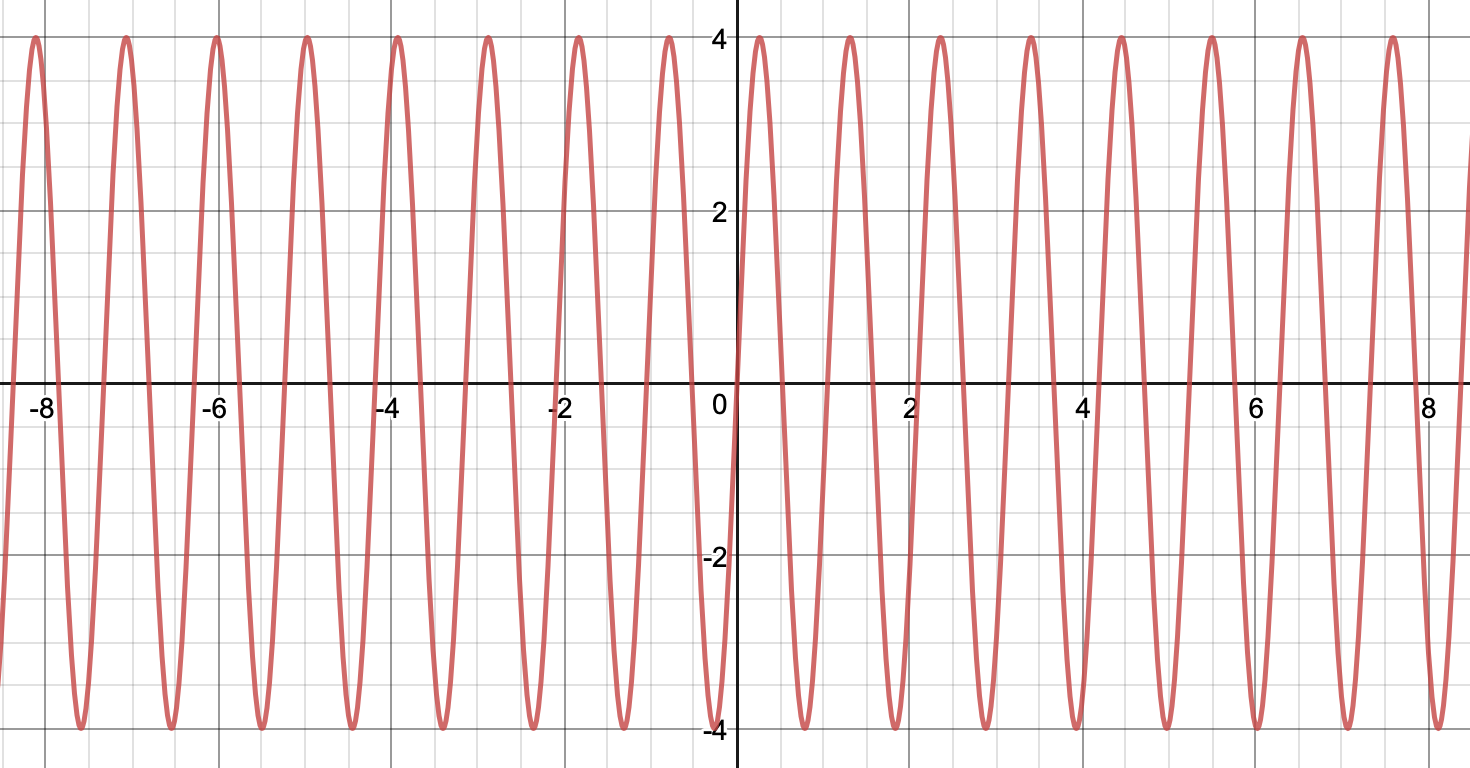

For clarity, I have plotted the main characteristics of a sound wave (the axes have no units, my apologies):

Loud sound with high amplitude

Quiet sound with lower amplitude

High-pitched sound with higher frequency

Low-pitched sound with lower frequency

Loud, high-pitched sound (mind your ears!)

This is enough to get a basic understanding of what sound is and move on. If the topic interests you, though, it is of course worth studying in more depth.

Digital audio parameters

In games and other programs we work with digital sound. Digital sound is obtained through sampling (measuring the signal at discrete points in time), quantization (rounding each measurement to one of a fixed set of levels) and encoding (turning the result into zeros and ones). Much more on this can be found in the article "Sound theory: what you need to know about sound to work with it. The Yandex.Music experience", which I liked a lot. It covers loudness measurement and describes the filters I mention later.

Here is what we need to know about a digital signal:

Sample rate describes how finely your sound is digitized in time. For example, a sample rate of 44.1 kHz means the signal is measured 44,100 times per second. The most common sample rates today are 44.1 kHz and 48 kHz.

Bit depth describes how wide a dynamic range fits into your digital signal. It is measured in bits and is usually 16, 24 or 32. Dynamic range is the difference between the loudest and the quietest sound, expressed in decibels. For example, it is about 48 dB for an 8-bit signal, 96 dB for 16-bit, 144 dB for 24-bit and 192 dB for 32-bit.

Bitrate is what every Internet user checked back in 2002 when downloading music. It is the number of bits used to transmit or process data per unit of time. In lossy streaming video and audio formats (such as MPEG and MP3), the bitrate expresses how strongly the stream is compressed.
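Sampling and quantization can be illustrated with a short sketch that measures a pure sine wave at a fixed sample rate and rounds each measurement to a signed integer level, as in 16-bit PCM. The function name and the sine test signal are illustrative choices; the encoding step (writing the integers out as bytes) is omitted.

```python
import math

def sample_and_quantize(freq_hz, sample_rate, duration_s, bit_depth=16):
    """Sample a sine wave and round each sample to a signed integer level."""
    max_level = 2 ** (bit_depth - 1) - 1           # 32767 for 16-bit audio
    n_samples = round(sample_rate * duration_s)    # sampling: discrete points in time
    return [
        # quantization: scale the [-1, 1] value and round it to the nearest level
        round(max_level * math.sin(2 * math.pi * freq_hz * n / sample_rate))
        for n in range(n_samples)
    ]
```

For example, `sample_and_quantize(1000, 8000, 0.001)` returns eight integers covering one period of a 1 kHz tone, peaking at 32767 and -32767.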
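The figures above follow from the rule of thumb that each bit adds about 6 dB: an n-bit signal has 2^n levels, and the ratio between the largest and smallest level, expressed in decibels, is 20 * log10(2^n). A quick check (the values in the text are these numbers rounded down):

```python
import math

def dynamic_range_db(bit_depth: int) -> float:
    """Theoretical dynamic range of linear PCM with the given bit depth, in dB."""
    # 2**bit_depth distinct levels; 20*log10 converts an amplitude ratio to decibels.
    return 20 * math.log10(2 ** bit_depth)

for bits in (8, 16, 24, 32):
    print(bits, round(dynamic_range_db(bits), 1))
# 8 48.2
# 16 96.3
# 24 144.5
# 32 192.7
```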
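For uncompressed PCM, the bitrate follows directly from the parameters above: sample rate × bit depth × number of channels. Comparing that number with an MP3's bitrate gives a rough compression ratio. The helper names and the CD-quality defaults (44.1 kHz, 16-bit, stereo) are illustrative.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bit_depth: int, channels: int) -> float:
    """Bitrate of uncompressed PCM audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000

def compression_ratio(encoded_kbps: float, sample_rate_hz: int = 44100,
                      bit_depth: int = 16, channels: int = 2) -> float:
    """How many times smaller a lossy stream is than the equivalent PCM (CD quality by default)."""
    return pcm_bitrate_kbps(sample_rate_hz, bit_depth, channels) / encoded_kbps
```

CD-quality stereo comes to 1411.2 kbps, so a 320 kbps MP3 is roughly 4.4 times smaller and a 128 kbps one about 11 times smaller.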

Metadata in music files

Besides the parameters of the sound itself, we can use information stored in the audio files. This information is usually called tags, and each file format has its own tag format. We will look at MP3 files as the most common.

The easiest way to read tags is with an existing library, such as TagLib.

MP3 files store metadata in an ID3 structure, which can hold a lot of information. There are several versions of the ID3 format; we will focus on ID3v2.3, which can store a large amount of data. The useful fields include the title, year of recording, genre, BPM (beats per minute) and track length. How can these fields help us generate content? BPM is obvious: it is used in generation all the time, and we will discuss it separately. But why would we need the other fields? This is where imagination comes in.
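In practice a library like TagLib is the way to go, but to show where fields such as BPM live, here is a minimal sketch that reads the text frames of an ID3v2.3 tag. In ID3v2.3 the tag header stores its size as a "syncsafe" integer (7 bits per byte), each frame has a 10-byte header (4-byte ID, 4-byte big-endian size, 2 flag bytes), and text frames start with an encoding byte. The sketch ignores unsynchronisation, compression and encryption, handles only encodings 0 (ISO-8859-1) and 1 (UTF-16 with BOM), and skips the user-defined TXXX frame. Frames of interest include TIT2 (title), TYER (year), TCON (genre), TBPM (BPM) and TLEN (length in milliseconds).

```python
import struct

def syncsafe_to_int(raw: bytes) -> int:
    """Decode a syncsafe integer: 7 significant bits per byte, high bit always 0."""
    value = 0
    for byte in raw:
        value = (value << 7) | (byte & 0x7F)
    return value

def decode_text_frame(payload: bytes) -> str:
    """Decode an ID3v2.3 text frame body; the first byte selects the encoding."""
    encoding, text = payload[0], payload[1:]
    codec = "utf-16" if encoding == 1 else "latin-1"  # 1 = UTF-16 with BOM, 0 = ISO-8859-1
    return text.decode(codec).rstrip("\x00")

def read_id3v23_text_frames(data: bytes) -> dict:
    """Return {frame_id: text} for the text frames (T***) of an ID3v2.3 tag."""
    if len(data) < 10 or data[:3] != b"ID3":
        return {}                                   # no ID3v2 tag at the start of the file
    major, flags = data[3], data[5]
    if major != 3:
        raise ValueError(f"this sketch only handles ID3v2.3, got ID3v2.{major}")
    end = 10 + syncsafe_to_int(data[6:10])          # tag size excludes the 10-byte header
    pos = 10
    if flags & 0x40:                                # extended header present: skip it
        pos += 4 + struct.unpack(">I", data[pos:pos + 4])[0]
    frames = {}
    while pos + 10 <= end:
        frame_id = data[pos:pos + 4]
        if frame_id[0] == 0:                        # reached the padding
            break
        size = struct.unpack(">I", data[pos + 4:pos + 8])[0]  # plain big-endian in v2.3
        payload = data[pos + 10:pos + 10 + size]
        if frame_id[:1] == b"T" and frame_id != b"TXXX" and payload:
            frames[frame_id.decode("ascii")] = decode_text_frame(payload)
        pos += 10 + size
    return frames
```

Reading a file then comes down to passing its bytes to `read_id3v23_text_frames`. Note that in ID3v2.3 the TCON field may contain ID3v1-style references such as "(17)" instead of a genre name.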

Suppose we read the genre and the year. First, this information needs some processing. For simplicity, we will only consider English. MP3 tags (like other tags) are often filled in by hand, so genres come with different spellings, different punctuation and so on. It is best to bring each tag to a single canonical form so it is easier to compare with others: remove all punctuation and spaces and convert the string to lower case. Regular expressions make this easy.

After this, comparing song genres becomes much easier. Next, build a genre database from some reference source and match your tags against it. For example, here is a list of genres: musicgenreslist.com. Naturally, you should pick only the main ones.

But why go to all this trouble? Here are some (very simplified) examples.

Based on the genre, we can:
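The normalization described here fits in a single regular expression. This is a minimal sketch under the English-only assumption from the text: everything except ASCII letters and digits is removed, so non-English letters would be dropped as well. The function name is illustrative.

```python
import re

def normalize_genre(tag: str) -> str:
    """Bring a free-form genre tag to one canonical form for comparison.

    Lower-cases the string and removes everything that is not an ASCII
    letter or digit (spaces, hyphens, slashes, apostrophes, punctuation).
    """
    return re.sub(r"[^a-z0-9]", "", tag.lower())
```

With this, "Hip-Hop", "hip hop" and "HipHop" all become "hiphop" and can be looked up in the same table.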

- Change the location (rock: dark forest; electronic: space; country: desert; pop: city; etc.)

- Change the character

- Change the enemies, and so on

Example characters based on the tags rock, electro and pop.

Beyond that, anything goes! Album released in 1960-1970? Dress your character in the fashion of that period. Track length 10 minutes? Give your "musically generated snake" a long tail. The possibilities are endless, and there is always a chance to surprise the player.
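Turning tags into generation parameters can be sketched as a lookup table plus a few simple rules. Everything here is hypothetical: the `GENRE_LOCATIONS` table just repeats the examples from the list above, and the decade rounding and "one tail segment per 10 seconds" scale are arbitrary choices to tune per game.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical genre -> location table built from the examples in the text.
GENRE_LOCATIONS = {
    "rock": "dark forest",
    "electronic": "space",
    "country": "desert",
    "pop": "city",
}

@dataclass
class LevelParams:
    location: str
    outfit_era: str      # e.g. "1960s", used to pick costume assets
    tail_segments: int   # length of the "musically generated snake"

def normalize(tag: str) -> str:
    return re.sub(r"[^a-z0-9]", "", tag.lower())

def params_from_tags(genre: str, year: Optional[int], length_s: float) -> LevelParams:
    location = GENRE_LOCATIONS.get(normalize(genre), "default")
    era = f"{year // 10 * 10}s" if year else "modern"
    # Arbitrary scale: one tail segment per 10 seconds of music, at least one.
    tail = max(1, int(length_s // 10))
    return LevelParams(location, era, tail)
```

A 10-minute rock track from 1965 would give a dark forest, 1960s outfits and a 60-segment tail.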

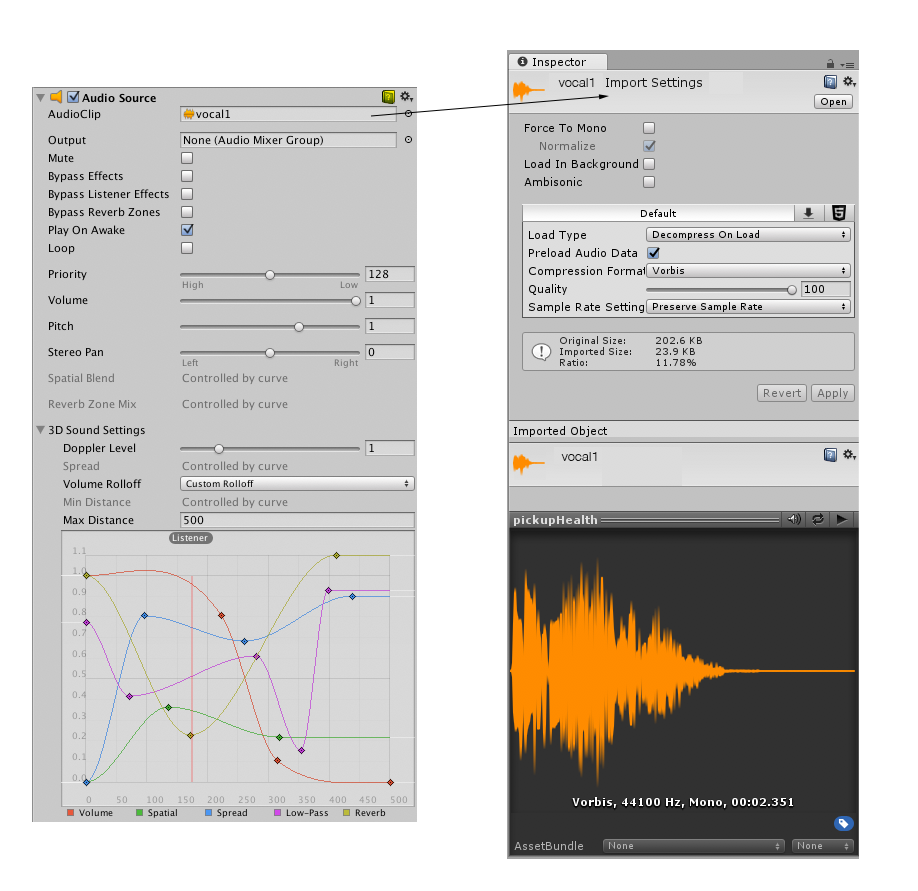

How audio is represented in Unity

Everything seems simple here...

Unity has several main audio components:

- Audio Listener acts like a microphone: it receives input from every Audio Source in the scene and plays the sound through the speakers. In most applications it makes sense to attach the listener to the main camera (the Main Camera object).

- Audio Source plays an Audio Clip in the scene. If the clip is 3D, the source plays at a given position in space and is attenuated with distance.

- Audio Clips contain the audio data that Audio Sources play. Unity supports mono, stereo and multichannel audio assets and can import .aif, .wav, .mp3 and .ogg files.

Once you dive deeper into audio work, you will need to access audio clips directly. An audio clip holds an array of samples that you can read and overwrite. With this (and a heap of math) you can determine the parameters of the playing track and create sound effects.
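In Unity the samples of a clip are read with `AudioClip.GetData`, which fills a float array with samples in the range -1 to 1, interleaved by channel (for stereo: left, right, left, right, ...), so the array needs `clip.samples * clip.channels` elements. Most analysis is simpler on a single channel, so a common first step is to average the channels. The sketch below is in Python for brevity; in a Unity project it would be a direct C# translation, and the function name is illustrative.

```python
def downmix_interleaved(samples, channels):
    """Average interleaved samples [L0, R0, L1, R1, ...] into one mono channel.

    `samples` mirrors the layout that AudioClip.GetData fills:
    floats in [-1, 1], channels interleaved frame by frame.
    """
    if channels < 1 or len(samples) % channels != 0:
        raise ValueError("sample count must be a multiple of the channel count")
    return [sum(samples[i:i + channels]) / channels
            for i in range(0, len(samples), channels)]
```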

Musical track parameters

So we have looked at some of the initial data a track provides and got our first rudimentary generation. Now for the harder part: we need to figure out which dynamic parameters of a track we can determine and how to use them in gameplay. Some of this material is based on the blog of the Parallelcube studio, who make assets and tutorials for Unreal Engine; many thanks to them.

We can identify the following tasks:

- Detecting the kick drum to use as a trigger for special effects

- Identifying the quiet and loud parts of a track

- Using this data to build a level

- Using this data to create effects

Detecting the kick drum in a track and using it as a trigger

To start determining track parameters, we need to pick some music. To make things easier, choose a track with a clear kick drum and, ideally, abrupt changes in volume. I chose Perturbator - Future Club, which you may have heard in Hotline Miami 2: Wrong Number.
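Finding the quiet and loud parts of a track can be sketched by splitting the mono signal into non-overlapping windows, computing each window's RMS level in dBFS (0 dB = full scale), and comparing it with a threshold. The 1024-sample window and the -20 dBFS threshold are arbitrary assumptions to tune per game; a real implementation would also smooth the levels so sections don't flicker between states.

```python
import math

def window_levels_db(samples, window_size=1024):
    """RMS level of each non-overlapping window, in dBFS (0 dB = full scale)."""
    levels = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        window = samples[start:start + window_size]
        rms = math.sqrt(sum(s * s for s in window) / window_size)
        levels.append(20 * math.log10(rms) if rms > 0 else float("-inf"))
    return levels

def label_sections(levels_db, threshold_db=-20.0):
    """Tag each window as 'loud' or 'quiet' relative to a fixed threshold."""
    return ["loud" if level >= threshold_db else "quiet" for level in levels_db]
```

The resulting labels (or the raw levels) can then drive level geometry, for example the slope of an Audiosurf-style road, or the intensity of effects.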

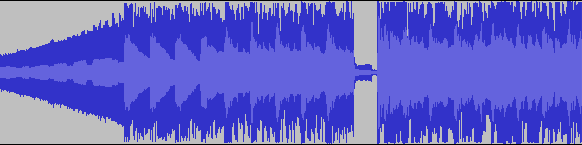

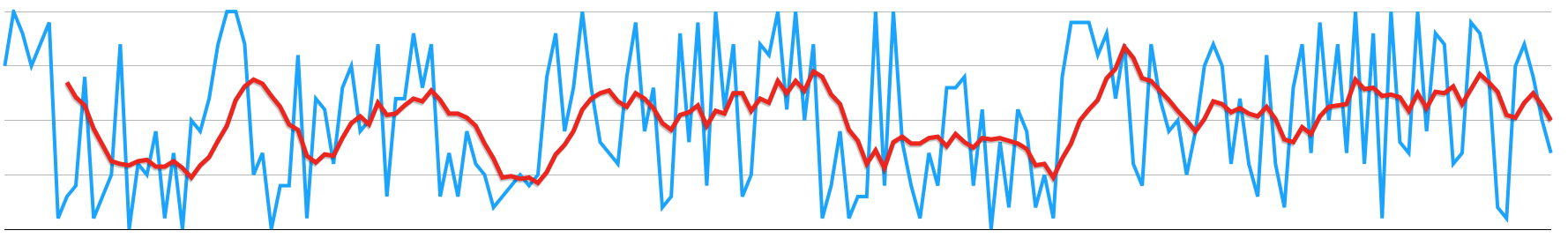

Here is a brief analysis of the opening of this track (the audio was trimmed on purpose):

- The so-called fade-in, or intro: the track gradually gets louder.

- The main percussive force, the kick drum, comes in. The kick hits are very easy to spot on the waveform. A background synthesizer also plays here, and its noise is visible on the waveform too. If the track were not compressed, this would be even clearer. (More details on compression here.)

- An additional synthesizer comes in and slightly blurs the kick on the waveform.

- A pause before the track's high point (the drop).

- The main section: besides the kick, a bass synthesizer appears, which heavily distorts the look of the waveform, although the drum can still be made out.

So our main task is to determine at which moments the kick drum sounds. This is a fairly hard problem, usually solved with dedicated audio libraries. Remember: if ready-made solutions exist and your goal is to build an effect rather than study the math, use them. If your game has a budget and a spare $25, you can buy the Beat Detection asset from the developer 3y3net. Another great option is the one by developer Allan Pichardo. If you want to understand how such algorithms work, then of course write one from scratch yourself.

As the waveform showed, the beat always stands out clearly by its level; that is its very nature. Musicians deliberately process and compress the track so that the beat is not drowned out by other sounds and has its own place in the track's frequency range. The beat is always louder than the average level (Average). If the sound exceeds a certain threshold (Threshold), then just by looking at the waveform we can confidently say: "Yes, this is a beat!"

I will save a more detailed technical description and concrete beat detection algorithms for a separate article (coming later), since the topic goes far beyond the scope of this one. In short, such algorithms first pass the sound through filters to make it easier to process and then analyze how the sound energy changes over time. Here is an example of such an algorithm (note: it is in MATLAB!).

A full mathematical description of this algorithm can be found in this article (in English): Design and implementation of a Beat Detector algorithm.
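The Average/Threshold idea can be sketched as a simple energy-comparison detector: split the signal into windows of 1024 samples, compute each window's energy, and flag a beat when it exceeds the average energy of the previous second or so by a factor C. The constants (1024 samples, 43 windows, C = 1.3) are common illustrative choices, not values taken from the assets mentioned above, and the input is assumed to be a mono list of floats.

```python
def detect_beats(samples, sample_rate, window=1024, history=43, c=1.3):
    """Return the start times (s) of windows whose energy spikes above the recent average.

    energy(window) = sum of squared samples; a beat is flagged when the current
    window's energy exceeds c times the mean energy of the previous `history`
    windows (43 x 1024 samples is about one second at 44.1 kHz).
    """
    energies = [sum(s * s for s in samples[i:i + window])
                for i in range(0, len(samples) - window + 1, window)]
    beats = []
    for k in range(history, len(energies)):
        average = sum(energies[k - history:k]) / history   # the "Average"
        if energies[k] > c * average:                        # above the "Threshold"
            beats.append(k * window / sample_rate)
    return beats
```

One long hit can trigger several consecutive windows, so a game would typically add a short cooldown before accepting the next beat. Note also that the first second of the track is never checked, because there is no history to compare against yet.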
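The filtering step can be as simple as a first-order low-pass filter that keeps the low frequencies, where the kick drum lives, before the energy is measured. This one-pole IIR filter is a deliberately minimal stand-in, not the filter bank used in the MATLAB example, and the 150 Hz cutoff is an assumption.

```python
import math

def one_pole_lowpass(samples, sample_rate, cutoff_hz=150.0):
    """First-order IIR low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    # Smoothing coefficient derived from the cutoff frequency and sample rate.
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)   # move the output a fraction `a` toward the input
        out.append(y)
    return out
```

Feeding the filtered signal into an energy-based detector makes hi-hats and synth noise far less likely to trigger false beats, at the cost of a small delay.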

, , , , . , BPM , "" . ?

Beathazard , , , -. - :


, , BPM (beats per minute ). BPM , , . (150 — 180 BMP), X5 ..

. , — float.

, . , , , . , . , .

.

, , , , ( ). , , 2D-? . :

3D, 2D . , Audiosurf , .

Elastomania, ? !?


Conclusion

, , . , , , , . , , , . , , . Unity. Follow the news!

Useful links

Source: https://habr.com/ru/post/429872/
