Backup to tape

There is a very large retail chain in Russia. Each store backs up to a tape library (the photo below shows the spare parts kit). The tapes are then collected and driven to the archive by car.

Mechanical devices: they break, fail, we go to repair. Then they go with an extended warranty, and it infuriates everyone.

')

At some point they are outdated. But the budget was exactly the new version of the tape library. At this point, the customer appeared at our doorstep with a certain amount and asked if it was possible to invent something within its framework.

We thought about the central installation of one large piece of iron, but the situation was complicated by the fact that the channels from the stores are limited to 5 Mbit / s (from the farthest).

When studying the infrastructure, it turned out that Dell EMC Data Domain was already installed in the central office. True for their office tasks. And in the office a toy for a long time and successfully used. They know how to work with it, it is compatible with their backup software and is firmly embedded in the infrastructure. Now - clarification of the problem: there are shops, ribbons, money. Locally, they should be stored in the store for up to three months, then not in the store data should be available for up to 10 years for a number of indicators. This is necessary according to the requirements of the regulator and internal policies.

There are wishes not to bother with ribbons. No special speed requirements. But I would very much like to minimize the number of admin's manual operations and reduce the chance of non-recovery. With tape this, alas, happens. One tape broke - copies of Khan, because there is no redundancy.

We decided to propose to consider alternatives to tapes: transfer backups to S3-compatible storage. Understandably, you can get up in the Amazon, MS-cloud, to us in a public cloud - anywhere, where there are object storages with certain SLA. And you can take and mount a small private cloud right in the office. This is exactly the solution that Dell EMC has: you can bring the hardware to the office and get the installation of the cloud. And Dell EMC is already a familiar vendor, so integration issues are much easier than in all other cases.

Plus, there is already a data domain that can do deduplication. And the transfer of deduplicated data to it allows you to greatly reduce traffic.

At the request of the customer, a comparative cost analysis was performed: Dell EMC at the site, our cloud, CRIC and MS.

Dell EMC ECS is a big purchase, it is necessary to extend support there, place it in the server and data center. We looked at the horizon for 10 years, and it turned out to be more expensive due to the fact that the minimum configuration is very redundant and therefore costs like a wing from an airplane, and you have to pay immediately plus then extend support (for dollars that are not clear how much will cost in 3-5 –7 years) and keep in mind the dates of end of sale and end of support. MS storage with the same redundancy is more expensive. A feature of our S3 is to automatically scatter data into three geographically separated sites. At MS the tariff for geodistribution is higher.

As a result, the customer looked and asked the pilot in our S3. Let's say, see if it works. Because vendors say: we have the support of Amazon, Azhura and Google.klaud, and no one knows whether this solution will work with us.

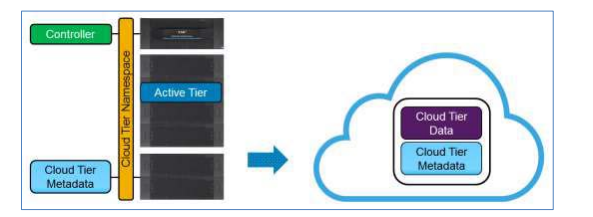

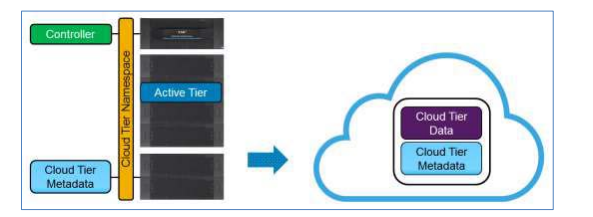

The point is to put the “hot” data on the storage, and then download the old copies from the storage to the cloud in the same format as they are on the storage.

We did the Dell EMC Data Domain testing. They have stated that they can shift their copies from themselves to S3. Dell EMC DD has earned, their support helped us with great enthusiasm, because the engineer on the other side was really happy about this task, said: cool story with Veeam!

Further, we are faced with the fact that Dell EMC has a peculiarity: this is done so that the data first add up to 14 days for a piece of metal and only then can be uploaded to the cloud. Engineers say it is deeply wired, and this is the logic of the developers: these two storage cycles are written almost in hardcode. More - you can, less - no way. It is believed that two weeks is the storage time when the user wants to recover.

If the data has already been transferred to the cloud - we returned it back, recovered from it, and they again lie for 14 days before leaving.

We would like to put the week, but all the royal army and all the cavalry could not help us.

The result - met the specified budget without tapes, took into account all the costs of support works and introduced a more reliable solution with geodistribution. Well, they pleased one engineer on the vendor's side, who was happy that there are people in this world who know how to think: this is me now about the team of administrators of the chain of stores.

Tape libraries are mechanical devices: they break and fail, and we go out to repair them. Then they move onto an extended warranty, which infuriates everyone.

At some point they became obsolete. The budget, however, covered exactly a newer version of the tape library. That is when the customer showed up on our doorstep with a fixed sum and asked whether we could come up with something within it.

We considered installing one large piece of hardware centrally, but the situation was complicated by the fact that the links from the stores are limited to 5 Mbit/s (from the most remote ones).
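To get a feel for why a 5 Mbit/s link makes a single central box awkward, here is a rough back-of-the-envelope sketch. The 200 GB backup size is a made-up figure for illustration, not the customer's, and the calculation assumes the whole link is available with no protocol overhead.

```python
def transfer_hours(size_gb: float, link_mbit_s: float) -> float:
    """Hours needed to push size_gb (decimal gigabytes) through a link of link_mbit_s."""
    size_megabits = size_gb * 1000 * 8  # 1 GB = 1000 MB = 8000 Mbit
    return size_megabits / link_mbit_s / 3600


# Hypothetical 200 GB store backup over the slowest 5 Mbit/s link.
hours = transfer_hours(200, 5)
print(f"{hours:.1f} hours (about {hours / 24:.1f} days)")  # 88.9 hours (about 3.7 days)
```

Under these assumptions, a single full copy ties up the link for several days, which is why anything sent from the stores has to be reduced first.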

Found Data Domain

While studying the infrastructure, we found that a Dell EMC Data Domain was already installed in the central office. True, it was there for office workloads. The office has been using this toy successfully for a long time. They know how to work with it, it is compatible with their backup software, and it is firmly embedded in the infrastructure. Now, to restate the problem: there are stores, tapes, and money. Backups must be kept locally in the store for up to three months; after that, the data must remain available outside the store for up to 10 years for a number of indicators. This is required by the regulator and by internal policies.

The customer would like to stop dealing with tapes. There are no special speed requirements. But they would very much like to minimize manual admin operations and reduce the chance of a failed restore. With tape, alas, that happens. One tape breaks and the copy is gone, because there is no redundancy.
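The retention requirement can be written down as a tiny rule. This is only a sketch of how I read the requirement: "three months" is approximated as 90 days, "10 years" ignores leap days, and the function and label names are invented for illustration.

```python
from datetime import date

LOCAL_DAYS = 90          # "up to three months" in the store, approximated as 90 days
ARCHIVE_DAYS = 365 * 10  # "up to 10 years" outside the store, leap days ignored


def placement(backup_date: date, today: date) -> str:
    """Return where a backup of the given age is expected to live."""
    age = (today - backup_date).days
    if age <= LOCAL_DAYS:
        return "store"
    if age <= ARCHIVE_DAYS:
        return "off-site"
    return "expired"


print(placement(date(2024, 1, 1), date(2024, 2, 1)))  # store
print(placement(date(2023, 1, 1), date(2024, 2, 1)))  # off-site
```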

Our option

We decided to propose an alternative to tape: moving the backups to S3-compatible storage. Naturally, that could be Amazon, the MS cloud, our public cloud, or anywhere else that offers object storage with a defined SLA. Or you can deploy a small private cloud right in the office. Dell EMC has exactly such a solution: you bring the hardware into the office and get a cloud installation. And Dell EMC is already a familiar vendor, so integration is much easier than in all the other cases.

Plus, there is already a Data Domain, which can deduplicate. Transferring data that has already been deduplicated greatly reduces traffic.
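To show why deduplication cuts traffic, here is a deliberately simplified sketch. It uses fixed-size chunks and SHA-256 fingerprints, which is my own simplification and not a description of how Data Domain segments data internally; the function name and the sample data are invented.

```python
import hashlib


def chunks_to_send(data: bytes, already_stored: set[str], chunk_size: int = 4096) -> list[bytes]:
    """Split data into fixed-size chunks and return only those the target has not seen."""
    new_chunks = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in already_stored:
            already_stored.add(digest)  # remember the fingerprint for later backups
            new_chunks.append(chunk)
    return new_chunks


stored: set[str] = set()
monday = b"A" * 8192 + b"B" * 4096
tuesday = b"A" * 8192 + b"C" * 4096  # only the last chunk differs from Monday

print(len(chunks_to_send(monday, stored)))   # 2: one "A" chunk and the "B" chunk
print(len(chunks_to_send(tuesday, stored)))  # 1: only the new "C" chunk
```

Each backup here is three chunks long, yet the second one sends a single chunk over the wire. On a narrow link that difference is the whole point.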

At the customer's request, we ran a comparative cost analysis: Dell EMC on site, our cloud (CROC), and MS.

Dell EMC ECS is a big purchase: you have to renew support for it and house it in a server room or data center. We looked at a 10-year horizon, and it came out more expensive, because the minimum configuration is heavily oversized and therefore costs a fortune. You have to pay up front and then renew support (in dollars, and nobody knows what they will cost in 3, 5, or 7 years), while keeping the end-of-sale and end-of-support dates in mind. MS storage with the same redundancy is more expensive. A feature of our S3 is that it automatically spreads data across three geographically separate sites. At MS, the tariff for geo-distribution is higher.

In the end, the customer looked at the numbers and asked for a pilot on our S3: let's see whether it works. Vendors say they support Amazon, Azure, and Google Cloud, but nobody knew whether the solution would work with our storage.

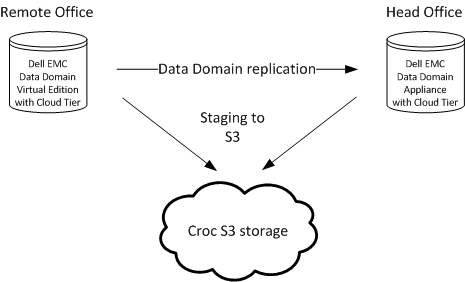

The idea is to keep the “hot” data on the storage system and then offload old copies from it to the cloud in the same format in which they are stored on it.

We tested Dell EMC Data Domain. The vendor states that it can move its copies off to S3. Dell EMC DD worked, and their support helped us with great enthusiasm, because the engineer on the other end was genuinely delighted by the task and said: cool story with Veeam!

Next we ran into a Dell EMC peculiarity: data must first sit on the appliance for 14 days, and only then can it be moved to the cloud. The engineers say this is wired in deep and reflects the developers' logic: these two storage cycles are practically hardcoded. More is possible; less is not. The assumption is that two weeks is the window in which a user is likely to want a restore.

If data has already been moved to the cloud and we recall it to restore from it, it again sits on the appliance for 14 days before going back.

We wanted to set it to one week, but all the king's horses and all the king's men could not help us.
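The behavior we observed can be modeled in a few lines. This is only a sketch of the rule as described above, not Data Domain's actual configuration interface; the function and constant names are invented for illustration.

```python
from datetime import date, timedelta

MIN_DAYS_ON_APPLIANCE = 14  # the floor described above; larger values are allowed


def cloud_move_date(landed_on_appliance: date,
                    threshold_days: int = MIN_DAYS_ON_APPLIANCE) -> date:
    """Earliest date a copy may leave the appliance for the cloud."""
    if threshold_days < MIN_DAYS_ON_APPLIANCE:
        raise ValueError(f"threshold cannot be below {MIN_DAYS_ON_APPLIANCE} days")
    return landed_on_appliance + timedelta(days=threshold_days)


# A copy written on 1 March may move on 15 March.
print(cloud_move_date(date(2024, 3, 1)))  # 2024-03-15
# Recalled for a restore on 1 June: the clock restarts from the recall date.
print(cloud_move_date(date(2024, 6, 1)))  # 2024-06-15
```

Asking for a one-week threshold, `cloud_move_date(date(2024, 3, 1), threshold_days=7)`, raises `ValueError` in this model, which is roughly how our attempt went.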

Summary

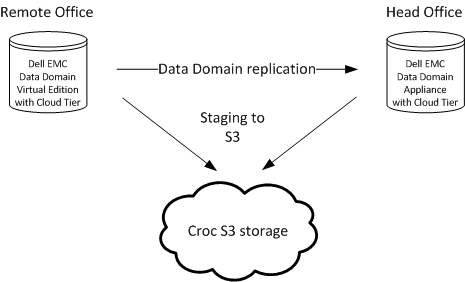

- Veeam collects backups from all the store sites and hands them to Data Domain, as usual. It knows nothing about S3.

- The Data Domain instances deduplicate traffic on site and, if necessary, can send replicas to the central office.

- Cloud Tier is built into Data Domain; it automatically moves data to S3 in our data centers.

- When a user needs to pull something out of a backup, they go to Veeam. Veeam goes to its storage system, the storage system goes to its “disk” (physical or S3) and fetches the copy. Everything is quite nicely integrated.
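The restore path in the list above can be pictured with a toy model. This is an illustrative sketch only: the class, its methods, and the backup names are invented, and plain dictionaries stand in for the appliance's disks and the S3 bucket.

```python
class TieredStore:
    """Toy model of a backup target with a local tier and a cloud tier."""

    def __init__(self) -> None:
        self.local: dict[str, bytes] = {}
        self.cloud: dict[str, bytes] = {}

    def write(self, name: str, data: bytes) -> None:
        # New backups always land on the local tier first.
        self.local[name] = data

    def move_to_cloud(self, name: str) -> None:
        # Stands in for the automatic move of an old copy to S3.
        self.cloud[name] = self.local.pop(name)

    def read(self, name: str) -> bytes:
        # The caller asks the same way regardless of which tier holds the copy.
        if name not in self.local:
            # Recall the copy from the cloud tier before restoring from it.
            self.local[name] = self.cloud.pop(name)
        return self.local[name]


store = TieredStore()
store.write("shop-042/2018-01-01", b"old backup")
store.write("shop-042/2018-11-01", b"recent backup")
store.move_to_cloud("shop-042/2018-01-01")

print(store.read("shop-042/2018-01-01"))  # b'old backup'
print(store.read("shop-042/2018-11-01"))  # b'recent backup'
```

The point of the design is that the backup application talks only to the storage system, and the tiering happens underneath it.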

The result: we stayed within the given budget without tapes, accounted for all the support costs, and delivered a more reliable solution with geo-distribution. And we made one engineer on the vendor's side happy, glad that there are people in this world who know how to think. I mean the chain's team of administrators.

Links

- Customer backups (2016)

- Backup in the data center (2012)

- My email for contact: EKorotkikh@croc.ru

Source: https://habr.com/ru/post/429676/
