Microinteractions in iOS. Yandex lecture

A few weeks ago, the Yandex office hosted a special CocoaHeads community event, more ambitious than the usual meetups. Developer Anton Sergeev spoke about the microinteraction model that UX designers commonly use and about how to put its ideas into practice, paying the most attention to animation.

- I am honored to welcome our guests. I see people I have known for a very long time, people I met recently, and people I have not met yet. Welcome to CocoaHeads.

I will talk about microinteractions. The title is a bit of clickbait: we are engineers and developers, and we will mostly talk about software, but we will start with a very humanities-oriented topic, microinteractions. We will then apply it on the technical side to learn how to design very small visual components, such as buttons, small loaders, and progress bars, more effectively and simply. These components are saturated with animation, and branching animation code often looks very complicated and is extremely hard to maintain.

But first, a small digression. Do you remember when you decided to become a developer? I remember it clearly. It all started with a table. Once I decided to learn ObjC: a fashionable, fun language, just for the sake of it, without any far-reaching plans. I found a book, Big Nerd Ranch I think, and read it chapter after chapter, doing and checking every exercise, until I reached the table view. That was when I first met the delegate pattern, more precisely its subtype, the data source. Today this paradigm seems very simple to me: there is a data source, there is a delegate, and that's it. But back then it blew my mind: how can a table be separated from completely different data? Imagine a table on a sheet of paper into which you can put an unlimited number of rows of completely abstract data. It made a huge impression on me. I realized that programming offers enormous possibilities and that it would be very interesting to use them. That is when I decided to become a developer.

Over the years I have encountered many patterns. Some are huge: they are called architectures and describe an entire application. Others are small, and dozens of them fit into one small button. It is important to understand that these patterns did not come out of thin air but from the humanities. Take the delegate pattern: delegation existed long before programming, and programming borrows such ideas from the humanities to work more efficiently.

Today I will talk about another approach that borrows yet another idea from the humanities: microinteractions.

It all started with a loader. At my previous job, before Yandex, I was asked to replicate the Google Material Design loader. There are two of them, one indeterminate and one determinate. I had to combine them into a single loader that could be both determinate and indeterminate, with strict requirements that it be extremely smooth. It could switch from one state to the other at any moment, and every transition had to be smooth and neatly animated.

I am a smart developer, so I did it all. I ended up with more than 1,000 lines of incomprehensible spaghetti code. It worked, but I got a memorable remark in code review: “I really hope nobody will ever have to modify this code.” For me that was practically a verdict of incompetence. I had written awful code. It worked great, it was one of my best animations, but the code was terrible.

Today I will try to describe the approach I discovered after leaving that job.

We will start with the most humanities-oriented topic, the microinteraction model: how it is embedded in our applications and where it is hidden. Then we will apply this model to our technical world and look at how UIView, which handles display and animation, works. In particular, we will talk a lot about the CAAction mechanism, which is tightly integrated with UIView and CALayer. Finally, we will look at small examples.

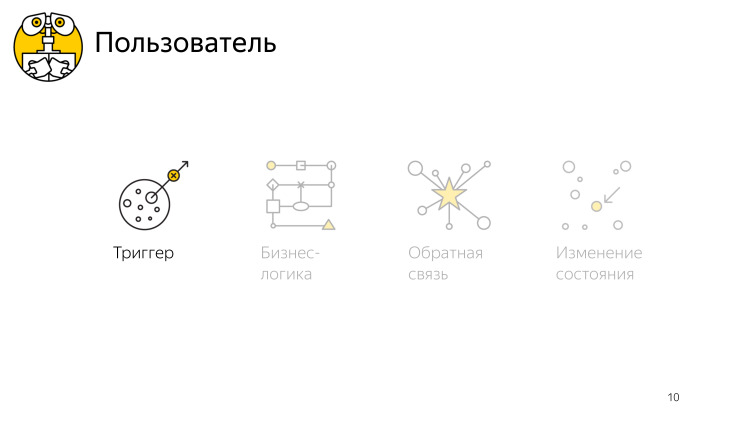

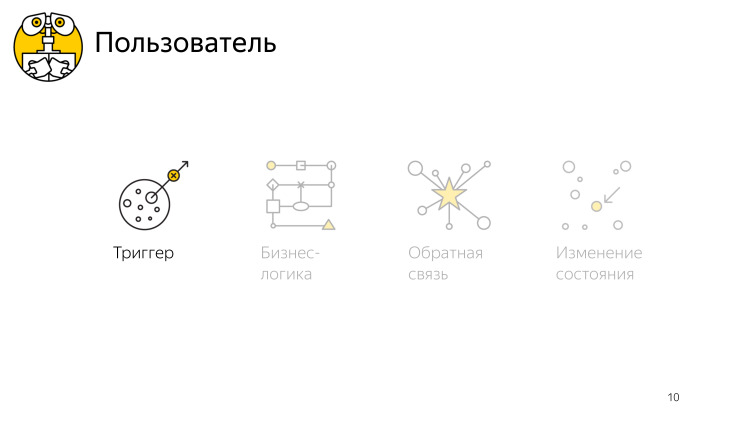

First, a definition. Apparently the author of the term really liked the “micro” prefix, but there are no macro- or nano-interactions; size does not matter. For simplicity, we will just call them interactions. It is a convenient model that describes any interaction with an application from beginning to end. It consists of four parts: a trigger; the business logic that has to run during the interaction; feedback that conveys something to the user; and a change in the application's state.
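To make the four parts concrete, here is a minimal Swift sketch that models one interaction as a value. The `Interaction` and `MapState` types are hypothetical illustrations, not UIKit API and not the speaker's code; the business-logic closure simply stands in for a real server request.

```swift
// Hypothetical model of one interaction; none of these names come from UIKit.
struct Interaction<State> {
    let trigger: String                   // what starts the interaction
    let businessLogic: (State) -> State   // the work the app does in response
    let feedback: (State) -> String       // what the user is shown afterwards

    // Runs the logic, then derives feedback from the resulting state,
    // which is also the state the app moves into.
    func run(from state: State) -> (newState: State, feedback: String) {
        let newState = businessLogic(state)
        return (newState: newState, feedback: feedback(newState))
    }
}

enum MapState: Equatable {
    case idle
    case showingPharmacies(count: Int)
}

let search = Interaction<MapState>(
    trigger: "tap on the search button",
    businessLogic: { _ in .showingPharmacies(count: 3) },  // stands in for a server request
    feedback: { state in
        if case .showingPharmacies(let count) = state {
            return "Show \(count) pharmacies and a route button"
        }
        return "Nothing to show"
    }
)

let result = search.run(from: .idle)
print(result.feedback)  // prints "Show 3 pharmacies and a route button"
```

Keeping the four parts as separate fields mirrors the point of the model: each can be discussed and changed on its own.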

I will tell one story from three different roles. I'll start with the user, the most important role in development. While I was preparing this talk, I got sick. I needed to find a pharmacy, so I opened Yandex.Maps. I open the app, I look at it, it looks at me, and nothing happens. Then I realize that I am the user, the one who tells the application what to do. I got my bearings, tapped the “Search” button, typed “pharmacy”, and tapped “OK”. The application did its internal work, found the pharmacies near me, and showed them on the screen.

I picked the right one and noticed that, in addition to the pharmacies, a special button had appeared on the screen: build a route. The application had moved to a new state. I tapped it and walked to the pharmacy. I opened the application with a goal, to find a pharmacy, and I reached it. I am a happy user.

Before I could search for anything in this application, it had to be developed. What was the UX designer thinking when inventing this flow? It all started with the need to break the silent scene where the user and the application stare at each other and nothing happens. That required some kind of trigger. Everything has a beginning, and here too we had to start somewhere.

The trigger chosen was the search button. When it is tapped, the problem has to be solved technically: request data from the server, parse the response, update and analyze the model, request the user's current location, and so on. Eventually we have the data and know exactly where all the pharmacies are.

It might seem we could stop there. After all, we solved the problem and found all the pharmacies. There is only one catch: the user still knows nothing about these pharmacies. We need to get this across to them.

We have to package our solution nicely and deliver it in a form the user will understand. Users are people, and people interact with the outside world through their senses. With current technology, only three senses are available to us as mobile developers: vision (we can show something on the screen), hearing (we can play sound through the speaker), and touch (we can make the device vibrate in the user's hand).

People have many more senses, but for now we can rely only on these three. In this case we choose the screen: we show the nearest pharmacies on top of the map, along with a list that has more detailed information about them. It would seem that everything is in place: the user has found the pharmacies and all is well.

But there is a problem. When the user opened the application, they were in a context where they did not know where the pharmacies were, and their task was to find one. Now the context has changed: they know where the pharmacies are and no longer need to search. Their next task is to build a route to the nearest pharmacy. That is why we need to show additional controls on the screen, in particular the route button. In other words, we move the application to another state in which it is ready to accept new triggers for the next interactions.

Now imagine the UX designer has come up with all this, comes to the developer, and starts describing in vivid detail how the user presses the button, how the search happens, how satisfied the user is, how we grow DAU, and so on. The developer's stack of unresolved questions overflowed back at the first sentence, the moment the button was mentioned.

He listens patiently to the end and then says: okay, that's cool, but let's discuss the button. It is an important element.

During the discussion it turns out that the button is by nature a trigger: it contains the logic for receiving messages from the system, in particular about the user's touches on the screen. A tap can start a chain of events, beginning with the button sending messages to other objects that need to start various processes, in this case requesting information from the server, and so on.

When pressed, the button changes its state: it becomes pressed. When the user releases it, it stops being pressed. In other words, it gives the user feedback so they understand what to expect from it. A button can be pressed or not pressed, enabled or disabled, and it follows different logic when moving from one state to another.
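The button can be described with the same four parts. The `ButtonModel` type below is a hypothetical sketch, not `UIButton`: touch events act as triggers, a completed tap stands in for the business logic, `state` is the state change, and `appearance` is the feedback.

```swift
enum ButtonState: Equatable {
    case normal, pressed, disabled
}

enum ButtonEvent {
    case touchDown, touchUp, disable, enable
}

// Hypothetical button model; real buttons would be UIControl subclasses.
struct ButtonModel {
    private(set) var state: ButtonState = .normal
    private(set) var tapCount = 0  // stands in for business logic

    mutating func handle(_ event: ButtonEvent) {
        switch (state, event) {
        case (.normal, .touchDown):
            state = .pressed
        case (.pressed, .touchUp):
            state = .normal
            tapCount += 1          // a completed tap triggers the logic
        case (_, .disable):
            state = .disabled
        case (.disabled, .enable):
            state = .normal
        default:
            break                  // ignore events that make no sense in the current state
        }
    }

    // Feedback: what the user sees in each state.
    var appearance: String {
        switch state {
        case .normal: return "regular"
        case .pressed: return "highlighted"
        case .disabled: return "dimmed"
        }
    }
}

var button = ButtonModel()
button.handle(.touchDown)
print(button.appearance)  // prints "highlighted"
button.handle(.touchUp)
print(button.tapCount)    // prints "1"
```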

So the same microinteraction model, made of a trigger, business logic, feedback, and a state change, describes our application at different scales: both a whole use case, such as the big search for the nearest pharmacy, and a single small button.

It is a very convenient model: it simplifies communication within the team and lets us express things in code by separating four entities: trigger, business logic, feedback, and state change. Let's see what UIKit gives us for this. UIKit does not just provide these tools, it uses them itself: when it animates UIView and its small subclasses, it relies on this mechanism and takes no other path.

We will start with how UIView fits into this model. Then we will look at what CALayer gives us for maintaining these states, and finally at the action mechanism, the most interesting part.

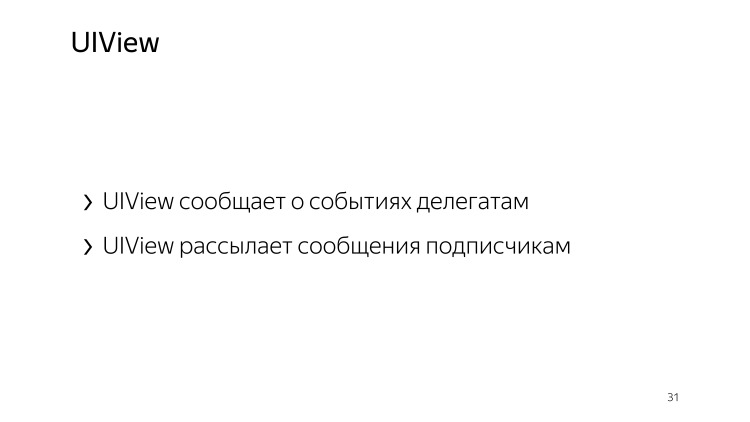

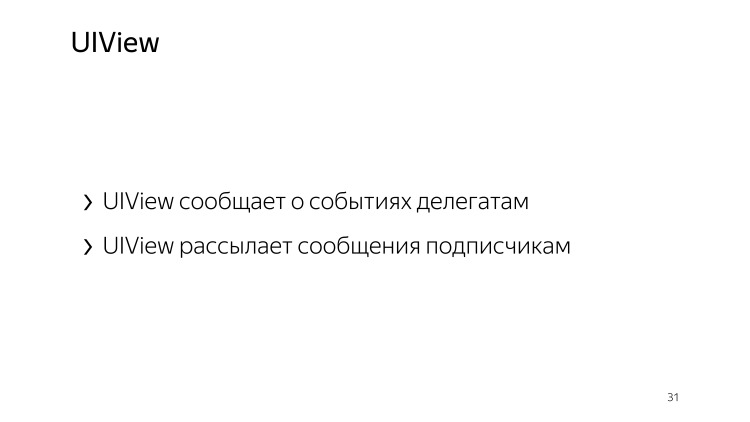

Let's start with UIView. We use it to display rectangles on the screen. But UIView cannot actually draw itself; it uses a separate CALayer object for that. What UIView is really responsible for is receiving touch messages from the system, as well as other calls through the API we define in our UIView subclasses. So UIView itself implements the trigger logic: it receives these messages from the system and starts processes in response.

UIView can also notify its delegates about events and send messages to subscribers, as UIControl subclasses do with their various control events. This is how a view's business logic is implemented. Not every view has business logic; many are purely display elements with no business logic of their own.

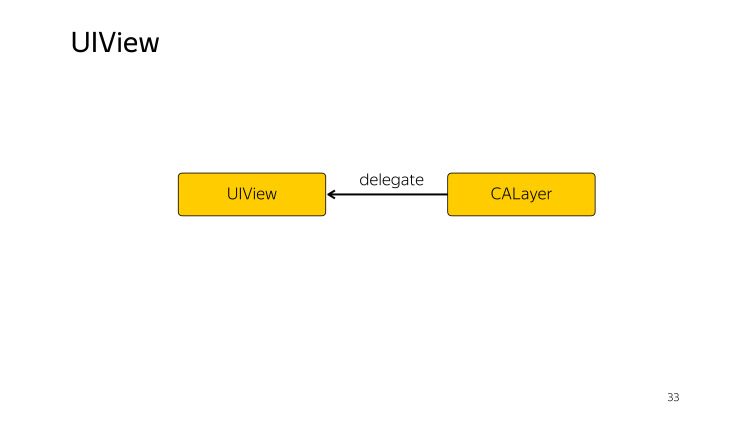

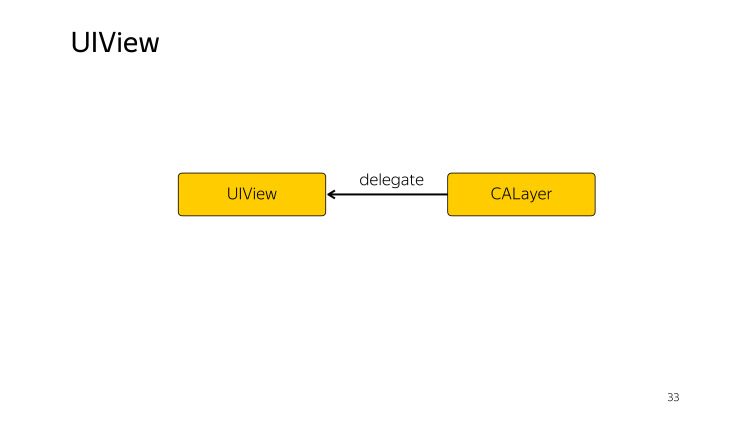

We have covered two parts: the trigger and the business logic. Where are feedback and state change hidden in UIView? To understand this, remember that UIView does not exist on its own. When it is created, it creates a backing layer for itself, an instance of CALayer or one of its subclasses.

It also makes itself the delegate of that layer. To understand how UIView uses CALayer, keep in mind that a view can exist in different states.

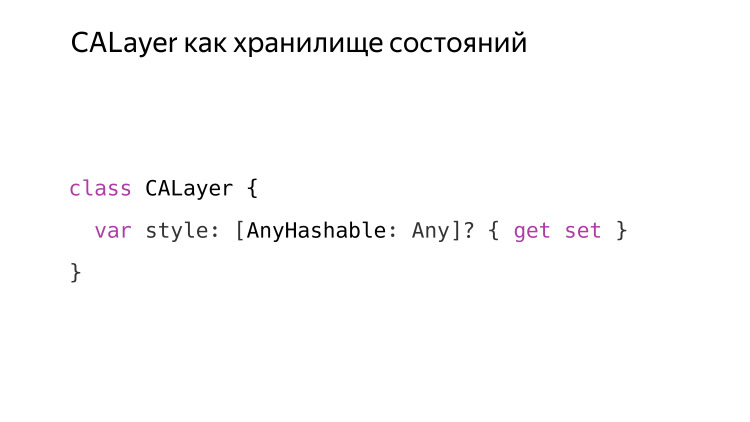

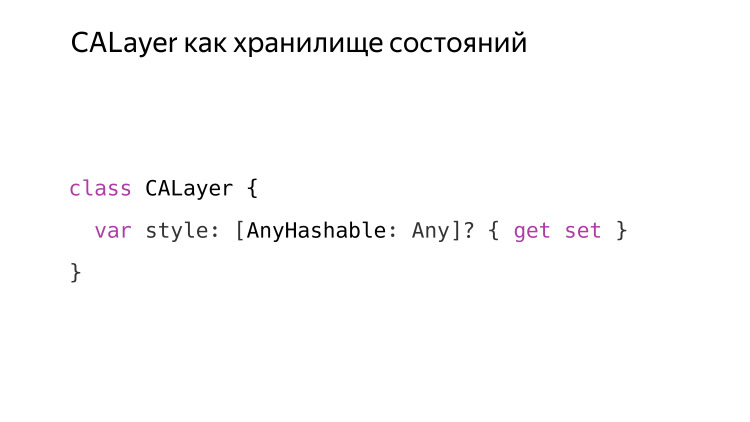

How do we tell one state from another? States differ in their data, and that data has to be stored somewhere. Let's look at what CALayer offers UIView for storing state.

So the interface between UIView and CALayer grows slightly: UIView gets an additional task, updating the storage inside CALayer.

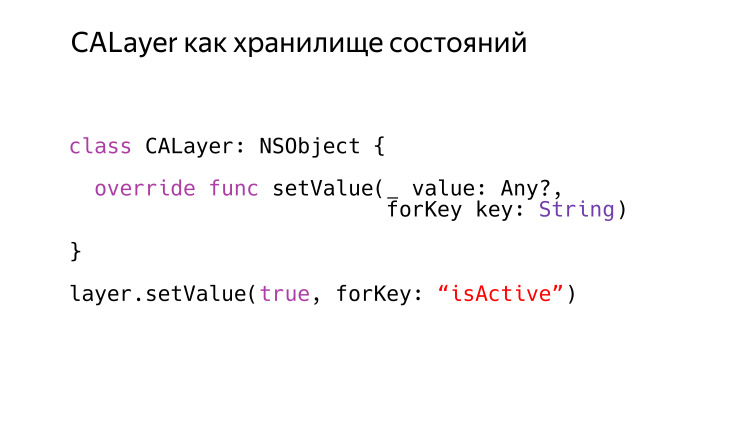

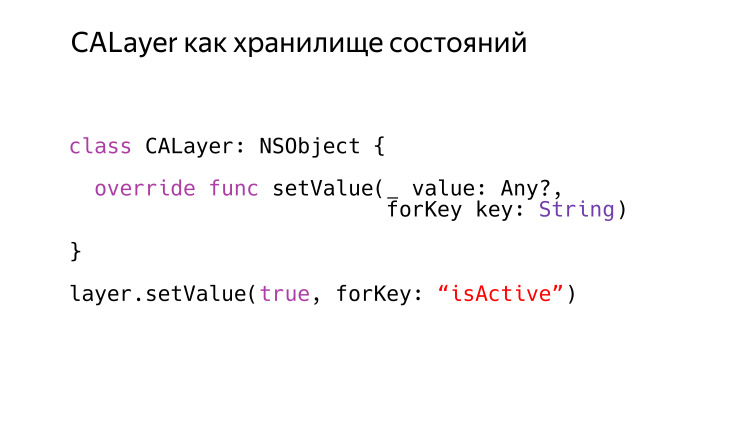

A little-known fact that few people use: CALayer can behave like an associative array, which means we can write arbitrary data to it under any key with setValue(_:forKey:).

This method exists on every NSObject subclass, but unlike most of them, CALayer does not crash when it receives a key it does not define. It stores the value correctly, and we can read it back later. This is very handy: without subclassing CALayer, we can write any data to it and read it back when needed. But this is very primitive storage, essentially a single dictionary. CALayer goes much further: it supports styles.
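A minimal sketch of using a layer as a key-value store, assuming QuartzCore is available (iOS or macOS). The keys `isActive` and `progress` are made up for the example; setting them on a plain NSObject would raise an NSUnknownKeyException, while CALayer simply stores them.

```swift
import QuartzCore

let layer = CALayer()

// CALayer stores values for keys it does not define instead of
// raising an exception the way a plain NSObject would.
layer.setValue(true, forKey: "isActive")  // hypothetical key
layer.setValue(0.42, forKey: "progress")  // hypothetical key

// Reading back goes through the same key-value coding API.
let isActive = layer.value(forKey: "isActive") as? Bool
let progress = layer.value(forKey: "progress") as? Double
print(isActive ?? false, progress ?? 0)   // prints "true 0.42"
```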

This is implemented through the style property that every CALayer has. By default it is nil, but we can assign a dictionary to it and use it.

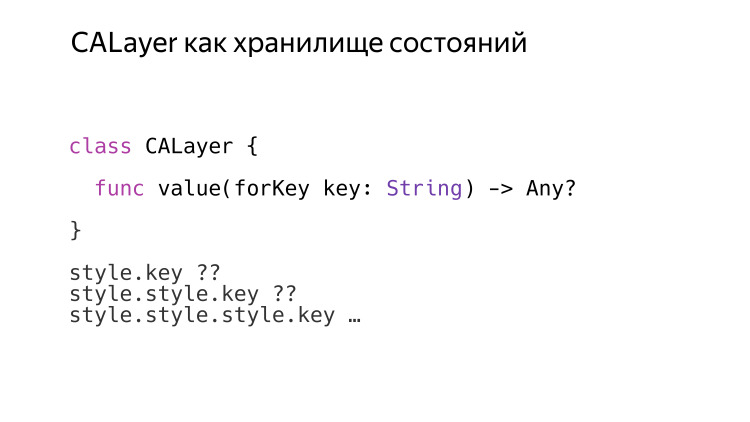

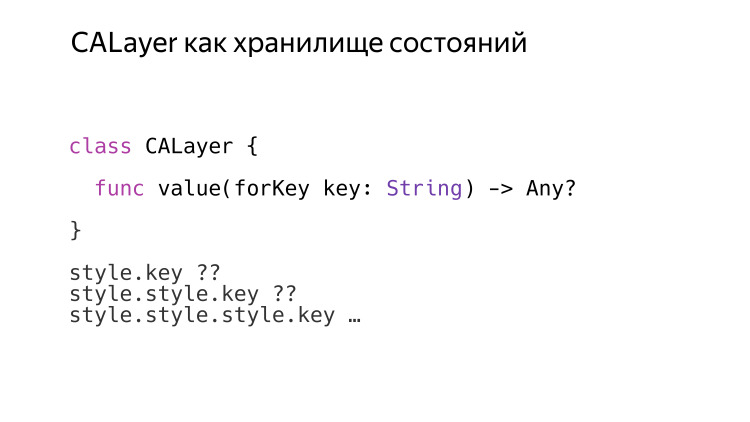

It is an ordinary dictionary and nothing more, but CALayer treats it specially when we call value(forKey:), another NSObject method: it searches the style dictionary for the requested key recursively. If we wrap an existing style dictionary into a new one under the style key and write some keys into the new one, the lookup works as follows.

It looks at the root dictionary first, then one level deeper, and so on, for as long as it makes sense. When the nested style is nil, there is nowhere further to look.
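A sketch of the style chain, assuming the lookup behaves as Apple's documentation for `style` describes (a nested `style` key forms a hierarchy, and the shallowest value wins). The keys `tintName` and `cornerStyle` are hypothetical. Pushing a state means wrapping the current style in a new dictionary under the `style` key; popping means restoring the wrapped dictionary.

```swift
import QuartzCore

let layer = CALayer()

// Base state; these are hypothetical keys, not CALayer properties.
let baseStyle: [AnyHashable: Any] = ["tintName": "gray", "cornerStyle": "round"]

// "Push" an active state by wrapping the base style under the "style" key.
let activeStyle: [AnyHashable: Any] = ["tintName": "blue", "style": baseStyle]
layer.style = activeStyle

// value(forKey:) consults the style chain; the shallowest match wins.
let activeTint = layer.value(forKey: "tintName") as? String          // found at the top level
let inheritedCorner = layer.value(forKey: "cornerStyle") as? String  // found one level down

// "Pop" the state by restoring the wrapped style.
layer.style = activeStyle["style"] as? [AnyHashable: Any]
let restoredTint = layer.value(forKey: "tintName") as? String

print(activeTint ?? "nil", inheritedCorner ?? "nil", restoredTint ?? "nil")
```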

In this way UIView can use the infrastructure CALayer provides to organize state changes and update the layer's internal storage, either through style, a very powerful storage that can simulate a stack, or through the plain associative array, which is also efficient and very useful.

That's it for storage. Now let's move on to CAAction, which I will cover in more detail.

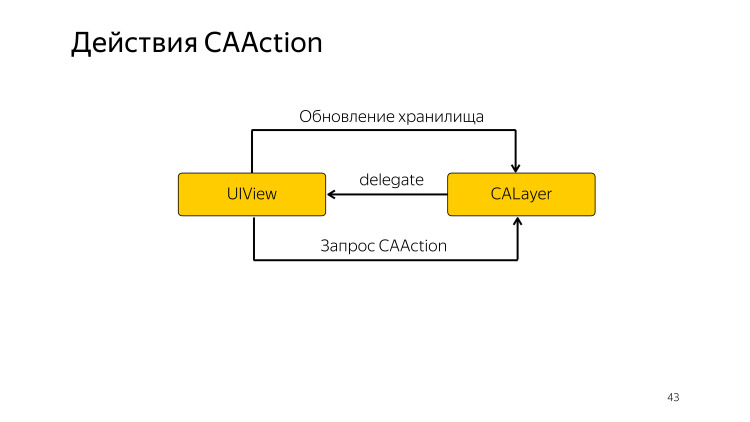

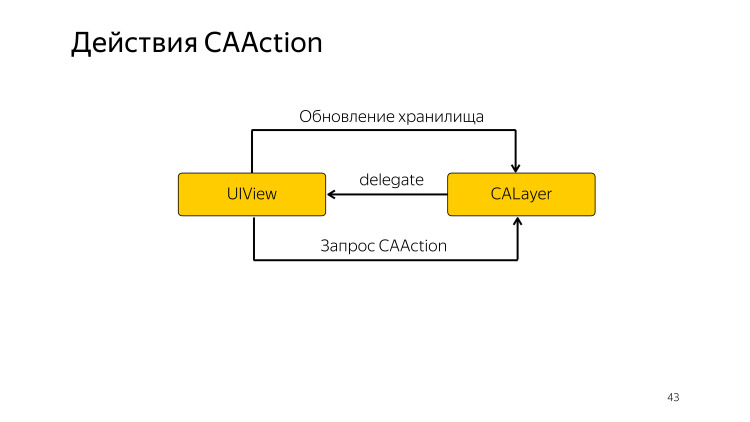

UIView gets a new task: requesting actions from CALayer. So what are actions?

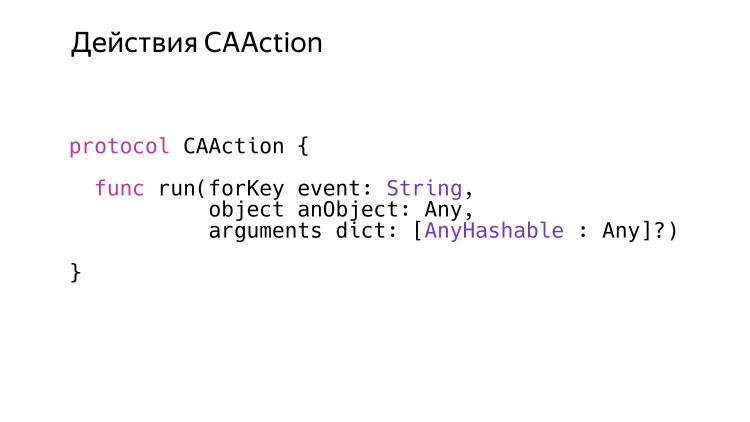

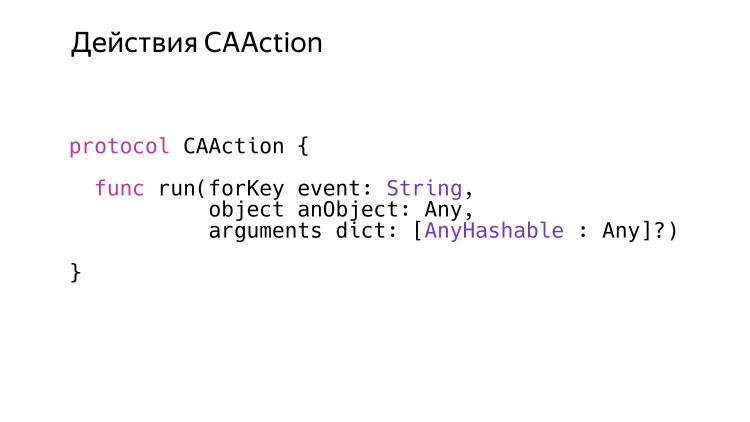

CAAction is just a protocol with a single method, run. Apple generally loves cinema themes: an action here is the “action!” in “Lights, camera, action!”, and the name was not chosen by accident. The run method launches an action that can start, run, and, most importantly, finish. The method is very generic: only the event is a string, and everything else can be of any type. In ObjC these are all id and a plain NSDictionary.

Core Animation itself has classes that conform to the CAAction protocol. The first is animation, CAAnimation. We know we can add an animation to a layer directly, but that is a very low-level operation. A higher-level abstraction over it is to run an action with the necessary parameters on the layer.

The second important case is NSNull. It does nothing useful by itself, yet it conforms to the CAAction protocol, and this is done to make looking up actions on layers convenient.
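Conforming to CAAction takes one method. `LoggingAction` below is a hypothetical action that only records the events it was run for; a real action would typically add an animation to the layer passed as `object`. The dictionary at the end shows that a custom action, a `CABasicAnimation`, and `NSNull` can all be stored in a layer's `actions`.

```swift
import QuartzCore

// Hypothetical action that only records the events it was run for.
final class LoggingAction: NSObject, CAAction {
    private(set) var events: [String] = []

    func run(forKey event: String, object anObject: Any, arguments dict: [AnyHashable: Any]?) {
        events.append(event)  // a real action would typically add an animation to `anObject`
    }
}

let layer = CALayer()
let logging = LoggingAction()
logging.run(forKey: "progress", object: layer, arguments: nil)

// CAAnimation and NSNull also conform to CAAction, so all three fit in `actions`.
layer.actions = [
    "progress": logging,
    "opacity": CABasicAnimation(keyPath: "opacity"),
    "position": NSNull(),  // NSNull means "no action" for this key
]
print(logging.events)  // prints "["progress"]"
```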

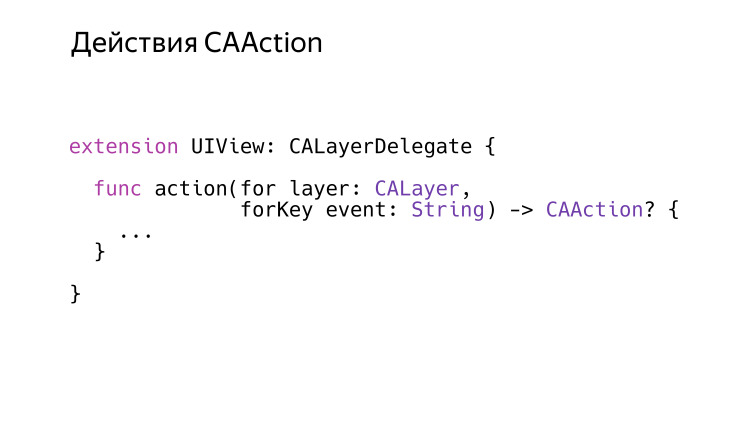

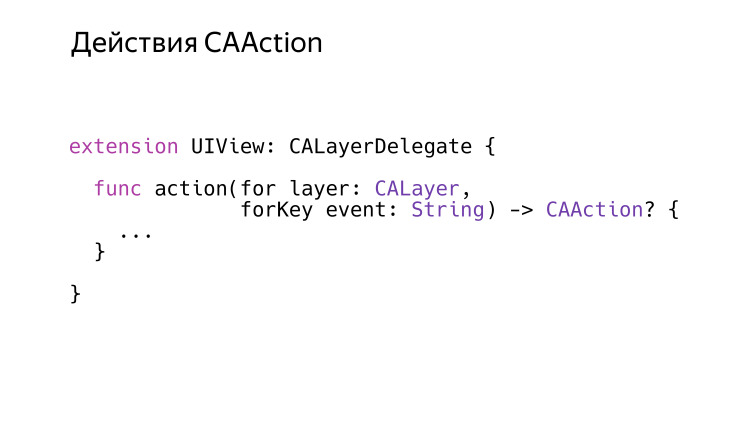

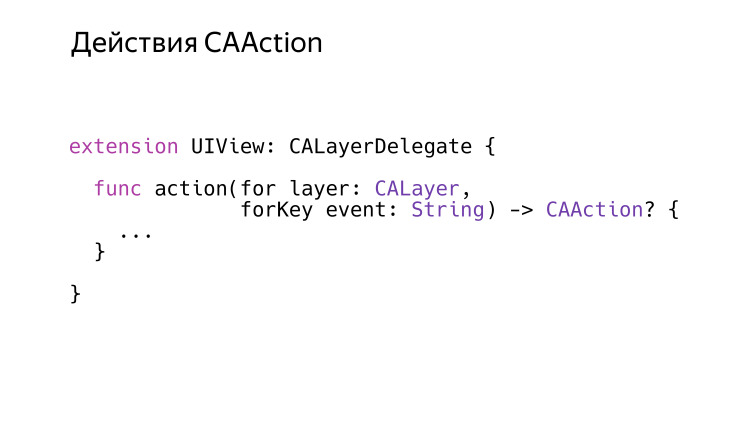

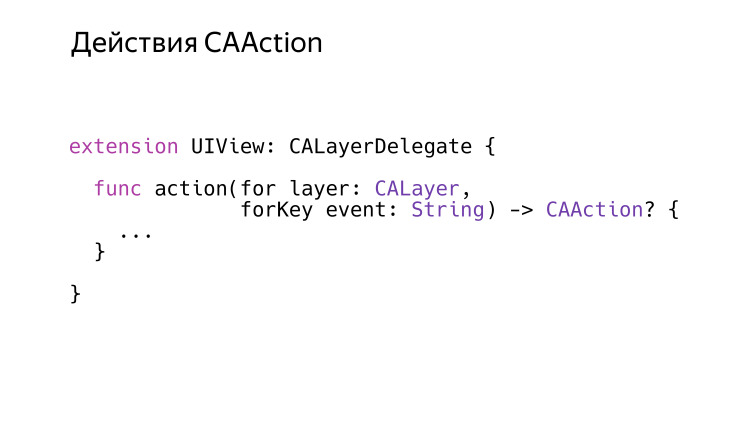

As we said, UIView is the delegate of its CALayer, and one of the delegate methods is action(for:forKey:). The layer itself has a method called action(forKey:).

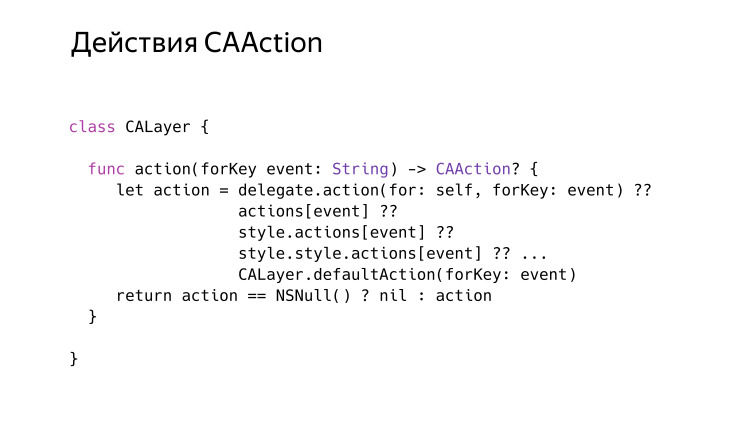

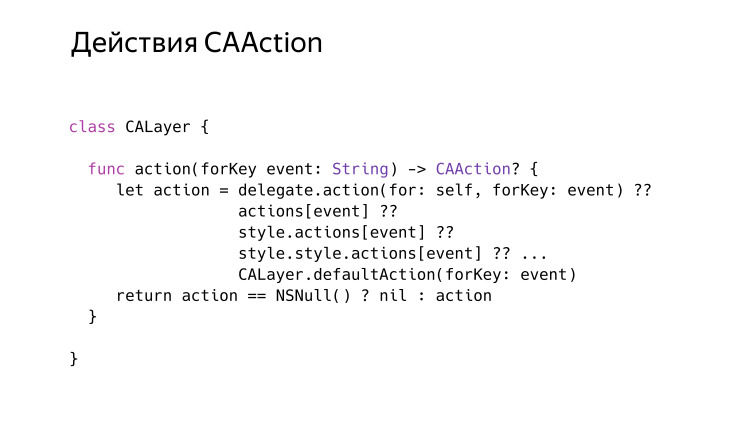

We can call it on the layer at any time, and it will return the appropriate action or nil, which is also a valid answer. The lookup algorithm is quite unusual. The slide shows it as pseudocode, so let's go through it line by line. On receiving this message, the layer first consults its delegate. The delegate can return nil, meaning the search should continue elsewhere, or a valid object that conforms to the CAAction protocol. There is one special rule: if it returns NSNull, which also conforms to the protocol, that result is later converted to nil. So returning NSNull actually means “stop searching”: there is no action, and none is needed.

But there is more. If the delegate returned nil, the layer keeps searching: first in its actions dictionary, then recursively in the style dictionary, which can also contain a dictionary under the actions key with many actions, searched recursively as well. If nothing is found there either, it calls the class method defaultAction(forKey:), defined by CALayer. That method used to return something, but in recent iOS versions it always returns nil.
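The lookup order can be checked with a small sketch. `NamedAction` and `Delegate` are hypothetical helpers, and the keys are arbitrary strings chosen so that each is answered by a different stage: the delegate, the `actions` dictionary, or an `actions` dictionary inside `style`. The key `fromDelegate` is also placed in `actions` to show that the delegate is asked first.

```swift
import QuartzCore

// Hypothetical action that only carries a label, so lookup results can be told apart.
final class NamedAction: NSObject, CAAction {
    let name: String
    init(_ name: String) {
        self.name = name
        super.init()
    }
    func run(forKey event: String, object anObject: Any, arguments dict: [AnyHashable: Any]?) {}
}

// Hypothetical delegate: answers one key itself and defers on the rest.
final class Delegate: NSObject, CALayerDelegate {
    func action(for layer: CALayer, forKey event: String) -> CAAction? {
        if event == "fromDelegate" {
            return NamedAction("delegate")
        }
        return nil  // nil means "not handled here, keep searching"
    }
}

let layer = CALayer()
let delegate = Delegate()  // CALayer.delegate is weak, so keep a strong reference
layer.delegate = delegate
layer.actions = [
    "fromDelegate": NamedAction("actions dictionary"),  // shadowed: the delegate answers first
    "fromActions": NamedAction("actions dictionary"),
]
layer.style = ["actions": ["fromStyle": NamedAction("style dictionary")]]

for key in ["fromDelegate", "fromActions", "fromStyle"] {
    let found = layer.action(forKey: key) as? NamedAction
    print(key, "->", found?.name ?? "no NamedAction")
}
```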

That's the theory. Let's see how it all applies in practice.

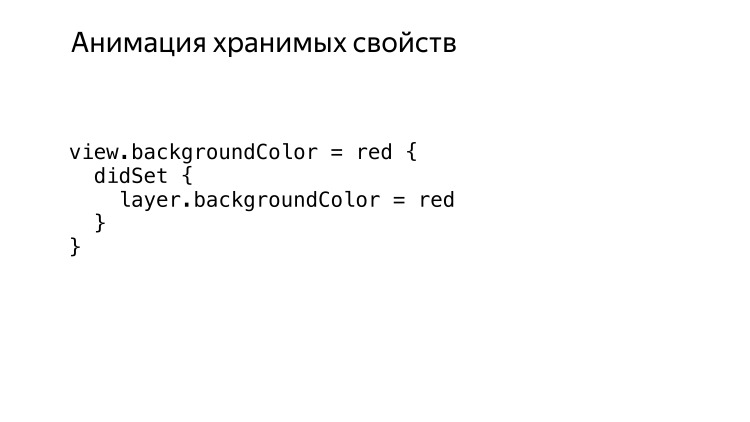

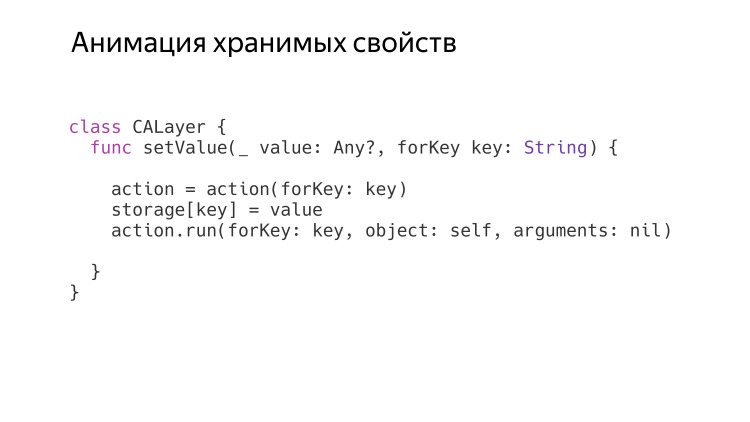

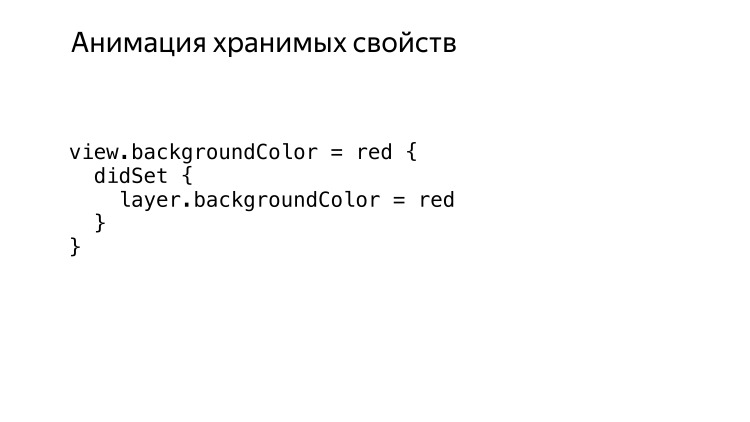

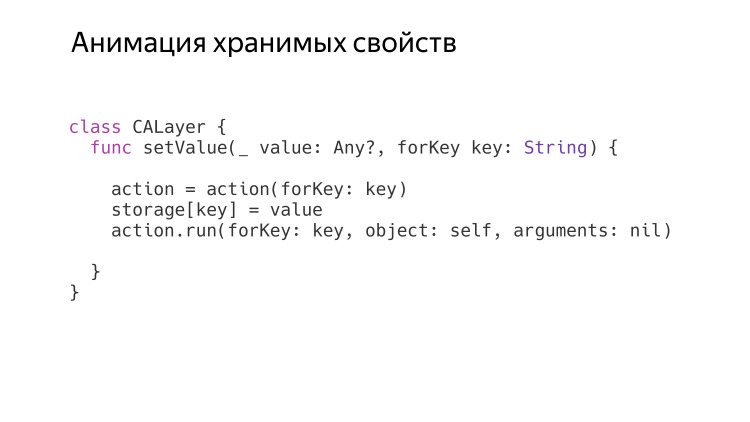

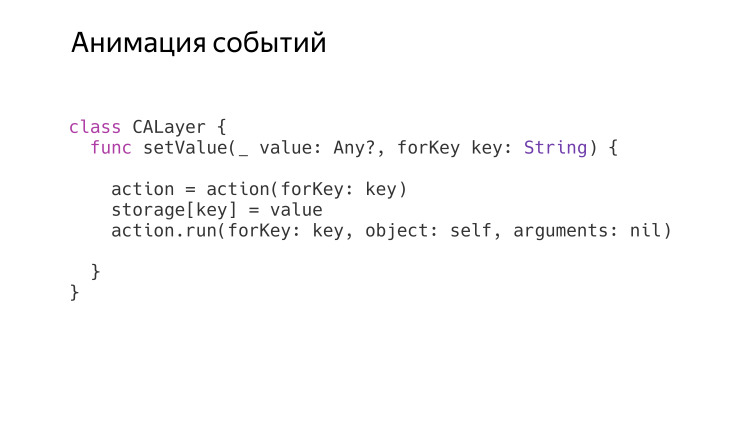

There are events, each event has a key, and actions run in response to these events. Broadly, two kinds of events can be distinguished. The first is a change to a stored property: when we write view.backgroundColor = .red, that change can, in principle, be animated.

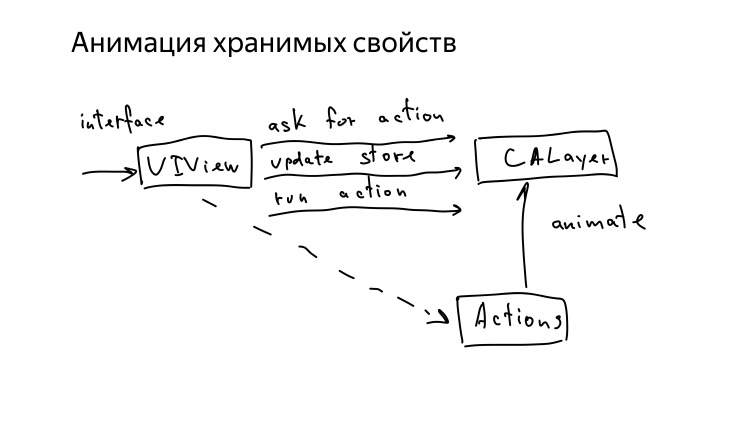

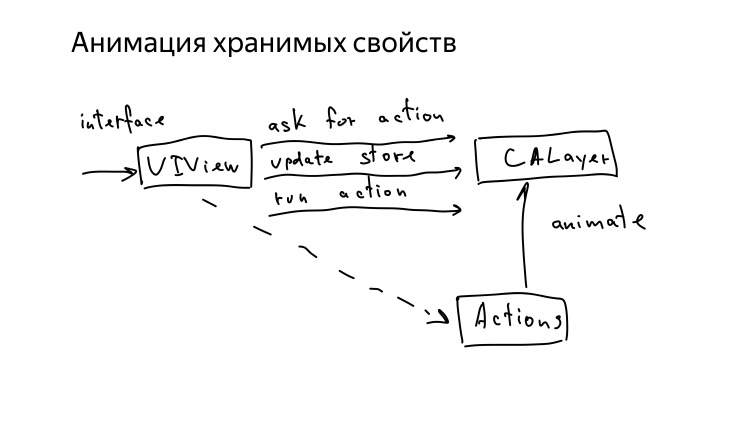

What's a talk about patterns without a diagram? I drew a couple. UIView has an interface: the one we define in subclasses, or the one through which the system delivers events. UIView's job is to request the necessary action, update the internal store, and run the action. The order is very important: first request the action, then update the store, and only then run the action.

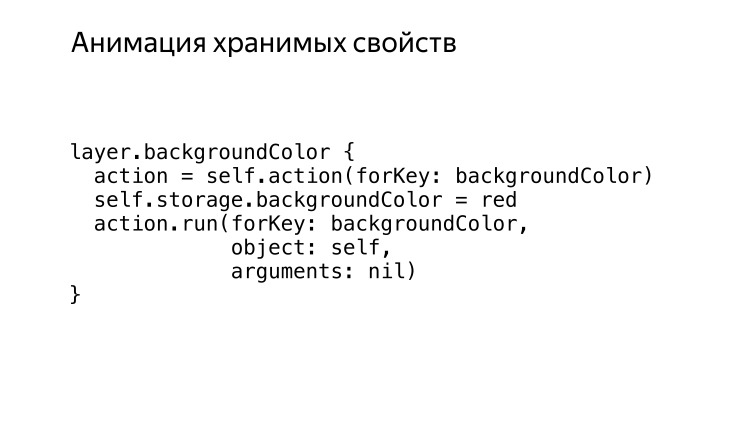

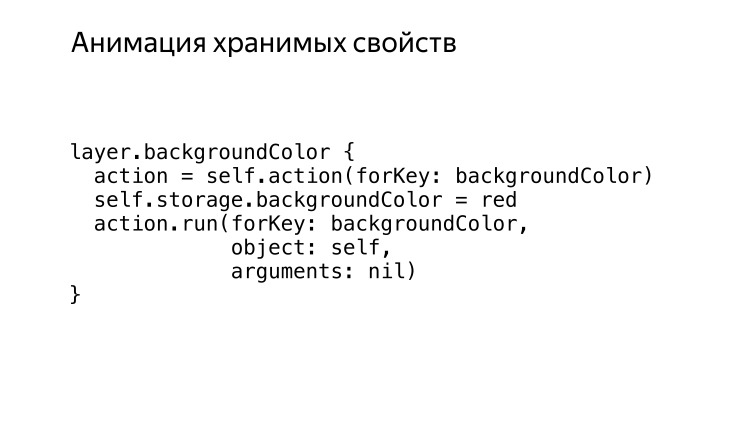

What happens when we update backgroundColor on a UIView? We know that in UIView everything related to on-screen display is essentially a proxy to CALayer. UIView may cache what it receives, just in case, but it forwards everything to CALayer, and CALayer handles all the logic. So what happens inside CALayer when it is asked to change the background color? Things are a little more complicated here.

First, it requests the action. It is important that the action is requested first: whoever creates the action can then ask the CALayer for its current values, including backgroundColor, at the moment of creation. Only after that is the store updated, and when the action receives the run command, it can consult the CALayer again and get the new values. The action therefore has both the old and the new values, which lets it create an animation if one is needed.
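Why the order matters can be shown with a simplified model in plain Swift. `ModelLayer`, `ModelAction`, and `TransitionRecorder` are hypothetical types, not Core Animation; they only mimic the sequence described above: request the action while the old value is still stored, update the store, then run the action so it can read the new value.

```swift
// Hypothetical protocol standing in for CAAction in this model.
protocol ModelAction: AnyObject {
    func run(on layer: ModelLayer, key: String)
}

// Hypothetical, heavily simplified layer: a key-value store plus an action provider.
final class ModelLayer {
    private var storage: [String: Double] = [:]

    // Stands in for the delegate's action(for:forKey:).
    var actionProvider: ((ModelLayer, String) -> ModelAction?)?

    func value(forKey key: String) -> Double? {
        storage[key]
    }

    func setValue(_ value: Double, forKey key: String) {
        let action = actionProvider?(self, key)  // 1. request the action; the old value is still stored
        storage[key] = value                      // 2. update the store
        action?.run(on: self, key: key)           // 3. run the action; it can now read the new value
    }
}

// An action that remembers the old value at creation and reads the new one when run.
final class TransitionRecorder: ModelAction {
    let from: Double?
    private(set) var to: Double?

    init(from: Double?) {
        self.from = from
    }

    func run(on layer: ModelLayer, key: String) {
        to = layer.value(forKey: key)
    }
}

let layer = ModelLayer()
layer.setValue(0, forKey: "opacity")  // no provider yet, so no action

var recorders: [TransitionRecorder] = []
layer.actionProvider = { model, key in
    let recorder = TransitionRecorder(from: model.value(forKey: key))
    recorders.append(recorder)
    return recorder
}
layer.setValue(1, forKey: "opacity")
print(recorders[0].from ?? -1, recorders[0].to ?? -1)  // prints "0.0 1.0"
```

If steps 1 and 2 were swapped, the recorder would see the new value twice and would have nothing to animate from.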

But UIView has a peculiarity: if we change backgroundColor on a UIView inside an animation block, the change is animated; outside an animation block, it is not.

There is no magic here. It is enough to remember that UIView is the CALayer's delegate and therefore implements action(for:forKey:).

If this method is called inside an animation block, it returns an action. Outside an animation block, it returns NSNull, which means nothing needs to be animated.
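Here is a sketch of that delegate behaviour using only QuartzCore, so it can run outside UIKit. `HostView` is a hypothetical stand-in for UIView, and its `isInsideAnimationBlock` flag imitates being inside an animation block; UIKit's real bookkeeping is internal and is not reproduced here.

```swift
import QuartzCore

// Hypothetical stand-in for UIView: it owns a layer and acts as its delegate.
final class HostView: NSObject, CALayerDelegate {
    let layer = CALayer()
    private(set) var isInsideAnimationBlock = false  // imitates "inside an animation block"
    var animationDuration: CFTimeInterval = 0.25     // hypothetical default

    override init() {
        super.init()
        layer.delegate = self  // like UIView, the host becomes its layer's delegate
    }

    func action(for layer: CALayer, forKey event: String) -> CAAction? {
        guard isInsideAnimationBlock else {
            return NSNull()    // "stop searching": no animation outside a block
        }
        let animation = CABasicAnimation(keyPath: event)
        animation.duration = animationDuration
        animation.fromValue = layer.value(forKey: event)  // old value, read before the store is updated
        return animation
    }

    // Hypothetical analogue of an animation block.
    func animate(_ changes: () -> Void) {
        isInsideAnimationBlock = true
        defer { isInsideAnimationBlock = false }
        changes()
    }
}

let view = HostView()
let outside = view.action(for: view.layer, forKey: "opacity")
var inside: CAAction?
view.animate {
    inside = view.action(for: view.layer, forKey: "opacity")
}
print(outside is NSNull, inside is CABasicAnimation)  // prints "true true"
```

On iOS you would not write this yourself: UIView already answers action(for:forKey:) along these lines, which is why the same assignment animates inside UIView.animate(withDuration:animations:) and not outside it.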

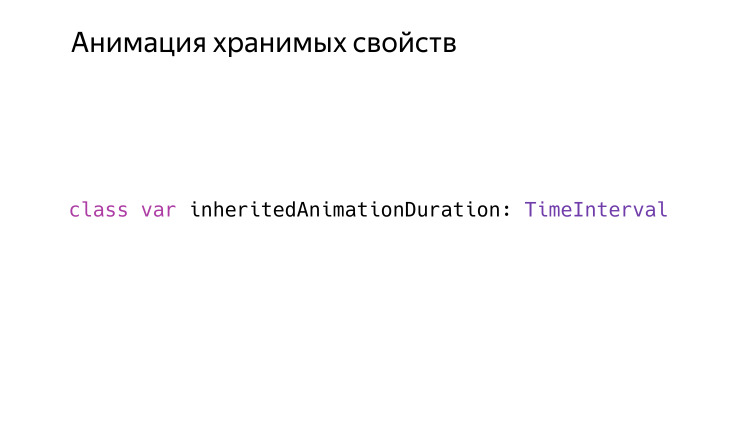

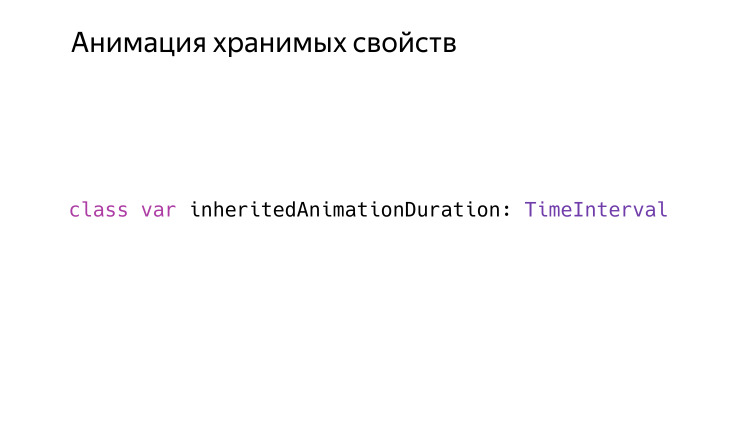

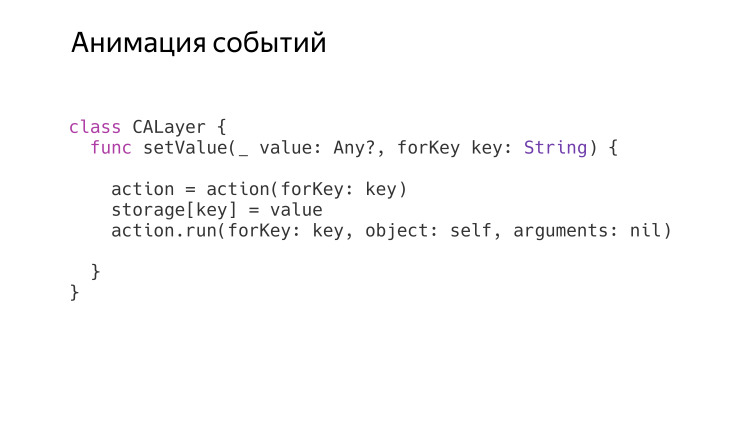

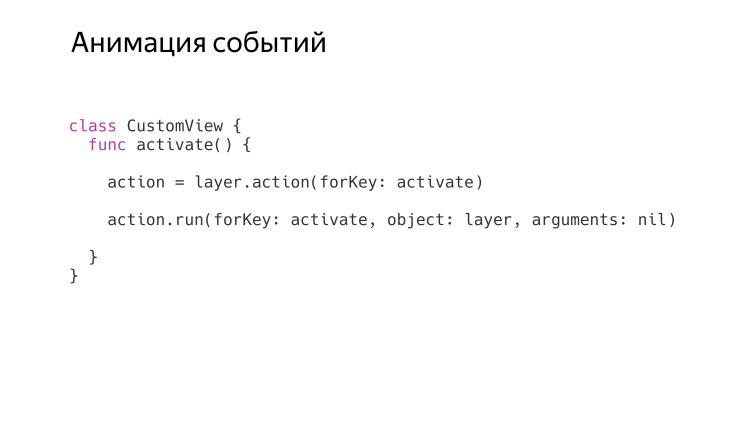

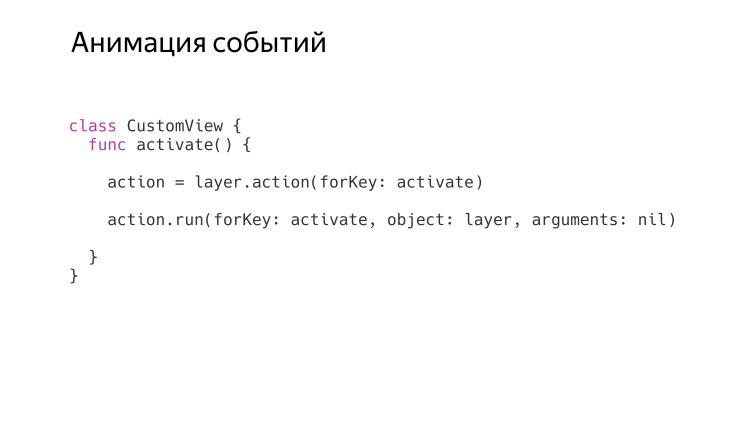

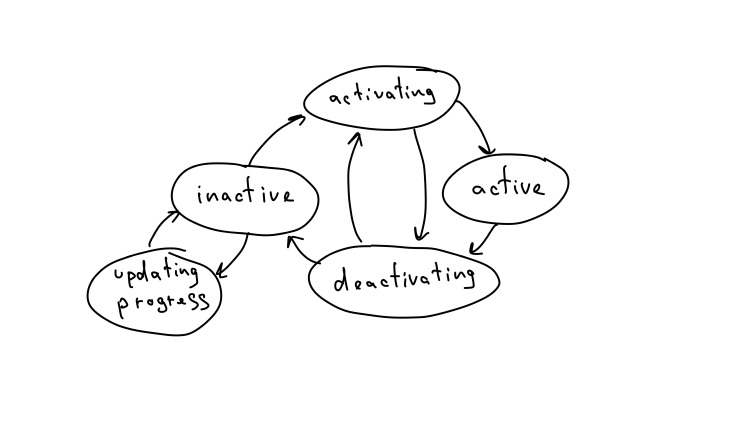

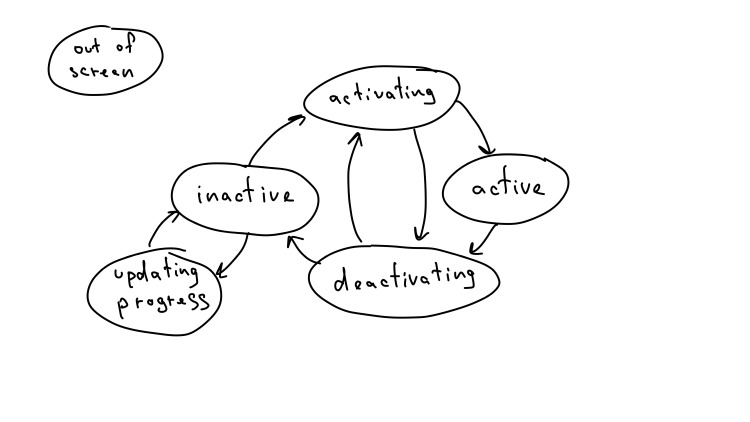

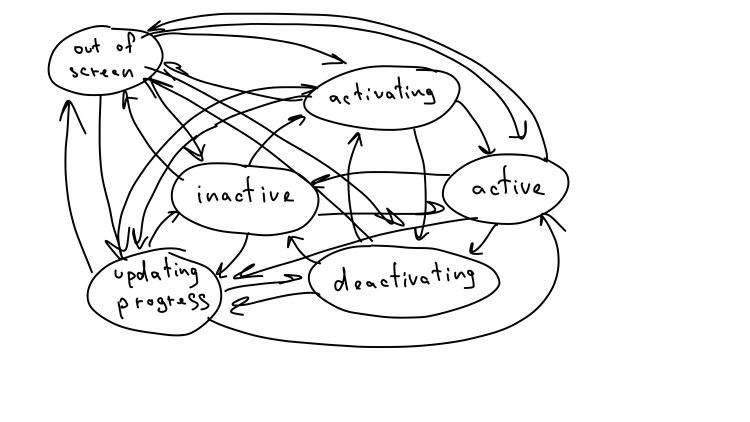

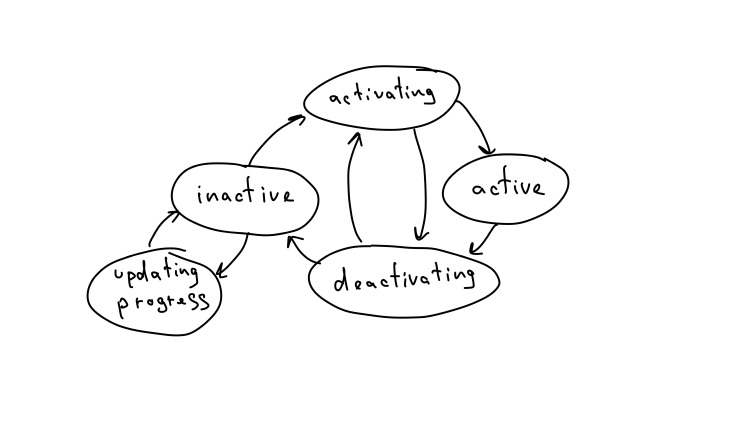

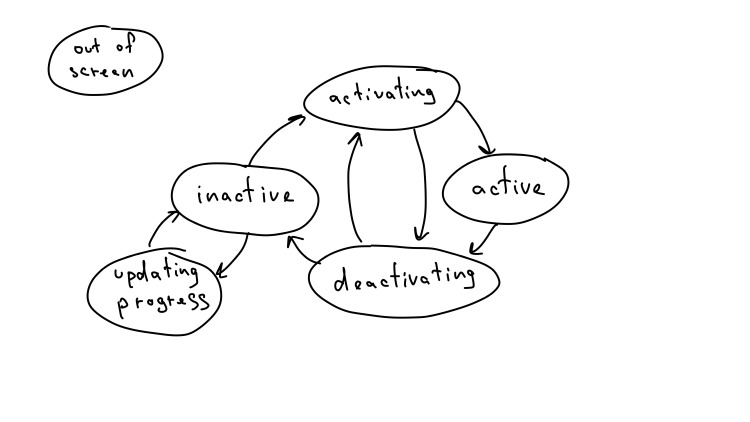

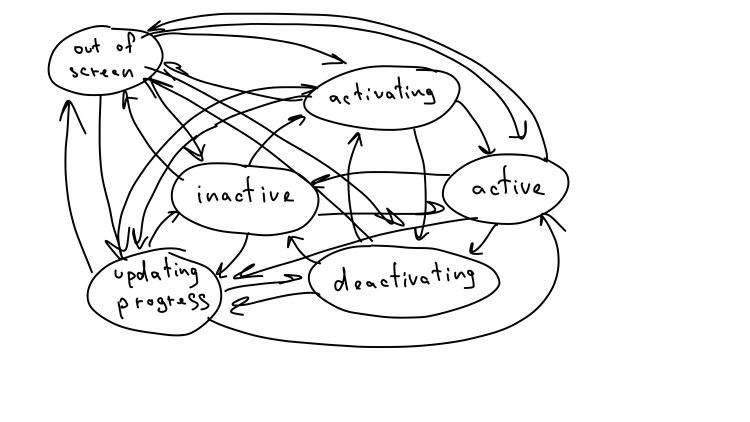

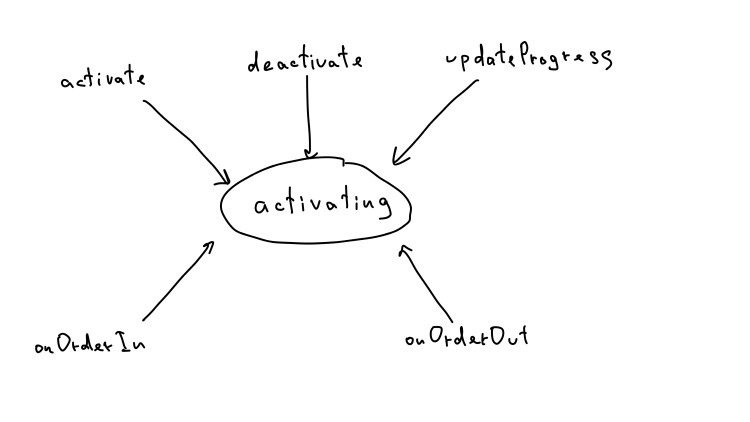

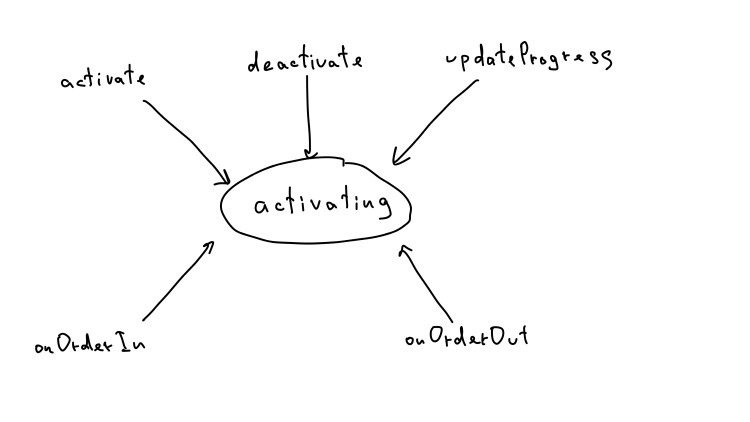

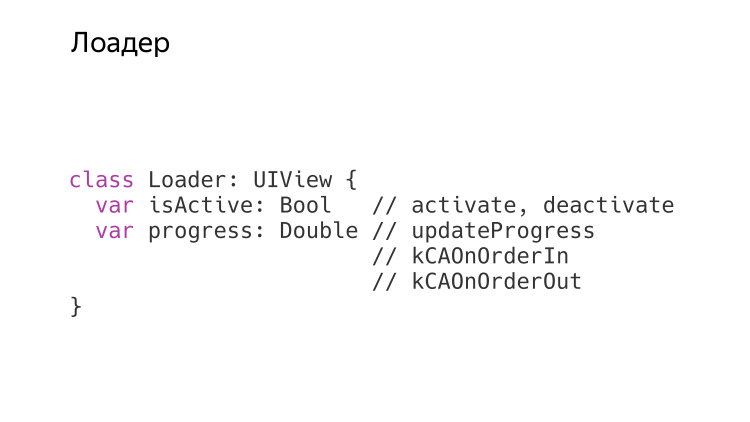

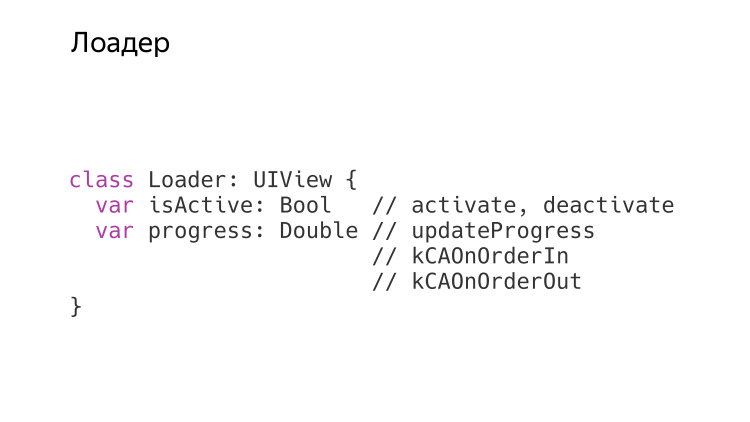

Thanks for your attention.

- It is very important for me that I was honored to meet the guests. I see here those with whom I have been acquainted for a very long time, those with whom I have recently been acquainted, and those with whom I have not yet known. Welcome to CocoaHeads.

I will tell about microinteractions. This is a little clickback - we are engineers, developers, let's talk more about the software part, but let's start with a very humanitarian topic, such as microinteraction. As a result, we will apply this humanitarian theme in the technical part in order to learn more effectively and simply to design very small visual components, such as buttons, small loaders, bars. They are saturated with animation, and the branched animation code can often look very difficult, it is extremely difficult to maintain.

')

But first, let's digress a little. Think about it, do you remember when you decided to become a developer? I remember that clearly. It all started with a table. Once I decided to learn ObjC. Fashionable language, fun, just like that, without far-reaching plans. I found the book, it seems, Big Nerd Ranch, and began to read chapter after chapter, perform each exercise, checked, read, until I reached the table. It was then that I first became acquainted with the delegate pattern, more precisely with its subtype "Data Source", the data source. This paradigm now seems very simple to me: there is a data source, delegate, everything is simple. But then it blew my mind: how can I separate a table from completely different data? You once saw a table on a piece of paper in which you can put an infinite number of lines, completely abstract data. It affected me a lot. I realized that programming, development has great opportunities, and it will be very interesting to use them. Since then, I decided to become a developer.

During the development, various patterns were encountered. Huge, which are called architectures that describe the whole application. Small, which dozens fit in a small button. It is important to understand that all these patterns came not from the air, but from the humanitarian industry. The same delegate pattern. Delegation appeared long before programming, and programming takes over all these humanitarian things to work more efficiently.

Today I will talk about another approach, which adopts another humanitarian thing. In particular - about microinteraction.

It all started with a loader. In the last work, before Yandex, I had the task to repeat the google material design loader. There are two of them, one is uncertain, the other is certain. I had a task to combine them into one, he had to be able to both certain and indefinite, but there were strict requirements for it to be extremely smooth. At any moment we can go from one state to another, and everything should be smooth and neatly animated.

I am a smart developer, I did everything. I got more than 1000 lines of incomprehensible noodle code. It worked, but I received a cool remark on the code review: “I really hope that no one will ever rule this code.” And for me it is practically incompetent. I wrote awful code. It worked cool, it was one of my best animations, but the code was terrible.

Today I will try to describe the approach that I discovered after I left that job.

Let's start with the most humanitarian theme - microinteraction models. How are they embedded and generally where are they hidden in our applications? We continue with the use of this model in our technical world. Consider how UIView, which deals with the display and animation, how it works. In particular, let's talk a lot about the CAAction mechanism, which is closely integrated into UIView, CALayer and works with it. And then we look at small examples.

First definition. Apparently, the author really liked the “micro” prefix, but there are no macro or nanointeractions, the size does not matter. For simplicity, we will simply call them interactions. This is such a convenient model that allows you to describe any interaction with the application, from beginning to end. It consists of four points: a trigger, business logic that needs to be implemented in this interaction, feedback to convey something to the user, and a change in the state of the application.

I will tell one story with three different roles. I'll start with the user, as the most important in the development. When I was preparing for the report, I got sick. I needed to find a pharmacy, and I opened Yandex.Maps. I opened the app, look at it, it looks at me, but nothing happens. I then realized that I’m the user, I’m the main user, giving instructions to what the application should do. I oriented, clicked on the “search” button, entered “pharmacy”, clicked “OK”, the application did the internal work, found the necessary pharmacies that were next to me, and brought it to the screen.

I looked for the right one and found that, in addition to the pharmacies, a special button appeared on the screen - to build a route. Thus, the application has moved to a new state. I clicked on it, and went to the pharmacy. I went to this application for some purpose - to find a pharmacy. I reached it. I am a happy user.

Before this application appeared, and I was able to search for something in it, it was first developed. What did the UX designer think when he invented this process? It all started with the need to get out of the dumb scene, when the user and the application look at each other, and nothing happens. For this was needed some kind of trigger. Everything has a beginning, and here, too, it was necessary to start somewhere.

Trigger was selected - search button. When clicking on it, it was necessary to solve the problem from a technical point of view. Request data on the server, parse the response, somehow update the model, analyze. Request the current position of users and so on. And so we got this data and know exactly where all the pharmacies are.

It would seem that it was possible to finish it. After all, we solved the problem, found all the pharmacies. There is only one problem: the user still knows nothing about these pharmacies. He needs to get it across.

Somehow pack our solution to this problem and bring it to him in a beautiful package so that he will understand it. It so happens that users are people, they interact with the outside world through their senses. The current state of technology is such that only three senses are available to us, as mobile application developers: vision — we can show something on the screen, hearing — can reproduce in the speakers, and a tactile sensation, we can push the user into the arm.

But the man is much more functional. But the current state of technology is such that at the moment we can only rely on these three. And we choose in this case the screen, show on top of the map the nearest pharmacies, and a list with more detailed information about these pharmacies. And it would seem that everything is exactly there, the user found pharmacies and everything is fine.

But there is a problem here. When the user entered the application, he was in a context in which he does not know where the pharmacies are located. And the tasks he had to find her. But now the context has changed, he knows where the pharmacies are, he no longer needs to look for them. He had the following task - to pave the route to the next pharmacy. That is why we need to display additional controls on the screen, in particular, this is the button for building a route, that is, to transfer the application to another state in which it is ready to accept new triggers for the next interactions.

Imagine, the UX-designer came up with all this, comes to the developer and begins to describe in colors how the user presses the button, how it happens, how it is searched, how the user is satisfied, how we increase the DAU and so on. The developer’s stack of unresolved issues overflowed somewhere else on the first sentence, when we first mentioned the button.

He listens patiently to everything, and in the end, when it ends, he says that, okay, it's cool, but let's discuss the button. This is an important element.

During the discussion, it turns out that the button is inherently a trigger, it contains within itself the logic by which it can receive messages from the system, in particular about user clicks on the screen. Based on this click, it can launch a chain of events, which begins with the same button sending messages to different objects about the need to start various processes, in this case request information on the server, some more.

When pressed, the button changes its state, it becomes pressed. When the user releases - ceases to be pressed. That is, it gives feedback to the user so that he understands what to expect from this button. And the button can be pressed, not pressed, be active or inactive, in different states, and go through different logic from one state to another.

Thus, we looked at the fact that the same microinteraction model, which consists of a trigger, business logic, feedback and state changes, can describe our application at various scales, as in the whole use case, a huge search for the nearest pharmacy, and in terms of a little button.

And this is a very convenient model that allows you to simplify the interaction within the team and describe programmatically, to separate four entities: trigger, business logic, feedback, and state change. Let's see what UIKit provides us to use. And not just provides, but it uses. When implementing various animations, a small component of a UIView subclass, it only uses this mechanism and does not follow a different path.

Let's start with UIView, how it fits into this model. Then we will consider CALayer, what it provides to us to maintain these states, and we will consider the mechanism of actions, the most interesting point.

Let's start with UIView. We use it to display some rectangles on the screen. But in fact, UIView cannot draw itself, it uses another CALayer object for this. In fact, UIView is responsible for receiving messages about touching the system, as well as other calls, about the API that we have defined in our subclasses of UIView. Thus, UIView itself implements the trigger logic, that is, the launch of some processes, receiving these messages from the system.

Also, UIView can notify its delegates about events that have occurred, and also send messages to subscribers, such as, for example, subclass UIControl with various events. Thus, the business logic of this UIView is implemented. Not all of them have business logic; many of them are only display elements and do not have feedback in the sense of business logic.

We looked at two points, a trigger and a business logic. And where is the feedback and state change hidden in UIView? To understand this, we must remember that UIView does not exist by itself. When creating it, it creates itself a backlayer, a subclass of CALayer.

And he appoints himself a delegate. To understand how UIView uses CALayer, it can exist in different states.

How to distinguish one state from another? They differ in the data set that needs to be stored somewhere. We will consider what opportunities CALayer provides us with for UIView so that it stores the state.

We have a slightly expanded interface, an interaction between UIView and CALayer, and UIView has an additional task - to update the storage inside CALayer.

A little-known fact that few people use: CALayer can behave like an associative array, which means that we can write arbitrary data to it on any key as follows: setValue (_: forKey :).

This method is present in all subclasses of NSObject, but unlike many others, when it receives a key that is not redefined by it, it does not fall. And it records correctly, and then we can count it. This is a very handy thing that allows, without creating subclasses of CALayer, to write down any data there and then read it in consultation with them. But this is a very primitive simple repository, in fact, one dictionary. CALayer is much more progressive. It supports styles.

This is implemented by the style property that every CALayer has. By default it is nil, but we can assign our own dictionary to it and use it.

On its own, this is just a regular dictionary. What is special is how CALayer uses it when we call value(forKey:), another method that NSObject provides: the layer searches the style dictionary for the requested value recursively. Suppose we wrap an existing style inside a new style dictionary under the "style" key and write some keys into the new one. The lookup then proceeds as follows.

First the root dictionary is checked, then the nested one, and so on, as long as there is somewhere left to look. Once the nested style is nil, there is nowhere further to search.
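The recursive lookup can be sketched in plain Swift as follows. The `lookup`, `push` and `pop` helpers are hypothetical and only model the behavior described above: the real search happens inside CALayer's value(forKey:). Wrapping the previous style under the "style" key is what lets the dictionary behave like a stack.

```swift
// Plain-Swift model of CALayer's recursive style lookup (no QuartzCore).
typealias Style = [String: Any]

// Check the current dictionary, then follow the nested "style" key,
// stopping once there is no deeper style.
func lookup(_ key: String, in style: Style?) -> Any? {
    var current = style
    while let dict = current {
        if let value = dict[key] { return value }
        current = dict["style"] as? Style
    }
    return nil
}

// "Push": wrap the previous style under the "style" key of a new one.
func push(_ overrides: Style, onto base: Style?) -> Style {
    var result = overrides
    if let base = base { result["style"] = base }
    return result
}

// "Pop": the previous style is simply the nested one.
func pop(_ style: Style) -> Style? {
    style["style"] as? Style
}

let normal: Style = ["opacity": 1.0, "cornerRadius": 4.0]
let pressed = push(["opacity": 0.5], onto: normal)

print(lookup("opacity", in: pressed) as? Double ?? -1)       // 0.5
print(lookup("cornerRadius", in: pressed) as? Double ?? -1)  // 4.0
print(lookup("opacity", in: pop(pressed)) as? Double ?? -1)  // 1.0
```

The "pressed" state overrides only opacity. Every other key falls through to the style underneath it.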

In this way, UIView can use the infrastructure CALayer provides to organize state changes by updating the layer's internal storage. It can do this either through style, a very powerful store that can simulate a stack, or through the plain associative array, which is also efficient and very useful.

That's it for storage. Now on to CAAction, which I will cover in more detail.

UIView gets another task: requesting actions from CALayer. So what are actions?

CAAction is just a protocol with a single method, run(forKey:object:arguments:). Apple loves cinema metaphors, and "action" here is the word shouted on set, as in "Lights, camera, action!". The name was not chosen by chance. The run method launches an action that can start, run, and, most importantly, finish. The method is very generic: only the event is a string, and everything else can be of any type. In ObjC these are simply id and a plain NSDictionary.
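The following is a plain-Swift model of the protocol's shape. `ActionModel` and `LoggingAction` are hypothetical names. In the SDK, the requirement is `run(forKey:object:arguments:)` (`runActionForKey:object:arguments:` in ObjC), with the object typed as `Any` (id) and the arguments as an optional dictionary.

```swift
// Plain-Swift model of the CAAction protocol's shape (no QuartzCore).
// Only the event is a String; the object and arguments are loosely typed.
protocol ActionModel {
    func run(forKey event: String, object: Any, arguments: [AnyHashable: Any]?)
}

// A hypothetical action that just records what it was asked to run.
final class LoggingAction: ActionModel {
    private(set) var log: [String] = []

    func run(forKey event: String, object: Any, arguments: [AnyHashable: Any]?) {
        log.append("run \(event)")
    }
}

let action = LoggingAction()
action.run(forKey: "backgroundColor", object: "some layer", arguments: nil)
print(action.log)  // ["run backgroundColor"]
```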

Several system classes conform to CAAction. The first is CAAnimation. We know we can add an animation to a layer directly, but that is a very low-level operation. The higher-level abstraction over it is running an action with the necessary parameters on a layer.

The second important case is NSNull. It has no behavior of its own, yet it conforms to CAAction. This is done so that layers can conveniently search for actions.

As we said before, UIView is the delegate of its CALayer, and one of the delegate methods is action(for:forKey:). The layer itself has a method action(forKey:).

We can call it on the layer at any time, and it will return either the appropriate action or nil. The search algorithm is quite unusual. On the slide it is written as pseudocode, so let's go through it line by line.

On receiving this message, the layer first asks its delegate. The delegate can return nil, which means the search should continue elsewhere. Or it can return a valid action, that is, an object conforming to CAAction. But there is a special rule: if the delegate returns NSNull, which conforms to the protocol, it is then converted to nil. So returning NSNull effectively means "stop searching: there is no action, and none is needed."

There is more. If the delegate returns nil, the layer keeps searching. It looks first in its own actions dictionary, then recursively in the style dictionary. The style can also contain a dictionary under the actions key, holding any number of actions, and that is searched recursively as well. If nothing turns up there either, the layer calls the class method defaultAction(forKey:), which CALayer defines. This method used to return something, but in recent iOS versions it always returns nil.
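The search order can be modeled in plain Swift as follows. All type names here are hypothetical stand-ins: `NullAction` plays the role of NSNull, `LayerDelegateModel` mirrors `CALayerDelegate.action(for:forKey:)`, and `LayerModel` mirrors CALayer's `actions`, `style` and `defaultAction(forKey:)`. This is a sketch of the order described above, not Core Animation's implementation.

```swift
// Plain-Swift model of CALayer's action(forKey:) search order (no QuartzCore).
protocol ActionModel {
    func run(forKey event: String)
}

// Stand-in for NSNull: a valid "action" whose meaning is "stop searching".
struct NullAction: ActionModel {
    func run(forKey event: String) {}
}

struct NamedAction: ActionModel {
    let name: String
    func run(forKey event: String) {}
}

// Mirrors CALayerDelegate.action(for:forKey:).
protocol LayerDelegateModel: AnyObject {
    func action(for layer: LayerModel, forKey event: String) -> ActionModel?
}

class LayerModel {
    weak var delegate: LayerDelegateModel?
    var actions: [String: ActionModel] = [:]
    var style: [String: Any]?

    // Mirrors CALayer.defaultAction(forKey:); returns nil here.
    class func defaultAction(forKey event: String) -> ActionModel? { nil }

    func action(forKey event: String) -> ActionModel? {
        // 1. The delegate goes first; a NullAction ends the search as nil.
        if let found = delegate?.action(for: self, forKey: event) {
            return found is NullAction ? nil : found
        }
        // 2. The layer's own actions dictionary.
        if let found = actions[event] {
            return found is NullAction ? nil : found
        }
        // 3. An "actions" dictionary inside style, then inside nested styles.
        var current = style
        while let dict = current {
            if let styleActions = dict["actions"] as? [String: ActionModel],
               let found = styleActions[event] {
                return found is NullAction ? nil : found
            }
            current = dict["style"] as? [String: Any]
        }
        // 4. Fall back to the class-level default.
        return type(of: self).defaultAction(forKey: event)
    }
}

// Hypothetical delegate that vetoes "position" and defers everything else.
final class VetoingDelegate: LayerDelegateModel {
    func action(for layer: LayerModel, forKey event: String) -> ActionModel? {
        if event == "position" { return NullAction() }
        return nil
    }
}

let delegate = VetoingDelegate()
let layer = LayerModel()
layer.delegate = delegate
layer.actions["position"] = NamedAction(name: "from actions")
let nestedActions: [String: ActionModel] = ["opacity": NamedAction(name: "from nested style")]
let innerStyle: [String: Any] = ["actions": nestedActions]
layer.style = ["style": innerStyle]

print(layer.action(forKey: "position") == nil)  // true: the delegate vetoed it
print((layer.action(forKey: "opacity") as? NamedAction)?.name ?? "none")  // from nested style
print(layer.action(forKey: "bounds") == nil)    // true: found nowhere
```

Note that "position" has an entry in `actions`, but the delegate's NullAction stops the search before that dictionary is consulted.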

That's the theory. Let's see how it all applies in practice.

There are events, events have keys, and actions run in response to them. Broadly, two kinds of events can be distinguished. The first is the animation of stored properties: when we set view.backgroundColor = .red, for example, the change can in principle be animated.

What is a talk about patterns without diagrams? I drew a couple. UIView has an interface, which is either the one we define in subclasses or the one through which it receives events from the system. UIView's job is to request the necessary action, update the internal store, and run the action it received. The order matters a great deal: first request the action, then update the store, and only then run the action.

What happens when we update backgroundColor on a UIView? We know that everything in UIView related to on-screen display is really a proxy to CALayer. UIView caches whatever it receives, just in case, but it forwards everything to CALayer, which handles all the logic. And what happens inside CALayer when it is asked to change the background color? Here things get a bit more complicated.

First, the layer asks for an action, and it is important that the action is requested first. This lets the action read the layer's current values, including backgroundColor, at the moment it is created. Only then is the store updated. When the action later receives the run command, it can query the layer again and get the new values. The action therefore has both the old and the new values, which lets it build an animation if one is needed.
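The ordering can be shown with a small plain-Swift model. `FakeLayer` and `TransitionAction` are hypothetical names, and the "animation" is just a log line. The point is that the action captures the old value when it is created and reads the new value when it runs.

```swift
// Plain-Swift model of the order described above (no QuartzCore):
// 1) request the action, 2) update the store, 3) run the action.
final class FakeLayer {
    private(set) var store: [String: Double] = ["opacity": 1.0]
    var log: [String] = []

    func value(forKey key: String) -> Double { store[key] ?? 0 }

    func set(_ newValue: Double, forKey key: String) {
        let action = makeAction(forKey: key)  // 1. still sees the old value
        store[key] = newValue                 // 2. update the storage
        action.run(on: self)                  // 3. now sees the new value
    }

    private func makeAction(forKey key: String) -> TransitionAction {
        TransitionAction(key: key, from: value(forKey: key))
    }
}

// Hypothetical action: remembers the old value, reads the new one on run.
struct TransitionAction {
    let key: String
    let from: Double

    func run(on layer: FakeLayer) {
        let to = layer.value(forKey: key)
        layer.log.append("animate \(key) from \(from) to \(to)")
    }
}

let layer = FakeLayer()
layer.set(0.3, forKey: "opacity")
print(layer.log)  // ["animate opacity from 1.0 to 0.3"]
```

If the store were updated before the action was requested, `from` and `to` would both be 0.3, and there would be nothing to animate.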

But UIView has one peculiarity. If we change backgroundColor inside an animation block, the change is animated. If we change it outside an animation block, it is not.

There is no magic here. It is enough to remember that UIView is the delegate of its CALayer and implements action(for:forKey:).

If this method is called inside an animation block, it returns an action. Outside an animation block, it returns NSNull, which means nothing needs to be animated.
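The following is a plain-Swift sketch of that decision. The names are hypothetical: `ViewModel.animate(withDuration:_:)` only stands in for UIView's animation block, and `NullAction` for NSNull. In UIKit itself, the duration of the enclosing block is exposed through the read-only class property `UIView.inheritedAnimationDuration`. The model simply tracks it in a private variable.

```swift
// Plain-Swift model (no UIKit) of how a view answers the layer's action request.
protocol ActionModel {
    func run(forKey event: String)
}

// Stand-in for NSNull: "do not animate, stop searching".
struct NullAction: ActionModel {
    func run(forKey event: String) {}
}

// Hypothetical action that would build an animation with the block's duration.
struct AnimateAction: ActionModel {
    let duration: Double
    func run(forKey event: String) {}
}

final class ViewModel {
    // Duration of the enclosing animation block, or nil outside any block.
    private var currentDuration: Double?

    // Hypothetical stand-in for UIView.animate(withDuration:animations:).
    func animate(withDuration duration: Double, _ changes: () -> Void) {
        let previous = currentDuration
        currentDuration = duration
        defer { currentDuration = previous }  // restore after the block runs
        changes()
    }

    // Mirrors the CALayerDelegate method action(for:forKey:) as UIView uses it.
    func action(forKey event: String) -> ActionModel {
        if let duration = currentDuration {
            return AnimateAction(duration: duration)
        }
        return NullAction()
    }
}

let view = ViewModel()
let outside = view.action(forKey: "backgroundColor")
var inside: ActionModel?
view.animate(withDuration: 0.25) {
    inside = view.action(forKey: "backgroundColor")
}
print(outside is NullAction)                      // true
print((inside as? AnimateAction)?.duration ?? 0)  // 0.25
```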

, UIView , . . ?

, . UIView , read only, , inheritedAnimationDuration. . , . .

? duration, . , run, , .

, CAAction, backgroundcolor opacity, UIView . , , , , . . setValue forKey , , , , , , .

, , , , .

— . , «» «» . .

.

. , , , . UIView CALayer, , , CAAction, , , .

, , , . , . .

. - .

CAAction, , . , , , .

, , , home, . , . , .

. - .

, - , - . , , . , .

, , .

, CAAction , . , UIControl, - , - , , , - .

, . , UIView -, , - , , , .

— . .

? . , . — . activating, inactive active. , , .

. , onOrderIn onOrderOut. , UIKit, .

, -, — , .

. UIView , : isActive progress. . CAAction, .

, . , , 30 CAACtion, . , 30 , NSNull. 15 15 . . — . , — .

, . .

. , , : , -, .

, , . . , UIKit , . . , , , . Thanks for attention.

Source: https://habr.com/ru/post/429580/
