The Future of VR Video: Google's VR180


In April of this year, Google announced the technical details of a new VR video format, VR180. The format specification was published in Google's GitHub repository, camera manufacturers were invited to build dedicated cameras, and YouTube added support for the format.

The basic idea is quite simple. In "ordinary" VR video, that is, 360-degree video, you can turn your head in any horizontal direction, yet the main action usually takes place on one side, while the whole sphere is still transmitted to the device, so redundant information is transmitted and stored. In the overwhelming majority of cases a full 360-degree view is unnecessary: 180 degrees are enough to achieve the same effect. The freed "second half" of the frame is then used for a second view, which yields stereo.
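The pixel-budget argument can be checked with simple arithmetic. The sketch below assumes, purely for illustration, a 3840-pixel-wide frame, a monoscopic equirectangular layout for 360-degree video, and a side-by-side layout for VR180 in which each eye gets half the width for its 180 degrees; these layouts are assumptions, not figures taken from the VR180 specification.

```python
def pixels_per_degree(width_px: int, fov_deg: float) -> float:
    """Horizontal angular resolution when width_px pixels span fov_deg degrees."""
    return width_px / fov_deg

FRAME_WIDTH = 3840  # hypothetical 4K-wide frame (assumption)

# 360-degree mono: the whole width covers the full horizontal circle, one view for both eyes.
mono_360 = pixels_per_degree(FRAME_WIDTH, 360)

# VR180 side-by-side stereo (assumed layout): each eye gets half the width for 180 degrees.
per_eye_180 = pixels_per_degree(FRAME_WIDTH // 2, 180)

print(f"360 mono:  {mono_360:.2f} px/deg, 1 view")
print(f"VR180 SBS: {per_eye_180:.2f} px/deg per eye, 2 views")
```

Under these assumptions both layouts give about 10.67 pixels per degree horizontally: VR180 spends the pixels that 360-degree video devotes to the rear half of the sphere on a second view instead.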

Thus, the proposed format gives an even greater sense of immersion than 360-degree video, is cheaper to produce, easier to shoot, and has no stitching problems.


How is this possible, and what exactly did Google propose?

If you are interested in the VR video of the near future, read on!

Introduction to VR180

First, the good news.

VR180 is noticeably easier to shoot than 360-degree video. High-quality 360-degree rigs use up to 17 cameras (see the Xiaomi example below), which brings many problems: huge working files, partial failures, overheating, unstable camera focus, and so on. Meanwhile, for ordinary users the best 360-degree cameras turned out to be those with two fisheye lenses (one, two, three).

Source

The new format is also shot with two cameras. This noticeably reduces the cost of the end device. Shooting technique is also greatly simplified, since all the techniques of working with a conventional camera remain applicable (only the result is potentially more spectacular and immersive). For the format to succeed, it is important that any homemaker or student can use it easily, so the simpler, the better.

Furthermore, VR180 eliminates the problems of so-called stitching: highly noticeable artifacts where images from two cameras are joined together. Not long ago it seemed that stitching problems would soon be solved. Alas, they turned out to be much harder. If a fast-moving or translucent object crosses the seam, current video processing algorithms cannot solve the problem automatically. Automatic matting algorithms are of course improving, but even Deep Learning methods do not guarantee artifact-free results. VR180 involves no stitching, so these problems do not arise in principle.

Finally, 360-degree video is almost always monoscopic ("flat"). From the binocular point of view, the picture is perceived as hanging on a screen in front of the eyes, which often weakens the "wow effect" and the sense of immersion, whereas VR180 is a stereo format from the start and by default.

All of this looks very promising for the format's success. As a result, manufacturers quite actively began producing cameras designed specifically for VR180, for example:

The fact that Xiaomi has entered the VR180 market is certainly encouraging.

There are also solutions for assembling a VR180 camera from two ordinary cameras with fisheye lenses. Sometimes printing or buying a mount is enough to start experimenting (below are examples for GoPro, compact point-and-shoot cameras, and Sony DSLRs):

Source

Source: http://products.entaniya.co.jp/en/products/equipment-for-3d-stereo-180-vr/

Amusing hybrid solutions have also appeared, where one camera can shoot both VR180 and 360-degree video (this clamshell design shoots 360 when folded and VR180 when unfolded):

Source

New horizons have also opened up for experiments in shooting VR video (pictured: the YI Horizon VR180 camera from Xiaomi):

The number of new VR180 cameras is very large, which noticeably helps the new format gain popularity.

VR180 implementation

Today, companies are trying to bring VR everywhere they can, to make the format more popular and widespread and, most importantly, cheap. Google is no exception. Everyone remembers its budget solution for bringing virtual reality headsets (Head Mounted Displays, HMDs) to a wide audience: Google Cardboard.

Its functionality, of course, cannot compare with expensive HMDs, but the main goal was achieved: making VR more accessible and turning any smartphone into a VR headset for less than $1 in extra cost.

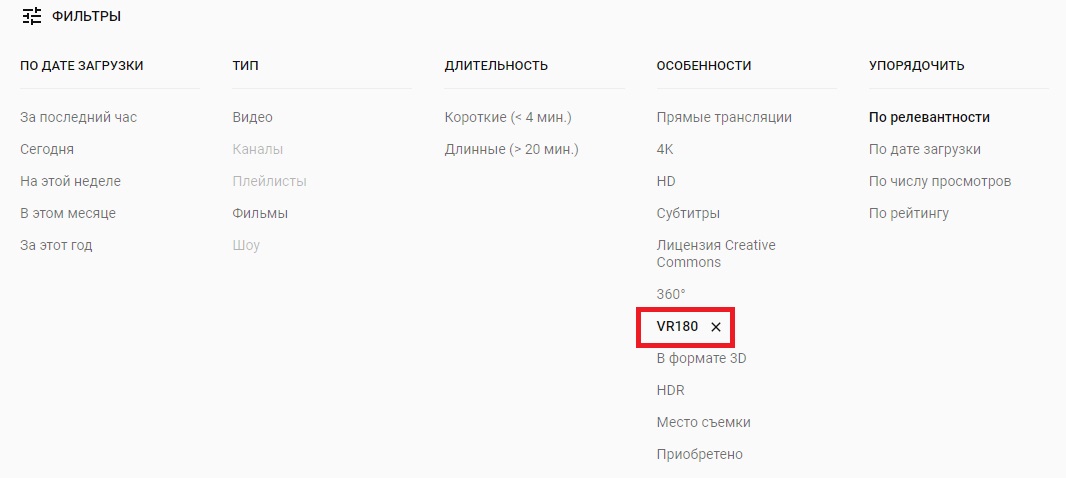

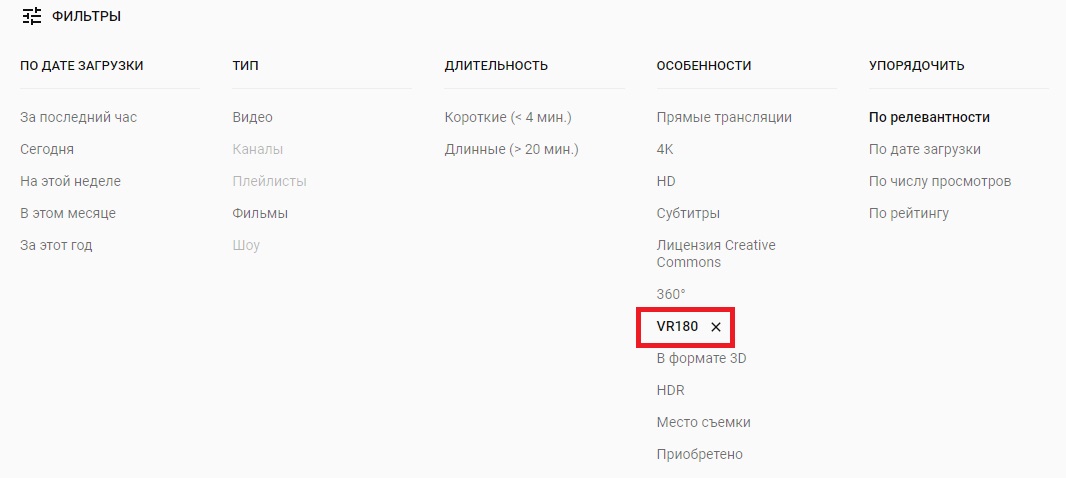

Building on that success, Google launched the new VR180 format, with support for uploading to YouTube and a dedicated search filter:

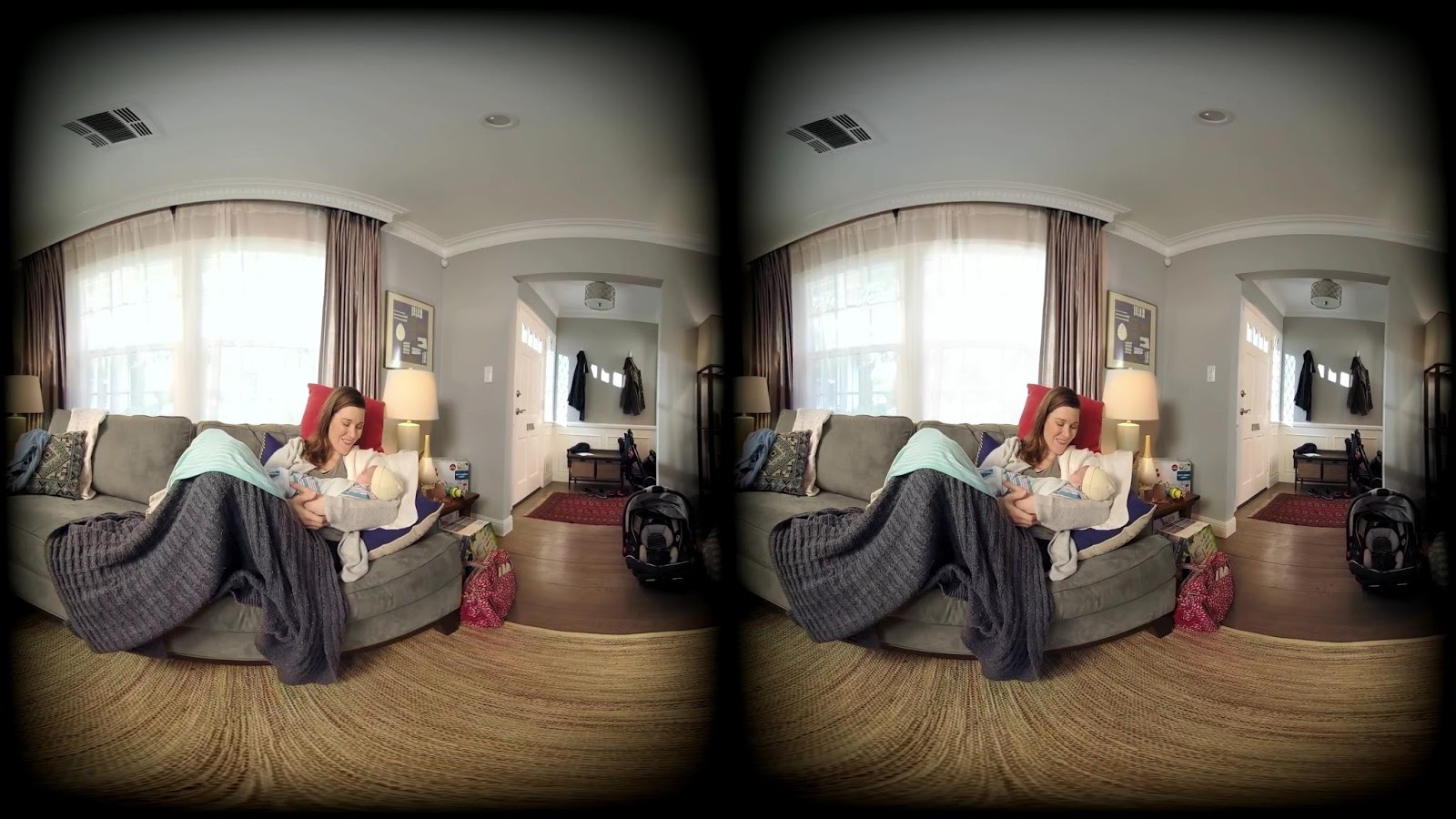

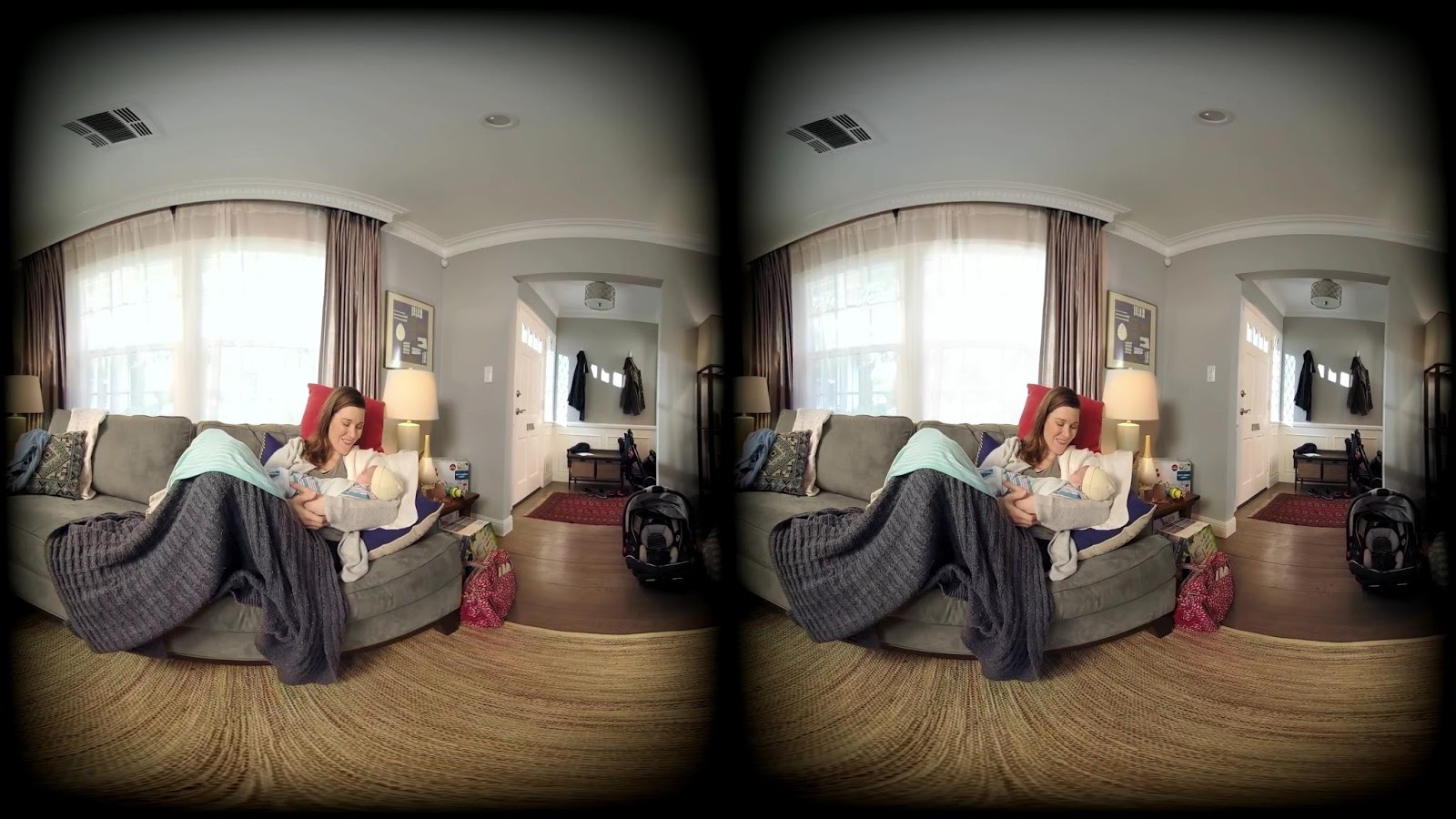

Here is what a raw frame of such a video looks like:

Special metadata added to the MP4 file turns the video into a spherical one. Note that if you simply click a link to such a video, you will most likely see a plain flat video. This is because, in addition to the VR180 video, a projection of one of the views (the left one) onto a regular rectangle is also uploaded to the site. To see the picture as shown above, you need, for example, to download the video as a plain MP4 file. Most of these videos are 4K. Looking around by turning the device is supported on mobile devices with the Cardboard app (Google Play, App Store) and, of course, in a full-fledged HMD.
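For readers who want to check whether a downloaded file actually carries stereo metadata, here is a minimal sketch that walks the ISO BMFF box tree of an MP4. It assumes the file follows Google's Spherical Video V2 proposal, in which the stereo layout is signalled by an `st3d` box inside the video sample entry (with `sv3d` describing the projection). The container list covers only common H.264/HEVC layouts, and the function names are our own, not part of any Google tool.

```python
import struct
from typing import Iterator, Optional, Tuple

# Container boxes to descend into, mapped to the number of payload bytes that
# precede their child boxes: stsd has 4 bytes version/flags + 4 bytes entry_count,
# a visual sample entry (avc1/hvc1) has 78 bytes of fixed fields.
CONTAINERS = {
    b"moov": 0, b"trak": 0, b"mdia": 0, b"minf": 0, b"stbl": 0,
    b"stsd": 8, b"avc1": 78, b"hvc1": 78, b"sv3d": 0, b"proj": 0,
}

# st3d stereo_mode values as we understand the Spherical Video V2 proposal (assumption).
STEREO_MODES = {0: "mono", 1: "top-bottom", 2: "left-right"}


def walk_boxes(data: bytes, start: int = 0, end: Optional[int] = None,
               depth: int = 0) -> Iterator[Tuple[int, bytes, int, int]]:
    """Yield (depth, box_type, payload_start, payload_end) for every box found."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, box_type = struct.unpack(">I4s", data[pos:pos + 8])
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            size = struct.unpack(">Q", data[pos + 8:pos + 16])[0]
            header = 16
        elif size == 0:  # box extends to the end of the enclosing range
            size = end - pos
        if size < header or pos + size > end:
            break  # malformed or truncated box: stop rather than misparse
        payload_start, payload_end = pos + header, pos + size
        yield depth, box_type, payload_start, payload_end
        if box_type in CONTAINERS:
            yield from walk_boxes(data, payload_start + CONTAINERS[box_type],
                                  payload_end, depth + 1)
        pos += size


def stereo_mode(data: bytes) -> Optional[str]:
    """Return the declared stereo layout if an st3d box is present, else None."""
    for _, box_type, start, stop in walk_boxes(data):
        if box_type == b"st3d" and stop - start >= 5:
            # st3d is a FullBox: 1 byte version + 3 bytes flags, then stereo_mode.
            return STEREO_MODES.get(data[start + 4], "other")
    return None
```

For example, `stereo_mode(open("vr180_clip.mp4", "rb").read())` (the file name is hypothetical) would be expected to return `"left-right"` for a file whose metadata declares a side-by-side layout and `None` for a plain flat MP4. Reading the whole file into memory is fine for a sketch but not for long 4K recordings.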

By analogy with cardboard headsets, shooting such videos was also supposed to be cheap enough for mass adoption. A camera that shoots in this format costs around $300. Compared with expensive stereo rigs, this is a completely new level. It would seem that everything is fine. The problem, however, is that the new format is a stereo format, and stereo, as is well known, brings many hard problems.

VR stereo quality

As soon as stereo (3D) comes up, people remember the headaches from trips to 3D cinemas. We examined the causes of such discomfort in much more detail in a large series of articles (one, two, three, four, five, six, seven), albeit for stereo films. In short, for a number of reasons many 3D movies are shot (or converted) in such a way that viewers sensitive to stereo artifacts are best advised to take a painkiller (such as citramon) in advance. Unfortunately, most problems of 3D movies are stereoscopic artifacts, and these are also found in VR180. This means that everything that causes discomfort in such films will also cause discomfort in VR video. Even a basic quality check of VR180 content showed that its quality is comparable to that of ordinary stereo films from around the middle of the last century...

In other words, enthusiasts will be delighted, but the mass audience will complain.

To analyze stereo video quality we used VQMT3D, a project developed by the video group of the computer graphics and multimedia laboratory at the Faculty of Computational Mathematics and Cybernetics (VMK), Moscow State University. Its purpose is to let stereo film makers track the occurrence of all possible artifacts at the post-production stage. Since VR180 is also stereo, the project is, with some reservations, applicable to this format as well. The frame analysis in the following examples was obtained with VQMT3D.

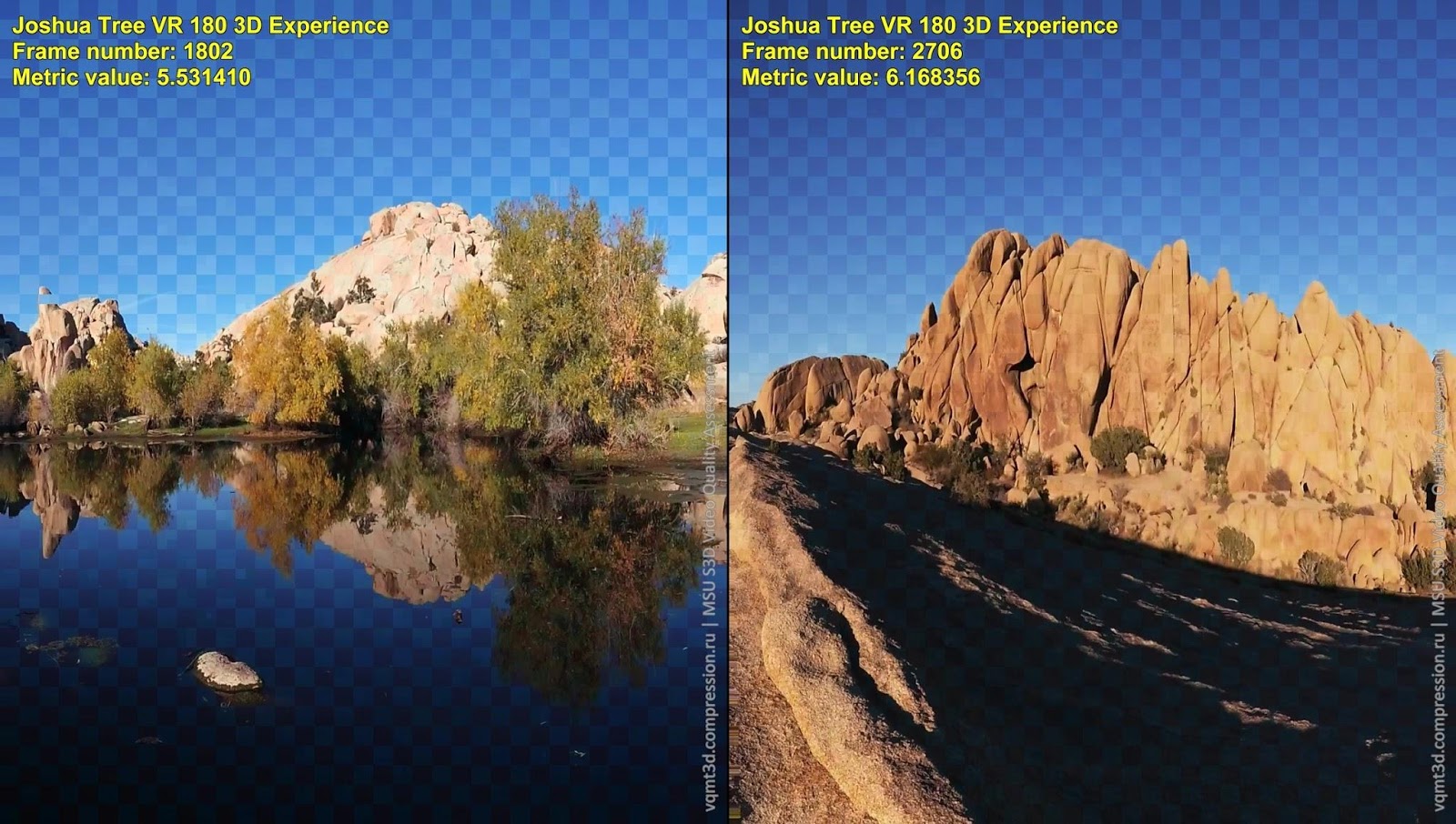

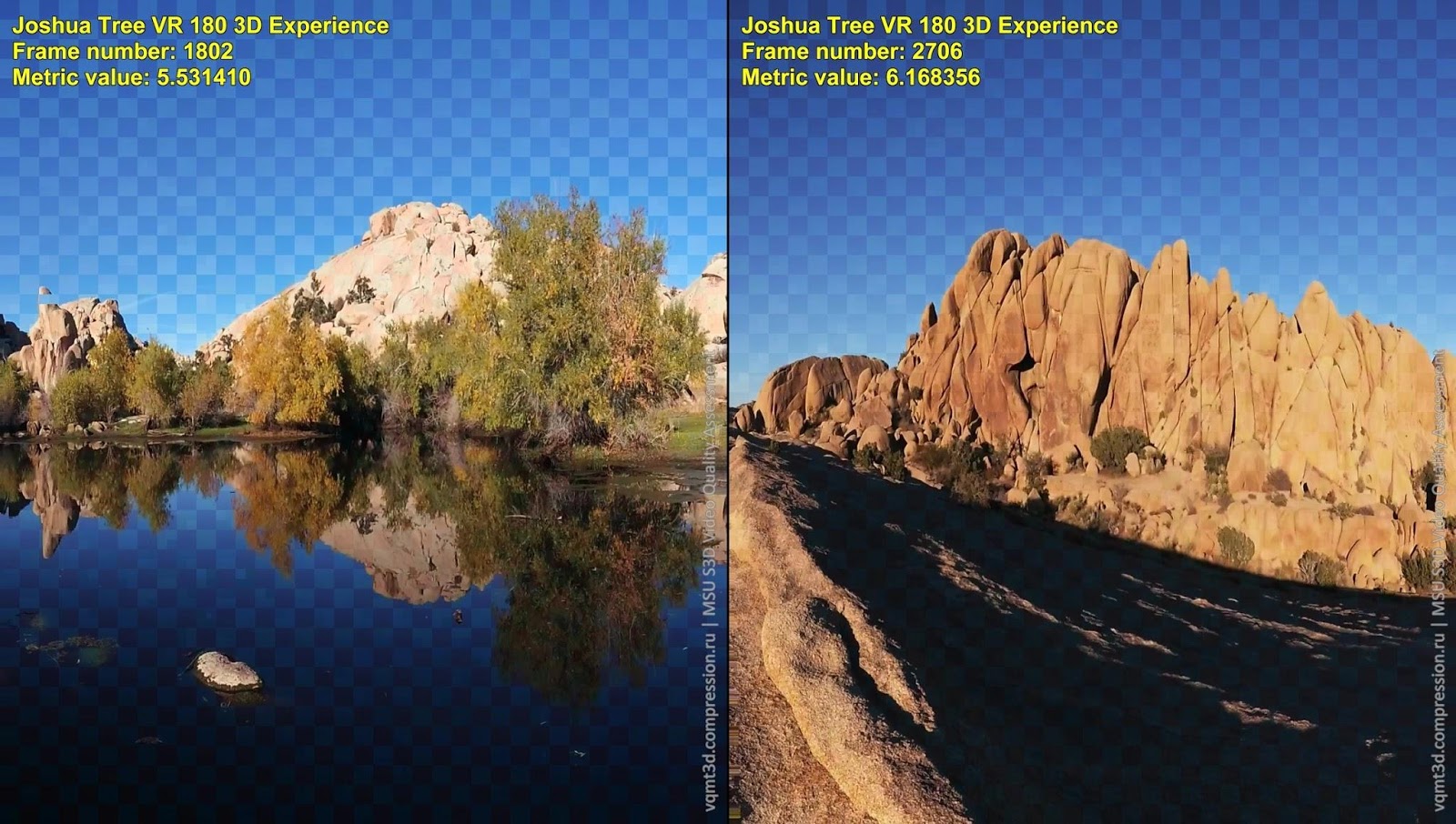

Color distortion

This problem is the easiest to understand and relatively easy to fix. Close one eye and look at an object. Now do the same with the other eye and answer: do the colors change when you switch eyes? Generally, no. Likewise, in stereo video the same objects should not differ in color between the left and right views. However, this is what we see in real videos taken from YouTube (note uniform areas such as the sky or water):

Link to video

Color distortions can arise for many reasons, for example different camera calibrations, heating of the sensors, or light falling on the edge of a lens. Therefore, even identical cameras with identical shooting settings can produce noticeably different colors.

This artifact is most conveniently visualized as a "checkerboard": the right view is first aligned to the left one using motion compensation, and then blocks are taken alternately from the left view and the aligned right view in a checkerboard pattern.
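The checkerboard composite described above is easy to sketch with NumPy. The function below assumes the right view has already been warped onto the left one (the motion compensation step is not shown) and that both views are arrays of the same shape; the function name and the default block size are arbitrary choices of ours.

```python
import numpy as np


def checkerboard(left: np.ndarray, right_aligned: np.ndarray, block: int = 32) -> np.ndarray:
    """Interleave square blocks of two equally sized views in a checkerboard pattern.

    right_aligned is assumed to be the right view already aligned to the left
    view; no motion or disparity compensation is performed here.
    """
    if left.shape != right_aligned.shape:
        raise ValueError("views must have the same shape")
    h, w = left.shape[:2]
    rows = np.arange(h)[:, None] // block   # block row index of each pixel row
    cols = np.arange(w)[None, :] // block   # block column index of each pixel column
    take_right = (rows + cols) % 2 == 1     # (h, w) boolean mask of "odd" blocks
    if left.ndim == 3:
        take_right = take_right[:, :, None]  # broadcast the mask over color channels
    return np.where(take_right, right_aligned, left)
```

Where the two views agree in color, the block boundaries practically vanish; where they differ, the checkerboard pattern stands out, which is what makes this a convenient visual check.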

Below is an example with light sources in the frame:

Link to video

Not only do the light sources themselves look very different in the two views, their glare also distorts the colors across the whole image.

A harder case is when the sun is in the frame:

Link to video

Because the camera was badly positioned against the sun, a terrible artifact appears: a red flare on the sensor. Color differences between the eyes are quite rare in real life, and artifacts like the one above do not occur at all, so fatigue gradually accumulates during viewing. Unfortunately, for the most sensitive part of the audience this fatigue turns into a headache.

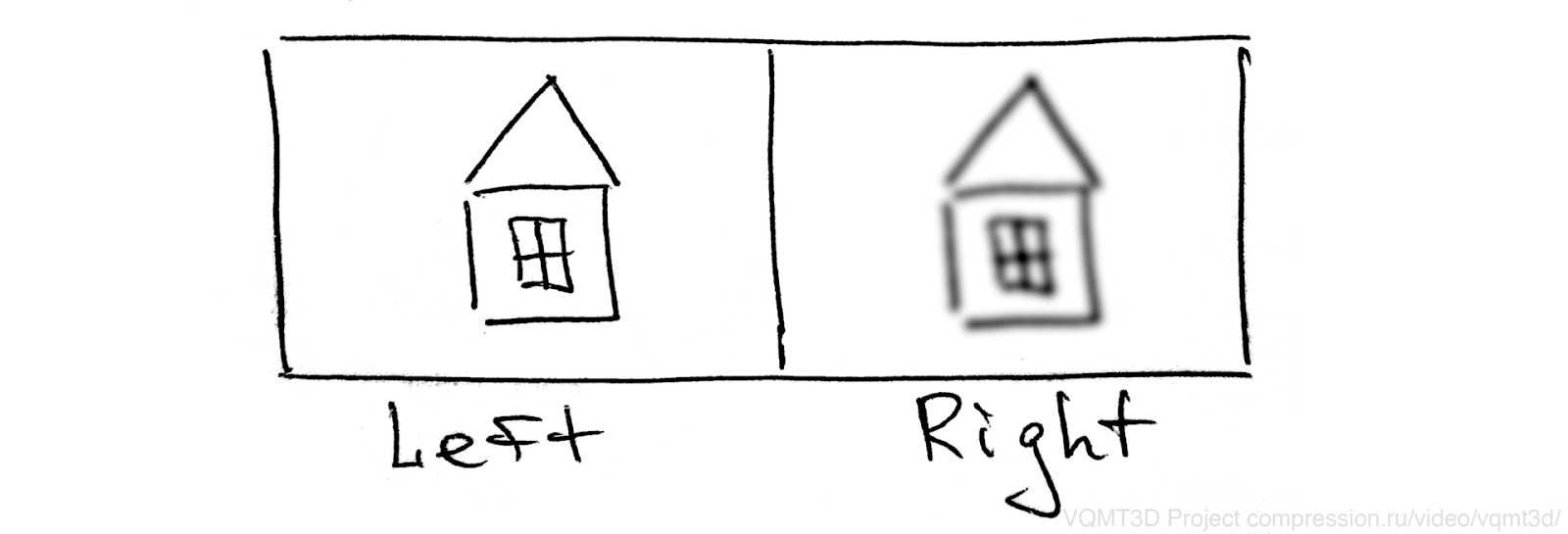

Sharpness differences

Another problem in stereo video shooting is a difference in sharpness between the left and right views. In real life this problem is quite common. For example, if you spend 10–12 hours at a computer staring intently at the screen (admit it, this happens), then by the end of the day your right and left eyes may noticeably diverge in focus, and mild myopia or hyperopia is guaranteed until evening. The brain successfully compensates for this: roughly speaking, we take sharp details of distant objects from one eye and of near objects from the other. In engineering terms, the problem is routinely solved by built-in means, and by morning vision usually recovers. All would be well, but in real stereo video the focus can "jump" from scene to scene: the "far-sighted" eye becomes now the right one, now the left one, and sometimes both see well, which causes noticeable discomfort. This is especially true for older people, whose eyes already differ permanently in sharpness.

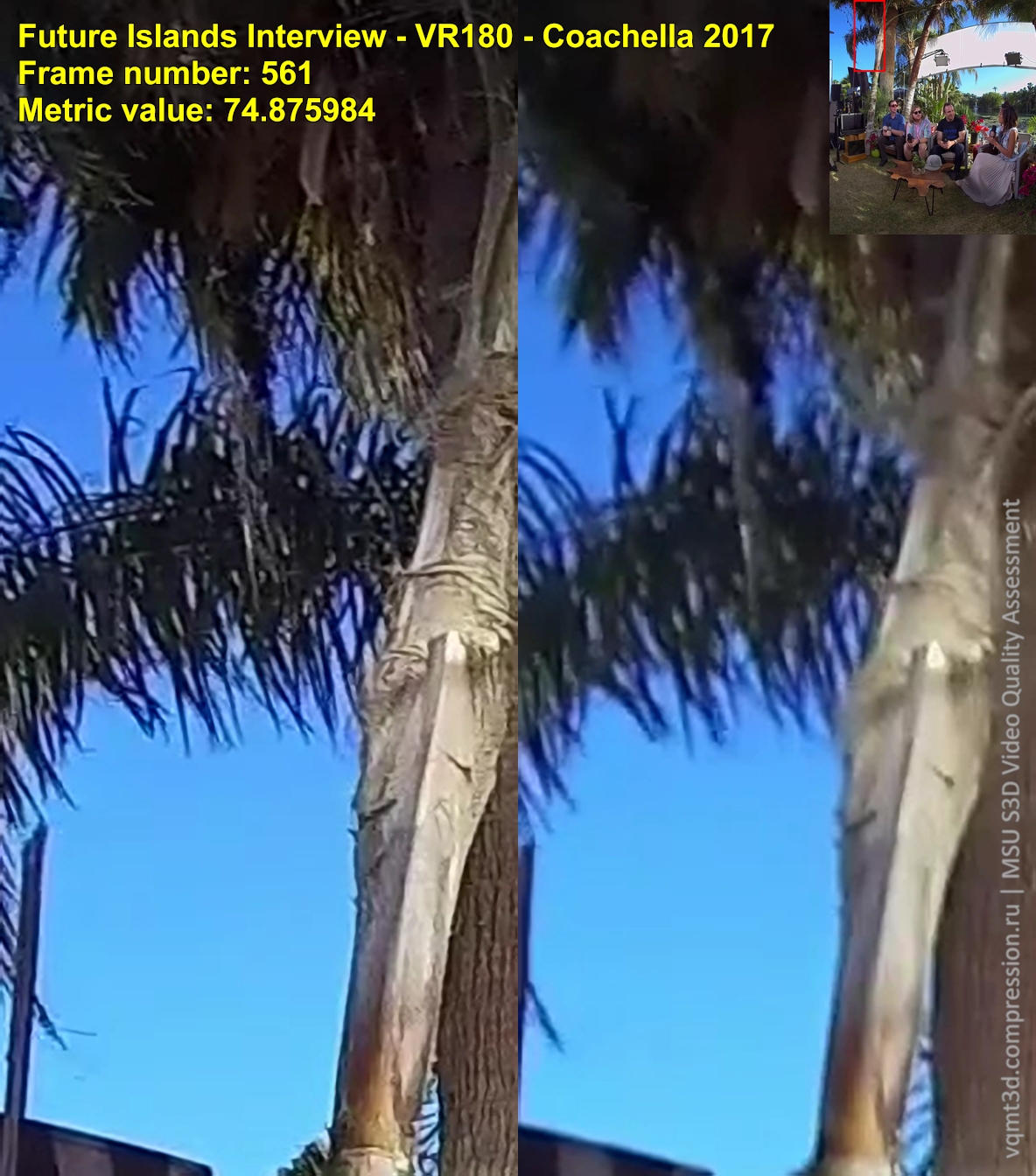

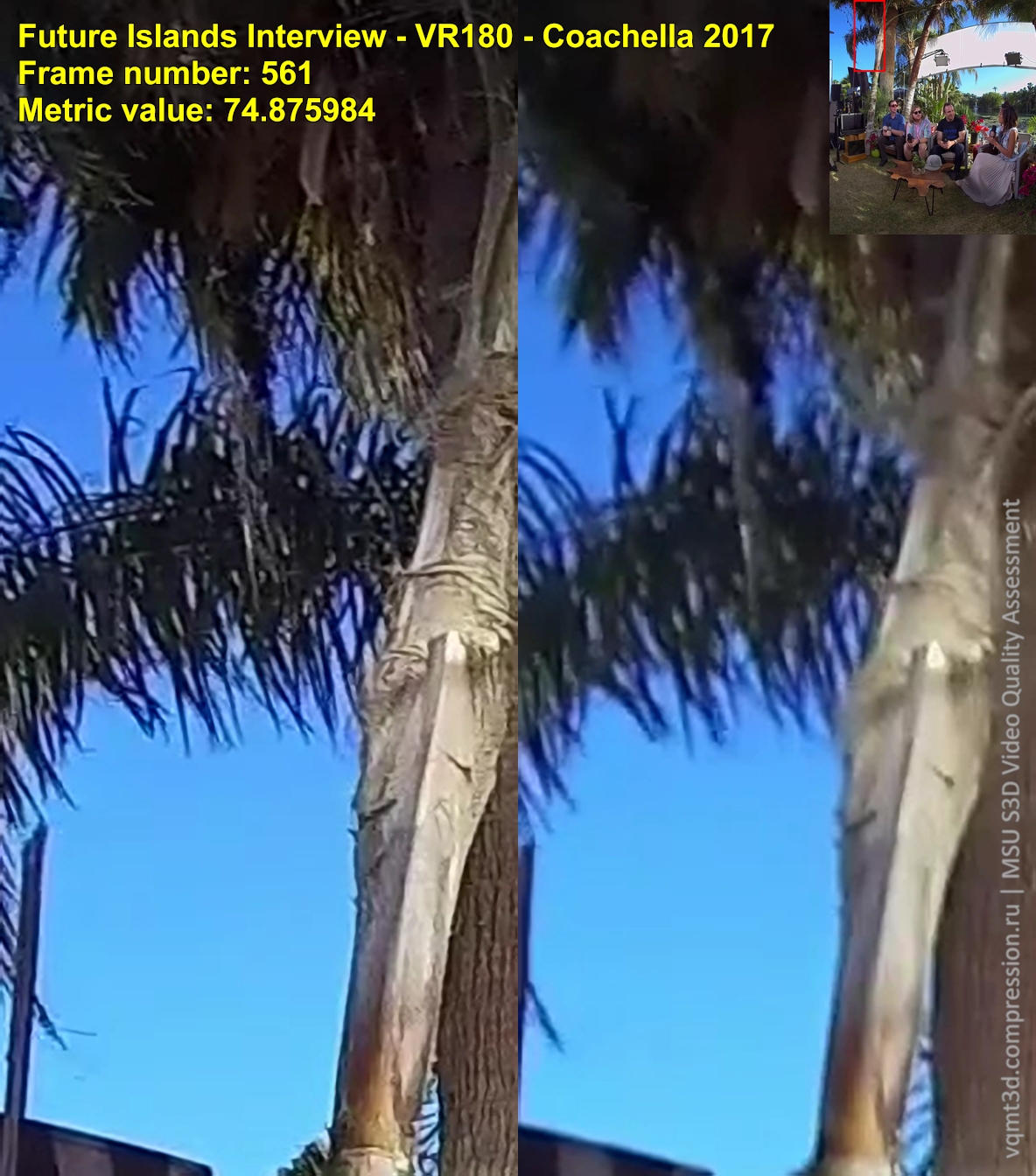

Examples of such discrepancies in VR180; for clarity, enlarged fragments of the same area are shown for both views:

Link to video

Here is another fragment of the same frame:

Link to video

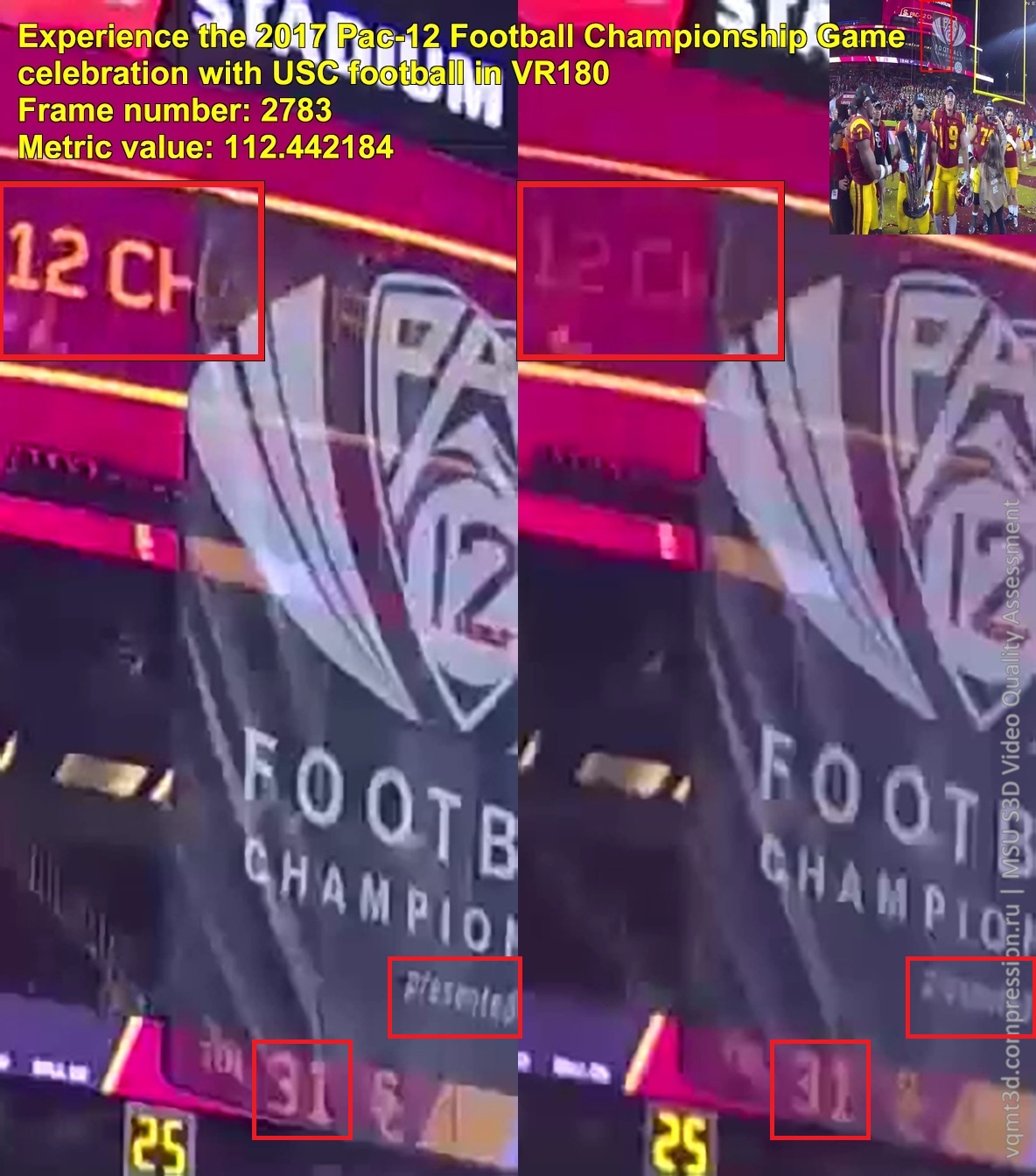

This artifact appears when the two cameras end up focused differently for technical reasons. And because there is no professional post-processing, even eye-searing scenes end up on YouTube.
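One common way to quantify such a discrepancy, not necessarily the one VQMT3D uses, is to compare a local sharpness measure between the views. The sketch below uses the variance of a discrete Laplacian per tile, assumes grayscale views of the same size that are roughly aligned, and reports a log2 ratio so that 0 means equal sharpness; the function names, tile size and epsilon are our own illustrative choices.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour discrete Laplacian; larger means more fine detail."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def sharpness_log_ratio(left: np.ndarray, right: np.ndarray,
                        tile: int = 64, eps: float = 1e-6) -> np.ndarray:
    """Per-tile log2(sharpness_left / sharpness_right) for two grayscale views.

    0 means equal sharpness, +1 means the left tile has twice the Laplacian
    variance of the right one, -1 the opposite.
    """
    if left.shape != right.shape:
        raise ValueError("views must have the same shape")
    rows, cols = left.shape[0] // tile, left.shape[1] // tile
    out = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            win = (slice(i * tile, (i + 1) * tile), slice(j * tile, (j + 1) * tile))
            out[i, j] = np.log2((laplacian_variance(left[win]) + eps)
                                / (laplacian_variance(right[win]) + eps))
    return out
```

Tiles with a large absolute ratio on textured content, such as lettering, are candidates for the kind of mismatch shown here; on flat regions both variances are close to zero and the ratio is unreliable.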

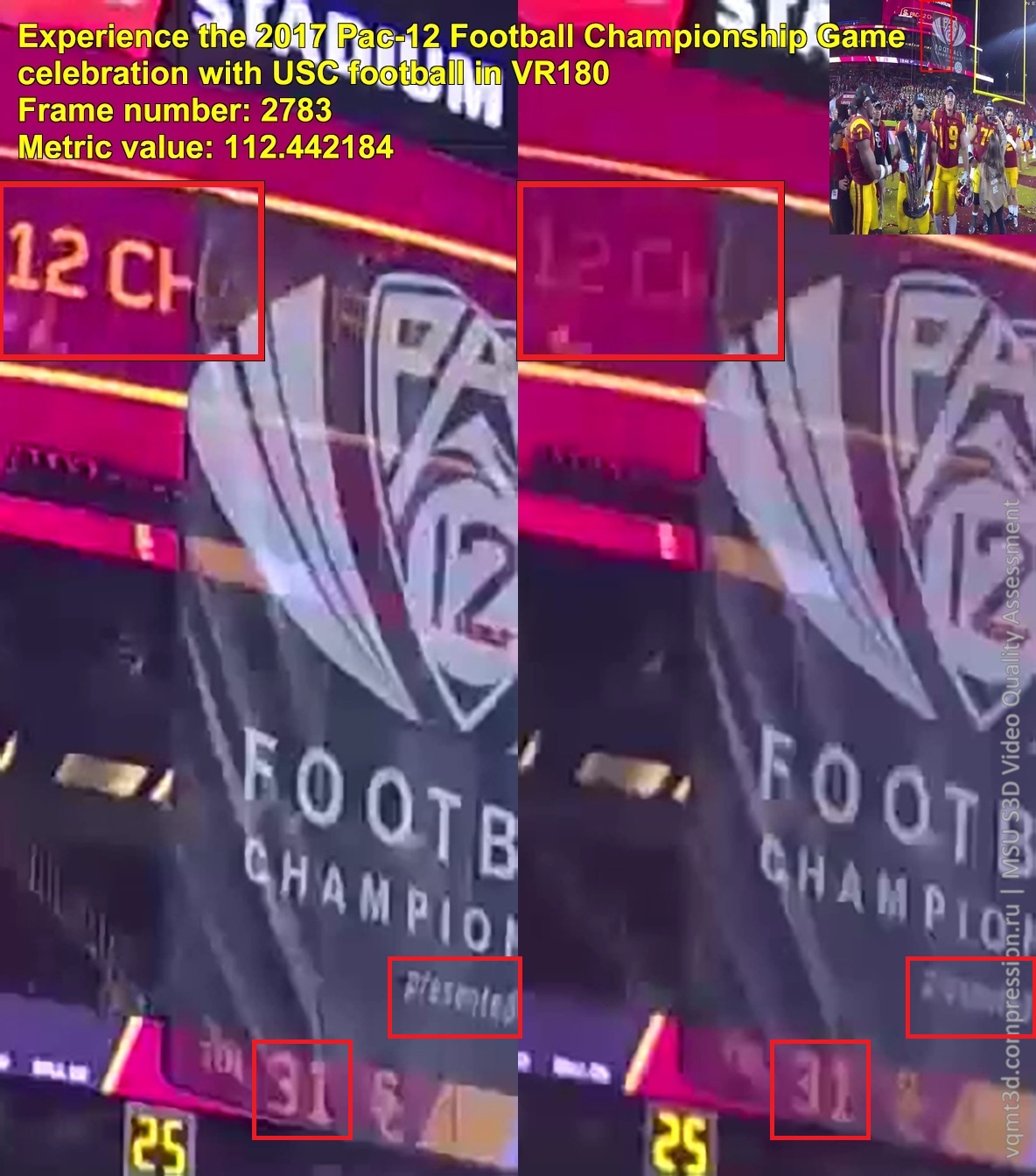

Link to video

Almost all the lettering in the zoomed image differs in sharpness. Note the "12 CH" lettering, which will uncomfortably "strobe" during viewing.

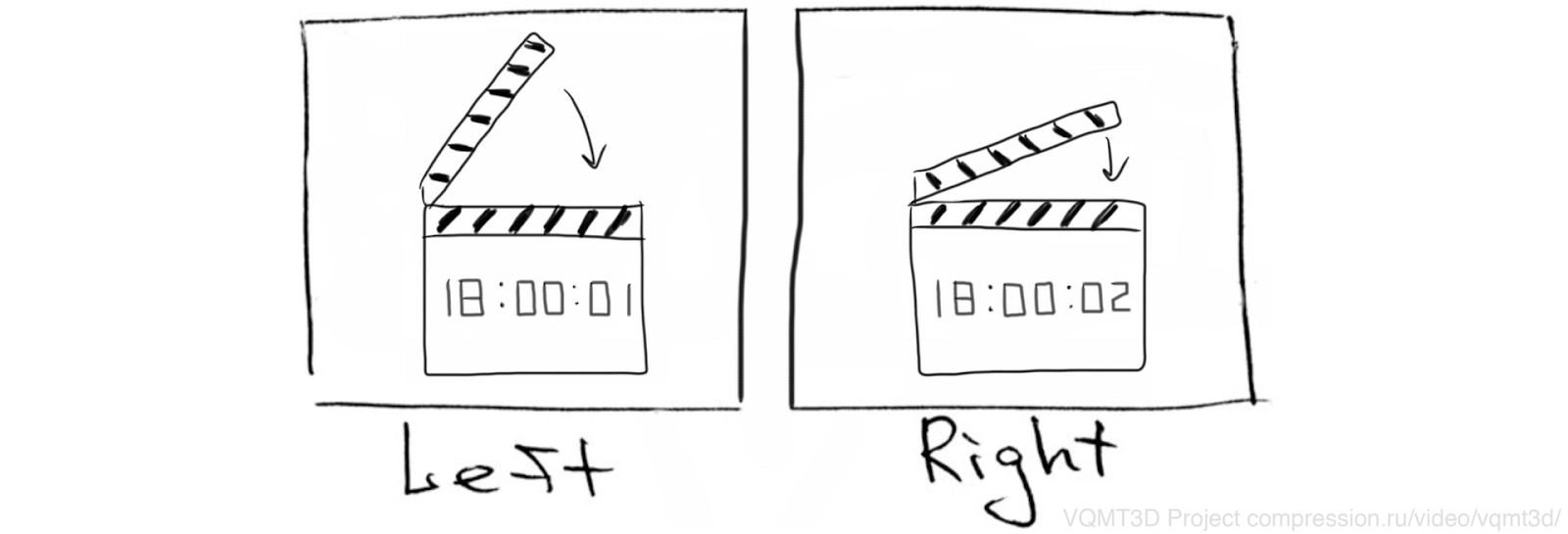

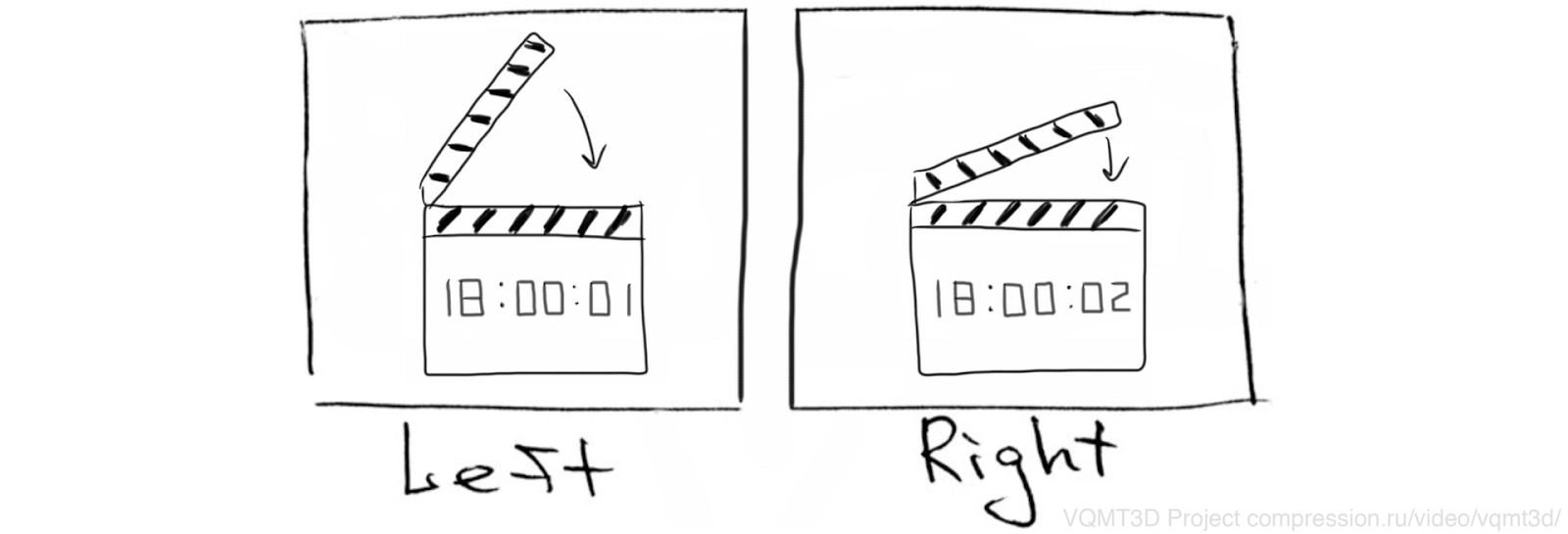

Time shift

Strangely enough, although computer cores have long been synchronized to within millionths of a second, the two cameras of a stereo rig still differ in capture time by hundredths or even tenths of a second. One eye sees events that have not yet happened for the other! There is no real-world analogue to this problem. And this artifact was also found in VR180.

Note the window with a neon sign on the right side of the frame:

Link to video

This time shift was noticed by chance while analyzing color discrepancies, which are also present here. The flickering sign catches the eye even without special metrics designed to detect time shifts. The left frame simply lags behind the right one!

Here is another example from the same scene. Look at the pedestrians' feet:

Link to video

It is clearly visible that in the right frame the leg has moved further than in the left one, as if one frame lags behind the other by a fraction of a second, although both should have been captured at exactly the same moment. We conducted an experiment in which we showed 302 viewers short fragments of stereo films with various artifacts and asked them, after each fragment, to fill out a form on a smartphone or laptop rating their level of discomfort. Time shift proved to be the most painful artifact: for the brain this is an impossible situation, and trying to "process" it causes clearly tangible discomfort. Unfortunately, shifts of less than one frame are the most common, and they are not easy to correct.
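In the simplest case, a time shift can be estimated by cross-correlating a per-frame signal taken from both views, for example the mean brightness of the flickering neon sign region. The sketch below is such a generic cross-correlation estimator, not the metric used in VQMT3D; the choice of signal, the lag range and the parabolic sub-frame refinement are all assumptions, and the function names are hypothetical.

```python
import numpy as np


def _corr(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation, treating a constant signal as uncorrelated."""
    if a.std() == 0 or b.std() == 0:
        return 0.0
    return float(np.corrcoef(a, b)[0, 1])


def estimate_time_shift(left: np.ndarray, right: np.ndarray, max_lag: int = 5) -> float:
    """Estimate s (in frames) such that left[t] ~ right[t - s].

    Positive s means the left view lags behind the right one. The integer lag
    with the highest correlation is refined with a parabola through its two
    neighbours to obtain a rough sub-frame estimate.
    """
    n = len(left)
    if len(right) != n or n <= 2 * max_lag + 2:
        raise ValueError("signals must have equal length, longer than the lag range")
    scores = {}
    for s in range(-max_lag, max_lag + 1):
        if s >= 0:
            scores[s] = _corr(left[s:], right[:n - s])
        else:
            scores[s] = _corr(left[:n + s], right[-s:])
    best = max(scores, key=scores.get)
    if -max_lag < best < max_lag:
        c_m, c_0, c_p = scores[best - 1], scores[best], scores[best + 1]
        denom = c_m - 2.0 * c_0 + c_p
        if denom < 0:  # a proper peak: take the vertex of the fitted parabola
            return best + 0.5 * (c_m - c_p) / denom
    return float(best)
```

Dividing the result by the frame rate gives the shift in seconds; at 30 fps, for instance, an estimate of 0.5 frames corresponds to about 17 ms.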

Incidentally, the example above also shows, to the naked eye, a rotation between the two views (especially in the lower left corner), which is also quite uncomfortable but much easier to correct. Other artifacts, however, are a separate big story, to which we hope to return.

Did Google slip up?

It might well seem that these artifacts are typical of amateur footage, and that the same cameras, used properly, can deliver a good image. Unfortunately, this is not the case. Here is the VR180 promotional video featured on the official VR180 page. It should set the quality standard. But if you look closely...

Differences in color:

There is color distortion at absolutely every point, as if a slightly different white balance had been mistakenly set for one of the views.
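A white-balance mismatch like this can be roughly quantified by comparing the mean color of the same region in both views. The sketch below assumes the views are already aligned and that the chosen region (for instance a uniform patch of sky or road, passed as a hypothetical boolean mask) should look the same to both eyes; the function name is ours.

```python
from typing import Optional

import numpy as np


def channel_gains(left: np.ndarray, right: np.ndarray,
                  mask: Optional[np.ndarray] = None) -> np.ndarray:
    """Per-channel gains that would scale the right view's mean color onto the left's.

    left, right: aligned H x W x 3 arrays. mask: optional H x W boolean array
    selecting the region to compare; the whole frame is used if omitted.
    Channel means of the right view are assumed to be non-zero.
    """
    if mask is None:
        mask = np.ones(left.shape[:2], dtype=bool)
    l_mean = left[mask].astype(np.float64).mean(axis=0)   # shape (3,)
    r_mean = right[mask].astype(np.float64).mean(axis=0)  # shape (3,)
    return l_mean / r_mean
```

Gains close to (1, 1, 1) mean the views agree; gains that differ from channel to channel, for example red above 1 and blue below 1, are the kind of pattern one would expect from a white-balance mismatch.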

Here, a large part of the frame is free of distortion, but the lower right corner still differs noticeably in color, which causes a characteristic visual "strobing" when viewed.

Notably, the color distortion on the road was noticed even without special stereo analysis tools; it was discovered simply by watching the video frame by frame (much as one compares video processed with different parameters).

Differences in sharpness:

Here the difference is most noticeable on the floor and on the seams of the sofa; the sharpness difference is greatest at object edges.

Conclusion

What do we have in the end?

VR, including 360-degree video, is spreading actively. The technology attracts users and looks very promising, but the technical quality of current content causes viewing discomfort. As a result, some of the people who want to try the new format get a headache, and with poor shooting (typically sharp camera movement) also dizziness and nausea, which leads to disappointment in the format.

What can be done with this?

At the moment, many teams (including ours) are developing tools for quality control and for correcting stereo problems.

Here, for example, are some color correction results:

On the left are the original views, on the right the views color-corrected with our algorithm. The illuminated view is corrected completely.
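The correction algorithm itself is not described here. As a much simpler stand-in, the classic global approach matches the mean and standard deviation of each color channel of one view to the other (a per-channel RGB variant of the statistics-transfer idea popularized by Reinhard et al.). The sketch assumes aligned views with similar content, and the function name is hypothetical. A single global transform like this cannot remove local flares such as the red sun highlight shown earlier; it only illustrates the basic idea.

```python
from typing import Optional

import numpy as np


def match_color_stats(source: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Return source with each channel's mean and std matched to reference.

    Both arrays are H x W x 3. The result is float64 and is not clipped, so
    convert or clip it yourself before saving as 8-bit.
    """
    src = source.astype(np.float64)
    ref = reference.astype(np.float64)
    out = np.empty_like(src)
    for c in range(src.shape[2]):
        s, r = src[..., c], ref[..., c]
        s_std = s.std()
        scale = r.std() / s_std if s_std > 0 else 1.0  # avoid dividing by zero on flat channels
        out[..., c] = (s - s.mean()) * scale + r.mean()
    return out
```

If one view differs from the other only by a per-channel gain and offset, this transform undoes the difference exactly; real stereo footage is rarely that simple.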

The color of the ceiling is back to normal.

For more examples of automatic color correction, see our separate article on stereo color distortion.

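In total, about 20 types of artifacts in shot and converted stereo video can currently be detected, most of which are also relevant for VR180. In the future, we plan to extend our methods for monitoring and improving VR video quality:

- adapting current quality control methods to VR

- adding and implementing artifact correction methods

- generating automatic video reports that predict the discomfort caused by viewing, in order to warn users and prompt producers to review their content, motivating them to pay more attention to quality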

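What are the prospects for the format as a whole?

Clearly, the current problems are growing pains of a young technology, and they will be actively addressed. We can expect that:

- camera makers will bundle software with their cameras that solves a significant part of the basic problems

- over time (if there is demand), professional software for correcting artifacts will appear

- YouTube will quite likely start correcting some artifacts automatically when VR180 video is uploaded, much as it now automatically corrects jitter and interlacing in good old 2D video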

On a lighter note: smartphones with three or four rear cameras are now in fashion, dramatically improving photo quality.

Source: Samsung Galaxy A7 (2018) & Samsung Galaxy A9 (2018)

It is logical to expect that, as VR180 grows in popularity, support for it will be built into all major smartphone models out of the box.

Yes, the field of view will most likely be less than 180°.

Yes, the quality will most likely be worse than that of specialized cameras.

Yes, shooting stereo video will require compressing large data streams (something today's smartphones are still poorly suited for).

Yes, the two wide-angle cameras will need to be placed farther apart.

But technically, there are no major obstacles today to supporting VR180 in flagship models. It is only a matter of the format becoming popular enough for demand to go mass-market, creating an incentive for this.

And clearly, once top smartphones support VR180 shooting, the number of such videos on YouTube will snowball.

Autostereoscopic smartphone and tablet displays can also be seen at exhibitions, and with increasing resolution their quality is getting more and more interesting; at the very least, it is incomparably better than what was widely available during the previous wave in 2010–2011. At the time of writing, the RED Hydrogen One was announced: the first mass-produced smartphone with a new-generation 3D screen, so what professionals saw at exhibitions can now be bought. The process continues and, as screen resolution grows, it will obviously accelerate. The main obstacle is the lack of content.

Obviously, this “chicken and egg” problem will be solved soon.

Fewer headaches to all!

Yours, Konstantin Kozhemyakov and Dmitry Vatolin

I would like to sincerely thank:

See also:

S3D: No pain IS gain

In April of this year, Google announced the technical details of the new format for VR-video - VR180 . The format specifications were uploaded to the Google repository on GitHub , camera manufacturers were asked to make special cameras , the format was supported on YouTube .

The basic idea is quite simple. In the "usual" VR-video - 360-video - you can turn your head horizontally in all directions, while the main action takes place, as a rule, from one side or another, and the whole stream is transmitted to the device, which leads to transmission and storage. redundant information. In fact, in the overwhelming majority of cases, there is no need to implement a 360-degree view - to achieve the same effect, 180 degrees are enough. In this case, the “second half” of the frame is used for the second angle, that is, stereo is obtained.

Thus, the proposed format provides an even greater sense of immersion than from 360-video, is cheaper to produce, easier to shoot, and has no problems with stitch .

')

How is this possible, and what did Google offer?

Anyone interested in a VR video of the near future - welcome under the cat!

Introduction to the VR180

First about the good.

VR180 is noticeably easier to shoot than 360 videos. For shooting high - quality 360-video, up to 17 cameras are used (example from Xiaomi below), which causes a lot of problems with the size of working video, partial failure, overheating, unstable focus of cameras, etc. At the same time, from the point of view of a simple user, the best were recognized cameras with two fisheye lenses ( one , two , three ).

A source

The new format is also removed by two cameras. This markedly reduces the cost of the end device. At the same time, the shooting technique is greatly simplified, since all methods of working with a conventional camera remain relevant (only the result is potentially more spectacular and with greater immersion). For the success of the format, it is important that every housewife and every student can easily use it. Therefore, the simpler - the better.

Further, in VR180, the problems of the so-called stiching (gluing) disappear - extremely noticeable artifacts in places where images from two cameras were stitched together. Recently it seemed that a little time would have passed, and the problems of stitching would be solved. Alas, they were much more difficult. If there is a fast moving or translucent object on the border of gluing, then at the current level of development of video processing algorithms in automatic mode the problem is not solved. Of course, automatic matting algorithms are evolving, but the absence of artifacts is not guaranteed even with Deep Learning methods . There is no stiching in VR180, which means there are no problems in principle either.

And finally, almost always shot 360-video is flat. That is, from the binocular point of view, the picture is perceived hanging on some screen before the eyes, which often reduces the “wow effect” and the immersion effect, and the VR180 initially and by default - the stereo format.

All these points look very promising from the point of view of predicting the success of the format. As a result, manufacturers rather actively began to produce cameras specifically targeted at the VR180, for example:

The fact that Xiaomi has entered the VR180 market is certainly encouraging.

Also, there were solutions that allow you to assemble the camera for shooting VR180 from two ordinary cameras with fisheye-lenses. Sometimes it is enough just to print or buy a mount to start experiments (below are examples of GoPro, digital soap, Sony mirrors):

A source

Source: http://products.entaniya.co.jp/en/products/equipment-for-3d-stereo-180-vr/

In addition, funny solutions appeared when one camera supports shooting in both VR180 and 360 video formats (this is a clamshell, which shoots 360 when folded and VR180 when maximized):

A source

Among other things, new horizons of experiments on shooting VR-video have opened (in the photo YI Horizon VR180 camera from Xiaomi):

The number of new devices for shooting in the VR180 is very large, and this markedly contributes to the popularity of the new format.

VR180 implementation

Today, companies are trying to introduce VR wherever possible, they want to make the format more popular and popular. And most importantly - cheap. Google is no exception. Everyone remembers their budget solution for the introduction of “virtual reality helmets” (English Head Mounted Display, HMD) for wide use - Google Cardboard .

Its functionality, of course, can not be compared with expensive HMDs, but the main goal has been achieved: to make VR more accessible and turn every smartphone into a virtual reality headset at an additional cost of less than $ 1.

Building on success, Google launches the new VR180 format with support for uploading to YouTube and with a special search filter:

This is the frame of the video from the inside:

Special meta-data has been added to MP4, which turns the video into spherical. Generally speaking, if you simply click on the link, then most likely you will see a plain flat video. This is due to the fact that besides the VR180 video, a projection of one of the views (left) onto a regular rectangle is also uploaded to the site. To see the picture as in the picture above, you need, for example, to download the video in pure MP4 format. Basically, they have 4K resolution. The possibility of camera movement is guaranteed when viewed on a mobile device with the Cardboard application ( Google Play , AppStore ). And, of course, in a full HMD.

Shooting such videos, by analogy with cardboard helmets, was also supposed to be cheap enough for wide distribution among users. A camera that shoots video in this format costs around $ 300. Compared with expensive stereo, this is a completely new level. It would seem that everything is fine. However, the problem is that the new format is a stereo format, and in stereo, as you know, there are many difficult problems to solve.

VR stereo quality

As soon as it comes to stereo (3D), headaches from trips to 3D cinemas are immediately recalled. We considered the reasons for such discomfort in a large series of articles ( one , two , three , four , five , six , seven ) in much more detail, but with reference to stereo films. In short, for a number of reasons, many 3D movies are shot (or converted) so that viewers who are sensitive to artifacts of a stereo video can only take citramon in advance. Unfortunately, most of the problems in 3D movies are related to stereoscopic artifacts, which are also found in VR180. This means that all the factors causing discomfort in such films will also cause discomfort when watching a video in virtual reality. Even the basic quality check of the VR180 content showed that it is comparable to the quality of conventional stereos around the middle of the last century ...

In other words, enthusiasts will be delighted, but the mass audience will complain.

For the analysis of the quality of stereovideo, the VQMT3D project was used , which is being developed in the video group of a computer graphics and multimedia laboratory at the faculty of VMK, MSU. Its purpose is to provide authors of stereo films with the ability to track the occurrence of all possible artifacts at the post-production stage. And since the VR180 is also stereo, the de facto project is applicable to this format with some reservations. In the following examples, frame information is obtained using VQMT3D.

Color distortion

This problem is the easiest to understand and relatively easy to fix. Close one eye and look at some object. Now do the same with the other eye and answer the question: do the colors change when the eye changes? In general, no. So in stereovideo there should be no differences in the color of the same objects for the left and right angles. However, this is what we see in real videos taken on YouTube (pay attention to monochrome areas, for example, sky or water):

Link to video

Color distortions can occur for many reasons, for example, due to different calibrations of cameras, heating of their matrices or when the edge of the lens is lit. Therefore, even with identical shooting parameters for identical cameras, the colors can diverge noticeably.

It is most convenient to visualize this artifact using “chess”, when the right angle is brought to the left using motion compensation, and then blocks are selected from the left and reduced right angles in a checkerboard pattern.

Below is an example when light sources fall into the frame:

Link to video

Not only do the light sources themselves vary greatly in their perspectives, they also distort the colors in the whole image with highlights.

A tougher example is when the sun hits the frame:

Link to video

Due to the unsuccessful setting of the camera in front of the sun, a terrible artifact appears in the form of a red highlight on the matrix. Color distortions are quite rare in real life, and artifacts of the above type are not found at all, which ultimately leads to an accumulation of fatigue when viewing. Unfortunately, in the most sensitive part of the audience fatigue turns into a headache.

Sharpness Differences

Another problem that arises when shooting stereovideo is the differences in sharpness in the left and right angles. In real life, this problem is quite common. For example, if you spend 10–12 hours at a computer, peering intensely at the screen (you will agree, this happens) , then at the end of the day, the right and left eyes can noticeably diverge in focus, and light myopia / hyperopia is guaranteed until the evening. In this case, the brain successfully compensates for this problem. Relatively speaking, we get clear image details from the right or left eye for distant / close objects. That is, in engineering language, the problem is regularly solved with built-in tools. And in the morning, as a rule, vision is restored. And everything would be fine, but in real stereo video, focusing can “jump” from scene to scene. It turns out that the “far-sighted” becomes either the right eye or the left, and sometimes both see well, which leads to noticeable discomfort when viewed. Especially for people in the age, whose eyes are already "stationary" diverged in sharpness.

Examples of discrepancies for VR180, where for better clarity, enlarged fragments of the same area are presented for two views:

Link to video

Here is another fragment of this frame:

Link to video

The appearance of this artifact is due to the divergence of focusing cameras for technical reasons. And because of the lack of professional post-processing, even “vygvlaznye” scenes fall on YouTube.

Link to video

Almost all the inscriptions on the zoomed image differ in sharpness. Pay attention to the inscription "12 CH", which will be uncomfortable to "strobe" when viewing.

Time shift

Strangely enough, although computer cores have long been successfully synchronized for millionths of a second, stereo cameras when shooting still differ in time by hundredths or even tenths of a second. One eye sees events that have not yet happened for the other eye! You can not even come up with an analogue for this problem in the real world. And this artifact was also found in VR180.

Pay attention to the window with a neon sign on the right side of the frame:

Link to video

This time shift was noticed by chance when analyzing discrepancies in color, which is also present here. The glimmering sign catches the eye, even without the use of special metrics aimed at finding a time shift . Simply left the frame behind the right!

Here is another example from the same scene. Look at the feet of pedestrians:

Link to video

It is clearly visible that on the right frame the leg is moved further than on the left, as if one frame lags behind the other for a few moments, although they should have been shot at exactly the same moment. We conducted an experiment in which we showed 302 viewers short fragments of stereo films with different artifacts and asked after each fragment from a smartphone / laptop to fill out a form indicating the level of pain. The shift in time has shown itself to be the most painful artifact - this is an impossible situation for the brain and an attempt to "process" it leads to obvious tangible discomfort. Unfortunately, a shift of less than 1 frame is most common, and it is not so easy to correct.

By the way, the example above shows with the naked eye also the rotation of the frame between the angles (especially in the lower left corner), which is also quite uncomfortable, but it is much easier to correct. However, other artifacts are a separate big story, to which we will, I hope, return.

Google "nakosyachil"?

It could well seem that these artifacts are inherent in the video obtained during amateur photography, and if used correctly, the same cameras can give a good image. Unfortunately, this is not the case. Here is the VR180 format promotional video , which is located on the official VR180 page. It would seem that he should set the standard of quality. But if you look ...

Differences in color:

Absolutely at all points there is color distortion. As if at one of the angles a slightly larger white balance was mistakenly set.

And here is a large part of the frame without distortion. But the lower right corner still differs noticeably in color, which causes a characteristic visual “gating” when viewed.

It is noteworthy that the color distortion on the road was also noticed without special tools for stereo analysis. It was discovered just when watching video frame by frame (similar to video processing with different parameters).

Differences in sharpness:

Here the difference is most noticeable on the floor and on the seams of the sofa. The greatest difference in sharpness is precisely at the edges of the objects.

Conclusion

What do we have in the end?

VR, including 360-video, is actively distributed. The technology attracts users and looks very promising. But the technical quality of the current implementation causes discomfort from viewing. As a result, a certain number of people interested in trying a new format get a headache, and when shooting is unsuccessful (as a rule, when the camera moves sharply), in addition, dizziness and nausea, which leads to disappointment in the format.

What can be done with this?

At the moment, many (including us) are developing tools for quality control , as well as to correct stereo problems.

For example, here are examples of color correction:

On the left are the original views, and on the right are the views color-corrected with our algorithm. The illuminated area is completely corrected.

The color on the ceiling returned to normal.
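Our algorithm is not described in this article. To give a rough idea of what color correction between views involves, the sketch below uses a much simpler, swapped-in technique: per-channel mean and standard deviation matching. This makes the target view's global color statistics match those of the reference view. It assumes 8-bit RGB views stored as lists of rows of (R, G, B) tuples, and `match_color` is a hypothetical name.

```python
from statistics import mean, pstdev
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B), assumed 8-bit range 0..255
View = List[List[Pixel]]            # rows of pixels


def match_color(target: View, reference: View) -> View:
    """Shift and scale each channel of `target` so its mean and standard
    deviation match `reference` (global statistics only)."""
    params = []
    for c in range(3):
        t_vals = [px[c] for row in target for px in row]
        r_vals = [px[c] for row in reference for px in row]
        t_std = pstdev(t_vals)
        scale = pstdev(r_vals) / t_std if t_std > 0 else 1.0
        params.append((mean(t_vals), mean(r_vals), scale))

    corrected = []
    for row in target:
        new_row = []
        for px in row:
            # Map each channel value and clip it to the assumed 8-bit range.
            new_row.append(tuple(
                min(255.0, max(0.0, (px[c] - t_mean) * scale + r_mean))
                for c, (t_mean, r_mean, scale) in enumerate(params)
            ))
        corrected.append(new_row)
    return corrected


# Usage: bring a color-shifted right view in line with the left view.
left_view = [[(100, 100, 100), (200, 200, 200)]]
right_view = [[(110, 100, 90), (130, 200, 190)]]
print(match_color(right_view, left_view))
```

Global matching like this cannot fix a cast confined to one part of the frame, such as a single corner. Local differences require local, for example disparity-aware, correction.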

For more examples of automatic color correction, see a separate article on stereo color distortion.

In total, we can currently detect about 20 types of artifacts in shot and converted stereo video, and most of them are also relevant for VR180. In the future, we plan to extend our methods for monitoring and improving VR video quality:

- adapting current quality control methods to VR

- developing and implementing artifact correction methods

- generating automatic video reports that predict the discomfort caused by viewing, in order to warn users and give content producers feedback that motivates them to pay more attention to quality

What are the prospects for the format as a whole?

Clearly, the current problems are the growing pains of a young technology, and they will be actively addressed. We can expect that:

- camera makers will bundle software with their cameras that fixes a significant part of the basic problems

- over time (if there is demand), professional software for correcting artifacts will appear

- YouTube will very likely start correcting some artifacts automatically when VR180 video is uploaded, much as it now automatically corrects shake and interlacing in good old 2D video

On a lighter note: smartphones with 3–4 rear cameras are now in fashion, and they dramatically improve photo quality.

Source: Samsung Galaxy A7 (2018) & Samsung Galaxy A9 (2018)

It is logical to expect that as VR180 grows in popularity, out-of-the-box support for it will be built into all major smartphone models.

Yes, the field of view will most likely be less than 180°.

Yes, the quality will most likely be worse than that of dedicated cameras.

Yes, shooting stereo video requires compressing large data streams (which today's smartphones are still poorly suited for).

Yes, the two wide-angle cameras will have to be placed farther apart.

But technically, there are no major obstacles today to supporting VR180 in flagship models. It is only a matter of the format becoming popular enough for demand to become massive and create an incentive for this.

And clearly, once top smartphones start supporting VR180 shooting, the number of such videos on YouTube will grow like an avalanche.

Exhibitions also showcase autostereoscopic smartphone and tablet displays, and their quality becomes more and more interesting as resolution increases. At the very least, it cannot be compared with what was widely available in 2010–2011 during the last wave. At the time of writing, RED Hydrogen One was announced: the first mass-produced smartphone with a new-generation 3D screen, so what professionals saw at exhibitions can now actually be bought. The process continues and, as screen resolution grows, it will obviously accelerate. The main obstacle is the lack of content.

Obviously, this “chicken and egg” problem will be solved soon.

General conclusions:

VR180 has the following significant advantages:

- Significantly greater 3D immersion in VR180 than in 360-video

- No artifacts from stitching together video from multiple cameras

- VR180 cameras are fairly cheap and will get cheaper

- Shooting VR180 is noticeably closer to shooting with a regular camera and much easier for non-professionals, so it will be relatively easy for a huge mass of fans to shoot their own VR180 video

- VR180 support can be expected in smartphones over time

- Cheap, high-quality autostereoscopic smartphone displays can be expected that will let you view VR180 without glasses or headsets (such solutions can already be seen at exhibitions, and only the lack of content keeps them from mass production)

VR180 cons:

- Cheap cameras currently shoot poor stereo that causes discomfort when watching

- Currently there are no available programs for post-processing VR180 footage and fixing its artifacts

Summary:


- The format's popularity runs into the "chicken and egg" problem: there must be enough devices for both shooting and viewing. At the same time, unlike 360-video, VR180 will technically soon be easy to shoot on any smartphone. And if the Google Pixel 5 XL supports VR180 out of the box, that will be only natural

- Bottom line: over the next 10 years, VR180 is bound to become popular!

Fewer headaches to everyone!

Yours, Konstantin Kozhemyakov and Dmitry Vatolin

P.S. Acknowledgments

I would like to sincerely thank:

- our colleagues from the video group, thanks to whom the algorithms presented above were created and the results computed,

- Google, for the artifacts in its VR180 promotional videos, and for promoting new formats no matter what,


Source: https://habr.com/ru/post/429414/
