How to test an application when interacting with an API using SoapUI

Many people use SoapUI to test both an API itself and the applications that access it. It is a fairly flexible tool that lets you, for example, import an API's swagger file and generate a Mock-service based on it.

Not long ago, at our company, I faced a similar task, but with non-trivial conditions. The starting point: we need to test a server application that receives a task as input and calls the API while executing it, with each subsequent request depending on the previous API response. The logic is embedded in the application. In other words, it is a kind of black box: a scenario has a single entry point, but many possible exits have to be tested.


Below is an example of a solution that was easy to integrate into our regression infrastructure and that leaves room to scale as the number and complexity of scenarios grow.

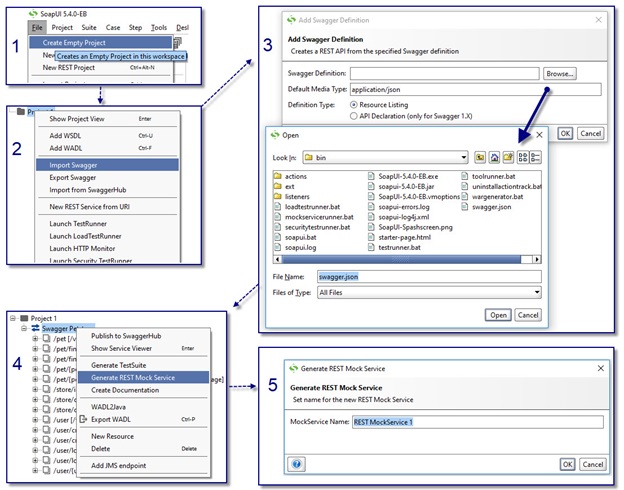

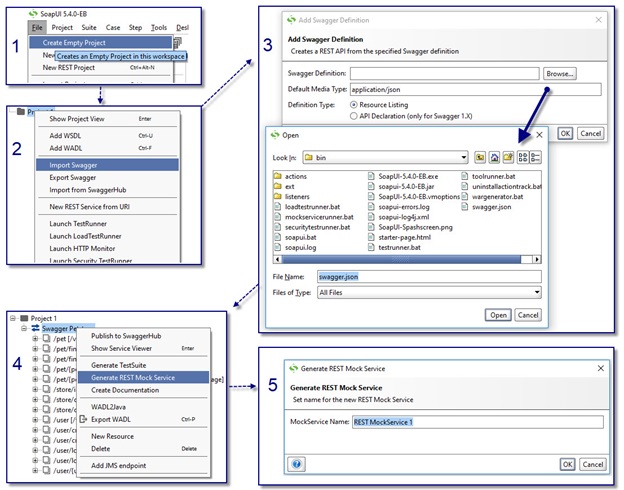

To start, create a Mock-service in SoapUI. This is done in a few clicks:

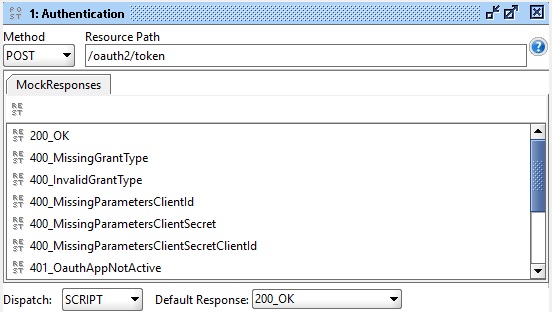

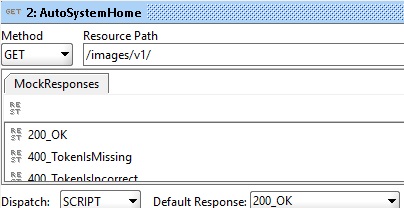

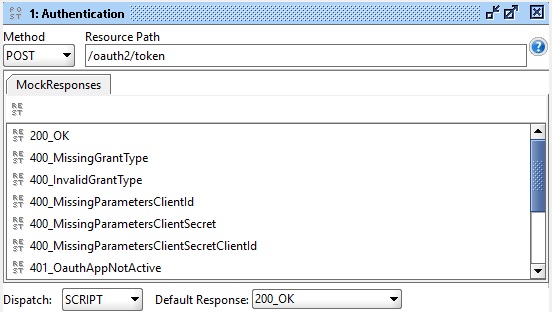

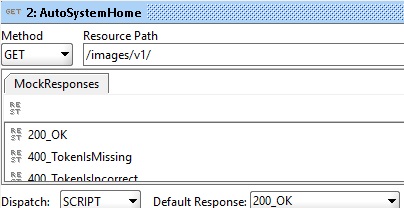

Next, we create stubs for the requests. Since a scenario has only one entry point (the task), there are two options:

- pass a scenario identifier in every request, and have each stub choose its response based on that identifier;

- define the list of responses for each stub in advance and store them in global variables before the test runs.

The first option is useful when the stubs must serve several scenarios at the same time. It requires the client application to be able to pass a specific identifier in every API request. In our case that was practically impossible, and we did not need to run several scenarios simultaneously, so we chose the second option.
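For comparison, the first option could look roughly like the sketch below. It is not what we used: the `scenarios` table, the `responseFor` helper, and the fallback to "200_OK" are all assumptions made for illustration, in plain Groovy without the SoapUI context.

```groovy
// Hypothetical table: scenario ID -> response name for each stub (illustrative values)
def scenarios = [
    "0": [Authentication: "200_OK", AutoSystemHome: "400_TokenIsMissing"],
    "1": [Authentication: "200_OK", AutoSystemHome: "200_OK"]
]

// Hypothetical helper: choose a stub's response from the ID carried by the request.
// Unknown IDs or stubs fall back to "200_OK" (an assumption of this sketch).
def responseFor = { String scenarioId, String stubName ->
    scenarios[scenarioId]?.get(stubName) ?: "200_OK"
}

println responseFor("0", "AutoSystemHome")
println responseFor("7", "Authentication")
```

Every stub would need the identifier in each request, which is exactly the requirement the client application could not meet in our case.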

So, to assign the list of stub responses, send the following request before running the test:

```
POST http://mockserver:8080/setscenario
Body: ScenarioId=0&Authentication=200_OK&AutoSystemHome=400_TokenIsMissing…
```

In the Mock-service, we add handling for the “SetScenario” request. It has only two responses: “200_OK” when the incoming request is processed correctly, and “400_BadRequest” when the request is malformed.

In the script, we distribute the response values for each stub into global variables:

```groovy
// Read the body of the incoming SetScenario request
def reqBody = mockRequest.getRequestContent()
def reqBodyParams = [:]
// Split "key=value&key=value" into a map; the limit of 2 keeps an empty value from raising an index error
reqBody.tokenize("&").each { param ->
    def keyAndValue = param.split("=", 2)
    reqBodyParams[keyAndValue[0]] = keyAndValue.size() > 1 ? keyAndValue[1] : null
}
// ScenarioId is a required parameter
if (reqBodyParams.containsKey('ScenarioId')) {
    // Store each value as a mock service property; "?:" supplies a default when the value is missing
    context.mockService.setPropertyValue("ScenarioId", reqBodyParams["ScenarioId"] ?: "0")
    context.mockService.setPropertyValue("Authentication", reqBodyParams["Authentication"] ?: "200_OK")
    context.mockService.setPropertyValue("AutoSystemHome", reqBodyParams["AutoSystemHome"] ?: "200_OK")
    // …
    return "200_OK"
} else {
    return "400_BadRequest"
}
```

The assigned values then appear as properties in the service settings window.
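The parsing and defaulting logic of the SetScenario script can also be tried outside SoapUI. The sketch below is a plain Groovy approximation: the `parseScenario` helper and the shape of its result are hypothetical, and it returns a map instead of calling `context.mockService.setPropertyValue`, which is only available inside a running mock service.

```groovy
// Hypothetical helper mirroring the SetScenario script, without the SoapUI context
def parseScenario = { String reqBody ->
    def params = [:]
    reqBody.tokenize("&").each { param ->
        // Limit of 2 so that "key=" yields an empty value instead of a missing array element
        def kv = param.split("=", 2)
        params[kv[0]] = kv.size() > 1 ? kv[1] : null
    }
    if (!params.containsKey("ScenarioId")) {
        return [status: "400_BadRequest", props: [:]]
    }
    // A missing or empty value falls back to a default, as with "?:" in the script
    def props = [
        ScenarioId    : params["ScenarioId"] ?: "0",
        Authentication: params["Authentication"] ?: "200_OK",
        AutoSystemHome: params["AutoSystemHome"] ?: "200_OK"
    ]
    return [status: "200_OK", props: props]
}

println parseScenario("ScenarioId=0&AutoSystemHome=400_TokenIsMissing")
println parseScenario("Authentication=200_OK")
```

The second call has no `ScenarioId`, so it takes the “400_BadRequest” branch.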

Thus, all the logic of the Mock-service lives in this one method, and each API method stub only needs a script that reads its response value from the global variable:

```groovy
// Stub script for the Authentication method
def Authentication = context.mockService.getPropertyValue("Authentication")
return "${Authentication}"
```

```groovy
// Stub script for the AutoSystemHome method
def AutoSystemHome = context.mockService.getPropertyValue("AutoSystemHome")
return "${AutoSystemHome}"
```

To add timeout scenarios or delayed responses, add a delay variable, for example:

```
POST http://mockserver:8080/setscenario
Body: ScenarioId=0&Delay=600&Authentication=200_OK&AutoSystemHome=400_TokenIsMissing…
```

And add the following to the stub script:

```groovy
// …
def Authentication = context.mockService.getPropertyValue("Authentication")
// Delay is in milliseconds; fall back to 0 if the property was not set
def Delay = (context.mockService.getPropertyValue("Delay") ?: "0").toInteger()
sleep(Delay)
return "${Authentication}"
```

If repeated requests need to be supported, we send a list of responses:

```
Body: Authentication=400_MissingParametersClientId;400_MissingParametersClientId;200_OK
```

In the stub script, split the list and remove the response that has just been sent, unless it is the last one:

```groovy
// The request body is URL-encoded, so the ";" separator arrives as "%3B".
// split() matches "%3B" literally; tokenize("%3B") would treat '%', '3' and 'B' as separate delimiters.
def Authentication = context.mockService.getPropertyValue("Authentication").split("%3B").toList()
def response = Authentication[0] // response to send for this call
if (Authentication.size() > 1) {
    // Not the last response: remove it so that the next call gets the following one
    Authentication.remove(0)
    context.mockService.setPropertyValue("Authentication", Authentication.join("%3B"))
}
return response
```

As a result, we have a simple Mock-service that is easy to move between test benches and environments, because the project is a single XML file. The service starts right after import with no extra configuration (apart from changing the server address and port, of course). It now lets us test the application regardless of how stable the API server is or whether it is temporarily unavailable.
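To make the repeated-request handling described above concrete, here is a plain Groovy sketch that replays several calls against a stored list. The `store` map stands in for the mock service properties and the `nextResponse` helper is hypothetical; inside SoapUI the same steps would go through `context.mockService.getPropertyValue` and `setPropertyValue`.

```groovy
// Stand-in for the mock service properties (an assumption of this sketch)
def store = [Authentication: "400_MissingParametersClientId%3B400_MissingParametersClientId%3B200_OK"]

// Hypothetical helper: return the current response and drop it unless it is the last one
def nextResponse = { String stubName ->
    def responses = store[stubName].split("%3B").toList()
    def current = responses[0]
    if (responses.size() > 1) {
        responses.remove(0)
        store[stubName] = responses.join("%3B")
    }
    return current
}

// Three calls consume the list in order
3.times { println nextResponse("Authentication") }
// A further call repeats the last response, because it is never removed
println nextResponse("Authentication")
```

Keeping the last element in place means the stub never runs out of responses, however many times the client retries.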

What we plan to add: use this solution to test applications before and during the development of the API itself, for example when its description already exists as a swagger file but the server is still being configured. The development cycles of an API and its client applications do not always coincide, and in that window the Mock-service can help a lot with testing the client application under development.

UPD: if you have questions and useful comments.

Source: https://habr.com/ru/post/429396/
