These new tricks can still outsmart deepfake videos, for now.

For several weeks, computer scientist Siwei Lyu watched the deepfake videos his team had created with a nagging sense of unease. These fake videos, produced with a machine learning algorithm, showed celebrities doing things they had never done. They struck him as strangely eerie, and not only because he knew they were fake. “They look wrong,” he recalls thinking, “but it’s very hard to pin down exactly what gives that impression.”

Then one day a childhood memory surfaced. Like many kids, he used to play staring contests with other children. “I always lost those games,” he says, “because when I looked at their unblinking faces, I felt very uncomfortable.”


He realized that the deepfakes were causing him the same kind of discomfort: he was losing staring contests to these film stars, because they did not open and close their eyes as often as real people do.

To find out why, Lyu, a professor at the University at Albany, and his team examined every step of DeepFake, the software that creates these videos.

Deepfake programs take in lots of images of a particular person (you, your ex-girlfriend, Kim Jong-un) showing them from different angles, with different facial expressions, saying different words. The algorithms learn what that person looks like and then use that knowledge to synthesize a video of the person doing something they never did. Porn. Stephen Colbert mouthing words actually spoken by John Oliver. A president warning about the dangers of fake videos.

These videos look convincing for a few seconds on a phone screen, but they are not perfect (yet). They carry telltale signs of forgery, such as eyes that stay strangely open all the time, which stem from flaws in the way they are made. Digging into the guts of DeepFake, Lyu realized that the images the program learned from included very few photos with closed eyes (after all, who keeps a selfie in which they are blinking?). “This becomes a bias,” he says. The neural network does not understand blinking. According to Lyu’s paper describing the phenomenon, programs can also miss other “physiological signals intrinsic to human beings,” such as breathing at a normal rate or having a pulse. And although this research focused on videos made with one particular piece of software, it is widely accepted that even a large set of photographs may not adequately capture a person’s physical presence, so any software trained on those images will be imperfect.
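The blinking cue can be illustrated with a minimal sketch. It is not the method from Lyu’s paper, which relies on a trained neural network; it only shows the underlying idea of measuring a blink rate. It assumes that a per-frame “eye openness” score in [0, 1] has already been extracted by some face-landmark tool (hypothetical here), counts a blink each time the score drops below a threshold, and flags clips whose blink rate falls below a cutoff. The names `count_blinks` and `looks_unblinking`, the 0.2 threshold, and the 6-blinks-per-minute cutoff are all illustrative assumptions.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float], closed_threshold: float = 0.2) -> int:
    """Count blinks as open-to-closed transitions in a per-frame openness signal.

    `openness` is a hypothetical per-frame score in [0, 1] (1.0 = fully open),
    assumed to come from an external face-landmark detector.
    """
    blinks = 0
    eyes_closed = False
    for value in openness:
        if value < closed_threshold and not eyes_closed:
            blinks += 1          # eye has just closed: a new blink starts
            eyes_closed = True
        elif value >= closed_threshold:
            eyes_closed = False  # eye reopened: ready to count the next blink
    return blinks


def looks_unblinking(openness: Sequence[float], fps: float,
                     min_blinks_per_minute: float = 6.0,
                     closed_threshold: float = 0.2) -> bool:
    """Flag a clip whose blink rate is suspiciously low.

    `min_blinks_per_minute` is an illustrative cutoff, not a value from Lyu's work.
    """
    if fps <= 0 or len(openness) == 0:
        raise ValueError("need a positive fps and at least one frame")
    minutes = len(openness) / fps / 60.0
    rate = count_blinks(openness, closed_threshold) / minutes
    return rate < min_blinks_per_minute


# Usage with made-up signals: one minute of video at 30 frames per second.
fps = 30
real = [0.1 if i % 90 < 4 else 0.9 for i in range(fps * 60)]  # eyes close every 3 s
fake = [0.9] * (fps * 60)                                      # eyes never close
print(count_blinks(real), looks_unblinking(real, fps))  # 20 False
print(count_blinks(fake), looks_unblinking(fake, fps))  # 0 True
```

A rule this simple is exactly what the arms race described below defeats: once training data includes closed eyes, the blink rate looks normal.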

The blinking discovery exposed many fake videos. But a few weeks after Lyu and his team posted a draft of their paper online, they received an anonymous email with links to newer fake videos on YouTube in which the stars opened and closed their eyes more normally. The fakers had evolved.

That was to be expected. As Lyu noted in The Conversation, “you can add blinking to deepfake videos by including images with closed eyes in the training set, or by using video sequences for training.” Once you know what gives a fake away, avoiding it is “only” a technical problem. That means deepfakes are locked into an arms race between their creators and their detectors. Research like Lyu’s can, at best, make life harder for the fakers. “We are trying to raise the bar,” he says. “We want to make the process more difficult and more time-consuming.”

Because right now it is very easy. Download the software, google some photos of celebrities, and feed them in. The program digests them and learns from them. And although it is not yet fully autonomous, with a little tending it gestates and gives birth to something new and fairly realistic-looking.

“It’s very blurry,” says Lyu. He does not mean the images. “It’s the line between what’s true and what’s fake,” he clarifies.

This is both alarming and unsurprising to anyone who has been alive and online lately. But it worries the military and intelligence agencies in particular. That is one reason Lyu’s research, like some other work in the field, is funded by a DARPA program called MediFor (Media Forensics).

DARPA launched MediFor in 2016, after noticing that fakers were becoming more active. The project aims to build an automated system that examines three levels of tells and produces an overall “integrity score” for an image or video. The first level looks for dirty digital fingerprints, such as noise from a particular camera model or compression artifacts. The second level is physical: perhaps the lighting on a face is wrong, or a reflection does not look the way it should given where the lamp is. The third is semantic: comparing the data with verified real-world information. If, for example, a video of a soccer game is claimed to have been shot in Central Park at 2 p.m. on Tuesday, October 9, 2018, does the state of the sky match the weather archive? Combine all three levels and you get an estimate of the data’s integrity. DARPA hopes that by the end of MediFor there will be prototype systems ready for large-scale testing.
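The article does not say how MediFor merges its three levels, so the following is only a hypothetical illustration of the idea. It assumes each level yields a score in [0, 1] (1.0 meaning no sign of tampering), combines them with a weighted average, and also reports the weakest level so that one strong red flag is not averaged away. The semantic level is reduced to a toy comparison of the sky seen in the footage with an archived weather label. The class `LevelScores`, the functions `semantic_score` and `integrity_score`, the weights, and the example labels are all invented for this sketch.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class LevelScores:
    """Hypothetical per-level scores in [0, 1]; 1.0 means no sign of tampering."""
    digital: float   # e.g. consistency of camera noise and compression artifacts
    physical: float  # e.g. lighting and reflections consistent with the scene
    semantic: float  # e.g. agreement with external records such as weather archives


def semantic_score(observed_sky: str, archived_sky: str) -> float:
    """Toy semantic check: does the sky in the footage match the archive label?"""
    same = observed_sky.strip().lower() == archived_sky.strip().lower()
    return 1.0 if same else 0.0


def integrity_score(s: LevelScores,
                    weights: Sequence[float] = (0.3, 0.3, 0.4)) -> Tuple[float, float]:
    """Return (weighted average, weakest level). The weights are assumptions."""
    levels = (s.digital, s.physical, s.semantic)
    if any(not 0.0 <= v <= 1.0 for v in levels):
        raise ValueError("scores must lie in [0, 1]")
    average = sum(w * v for w, v in zip(weights, levels)) / sum(weights)
    return average, min(levels)


# Usage with invented values: the footage shows a sunny sky, while the
# (hypothetical) archive entry for the claimed time and place says "overcast".
scores = LevelScores(digital=0.9, physical=0.8,
                     semantic=semantic_score("sunny", "overcast"))
average, weakest = integrity_score(scores)
print(round(average, 2), weakest)  # 0.51 0.0
```

Reporting the minimum alongside the average is one design choice among many; a real system could just as well learn the combination from labeled data.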

But the clock is ticking (or is that just a repetitive sound generated by an AI trained on timekeeping data?). “In a few years, you may run into things like the fabrication of events,” says DARPA program manager Matt Turek. “Not just a single image or an edited video, but multiple images or videos trying to convey a convincing message.”

At Los Alamos National Laboratory, computer scientist Juston Moore pictures a possible future more vividly. Suppose someone tells an algorithm they want a video of Moore robbing a pharmacy, splices it into that store’s security footage, and sends him to jail. In other words, he worries that if standards for verifying evidence do not (or cannot) evolve alongside the fakes, it will be easy to frame people. And if courts cannot rely on visual data, genuine evidence may end up being dismissed.

Taken to its logical conclusion, this means that seeing something with your own eyes will be worth no more than hearing about it secondhand. “It may come to the point where we don’t trust any photographic evidence,” he says, “and that’s not a world I want to live in.”

Such a world is not so far-fetched. And the problem, according to Moore, goes well beyond swapping faces. “Algorithms can create images of faces that don’t belong to real people, and they can transform images in strange ways, turning a horse into a zebra,” says Moore. They can erase parts of images and remove foreground objects from videos.

Maybe we will not be able to detect fakes faster than they are made. But maybe we will, and that possibility is what drives Moore’s team to study digital forensics methods. The Los Alamos program, which combines expertise in cyber systems, information systems, theoretical biology, and biophysics, is about a year younger than DARPA’s. One approach focuses on “compressibility”: cases where an image contains less information than it appears to. “Basically, we start from the idea that all AI image generators have a limited set of things they can create,” says Moore. “So even if an image looks quite complex to me, you can find some repetitive structure in it.” When pixels are recycled, there is less there than meets the eye.
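The compressibility idea can be illustrated with a general-purpose compressor. This is a stand-in, not the Los Alamos technique: it uses the zlib compression ratio of raw pixel bytes as a rough proxy for how much repeated structure an image contains, where a lower ratio means more redundancy. The two “images” are invented byte arrays (random noise versus a small tile repeated over and over), and the helpers `compression_ratio` and `tiled_image` are hypothetical. Any threshold for labeling an image as synthetic would have to be calibrated on real data; none is proposed here.

```python
import random
import zlib


def compression_ratio(pixels: bytes, level: int = 9) -> float:
    """Compressed size divided by raw size; lower means more repeated structure."""
    if not pixels:
        raise ValueError("empty image")
    return len(zlib.compress(pixels, level)) / len(pixels)


def tiled_image(width: int, height: int, tile: bytes) -> bytes:
    """Hypothetical grayscale image built by repeating one small tile of pixels."""
    row = (tile * (width // len(tile) + 1))[:width]
    return row * height


rng = random.Random(0)
noisy = bytes(rng.randrange(256) for _ in range(64 * 64))  # almost no structure
tiled = tiled_image(64, 64, bytes(rng.randrange(256) for _ in range(8)))  # heavy repetition

print(f"noisy: {compression_ratio(noisy):.2f}")  # about 1.0: nothing to squeeze out
print(f"tiled: {compression_ratio(tiled):.2f}")  # far below 1.0
```

Real generated images are nowhere near this repetitive, which is why research systems look for subtler, learned notions of redundancy rather than raw zlib output.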

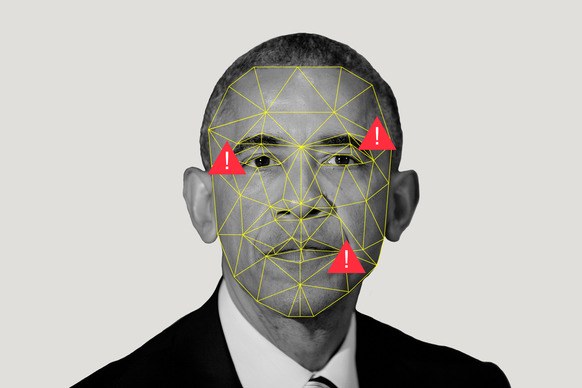

They also use sparse coding algorithms to play a kind of matching game. Suppose there are two collections: a pile of real images and a pile of AI-generated ones. The algorithm studies both, building what Moore calls a “dictionary of visual elements” that records what the synthetic pictures have in common and what the real ones have in common. If a friend of Moore’s retweets a picture of Obama and Moore suspects it was made by an AI, he can run it through the program and see which of the two dictionaries it is closer to.
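A toy version of this matching game is sketched below, assuming the two dictionaries have already been learned (dictionary learning itself is omitted). It encodes a signal greedily with matching pursuit, a basic sparse coding method, against each dictionary and picks the dictionary that leaves the smaller reconstruction error. The four-dimensional “atoms” and “patches” are invented for illustration; real systems work on image patches with far larger dictionaries. The function names `residual_error` and `closer_dictionary` are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def _normalize(v: Sequence[float]) -> Vector:
    """Scale an atom to unit length so correlations are comparable."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("atoms must be non-zero")
    return [x / norm for x in v]


def residual_error(signal: Sequence[float], dictionary: Sequence[Sequence[float]],
                   n_atoms: int = 2) -> float:
    """Matching pursuit: n_atoms times, subtract the projection onto the atom
    most correlated with the residual; return the squared norm left over."""
    atoms = [_normalize(a) for a in dictionary]
    residual = list(signal)
    for _ in range(n_atoms):
        coeffs = [sum(r * a for r, a in zip(residual, atom)) for atom in atoms]
        best = max(range(len(atoms)), key=lambda i: abs(coeffs[i]))
        residual = [r - coeffs[best] * a for r, a in zip(residual, atoms[best])]
    return sum(r * r for r in residual)


def closer_dictionary(signal: Sequence[float],
                      dictionaries: Dict[str, Sequence[Sequence[float]]],
                      n_atoms: int = 2) -> str:
    """Name of the dictionary that reconstructs the signal with the least error."""
    errors = {name: residual_error(signal, d, n_atoms)
              for name, d in dictionaries.items()}
    return min(errors, key=errors.get)


# Usage with invented dictionaries and patches.
dictionaries = {
    "real": [[1, 0, 0, 0], [0, 1, 0, 0]],
    "synthetic": [[0, 0, 1, 0], [0, 0, 0, 1]],
}
print(closer_dictionary([0.1, 0.0, 0.9, 0.5], dictionaries))  # synthetic
print(closer_dictionary([0.8, 0.6, 0.05, 0.0], dictionaries))  # real
```

Because each dictionary only captures patterns typical of its own collection, a patch built from “synthetic” building blocks is reconstructed poorly by the “real” dictionary, and that gap is the signal the classifier uses.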

Los Alamos, home to one of the world’s most powerful supercomputers, is investing in this program not only because someone might frame Moore with a fake robbery. The laboratory’s mission is “to solve national security challenges through scientific excellence.” Its core task is nuclear security: making sure bombs do not explode when they should not, and do explode when they should (please, no), as well as supporting nonproliferation. All of this requires broad expertise in machine learning, because, as Moore puts it, it helps “to draw big conclusions from small data sets.”

But beyond all that, institutions like Los Alamos need to be able to believe their own eyes, or to know when not to. What if you see satellite images of a country mobilizing or testing nuclear weapons? What if someone forges sensor readings?

That is the frightening future that Moore’s and Lyu’s work should ideally help prevent. But in a world where that battle is lost, seeing is not believing, and seemingly objective measurements may be fake. Everything digital is open to doubt.

But maybe “doubt” is the wrong word. Many people will take fakes at face value (remember the shark photo in Houston?), especially if the content matches what they already believe. “People will believe whatever they are inclined to believe,” says Moore.

This is more likely among the general public watching the news than in national security circles. To help stop misinformation from spreading among the rest of us, DARPA is offering to cooperate with social networks so that users can tell when, say, a video of Kim Jong-un dancing the macarena has low credibility. Turek points out that social networks can spread a story debunking a video just as quickly as the video itself.

But will they? Debunking is a hassle (although not as ineffective as rumor has it). And people have to actually engage with the facts before they can change their minds about fiction.

But even if no one can change the public’s mind about whether a video is genuine, it matters that the people making political and legal decisions (about who is moving missiles or killing people) try to use machines to tell waking reality apart from an AI dream.

Source: https://habr.com/ru/post/429192/
