Stream analytics: quick start with SAS ESP

Applying analytical algorithms to data streams is now one of the most pressing problems in building analytical systems. Many high-precision predictive models, for example those developed on sensor readings from industrial installations, could prevent serious accidents at work, but to do so they must run on end devices ("edge devices"), where sensor readings arrive in real time. SAS Event Stream Processing is designed to solve this problem and move analytics online. In this post I want to share my experience of setting it up, using an applied task as the example: analyzing images from video cameras.

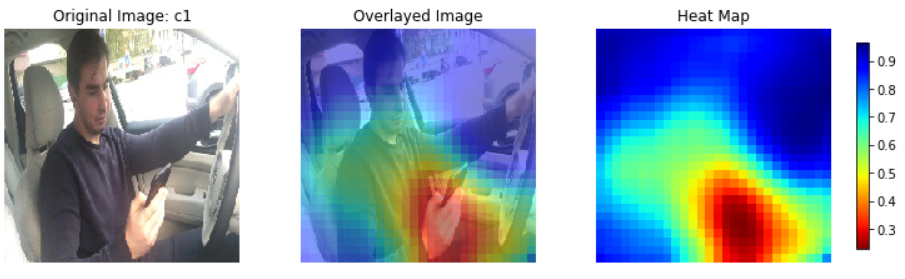

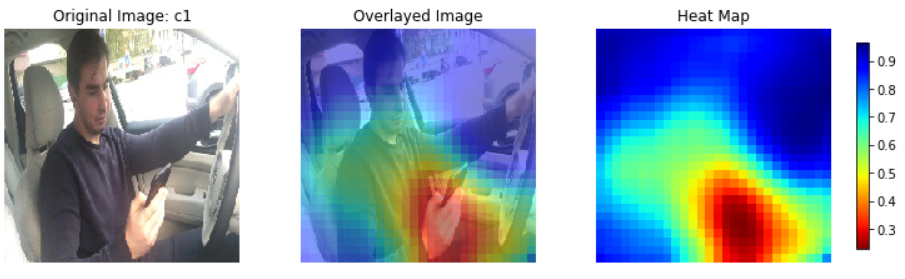

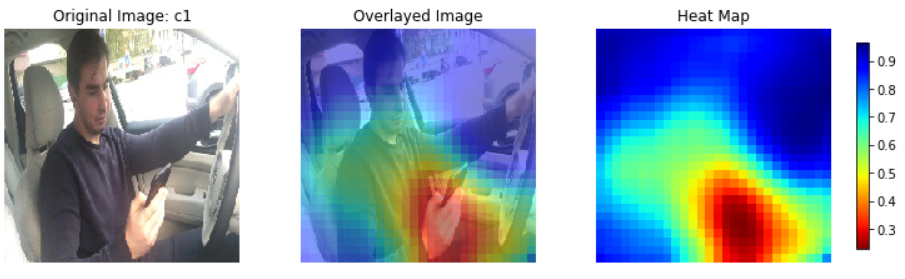

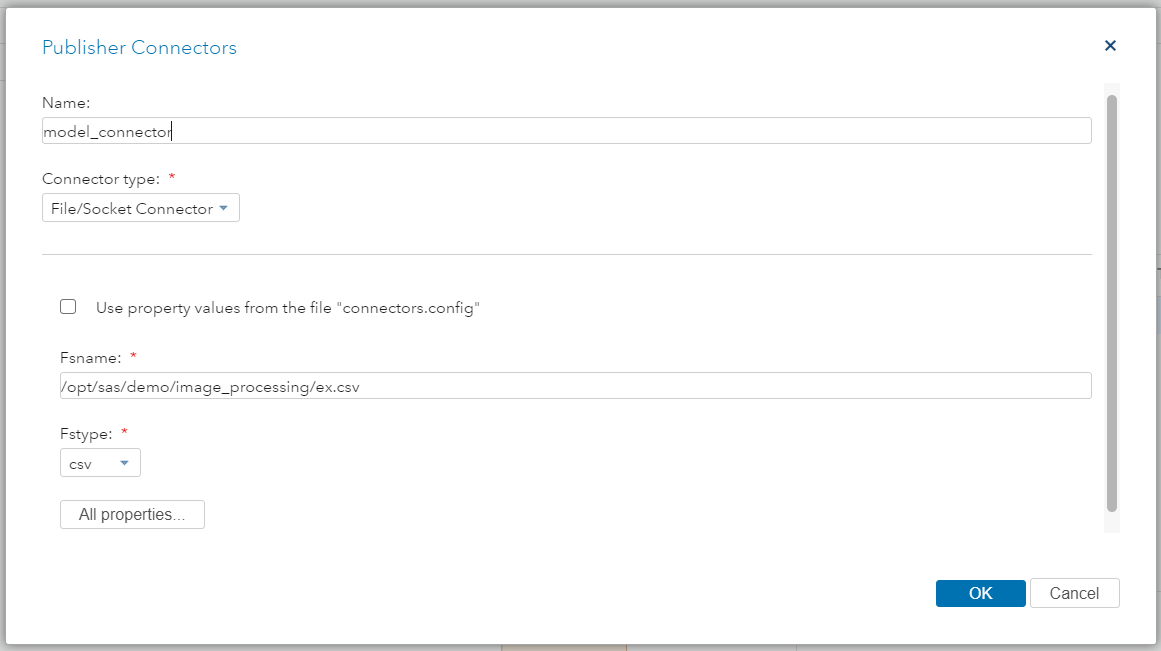

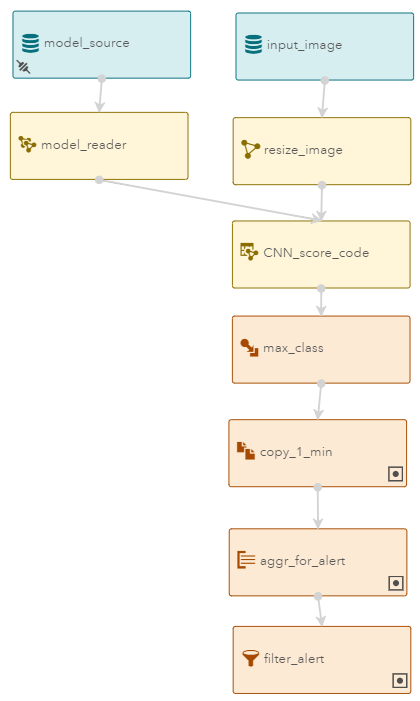

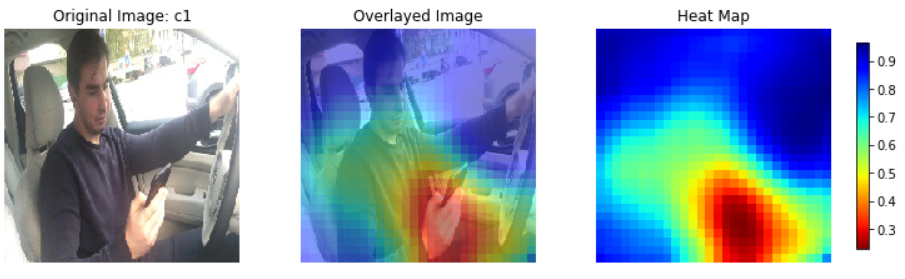

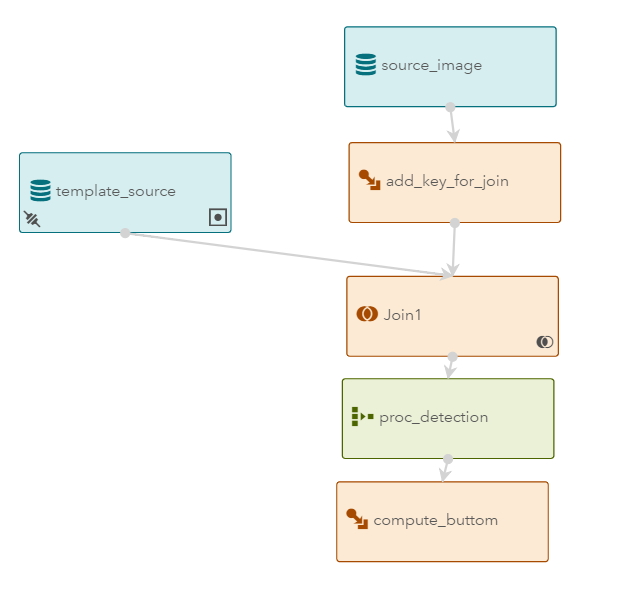

Technology

For many years SAS has been developing its own stream processing solution, SAS Event Stream Processing (hereinafter ESP). The developers' main goal was high performance, orders of magnitude higher than existing solutions on the market. To that end, ESP completely abandons storing intermediate calculations and indexes on disk. All stream transformations are performed in RAM, and events are accounted for one by one.

Working with memory in SAS ESP. The event repository in RAM stores intermediate results and, thanks to additional indexes, is not blocked by parallel calls from internal or external processes.

The engine can handle large data streams, several million events per second, while maintaining low latency, which is why it quickly found its place in solutions for online marketing and for countering bank fraud. There it acts as an intelligent filter of client operations, instantly detecting nonstandard account activity and extracting fraudulent transactions from the data stream.

Still, that is a secondary function of ESP. The engine is designed as a mechanism for continuously applying analytical models (SAS, C, Python, and others) to the data stream.

The principle of streaming event handling.

As a visual example for the SAS Viya Business Breakfast forum, we at SAS decided to set up a demonstration stand based on an image analysis task. Here is how we set it up and what we encountered in the process.

Video stream analysis

We had at our disposal an analytical model built by colleagues from the in-depth analytics department on the SAS Viya platform. Given an image of the driver in the car's interior, the algorithm (in this case, a trained convolutional neural network) classified the driver's behavior into classes: “normal driving”, “distracted by a conversation with the passenger”, “talking on the phone”, “writes SMS”, etc.

Example of the model: the input image is assigned to the class “writes SMS”, based on the part of the image that contains the hand with the phone.

The question was how to run this algorithm in real time so that we could respond to unwanted behavior. In our scenario, camera images are already being delivered online as files to a network directory on disk.

All that remained was to connect to the data, convert the images to the required format, apply the model, and, based on the probability of belonging to a behavior class, display real-time warnings on online dashboards. It turned out that all of this can be configured in the SAS ESP GUI, without a single line of program code (!).

SAS Event Stream Processing

The project for detecting dangerous situations in the SAS ESP Studio GUI.

In the ESP graphical editor, we added the following data stream transformations:

- As input, we have two nodes of type source: model_source and input_image. In model_source we publish our classification model. To do this, we set up a file connector that reads a command from a text file containing three parameters: the name of the operation, the model format, and the physical path to the binary file. ESP lets every data source be either static or streaming, so we can publish new model-loading commands to this node at any time. This is convenient in production: we do not need to stop the project to update algorithm versions.

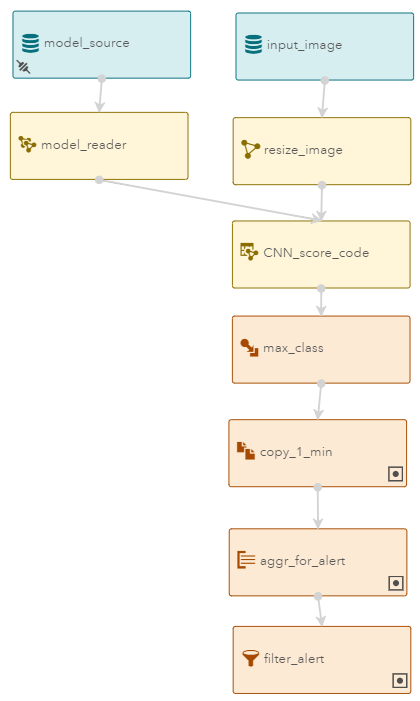

Setting up command publication in model_source

Content of the input model file:

    I,N,1,action,load
    I,N,2,type,astore
    I,N,3,reference,/opt/sas/demo/image_processing/d.astore

- The second source, input_image, receives images from the camera. To publish data, we use a regular adapter, a program that publishes data into an ESP source. In this case we used the file adapter, but we could also connect to the camera directly, for example via the UVC adapter that comes with the solution.

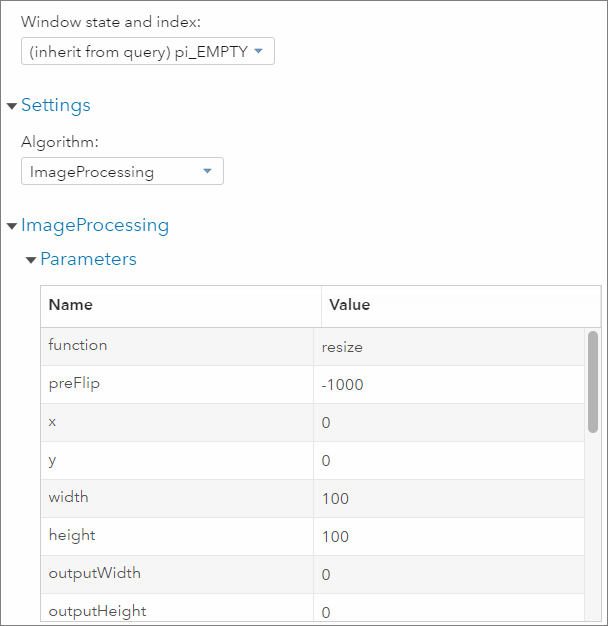

Setting up a UVC connector for common camera types.

- Images may arrive in a format different from the one the model was trained on, which can affect classification quality. Therefore, right after input_image we add the resize_image node and set the required format in its settings; in this case, resizing to a 100 × 100 pixel square.

Configuring image processing

- Model execution is configured in CNN_score_code. The interface automatically pulls the model's output attributes from its metadata: P__label_c0, P__label_c1, P__label_c2, and P__label_c9, the probabilities of each behavior class. Next, in the max_class node, we define a new calculated field that selects the class with the maximum probability.

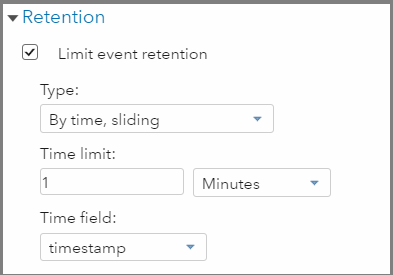

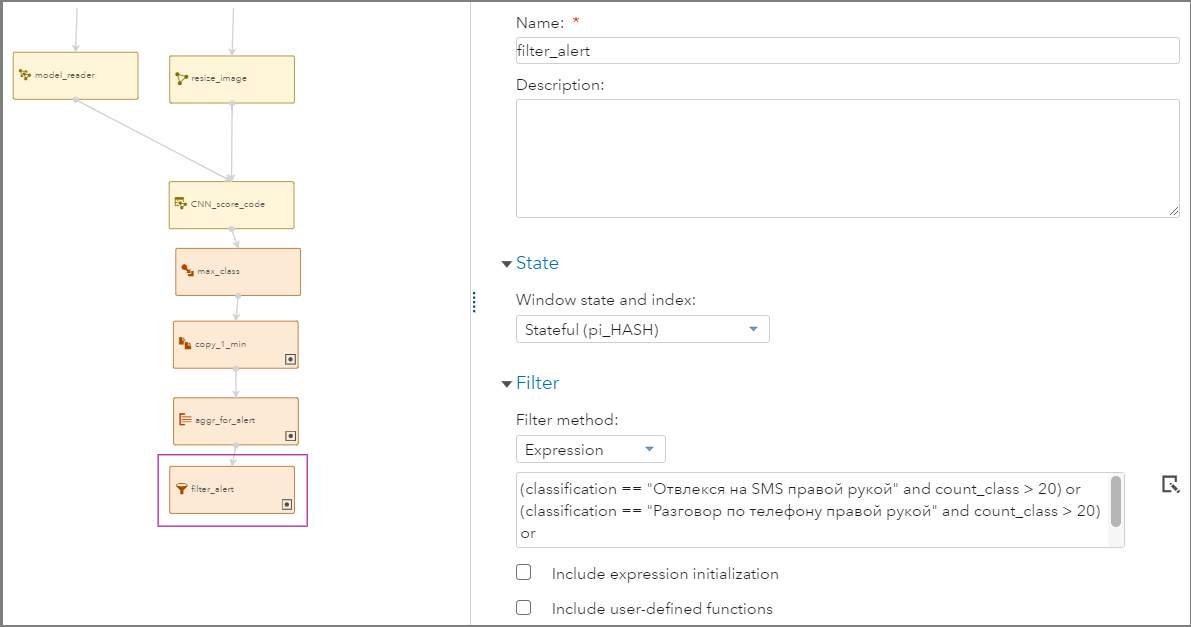

- Now, once the project is started, we receive a real-time classification for each input image. In our scenario, a careless driver is periodically distracted from the road. But it is important to distinguish cases where the driver was distracted for a second from cases of prolonged dangerous driving. To do this, we add three nodes: storage of events for the last minute (copy_1_min), aggregation by behavior class (aggr_for_alert), and a filter for “dangerous driving” events (filter_alert).

Configuring event retention: the last 1 minute.

Configuring the event count for each behavior class.

Setting the filtering rules.
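The logic of the last steps (max_class, copy_1_min, aggr_for_alert, filter_alert) can be pictured in plain Python. This is only a sketch of the idea, not ESP code: in the project itself everything is configured in the GUI. The class name DangerousDrivingDetector, the threshold of 5 events, and the assumption that P__label_c0 stands for “normal driving” are hypothetical and chosen purely for illustration.

```python
from collections import Counter, deque

WINDOW_SECONDS = 60           # copy_1_min keeps the last minute of events
ALERT_THRESHOLD = 5           # hypothetical: events of one class needed for an alert
SAFE_CLASS = "P__label_c0"    # assumption: c0 stands for "normal driving"


def max_class(probabilities):
    """Return the label with the highest probability (the max_class step)."""
    return max(probabilities, key=probabilities.get)


class DangerousDrivingDetector:
    """Keeps one minute of classified events and flags persistent unsafe classes."""

    def __init__(self, window_seconds=WINDOW_SECONDS, threshold=ALERT_THRESHOLD):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.events = deque()  # (timestamp, label) pairs, oldest first

    def push(self, timestamp, probabilities):
        """Add one scored frame and return the classes that currently raise an alert."""
        self.events.append((timestamp, max_class(probabilities)))
        # Retention: drop events that fell out of the window (copy_1_min).
        while self.events and self.events[0][0] <= timestamp - self.window_seconds:
            self.events.popleft()
        # Aggregation: count events per behavior class (aggr_for_alert).
        counts = Counter(label for _, label in self.events)
        # Filter: keep only unsafe classes seen often enough (filter_alert).
        return sorted(
            label for label, n in counts.items()
            if label != SAFE_CLASS and n >= self.threshold
        )
```

With a threshold of 3, a single distracted frame produces no alert, while three frames of the same unsafe class within a minute do. The alert disappears again once those events leave the window.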

Indices

It is important to note that each transformation in ESP has a “State” setting. It determines whether the transformation is streaming, that is, when an input event is processed, the result is immediately passed further along the diagram and removed from this node's memory, or whether the node stores the result of processing each event and builds an index on it so that it can be accessed at any time. For example, an aggregation window always stores state and is continuously recalculated from the input stream.
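The difference between the two modes can be illustrated with a small Python sketch. It does not use any ESP API; the function and class names (stateless_tag, StatefulCount) and the event layout are hypothetical and only contrast a transformation that forgets each event with one that keeps an indexed state.

```python
def stateless_tag(event):
    """Stateless transform: the output depends only on the current event,
    and nothing is kept in memory after it is passed on."""
    return {**event, "label_upper": event["label"].upper()}


class StatefulCount:
    """Stateful aggregation: retains per-key state that is updated
    incrementally and can be looked up at any time."""

    def __init__(self):
        self.index = {}  # key -> running count

    def update(self, event):
        """Fold one event into the state and return the updated aggregate."""
        key = event["label"]
        self.index[key] = self.index.get(key, 0) + 1
        return key, self.index[key]
```

The stateful variant costs memory proportional to the number of keys, which is the trade-off this setting controls.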

Launch

Let's go back to the project. All that remains is to run it.

For convenient viewing of data streams, ESP ships with ESP Stream Viewer, an online report designer. In setup mode, we see all the projects running on the servers, and we can connect to them and choose a convenient visualization.

Online dashboards in SAS ESP Stream Viewer

That's all. We were able to automate a real-time response to the video signal. Here we set up online monitoring, but with the same adapters and connectors we could send a message for every detected violation or issue a control action to an external system.

Or Python?

Technically, the case was complete, but for a demonstration at the forum it did not seem interactive enough. Showing it in real time was inconvenient and quite unsafe (!), so the photos were taken in advance with the car in a parking lot.

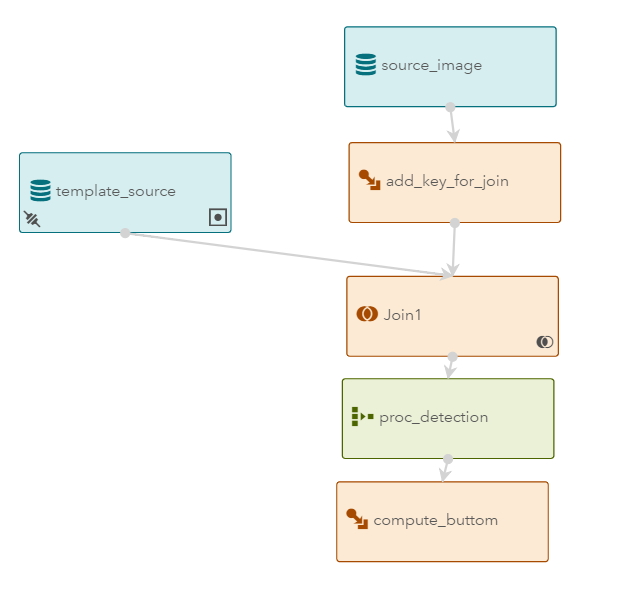

SAS ESP supports streaming models written in Python. To confirm this, we set up image analysis, namely searching for objects in an image, using the OpenCV library.

ESP model that searches for a construction helmet in an input stream of images

In the ESP diagram, we have two data sources: frames from a laptop camera and a photo of the object we are looking for. In this case, we determine whether a potential worker at a construction site has forgotten the safety rules: is he wearing a helmet? On the proc_detection node, we execute the following Python code:

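    def compute_total(Image, image_template):
        "Output: score_point"
        import base64
        import io

        import cv2
        from imageio import imread

        MIN_MATCH_COUNT = 10  # defined in the original listing, not used in this function
        DIST_COEFF = 0.55  # ratio-test coefficient

        # Decode the base64-encoded photo of the object we are looking for.
        img_big = imread(io.BytesIO(base64.b64decode(image_template)))
        # Initiate SIFT detector (OpenCV 4.4+ also exposes it as cv2.SIFT_create()).
        sift = cv2.xfeatures2d.SIFT_create()
        matcher = cv2.BFMatcher()  # BFMatcher with default params
        kp_big, des_big = sift.detectAndCompute(img_big, None)

        # Decode the base64-encoded camera frame.
        img_tpl = imread(io.BytesIO(base64.b64decode(Image)))
        kp_tpl, des_tpl = sift.detectAndCompute(img_tpl, None)

        # Without descriptors on either side there is nothing to match.
        if des_big is None or des_tpl is None:
            return 0

        matches = matcher.knnMatch(des_tpl, des_big, k=2)
        # Keep matches whose best distance is clearly below the second best.
        good = []
        for pair in matches:
            if len(pair) == 2 and pair[0].distance < pair[1].distance * DIST_COEFF:
                good.append(pair[0])

        score_point = len(good)
        return score_point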

Detecting the presence of a helmet in the webcam image at the SAS Viya Business Breakfast forum (colleague maxxts at the test stand)
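The core of the listing is a ratio test: a match counts as good only when its best distance is noticeably smaller than the second-best one. The idea can be tried without OpenCV on plain pairs of distances. In the sketch below, count_good_matches and helmet_detected are hypothetical helper names, and applying MIN_MATCH_COUNT as a decision threshold is an assumption about how the score might be used downstream, not something the listing itself does.

```python
DIST_COEFF = 0.55      # the coefficient that appears in the listing
MIN_MATCH_COUNT = 10   # assumption: minimum score treated as "object found"


def count_good_matches(distance_pairs, ratio=DIST_COEFF):
    """Count (best, second_best) pairs that pass the ratio test."""
    return sum(1 for best, second in distance_pairs if best < second * ratio)


def helmet_detected(score, min_match_count=MIN_MATCH_COUNT):
    """Hypothetical decision rule on top of the score."""
    return score >= min_match_count


pairs = [(10.0, 100.0), (50.0, 60.0), (5.0, 10.0)]
score = count_good_matches(pairs)
```

Here the first and third pairs pass the test, while the second is ambiguous (50 is not below 60 × 0.55) and is discarded.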

Machine learning

As for the further development of stream processing, the emphasis will likely shift to training analytical models on the stream. That is, unlike in the previous examples, models will not only be executed but also trained inside SAS ESP. This requires an additional data stream on which to perform the train operation of the selected algorithm (to begin with, for example, simple k-means clustering). A machine with ESP on board will then be able to analyze the situation from newly connected sensors, making it possible to quickly enable and automate new devices within the Industry 4.0 concept.

Please write in the comments about the stream analytics tasks you have faced, and of course I will be happy to answer questions about SAS Event Stream Processing.
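To give a feel for what training on the stream means, here is a minimal sketch of sequential (online) k-means in plain Python, where each incoming point nudges its nearest centroid. It is not the SAS ESP implementation; the class StreamingKMeans, its train and score methods, and the choice of initial centroids are assumptions made for illustration.

```python
def nearest(centroids, point):
    """Index of the centroid closest to the point (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: sum((c - p) ** 2 for c, p in zip(centroids[i], point)),
    )


class StreamingKMeans:
    """Sequential k-means: centroids are running means of their assigned points."""

    def __init__(self, initial_centroids):
        self.centroids = [list(c) for c in initial_centroids]
        self.counts = [0] * len(self.centroids)

    def train(self, point):
        """Consume one event from the training stream and update one centroid."""
        i = nearest(self.centroids, point)
        self.counts[i] += 1
        rate = 1.0 / self.counts[i]  # the running mean needs a shrinking step
        self.centroids[i] = [c + rate * (p - c) for c, p in zip(self.centroids[i], point)]
        return i

    def score(self, point):
        """Assign a point from the scoring stream to a cluster without learning."""
        return nearest(self.centroids, point)
```

The split into train and score mirrors the idea of two streams: one that updates the model and one that only applies it.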

Source: https://habr.com/ru/post/429176/
