Impostor syndrome affects men no less than women... and other findings from 10,000 technical interviews

Modern technical interviewing is a rite of passage for software engineers, one that (hopefully!) precedes landing a great job. It is also a huge source of stress and endless questions for candidates. A simple search for “how to prepare for a technical interview” turns up millions of Medium posts, programming blogs, Quora discussions, and even entire books.

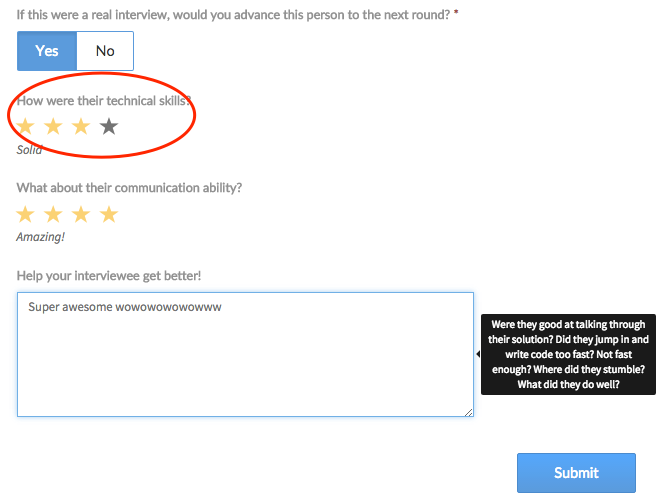

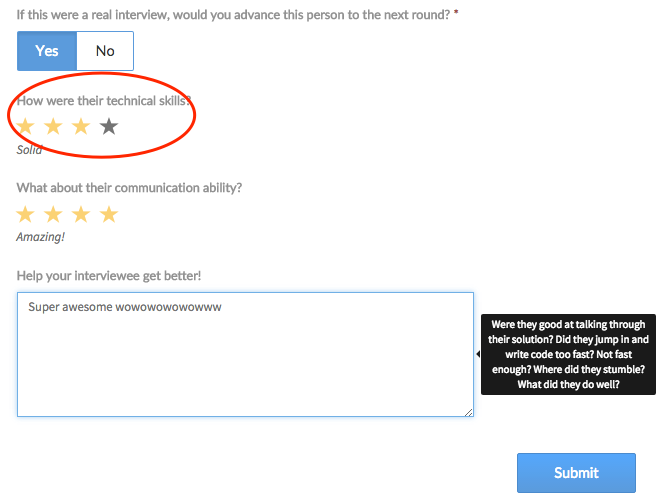

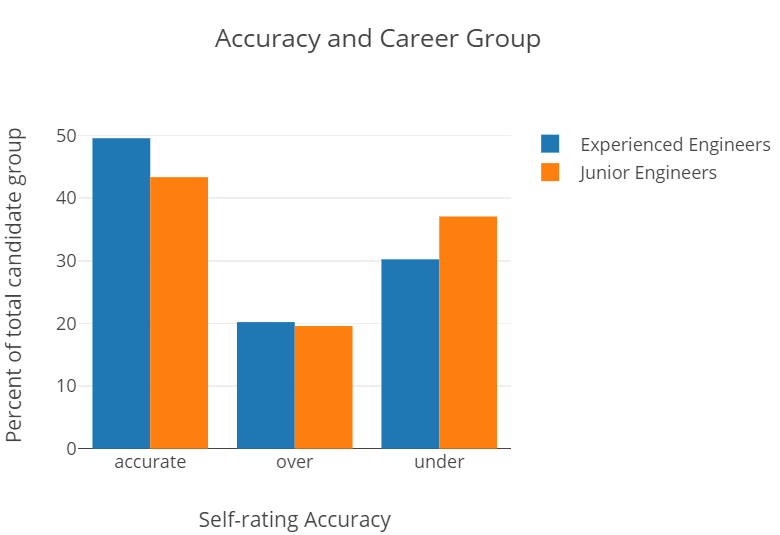

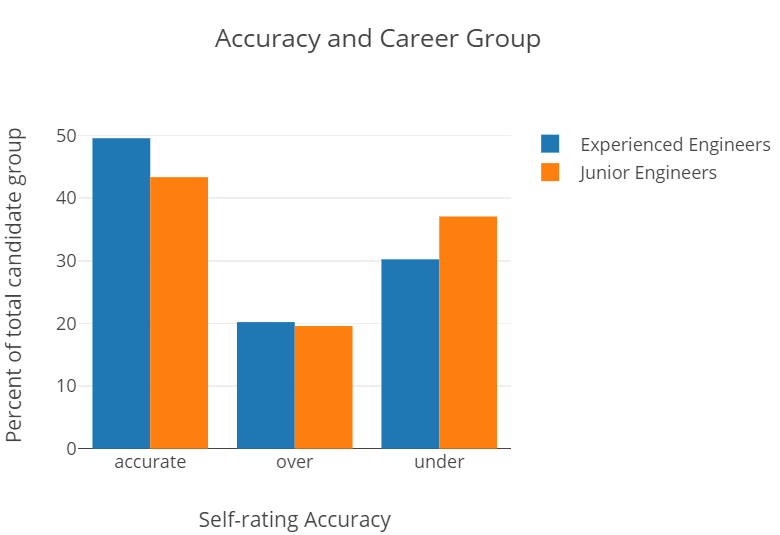

Despite all this information, people still struggle to tell how well they are doing in interviews. In the previous article, we found that a surprisingly large number of interviewing.io users underestimate their abilities, which increases the likelihood of a negative interview outcome. Now we have much more data (more than 10,000 interviews with real software engineers), so we want to dig deeper: what causes candidates to underestimate themselves?

Scientific studies have identified some general factors that get in the way of accurate self-assessment: for example, people do not always judge accurately, or even remember, their performance on complex cognitive tasks such as writing code 1. Technical interviews are especially hard to assess for candidates without much experience of questions that have no single correct answer. Since many companies do not discuss results with candidates after the interview, many never find out what they did well or what could have been better 2 3. In fact, lifting the curtain of secrecy around job interviews across the industry was one of the main reasons for creating interviewing.io!

Until now, there has been little data on how people feel after real interviews at a range of tech companies. So we collected this data at scale, which let us test some interesting theories about developers and their confidence in their programming skills.


One of the main factors that interested us is impostor syndrome. Many programmers are known to suffer from it 4. Many wonder whether they really measure up to their colleagues, and they write off convincing evidence of their competence as a fluke. Impostor syndrome makes you question how far you can trust positive feedback on your performance and how much of your success comes from your own efforts rather than luck. We were particularly interested in the extent to which women suffer from it. Many studies show that candidates from underrepresented groups have a weaker sense of belonging to the community, which feeds impostor syndrome 5, and this may distort how they assess their own performance in an interview.

How the study was set up

Interviewing.io is a platform where people take technical interviews anonymously and, if things go well, get jobs at leading companies. We started the project because a resume does not give a complete picture and because we believe that anyone, regardless of their resume, should have the chance to prove themselves.

When an interviewer and a candidate are matched, they meet in a shared coding environment with voice, text chat, and a whiteboard, and dive into a technical problem (you can watch this process in action on the interview recordings page). After each interview, they leave feedback for each other, and each side sees what the other said about them once both have submitted feedback.

Here is an example of an interviewer feedback form:

Feedback form for interviewers

Immediately after the interview, candidates rate how well they think they did on the same scale from 1 to 4:

Feedback form for candidates

For this article, we analyzed more than 10,000 technical interviews conducted by real software engineers from leading companies. In each interview, the interviewer rated the candidate on problem solving, technical ability, and communication skills, and indicated whether the candidate should advance to the next round. This tells us how far candidates' self-assessments diverge from the ratings interviewers actually give them, and in which direction. In other words, how distorted is a candidate's self-assessment relative to their actual performance?

Going in, we had some hypotheses about factors that could affect the result:

- Gender. Will women find it harder than men to assess their programming skills?

- Prior experience as an interviewer. It seems reasonable that someone who has been on the other side of the table would assess their own interview performance more accurately.

- Experience at a top company. The same reasoning as in the previous point.

- Strong interview performance. People who perform better overall may be more confident and have a better sense of when they are getting things right (or wrong!).

- Living in the Bay Area or not. Since tech companies are still geographically concentrated in the Bay Area, we assumed that people who live in a region with a denser engineering culture are more familiar with professional interviewing standards.

- The quality of the question and the skill of the interviewer in the interview itself. Presumably a better interviewer also communicates better, while a poor interviewer may throw off the candidate's self-assessment. We also looked at whether it was a practice interview or an interview for a specific role.

- Personal brand. For some candidates, we could also look at measures of their personal brand in the industry, such as the number of followers on GitHub and Twitter. Perhaps people with a strong online presence are more confident when they interview?

What did we find?

Women evaluate their technical skills in the same way as men

Contrary to expectations about gender and self-confidence, we found no statistically significant gender difference. At first it looked as though female candidates underestimated their results more often, but once we controlled for other variables, such as experience and technical ability, it turned out that experience was the key difference. More experienced engineers assess their interview performance more accurately, and men are on average more experienced. Experienced female engineers, however, are just as accurate in assessing their technical skills.

Based on previous studies, we expected that impostor syndrome and a weaker sense of belonging to the tech community would lead female candidates to underrate their interview performance, but we found no such pattern 6. Our finding echoes a research project by Stanford's Clayman Institute for Gender Research, which surveyed 1,795 mid-level technical workers at high-tech companies. They found that women in tech are not necessarily less accurate in assessing their own abilities, but differ markedly from men in their ideas about what success requires (for example, long working hours and risk taking). In other words, women in tech may not doubt their own abilities, but they have a different picture of what is expected of them. A Harvard Business Review survey, which asked more than a thousand professionals about their decisions when applying for jobs, supports the same point. Its results suggest that gender differences in candidate evaluation stem more from differing expectations about how processes such as interviews are judged.

Nevertheless, we found one interesting difference: women do fewer practice interviews than men. The difference is small but statistically significant, and it brings us back to our earlier finding that, after a bad interview, women leave interviewing.io about 7 times as often as men.

But in that same earlier article, we also found that masking voices by gender did not affect interview results. This whole cluster of results confirms what we suspected, and what experts who study gender differences in tech in depth also say: it's complicated. Women's lower persistence in interviews cannot be explained by impostor syndrome and underestimating one's own abilities alone. It is still likely, however, that they take negative feedback more seriously and draw different conclusions after an interview.

The chart below shows the distribution of self-assessment accuracy for female and male candidates on our platform (zero means the self-rating matches the interviewer's score, negative values mean the candidate underestimated their score, and positive values mean they overestimated it). The two groups look almost the same:

What else matters?

Another surprise: experience as an interviewer does not help. Even people who have conducted interviews themselves do not seem to assess themselves more accurately as a result. Personal brand has no effect either. People with many GitHub followers were no more accurate than people with few. The interviewer's rating does not matter either (that is, how well the interviewer was rated by other candidates), although, to be fair, interviewers on the site are generally rated quite highly.

So what was a statistically significant driver of accurate judgments about interview performance? Mostly experience

Experienced engineers have a better sense of how well they interviewed than engineers at the start of their careers 7. And this does not seem to be simply because stronger programming skills let you judge your performance better, although there is a slight correlation: engineers who assess their performance more accurately do tend to be stronger programmers. But among junior programmers, even the best often underestimate their skills 8.

Our data mirrors a trend seen in the Stack Overflow 2018 Developer Survey. It asked respondents several questions about confidence and competition with other developers and found that more experienced engineers feel more competitive and more confident 9. This is not surprising: after all, experience correlates with skill level, and highly skilled people are likely to be more confident. But our analysis let us control for interview performance and programming skill within career groups, and we still found that experienced engineers predict their interview results better, regardless of their skill and actual interview performance. Several factors are probably at work here: experienced engineers have been through interviews before, have conducted interviews themselves, and have a stronger sense of belonging to the community, which helps counter impostor syndrome.

Insider knowledge and context also seem to help: Bay Area residents and employees of top companies assess themselves more accurately. Like experience, knowing the industry context lets you judge the situation more realistically. We found a small but statistically significant effect from factors such as living in the Bay Area and working at a leading company. However, the advantage of working at a leading company seems to reflect mainly an advantage in general technical skill: being at a top company is essentially a proxy for being a more experienced engineer with stronger skills.

Finally, as your interview results improve and you move on to real company interviews, your self-assessment becomes more accurate. People assess themselves more accurately in real interviews than in practice ones, and their overall rating on the site also correlates with self-assessment accuracy: interviewing.io computes an overall rating for each user based on their performance across all interviews, weighted toward more recent ones. People in the top 25% by rating are more likely to assess their interview results accurately.
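As a rough illustration of a rating weighted toward recent interviews, here is a minimal Python sketch. The article does not say how interviewing.io actually weights its ratings; the exponential decay scheme, the `decay` parameter, and the function name `recency_weighted_rating` are assumptions made purely for illustration.

```python
from typing import Sequence


def recency_weighted_rating(scores: Sequence[float], decay: float = 0.8) -> float:
    """Weighted mean of interview scores (oldest first) that favors recent ones.

    ASSUMPTION: exponential decay is a hypothetical weighting scheme, not
    interviewing.io's documented formula. The newest score gets weight 1,
    the one before it `decay`, then `decay ** 2`, and so on.
    """
    if not scores:
        raise ValueError("need at least one score")
    if not 0 < decay <= 1:
        raise ValueError("decay must be in (0, 1]")
    n = len(scores)
    weights = [decay ** (n - 1 - k) for k in range(n)]
    return sum(w * s for w, s in zip(weights, scores)) / sum(weights)


# Hypothetical candidate whose scores (1 to 4 scale) improve over time.
history = [1, 2, 4]
plain_mean = sum(history) / len(history)
weighted = recency_weighted_rating(history)
```

With these made-up scores the weighted rating comes out above the plain mean, because the most recent (and best) interview counts the most.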

How do people assess their interview performance in general? We looked at this earlier, with about a thousand interviews, and now, with a sample ten times larger, the conclusions are the same. Candidates rate their result accurately in only 46% of interviews and underestimate themselves in 35% (in the remaining 19% they overestimate). Still, candidates are usually in the right ballpark: it is not the case that people who earn four stars routinely give themselves one star 10. Self-assessment is a statistically significant predictor of the actual interview result (and correlates positively with it), but the relationship is very noisy.
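The deviation used throughout this article (the candidate's self-rating minus the interviewer's rating, both on the 1-to-4 scale) and the accurate / underestimate / overestimate split can be sketched in a few lines of Python. The function names and the sample pairs are hypothetical; this is not the authors' analysis code, and the toy data is not meant to reproduce the 46/35/19 split.

```python
from collections import Counter
from typing import Dict, List, Tuple


def deviation(self_score: int, interviewer_score: int) -> int:
    """Signed gap: negative means the candidate underestimated themselves."""
    return self_score - interviewer_score


def classify(dev: int) -> str:
    """Map a signed deviation to one of the three outcome labels."""
    if dev < 0:
        return "underestimate"
    if dev > 0:
        return "overestimate"
    return "accurate"


def outcome_shares(pairs: List[Tuple[int, int]]) -> Dict[str, float]:
    """Fraction of interviews falling into each outcome label."""
    if not pairs:
        raise ValueError("need at least one interview")
    counts = Counter(classify(deviation(s, i)) for s, i in pairs)
    labels = ("accurate", "underestimate", "overestimate")
    return {label: counts[label] / len(pairs) for label in labels}


# Hypothetical (self_score, interviewer_score) pairs, invented for illustration.
pairs = [(2, 3), (3, 3), (4, 3), (1, 2), (3, 3)]
shares = outcome_shares(pairs)
mean_deviation = sum(deviation(s, i) for s, i in pairs) / len(pairs)
```

A negative `mean_deviation` corresponds to a group that, on average, underestimates itself, which is the direction reported in note 8.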

Implications

Judging your own interview performance accurately is a specific skill that comes with experience and knowledge of the tech industry. But many of our assumptions about assessment accuracy did not hold up: female engineers understand their skills just as accurately as men, and engineers who have conducted more interviews or have a strong GitHub presence are not much better at assessing their own results.

What does this mean for the industry as a whole? First, impostor syndrome appears to be a grim monster that attacks engineers of any gender and any skill level, regardless of their fame or where they live. Experience eases the pain a little, but impostor syndrome affects everyone, whoever they are and wherever they are from. So perhaps the time has come for a kinder, more empathetic interview culture, one that is kinder to everyone. Although certain groups with less technical interview experience suffer most from the flaws of the interview process, no one is immune to self-doubt.

We have previously discussed what makes a good interviewer, and empathy plays a disproportionately large role. We have also seen that, to avoid losing candidates, it really matters to give feedback immediately after the interview. So whether you are driven by kindness and principle or by cold, hard pragmatism, a little more kindness and understanding toward candidates would not hurt.

Notes

1. Self-assessment has been studied in a number of fields and has often been used to measure learning. One important criticism is that it is strongly influenced by people's motivation and emotional state at the time of the survey. Sitzmann, Ely, Brown, Bauer (2010). Self-assessment of knowledge: A cognitive learning or affective measure? Academy of Management Learning & Education, 9(2), 169-191.

2. Designing a good technical interview is no easy task for the interviewer. For an informal discussion of this topic, see here.

3. Some thoughts on self-assessment in interviews.

4. For example, this article and this one.

5. Some additional social science literature:

- Good, Rattan, Dweck (2012). Why do women opt out? Sense of belonging and women's representation in mathematics. Journal of Personality and Social Psychology, 102(4), 700.

- Master, Cheryan, Meltzoff (2016). Computing whether she belongs: Stereotypes undermine girls' interest and sense of belonging in computer science. Journal of Educational Psychology, 108(3), 424.

6. One limitation of our data set is the relatively small sample of experienced female engineers: this reflects the real demographics of the tech industry, but it also means the statistical results on between-group differences may be biased. We want to keep collecting data on women to explore the topic fully.

7. These effects, and the absence of effects noted earlier, were estimated with a linear mixed model. All individual effects reported as significant have p < 0.05.

8. The mean deviation is −0.14 for experienced engineers, −0.22 for juniors, and −0.25 for recent graduates.

9. See also here.

10. Another limitation of our data set is that the scale has a maximum and a minimum: for example, people who receive an actual score of 4 cannot overestimate themselves, because they are already at the top of the scale. We corrected for this in several ways: by excluding people with the maximum and minimum scores and re-running the analysis on the middle subset, and by collapsing the outcome into accurate versus inaccurate. The results did not change.
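The ceiling and floor correction described in note 10 can be sketched as follows. This is an illustrative Python sketch under stated assumptions: the helper names, the scale bounds passed as defaults, and the sample data are hypothetical and are not the authors' actual analysis code.

```python
from typing import List, Tuple

Pair = Tuple[int, int]  # (self_score, interviewer_score), both on the 1 to 4 scale


def middle_subset(pairs: List[Pair], low: int = 1, high: int = 4) -> List[Pair]:
    """Keep interviews whose interviewer score is strictly inside the scale.

    At the top score a candidate cannot overestimate, and at the bottom score
    they cannot underestimate, so those rows are dropped for the re-analysis.
    """
    return [(s, i) for s, i in pairs if low < i < high]


def accuracy_rate(pairs: List[Pair]) -> float:
    """Binary view: share of interviews where the self-rating is exactly right."""
    if not pairs:
        raise ValueError("need at least one interview")
    return sum(1 for s, i in pairs if s == i) / len(pairs)


# Hypothetical data, invented for illustration only.
pairs = [(4, 4), (3, 4), (2, 2), (3, 2), (1, 1), (3, 3)]
middle = middle_subset(pairs)
overall_accuracy = accuracy_rate(pairs)
middle_accuracy = accuracy_rate(middle)
```

Comparing the two rates for each group of interest is one simple way to check whether a conclusion survives once the ends of the scale are removed.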

Source: https://habr.com/ru/post/429158/
