The Near Future of NetApp

Clouds, clouds and clouds again. The move to the cloud is inexorable, whether it is a private cloud in your own data center, a hosted private cloud from a provider, or a public provider such as Amazon AWS, Microsoft Azure, IBM Cloud or Google Cloud. I noticed this especially after I moved to the USA: here people talk about it everywhere and all the time, and the air is literally saturated with the topic. Software and hardware vendors alike understand this perfectly well and do not want to miss this window of opportunity in a changing world.

NetApp Kubernetes Service

NKS is becoming an important and powerful tool for building and managing the Data Fabric (more on that below) and for competing in the HCI market. Previously, NetApp HCI was a fairly simple solution that worked only with VMware, which is also how Nutanix itself started. Now that NKS has appeared, NetApp HCI will give Nutanix much tougher competition in container-based next-generation data centers and next-generation applications. Most interesting of all, this leaves a niche in which the Nutanix hypervisor could, in theory, run on NetApp HCI. NetApp Kubernetes Service Demo.

HCI

One of the first and most deservedly well-known HCI products is Nutanix. Nutanix is a very interesting product, but it is not without flaws. In my opinion, the most interesting thing about Nutanix is its internal ecosystem, not its storage architecture. As for the shortcomings, it is worth noting that marketing may have misled some engineers with the wrong message that keeping all data local is always good. Meanwhile, competitors hint that in NetApp HCI data has to be accessed over the network, which is "slow" and generally "not real HCI", without ever defining what real HCI is. In principle such a definition cannot exist, just as with "cloud", which nobody undertakes to define either: these terms were invented for marketing purposes, so that their meaning could be extended and changed later, and not for purely technical purposes, where an object always has a fixed definition.

Initially, it was easier to enter the HCI market with an architecture that was widely available and easy to consume: simply take commodity servers packed with disks.


But this does not mean that these are the defining properties of HCI, or that this is the single best variant of HCI. This approach actually created a problem, or, as I said, a nuance: when you use storage in a shared-nothing architecture, which includes Nutanix HCI built on server-local storage, you must ensure data availability and protection, and for that you must at least copy all data from one server to another. When a virtual machine writes new data to the local disk, everything would be fine if that write did not have to be synchronously duplicated, sent to a second server, and confirmed as safely stored there before the acknowledgment. In other words, in Nutanix, and in principle in any shared-nothing architecture, write performance is at best roughly equivalent to sending those writes straight over the network to shared storage.
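The write-path argument above can be made concrete with a toy latency model. This is a minimal sketch under stated assumptions, not a benchmark: the `WritePath` fields and the microsecond figures are hypothetical, the local write and the replica write are assumed to run in parallel, and the shared-storage path is simplified to a single network round trip plus a commit on the storage side.

```python
from dataclasses import dataclass


@dataclass
class WritePath:
    # Hypothetical per-write latencies in microseconds (illustrative, not measured).
    local_write_us: float   # commit to the VM host's own SSD
    network_rtt_us: float   # round trip between two nodes
    remote_write_us: float  # commit on the remote node


def shared_nothing_ack_us(p: WritePath) -> float:
    """Latency until a shared-nothing HCI node may acknowledge a write.

    The local copy and the synchronous replica are written in parallel,
    and the write is acknowledged only when both are durable, so the
    slower branch (usually the one over the network) sets the latency.
    """
    replica_us = p.network_rtt_us + p.remote_write_us
    return max(p.local_write_us, replica_us)


def shared_storage_ack_us(p: WritePath) -> float:
    """Latency when the write goes straight to a networked storage node.

    Simplifying assumption: the storage side acknowledges after one
    network round trip plus its own commit.
    """
    return p.network_rtt_us + p.remote_write_us


if __name__ == "__main__":
    example = WritePath(local_write_us=20, network_rtt_us=50, remote_write_us=20)
    print(shared_nothing_ack_us(example), shared_storage_ack_us(example))
```

Because `max(local, replica)` can never be smaller than `replica`, a fast local disk does not shorten the acknowledged write in this model: the network hop stays on the critical path in both designs.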

The main design slogans of HCI have always been simple initial installation and configuration; the ability to start with a minimal, simple and inexpensive configuration and grow to the required size; easy expansion and shrinking; management from a single console; and automation. All of this comes down to simplicity, a cloud-like experience, flexibility and granular resource consumption. But whether the storage in an HCI architecture is a separate box or part of the server actually plays little role in achieving this simplicity.

To sum up, marketing has surprisingly convinced many engineers that local drives are always better, which is not true: technically, unlike in marketing, things are not that simple. Having wrongly concluded that some HCI architectures use only local disks, one can draw the next wrong conclusion: that storage connected over the network will always be slower. But the principles of data protection, logic and physics make this impossible: there is no way to eliminate network communication between disk subsystems in an HCI infrastructure, so there is no fundamental difference whether the disks sit locally in the compute nodes or in a separate storage node connected over the network. On the contrary, dedicated storage nodes reduce the interdependence of HCI components. For example, if you reduce the number of nodes in an HCI cluster based on Nutanix or vSAN, you automatically reduce the cluster's capacity; conversely, when you add compute nodes, you must also add disks to those servers. In the NetApp HCI architecture these components are independent of each other. The price of such an infrastructure is paid not in disk subsystem speed but in the minimum starting configuration: at least 2 storage nodes and at least 2 compute nodes, 4 nodes in total. Four NetApp HCI nodes fit into 2U as half-width blades.
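The scaling difference described above can be sketched as a small model. The class names, capacity figures and the `rack_units` helper are hypothetical; the 2 + 2 minimum and the four-nodes-per-2U packaging are taken from the text above rather than from NetApp documentation.

```python
import math
from dataclasses import dataclass


@dataclass
class CoupledCluster:
    """Nutanix/vSAN-style: every node brings both compute and disks."""
    nodes: int
    tb_per_node: float

    def capacity_tb(self) -> float:
        return self.nodes * self.tb_per_node

    def remove_node(self) -> None:
        # Shrinking compute also shrinks capacity: the disks leave with the node.
        self.nodes -= 1


@dataclass
class DisaggregatedCluster:
    """NetApp HCI-style: compute and storage nodes scale independently."""
    compute_nodes: int
    storage_nodes: int
    tb_per_storage_node: float

    # Minimum starting configuration as stated in the article (2 + 2 = 4 nodes).
    # Unannotated, so these are class constants, not dataclass fields.
    MIN_COMPUTE = 2
    MIN_STORAGE = 2

    def __post_init__(self) -> None:
        if self.compute_nodes < self.MIN_COMPUTE or self.storage_nodes < self.MIN_STORAGE:
            raise ValueError("below the minimum starting configuration")

    def capacity_tb(self) -> float:
        # Capacity depends only on the storage nodes.
        return self.storage_nodes * self.tb_per_storage_node

    def remove_compute_node(self) -> None:
        if self.compute_nodes <= self.MIN_COMPUTE:
            raise ValueError("cannot go below the minimum number of compute nodes")
        self.compute_nodes -= 1


def rack_units(nodes: int, nodes_per_chassis: int = 4, chassis_ru: int = 2) -> int:
    """Rack space when half-width nodes are packed four to a 2U chassis."""
    return math.ceil(nodes / nodes_per_chassis) * chassis_ru
```

In the coupled model, removing a node for compute reasons also removes that node's share of capacity; in the disaggregated model the same operation leaves capacity untouched, which is the independence the text describes.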

NetApp HCI

With the acquisition of SolidFire, an HCI system appeared in NetApp's product line (the product has no name of its own, which is why it is simply called HCI). Its main difference from "classic" HCI systems is a separate storage node connected over the network. SolidFire itself, which serves as the storage layer for HCI, is radically different from the AFF all-flash systems, which evolved from the FAS line that has been on the market for about 26 years. On closer acquaintance (which you can make as well), it becomes clear that this is a product of a completely different ideology, aimed at a different customer segment (cloud providers) and fitting their needs precisely in terms of performance, fault tolerance and integration into a single billing ecosystem.

Giving it all 200%: NetApp SolidFire Testing. Arthur Alikulov, Evgeny Elizarov. NetApp Directions 2018.

NetApp moves to the clouds

NetApp has long ceased to be just a storage manufacturer. It is now probably a cloud data management company, or at least it is stubbornly moving in that direction. The large number of NetApp products of various kinds points precisely to this: SaaS backup for O365, SaaS backup for Salesforce (with plans for Google Apps, Slack and ServiceNow). ONTAP Select was a very important decision: it became an independent product rather than, as before, a small add-on for replicating to a "full-fledged" FAS. NDAS increasingly combines things that previously seemed incompatible, storage systems and the cloud, by providing backups, Google-like search across file directories and a graphical management interface as a service. FabricPool went even deeper and combined SSDs with the cloud, which previously seemed unlikely or even crazy.

The mere fact that, within a rather short period, NetApp has appeared in all four of the world's largest cloud providers shows how serious its intentions are. Moreover, the NFS service in Azure is built entirely on NetApp AFF systems.

Azure NetApp Files Demo.

NetApp storage is available as a service in Azure, AWS and Google Cloud. NetApp is responsible for its support, upgrades and maintenance, while cloud users consume AFF capacity and features such as snapshots, cloning and QoS as a service. NetApp Cloud Volumes Service for AWS Demo.

IBM Cloud uses ONTAP Select, which has exactly the same functionality as an AFF system but ships as software that can be installed on a server's local disks. Active IQ is a web portal that displays information about NetApp software and hardware and, using AI algorithms that run on telemetry collected from NetApp devices and services, provides recommendations for anticipating problems and for updates. NetApp Active IQ Demo.

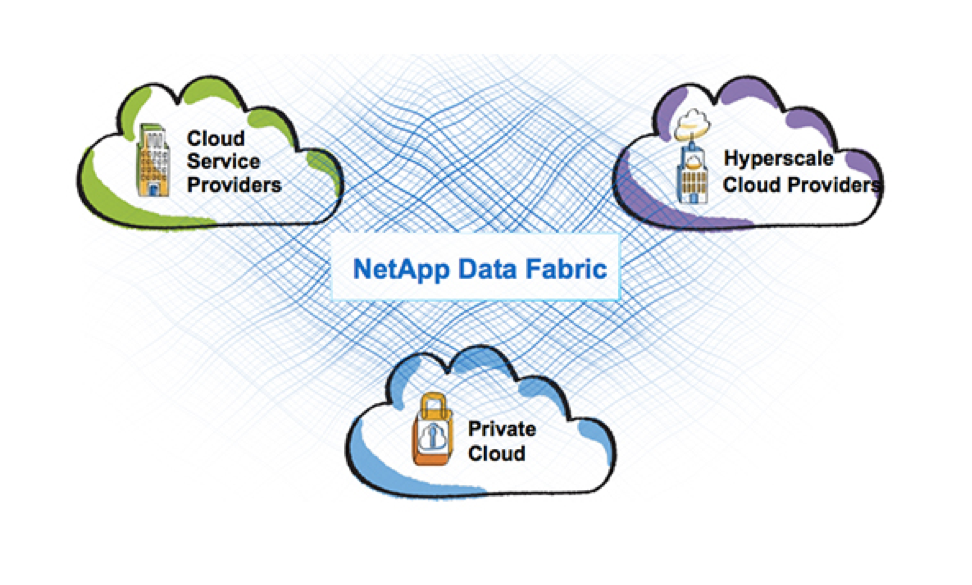

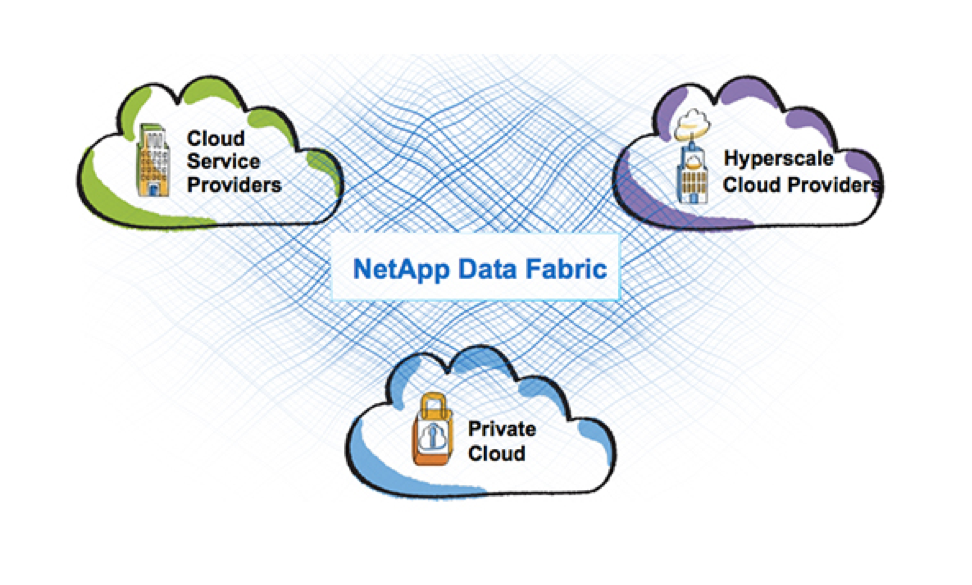

Data fabric

Overall, the Data Fabric strategy that NetApp now follows is designed to unite these completely different environments: public and private clouds and all NetApp products. After all, it is not enough simply to launch a product in the cloud; it must also fit logically and harmoniously into the cloud providers' services, and today NetApp does this more than successfully. Data Fabric is gradually turning from a marketing idea into something quite tangible, in the form of individual technologies and products: FabricPool tiering between SSD and S3, SnapMirror between ONTAP and SolidFire/HCI, SnapCenter for ONTAP and SolidFire (not yet implemented), the NDAS service for backing up storage data to the cloud, and MAX Data software for software-defined memory. These are all NetApp products or features that embody the Data Fabric vision. ONTAP Select can now also run on Nutanix, bringing rich file services to its ecosystem.
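The FabricPool idea of keeping hot data on SSD and moving cold data to an object store such as S3 can be illustrated with a toy model. This is not how ONTAP implements FabricPool: `Block`, `TieredVolume` and the `cooling_days` threshold are hypothetical, and real tiering policies have more rules (for example, in this sketch every read of a cold block brings it back to SSD, which is a simplification). It only shows the concept of tiering by access age.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Block:
    block_id: int
    days_since_access: int


@dataclass
class TieredVolume:
    # Hypothetical two-tier volume: a performance tier (SSD) and a capacity tier (object store).
    ssd: dict[int, Block] = field(default_factory=dict)
    object_store: dict[int, Block] = field(default_factory=dict)

    def tier_cold_blocks(self, cooling_days: int) -> list[int]:
        """Move blocks not accessed for at least `cooling_days` from SSD to the object store."""
        # Collect ids first so the SSD dict is not mutated while iterating over it.
        cold = [b.block_id for b in self.ssd.values() if b.days_since_access >= cooling_days]
        for block_id in cold:
            self.object_store[block_id] = self.ssd.pop(block_id)
        return cold

    def read(self, block_id: int) -> Block:
        """Read a block; in this simplified model a cold read brings it back to SSD."""
        if block_id in self.ssd:
            block = self.ssd[block_id]
        else:
            block = self.object_store.pop(block_id)
            self.ssd[block_id] = block
        block.days_since_access = 0
        return block
```

The design choice being illustrated is that the volume stays a single namespace: the caller reads by block id and does not need to know which tier currently holds the data.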

Data Fabric is partly a vision of merging or integrating all NetApp products and even clouds, and I see not just a marketing merger but even a physical merger of individual products.

Moreover, I want to make a prediction. For several years I have been playing a prediction game with some of my colleagues, and so far I have always come very close to, or correctly guessed, this company's train of thought; let's see how lucky I am this time. I want to emphasize that this is my personal opinion, based on scraps of information gathered over all 7 years of my experience with NetApp and on intuition. Nobody at NetApp has told me this, so it may be nothing more than a figment of my imagination:

- AFF will become part of HCI

- It will be possible to migrate LUNs online between AFF and HCI

NetApp is ceasing to be a storage vendor

This title may be too loud, but NetApp is entering a phase in which it becomes a data management, software-defined, cloud-native company while continuing to ship storage in the traditional way. Storage systems have not gone away, and there is no sign that this will happen in the near future, but the emphasis is shifting toward the cloud and the data itself.

This article was written in close collaboration with Dmitry (bbk). If you want to learn more about NetApp technologies, don't be lazy: give him a +, and new articles will not keep you waiting.

If you are interested in news from the NetApp world, welcome to the StorageTalks Telegram channel; if you are looking for an active Russian-speaking storage community, come to the StorageDiscussions Telegram chat.

Source: https://habr.com/ru/post/429122/
