Testing SharxBase, a hardware and software virtualization platform from the Russian vendor SharxDC

Today I will talk about the SharxBase hyperconverged platform. Habr did not yet have a review of this system, so we decided to fix that injustice. Our team had the chance to test the solution in real conditions, and the results are below.

P.S. Below the cut there are plenty of tables, real numbers and other "meat". If you like to dig into the details, welcome!


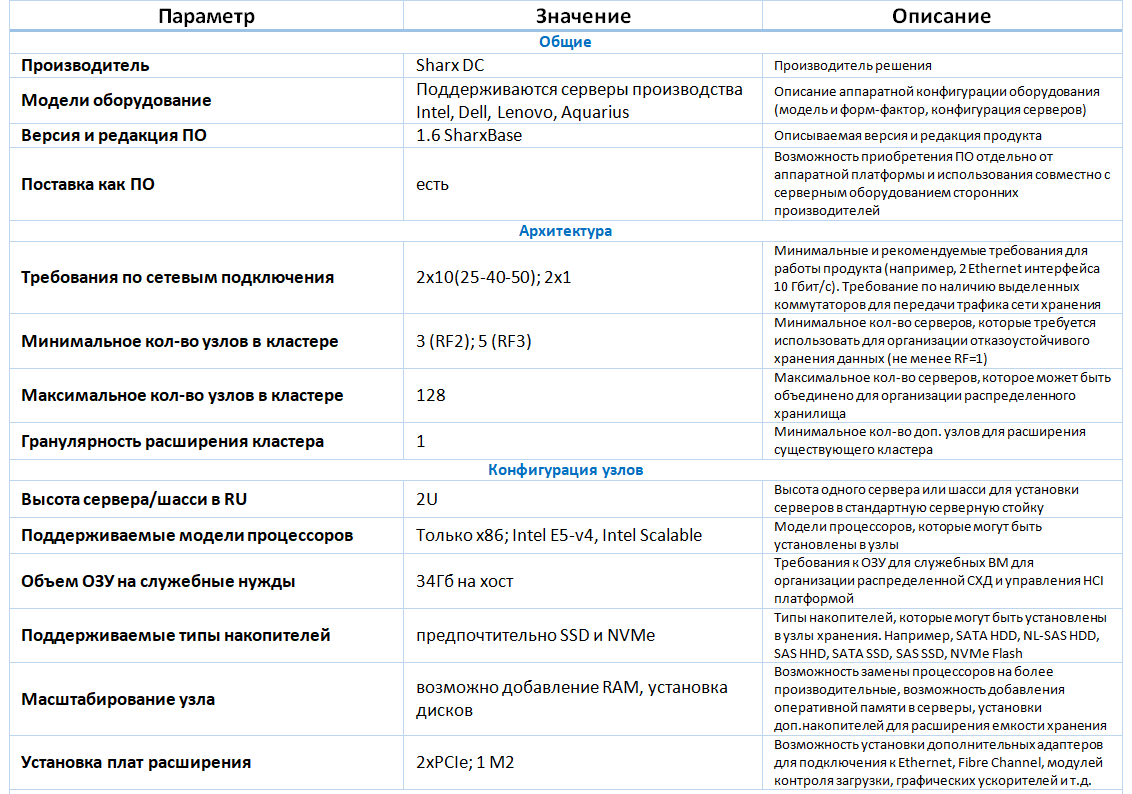


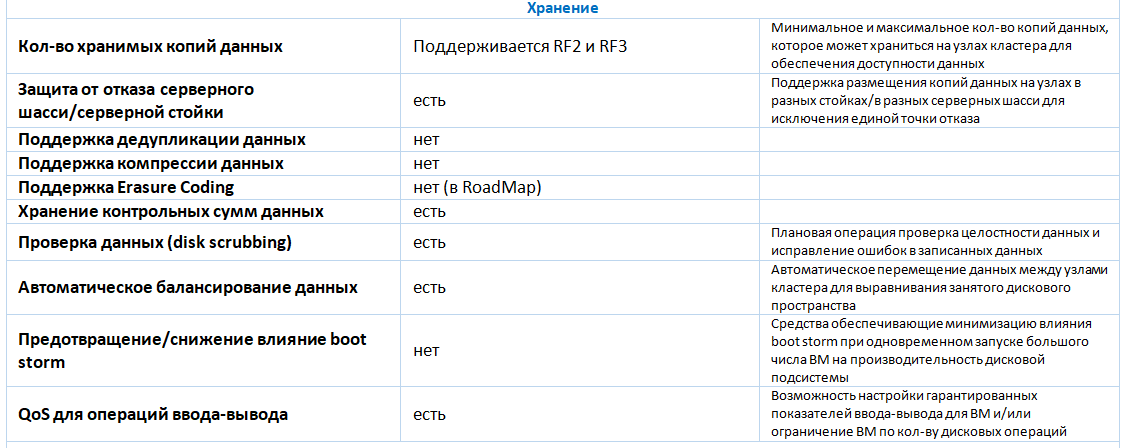


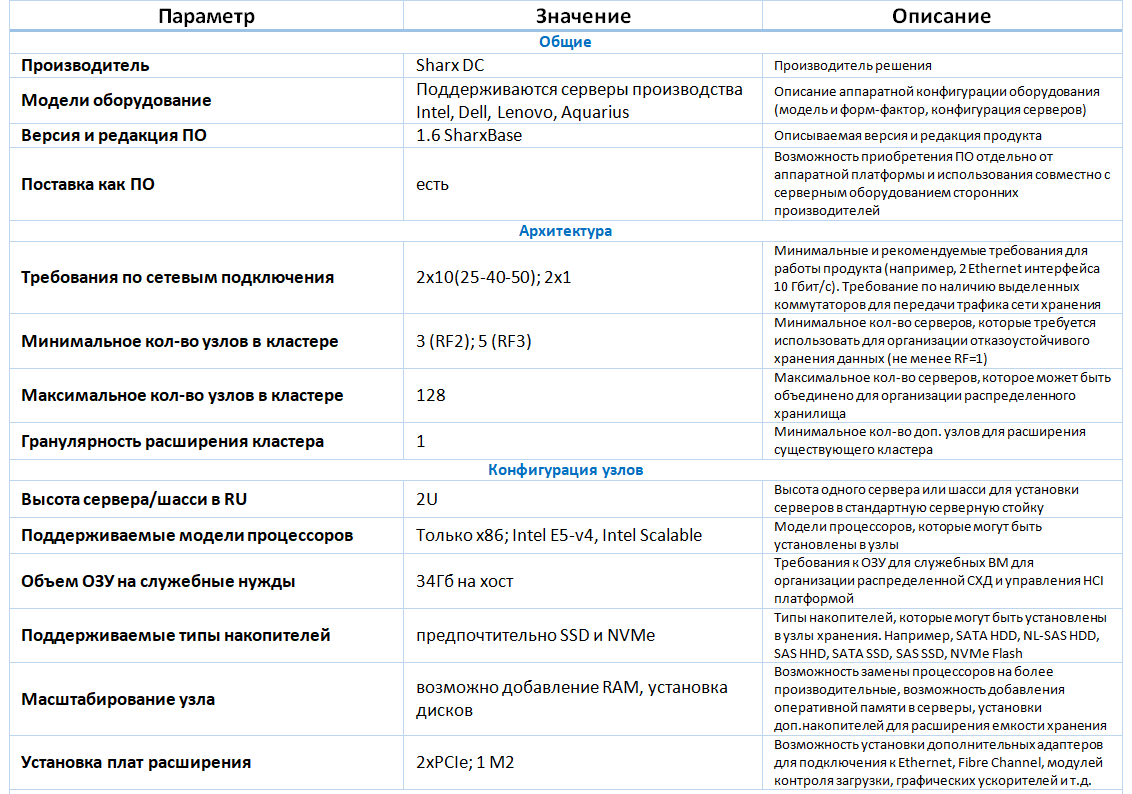

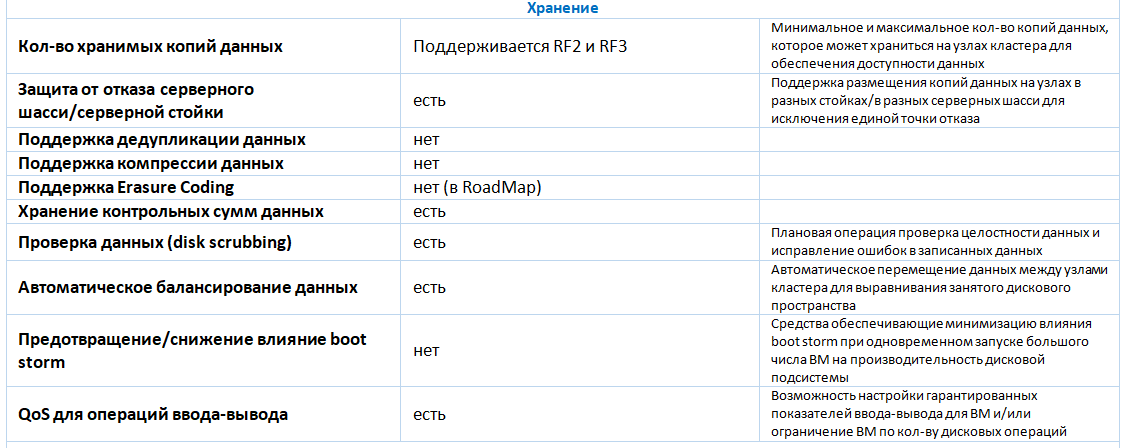

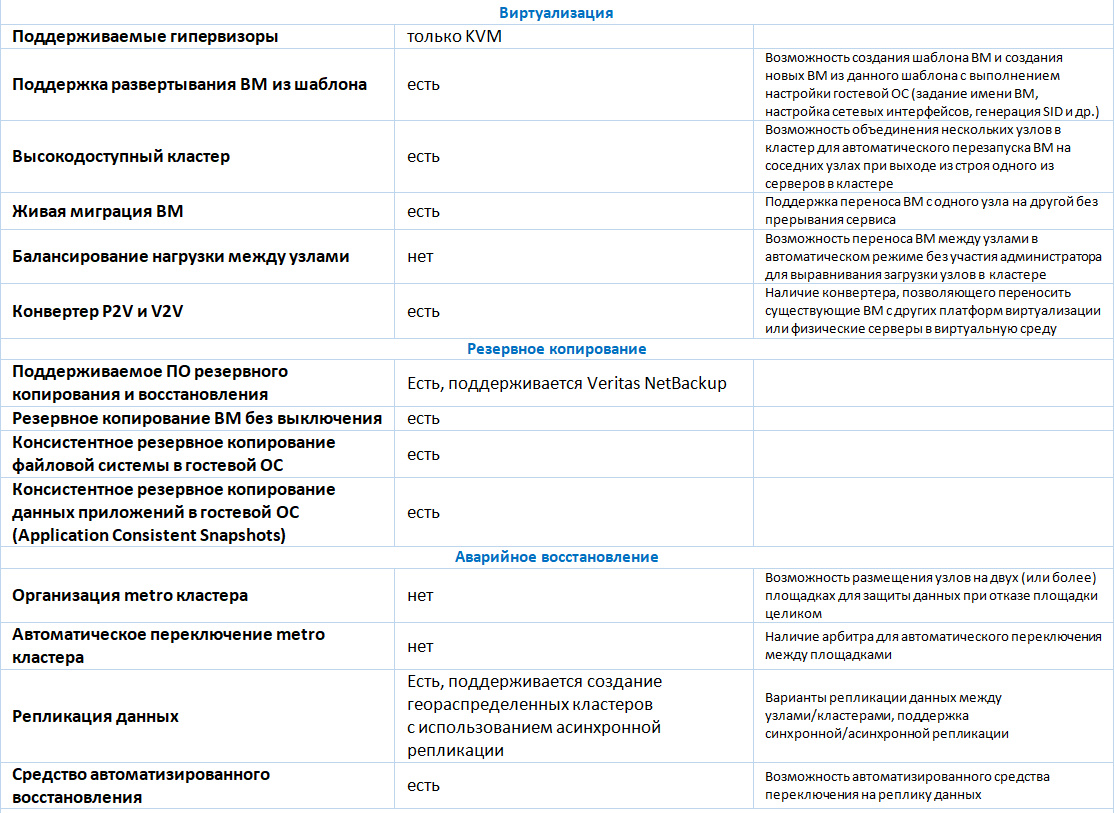


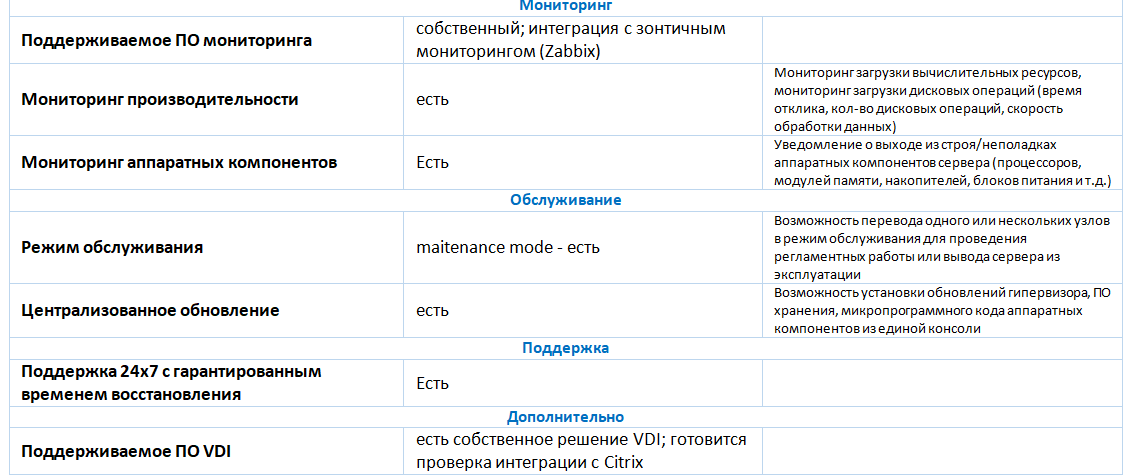

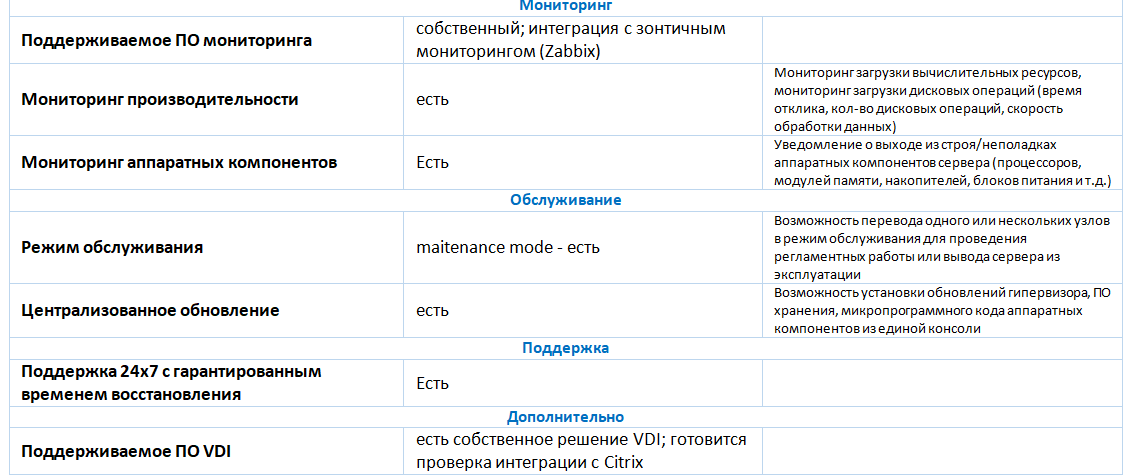


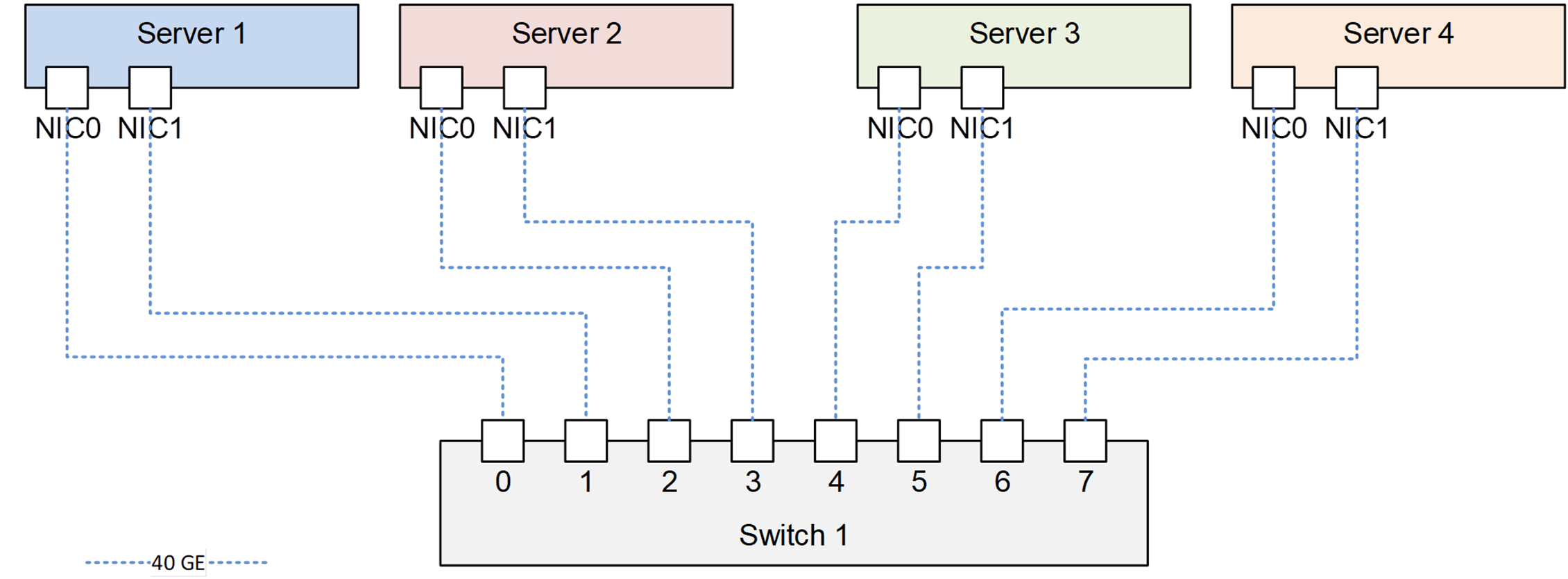

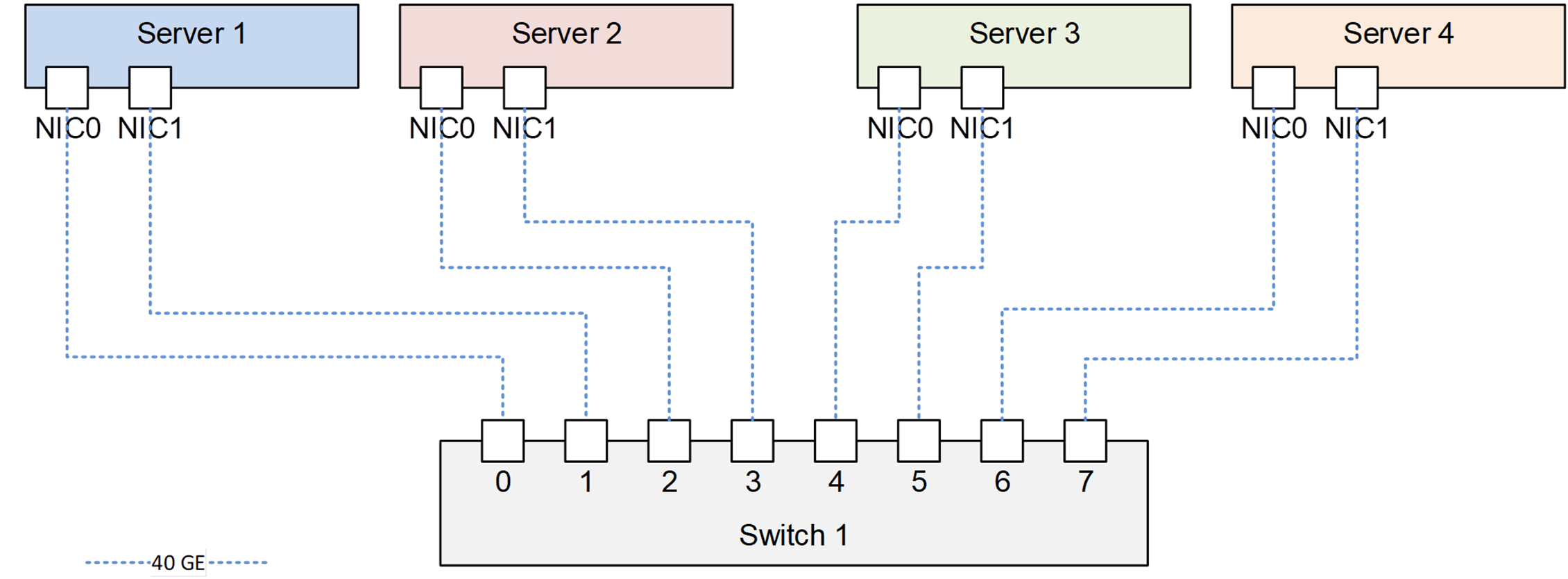


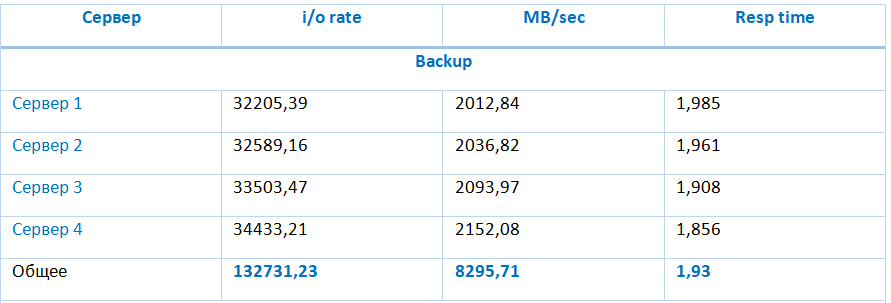

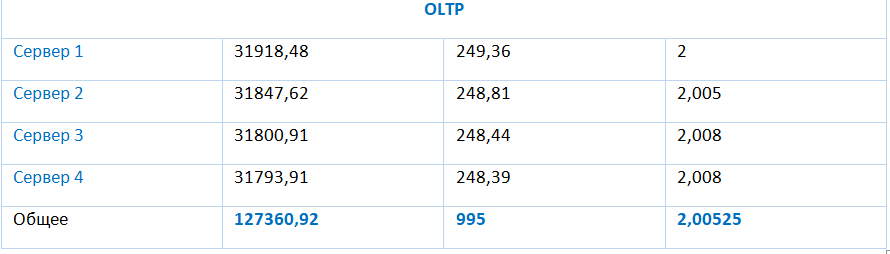

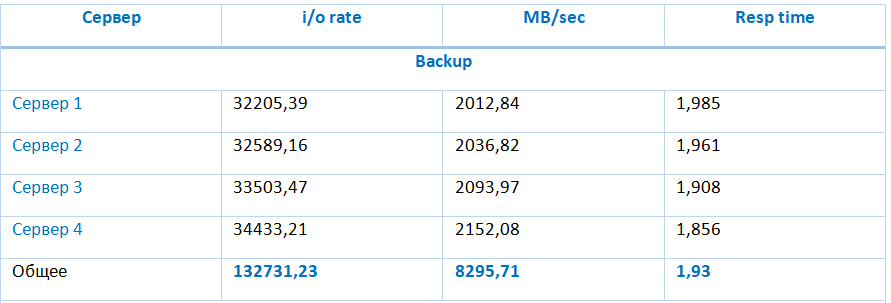

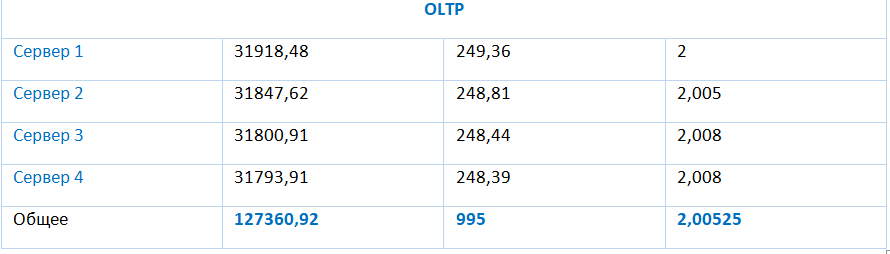



About the product

The SharxBase platform is built on Intel-made servers, the open source OpenNebula cloud management software, and the StorPool distributed storage software. It ships as a boxed solution: server hardware with virtualization and distributed storage software pre-installed.


Four standard configurations can be ordered: Small, Medium, Large, and Storage. They differ in the amount of computing resources (processors, RAM) and disk space. The servers are built as modules: a standard 2RU chassis holds up to four servers and mounts in a standard 19-inch server rack. The platform supports both horizontal scaling, by adding nodes, and vertical scaling, by adding RAM, drives, and expansion cards to the nodes. Currently, installation of network adapters, load control modules, and NVMe drives is supported.

Storage architecture

Distributed fault-tolerant storage is built on flash drives (SSD and/or NVMe), with Ethernet as the data transport. Storage traffic requires dedicated network interfaces: at least two 25 GbE ports. The services that provide distributed storage run on every server in the cluster and consume part of its computing resources. The amount depends on the number and size of the installed drives; on average, the overhead is 34 GB of RAM per host. Hosts connect to the distributed storage over the iSCSI block access protocol. For fault tolerance, two-way or three-way data replication is supported, and the manufacturer recommends triple redundancy for production installations. Of the storage optimization technologies, only thin provisioning is currently supported. The distributed storage does not support deduplication or data compression. Erasure coding support is announced for future versions.

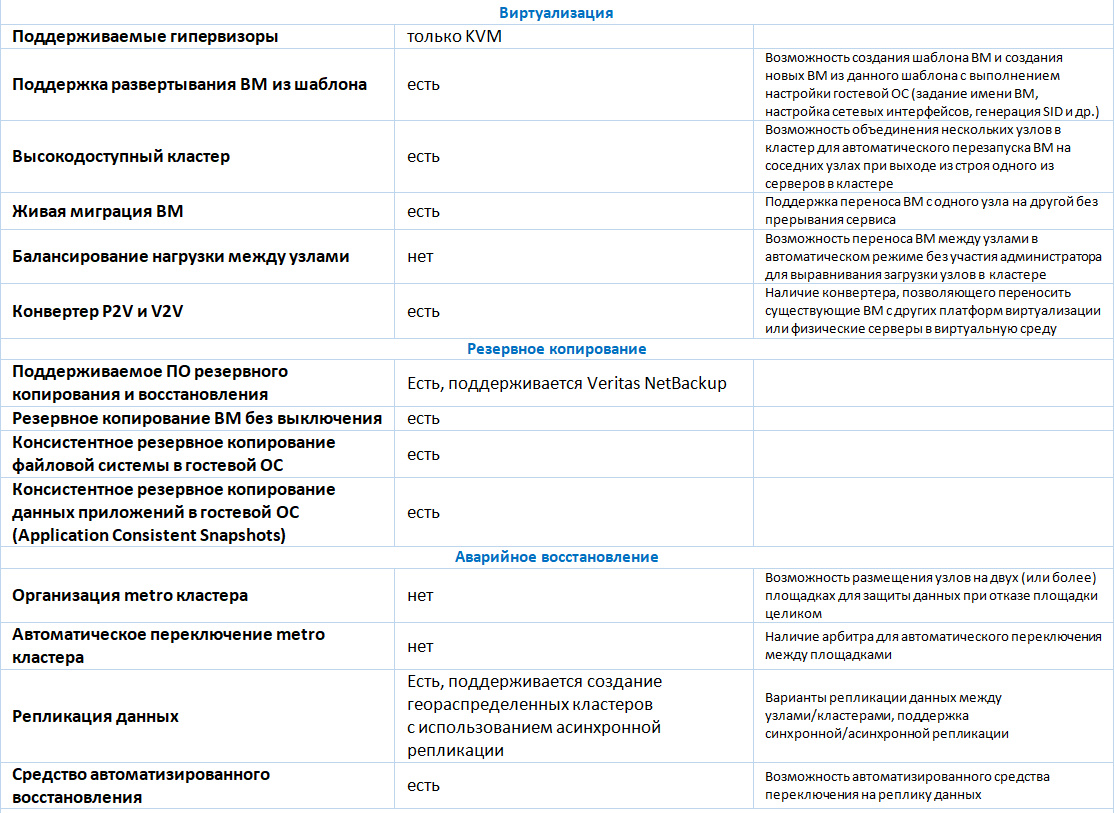

Virtualization

Virtual machines (VMs) run on the KVM hypervisor. All basic functions for creating and managing them are supported:

- creating a VM from scratch with the required hardware configuration (processor cores, RAM size, number and size of virtual disks, number of network adapters, etc.);

- cloning a VM from an existing VM or from a template;

- creating a snapshot, deleting a snapshot, and rolling back changes made to a VM since the snapshot was created;

- changing the hardware configuration of an existing VM, including attaching or detaching a virtual disk or network adapter while the VM is running (hot plug / hot unplug);

- migrating VMs between virtualization servers;

- monitoring the state of a VM, including the load on computing resources and virtual disks (current size, I/O volume in MB/s or IOPS);

- scheduling VM operations (power on, power off, snapshot creation, etc.);

- connecting to and controlling VMs over the VNC or SPICE protocols from the web console.

Typical block diagram (4 nodes)

The platform is managed from the GUI or the command line (locally or remotely over SSH), as well as through a public API.
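Because the platform is built on OpenNebula, its public API can plausibly be exercised the way the upstream OpenNebula XML-RPC interface is. The sketch below only serializes a request for the upstream `one.vmpool.info` method using Python's standard `xmlrpc.client`. Whether SharxBase exposes this method unchanged, and at which endpoint, is an assumption the article does not confirm, and the credentials are placeholders.

```python
import xmlrpc.client


def build_vmpool_info_request(session: str) -> str:
    """Serialize an upstream OpenNebula `one.vmpool.info` call as XML-RPC."""
    params = (
        session,  # "user:password" session string (placeholder value below)
        -2,       # filter flag: -2 selects resources of all users
        -1,       # start of the VM ID range (-1 = no lower bound)
        -1,       # end of the VM ID range (-1 = no upper bound)
        -1,       # state filter: -1 = any state except DONE
    )
    return xmlrpc.client.dumps(params, methodname="one.vmpool.info")


# Hypothetical credentials; nothing is sent over the network here.
request_xml = build_vmpool_info_request("oneadmin:secret")
print(request_xml)
```

To actually send the call, the same parameters could be passed through `xmlrpc.client.ServerProxy(<endpoint>)`, with the endpoint taken from the vendor's documentation.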

One limitation of the virtualization platform is the lack of automatic VM balancing between cluster hosts.

Beyond server virtualization, SharxBase can be used to build software-defined data centers and private cloud infrastructures. Examples of such functions include:

- access control based on user group membership and access control lists (ACLs): rights can be assigned to different user groups to restrict access to components of the virtual infrastructure;

- resource consumption accounting: processors, RAM, disk resources;

- showback: estimating the cost of consumed computing resources in arbitrary units, based on the resources used and their prices;

- basic IPAM (IP Address Management): automatic assignment of IP addresses to VM network interfaces from a predefined range;

- basic SDN features: creating a virtual router to pass traffic between virtual networks.
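The basic IPAM function in the list above, handing out the next address from a predefined range, can be illustrated with a short sketch. This is not the platform's implementation; the function name, the range, and the lease list are hypothetical and only show the idea.

```python
import ipaddress
from typing import Iterable, Optional


def next_free_ip(first: str, last: str, used: Iterable[str]) -> Optional[str]:
    """Return the lowest unused address in [first, last], or None if the range is full."""
    taken = {ipaddress.ip_address(addr) for addr in used}
    current = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    while current <= end:
        if current not in taken:
            return str(current)
        current += 1  # address objects support integer addition
    return None


# Hypothetical range and existing leases.
leases = ["10.0.0.10", "10.0.0.11"]
print(next_free_ip("10.0.0.10", "10.0.0.20", leases))  # 10.0.0.12
```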

With its own information security module, SharxBase implements additional protection for the platform management system: configurable password requirements for user accounts (complexity, length, lifetime, reuse, etc.), user blocking, management of current access sessions to the management console, event logging, and so on. The software is listed in the Register of Russian Software (No. 4445). A testing laboratory has issued a positive conclusion on the certification tests of the SharxBase software in the FSTEC of Russia certification system: level 4 of control for the absence of undeclared capabilities (NDV), and compliance with the specifications (protection requirements for virtualization environments) up to class 1 GIS / ISPDn level inclusive. A certificate of compliance in the information security tools certification system №ROSS RU.0001.01BI00 (FSTEC of Russia) is expected in December 2018.

A detailed description of the functionality is given in the table below.

Monitoring

SharxBase monitoring gives access to detailed information about the platform state and supports alert configuration and analysis of that state.

The monitoring subsystem is a distributed system installed on every cluster node. It supplies data on the state of the platform to the virtualization management system.

In real time, the monitoring subsystem collects information about platform resources such as:

| Server nodes | Power supplies | Switches | Virtual machines | Distributed storage |
|---|---|---|---|---|
| Unit serial number; serial numbers of the node and motherboard; unit and node temperature; CPU model and load; RAM slot numbers, frequency, size, and availability; node and storage address; cooling fan speed; network adapter status; network adapter serial number; disk status and system information | Power supply serial number; power supply status and load | Switch model; status of the switch and its ports; cooling fan speed; cooling fan status; VLAN list | CPU load; RAM load; network load; VM state; disk read/write speed; speed of incoming/outgoing connections | Free/used space; disk condition; used disk space; disk errors |

Interim conclusions

The advantages of the solution include:

- it can be supplied to organizations on sanctions lists;

- it is based on the OpenNebula project, which has been under active development for a long time;

- it supports all the server virtualization functions needed for small and medium installations (up to 128 hosts);

- it has an information security module that helps meet regulatory information security requirements.

The disadvantages of the solution include:

- less functionality than other HCI solutions on the market (for example, Dell VxRail, Nutanix);

- limited support from backup systems (Veritas NetBackup support is currently announced);

- some administration tasks are performed from the console and are not available in the web interface.

Functionality

As part of expanding our portfolio of hyperconverged solutions, we tested performance and fault tolerance together with the vendor.

Performance testing

The test bench was a 4-node cluster of Intel HNS2600TP servers. All servers had an identical configuration with the following hardware characteristics:

- server model: Intel HNS2600TP;

- two Intel Xeon E5-2650 v4 processors (12 cores each, 2.2 GHz clock frequency, Hyper-Threading support);

- 256 GB of RAM (224 GB available for running VMs);

- a network adapter with two QSFP+ ports at 40 Gb/s;

- one LSI SAS3008 RAID controller;

- six Intel DC S3700 SATA SSDs of 800 GB each;

- two power supplies rated at 1600 W each.

SharxBase v1.5 virtualization software was installed on the servers.
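As a rough illustration of what this configuration yields, the sketch below estimates the raw and usable capacity of the test bench for the two replication levels the platform supports, plus the RAM left for VMs. It assumes all six SSDs in each node belong to the storage pool and ignores metadata and reserve space, neither of which the article specifies.

```python
NODES = 4
DRIVES_PER_NODE = 6
DRIVE_GB = 800
VM_RAM_PER_NODE_GB = 224  # RAM available for VMs on each node, as listed above


def raw_capacity_gb(nodes: int, drives_per_node: int, drive_gb: int) -> int:
    """Total flash capacity across the cluster before replication."""
    return nodes * drives_per_node * drive_gb


def usable_capacity_gb(raw_gb: int, replicas: int) -> float:
    """Capacity left when every block is stored `replicas` times."""
    return raw_gb / replicas


raw = raw_capacity_gb(NODES, DRIVES_PER_NODE, DRIVE_GB)
print(f"raw capacity: {raw} GB")  # 19200 GB
for replicas in (2, 3):
    print(f"{replicas} copies: {usable_capacity_gb(raw, replicas):.0f} GB usable")
print(f"RAM for VMs: {NODES * VM_RAM_PER_NODE_GB} GB")  # 896 GB
```

With triple redundancy, the 19.2 TB of raw flash shrinks to roughly 6.4 TB of usable space, which is the disk space cost the review returns to in its conclusions.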

All servers were connected to a Mellanox network switch. The connection diagram is shown in the figure.

Connection diagram of servers on the test bench

The functional tests confirmed all the functionality described earlier.

The disk subsystem was tested with Vdbench version 5.04.06. On each physical server, one Linux VM with 8 vCPUs and 16 GB of RAM was created. Each VM was given eight virtual disks of 100 GB each for testing.

The following load types were tested:

- (Backup) 0% Random, 100% Read, 64 KB block size, 1 Outstanding IO;

- (Restore) 0% Random, 100% Write, 64 KB block size, 1 Outstanding IO;

- (Typical) 100% Random, 70% Read, 4 KB block size, 4 Outstanding IO;

- (VDI) 100% Random, 20% Read, 4 KB block size, 8 Outstanding IO;

- (OLTP) 100% Random, 70% Read, 8 KB block size, 4 Outstanding IO.
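The five profiles above map naturally onto Vdbench workload definitions. The sketch below renders them as `wd=` and `rd=` lines using the standard Vdbench parameters `xfersize`, `rdpct`, `seekpct`, and `forthreads`. The actual parameter files used in the test were not published, so the names, the `sd*` wildcard, the run duration, and the mapping of "Outstanding IO" to threads are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Workload:
    name: str
    random_pct: int      # 0 = sequential, 100 = fully random
    read_pct: int        # share of read operations
    block_kb: int        # transfer size in KB
    outstanding_io: int  # concurrent I/Os, mapped to Vdbench threads (assumption)


WORKLOADS = [
    Workload("backup", 0, 100, 64, 1),
    Workload("restore", 0, 0, 64, 1),
    Workload("typical", 100, 70, 4, 4),
    Workload("vdi", 100, 20, 4, 8),
    Workload("oltp", 100, 70, 8, 4),
]


def to_vdbench(w: Workload, elapsed_s: int = 600) -> List[str]:
    """Render one profile as a workload definition and a run definition."""
    wd = (f"wd=wd_{w.name},sd=sd*,xfersize={w.block_kb}k,"
          f"rdpct={w.read_pct},seekpct={w.random_pct}")
    # elapsed and interval are placeholder values, not taken from the article.
    rd = (f"rd=rd_{w.name},wd=wd_{w.name},iorate=max,"
          f"elapsed={elapsed_s},interval=5,forthreads={w.outstanding_io}")
    return [wd, rd]


for workload in WORKLOADS:
    print("\n".join(to_vdbench(workload)))
```

A complete parameter file would also need `sd=` lines pointing at the eight virtual disks inside each VM.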

The results for these load types are presented in the table:

The storage performs particularly well on sequential read and write operations: 8295.71 MB/s and 2966.16 MB/s, respectively. Under the typical load (random I/O in 4 KB blocks with 70% reads), performance reaches 133,977.94 IOPS with an average I/O latency of 1.91 ms, and it decreases as the share of write operations grows.
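The IOPS and latency figures for the typical load can be cross-checked with Little's law: average concurrency equals throughput multiplied by average latency. The sketch below reads the reported figure as 133,977.94 IOPS at 1.91 ms and assumes the load was spread evenly over the 32 virtual disks (4 VMs with 8 disks each). The result is only an estimate of the average number of I/Os in flight, not a statement about the test settings.

```python
iops = 133_977.94      # reported IOPS under the typical load
latency_s = 1.91e-3    # reported average I/O latency in seconds
virtual_disks = 4 * 8  # 4 VMs with 8 virtual disks each

# Little's law: in-flight requests = arrival rate * time in system
in_flight = iops * latency_s
per_disk = in_flight / virtual_disks

print(f"average I/Os in flight: {in_flight:.1f}")    # about 255.9
print(f"average per virtual disk: {per_disk:.1f}")   # about 8.0
```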

Fault tolerance testing

These tests confirmed that the failure of a single system component does not stop the entire system.

| Test | Test details | Comments |
|---|---|---|
| Disk failure in the storage pool | 14:00 - the system is operating normally; 14:11 - the first SSD in Server 1 is disabled; 14:12 - the SSD failure is displayed in the platform management console; 14:21 - the first SSD in Server 2 is disabled; 14:35 - the failure of two SSDs is displayed in the platform management console; 14:38 - the disks are returned to Servers 1 and 2, the LED indicators on the SSDs are not lit; 14:40 - the engineer finishes adding the SSDs back to the storage via the CLI; 14:50 - the disks are displayed as working in the platform management console; 15:00 - synchronization of the VM disk components is complete | The system handled the failure as expected. Fault tolerance is as stated. |
| Network failure | 15:02 - the system is operating normally; 15:17 - one of the two ports of Server 1 is disabled; 15:17 - one Echo request to the IP address of the web console is lost (the server being isolated was acting as the leader), the VM running on the server remains reachable over the network; 15:18 - the second port of Server 1 is disabled, the VM and the server management console become unavailable; 15:20 - the VM is restarted on Server 3; 15:26 - the network interfaces of Server 1 are reconnected, the server rejoins the cluster; 15:35 - synchronization of the VM disk components is complete | The system handled the failure as expected. |
| Failure of one physical server | 15:35 - the system is operating normally; 15:36 - Server 3 is shut down with the poweroff command over the IPMI interface; 15:38 - the test VM is restarted on Server 1; 15:40 - Server 3 is powered on; 15:43 - server operation is restored; 15:47 - synchronization is complete | The system handled the failure as expected. |
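The timelines in the table can be turned into approximate recovery intervals. The sketch below computes them from the logged timestamps. The choice of which event pairs to compare and their labels are ours, and since the log has one-minute resolution, each figure is accurate only to within about a minute.

```python
from datetime import datetime


def minutes_between(start: str, end: str) -> int:
    """Whole minutes between two HH:MM timestamps on the same day."""
    fmt = "%H:%M"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds() // 60)


# (start, end) pairs taken from the test log above.
intervals = {
    "disk failure: SSDs re-added -> sync complete": ("14:40", "15:00"),
    "network failure: server isolated -> VM restarted": ("15:18", "15:20"),
    "network failure: server rejoined -> sync complete": ("15:26", "15:35"),
    "server failure: poweroff -> VM restarted": ("15:36", "15:38"),
    "server failure: server restored -> sync complete": ("15:43", "15:47"),
}

for label, (start, end) in intervals.items():
    print(f"{label}: {minutes_between(start, end)} min")
```

In both the network and the server failure scenarios, the affected VM was back on another node about two minutes after the failure.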

Test results

The SharxBase platform provides a high level of availability and fault tolerance when one of the main hardware components fails. Thanks to triple redundancy in the disk subsystem, the platform keeps data available and intact even after a double failure.

The disadvantages of the platform include high disk space requirements, caused by the need to store and synchronize three full copies of the data, and the lack of mechanisms for using disk space more efficiently, such as deduplication, compression, or erasure coding.

Based on all the tests performed, we can conclude that the SharxBase hyperconverged platform can provide a high level of availability and performance for various types of loads, including OLTP systems, VDI, and infrastructure services.

Ilya Kuykin,

Lead Design Engineer, Computing Systems,

Jet Infosystems

Source: https://habr.com/ru/post/429042/
