How to create a game AI: guide for beginners

I stumbled upon an interesting material about artificial intelligence in games. With an explanation of basic things about AI on simple examples, and still inside a lot of useful tools and methods for its convenient development and design. How, where and when to use them - too.

Most examples are written in pseudocode, so deep knowledge of programming is not required. Under the cut of 35 sheets of text with pictures and gifs, so get ready.

')

UPD. I apologize, but I already did my own translation of this article on Habré PatientZero . You can read his version here , but for some reason the article passed by me (I used the search, but something went wrong). And since I am writing to a game dev blog, I decided to leave my translation version for subscribers (some of my details are different, some are intentionally missed on the advice of the developers).

What is AI?

Game AI focuses on what actions the object must perform, based on the conditions in which it is located. This is usually called the management of "intelligent agents", where the agent is a game character, a vehicle, a bot, and sometimes something more abstract: a whole group of entities or even civilization. In each case, this is a thing that must see its environment, make decisions on its basis and act in accordance with them. This is called the Sense / Think / Act cycle (Feel / Think / Act):

- Sense: the agent finds or receives information about things in his environment that may affect his behavior (threats nearby, items to collect, interesting places to explore).

- Think: the agent decides how to react (considers whether it is safe enough to collect items or first he must fight / hide).

- Act: the agent performs actions to implement the previous decision (starts moving towards the enemy or object).

- ... now the situation has changed due to the actions of the characters, so the cycle repeats with new data.

AI typically concentrates on the Sense part of the loop. For example, autonomous cars take pictures of the road, combine them with radar and lidar data, and interpret. Usually, it makes machine learning that processes incoming data and gives them meaning, extracting semantic information like "there is another car 20 yards ahead of you." These are the so-called classification problems.

Games do not need a complex system to extract information, since most of the data is already an integral part of it. There is no need to run image recognition algorithms to determine whether there is an enemy ahead - the game already knows and conveys information right in the decision-making process. Therefore, part of the Sense cycle is often much simpler than Think and Act.

Game Restrictions AI

AI has a number of restrictions that must be observed:

- AI does not need to train in advance, as if it is an algorithm for machine learning. It makes no sense to write a neural network during development to observe tens of thousands of players and learn the best way to play against them. Why? Because the game is not released, but there are no players.

- The game should entertain and challenge, so agents should not find a better approach against people.

- Agents need to look realistic in order for players to feel like they are playing against real people. AlphaGo program surpassed the person, but the selected steps were far from the traditional understanding of the game. If the game imitates the human opponent, there should be no such feeling. The algorithm needs to be changed to make plausible decisions, rather than ideal ones.

- AI should work in real time. This means that the algorithm cannot monopolize the use of the processor for a long time to make decisions. Even 10 milliseconds for this is too long, because most games only have 16 to 33 milliseconds to do all the processing and move on to the next frame of graphics.

- Ideally, if at least part of the system is data-driven, so that non-coders can make changes and make adjustments happen faster.

Consider the approaches of AI, which cover the entire cycle of Sense / Think / Act.

Making basic decisions

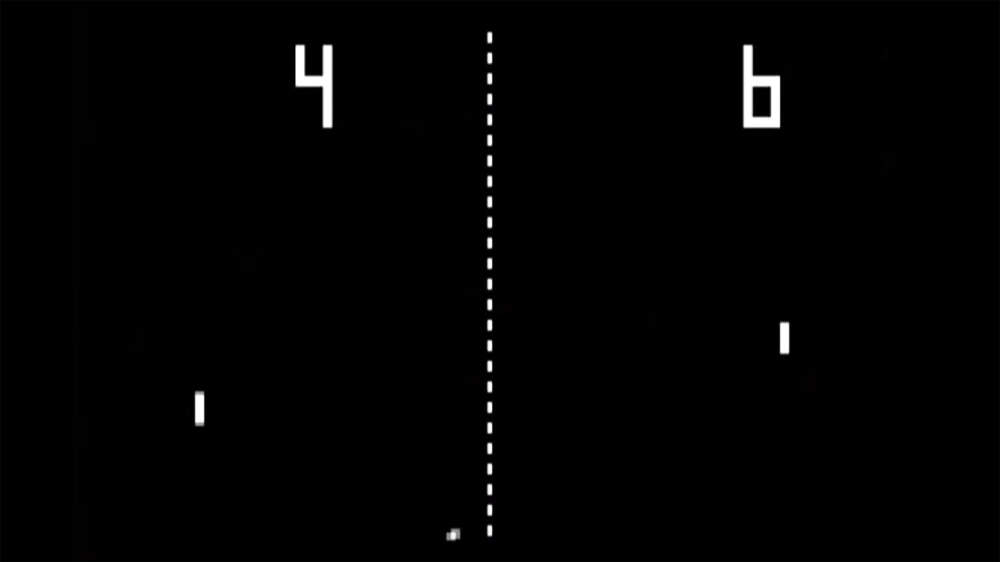

Let's start with the simplest game - Pong. Purpose: to move the platform (paddle) so that the ball bounces off of it, rather than flying past. It's like tennis, in which you lose if you don't hit the ball. Here, AI has a relatively easy task - to decide in which direction to move the platform.

Conditional statements

For AI in Pong there is the most obvious solution - always try to position the platform under the ball.

A simple algorithm for this, written in pseudocode:

every frame / update while the game is running:

if the ball is:

move paddle left

else if the ball is:

move paddle right

If the platform moves with the speed of the ball, then this is the perfect algorithm for AI in Pong. There is no need to complicate anything if there is not so much data and possible actions for the agent.

This approach is so simple that the entire Sense / Think / Act cycle is barely noticeable. But he is:

- The Sense part is in two if statements. The game knows where the ball is and where the platform is, so the AI calls on it for this information.

- The Think part is also included in two if statements. They embody two solutions that are mutually exclusive in this case. As a result, one of the three actions is selected - move the platform to the left, move to the right, or do nothing if it is already properly located.

- The Act part is located in the Move Paddle Left and Move Paddle Right operators. Depending on the design of the game, they can move the platform instantly or at a certain speed.

Such approaches are called reactive — there is a simple set of rules (in this case, if statements in the code) that react to the current state of the world and act.

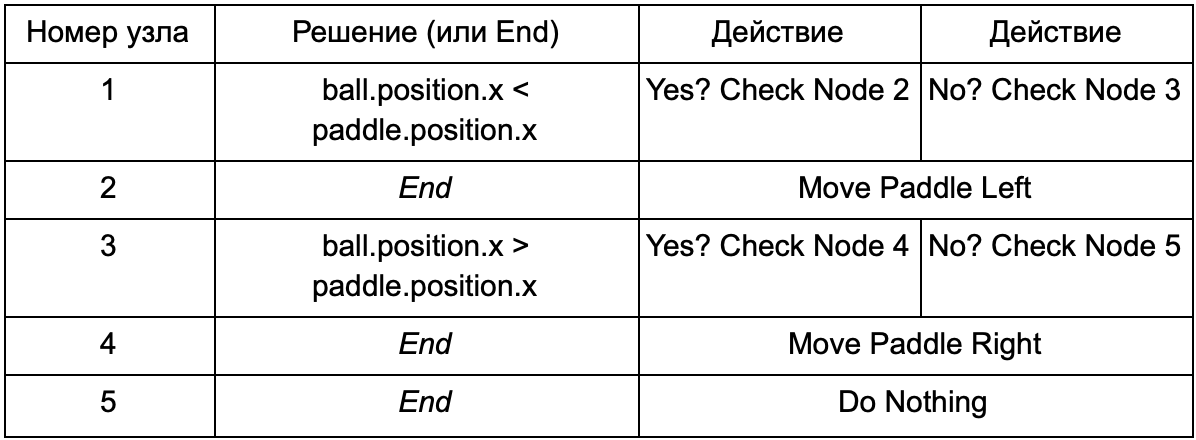

Decision tree

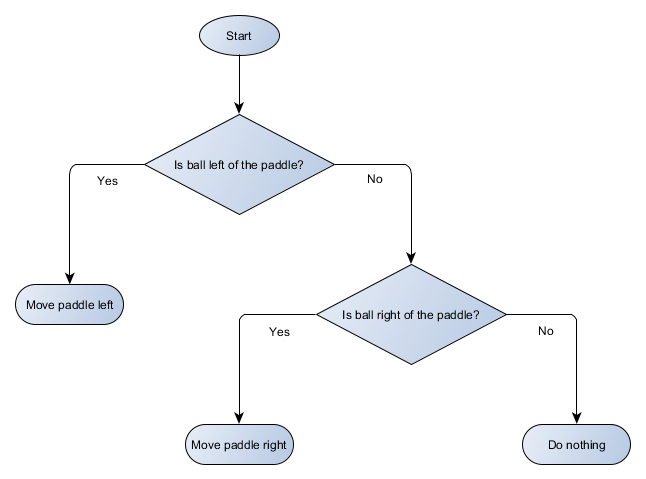

The example with the game Pong is actually equal to the formal concept of AI, called the decision tree. The algorithm passes it in order to achieve a “sheet” - a decision on what action to take.

Let's make a block diagram of the decision tree for the algorithm of our platform:

Each part of the tree is called a node (node) - AI uses graph theory to describe such structures. There are two types of nodes:

- Decision nodes: the choice between two alternatives based on checking a certain condition, where each alternative is represented as a separate node.

- End nodes: action to perform, representing the final decision.

The algorithm begins with the first node (the "root" of the tree). It either decides which child node to go to, or performs the action stored in the node and ends.

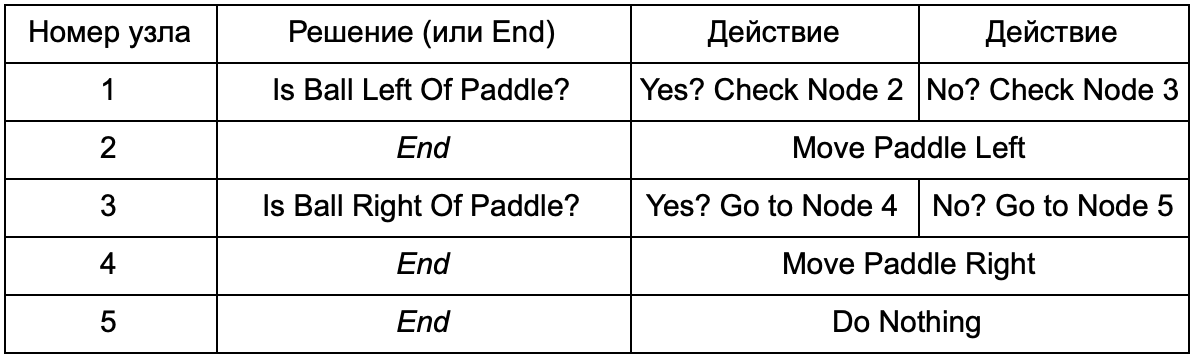

What is the advantage, if the decision tree, does the same work as the if-operators in the previous section? There is a common system, where each solution has only one condition and two possible results. This allows the developer to create AI from data representing solutions in the tree, avoiding its hard-coding. Let's present in the form of a table:

On the code side, you get a system for reading lines. Create a node for each of them, connect the decision logic based on the second column and the child nodes based on the third and fourth columns. You still need to program the conditions and actions, but now the structure of the game will be more difficult. In it, you add additional solutions and actions, and then configure the entire AI, simply by editing the text file with the tree definition. Next, transfer the file to the game designer, who can change the behavior without recompiling the game and changing the code.

Decision trees are very useful when they are built automatically based on a large set of examples (for example, using the ID3 algorithm). This makes them an effective and high-performance tool for classifying situations on the basis of the data obtained. However, we go beyond the simple system for agents to choose actions.

Scenarios

We analyzed the decision tree system, which used pre-created conditions and actions. The person designing the AI can arrange the tree as he wants, but he still has to rely on the coder who programmed it all. What if we could give the designer the tools to create our own conditions or actions?

To prevent the programmer from writing code for Is Ball Left Of Paddle and Is Ball Right Of Paddle conditions, he can create a system in which the designer will record conditions for checking these values. Then the decision tree data will look like this:

In essence, this is the same as in the first table, but the solutions within themselves have their own code, a bit like the conditional part of an if-operator. On the code side, this would be read in the second column for decision nodes, but instead of searching for a specific condition for execution (Is Ball Left Of Paddle), it evaluates the conditional expression and returns true or false, respectively. This is done using the Lua scripting language or Angelscript. Using them, the developer can take objects in his game (ball and paddle) and create variables that will be available in the script (ball.position). In addition, the scripting language is simpler than C ++. It does not require a complete compilation stage, so it is ideal for quickly adjusting game logic and allows non-coders to create the necessary functions themselves.

In the above example, the scripting language is used only to evaluate the conditional expression, but it can also be used for actions. For example, the Move Paddle Right data can become a script operator (ball.position.x + = 10). So that the action is also defined in the script, without the need to program the Move Paddle Right.

You can go even further and write the decision tree completely in the scripting language. This will be code in the form of hard-coded (hardcoded) conditional statements, but they will be in external script files, that is, they can be changed without recompiling the entire program. Often, you can edit the script file during the game to quickly test different AI reactions.

Event Response

The examples above are perfect for pong. They continuously run the Sense / Think / Act cycle and act on the basis of the latest state of the world. But in more complex games you need to respond to individual events, and not to evaluate everything at once. Pong is already a bad example. Choose another.

Imagine a shooter where enemies are still until they find a player, and then act depending on their “specialization”: someone will run “rashit”, someone will attack from afar. This is still a basic reactive system - “if a player is noticed, do something”, but it can be logically divided into the Player Seen event and the reaction (select the answer and execute it).

This brings us back to the Sense / Think / Act cycle. We can pin the Sense part that each frame will check to see if the AI sees the player. If not, nothing happens, but if it sees, then the Player Seen event is raised. The code will have a separate section that says: “when the Player Seen event occurs, do it”, where is the answer you need to refer to the Think and Act parts. Thus, you will set up reactions to the Player Seen event: ChargeAndAttack to the “bashing” character, and HideAndSnipe to the sniper. These links can be created in the data file for quick editing without the need to recompile. And here you can also use the scripting language.

Making difficult decisions

Although simple reaction systems are very effective, there are many situations where they are not enough. Sometimes you need to make various decisions based on what the agent is doing at the moment, but it’s hard to imagine this as a condition. Sometimes there are too many conditions to effectively present them in a decision tree or script. Sometimes you need to assess in advance how the situation will change before deciding on the next step. To solve these problems, more complex approaches are needed.

Finite state machine

Finite state machine or FSM (finite state machine) is a way of saying that our agent is currently in one of several possible states, and that he can move from one state to another. There are a number of such states - hence the name. The best example from life is a traffic light. In different places there are different sequences of lights, but the principle is the same - each state represents something (stop, go, etc.). A traffic light is in only one state at any given time, and moves from one to another based on simple rules.

With NPC in games a similar story. For example, take guards with such states:

- Patrolling

- Attacking.

- Fleeing.

And such conditions for changing its state:

- If the guard sees the enemy, he attacks.

- If the guardian attacks, but no longer sees the enemy, he returns to patrol.

- If the guardian attacks, but is badly injured, he runs away.

You can also write if-operators with a guardian-state variable and various checks: is there an enemy nearby, what is the health level of the NPC, etc. Add some more states:

- Inaction (Idling) - between patrols.

- Search (Searching) - when the observed enemy disappeared.

- Ask for help (Finding Help) - when the enemy is seen, but too strong to fight it alone.

The choice for each of them is limited - for example, the guard will not go looking for a hiding enemy if he has poor health.

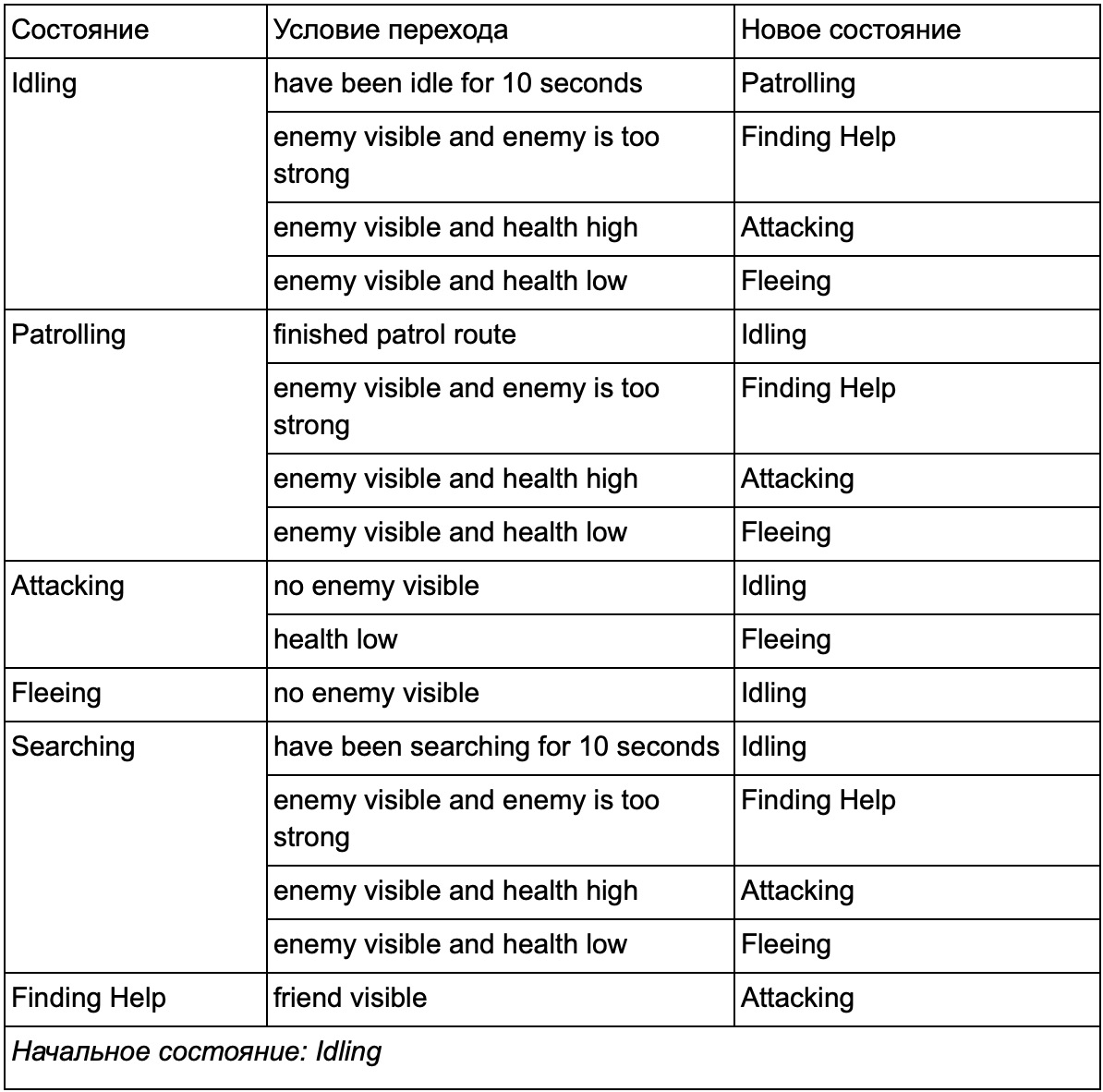

In the end, the huge list “if <x and y, but not z> then <p>” can become too cumbersome, so you should formalize the method that allows us to keep in mind the states and transitions between states. To do this, we take into account all the states, and under each state we write into the list all the transitions to other states, along with the conditions necessary for them.

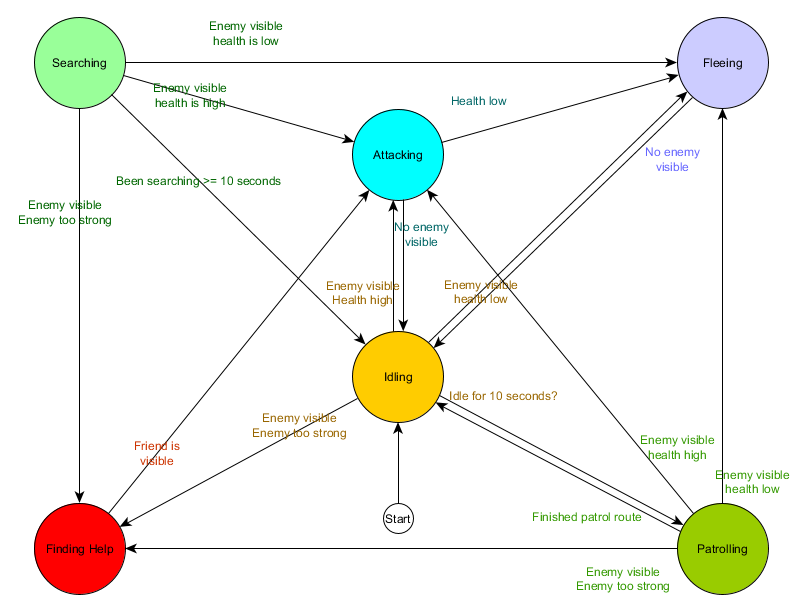

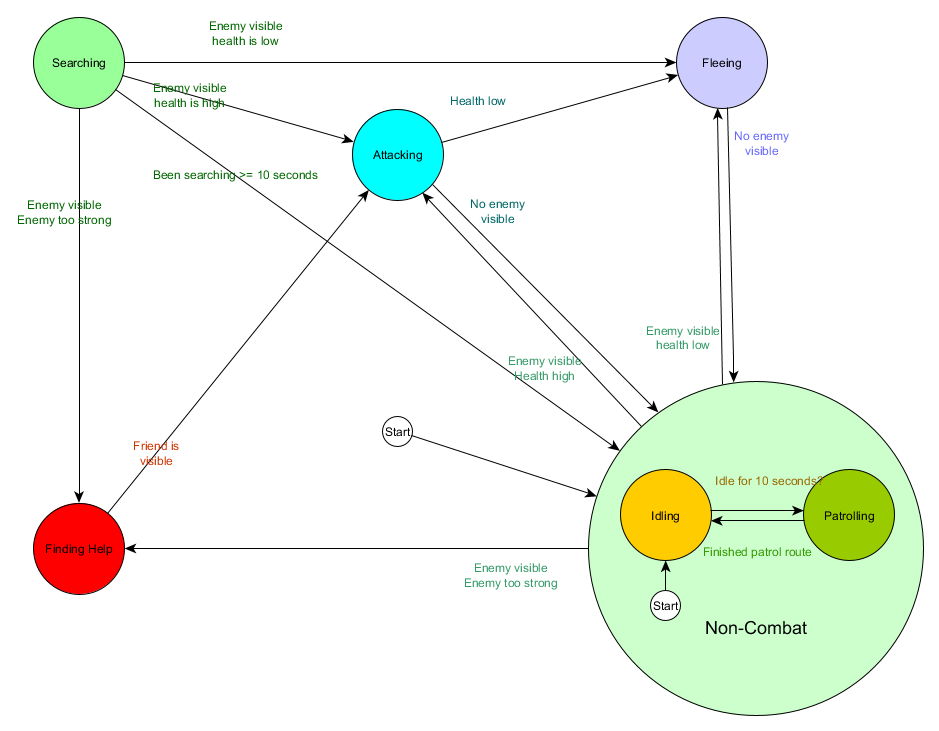

This state transition table is a comprehensive way of representing FSM. Draw a diagram and get a complete overview of how the behavior of the NPC is changing.

The diagram reflects the essence of decision making for this agent based on the current situation. And each arrow shows the transition between states, if the condition next to it is true.

Each update we check the current state of the agent, review the list of transitions, and if the conditions for the transition are met, it takes a new state. For example, each frame is checked if the 10-second timer has expired, and if so, the guard enters Patrolling from the Idling state. In the same way, the Attacking state checks the health of the agent — if it is low, it goes into the Fleeing state.

This is a state transition processing, but what about the behavior associated with the states themselves? Regarding the implementation of the actual behavior for a particular state, there are usually two types of “hooks” where we assign actions to the FSM:

- Actions that we periodically perform for the current state.

- The actions that we take during the transition from one state to another.

Examples for the first type. The Patrolling state each frame will move the agent along the patrol route. Attacking state Each frame will try to start an attack or go to a state where it is possible.

For the second type, consider the transition “if the enemy is visible and the enemy is too strong, then go to the Finding Help state. The agent must choose where to go for help and save this information so that the Finding Help state knows where to turn. Once assistance is found, the agent goes back to the Attacking state. At this point, he will want to tell the ally about the threat, so the NotifyFriendOfThreat action may occur.

Again, we can look at this system through the lens of the Sense / Think / Act cycle. Sense is embodied in the data used by the transition logic. Think - transitions available in every condition. And Act is carried out by actions performed periodically within a state or on transitions between states.

Sometimes continuous polling of transition conditions can be costly. For example, if each agent performs complex calculations every frame to determine whether it sees enemies and see if it is possible to move from the Patrolling state to Attacking - this will take a lot of processor time.

Important changes in the state of the world can be viewed as events that will be processed as they appear. Instead of the FSM checking the transition condition “can my agent see the player?” Each frame, you can configure a separate system to perform checks less frequently (for example, 5 times per second). And the result is to give out Player Seen when the check passes.

This is transmitted to the FSM, which should now go to the condition Player Seen event received and respond accordingly. The resulting behavior is the same except for an almost imperceptible delay before the answer. But the performance became better as a result of separating part of Sense into a separate part of the program.

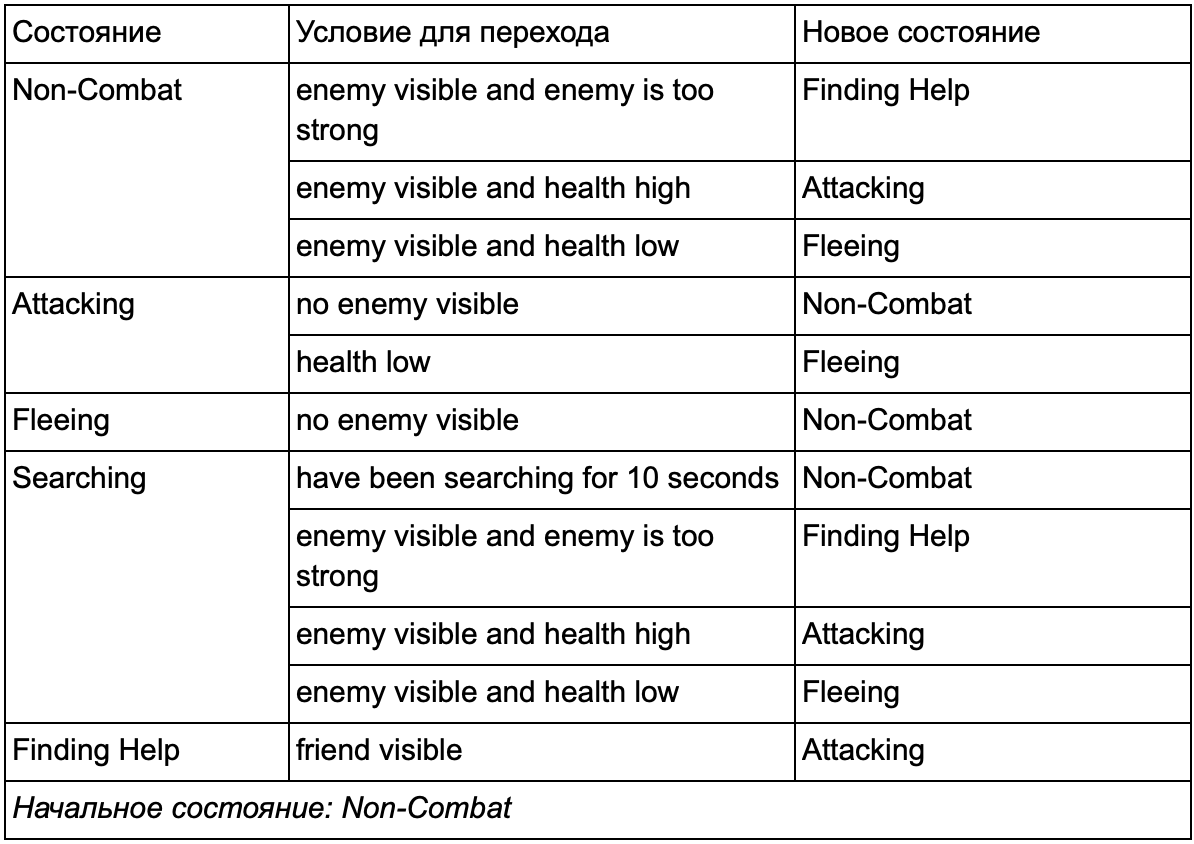

Hierarchical finite state machine

However, working with large FSM is not always convenient. If we want to expand the attack state, replacing it with separate MeleeAttacking (melee combat) and RangedAttacking (long-range combat), we will have to change the transitions from all other states that lead to the Attacking state (current and future).

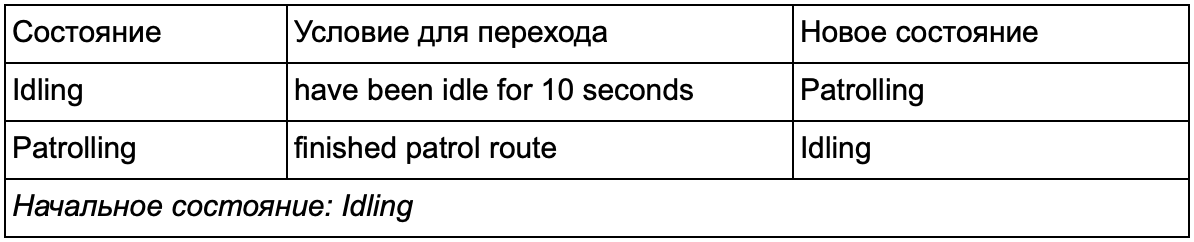

You probably noticed that in our example there are a lot of duplicate transitions. Most transitions in the Idling state are identical to transitions in the Patrolling state. It would be nice not to repeat, especially if we add more similar states. It makes sense to group Idling and Patrolling under the general label "non-combat", where there is only one common set of transitions to combat states. If we represent this label as a state, then Idling and Patrolling will become substates. An example of using a separate transition table for the new non-combat substate:

Basic states:

Condition out of combat:

And in the form of a diagram:

This is the same system, but with a new non-combat condition that includes Idling and Patrolling. With each state containing FSM with substates (and these substates, in turn, contain their own FSM - and so on, as much as you need), we get a Hierarchical Finite State Machine or HFSM (hierarchical finite state machine). Having grouped a non-combat state, we cut out a bunch of redundant transitions. We can do the same for any new states with common transitions. For example, if in the future we extend the Attacking state to the MeleeAttacking and MissileAttacking states, they will be substates, moving between each other based on the distance to the enemy and the availability of ammunition. As a result, complex behaviors and sub-models of behavior can be represented with a minimum of duplicate transitions.

Behavior tree

HFSM creates complex combinations of behaviors in a simple way. However, there is a slight difficulty that decision making in the form of transition rules is closely related to the current state. And in many games this is exactly what you need. A careful use of the state hierarchy can reduce the number of repetitions during a transition. But sometimes you need rules that work no matter what state you are in or that apply in almost any state. For example, if an agent’s health dropped to 25%, you want him to run away regardless of whether he was in a fight, lounging, or talking - you will have to add this condition to each condition. And if your designer later wants to change the low health threshold from 25% to 10%, then you’ll have to do it again.

Ideally, this situation requires a system in which decisions “in which state to be” are outside the states themselves, in order to make changes only in one place and not to touch the conditions of the transition. Here are trees of behavior.

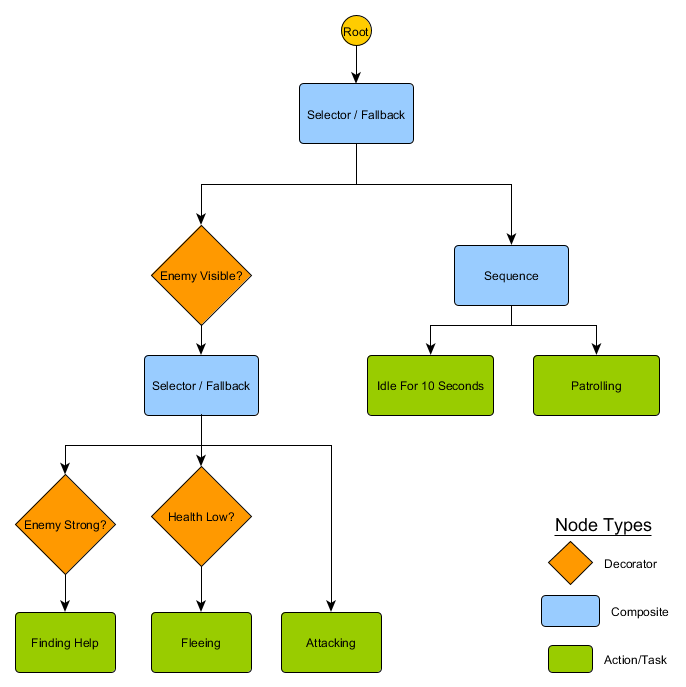

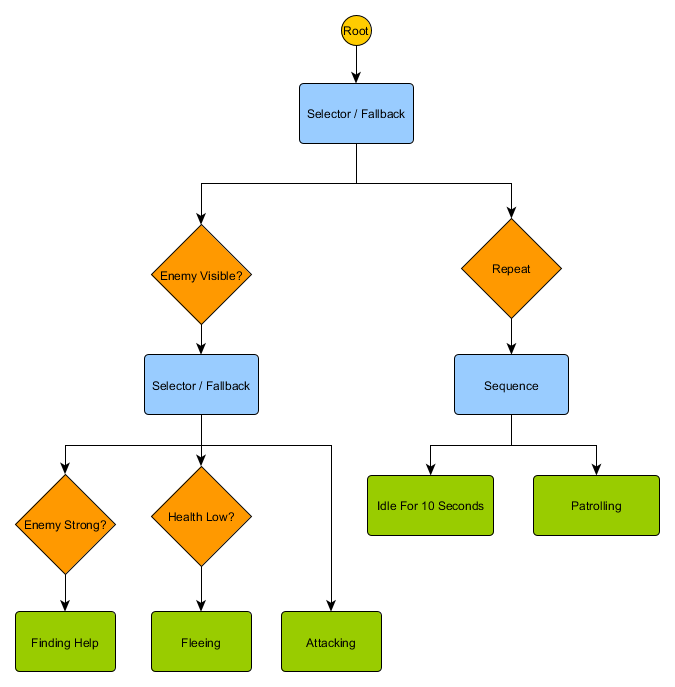

There are several ways to implement them, but the essence for all is about the same and similar to the decision tree: the algorithm starts from the root node, and the tree contains nodes representing either solutions or actions. True, there are a few key differences:

- Now the nodes return one of three values: Succeeded (if the job is completed), Failed (if it cannot be started) or Running (if it is still running and there is no final result).

- No more decision nodes for choosing between two alternatives. Instead, Decorator nodes that have one child node. If they are Succeed, then they perform their only child node.

- Nodes that perform actions return a Running value to represent the actions they are performing.

This small set of nodes can be combined to create a large number of complex behaviors. Imagine the guardian HFSM from the previous example as a behavior tree:

With this structure, there should be no explicit transition from the Idling / Patrolling state to the Attacking state or any other state. If the enemy is visible, and the character’s health is low, execution will stop at the Fleeing node, no matter which node he previously performed — Patrolling, Idling, Attacking, or whatever.

Trees of behavior are complex - there are many ways to make them, and finding the right combination of decorators and composite nodes can be problematic. There are also questions about how often to check a tree - do we want to go through it every part or only when one of the conditions has changed? How to store the node state — how to know when we were in the Idling state for 10 seconds, or how to know which nodes were executed the last time in order to process the sequence correctly?

That is why there are many implementations. For example, on some systems, decorator nodes have been replaced by built-in decorators. They re-evaluate the tree when the conditions of the decorator change, help to join nodes and provide periodic updates.

Utility-based system

Some games have many different mechanics. It is desirable that they receive all the advantages of simple and general rules of transition, but not necessarily in the form of a complete tree of behavior. Instead of having a clear set of choices or a tree of possible actions, it is easier to study all the actions and choose the most suitable at the moment.

Utility-based system (system based on utility) will help with just that. This is a system where an agent has many actions, and he chooses which one to perform, based on the relative utility of each. Where utility is an arbitrary measure of how important or desirable this action is for an agent.

The calculated utility of the action based on the current state and environment, the agent can check and select the most appropriate other state at any time. This is similar to FSM, except where transitions are determined by the score for each potential state, including the current one. Please note that we choose the most useful action for the transition (or stay if we have already completed it). For more variety, this may be a weighted but random selection from a small list.

The system assigns an arbitrary range of utility values - for example, from 0 (completely undesirable) to 100 (fully desirable). Each action has a number of parameters that affect the calculation of this value. Returning to our example with the guard:

Transitions between actions are ambiguous - any state can follow any other. Action priorities are in the returned utility values. If the enemy is visible, and this enemy is strong, and the character's health is low, then both Fleeing and FindingHelp will return high non-zero values. At the same time FindingHelp will always be higher. Similarly, non-combat actions never return more than 50, so they will always be lower than combat. You need to take this into account when creating actions and calculating their utility.

In our example, actions return either a fixed constant value or one of two fixed values. A more realistic system involves returning the estimate from a continuous range of values. For example, the Fleeing action returns higher utility values if the health of the agent is low, and the Attacking action returns lower values if the enemy is too strong. Because of this, Fleeing takes precedence over Attacking in any situation where the agent feels that he does not have enough health to defeat the enemy. This allows you to change the priorities of actions based on any number of criteria, which makes this approach more flexible and variable than the behavior tree or FSM.

Each action has many conditions for the calculation of the program. They can be written in a scripting language or as a series of mathematical formulas. In The Sims, which models the character's daily routine, adds an extra level of computation — the agent gets a number of “motivations” that affect the utility's scores. If a character is hungry, then over time he will starve even more, and the result of the usefulness of the action of EatFood will grow until the character performs it, reducing the level of hunger, and returning the value of EatFood to zero.

The idea of choosing actions based on a rating system is quite simple, so the Utility-based system can be used as part of AI's decision-making processes, and not as a complete replacement. A decision tree can request a utility estimate of two child nodes and select a higher one. Similarly, a behavior tree can have a composite Utility node for evaluating the usefulness of actions to decide which child to execute.

Movement and navigation

In the previous examples, we had a platform that we moved left or right, and a guard who patrolled or attacked. But how exactly do we handle the moving agent for a specific period of time? , , , , ? .

Control

, , , . , , . . Sense/Think/Act, , Think , Act . , , . — , . , , . :

desired_travel = destination_position – agent_position

2D-. (-2,-2), - - (30, 20), , — (32, 22). , — 5 , (4.12, 2.83). 8 .

. , , 5 / ( ), . , .

— , , , . . steering behaviours, : Seek (), Flee (), Arrival () . . , , , .

. Seek Arrival — . Obstacle Avoidance ( ) Separation () , . Alignment () Cohesion () . steering behaviours . , Arrival, Separation Obstacle Avoidance, . .

, — , - Arrival Obstacle Avoidance. , , . : , .

, , - .

Steering behaviours ( ), — . pathfinding ( ), .

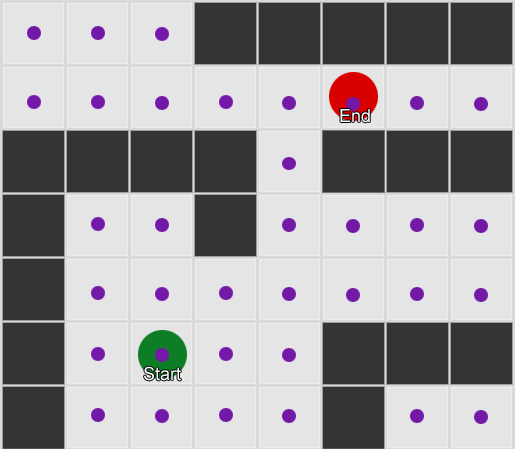

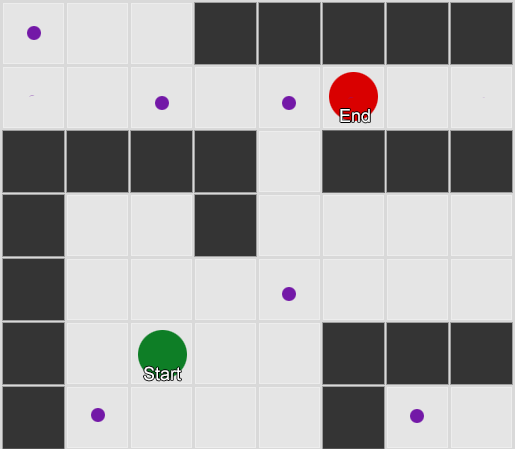

— . - , , . . , ( , ). , Breadth-First Search BFS ( ). ( breadth, «»). , , — , , .

, . (, pathfinding) — , , .

, , steering behaviours, — 1 2, 2 3 . — , — . - .

BFS — «» , «». A* (A star). , - ( , ), , , . , — «» ( ) , ( ).

, , , . , BFS, — .

, . . ? — , — , .

, — . A* BFS . : , — , . (waypoint), , .

1: . , , .

2: ( ). , , .

. , waypoint, . , .

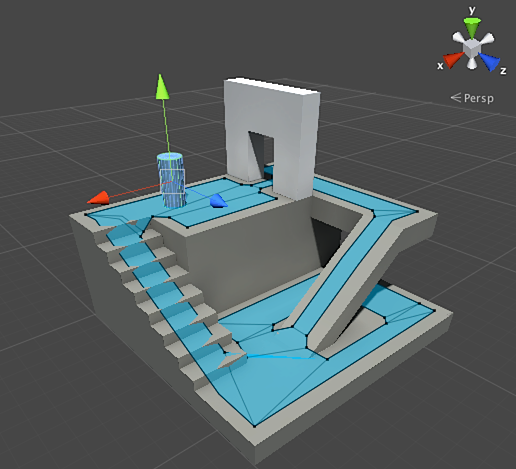

navigation mesh navmesh ( ). 2D- , — , . , .

Unity — navmesh ( - ). navmesh — , . , — , , .

, A*. , .

Pathfinding — , . , .

pathfinding, — , . : , , , , . . Pathfinding . Sense/Think/Act, , Think Act .

Magic: The Gathering. :

- Swamp — 1 ( ).

- Forest — 1 ( ).

- Fugitive Wizard — 1 .

- Elvish Mystic — 1 .

, . 1 , «» , , ( ) . - , Forest, «» 1 , Elvish Mystic. ?

— , . , , Swamp. . ? Elvish Mystic, Fugitive Wizard, , Swamp . Forest, Swamp. , , . .

, . , ( pathfinding), . , .

, « , ». , :

1. Swamp (: Swamp )

2. Forest (: Forest )

, . , Swamp — Swamp ( ), Forest ( ). — 1 , . Tap the Swamp, 1 .

1. Swamp (: Swamp )

1.1 «» Swamp (: Swamp «», +1 )

–

2. Forest (: Forest )

, . . Forest, « 1 », — Elvish Mystic.

1. Swamp (: Swamp )

1.1 «» Swamp (: Swamp «», +1 )

–

2. Forest (: Forest )

2.1 «» Forest (: Forest «», +1 )

2.1.1 Elvish Mystic (: Elvish Mystic , -1 )

–

, , .

. , , - . , . 1 3 . Swamp , 1 . Forest → Tap the Forest → Elvish Mystic — 4 .

Magic: The Gathering, . , , . , XCOM . , .

, . Magic: The Gathering: , — . .

— backwards chaining ( ). , . , — . .

1 , « 1 ». :

1. — .

2. — .

3. — .

4. — .

— best-first search ( ). , . . A* — — , .

best-first search Monte Carlo Tree Search. , , , ( ). «» . , , , ( , ).

Goal-Oriented Action Planning GOAP ( ). , , , backwards chaining, . « », , : → → .

, . ( « », ), .

, , . , - . , - . , . , -, . .

Before we move on to complex examples, let's estimate how far we can go, taking a few simple measurements and using them to make decisions. For example, a real-time strategy - how can we determine whether a player will be able to launch an attack in the first few minutes of the game and what defense against this to prepare? We can study the past experience of the player to understand what the future reaction may be. Let's start with the fact that we do not have such source data, but we can collect them - every time the AI plays against a person, he can record the time of the first attack. After several sessions, we will get an average value of the time through which the player will attack in the future.

Mean values have a problem: if a player “rashil” 20 times, and played 20 times slowly, then the necessary values will be somewhere in the middle, and this will not give us anything useful. One solution is to limit the input data - you can consider the last 20 pieces.

A similar approach is used in assessing the likelihood of certain actions, assuming that the player’s past preferences will be the same in the future. If a player attacks us five times with a fireball, twice with lightning and once hand to hand, it is obvious that he prefers the fireball. We extrapolate and see the probability of using various weapons: fireball = 62.5%, lightning = 25% and hand-to-hand = 12.5%. Our game AI needs to prepare for fire protection.

Another interesting method is to use the Naive Bayes Classifier (a naive Bayes classifier) to study large amounts of input data and classify the situation so that the AI responds as needed. Bayesian classifiers are best known for using email in spam filters. There they investigate words, compare them with where these words appeared earlier (in spam or not), and draw conclusions about incoming letters. We can do the same with even less input. Based on all the useful information that the AI sees (for example, which enemy units are created, or what spells they use, or what technologies they explored), and the final result (war or peace, "crush" or defend, etc.) - we will select the desired behavior of the AI.

All of these learning methods are sufficient, but it is advisable to use them based on data from testing. The AI will learn to adapt to the different strategies that your playters used. An AI that adapts to the player after release may become too predictable or, on the contrary, too difficult to win.

Value based adaptation

Given the content of our game world and rules, we can change the set of values that influence decision making, and not just use the input data. We do this:

- Let AI collect data on the state of the world and key events during the game (as indicated above).

- Change some important values based on this data.

- We implement our solutions based on the processing or evaluation of these values.

For example, an agent has several rooms to choose from a first-person shooter map. Each room has its own value, which determines how desirable it is to visit. The AI randomly chooses which room to go to based on the value value. Then the agent remembers which room he was killed in and reduces its value (the probability that he will return there). Similarly, for the opposite situation - if the agent destroys many opponents, then the value of the room increases.

Markov model

What if we use the collected data for forecasting? If we remember every room in which we see a player for a certain period of time, we will predict which room the player can go to. Having tracked and recorded the player's movement through the rooms (values), we can predict them.

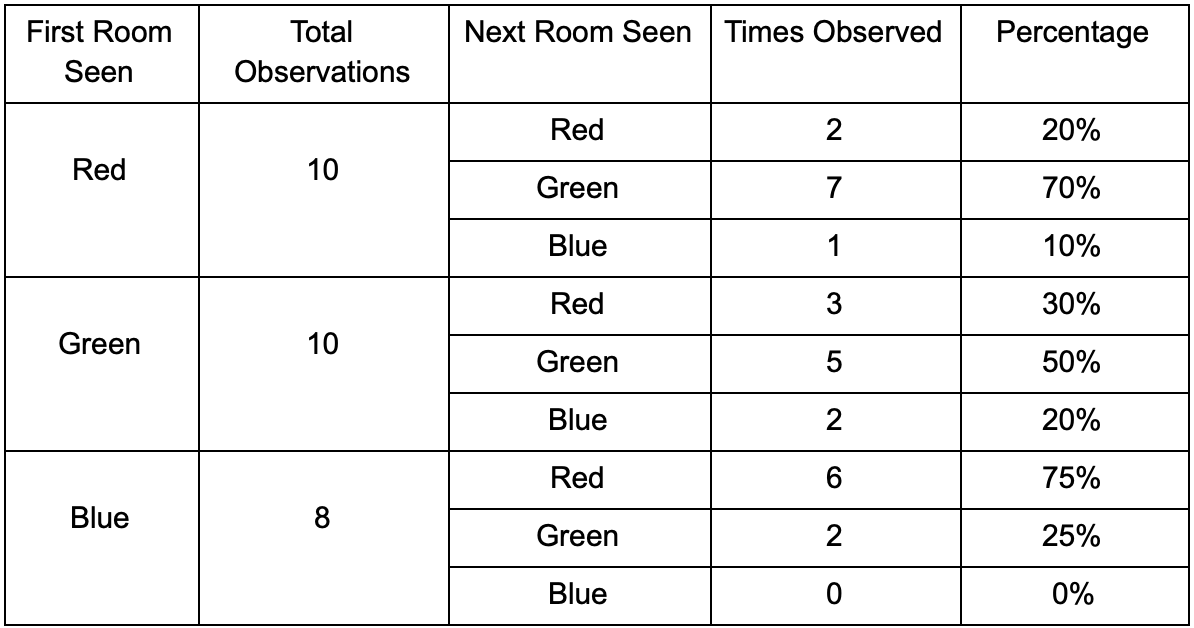

Take three rooms: red, green and blue. As well as the observations that we recorded when watching a game session:

The number of observations of each room is almost equal - we still do not know where to make a good place for an ambush. Statistics collection is also complicated by respawn players, which appear evenly throughout the map. But the data on the next room, which they enter after appearing on the map, is already useful.

It can be seen that the green room suits the players - most of the people from the red one go into it, 50% of which remain there further. The blue room, on the contrary, does not enjoy popularity, they almost don’t go to it, and if they do, they don’t linger.

But the data tells us something more important - when a player is in the blue room, the next room in which we will likely see him will be red, not green. Despite the fact that the green room is more popular than red, the situation changes if the player is in the blue. The next state (i.e. the room into which the player will move) depends on the previous state (i.e. the room in which the player is now). Because of the study of dependencies, we will make predictions more accurately than if we simply calculated the observations independently of each other.

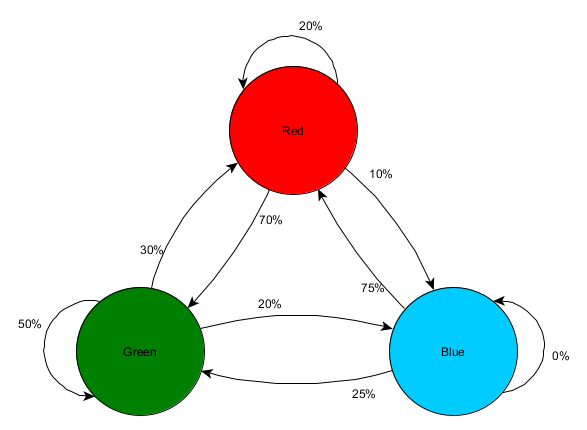

Predicting the future state based on past data is called the Markov model, and such examples (with rooms) are called Markov chains. Since the models represent the probability of changes between successive states, they are visually displayed as FSM with a probability around each transition. Previously, we used FSM to represent the behavioral state in which the agent was located, but this concept applies to any state, regardless of whether it is related to the agent or not. In this case, the states represent the room occupied by the agent:

This is a simple version of the representation of the relative probability of state changes, which gives AI some possibility to predict the next state. You can predict a few steps forward.

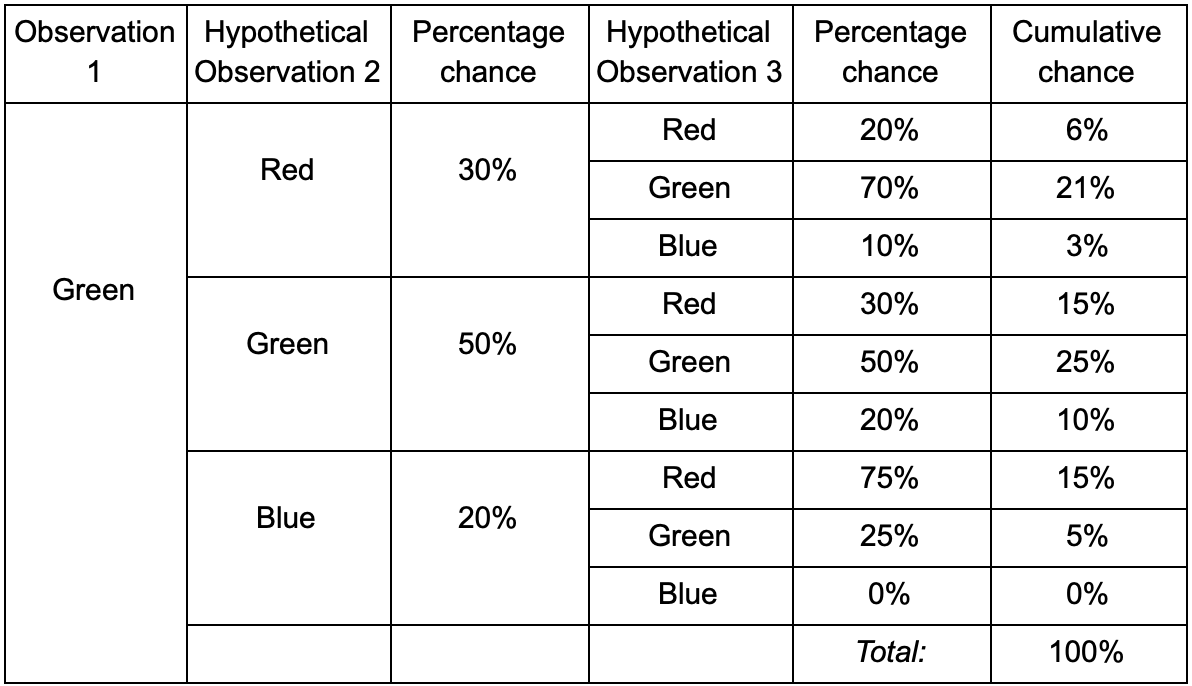

If the player is in the green room, then there is a 50% chance that he will remain there on the next observation. But what is the likelihood that he will still be there even after? There is not only a chance that the player remained in the green room after two observations, but also a chance that he left and returned. Here is a new table with new data:

It shows that the chance to see the player in the green room after two observations will be 51% - 21%, that he will have from the red room, 5% of them, that the player will visit the blue room between them, and 25% that the player does not will leave the green room.

The table is simply a visual tool - the procedure only requires multiplying the probabilities at each step. This means that you can look far into the future with one amendment: we assume that the chance to enter the room depends entirely on the current room. This is called the Markov Property - the future state depends only on the present. But this is not absolutely accurate. Players can change decisions depending on other factors: the level of health or the amount of ammunition. Since we do not fix these values, our predictions will be less accurate.

N-grams

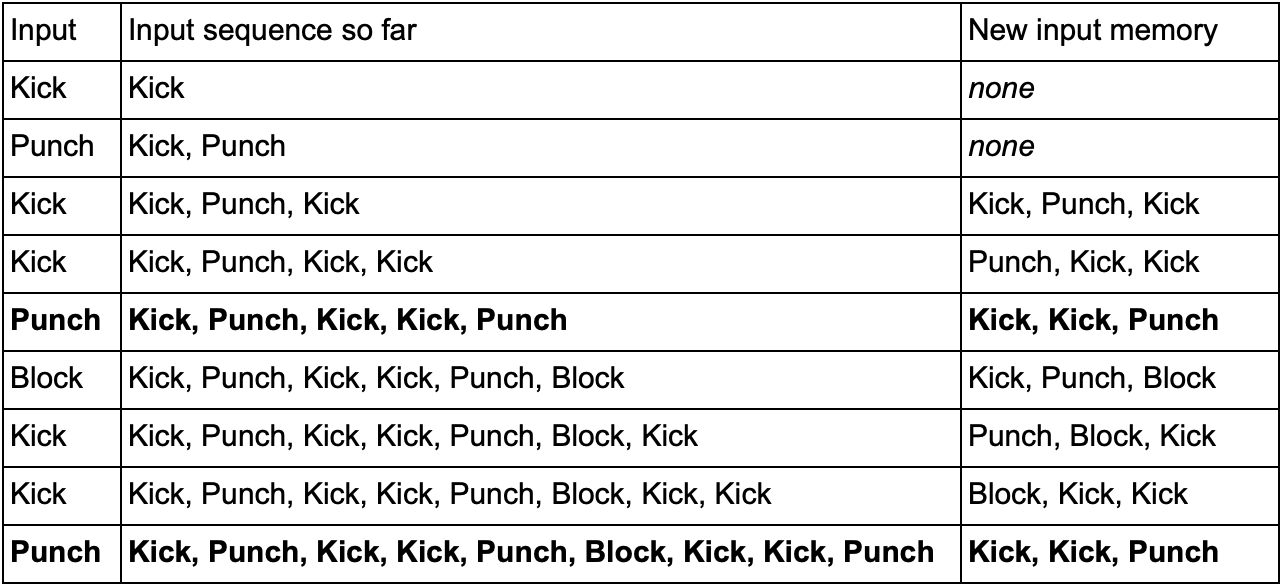

And what about the example of the fighting game and the prediction of player combo tricks? Same! But instead of a single state or event, we will explore the whole sequences that make up the combo strike.

One way to do this is to save each input (for example, Kick, Punch or Block) to the buffer and record the entire buffer as an event. So, the player repeatedly presses Kick, Kick, Punch to use the SuperDeathFist attack, the AI system stores all entries in the buffer and remembers the last three used in each step.

(Bold lines are highlighted when the player launches the SuperDeathFist attack.)

The AI will see all the options when the player chooses a Kick, followed by another Kick, and then notice that the next entry is always Punch. This will allow the agent to predict the SuperDeathFist combo method and block it, if possible.

These sequences of events are called N-grams (N-grams), where N is the number of stored items. In the previous example, it was a 3-gram (trigram), which means: the first two records are used to predict the third. Accordingly, in the 5-gram first four records predict the fifth and so on.

The developer needs to carefully choose the size of N-grams. A smaller number N requires less memory, but also stores a smaller history. For example, a 2-gram (bigram) will record Kick, Kick or Kick, Punch, but will not be able to store Kick, Kick, Punch, so the AI will not respond to the SuperDeathFist combo.

On the other hand, larger numbers require more memory and AI will be harder to learn, as there will be many more options. If you had three possible Kick, Punch or Block inputs, and we used a 10-gram, you will get about 60 thousand different variants.

A bigram model is a simple Markov chain — each pair of “past state / current state” is a bigram, and you can predict a second state based on the first. 3-grams and larger N-grams can also be regarded as Markov chains, where all the elements (except the last in the N-gram) together form the first state, and the last element is the second. The example with the fighting game shows the chance of transition from the state of Kick and Kick to the state of Kick and Punch. Considering several entries in the input history as one unit, we, in essence, convert the input sequence into a part of the whole state. This gives us the Markov property, which allows us to use Markov chains to predict the next input and guess which combo move will be next.

Conclusion

We talked about the most common tools and approaches in the development of artificial intelligence. They also examined situations in which they need to be applied and where they are especially useful.

This should be enough to understand the basic things in game AI. But, of course, this is not all methods. The less popular, but no less effective are:

- optimization algorithms, including hill climbing, gradient descent and genetic algorithms

- competitive search / scheduling algorithms (minimax and alpha-beta pruning)

- classification methods (perceptrons, neural networks and support vector machines)

- systems for processing perception and agent memory

- architectural approaches to AI (hybrid systems, a subset of architectures and other ways of imposing AI systems)

- animation tools (motion planning and coordination)

- performance factors (level of detail, algorithms anytime, and timeslicing)

Online resources on the topic:

1. On GameDev.net there is a section with articles and tutorials on AI , as well as a forum .

2. AiGameDev.com contains many presentations and articles on a wide range of related to the development of game AI.

3. The GDC Vault includes topics from the GDC AI Summit, many of which are available for free.

4. Useful materials can also be found on the site AI Game Programmers Guild .

5. Tommy Thompson, an AI researcher and game developer, makes videos on the AI and Games YouTube channel with an explanation and study of AI in commercial games.

Books on the subject:

1. The Game AI Pro book series is a collection of short articles explaining how to implement specific functions or how to solve specific problems.

Game AI Pro: Collected Wisdom of Game AI Professionals

Game AI Pro 2: Collected Wisdom of Game AI Professionals

Game AI Pro 3: Collected Wisdom of Game AI Professionals

2. AI Game Programming Wisdom Series - the predecessor of the Game AI Pro series. It has older methods, but almost all are relevant even today.

AI Game Programming Wisdom 1

AI Game Programming Wisdom 2

AI Game Programming Wisdom 3

AI Game Programming Wisdom 4

3. Artificial Intelligence: A Modern Approach is one of the basic texts for everyone who wants to understand the general field of artificial intelligence. This book is not about game development - it teaches the basics of AI.

Source: https://habr.com/ru/post/428892/

All Articles