WDM Technologies: Integrating Data Centers into Resilient Clusters

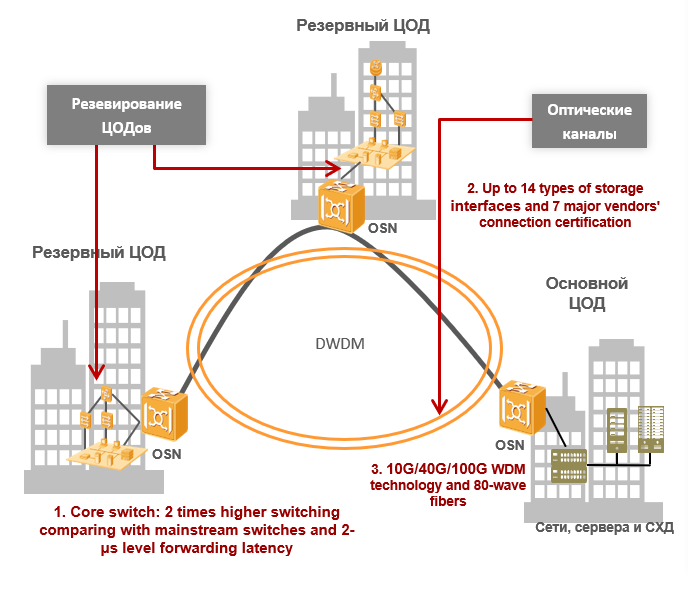

Modern data centers are reliable, but critical facilities need one more level of redundancy, because an entire IT infrastructure can fail in a man-made or natural disaster. Disaster recovery requires backup data centers. This article covers the problems that arise when such sites are interconnected (DCI, Data Center Interconnect).

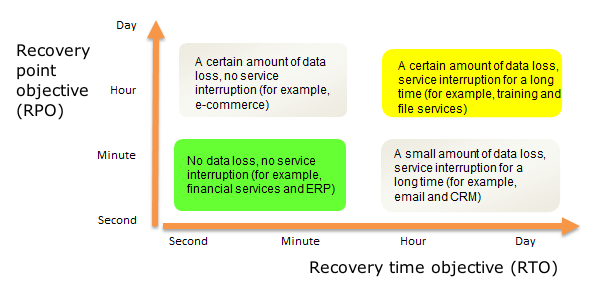

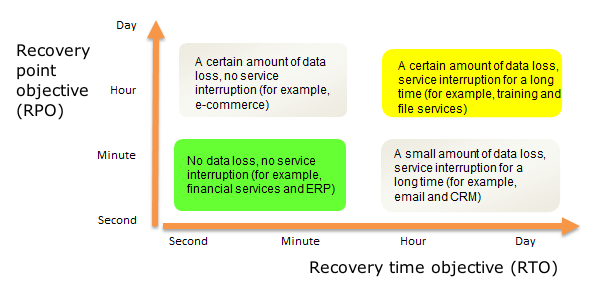

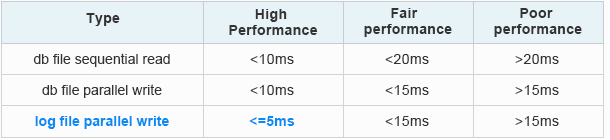

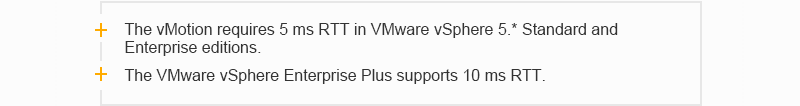

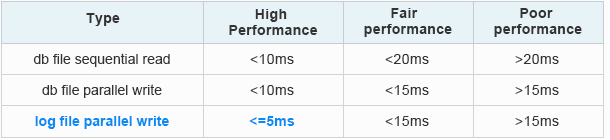

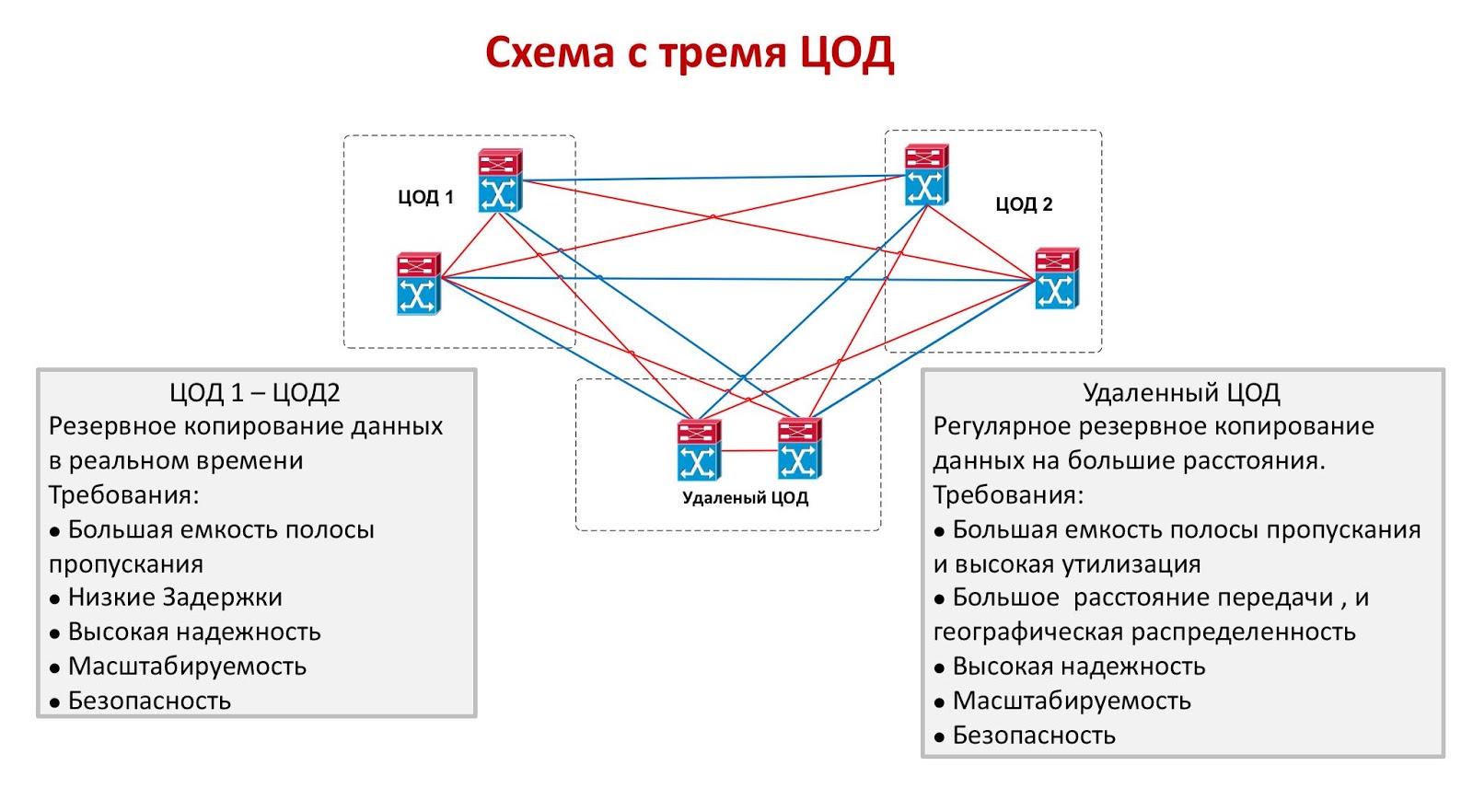

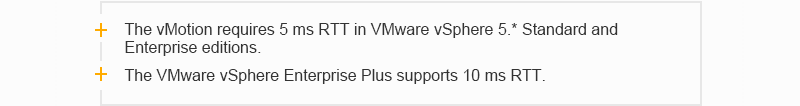

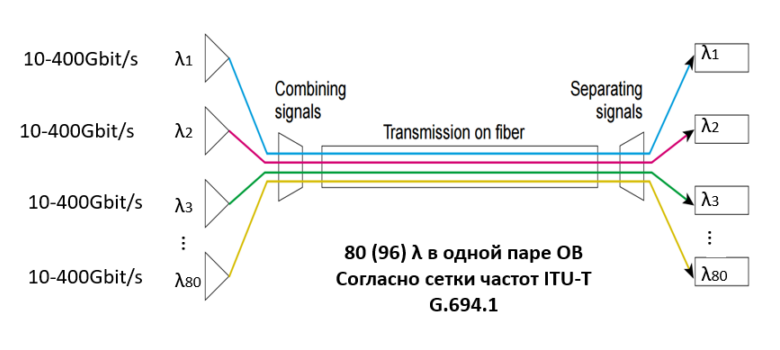

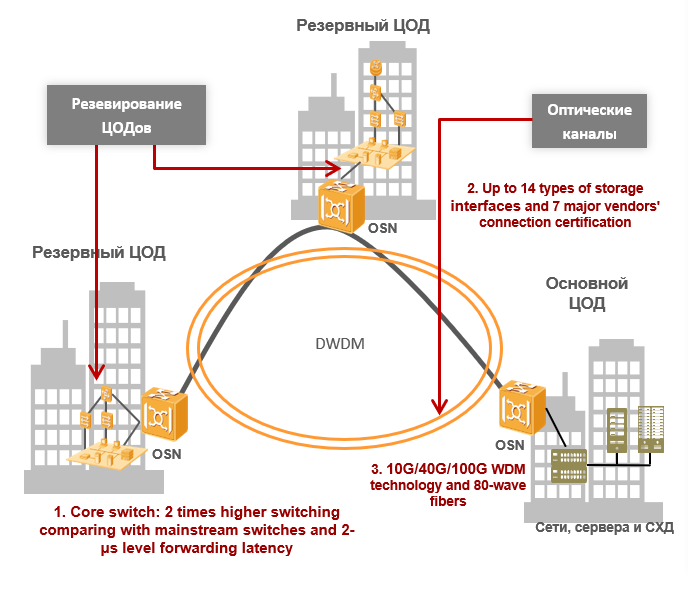

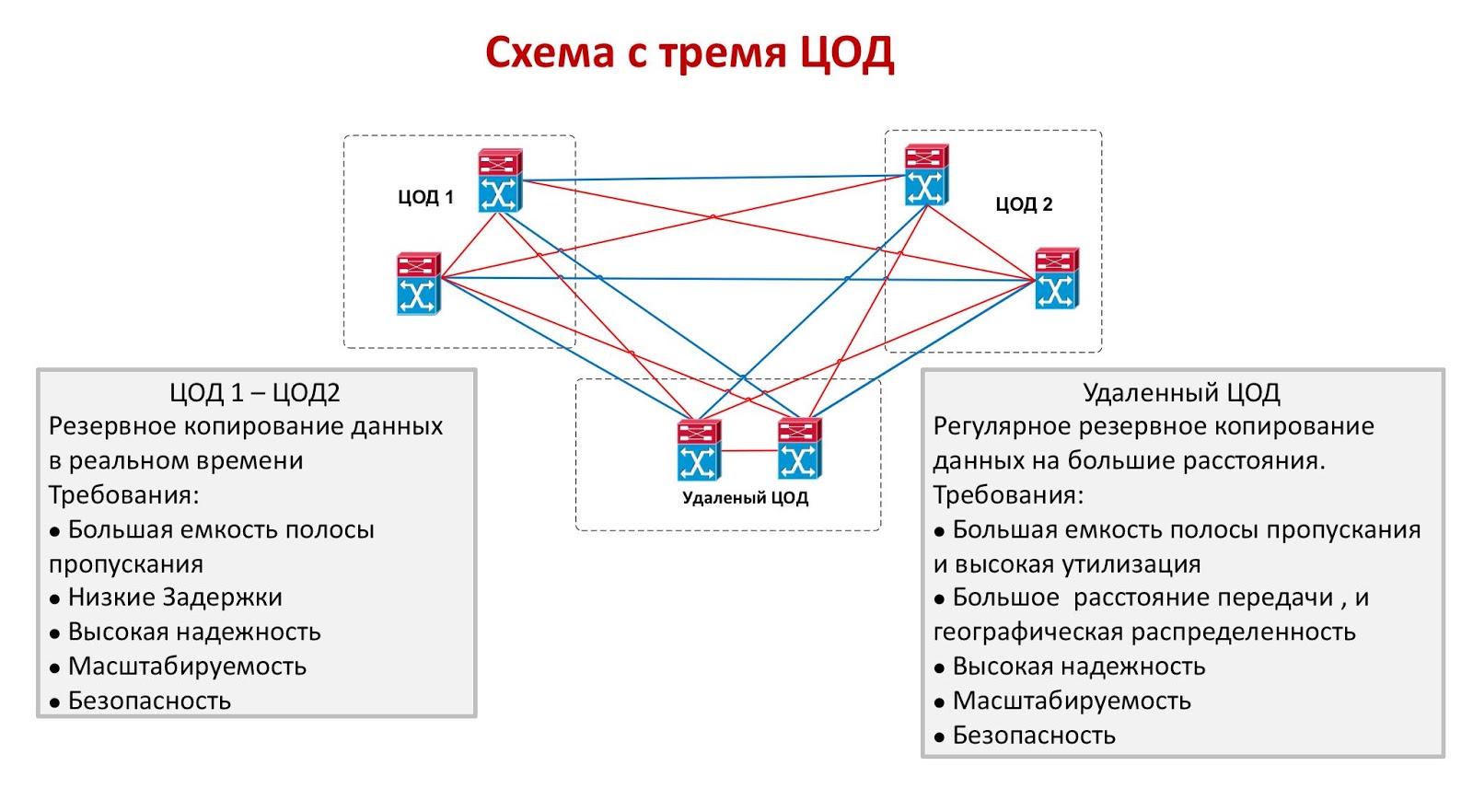

The volume of data that humanity processes has grown enormously, and IT infrastructure plays such a large role in business processes that even a short failure can paralyze a company. Digital technologies are being adopted everywhere, and the financial sector, telecom and large-scale online retail depend on them especially heavily. For a large cloud provider, a bank or a major telecom operator, the reliability of a single data center is not enough: even brief downtime can cause enormous losses, and avoiding them requires a disaster-tolerant infrastructure. The only way to build one is to increase redundancy, which means building backup data centers.


Separate high availability concepts from disaster recovery

Both corporate data centers and equipment hosted in leased space can be combined. In geo-distributed solutions, fault tolerance is achieved through the software architecture, so owners can save on the facilities themselves: they need not build to Tier III, or even Tier II. They can do without diesel generators, use caseless servers, experiment with extreme temperature regimes and try other interesting tricks. Leased space offers fewer degrees of freedom because the provider sets the rules, but the principles of interconnection are the same. Before discussing disaster-tolerant IT services, it is worth recalling three abbreviations: RTO, RPO and RCO. These key indicators describe the ability of an IT infrastructure to withstand disruptions.

RTO (Recovery Time Objective) - the permissible time to restore an IT system after an incident;

RPO (Recovery Point Objective) - the amount of data loss permitted during disaster recovery, usually measured as the maximum period for which data may be lost;

RCO (Recovery Capacity Objective) - the share of the IT load that the backup system can take over. It can be measured as a percentage, in transactions or in other conventional units.

Here it is important to distinguish between high availability (HA) solutions and disaster recovery (DR) solutions. The difference between them can be shown on a diagram with RPO and RTO as the coordinate axes.

Ideally, no data is lost, no time is spent on recovery after a failure, and the backup site keeps the services fully operational even if the main site is destroyed. Zero RTO and RPO can be achieved only when the data centers operate synchronously: in effect, this is a geographically distributed fault-tolerant cluster with real-time data replication. In asynchronous mode, data integrity is no longer guaranteed: because replication runs at intervals, some information may be lost. Switching to the backup site then takes from several minutes to several hours; the upper end applies to a so-called cold standby, where most of the backup equipment is powered off and consumes no electricity.
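The link between replication mode and RPO can be sketched in a few lines of Python. This is only an illustration under a simplified assumption: synchronous replication loses nothing, while asynchronous replication can lose everything written since the last run. The `ReplicationLink` class, its fields and the 15-minute interval are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class ReplicationLink:
    """Hypothetical description of how a backup site receives data."""
    synchronous: bool
    interval_s: float = 0.0  # time between asynchronous replication runs

    def worst_case_rpo_s(self) -> float:
        # Simplified model: synchronous replication loses no data,
        # asynchronous replication can lose one full interval of writes.
        return 0.0 if self.synchronous else self.interval_s


def meets_rpo(link: ReplicationLink, rpo_target_s: float) -> bool:
    """Return True if the worst-case data loss stays within the RPO target."""
    return link.worst_case_rpo_s() <= rpo_target_s


sync_link = ReplicationLink(synchronous=True)
async_link = ReplicationLink(synchronous=False, interval_s=900)  # assumed 15 minutes

print(meets_rpo(sync_link, rpo_target_s=0))      # True
print(meets_rpo(async_link, rpo_target_s=0))     # False
print(meets_rpo(async_link, rpo_target_s=3600))  # True
```

A real assessment would also account for transfer time and for RTO and RCO, which this sketch leaves out.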

Technical nuances

The technical difficulties of combining two or more data centers fall into three categories: data transfer delays, insufficient bandwidth of the communication channels, and information security. Data centers are usually connected over their own or leased fiber-optic lines, so the rest of this article focuses on those. For data centers operating in synchronous mode, the main problem is delay. To support real-time data replication, delays must not exceed 20 milliseconds, and sometimes 10 milliseconds, depending on the type of application or service.

Otherwise, protocols of the Fibre Channel family, which modern storage systems can hardly do without, will not work. With these protocols, the higher the speed, the lower the delay must be. There are, of course, protocols for running storage networks over Ethernet, but much depends on the applications used in the data center and on the installed equipment. Below are examples of delay requirements for common Oracle and VMware applications:

Oracle Extended Distance Cluster Delay Requirements:

How to Tell if the I/O of the Database is Slow [ID 1275596.1]

VMware Delay Requirements:

VMware vSphere Metro Storage Cluster Case Study (VMware vSphere 5.0)

The signal delay during data transmission can be split into two components: T_total = T_equip + T_fiber, where T_equip is the delay introduced by the equipment and T_fiber is the delay introduced by the optical fiber. The equipment delay (T_equip) depends on the equipment architecture and on how data is encapsulated during optical-electrical conversion. In DWDM equipment, this function is performed by transponder or muxponder modules. Therefore, when linking two data centers, the type of transponder (muxponder) is chosen with particular care so that the delay it introduces is as small as possible.

In synchronous mode, the propagation delay in the optical fiber (T_fiber) plays an important role. The speed of light in a standard optical fiber (for example, G.652) depends on the refractive index of its core and is approximately 70% of the speed of light in a vacuum (~300,000 km/s). Without going deep into the physics, it is easy to calculate that the delay is about 5 microseconds per kilometer, or roughly 0.5 milliseconds one way over 100 kilometers. Therefore, two data centers can operate synchronously at a distance of only about 100 kilometers.
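The arithmetic behind the per-kilometer figure can be reproduced with a short Python sketch. It uses the two rounded constants quoted in the text (300,000 km/s and 70%), so it yields about 4.8 microseconds per kilometer, which the article rounds to 5. The function names and the default equipment delay of zero are assumptions made for the example, not values from any vendor.

```python
C_VACUUM_KM_S = 300_000     # approximate speed of light in a vacuum, km/s
FIBER_SPEED_FRACTION = 0.7  # approximate fraction of c in G.652 fiber (from the text)


def fiber_delay_us(distance_km: float) -> float:
    """One-way propagation delay in the fiber, in microseconds."""
    speed_km_s = C_VACUUM_KM_S * FIBER_SPEED_FRACTION
    return distance_km / speed_km_s * 1_000_000


def total_delay_us(distance_km: float, equipment_delay_us: float = 0.0) -> float:
    """One-way total delay: equipment delay plus fiber delay."""
    return equipment_delay_us + fiber_delay_us(distance_km)


per_km = fiber_delay_us(1)
one_way_100km = total_delay_us(100)

print(f"{per_km:.2f} us per km")                         # 4.76 us per km
print(f"{one_way_100km:.0f} us one way over 100 km")     # 476 us one way over 100 km
print(f"{2 * one_way_100km / 1000:.2f} ms round trip")   # 0.95 ms round trip
```

Passing a measured transponder or muxponder delay as `equipment_delay_us` shows how much of the budget the equipment consumes compared with the fiber itself.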

In asynchronous mode, the delay requirements are less strict, but as the distance between sites grows, attenuation of the optical signal in the fiber starts to matter. The signal has to be amplified and regenerated, which means building your own transmission systems or leasing backbone channels. The volume of traffic between two data centers is large and keeps growing; the main drivers are virtualization, cloud services, migration, and the connection of new servers and storage systems. This can lead to insufficient channel bandwidth, which cannot be increased indefinitely because spare fibers are lacking or leasing them is too expensive. The last important point concerns information security: data passing between data centers must be encrypted, which also adds latency. There are other issues, such as the complexity of administering a distributed system, but their impact is smaller, and the technical obstacles mostly stem from the characteristics of the communication channels and the terminal equipment.

Two or three - economic difficulties

Both modes of combining data centers have significant drawbacks. Sites working synchronously must be located close to each other, which does not guarantee that at least one of them survives a large-scale disaster. This option does protect reliably against human error, fire, the destruction of a machine room in a plane crash or another local emergency, but it is far from certain that the pair would survive, for example, a catastrophic earthquake. In asynchronous mode, sites can be thousands of kilometers apart, but acceptable RTO and RPO values can no longer be achieved. The ideal solution would be a scheme with three data centers: two operating synchronously and a third located as far from them as possible, serving as an asynchronous reserve.

The only problem with the three-data-center scheme is its extremely high cost. Even a single backup site is expensive, and few can afford to keep two data centers idle. A similar approach is sometimes used in the financial sector when the cost of a transaction is very high: a large exchange may run three small data centers, whereas banks prefer a synchronous pair. In other industries, two data centers are usually combined in either synchronous or asynchronous mode.

DWDM - the optimal solution for DCI

A customer who needs to combine two data centers will inevitably run into the problems described above. To solve them, we use DWDM (dense wavelength division multiplexing), which multiplexes a number of carrier signals into a single optical fiber using different wavelengths (λ, lambda). One fiber pair can carry up to 80 (96) wavelengths on the ITU-T G.694.1 frequency grid. Each wavelength carries 100 Gbit/s, 200 Gbit/s or 400 Gbit/s, so the capacity of one fiber pair can reach 80 λ × 400 Gbit/s = 32 Tbit/s. Designs that provide 1 Tbit/s per wavelength already exist and will deliver even more bandwidth in the near future. This removes the channel throughput problem: instead of adding fibers, the customer uses the existing ones far more efficiently.
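The capacity figure is a simple product of channel count and per-wavelength rate, which the following Python sketch tabulates for the values mentioned in the text. The function name is hypothetical, and the sketch only multiplies: whether a given line rate actually fits a given channel spacing depends on the equipment and is not modeled here.

```python
def pair_capacity_tbps(wavelengths: int, rate_gbps: int) -> float:
    """Aggregate capacity of one fiber pair in Tbit/s."""
    return wavelengths * rate_gbps / 1000


# Channel counts and per-wavelength rates quoted in the text.
for channels in (80, 96):
    for rate in (100, 200, 400):
        capacity = pair_capacity_tbps(channels, rate)
        print(f"{channels} x {rate} Gbit/s = {capacity:.1f} Tbit/s")
```

The 80 x 400 Gbit/s row gives 32.0 Tbit/s, matching the figure above.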

Wavelength multiplexing solves the bandwidth problem, and for data centers operating in synchronous mode this is sufficient, since the delays between them are small because of the short distance and depend mostly on the type of transponder (or muxponder) used in the DWDM system. One of the main features of DWDM is worth noting: traffic is carried completely transparently, because the technology operates at the first, physical layer of the seven-layer OSI model. In effect, a DWDM system is transparent to its client connections, as if they were linked by a direct patch cord. In asynchronous mode, the delay is determined mainly by the distance between the data centers (recall that the delay in the fiber is about 5 microseconds per kilometer), and there are no strict delay requirements. The transmission distance is therefore determined by the capabilities of the DWDM system and is limited by three factors: signal attenuation, signal-to-noise ratio and polarization mode dispersion.

All these factors are taken into account when the optical part of a DWDM line is calculated; based on the calculations, the types of transponders (or muxponders), the number and type of amplifiers, and the other components of the optical path are selected. With the arrival of transponders that support coherent reception at 40 Gbit/s, 100 Gbit/s and higher, polarization mode dispersion is no longer treated as a limiting factor. Calculating an optical line and choosing amplifier types is a large separate topic that requires a grounding in physical optics, so it is not covered in detail here.

WDM technology can also solve information security problems. Encryption does not have to be performed at the optical level, but this approach has several clear advantages. Encryption at higher layers often requires separate devices for different traffic flows and introduces significant delays. As the number of such devices grows, so do the delays and the complexity of managing the network. Encryption at the OTN optical level (G.709 is the ITU-T recommendation that describes the frame format in DWDM systems) does not depend on the type of service, requires no separate devices and is very fast: the difference between an encrypted and an unencrypted data flow does not exceed 10 milliseconds.

Without DWDM, it is almost impossible to interconnect large data centers and build a disaster-tolerant distributed cluster. The amount of information transmitted over the network grows exponentially, and sooner or later the capacity of existing fiber-optic lines will be exhausted. Laying or leasing additional fibers costs the customer far more than purchasing equipment; in fact, multiplexing is today the only economically viable option. Over short distances, DWDM makes more efficient use of existing fibers and drives their utilization very high; over long distances, it also minimizes data transfer delays. Today it is probably the best technology available on the market, and it deserves a closer look.

Source: https://habr.com/ru/post/428249/
