A million video calls per day, or “Call Mom!”

From the user's point of view, call services look pretty simple: you open another user's page, press call, they answer, and you talk. From the outside it all seems simple, but few people know how to build such a service. Alexander Tobol (alatobol) not only knows how, but is also happy to share his experience.

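Below is the text version of the talk at HighLoad++ Siberia, from which you will learn:

- how video call services work under the hood;

- how to punch through NAT nicely: this will also interest specialists from the gaming industry who need peer-to-peer connections;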

About the speaker: Alexander Tobol leads the development of the Video and Feed platforms at ok.ru.

Video call history

The first video calling device appeared in 1960. It was called the Picturephone, used dedicated networks, and was extremely expensive. In 2006, Skype added video calls to its application. By 2010, Flash supported the RTMFP protocol, and we launched Flash-based video calls on Odnoklassniki. In 2016, Chrome dropped support for Flash, and in August 2017 we relaunched calls on a new technology, which I will talk about today. After polishing the service, within half a year we saw a significant increase in successfully completed calls. We have also recently added masks to calls.

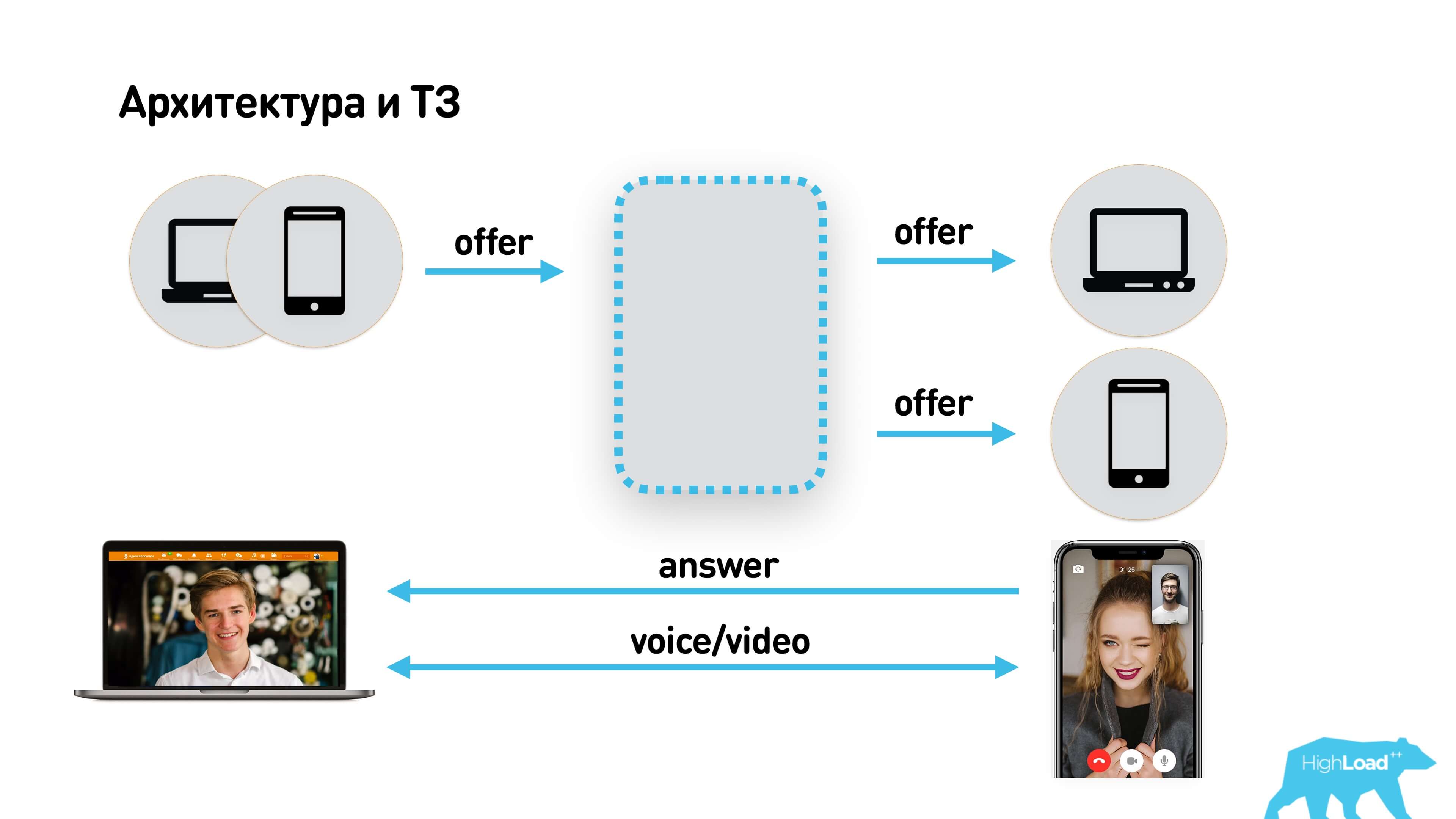

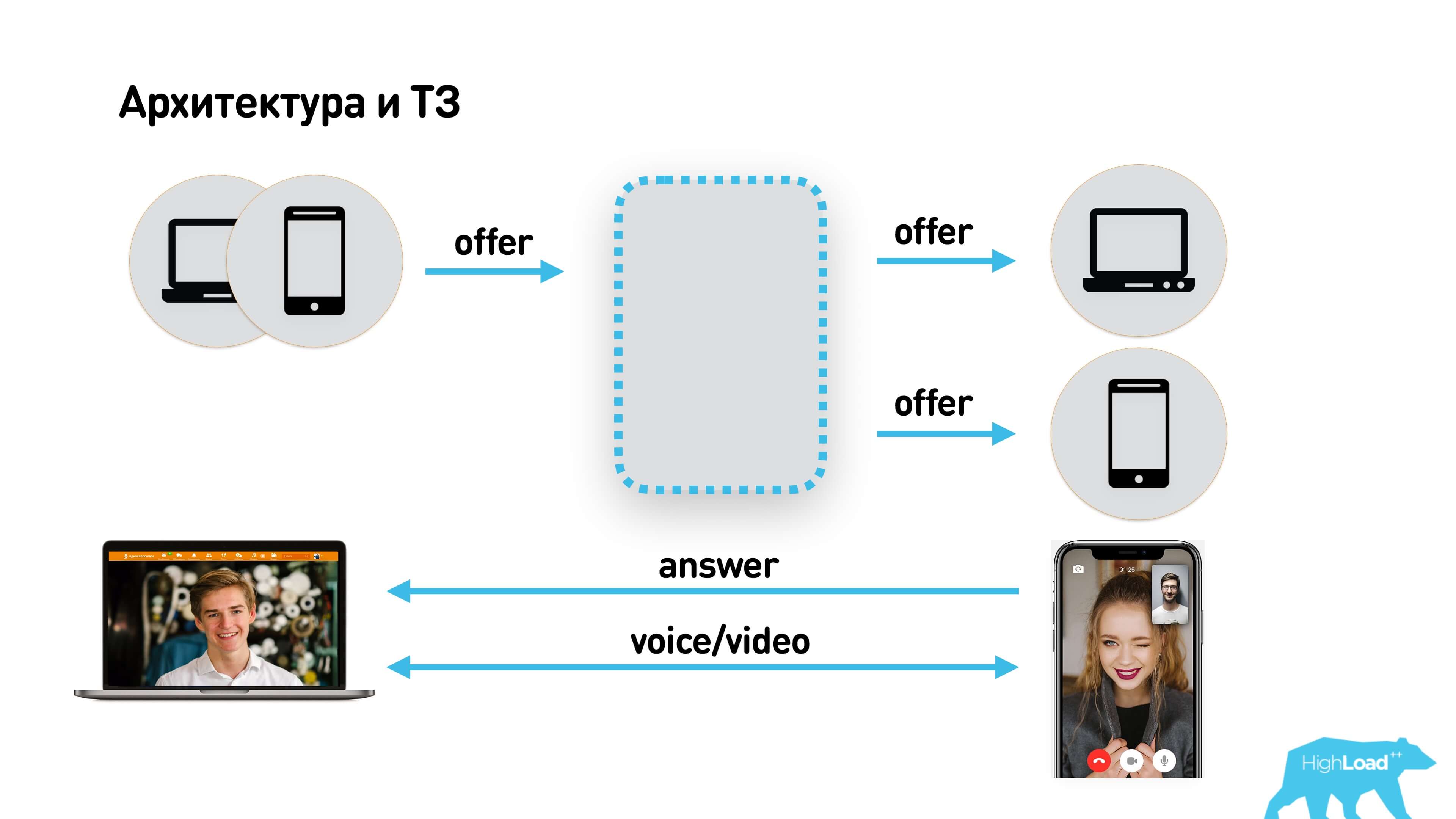

Architecture and requirements

Since we work at a social network, we don't write formal specifications and don't really know what a requirements document is. Usually the whole idea fits on one page and looks like this.

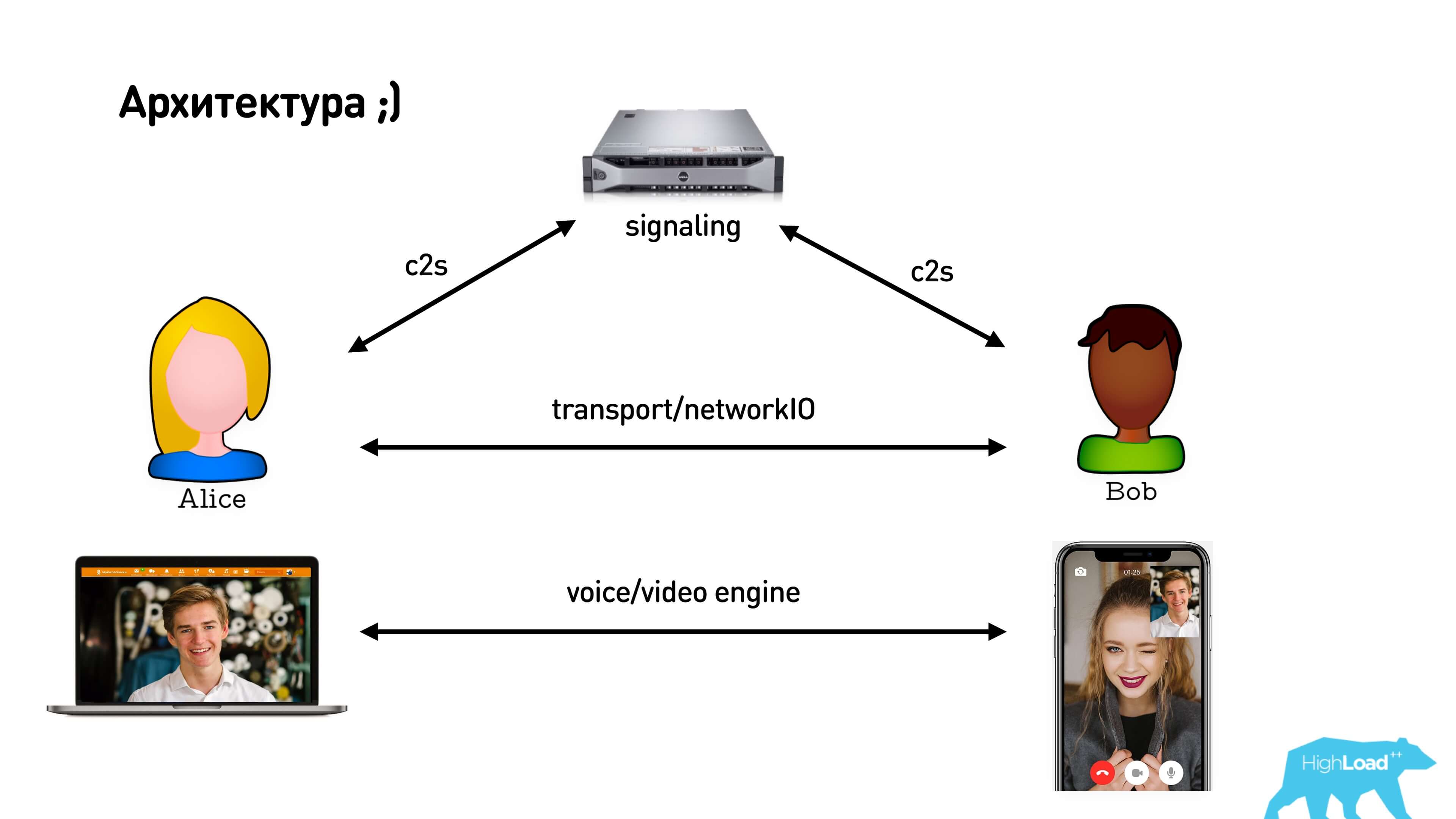

The user wants to call other users from the web or from the iOS/Android apps. The other user may have several devices. The call rings on all of them, the user answers on one, and they talk. Simple.

Specifications

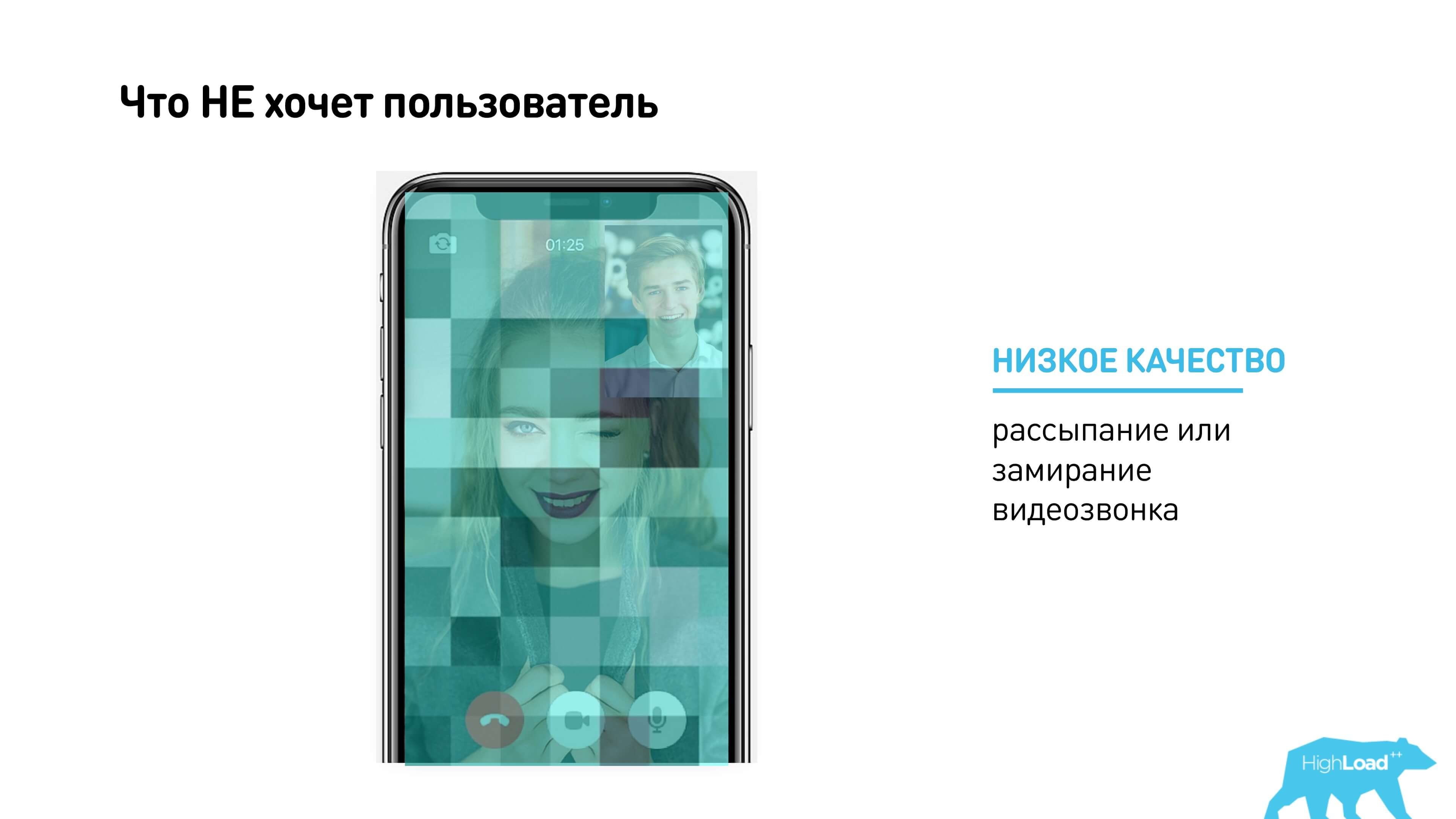

To build a high-quality call service, we need to understand which characteristics to track. We decided to start by finding out what annoys users most.

Users are definitely annoyed if they answer and then have to wait for the connection to be established.

Users are annoyed if call quality is low: audio drops out, the video breaks up, the sound gurgles.

But what annoys users most is delay. Latency is one of the key characteristics of a call: with a latency of about 5 seconds, holding a conversation is simply impossible.

We have defined acceptable characteristics:

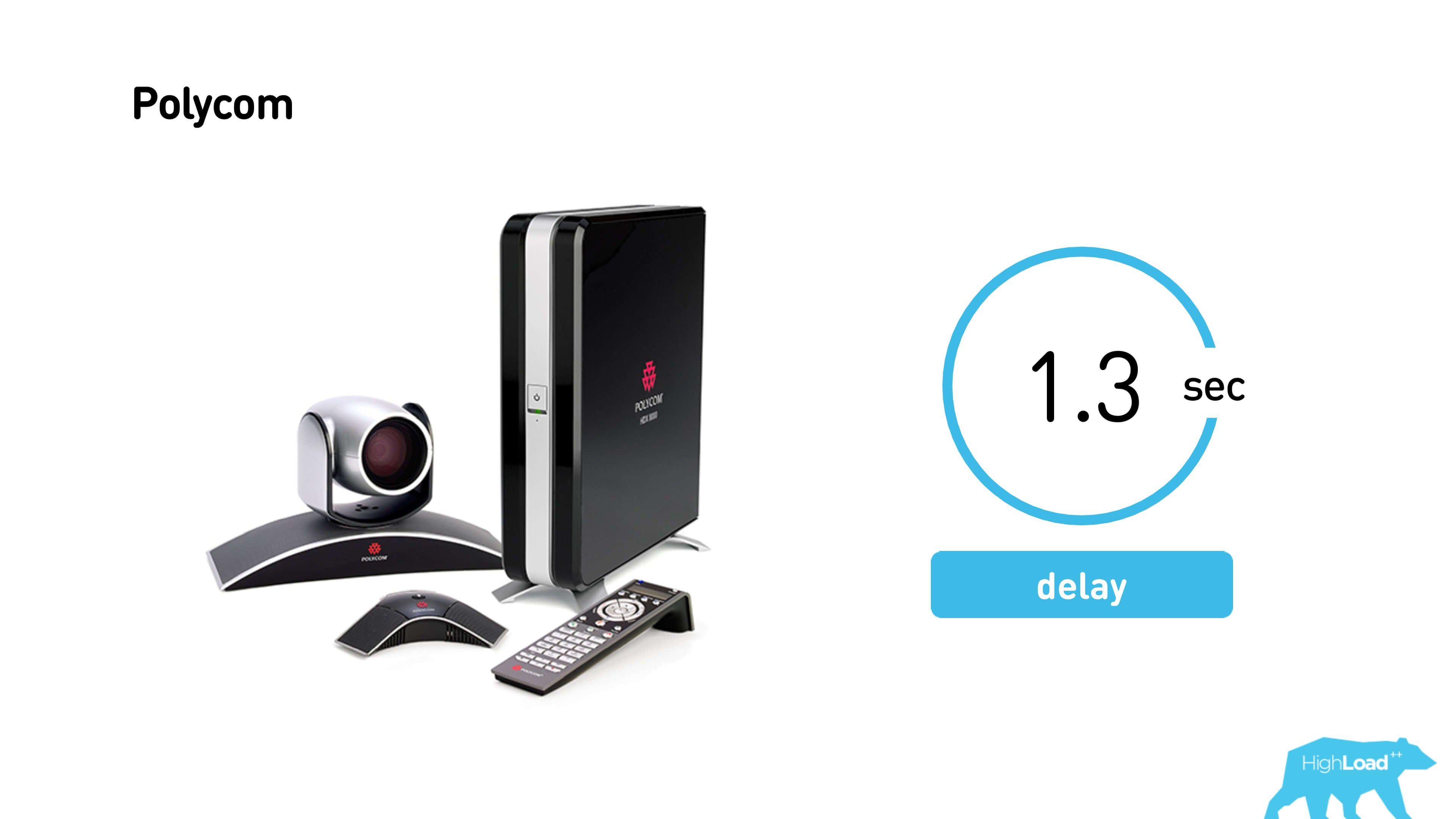

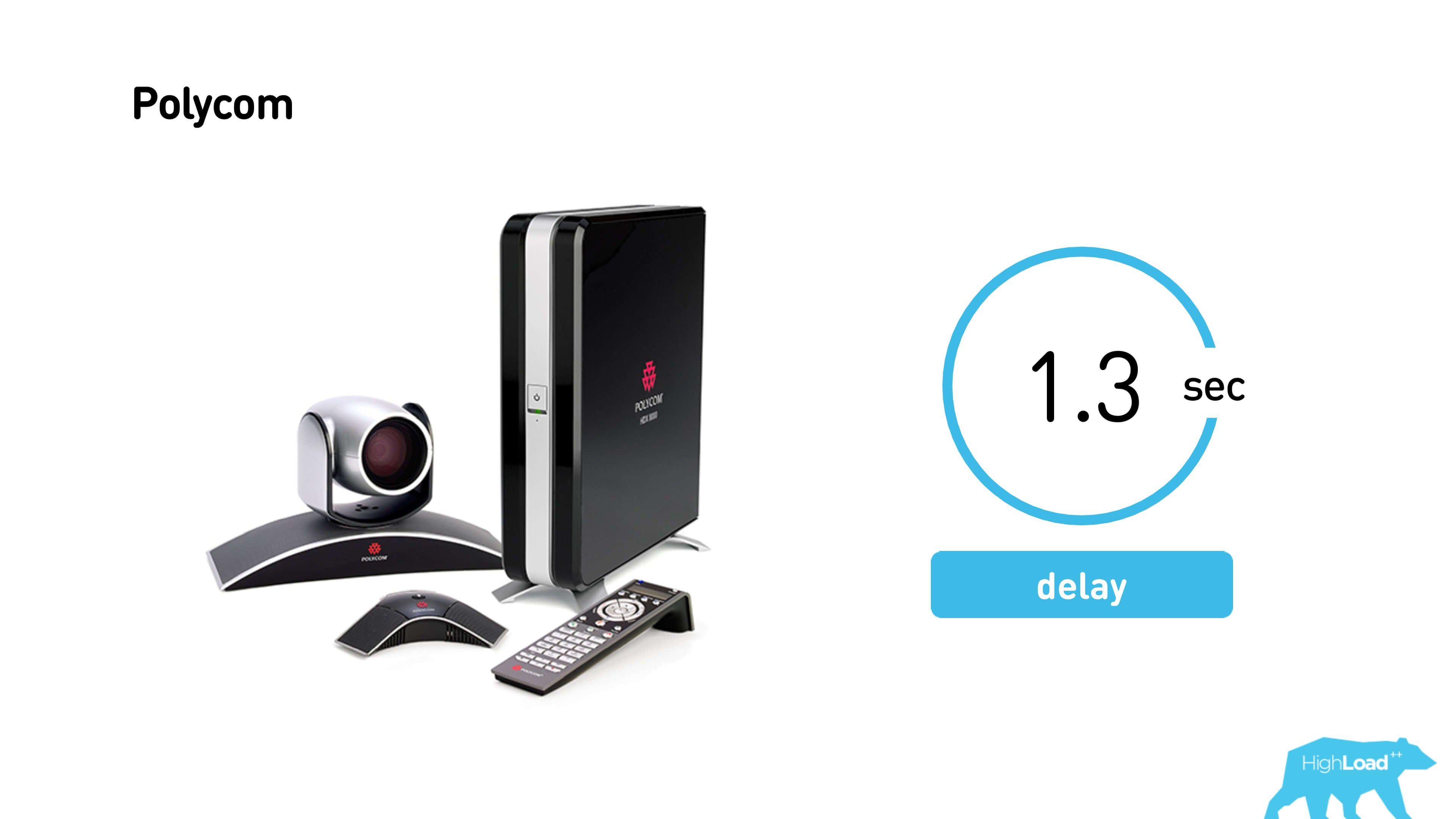

Polycom is the conferencing system installed in our offices. Its average delay is about 1.3 seconds, and with that delay people don't always understand each other. If the delay grows to 2 seconds, a conversation becomes impossible.

Since we already had a running platform, we roughly expected a million calls a day, which is about a thousand concurrent calls. If every call goes through the server at roughly one megabit per second, that is about 1 Gbit/s in total, which a single hardware server can handle.
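Here is a back-of-the-envelope check of these numbers, offered as a sketch. By Little's law, concurrent calls equal the arrival rate multiplied by the average duration. A million calls a day and a thousand in parallel therefore imply an average call of roughly 86 seconds. This assumes calls are spread evenly over the day, and it takes the roughly 1 Mbit/s per call from the text at face value; neither figure is a measurement.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def implied_avg_duration_s(calls_per_day: float, concurrent_calls: float) -> float:
    """Little's law, L = lambda * W, solved for the average call length W."""
    arrival_rate = calls_per_day / SECONDS_PER_DAY  # calls per second
    return concurrent_calls / arrival_rate


def relay_bandwidth_mbps(concurrent_calls: float, mbps_per_call: float) -> float:
    """Total server bandwidth if every call is relayed through the server."""
    return concurrent_calls * mbps_per_call


print(f"{implied_avg_duration_s(1_000_000, 1_000):.1f} s")  # 86.4 s
print(f"{relay_bandwidth_mbps(1_000, 1.0):.0f} Mbit/s")     # 1000 Mbit/s, i.e. 1 Gbit/s
```

Real traffic has daily peaks, so peak concurrency will be higher than this average.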

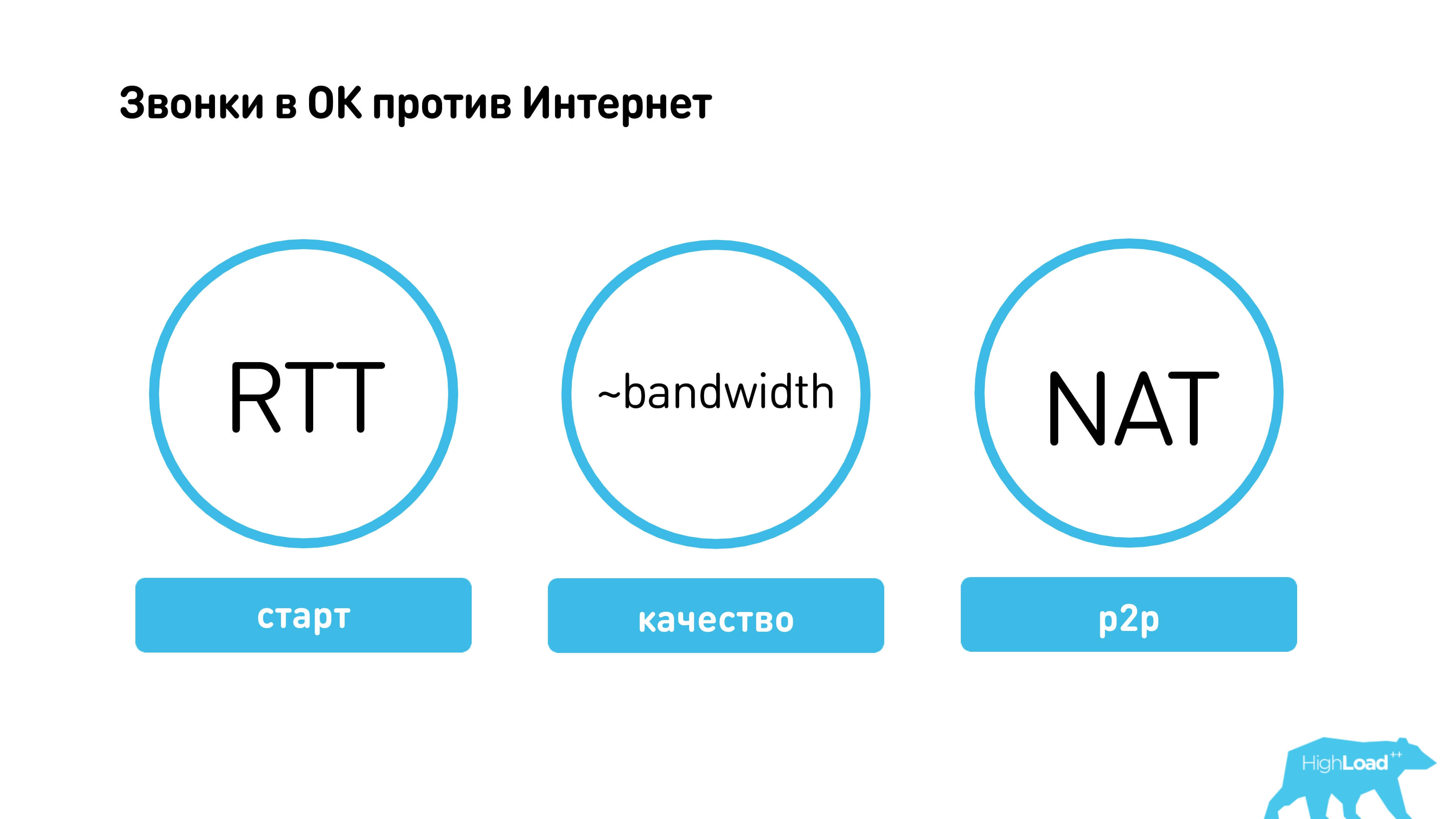

Internet vs. specs

What could stop us from reaching these numbers? The Internet!

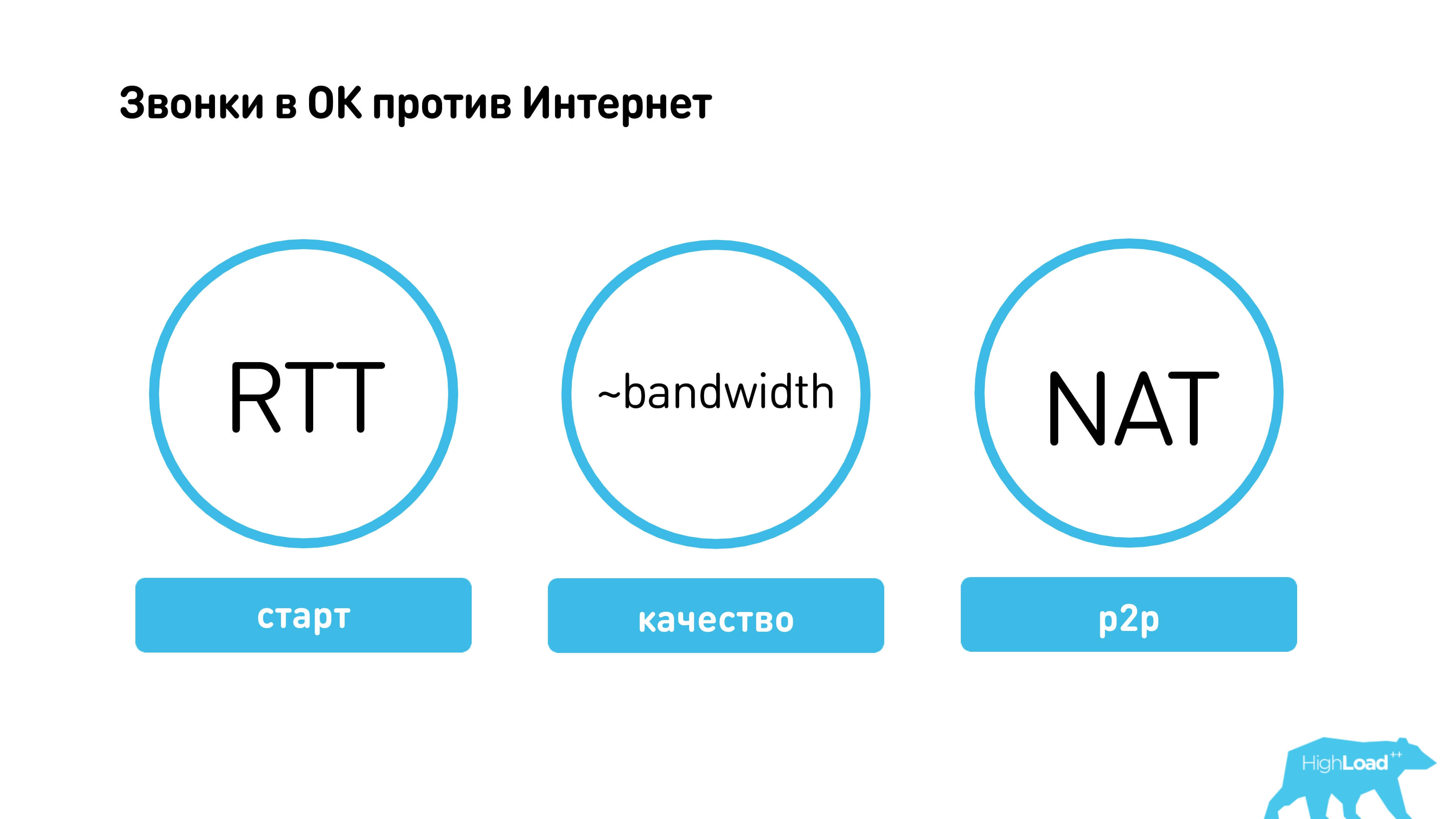

The Internet has things like round-trip time (RTT), which cannot be beaten, variable bandwidth, and NAT.

Beforehand, we had measured transmission speeds in our users' networks.

We broke the measurements down by connection type and looked at average RTT, packet loss, and throughput. We then decided to test calls at the average values for each of these networks.

There are other troubles on the Internet:

Let's look at these network parameters using a simple example.

I pinged the Novosibirsk State University website from my office and got this rather strange result.

In this example the average jitter is 30 ms, meaning that consecutive ping times differ by about 30 ms on average. The average ping is 105 ms.
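Here is a minimal sketch of how these two numbers can be computed from a series of ping results. It uses the simple definition of jitter from the text: the mean absolute difference between consecutive RTTs. The sample values are made up for illustration. RTP stacks instead use the smoothed interarrival-jitter estimator from RFC 3550.

```python
from statistics import mean


def ping_stats(rtts_ms: list[float]) -> tuple[float, float]:
    """Return (average RTT, jitter), where jitter is the mean absolute
    difference between consecutive RTT samples."""
    if len(rtts_ms) < 2:
        raise ValueError("need at least two samples")
    diffs = [abs(b - a) for a, b in zip(rtts_ms, rtts_ms[1:])]
    return mean(rtts_ms), mean(diffs)


# Hypothetical samples, not the measurements from the talk.
avg, jitter = ping_stats([90, 120, 95, 130, 90])
print(f"avg={avg:.0f} ms, jitter={jitter:.1f} ms")  # avg=105 ms, jitter=32.5 ms
```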

Why does this matter for calls, and why will we fight for p2p?

Suppose two users in St. Petersburg are talking to each other. If we connect them p2p instead of routing them through a server in Novosibirsk, we save about 100 ms of round-trip time, as well as that server's traffic.

That's why most of this article is about how to do p2p well.

History or legacy

As I said, we have had a call service since 2010, and now we have relaunched it.

In 2006, when Skype added video calls, Adobe, the owner of Flash, bought Amicima, the company behind what became RTMFP. Flash already had RTMP, which can in principle be used for calls and is widely used for streaming. Adobe later published the RTMP specification. So why did they need RTMFP? Back in 2010, RTMFP is exactly what we used.

Let's compare the requirements for calling protocols and for streaming protocols, and see where the line between them lies.

RTMP is more of a video streaming protocol. It runs over TCP, and its delay accumulates. If you have a good Internet connection, calls over RTMP will work.

RTMFP, despite differing by just one letter, runs over UDP. It is free of TCP's buffering problems and of head-of-line blocking. Head-of-line blocking happens when one packet is lost and TCP won't hand over the packets that follow it until the lost one has been retransmitted. RTMFP could also traverse NAT and survive changes of a client's IP address. That's why we launched web calls on RTMFP in 2010.
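To make head-of-line blocking concrete, here is a toy simulation. It is not a model of any real TCP stack. Packets are sent every 20 ms, and packet 2 is lost and arrives 200 ms late after a resend. With in-order delivery, every packet behind the lost one waits for it. With UDP-style delivery, the application gets each packet as it arrives and can conceal the gap. The interval, one-way delay, and resend delay are arbitrary assumptions.

```python
def delivery_times(arrivals: dict[int, float], in_order: bool) -> dict[int, float]:
    """arrivals maps sequence number -> time (ms) the packet reaches the receiver.

    Returns the time at which the application actually receives each packet."""
    if not in_order:
        return dict(arrivals)  # UDP-like: each packet is handed up on arrival
    delivered: dict[int, float] = {}
    ready_at = 0.0
    for seq in sorted(arrivals):
        # TCP-like: a packet is released only after every earlier packet
        ready_at = max(ready_at, arrivals[seq])
        delivered[seq] = ready_at
    return delivered


SEND_INTERVAL_MS, ONE_WAY_MS, RETRANSMIT_DELAY_MS = 20, 50, 200
arrivals = {seq: seq * SEND_INTERVAL_MS + ONE_WAY_MS for seq in range(6)}
arrivals[2] += RETRANSMIT_DELAY_MS  # packet 2 is lost and only arrives after a resend

udp_like = delivery_times(arrivals, in_order=False)
tcp_like = delivery_times(arrivals, in_order=True)
for seq in range(6):
    print(seq, udp_like[seq], tcp_like[seq])
```

Packets 3 to 5 arrive on time. With in-order delivery, however, they sit in the buffer until 290 ms, which a listener hears as a freeze followed by a burst.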

Then, only in 2011, did the first draft of WebRTC appear, and it was not yet fully usable. In 2012 we added calls on iOS/Android. A few other things happened after that, and in 2016 Chrome dropped Flash support. We had to do something.

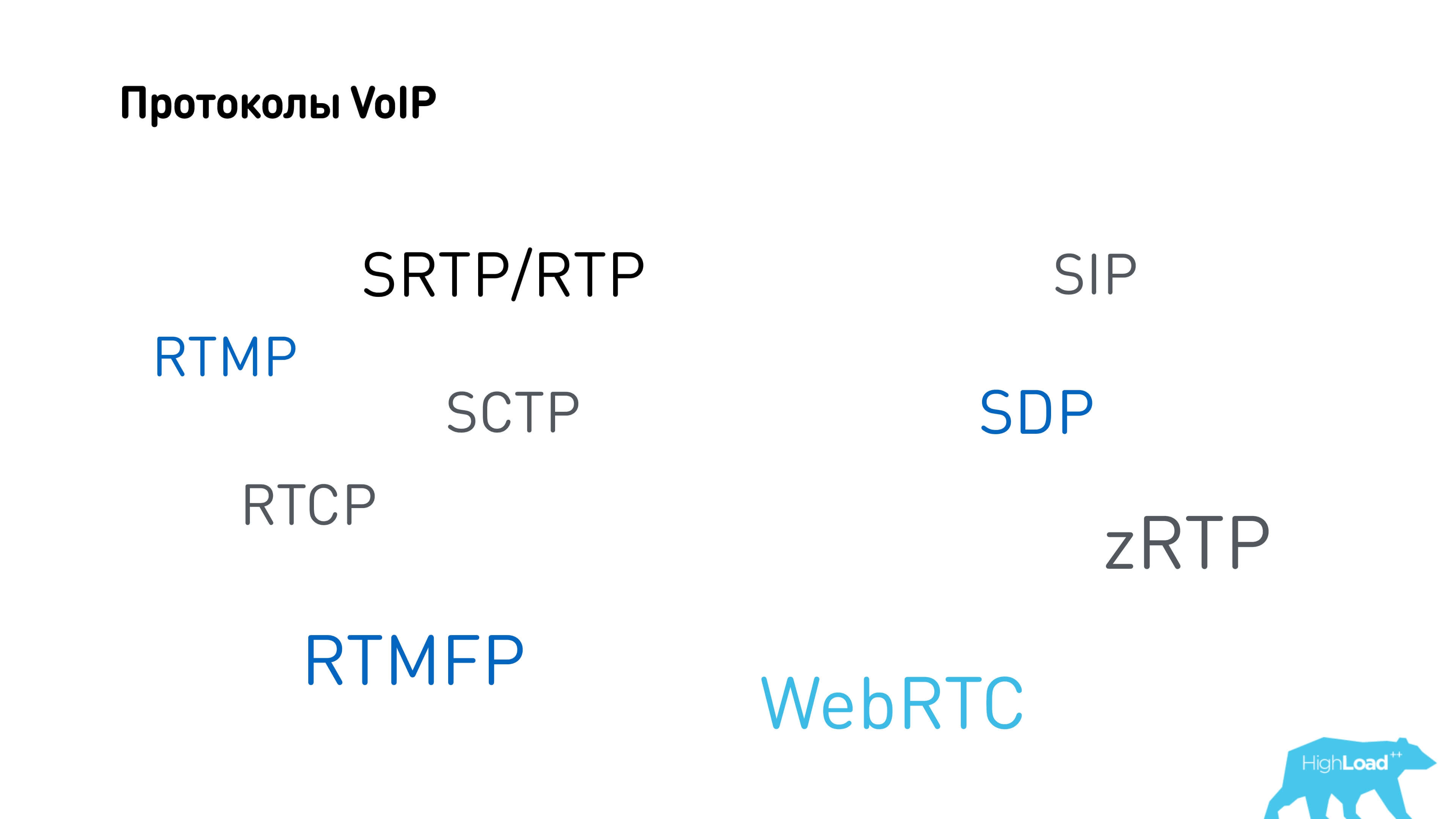

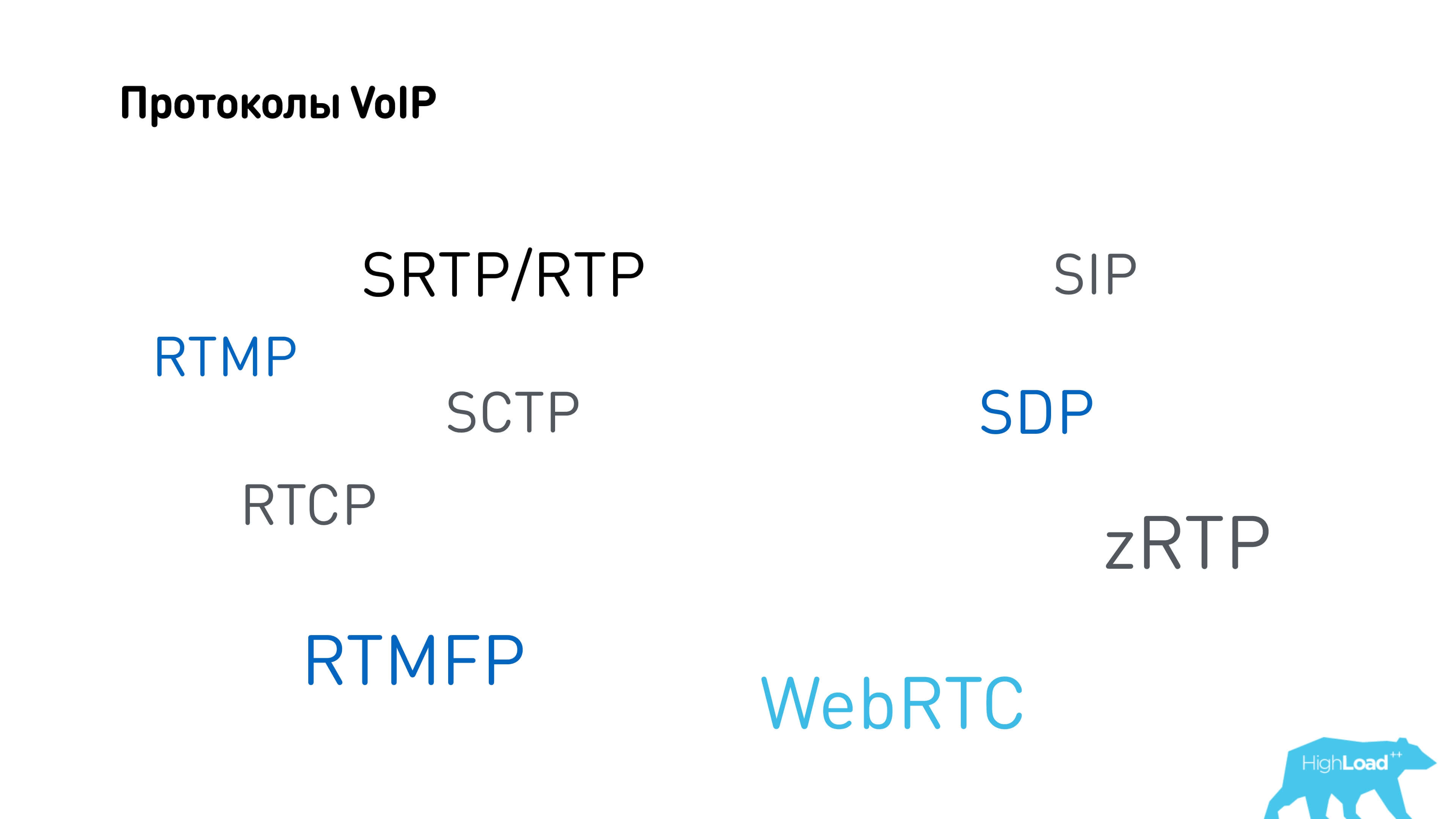

We looked at all the VoIP protocols. As always when we start something new, we began by studying the competitors.

Competitors or where to start

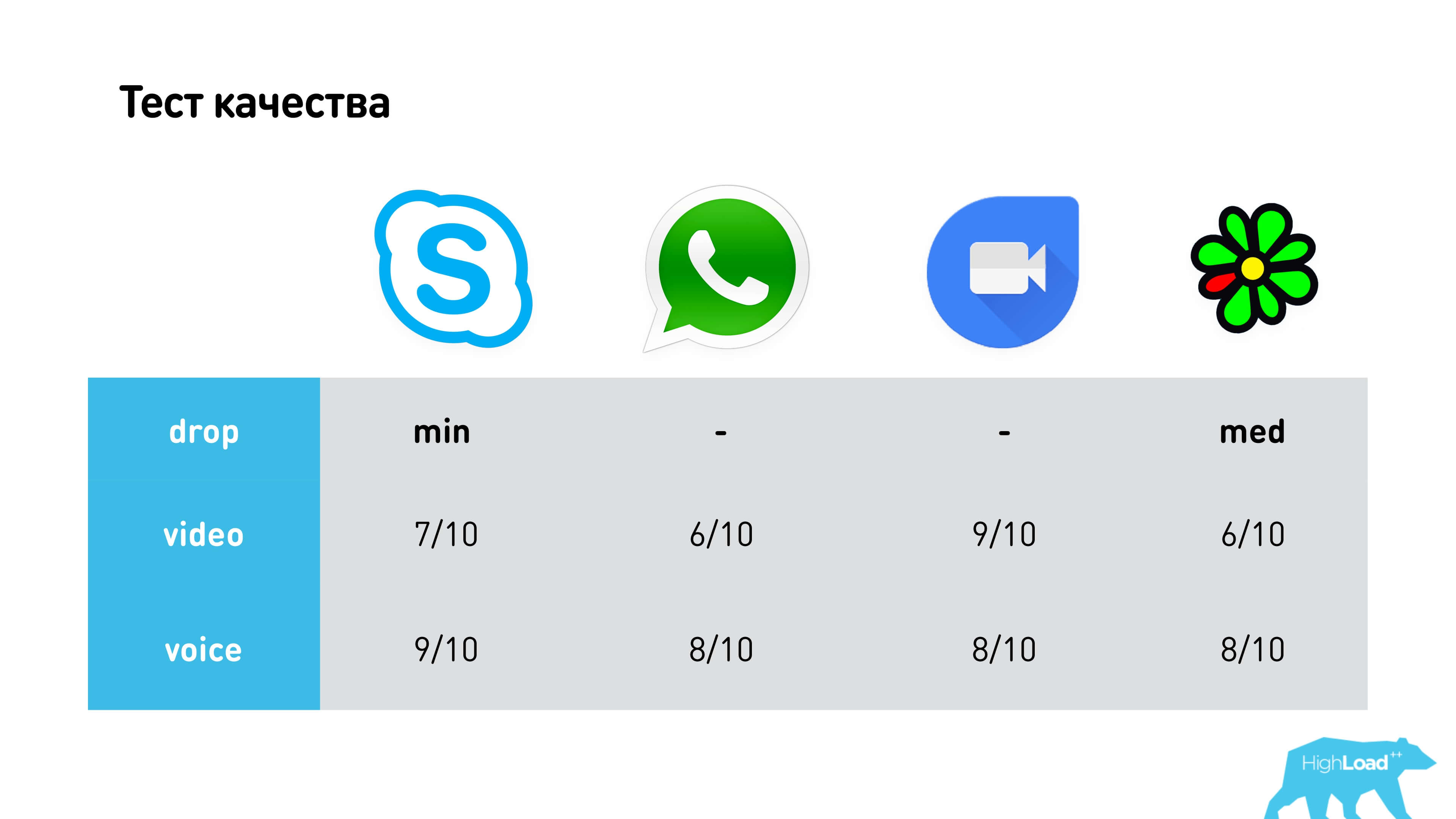

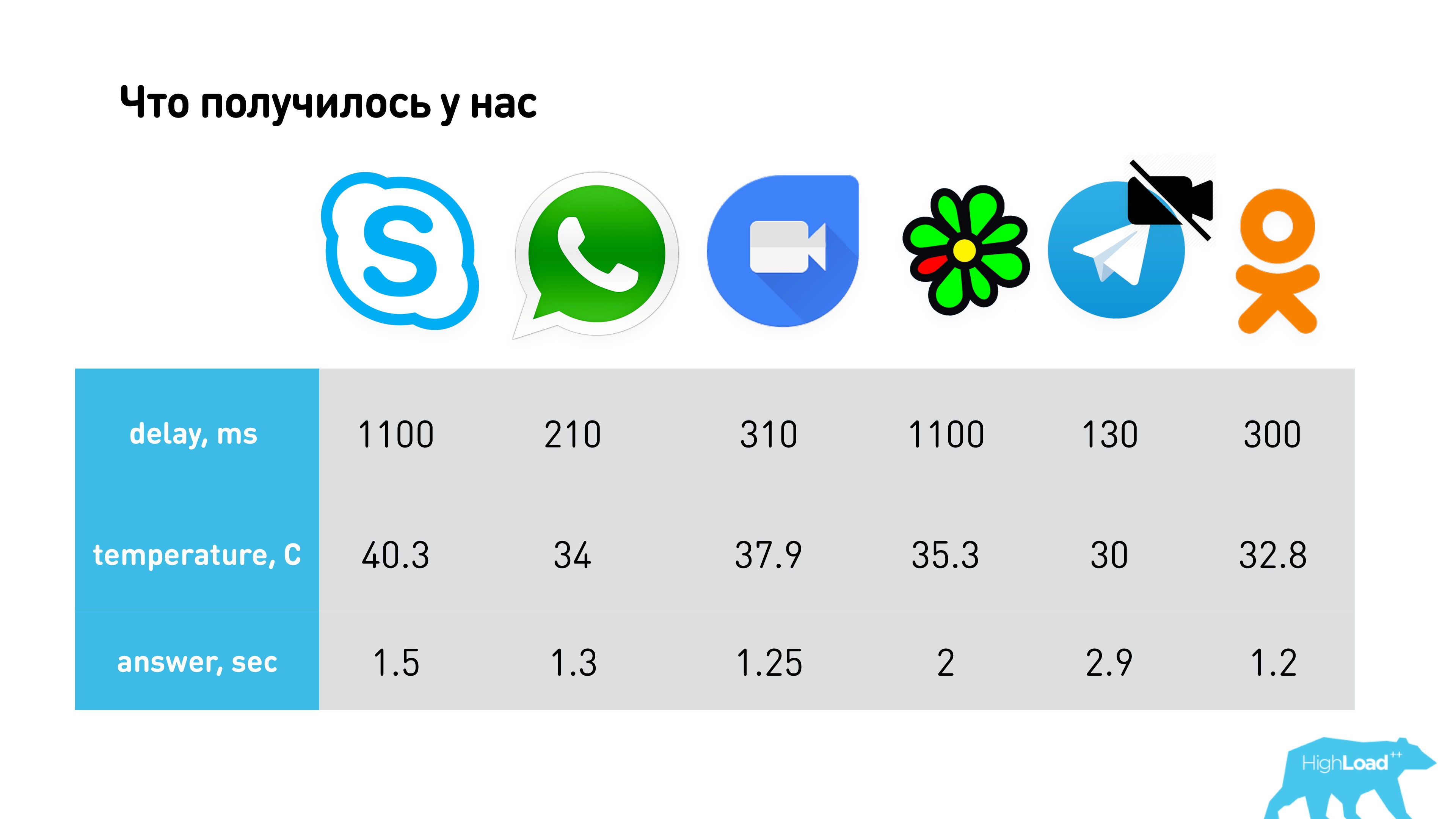

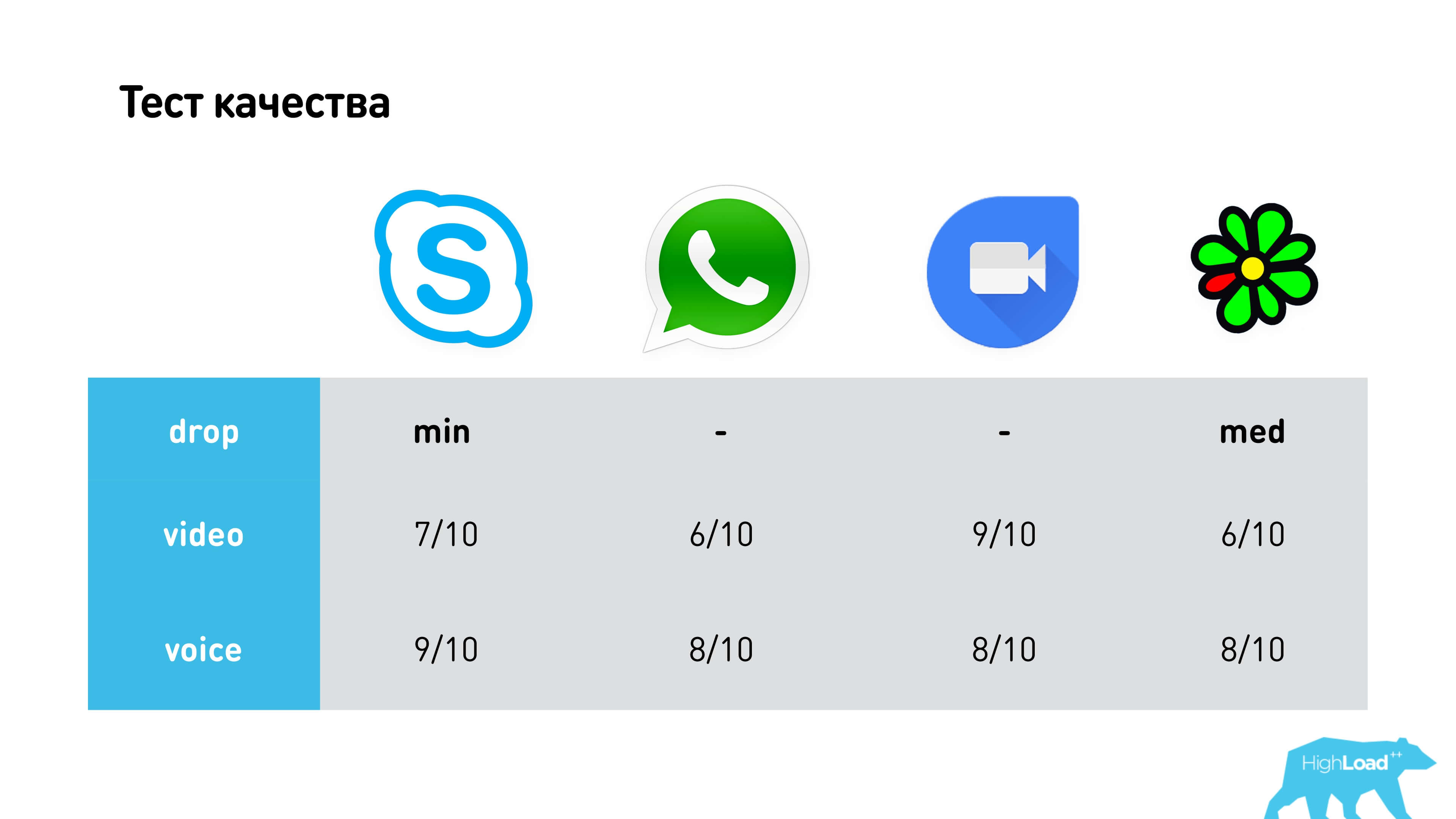

We chose the most popular competitors: Skype, WhatsApp, Google Duo (similar to Hangouts) and ICQ.

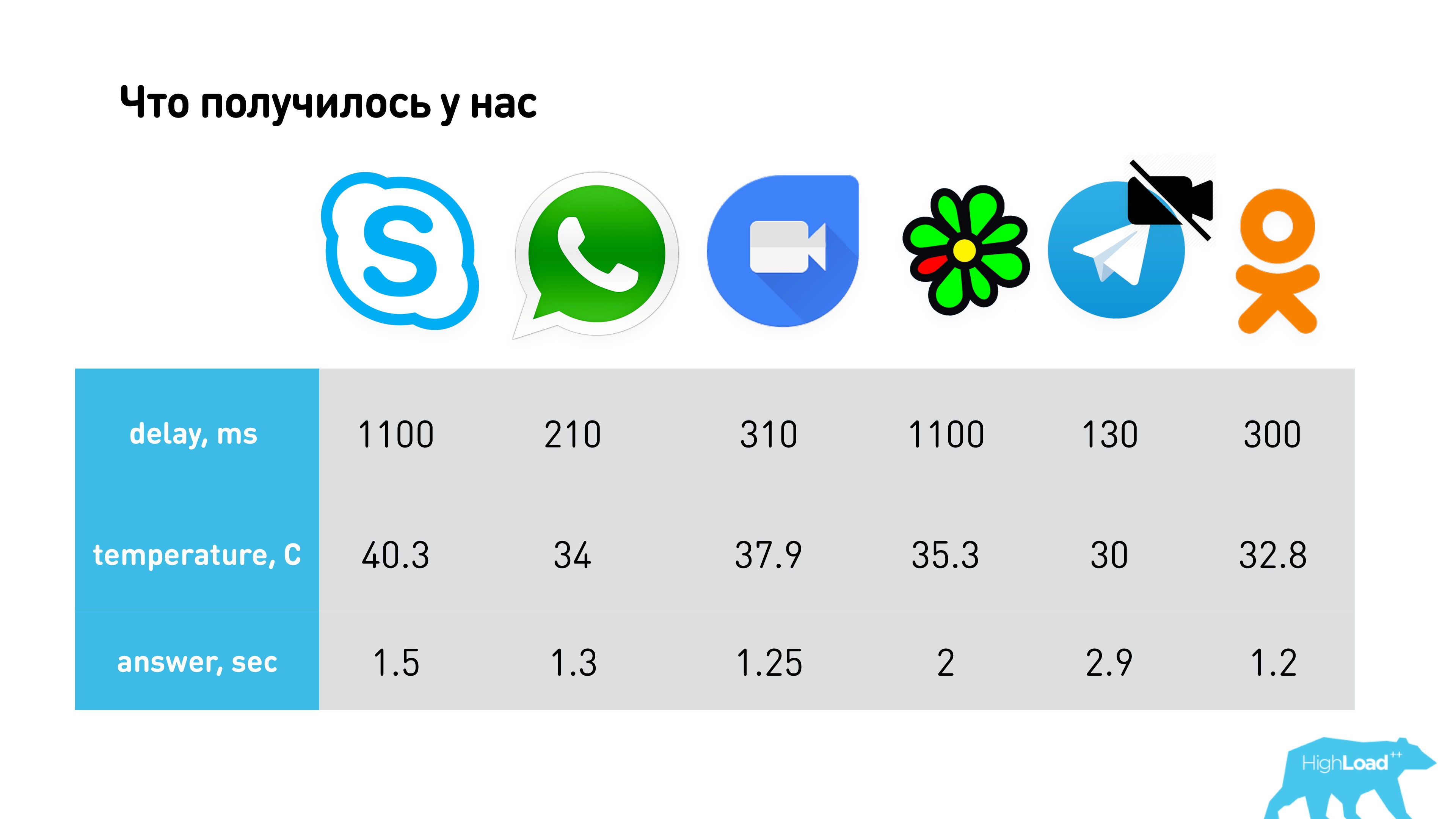

To start, we measured the delay.

This is easy to do. In the picture above:

I won't show all my cards yet, but we set things up so that these devices could not establish a p2p connection. Of course, the measurements were made in different networks; this is just one example.

Skype still stutters a bit. It turned out that when Skype fails to connect p2p, its delay is 1.1 s.

The test setup was complicated. We tested in different conditions (EDGE, 3G, LTE, Wi-Fi) and took channel asymmetry into account. The values I give are averages over all measurements.

To estimate battery consumption, CPU load, and everything else, we simply measured the phone's temperature with a pyrometer. We treated that as a rough proxy for the combined load on the phone's GPU, CPU, and battery. Besides, it is genuinely unpleasant to hold a hot phone to your ear or in your hands. The user feels that the app is about to drain the entire battery.

The result was:

Great, we got some metrics!

We tested video and voice quality in different networks, with different packet loss rates and so on. We concluded that Google Duo has the best video and Skype the best voice, though that holds in "bad" networks where distortion already appears. Overall, they all perform at about the same mediocre level. WhatsApp has the blurriest picture.

Let's see what all of this is built on.

Skype has its own proprietary protocol; all the others use either a modified WebRTC or WebRTC directly. Hangouts, Google Duo, WhatsApp, and Facebook Messenger can work in the browser, and they all have WebRTC under the hood. They all behave differently, with different characteristics, and yet they all run on the same WebRTC! So you need to know how to cook it properly. There is also Telegram, which uses the parts of WebRTC responsible for audio. And there is ICQ, which forked WebRTC long ago and went its own way.

WebRTC. Architecture

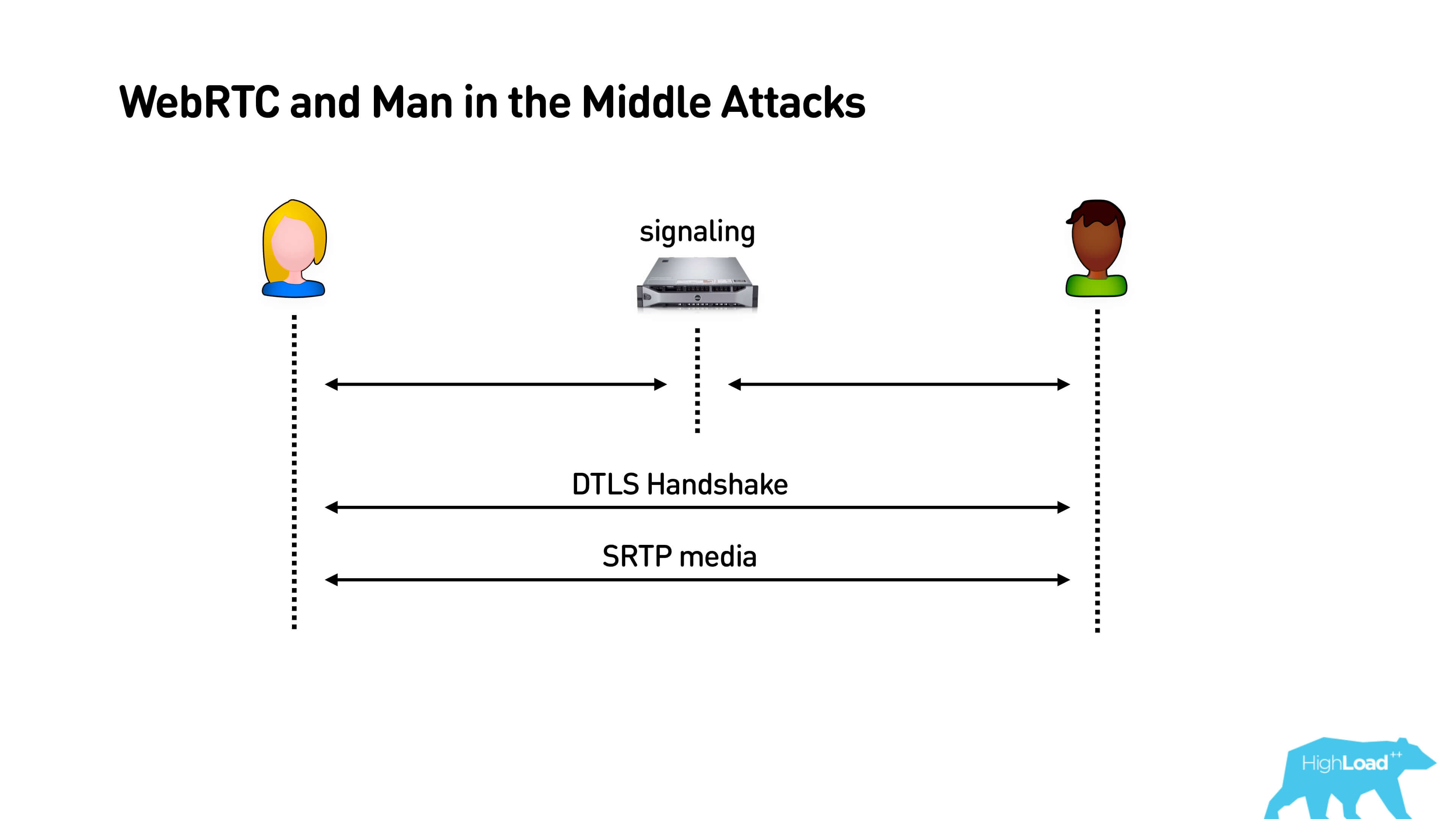

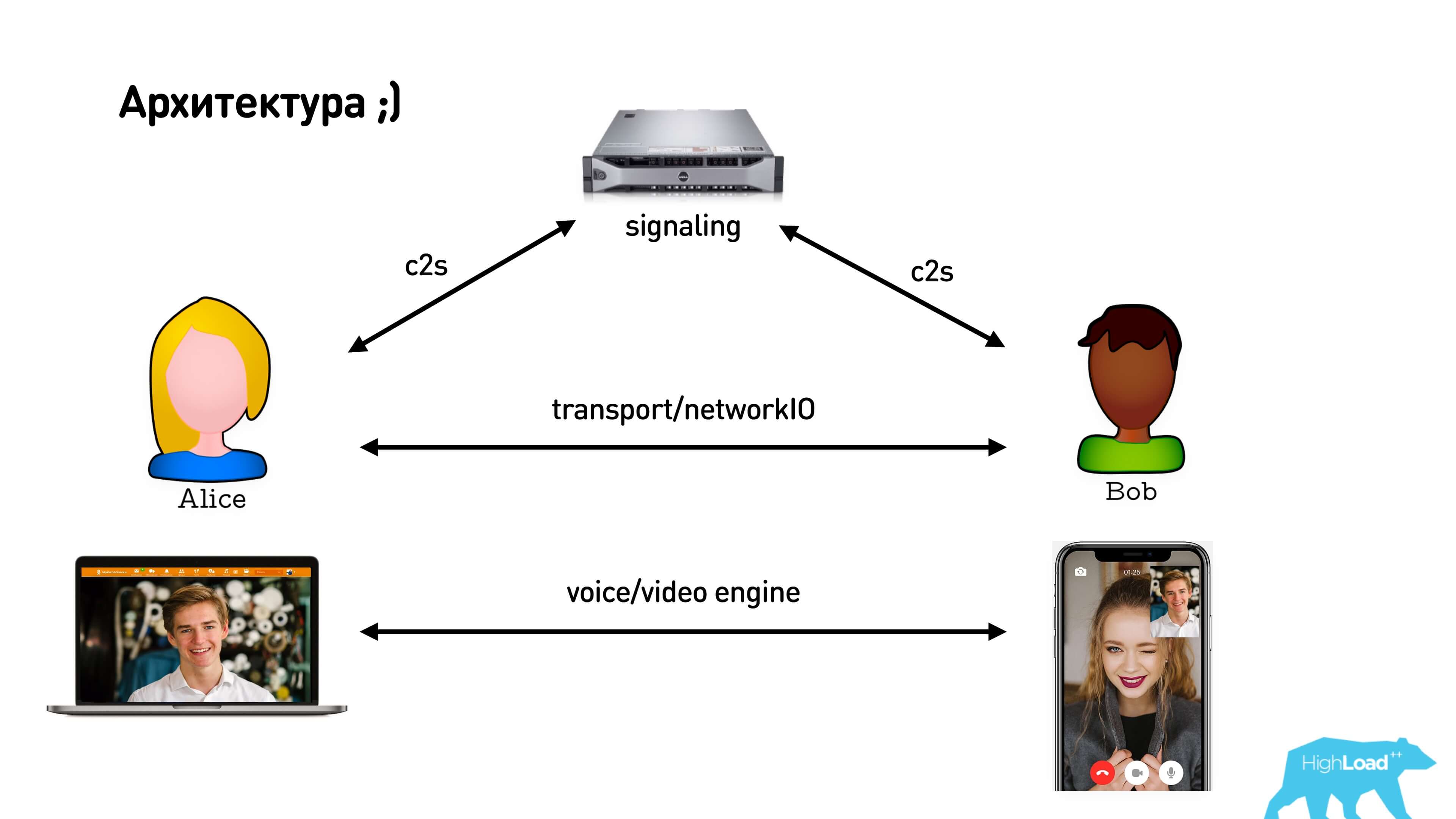

WebRTC assumes a signaling server: an intermediary between clients that they use to exchange messages while setting up a p2p connection. Once a direct connection is established, the clients start exchanging media with each other.

WebRTC. Demo

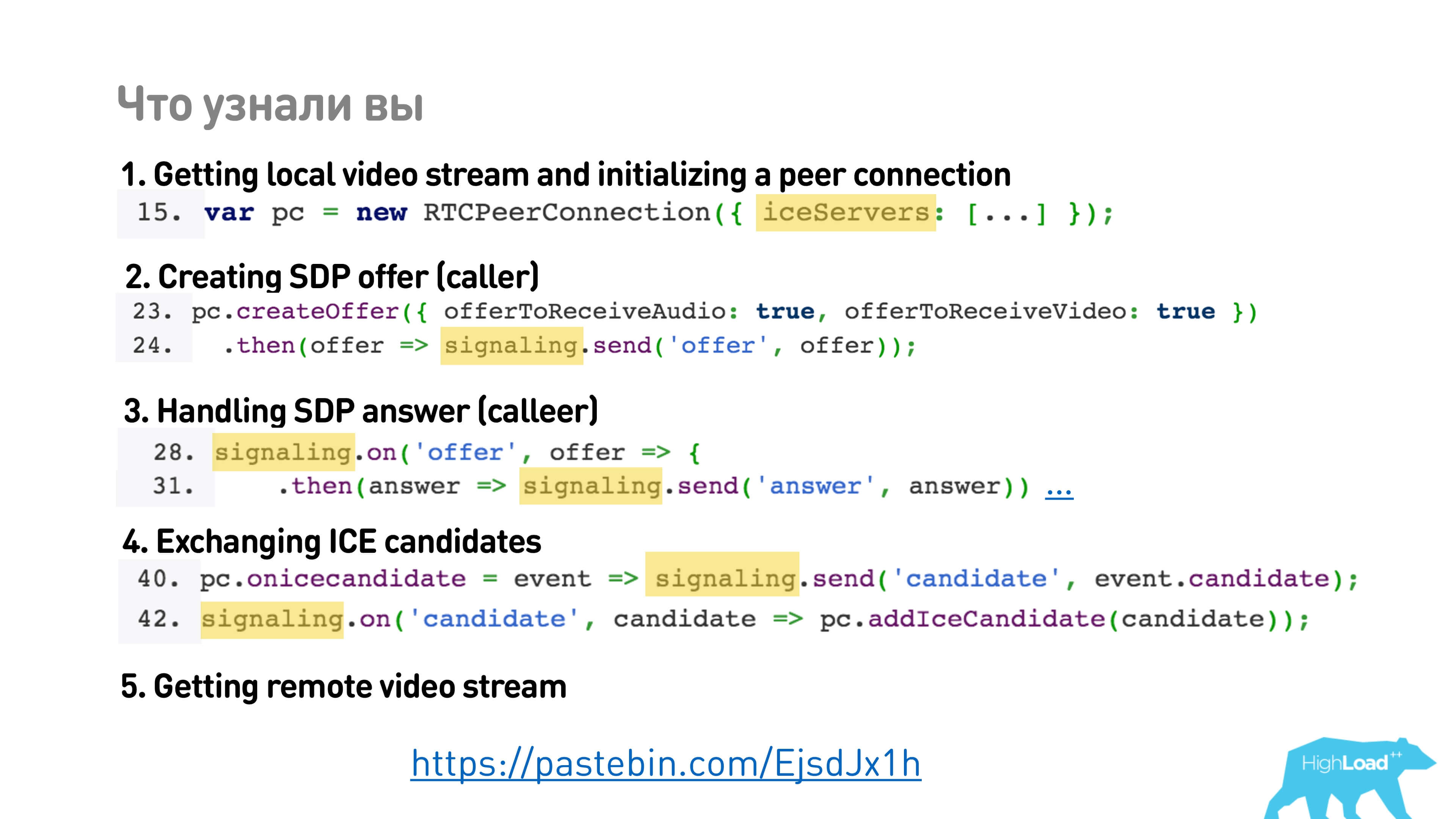

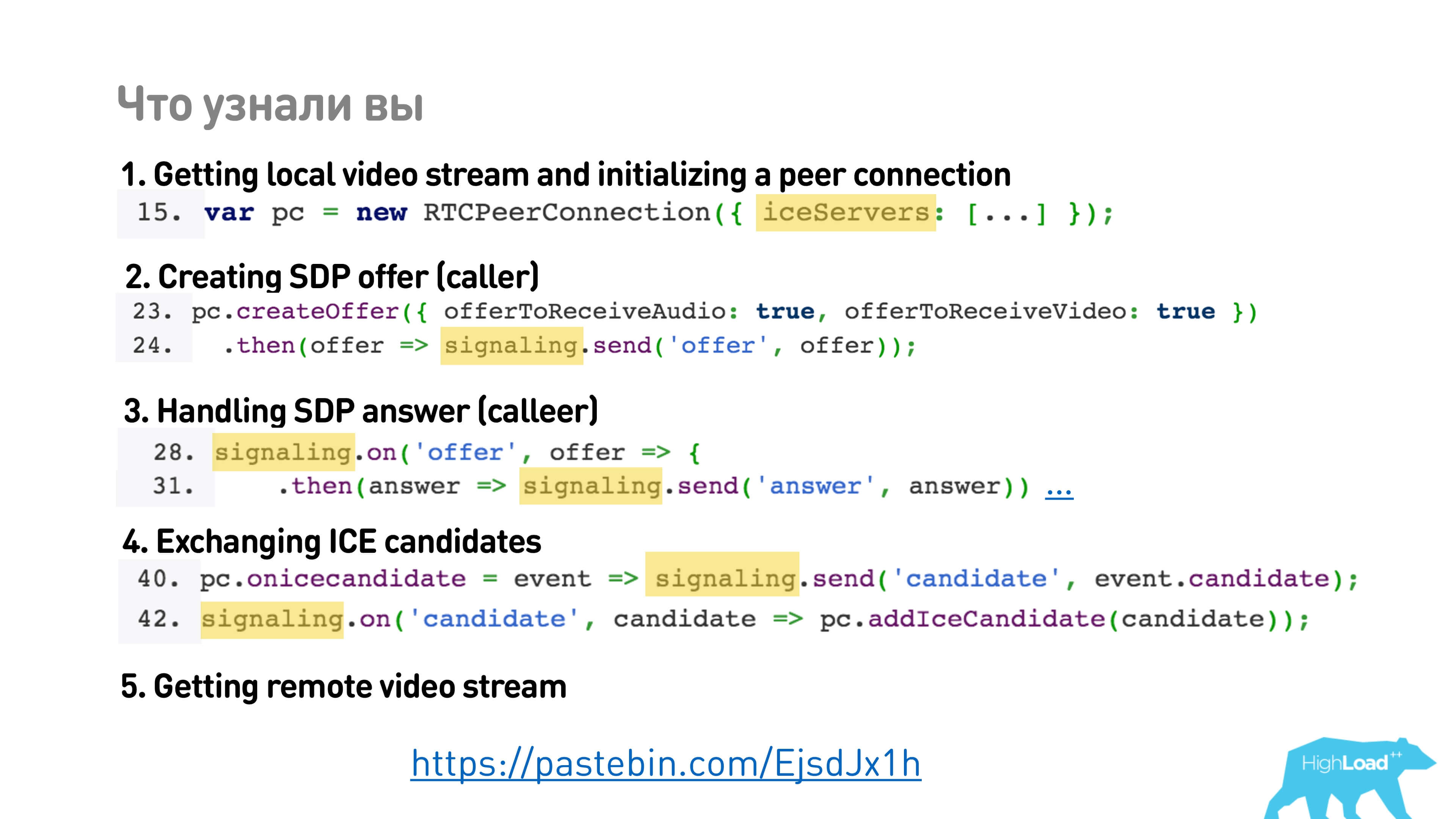

Let's start with a simple demo. There are 5 simple steps to establish a WebRTC connection.

It says the following:

Let's figure out what is actually happening there and what we have to implement ourselves.

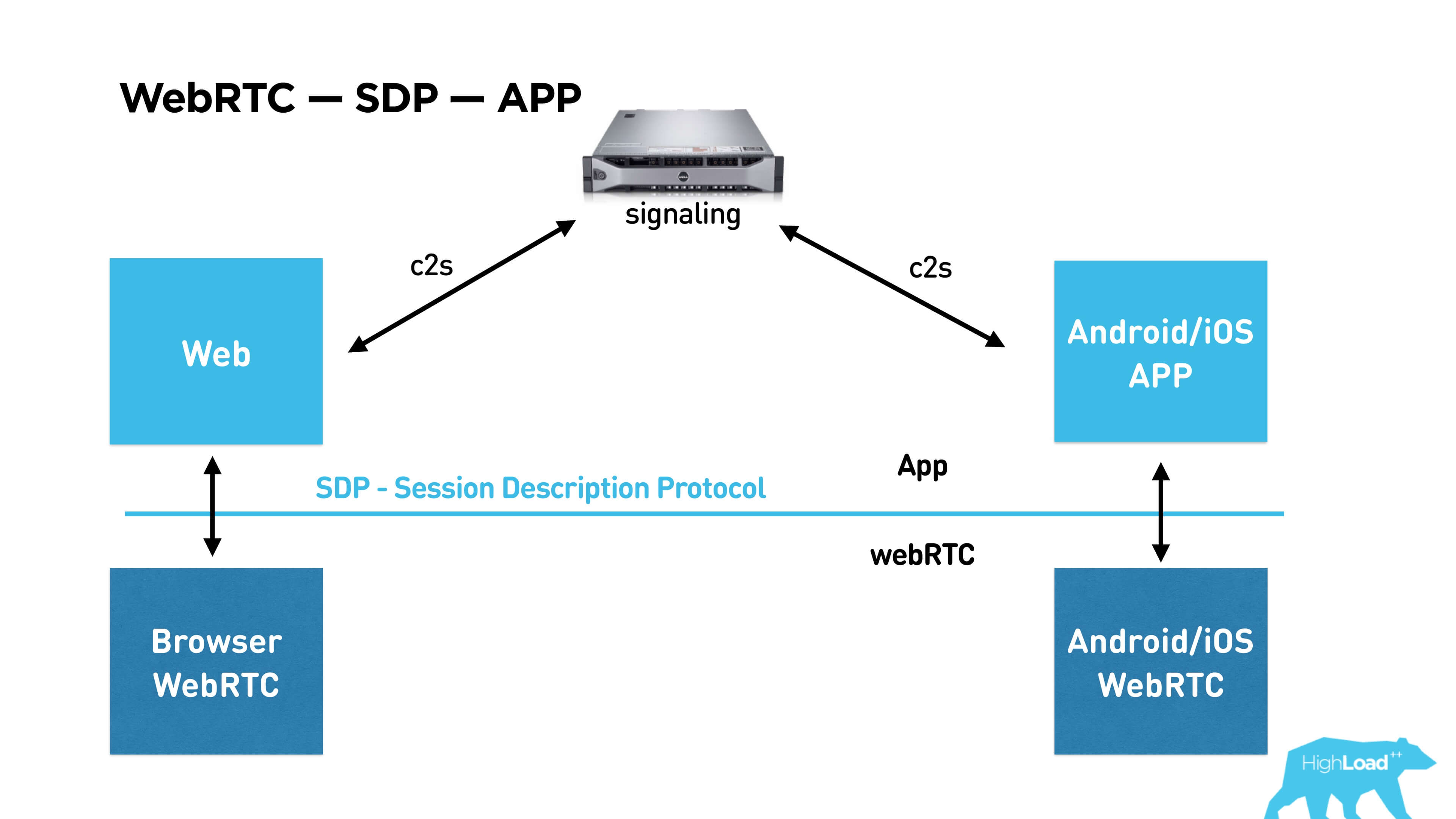

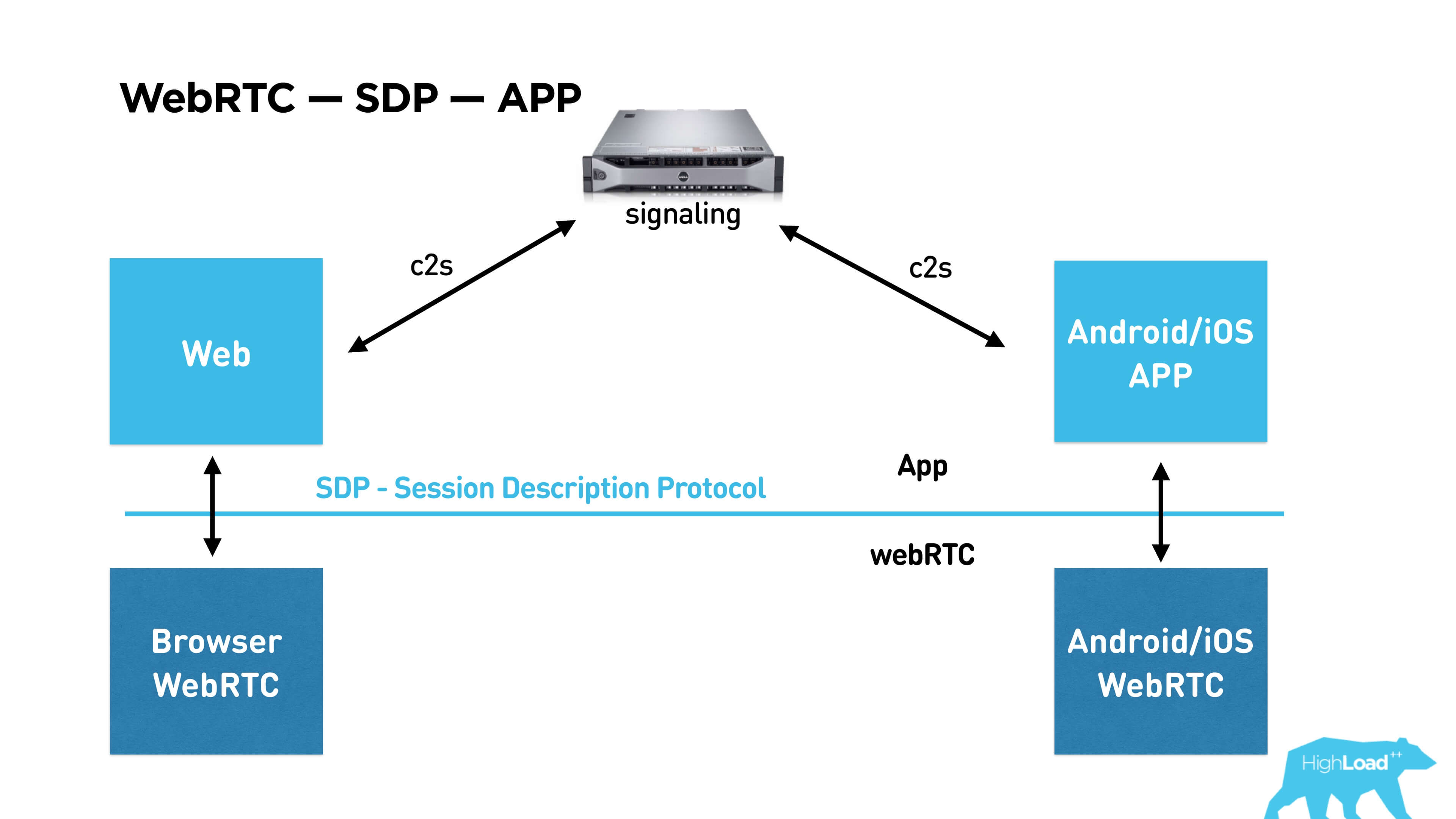

Look at the picture above. There is the WebRTC library, which is built into browsers (Chrome, Firefox, etc.). You can also build it for Android/iOS. You talk to it through its API and through SDP (Session Description Protocol), which describes the session itself; I'll cover what goes into it below. To use this library in your application, you need to connect the parties via signaling. Signaling is a service you have to write yourself; WebRTC does not provide it.

We will go through the network first, then video and audio, and at the end we will write our own signaling.

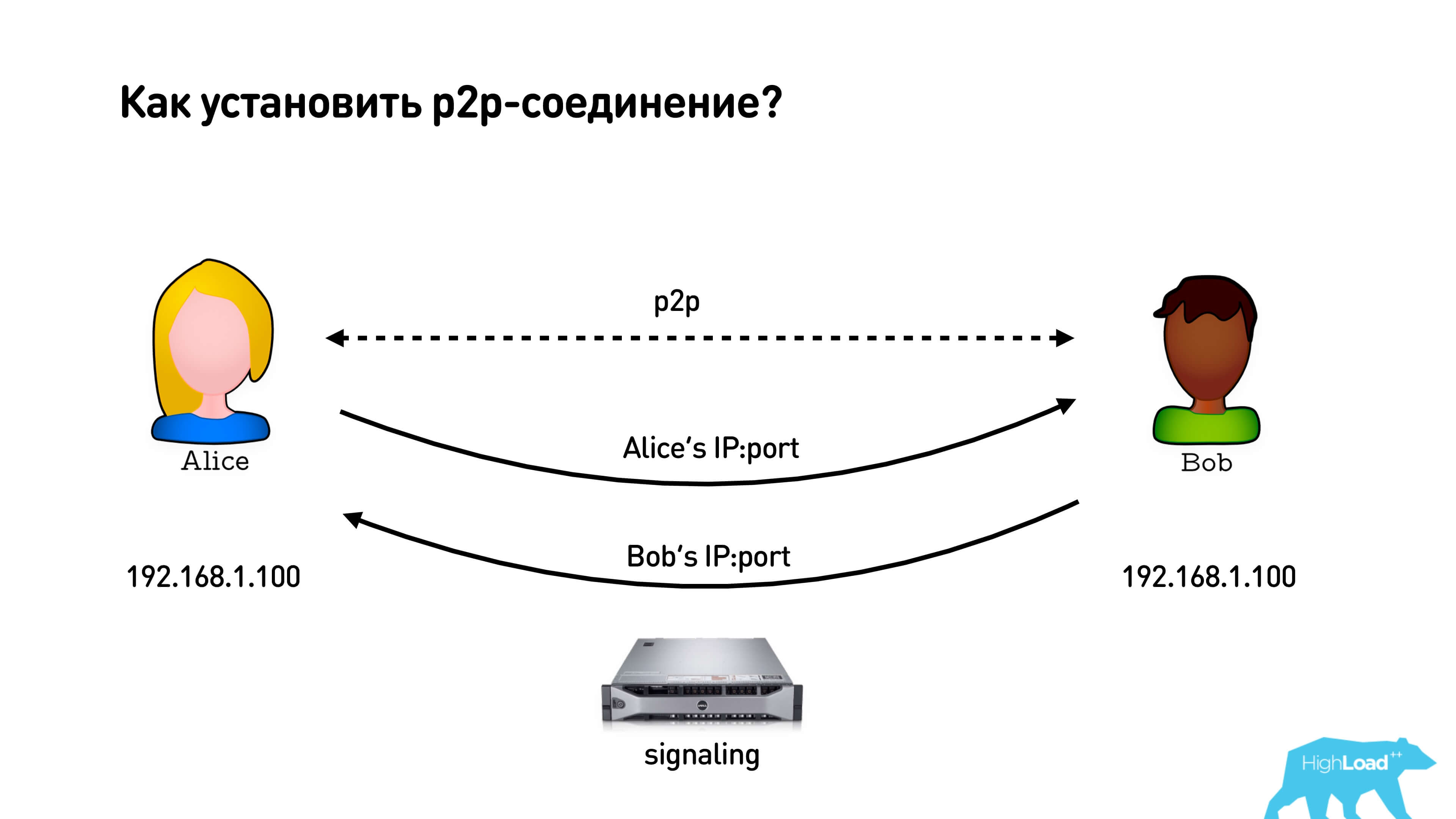

WebRTC network or p2p (actually c2s2c)

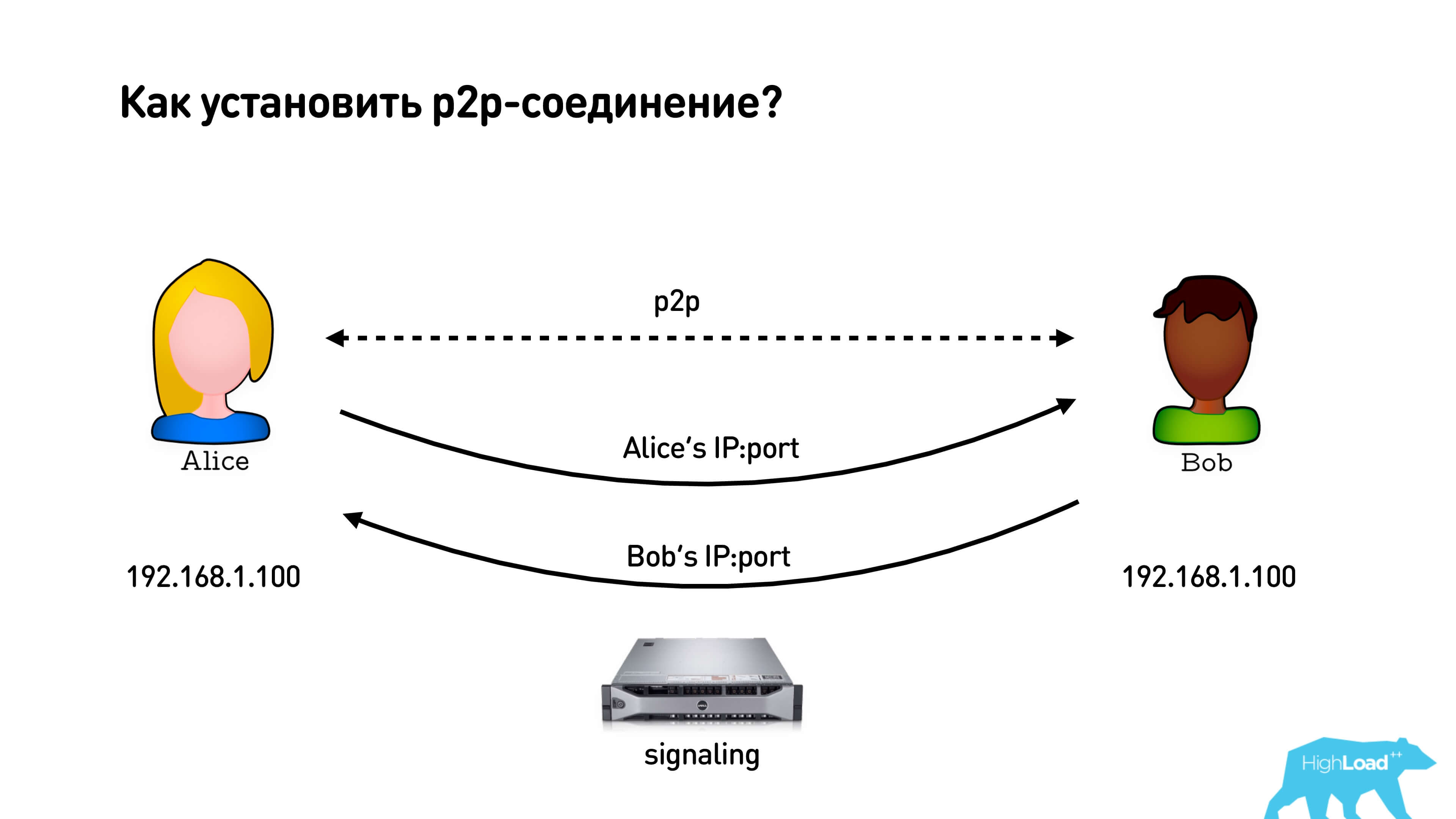

It seems that establishing a p2p connection is quite simple.

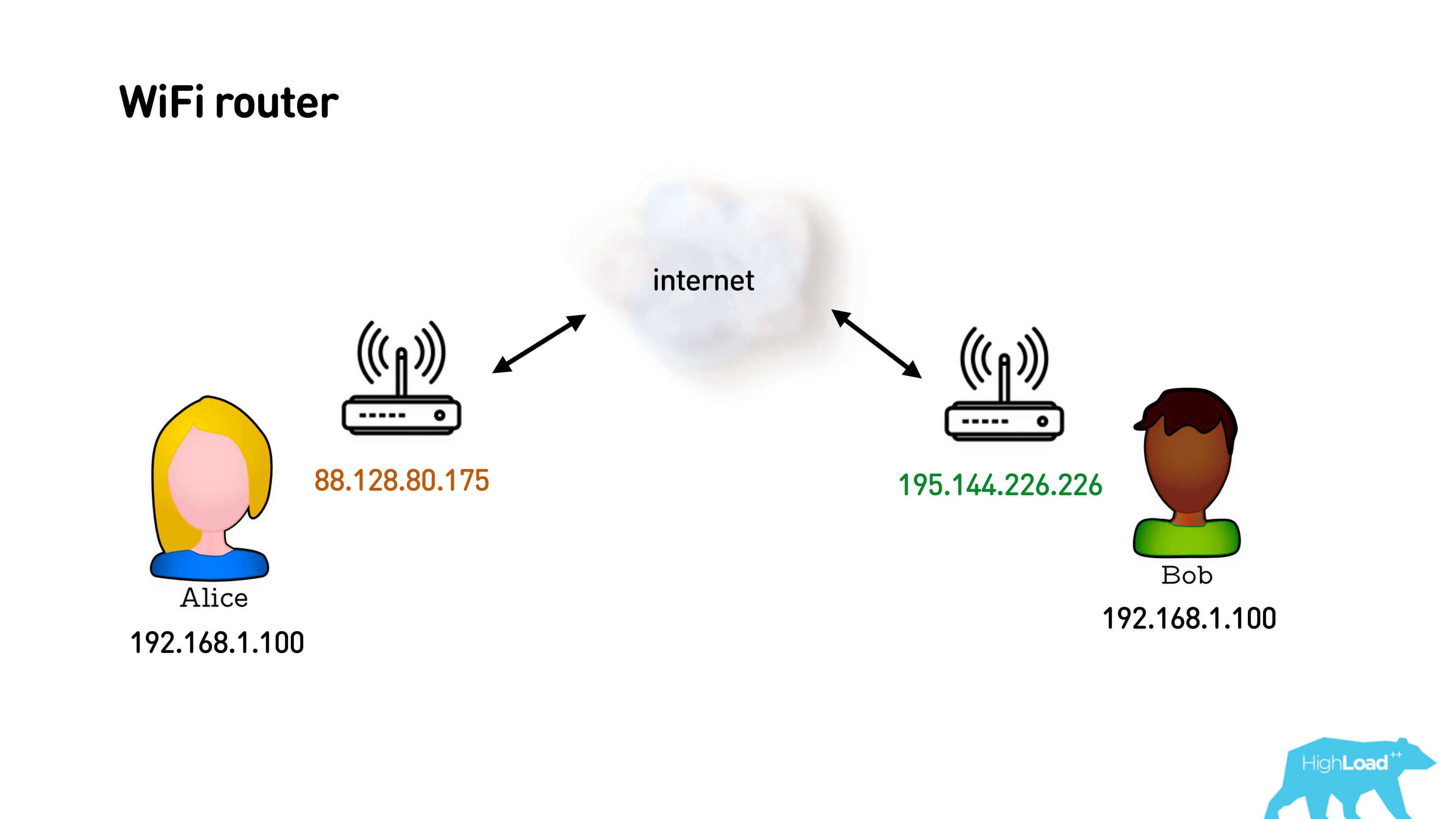

We have Alice and Bob, who want to establish a p2p connection. They take their IP addresses, they have a signaling server to which they both are connected, and through which they can exchange these addresses. They exchange addresses, and oh! Their addresses are the same, something went wrong!

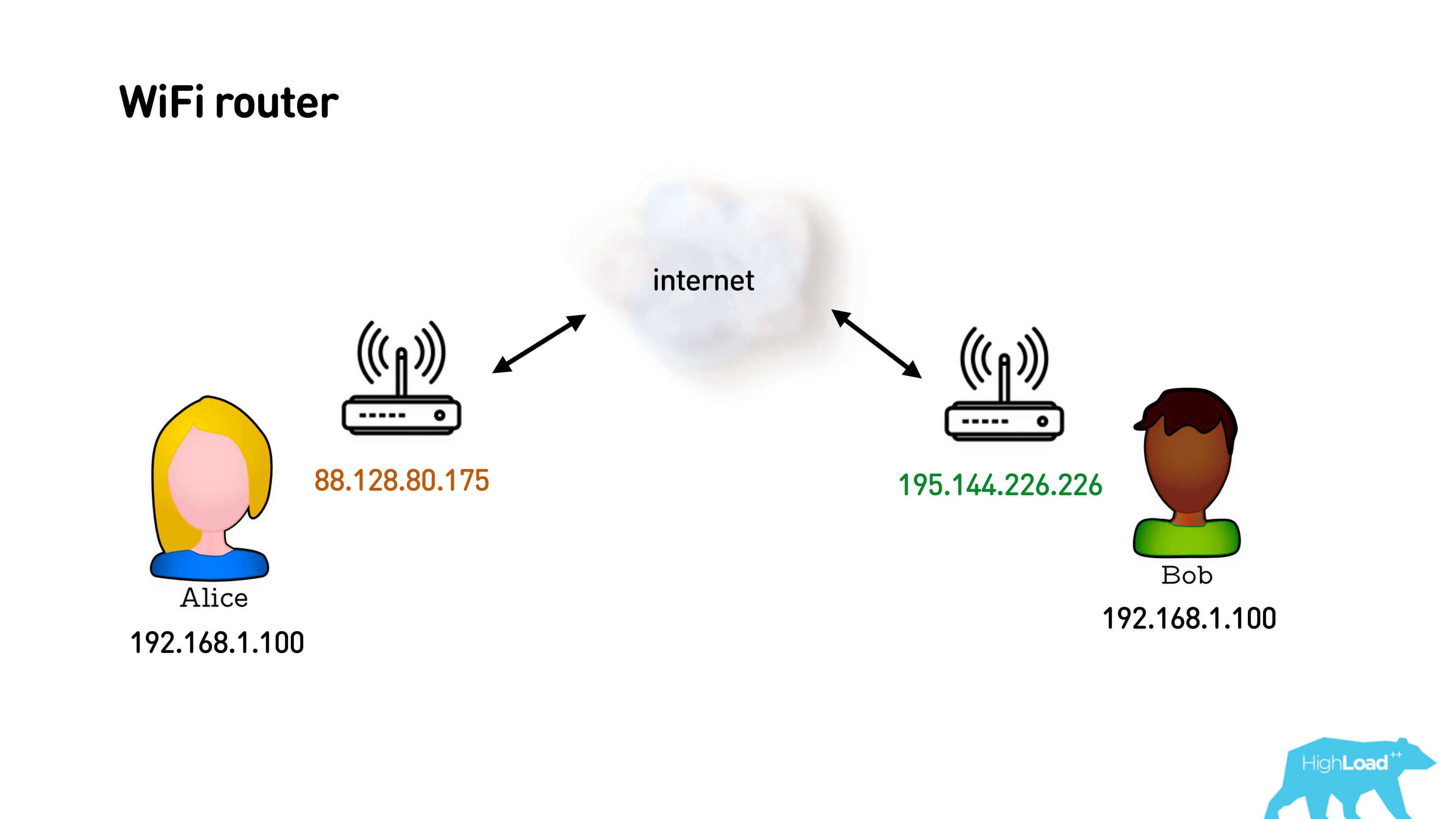

In fact, both users are most likely sitting behind Wi-Fi routers and these are their local gray IP addresses. A router provides them with a feature such as Network Address Translation (NAT). How does she work?

You have a gray subnet and an external IP address. You send a packet to the Internet from your gray address, NAT replaces your gray address with a white one and remembers the mapping: which port it sent from, to which user and which port it corresponds to. When the return packet arrives, it resolves the map for this map and sends it to the sender. It's simple.
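The translation logic just described can be sketched as a small table. This is a simplified model, and its simplifications are assumptions: there is one public IP, ports are allocated sequentially, mappings never expire, and any host may send to an existing mapping. That last point means this toy behaves like the Full cone NAT discussed below. The addresses are documentation examples.

```python
import itertools
from typing import Optional, Tuple

Addr = Tuple[str, int]


class SimpleNat:
    """Toy NAT that rewrites private source addresses to one public IP."""

    def __init__(self, public_ip: str, first_port: int = 50000):
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)
        self.out_map: dict = {}  # (private ip, private port) -> public port
        self.in_map: dict = {}   # public port -> (private ip, private port)

    def outbound(self, src: Addr, dst: Addr) -> Tuple[Addr, Addr]:
        """Rewrite an outgoing packet's source; returns (public_src, dst)."""
        if src not in self.out_map:
            port = next(self._ports)
            self.out_map[src] = port
            self.in_map[port] = src
        return (self.public_ip, self.out_map[src]), dst

    def inbound(self, src: Addr, dst: Addr) -> Optional[Tuple[Addr, Addr]]:
        """Rewrite a reply's destination; returns (src, private_dst), or None if dropped."""
        if dst[0] != self.public_ip or dst[1] not in self.in_map:
            return None  # no mapping for this port: the packet is dropped
        return src, self.in_map[dst[1]]


nat = SimpleNat("203.0.113.7")
public_src, _ = nat.outbound(("192.168.1.10", 5000), ("198.51.100.1", 3478))
print(public_src)                                      # ('203.0.113.7', 50000)
print(nat.inbound(("198.51.100.1", 3478), public_src))  # reply reaches 192.168.1.10:5000
print(nat.inbound(("198.51.100.9", 1234), ("203.0.113.7", 50001)))  # None
```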

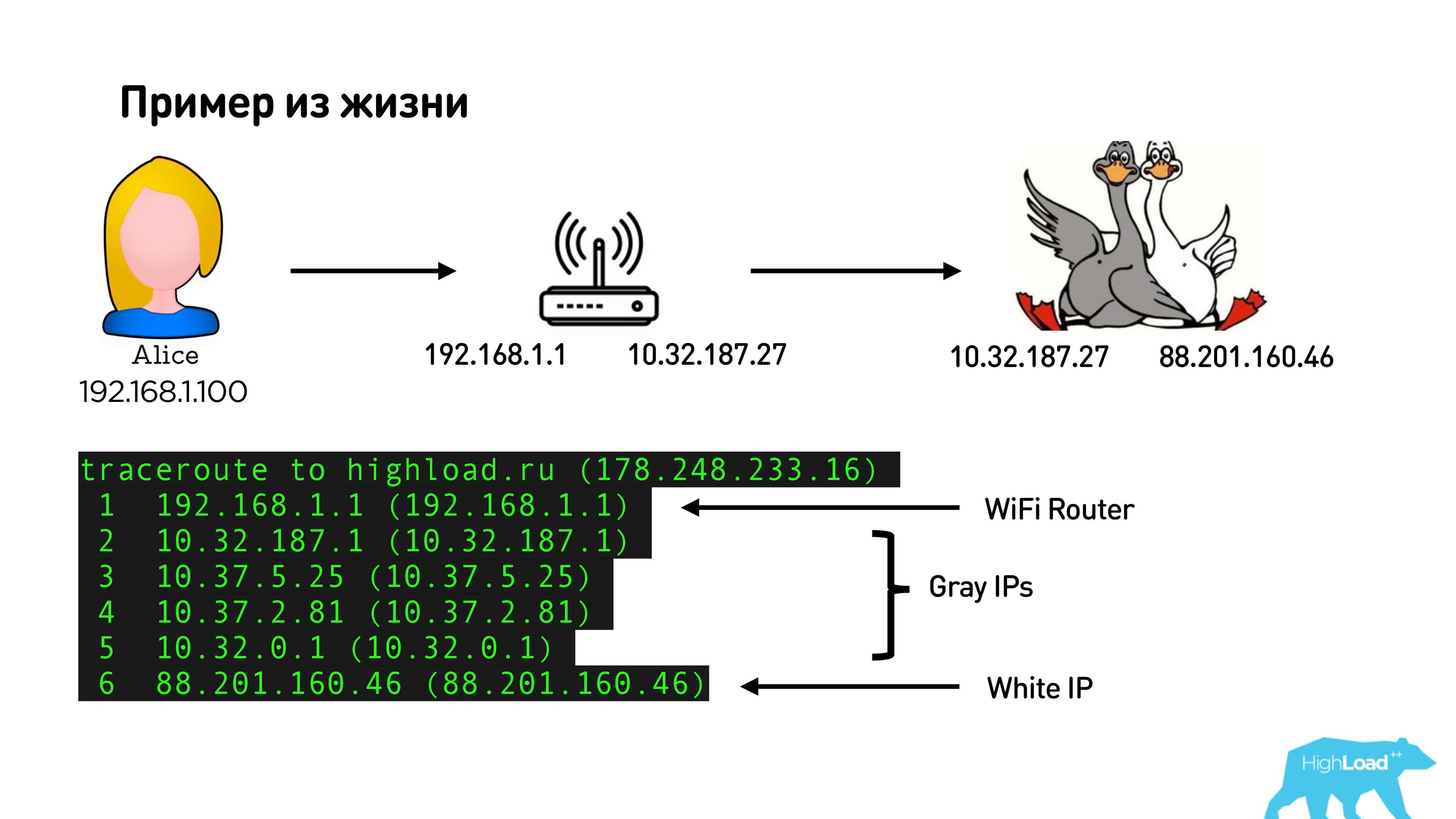

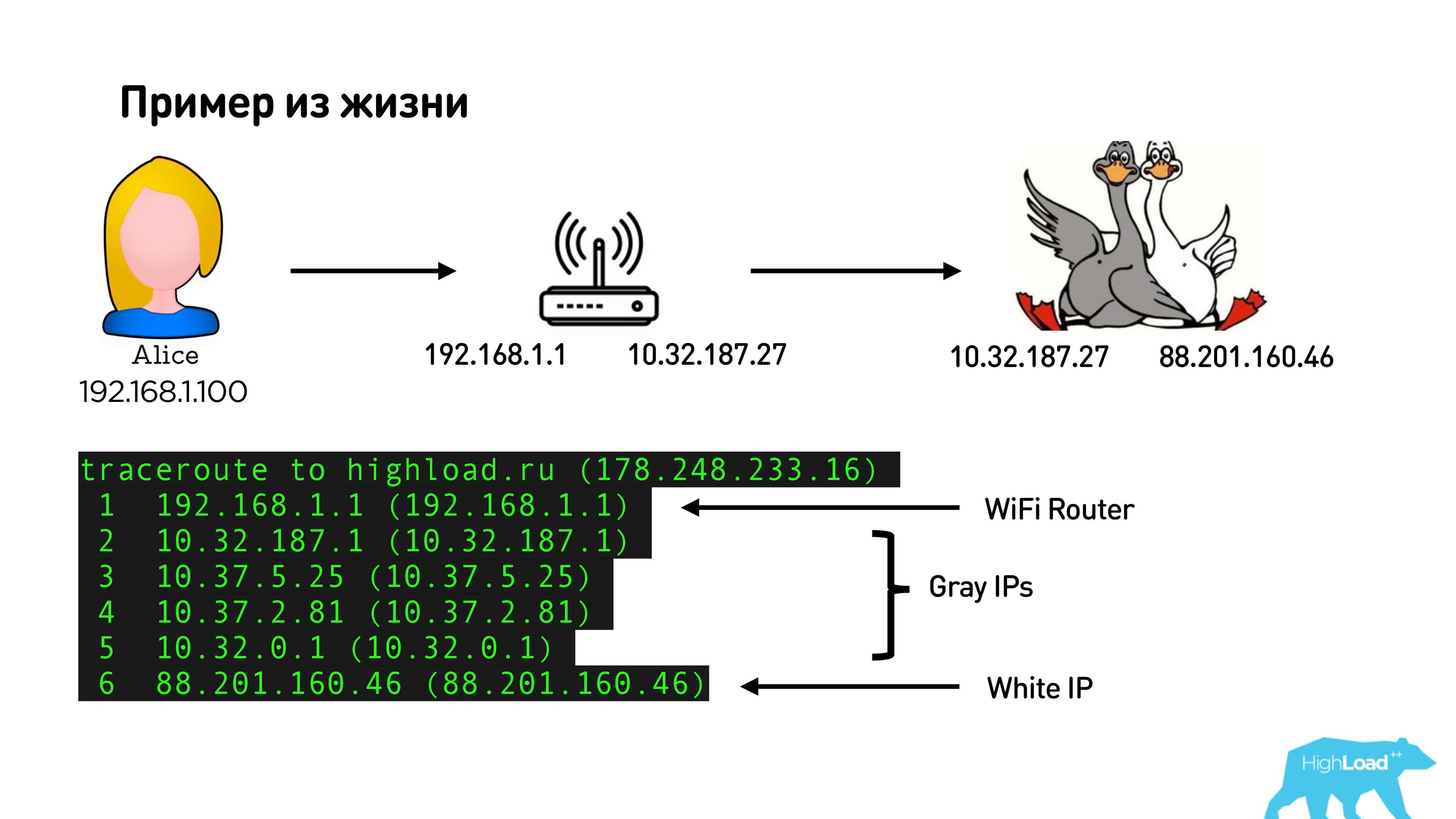

Here is how it looks at my home.

This is my internal IP address and the router's address, which, by the way, is also private. If I run a traceroute, we'll see my Wi-Fi router, then a bunch of the provider's private addresses, and finally an external public IP. So I'm actually behind two NATs: one on my Wi-Fi router and another at the provider. That holds unless, of course, I've paid for a dedicated external IP address.

NAT is so popular because:

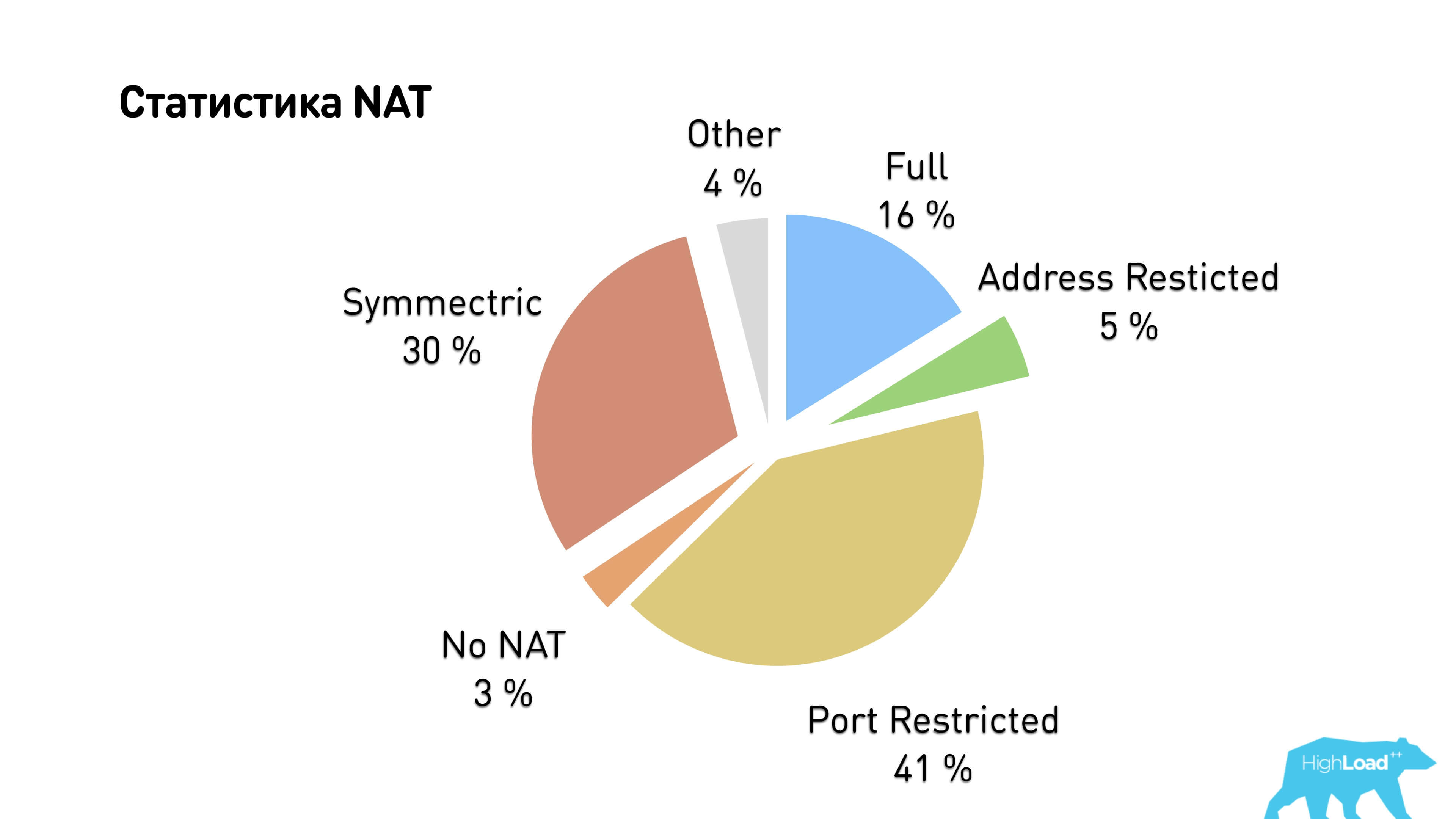

As a result, only 3% of users have an external IP; everyone else goes through NAT.

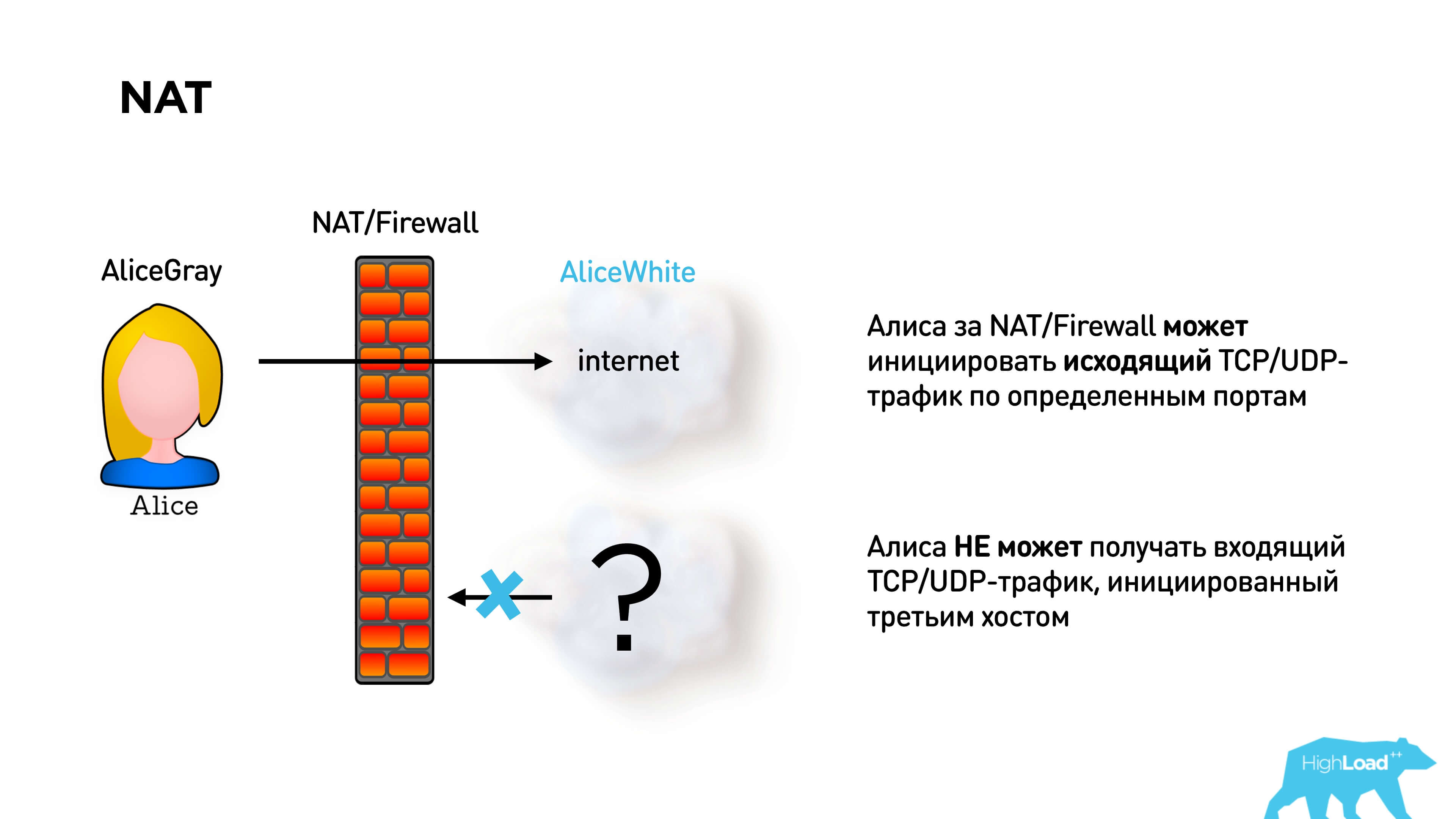

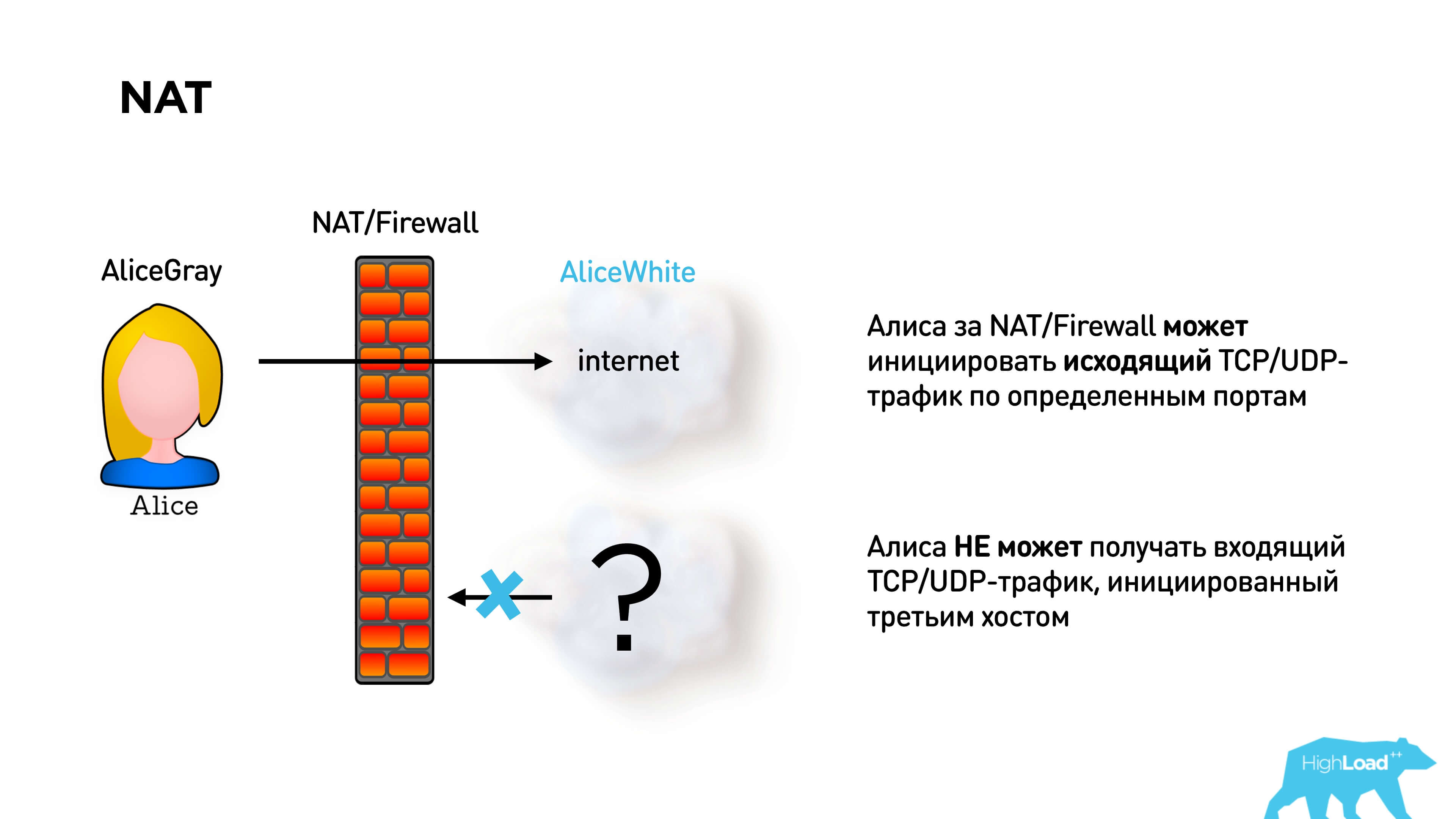

NAT lets you freely reach any public address. But unless you have contacted someone first, nobody can reach you.

That is a problem for establishing a p2p connection: Alice and Bob cannot send each other packets if they are both behind NAT.

WebRTC solves this with the STUN protocol: you deploy a STUN server. Alice connects to the STUN server, learns her external IP address and port, and sends them to Bob via signaling. Bob likewise learns his and sends them to Alice. They send packets to each other and thereby punch through NAT.
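For the curious, here is roughly what goes over the wire. This sketch encodes a STUN Binding Request and decodes the XOR-MAPPED-ADDRESS attribute from a response, following the RFC 5389 message format. It handles IPv4 only and leaves out authentication, the FINGERPRINT attribute, and retransmissions. In WebRTC clients the library does all of this for you. The response in the usage example is built locally by a hypothetical helper rather than received from a server.

```python
import os
import socket
import struct
from typing import Optional, Tuple

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def binding_request(txn_id: Optional[bytes] = None) -> bytes:
    """20-byte STUN header with no attributes: type, length, cookie, transaction ID."""
    txn_id = txn_id or os.urandom(12)
    if len(txn_id) != 12:
        raise ValueError("transaction ID must be 12 bytes")
    return struct.pack("!HHI12s", BINDING_REQUEST, 0, MAGIC_COOKIE, txn_id)


def parse_xor_mapped_address(response: bytes) -> Tuple[str, int]:
    """Return the (ip, port) the STUN server saw, i.e. our address outside the NAT."""
    msg_type, length, cookie = struct.unpack_from("!HHI", response, 0)
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding Success Response")
    offset, end = 20, 20 + length
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack_from("!HH", response, offset)
        value = response[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS:
            _, family, xport = struct.unpack_from("!BBH", value, 0)
            if family != 0x01:
                raise ValueError("only IPv4 is handled in this sketch")
            (xaddr,) = struct.unpack_from("!I", value, 4)
            ip = socket.inet_ntoa(struct.pack("!I", xaddr ^ MAGIC_COOKIE))
            return ip, xport ^ (MAGIC_COOKIE >> 16)
        offset += 4 + attr_len + (-attr_len % 4)  # attribute values are padded to 4 bytes
    raise ValueError("no XOR-MAPPED-ADDRESS attribute")


def fake_success_response(txn_id: bytes, ip: str, port: int) -> bytes:
    """Hypothetical helper: build what a STUN server would send, for local testing."""
    (addr,) = struct.unpack("!I", socket.inet_aton(ip))
    value = struct.pack("!BBHI", 0, 0x01, port ^ (MAGIC_COOKIE >> 16), addr ^ MAGIC_COOKIE)
    attr = struct.pack("!HH", XOR_MAPPED_ADDRESS, len(value)) + value
    return struct.pack("!HHI12s", BINDING_SUCCESS, len(attr), MAGIC_COOKIE, txn_id) + attr


req = binding_request()
resp = fake_success_response(req[8:20], "203.0.113.5", 4444)
print(parse_xor_mapped_address(resp))  # ('203.0.113.5', 4444)
```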

Question: Alice has a certain port open, and her NAT/firewall has already been punched for that port. Bob's is open too, and they know each other's addresses. Alice tries to send a packet to Bob, and he sends a packet to Alice. Do you think they can talk?

Whatever you answered, you're right: the result depends on the combination of NAT types the two users are behind.

Network Address Translation

There are 4 types of NAT: Full cone, Restricted cone, Port restricted cone, and Symmetric.

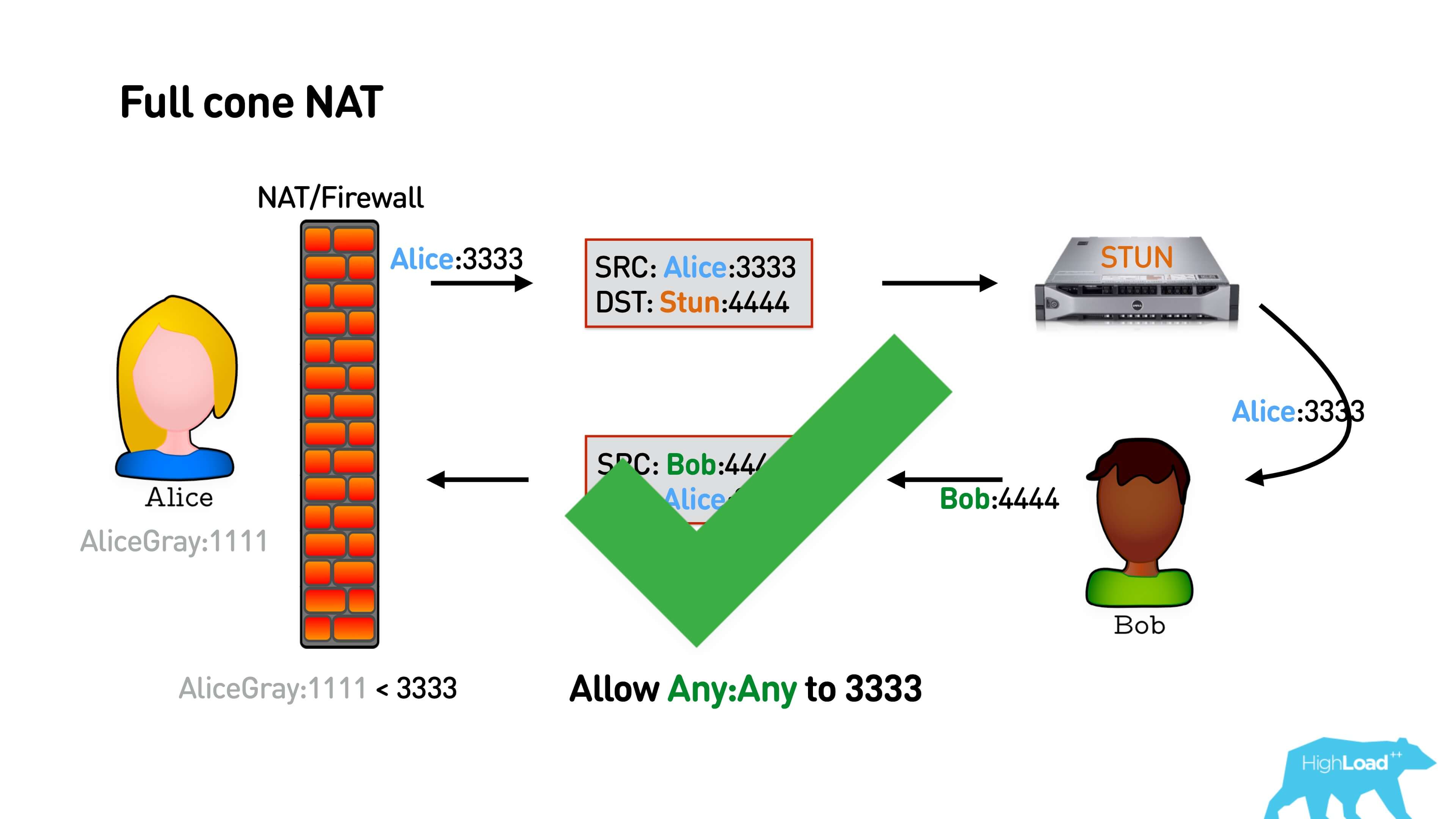

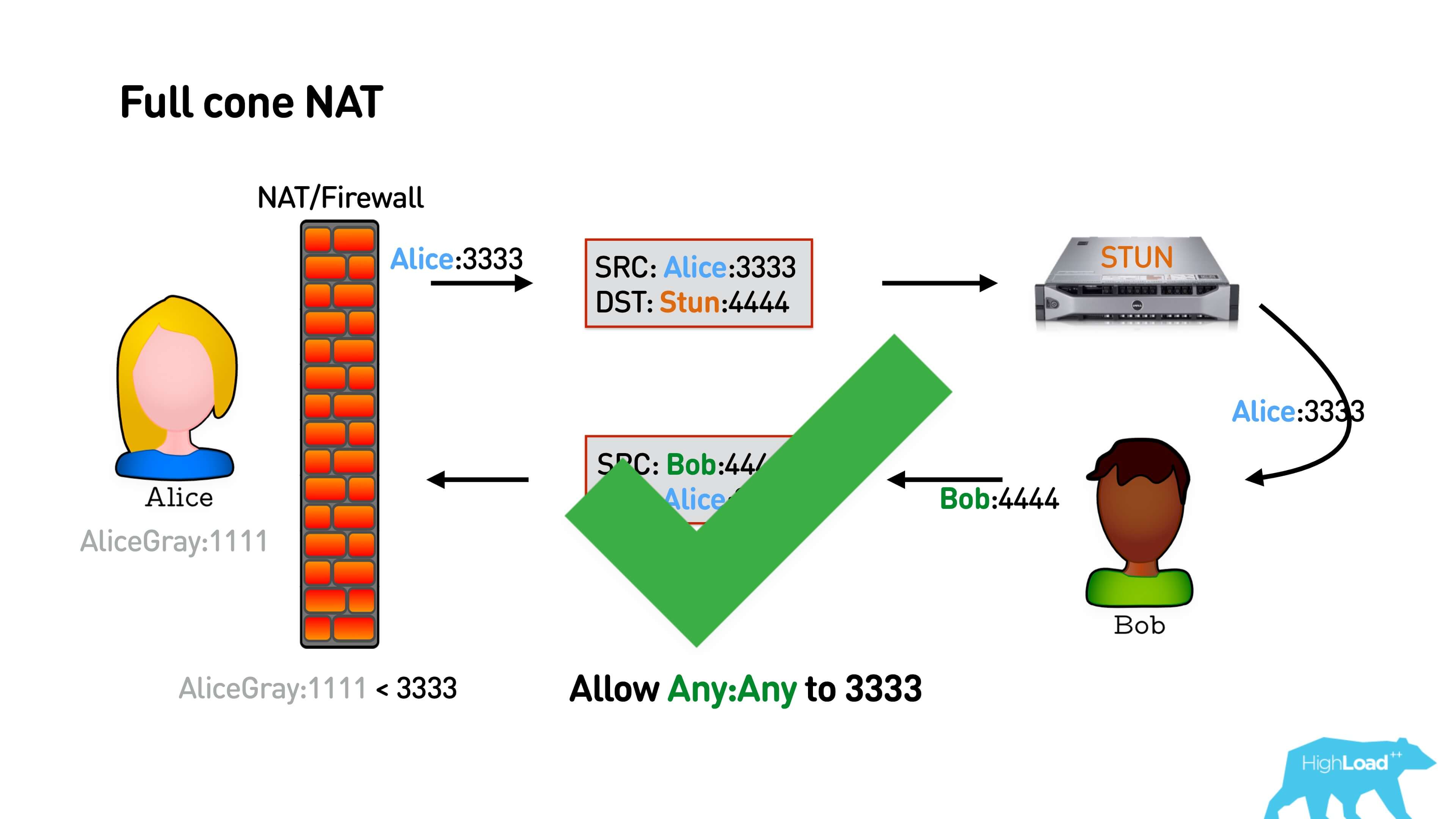

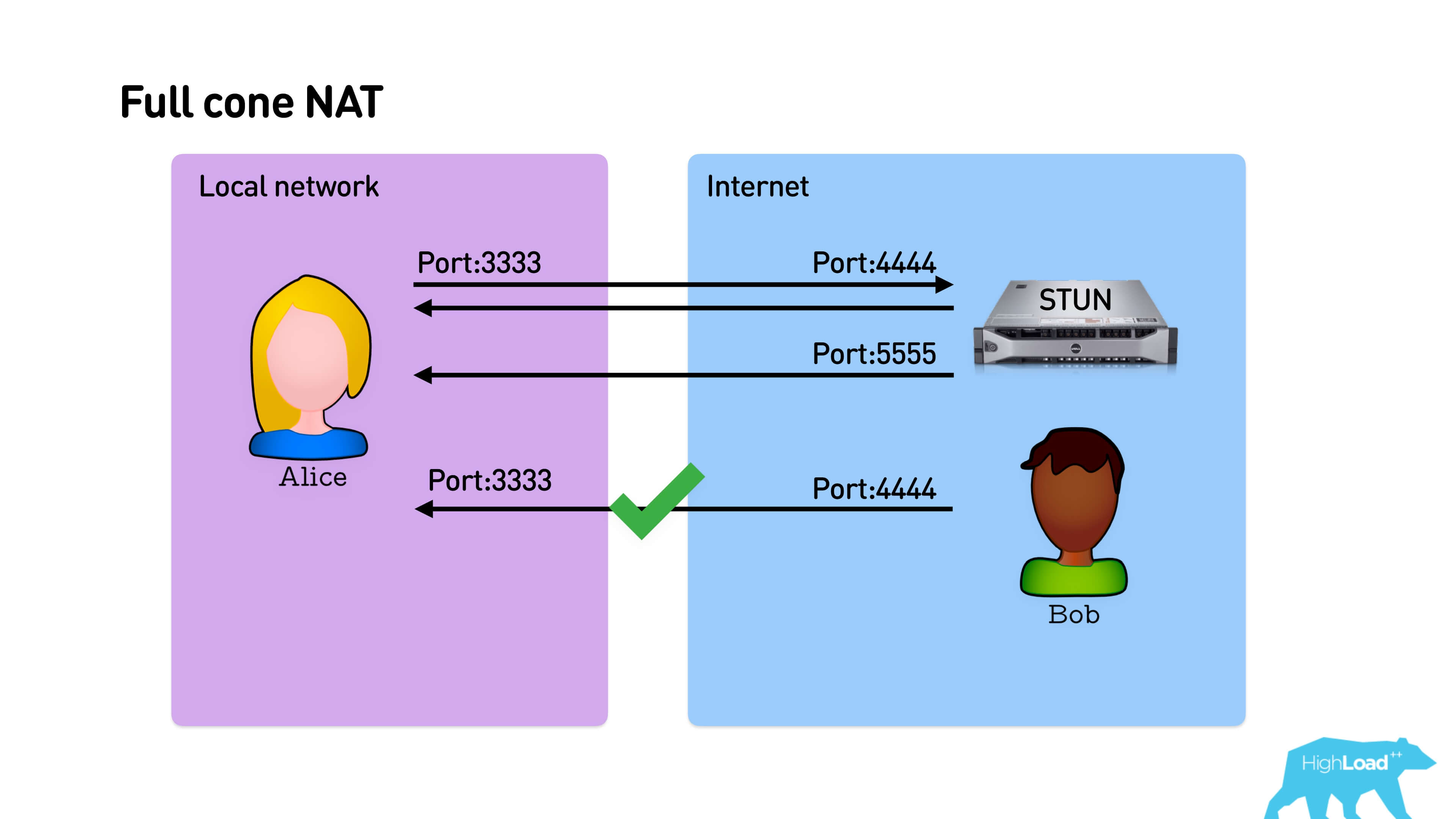

In the basic version, Alice sends a packet to the server STUN, she opens a port. Bob somehow finds out about her port and sends a reverse packet. If this is Full cone NAT — the simplest one that simply maps an external port to an internal one, then Bob will immediately be able to send a packet to Alice, establish a connection, and they will talk.

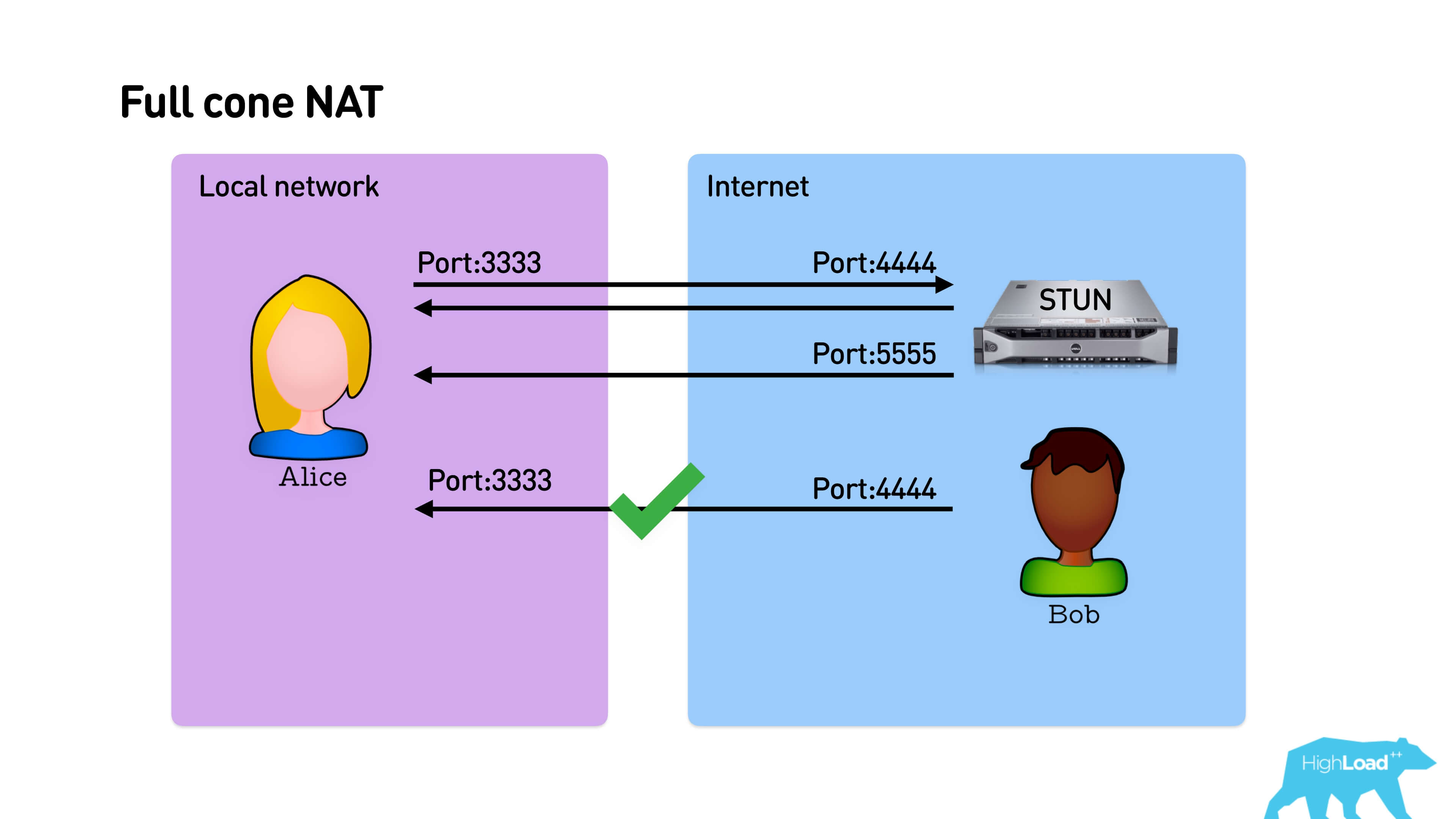

Below is the interaction scheme: Alice sends a packet to the STUN port from some port, STUN responds with its external address. STUN can reply from any address, if it is a Full cone NAT, it still breaks through NAT, and Bob can reply to the same address.

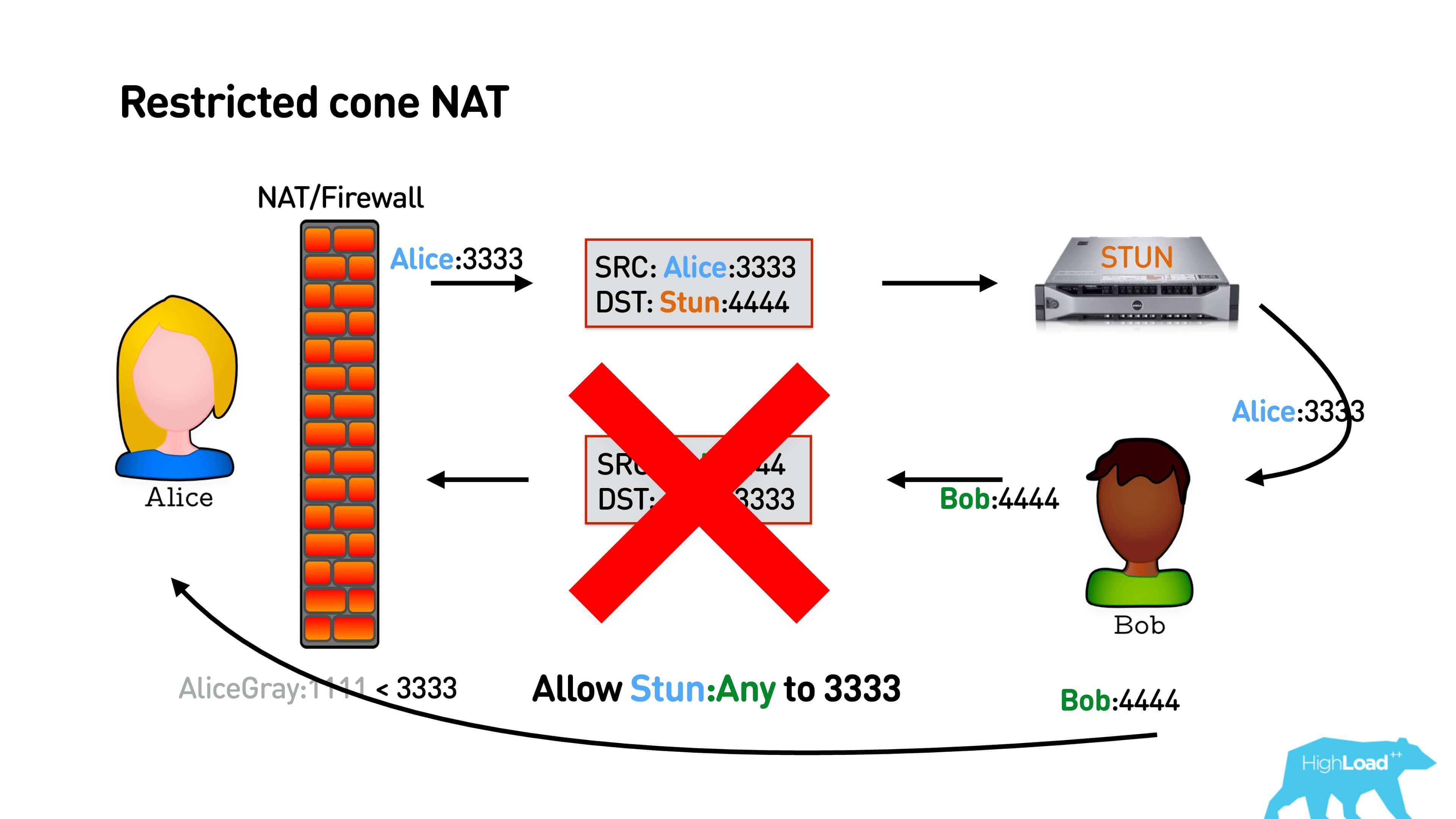

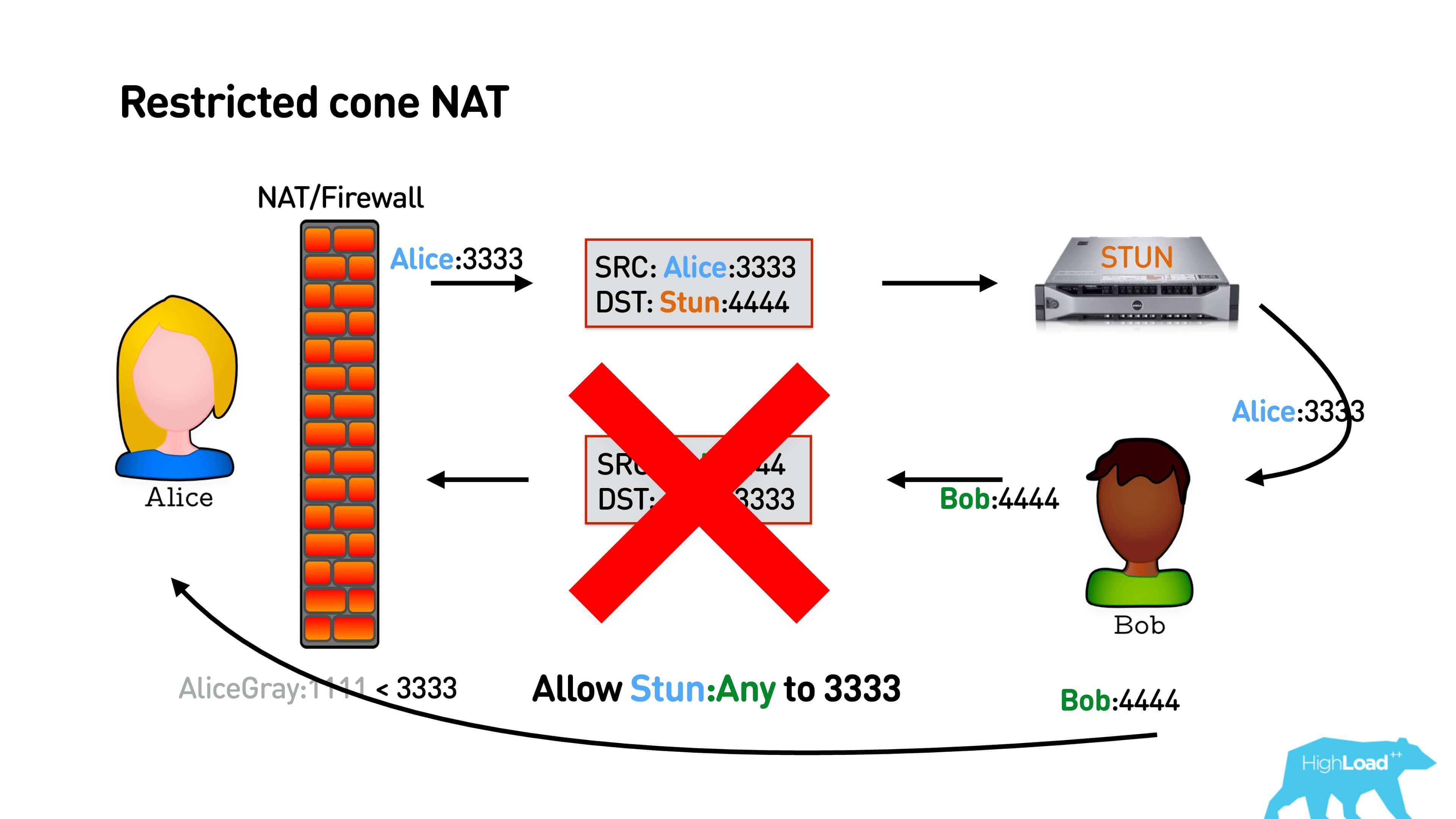

Restricted cone NAT is a bit more complicated. It remembers not only the port that maps to your internal address, but also the external address you contacted. So if you have only contacted the STUN server's IP, nobody else on the network can reach you, and Bob's packet won't get through.

How is this solved? The simple scheme (see the illustration below) goes like this. Alice sends a packet to STUN, and STUN replies with her IP. Since this is Restricted cone NAT, STUN may reply from any port. Bob cannot reach Alice because his address is different. Alice, knowing Bob's IP address, sends him a packet. That opens her NAT to Bob, and Bob replies. Hooray, they're talking.

A slightly more complicated case is Port restricted cone NAT. Everything is the same, except that STUN must reply from exactly the port the request was sent to. This works too.

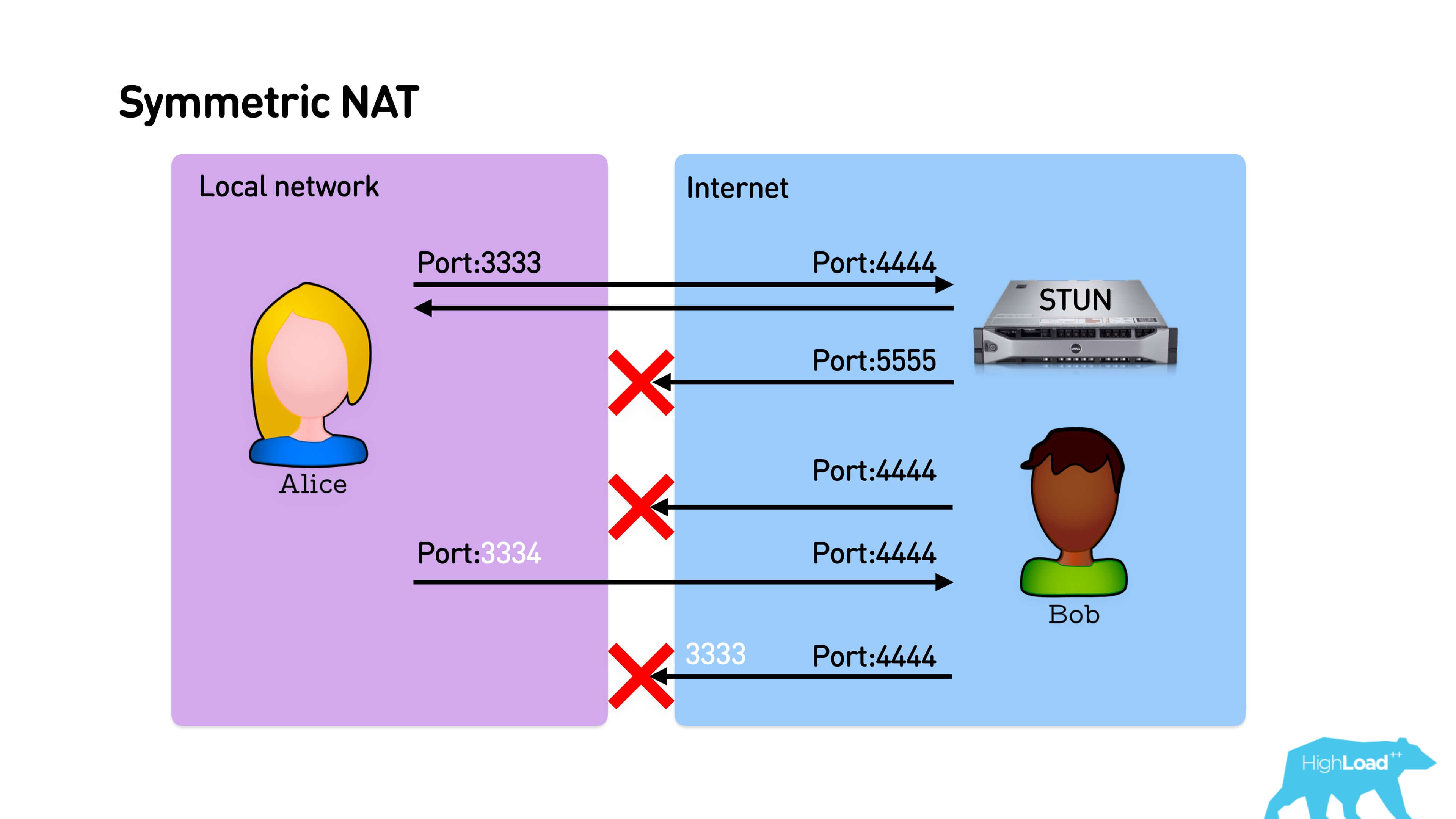

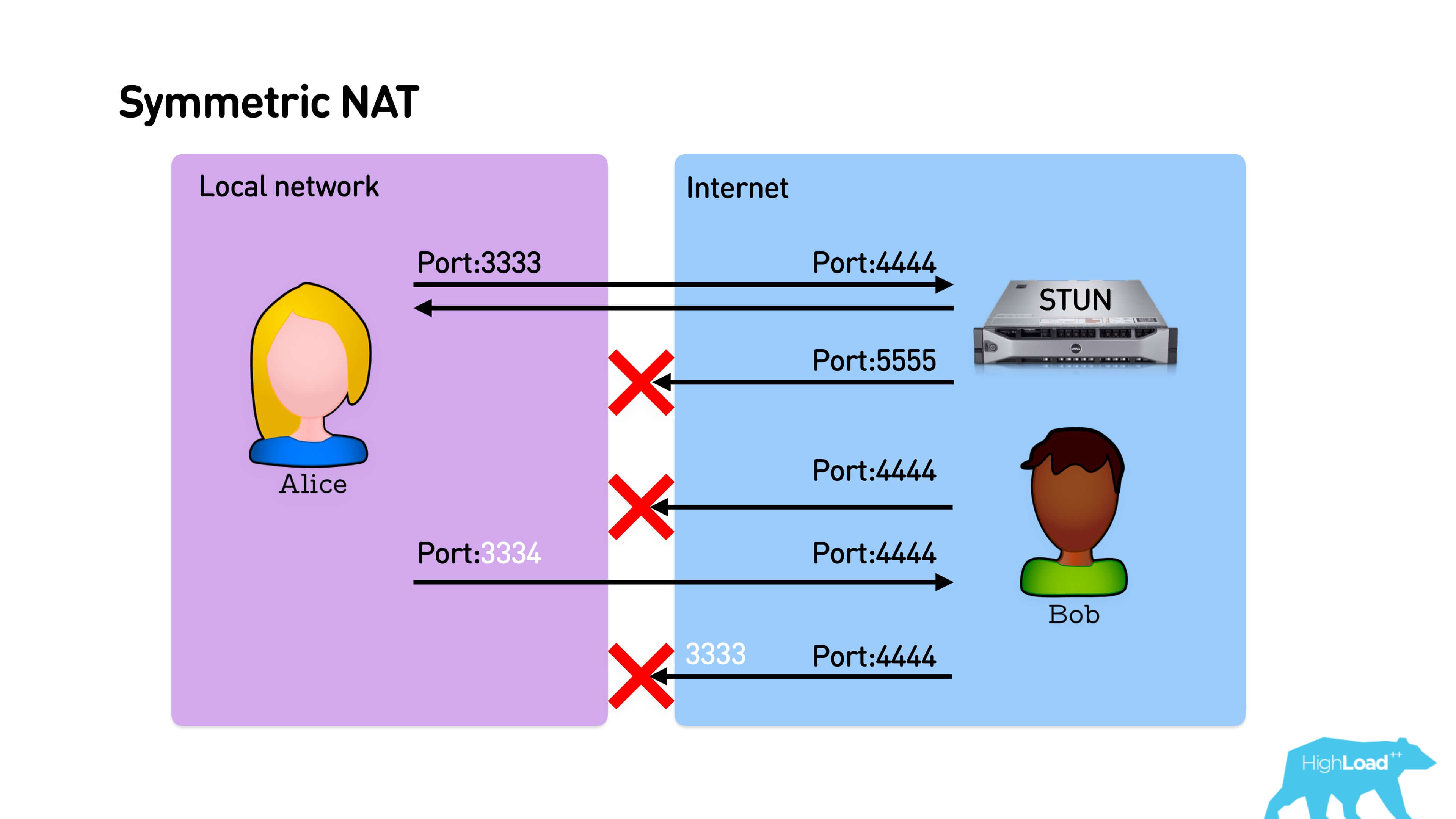

The nastiest type is Symmetric NAT.

At first everything works exactly the same: Alice sends a packet to the STUN server, and it replies from the same port. Bob cannot reach Alice, but she sends a packet to Bob. And here, even though Alice sends the packet to port 4444, the NAT allocates a new port for this mapping. What distinguishes Symmetric NAT is that it allocates a new port on the router for every new connection. So Bob keeps knocking on the port Alice used to reach STUN, and they cannot connect.

Conversely, if Bob has a public IP address, Alice can simply reach him, and they will establish a connection.

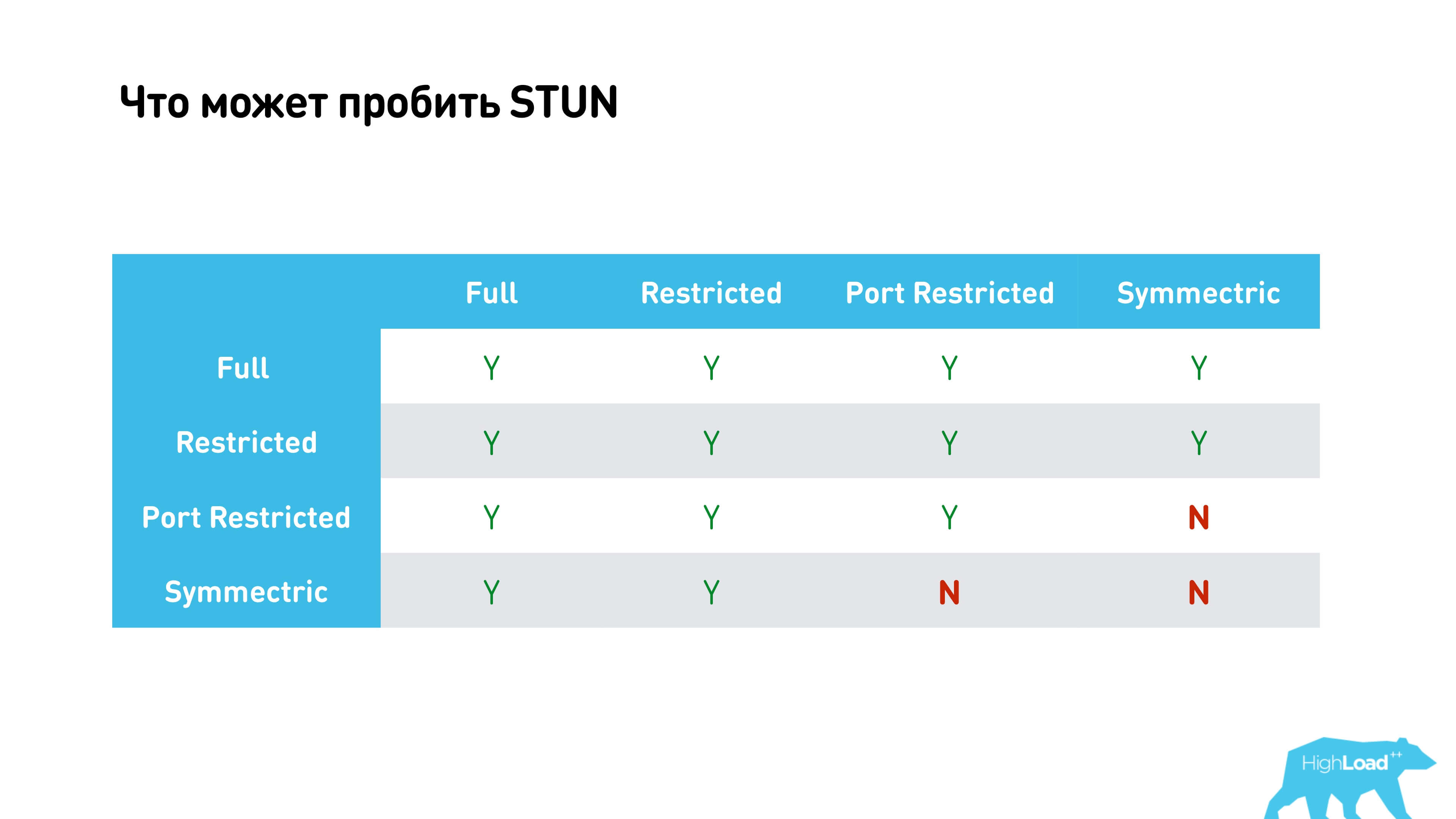

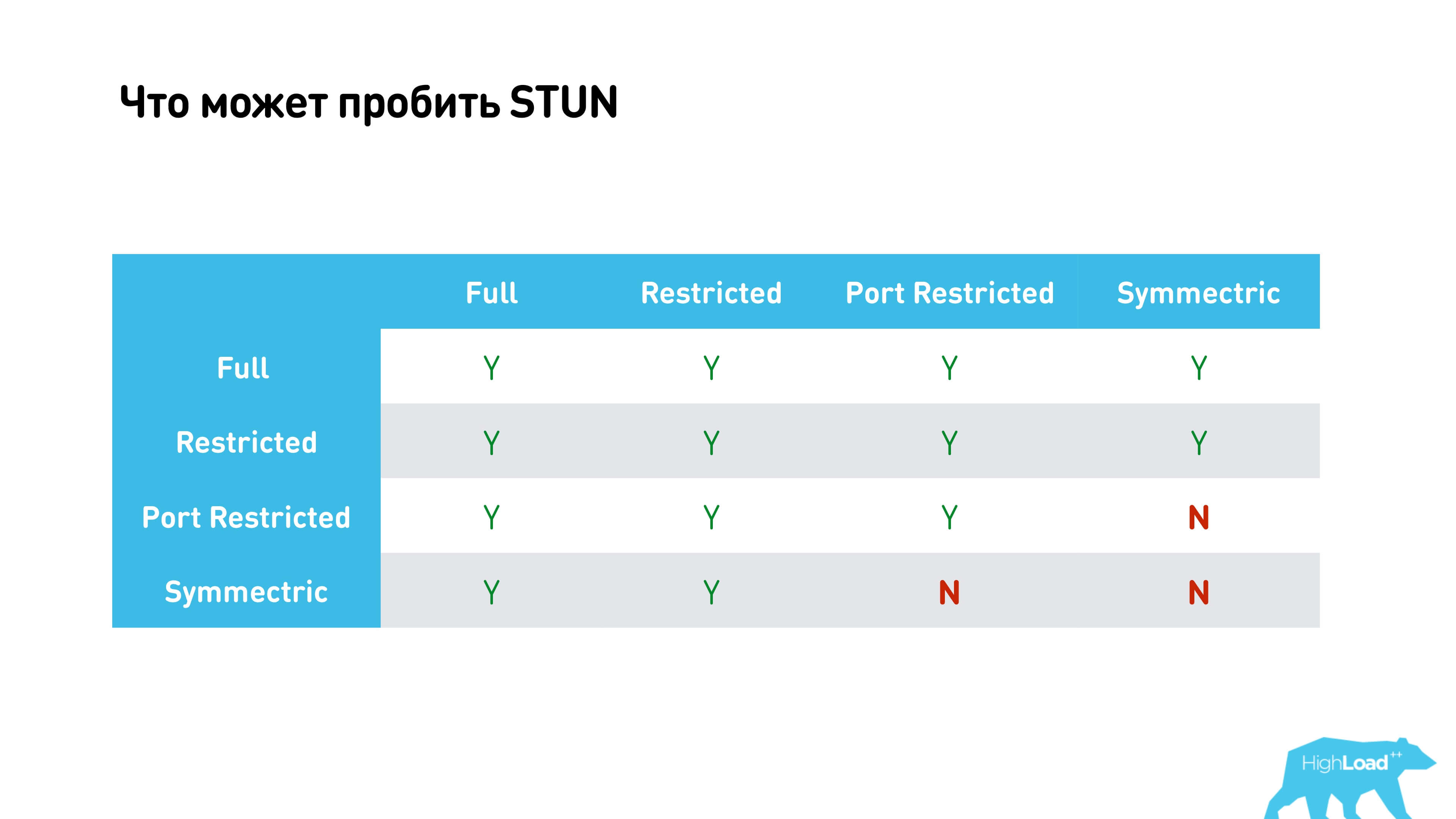

All options are collected in one table below.

It shows that almost every combination works. The exceptions are when one side is behind Symmetric NAT and the other is behind Port restricted cone NAT or Symmetric NAT.
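The table can be reproduced with a toy model of the four NAT types. This is a sketch under simplifying assumptions:

- Each NAT allocates ports sequentially.
- Cone NATs reuse one external port per socket, while Symmetric NAT allocates a new port per destination.
- Both peers send their probes before anything is checked, as ICE's repeated connectivity checks effectively do.
- Whoever receives the first successful probe replies to the source address they actually saw.

Real NATs are messier (timeouts, port preservation, hairpinning), so treat the class and function names here as hypothetical illustrations of the table's logic.

```python
import itertools

FULL, RESTRICTED, PORT_RESTRICTED, SYMMETRIC = "full", "restricted", "port-restricted", "symmetric"
STUN = ("198.51.100.1", 3478)


class ToyNat:
    def __init__(self, kind: str, public_ip: str):
        self.kind = kind
        self.public_ip = public_ip
        self._ports = itertools.count(40000)
        self.mapping: dict[object, int] = {}                # mapping key -> external port
        self.sent_to: dict[int, set[tuple[str, int]]] = {}  # external port -> destinations

    def send(self, dst: tuple[str, int]) -> tuple[str, int]:
        """Send from the single internal socket; return the source address the peer sees."""
        key = dst if self.kind == SYMMETRIC else "socket"  # symmetric: new port per destination
        if key not in self.mapping:
            self.mapping[key] = next(self._ports)
        port = self.mapping[key]
        self.sent_to.setdefault(port, set()).add(dst)
        return (self.public_ip, port)

    def accepts(self, port: int, src: tuple[str, int]) -> bool:
        """Inbound filtering for a packet from src arriving at one of our external ports."""
        peers = self.sent_to.get(port)
        if not peers:
            return False  # no mapping at all
        if self.kind == FULL:
            return True
        if self.kind == RESTRICTED:
            return any(ip == src[0] for ip, _ in peers)
        return src in peers  # port-restricted and symmetric filter on ip:port


def hole_punch(kind_a: str, kind_b: str) -> bool:
    alice, bob = ToyNat(kind_a, "203.0.113.10"), ToyNat(kind_b, "203.0.113.20")
    a_public, b_public = alice.send(STUN), bob.send(STUN)   # learned via STUN, swapped via signaling
    a_src, b_src = alice.send(b_public), bob.send(a_public)  # both probe the other side
    if bob.accepts(b_public[1], a_src):
        return alice.accepts(a_src[1], bob.send(a_src))      # Bob replies to what he saw
    if alice.accepts(a_public[1], b_src):
        return bob.accepts(b_src[1], alice.send(b_src))
    return False


kinds = [FULL, RESTRICTED, PORT_RESTRICTED, SYMMETRIC]
for a in kinds:
    print(f"{a:>16}", ["ok" if hole_punch(a, b) else "--" for b in kinds])
```

The only failing pairs are Symmetric with Port restricted cone and Symmetric with Symmetric. In both cases, the port Alice's NAT allocates for Bob is one that Bob's filter has never seen.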

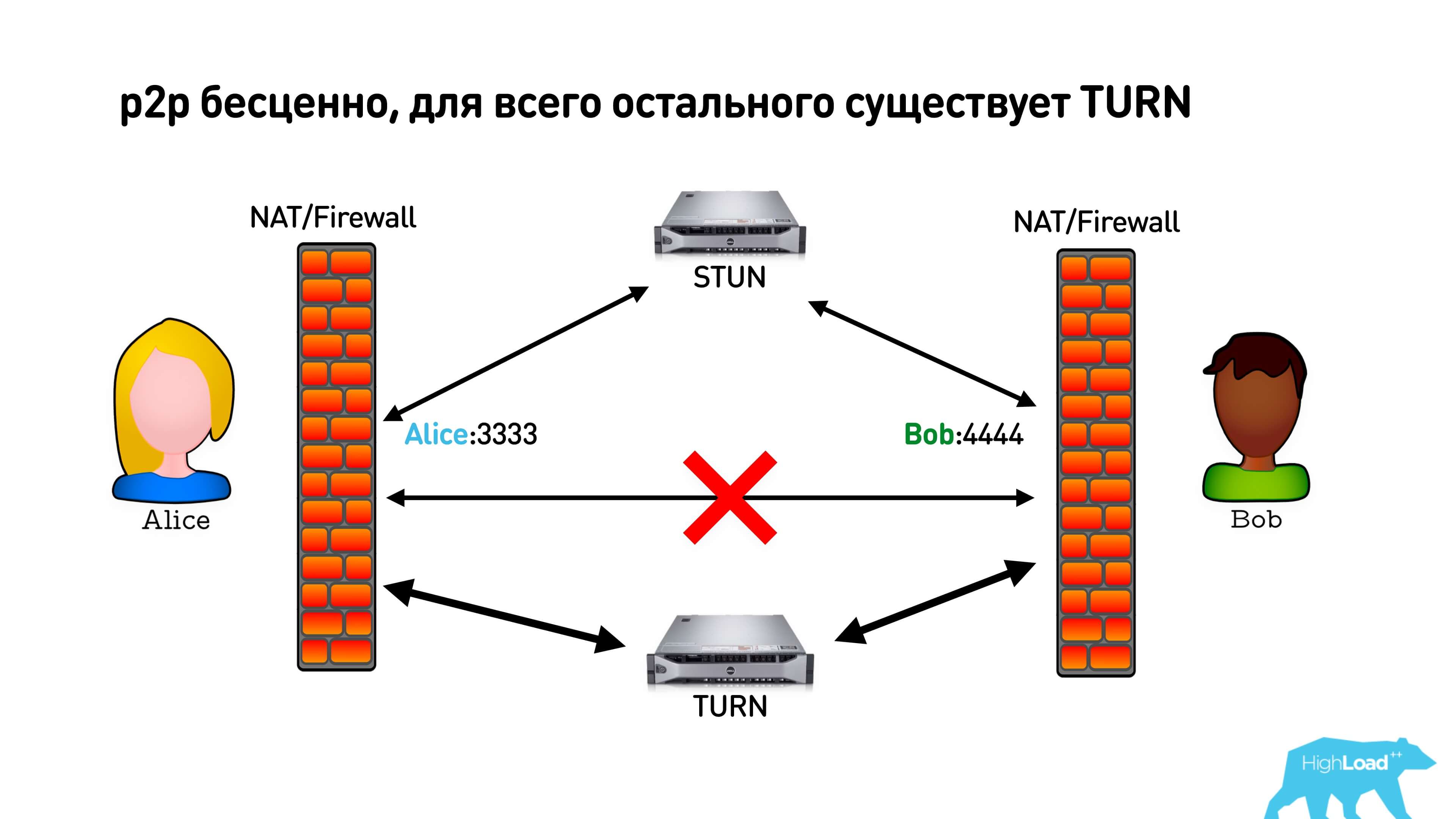

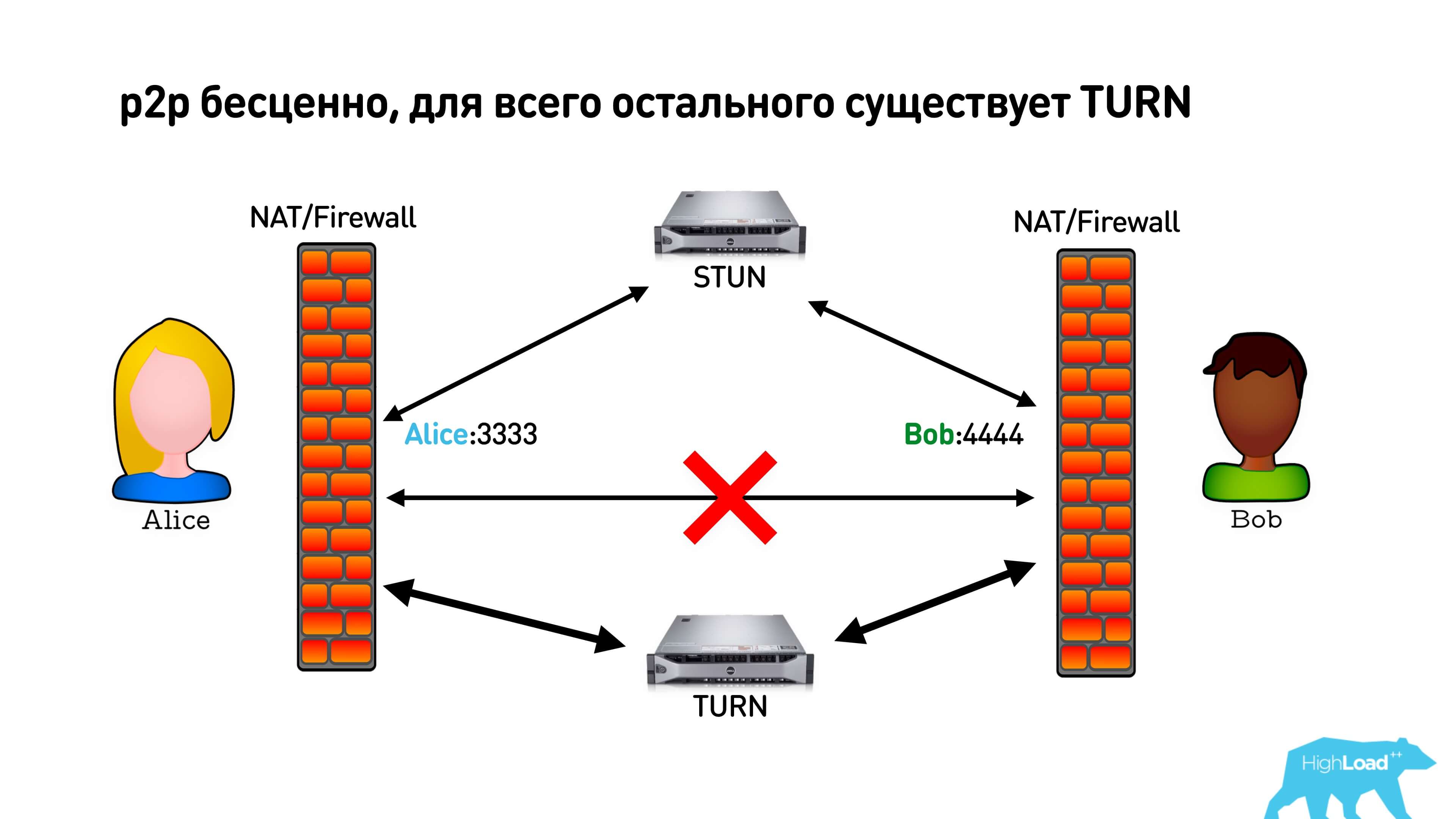

As we found out, p2p is invaluable for latency. If we fail to establish it, though, WebRTC offers a TURN server. Once we realize that p2p isn't going to work, we can simply connect to TURN, which will proxy all the traffic. True, in that case you pay for the traffic, and users may get some extra delay.

Practice

Google runs free STUN servers. You can plug them into the library, and it will work.

TURN servers require credentials (a username and a password). Most likely you'll have to run your own, since free ones are hard to find.

Examples of free STUN servers from Google:

And a free TURN server with a password: url: 'turn:192.158.29.39:3478?transport=udp', credential: 'JZEOEt2V3Qb0y27GRntt2u2PAYA=', username: '28224511:1379330808'.

We use coturn .
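The username in the TURN example above contains what looks like a Unix timestamp, which hints at time-limited credentials. One common way to issue these is the "TURN REST API" scheme, which coturn supports through its `use-auth-secret` and `static-auth-secret` options. The TURN server shares a secret with your backend. The username is an expiry timestamp followed by `:` and a user ID, and the password is the Base64-encoded HMAC-SHA1 of the username. The talk doesn't say whether OK issues credentials this way. The function name, secret, and user ID below are placeholders.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional


def turn_credentials(secret: str, user_id: str, ttl_s: int = 3600,
                     now: Optional[float] = None) -> dict[str, str]:
    """Time-limited TURN credentials in the TURN REST API style.

    username   = "<expiry unix time>:<user id>"
    credential = base64(HMAC-SHA1(secret, username))
    """
    expiry = int(time.time() if now is None else now) + ttl_s
    username = f"{expiry}:{user_id}"
    digest = hmac.new(secret.encode(), username.encode(), hashlib.sha1).digest()
    return {"username": username, "credential": base64.b64encode(digest).decode()}


creds = turn_credentials("hypothetical-shared-secret", "alice", now=1_700_000_000)
print(creds["username"])  # 1700003600:alice
```

The backend hands these to the client along with the TURN URL. The TURN server can recompute the HMAC from the shared secret and reject the credentials once the expiry has passed, so no per-user password database is needed.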

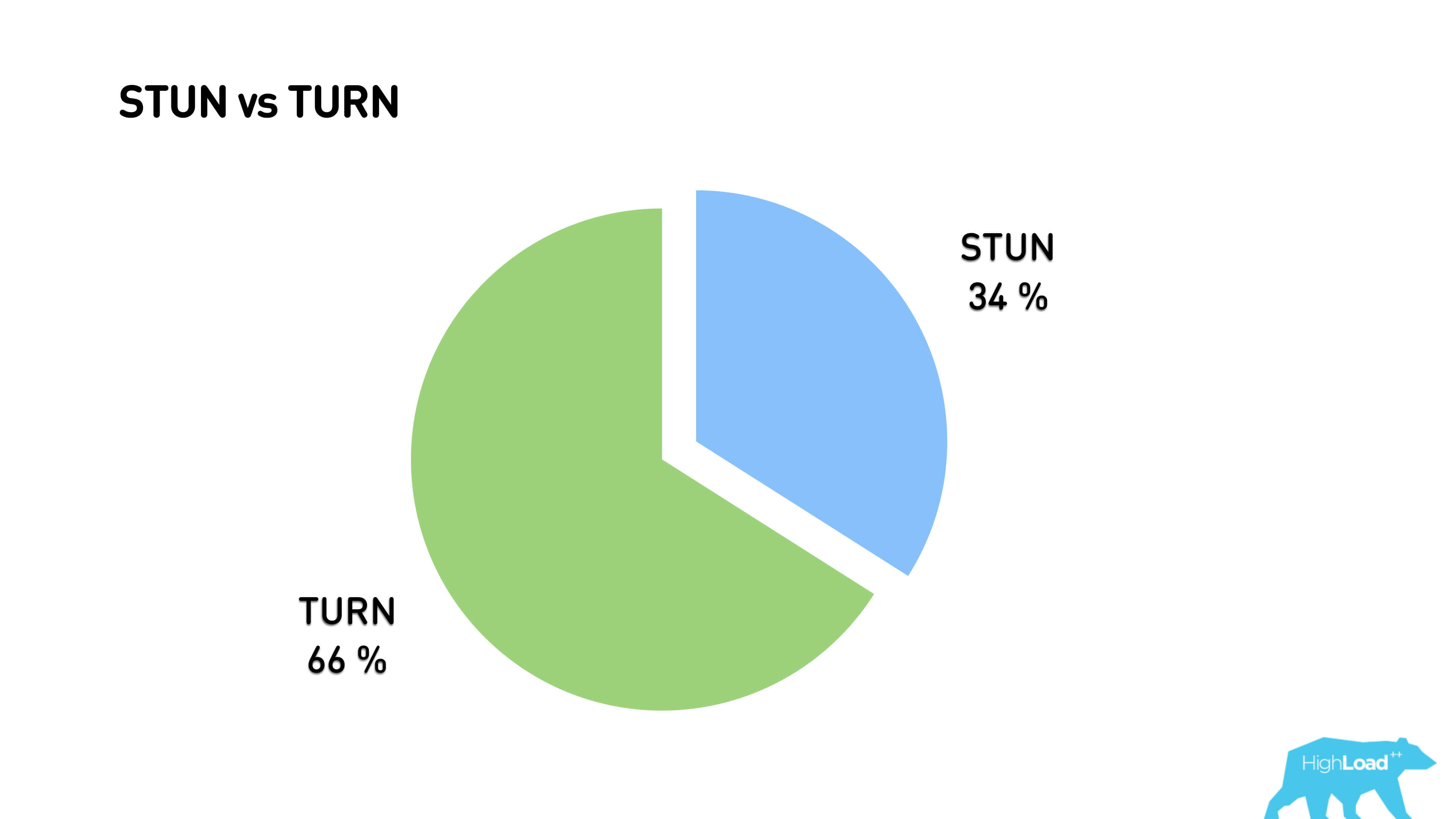

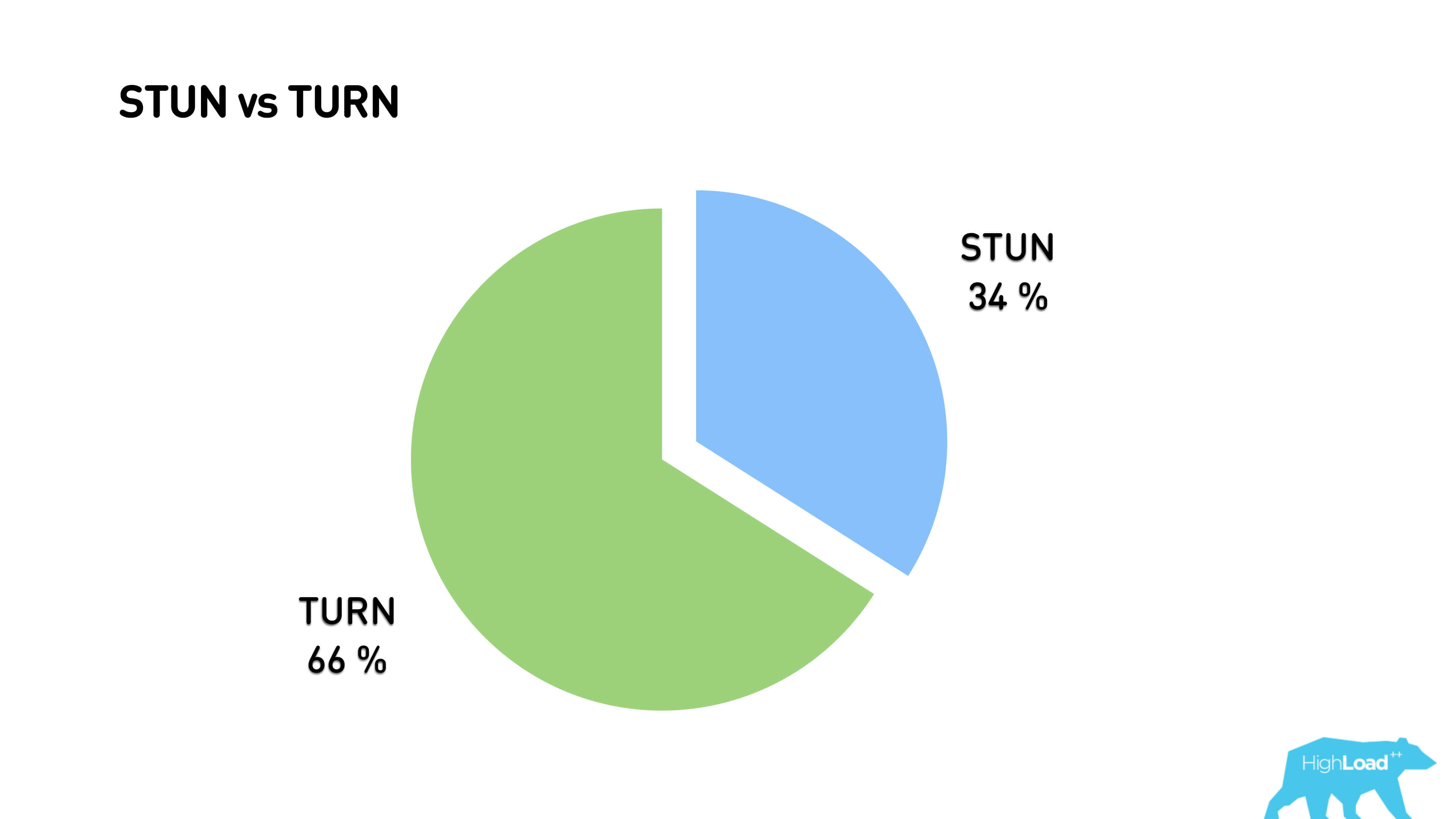

As a result, 34% of traffic goes over p2p connections; the rest is proxied through the TURN server.

What else is interesting about the STUN protocol?

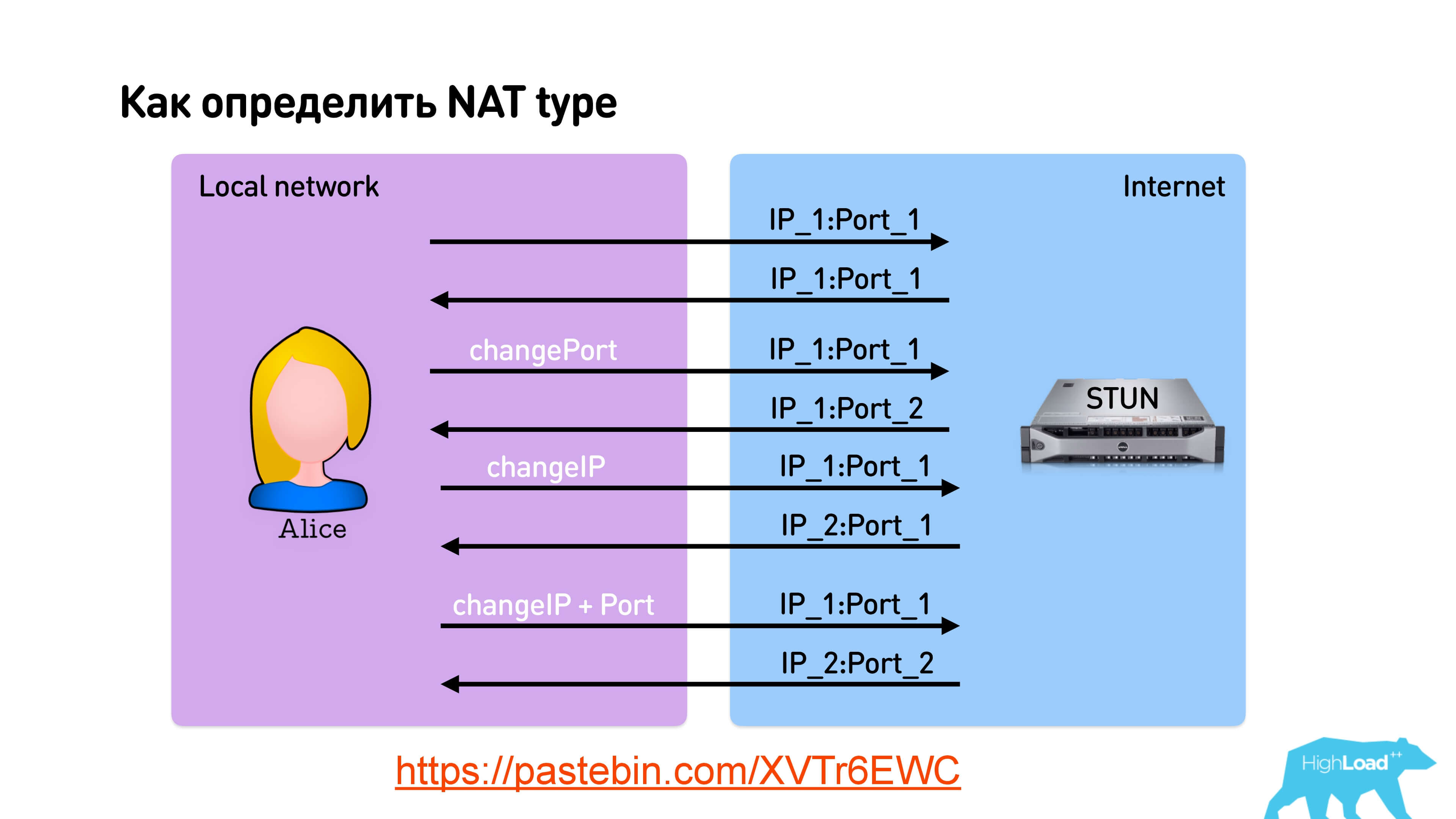

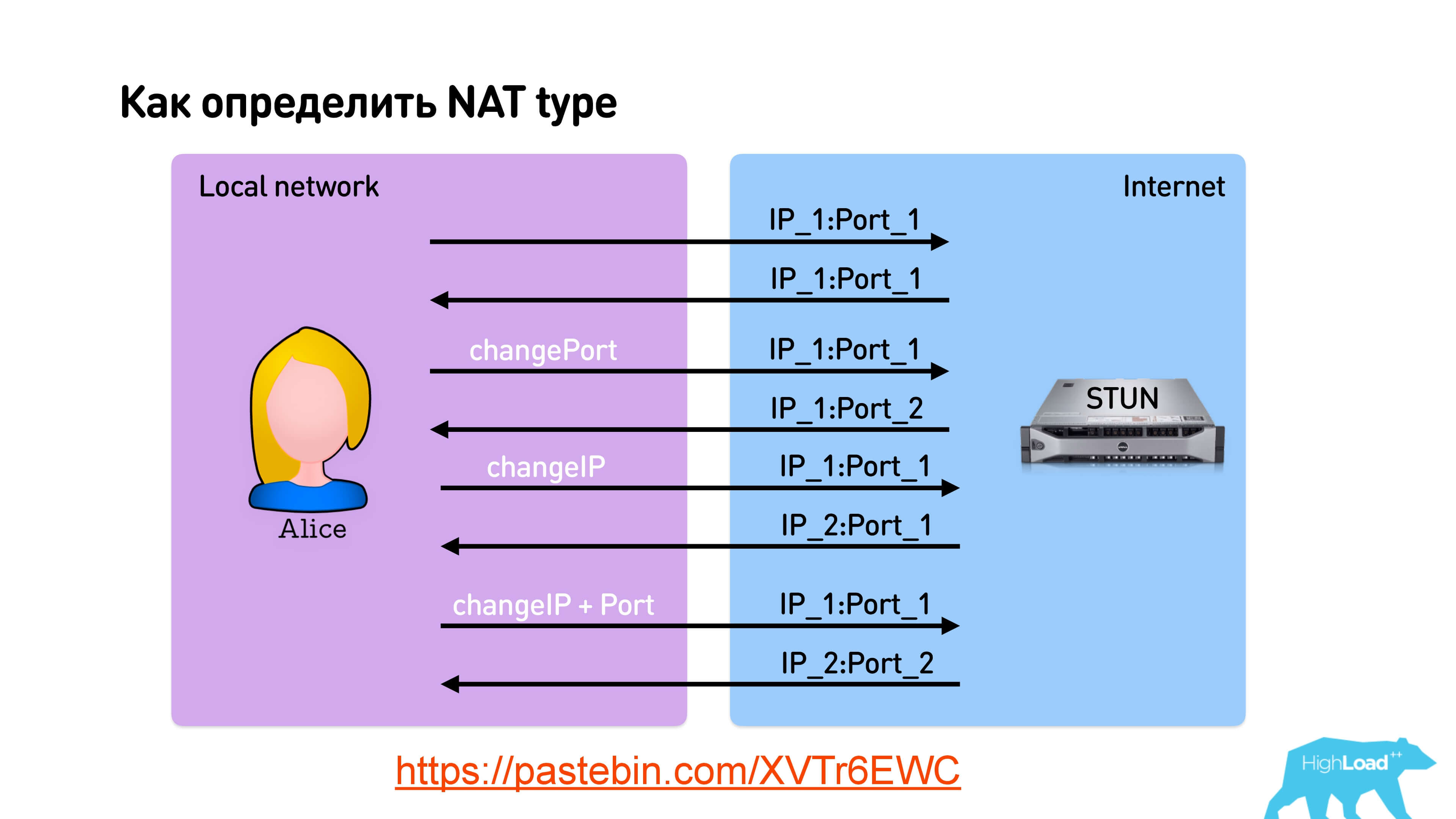

STUN allows you to determine the type of NAT.

Link on the slide

When sending a request, you can ask STUN to respond from the same port, from a different port, from a different IP, or from a different IP and port. This way, with 4 requests to the STUN server, you can determine the NAT type.
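The decision procedure behind those four requests is the classic one from RFC 3489, the original STUN specification. Its CHANGE-REQUEST attribute is what lets the client ask for replies from another IP or port. The newer RFC 5389/5780 describe NAT behavior in mapping/filtering terms instead. Here is a sketch of just the decision logic. It assumes the network tests have already been run and their results collected into a hypothetical `ProbeResults` structure.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Addr = Tuple[str, int]


@dataclass
class ProbeResults:
    local: Addr                       # our own socket address
    test1_mapped: Optional[Addr]      # Test I: plain request (None = no reply)
    test2_replied: bool               # Test II: reply from another IP *and* port
    test1_alt_mapped: Optional[Addr]  # Test I repeated against the server's alternate address
    test3_replied: bool               # Test III: reply from the same IP, another port


def classify(r: ProbeResults) -> str:
    if r.test1_mapped is None:
        return "UDP blocked"
    if r.test1_mapped == r.local:  # no address translation at all
        return "open internet" if r.test2_replied else "symmetric UDP firewall"
    if r.test2_replied:
        return "full cone"
    if r.test1_alt_mapped != r.test1_mapped:  # new destination, new mapping
        return "symmetric"
    return "restricted cone" if r.test3_replied else "port restricted cone"


local, mapped = ("192.168.1.10", 5000), ("203.0.113.5", 40000)
print(classify(ProbeResults(local, mapped, False, mapped, False)))  # port restricted cone
```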

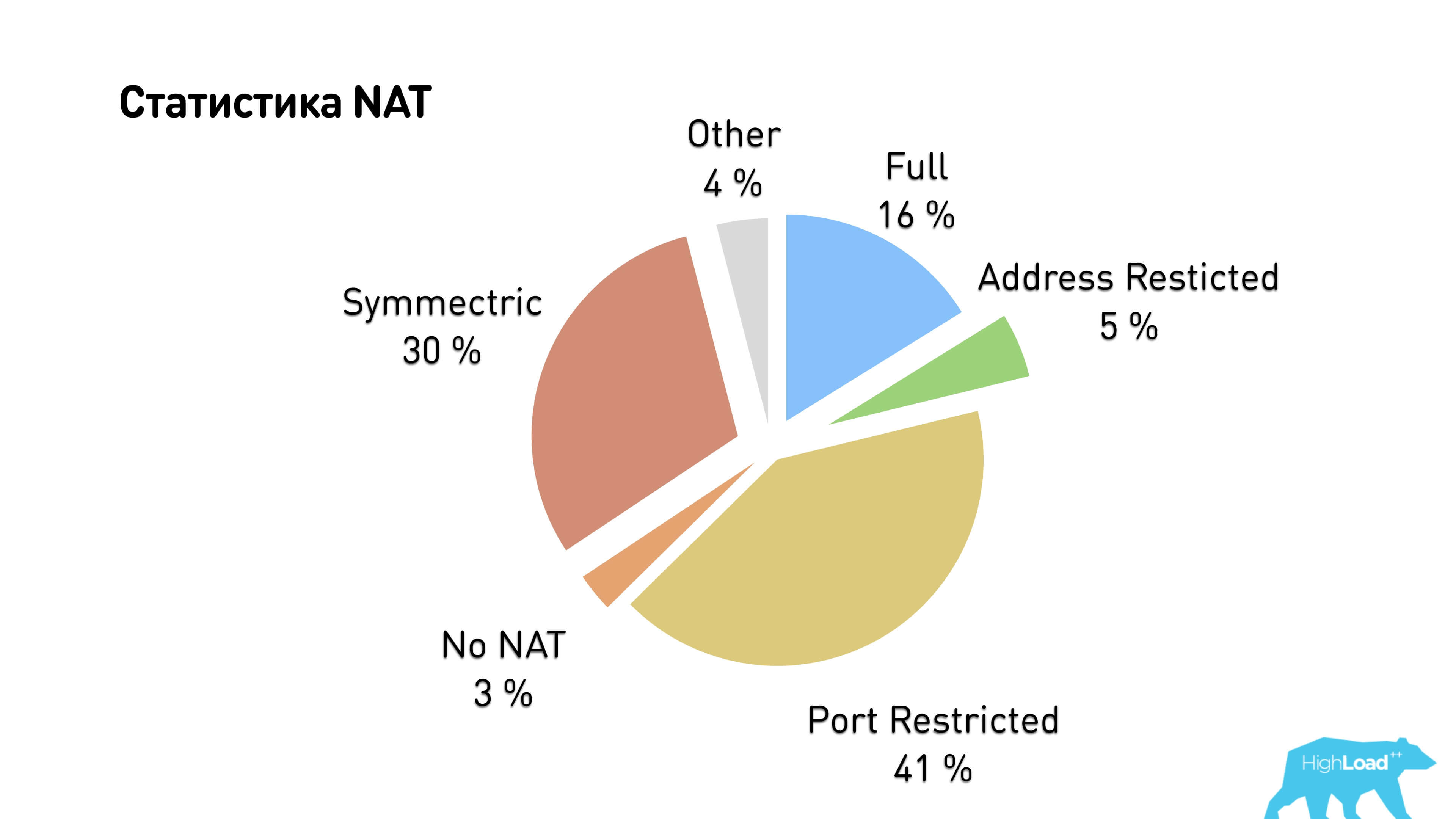

We measured NAT types and found that almost all users are behind either Symmetric NAT or Port restricted cone NAT. It follows that only about a third of users can establish a p2p connection.

You may ask why I'm telling you all this. After all, you could just take Google's STUN servers, plug them into WebRTC, and everything seems to work.

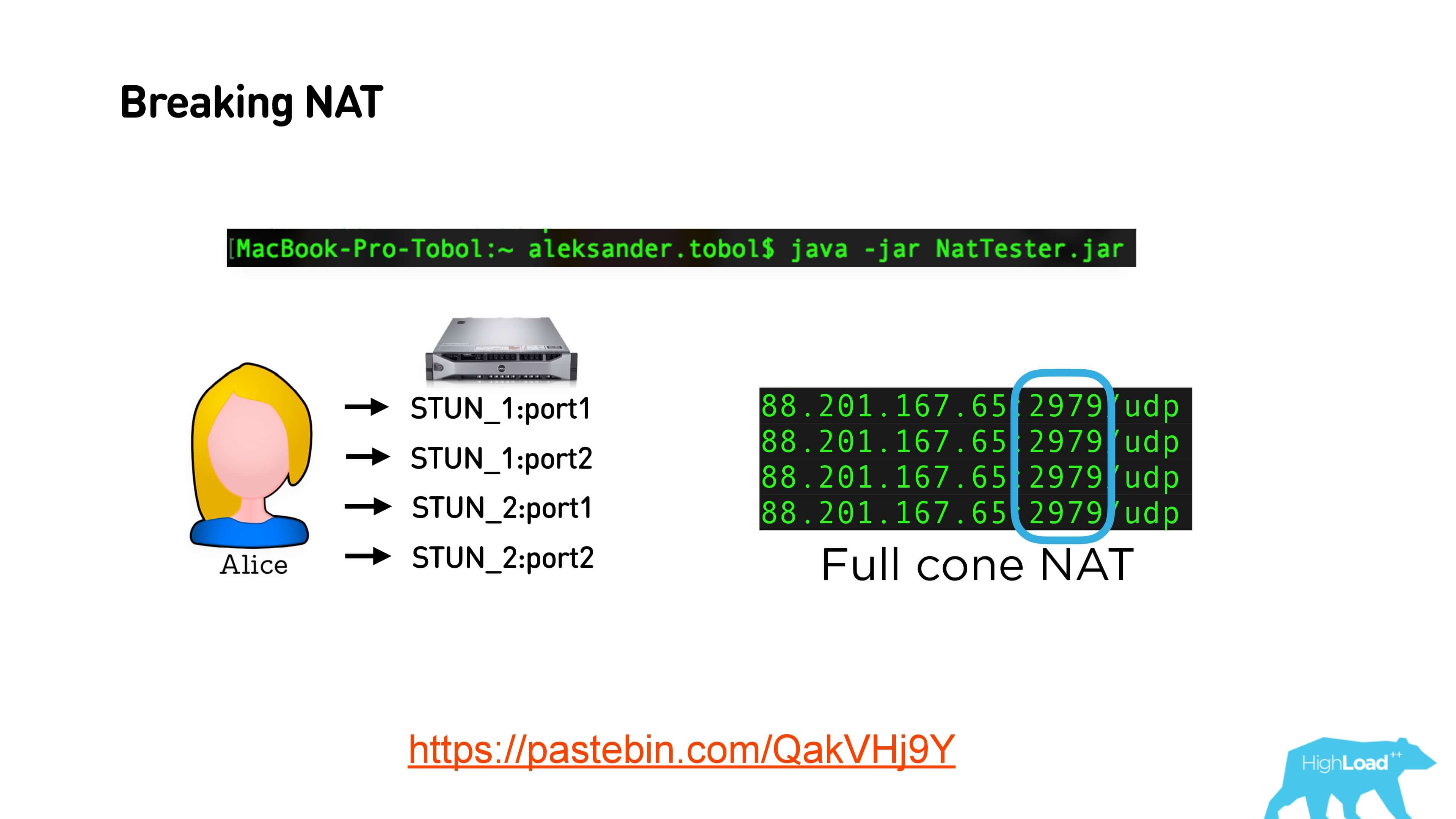

Because you can actually determine the NAT type yourself.

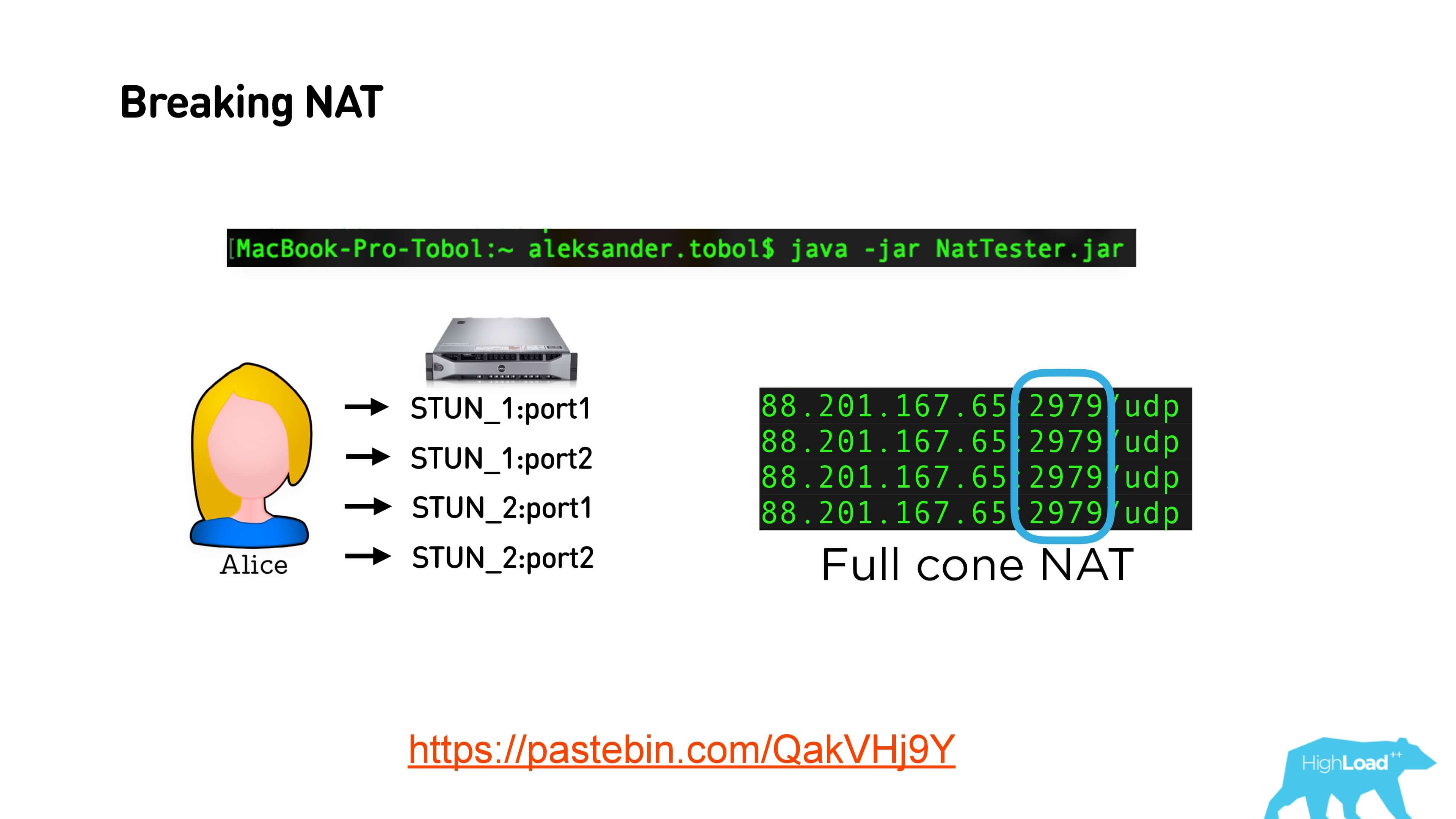

Here is a link to a Java application that does nothing fancy. It just pings different ports and different STUN servers and looks at which external port they see. If you are behind a cone NAT such as Full cone, all the STUN servers will see the same port. With Symmetric NAT, you get a different port for each STUN server.

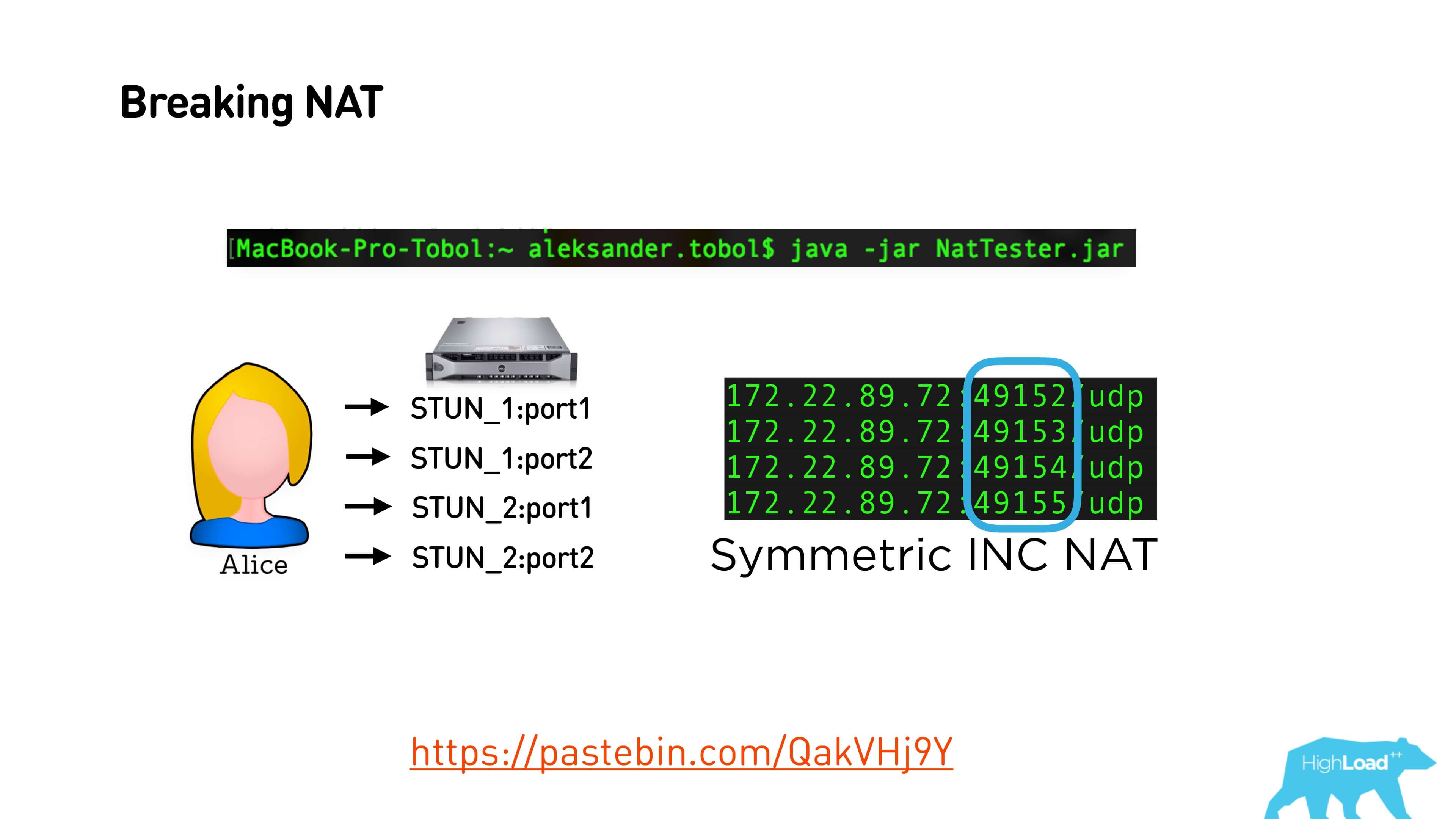

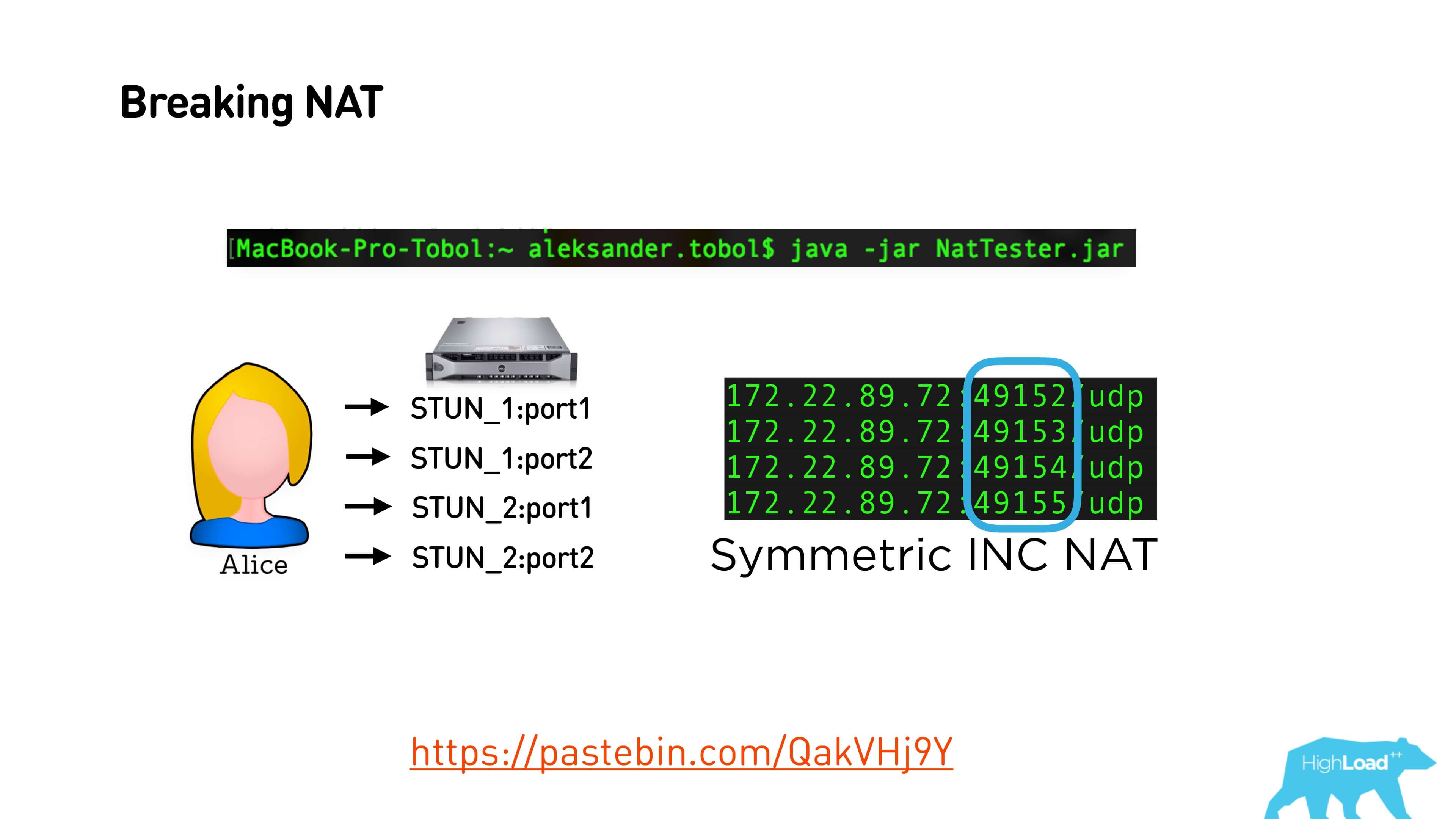

Here's what I get in my office, which is behind Symmetric NAT: completely different ports.

But sometimes there's an interesting pattern: the port number increases by one with each new connection.

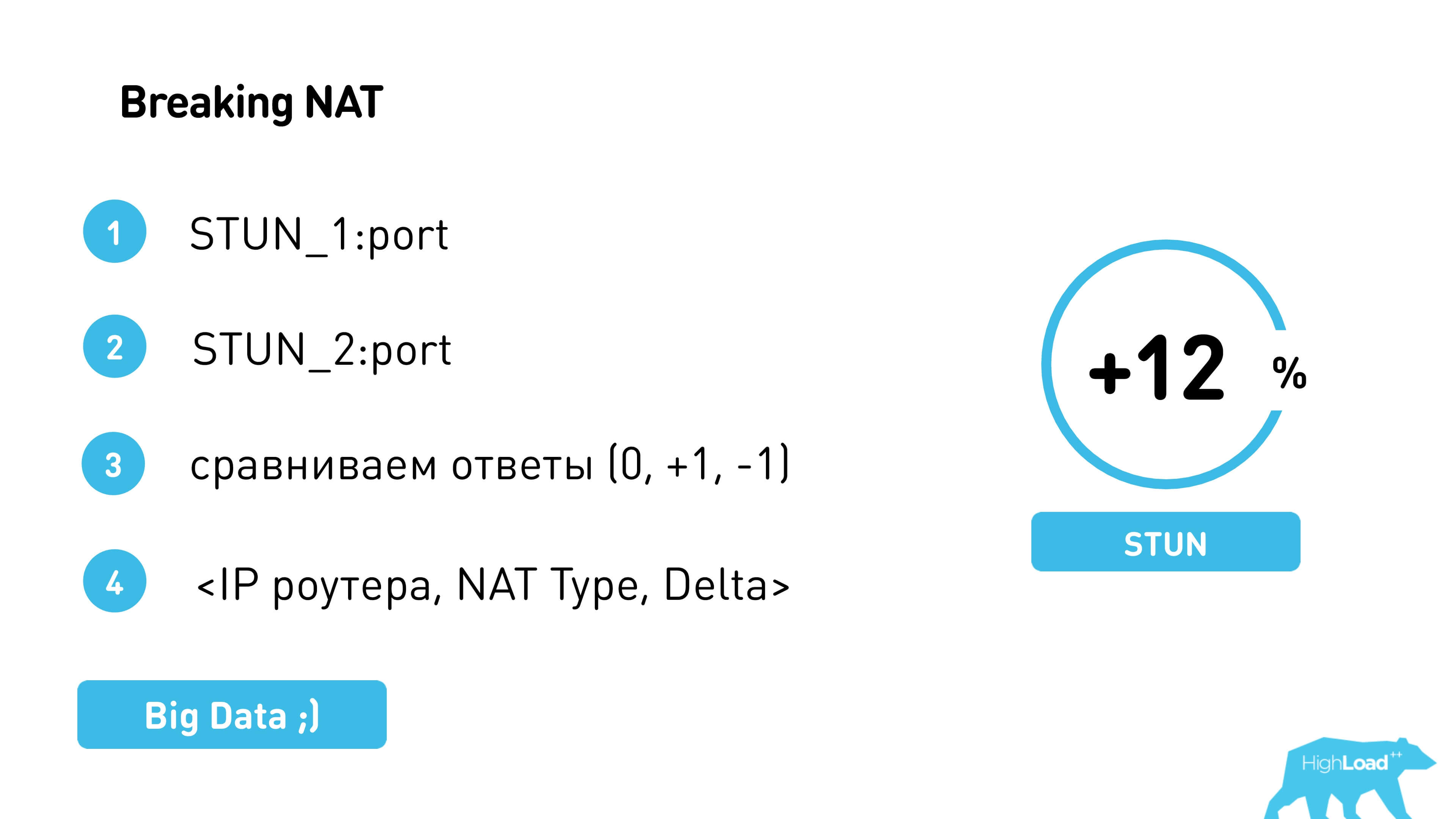

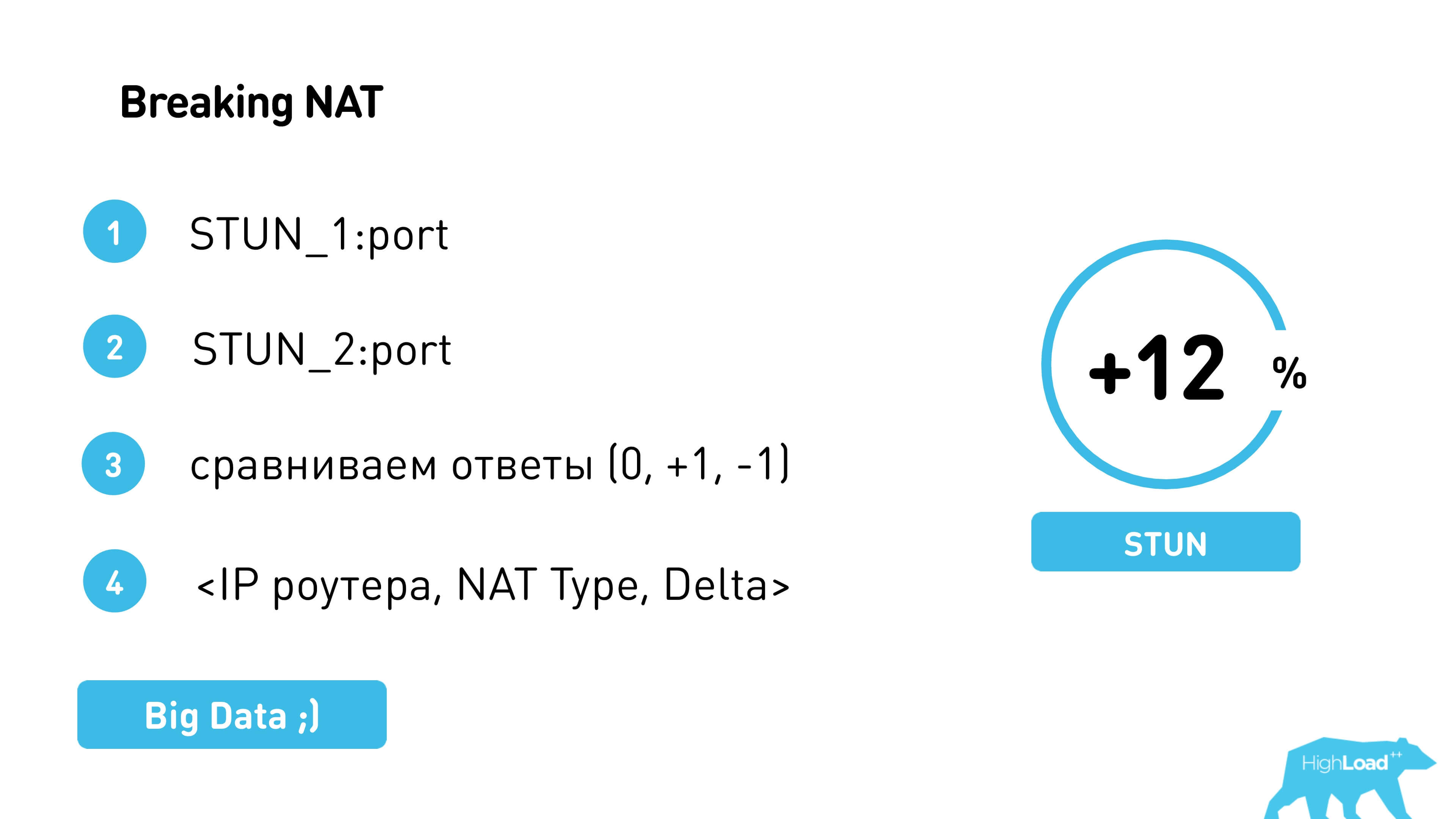

That is, many NATs are configured to increase or decrease the port by a constant step. You can find that constant and use it to punch through Symmetric NAT.

So here's how we punch through NAT. We contact one STUN server, then another, and look at the difference between the ports. We then advertise our port adjusted by that increment or decrement. That is, Alice gives Bob her port already corrected by the constant, expecting that the next mapping will get exactly that port.
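Here is a minimal sketch of the prediction step. It assumes we have the external ports reported by several STUN servers queried one after another. If the consecutive differences are all equal, the sketch treats that as the NAT's allocation step and extrapolates the port the next connection will probably get; otherwise it gives up. Other applications behind the same NAT can consume ports in between, which is what the hypothetical `lookahead` parameter is for. A production version would probably try a small range around the guess. The function is illustrative, not the exact OK algorithm.

```python
from typing import Optional, Sequence


def predict_next_port(observed: Sequence[int], lookahead: int = 1) -> Optional[int]:
    """Guess the external port of the next mapping on a Symmetric NAT.

    observed: external ports seen by successive STUN servers, in order.
    Returns None if no constant step can be detected."""
    if len(observed) < 2:
        return None
    steps = {b - a for a, b in zip(observed, observed[1:])}
    if len(steps) != 1:
        return None  # random allocation: prediction is hopeless
    step = steps.pop()
    if step == 0:
        return None  # same port every time: not Symmetric NAT, nothing to predict
    port = observed[-1] + step * lookahead
    return port if 1 <= port <= 65535 else None


print(predict_next_port([51000, 51001, 51002]))  # 51003
print(predict_next_port([6000, 5998, 5996]))     # 5994
print(predict_next_port([40000, 40517, 39012]))  # None
```

Alice would then put the predicted port, rather than the one STUN saw, into the candidate she sends to Bob.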

This way we moved another 12% of users to peer-to-peer.

In fact, external routers with the same IP sometimes behave the same way. So collect statistics: if Symmetric NAT turns out to be a feature of the provider rather than of the user's Wi-Fi router, the delta can be predicted. It can then be sent to the user right away, so the client uses it without spending time determining it.

CDN Relay or what to do if a p2p connection failed

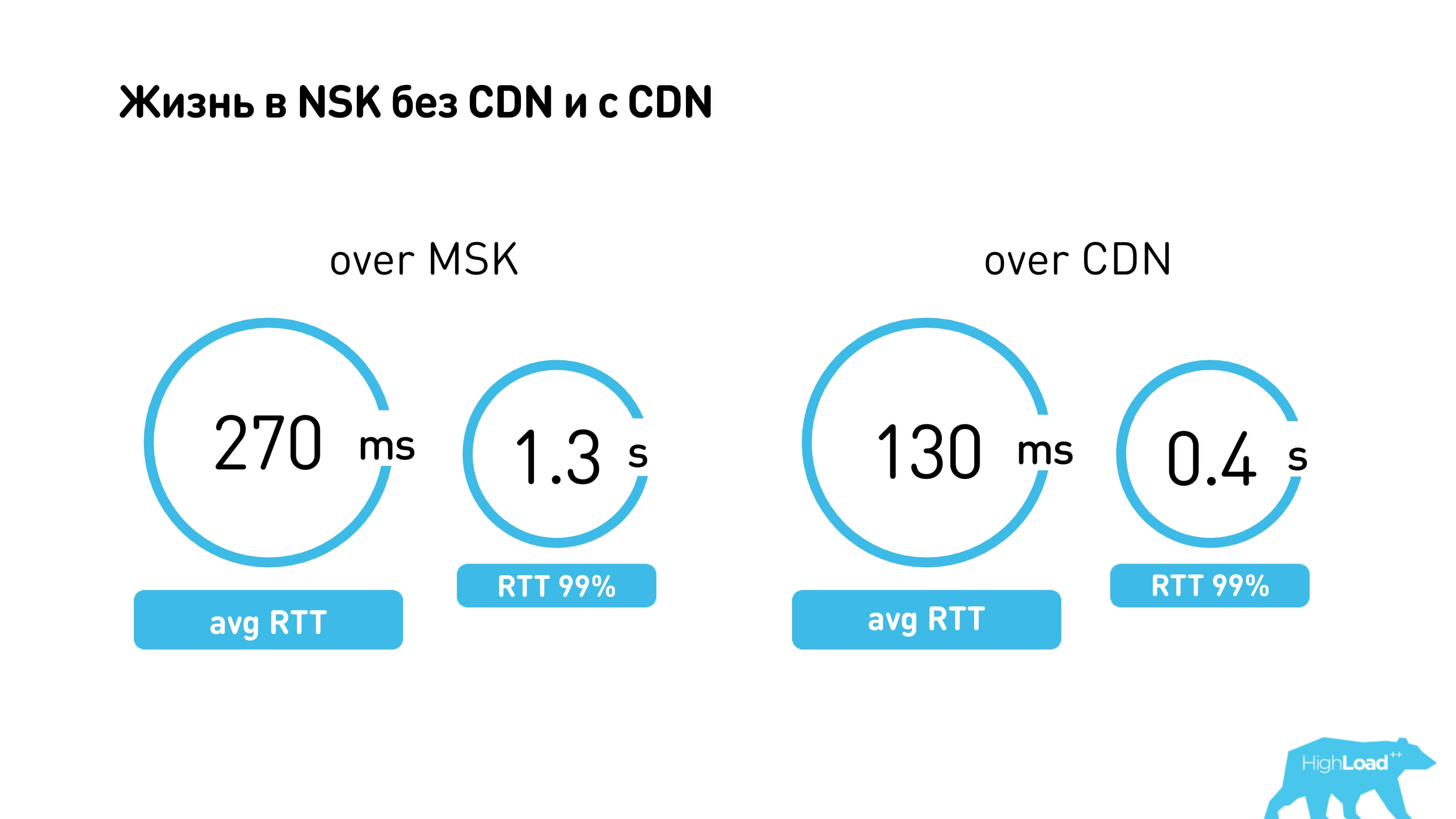

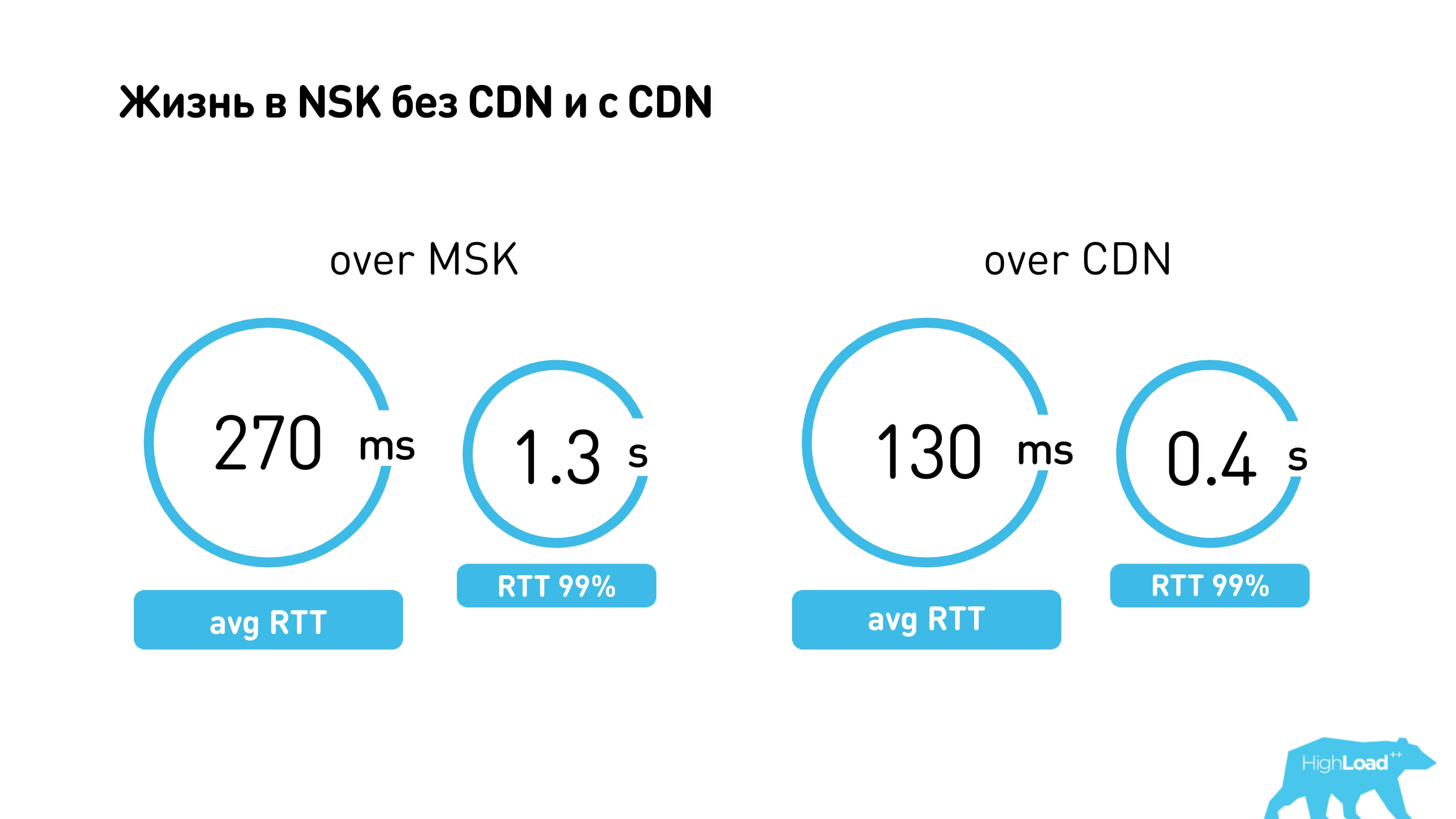

We may still end up using the TURN server and working in relay mode rather than p2p, sending all the traffic through the server. In that case we can also add a CDN, provided, of course, that you have the sites for it. We have our own CDN sites, so for us it was fairly easy. But we needed to decide where it is better to send a user: to a CDN site or, say, over the channel to Moscow. That is not a trivial task, so we did the following:

We have a CDN site in Novosibirsk. If everything goes through Moscow, the 99th percentile RTT is 1.3 seconds. Through the CDN, it is much faster (0.4 seconds).

Is a p2p connection always better than going through a server? An interesting example is two Krasnoyarsk providers, Optibyte and Mobra (the names may have been changed). For some reason, the p2p connection between them is much worse than going through Moscow. Apparently, they don't get along.

We analyzed all such cases by randomly sending users either p2p or through Moscow, collected statistics, and built predictions. We know the statistics need to be kept up to date, so we deliberately route some users differently to check whether anything has changed in the networks.

We measured simple characteristics such as round-trip time, packet loss, and bandwidth. What remains is learning how to compare them correctly.

How do you tell which is better: 2 Mbit/s of bandwidth with 400 ms RTT and 5% packet loss, or 100 kbit/s with 100 ms delay and negligible packet loss?

There is no exact answer, because video call quality is very subjective. So after each call we asked users to rate the quality with stars, and we tuned the constants based on the results. It turned out, for example, that once RTT is below 300 ms it no longer matters, and bitrate becomes more important.
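To illustrate the shape of such a comparison, here is a hypothetical scoring function. Only the 300 ms observation comes from the talk. The loss penalty, the logarithmic bitrate term (diminishing returns), and every constant are made-up assumptions you would fit to your own star ratings.

```python
import math

RTT_FREE_MS = 300  # from the talk: below this, RTT stops mattering


def link_score(bitrate_kbps: float, rtt_ms: float, loss: float) -> float:
    """Higher is better. Hypothetical weights, to be fitted to user ratings.

    loss is a fraction (0.05 means 5%)."""
    bitrate_term = math.log2(max(bitrate_kbps, 1.0))    # doubling bitrate adds a constant
    rtt_penalty = max(0.0, rtt_ms - RTT_FREE_MS) / 100  # only RTT beyond 300 ms counts
    loss_penalty = 20 * loss
    return bitrate_term - rtt_penalty - loss_penalty


a = link_score(2000, 400, 0.05)
b = link_score(100, 100, 0.001)
print(f"{a:.2f} {b:.2f}")  # 8.97 6.62 with these made-up weights
```

With these particular weights the first link wins, but different constants could easily flip the result. That is exactly why the constants have to be fitted to real user ratings.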

Above are the average user ratings on Android and iOS. iOS users more often give one star, and also more often give five stars. I don't know why; perhaps it's specific to the platform. Using these ratings, we tuned the constants until the result seemed good to us.

Back to our plan: we're still discussing the network.

What does connection setup look like?

We pass the STUN and TURN servers to PeerConnection(), and connection setup begins. Alice learns her IP and sends it to signaling, and Bob learns Alice's IP. Alice gets Bob's IP. They exchange packets, perhaps punch through NAT, perhaps fall back to TURN, and talk.

Of the 5 connection setup steps we discussed earlier, we've now covered the servers and where to get them. We've also seen that ICE candidates are the external IP addresses we exchange via signaling. If the clients are on the same Wi-Fi network, you can also try connecting them via their internal IP addresses.
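ICE candidates travel through signaling as text lines. Here is a minimal sketch of parsing one and computing the priority that decides which candidates are preferred. The formula and recommended type preferences come from RFC 8445: host 126, peer-reflexive 110, server-reflexive 100, relay 0. Browsers compute these values themselves. The sample candidate string is made up, and the class and function names are illustrative.

```python
from dataclasses import dataclass

TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


@dataclass
class Candidate:
    foundation: str
    component: int
    transport: str
    priority: int
    address: str
    port: int
    cand_type: str


def parse_candidate(line: str) -> Candidate:
    """Parse 'candidate:<foundation> <component> <transport> <priority> <ip> <port> typ <type> ...'."""
    fields = line.removeprefix("a=").removeprefix("candidate:").split()
    if len(fields) < 8 or fields[6] != "typ":
        raise ValueError(f"malformed candidate: {line!r}")
    foundation, component, transport, priority, address, port = fields[:6]
    return Candidate(foundation, int(component), transport.lower(), int(priority),
                     address, int(port), fields[7])


def ice_priority(cand_type: str, local_pref: int = 65535, component: int = 1) -> int:
    """RFC 8445: 2^24 * type preference + 2^8 * local preference + (256 - component ID)."""
    return (TYPE_PREFERENCE[cand_type] << 24) + (local_pref << 8) + (256 - component)


c = parse_candidate("candidate:842163049 1 udp 1677729535 203.0.113.5 4444 "
                    "typ srflx raddr 192.168.1.10 rport 51000")
print(c.cand_type, c.address, c.port)  # srflx 203.0.113.5 4444
print(ice_priority("host"))            # 2130706431
```

Because host candidates get the highest type preference, two clients on the same Wi-Fi will try their internal addresses first. This is the local-network shortcut mentioned above.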

Now let's turn to video.

Video and audio

WebRTC supports a certain set of video and audio codecs, and you can add your own. Out of the box, video supports H.264 and VP8. VP8 is a software codec, so it consumes a lot of battery. H.264 is not available on all devices (where it is available, it is usually native, i.e. hardware-accelerated), so VP8 gets priority by default.

Codec negotiation happens inside SDP (Session Description Protocol). One client sends the list of its codecs, the other replies with its own in order of priority, and they agree on which codecs to use. If you want, you can change the priority of VP8 and H.264 and thereby save battery on devices where H.264 is native. Here is an example of how this can be done. We did it, and users did not seem to complain about quality, while battery drain was much lower.
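One common (if brittle) way to change codec priority is "SDP munging": reordering the payload types on the `m=video` line before the offer is applied, since the order there expresses preference. The sketch below assumes a single video section and CRLF line endings, and the function name is illustrative. It is not necessarily how OK does it. In browsers, `RTCRtpTransceiver.setCodecPreferences()` is the standard, non-munging alternative.

```python
def prefer_video_codec(sdp: str, codec: str) -> str:
    """Move the payload types of `codec` to the front of the m=video line."""
    lines = sdp.split("\r\n")
    video_line, preferred, in_video = None, [], False
    for i, line in enumerate(lines):
        if line.startswith("m="):
            in_video = line.startswith("m=video")
            if in_video:
                video_line = i
        elif in_video and line.startswith("a=rtpmap:"):
            # a=rtpmap:<payload type> <encoding>/<clock rate>[/<channels>]
            pt, encoding = line[len("a=rtpmap:"):].split(" ", 1)
            if encoding.split("/")[0].lower() == codec.lower():
                preferred.append(pt)
    if video_line is None or not preferred:
        return sdp  # nothing to reorder
    media, port, proto, *payloads = lines[video_line].split(" ")
    reordered = [pt for pt in payloads if pt in preferred] + \
                [pt for pt in payloads if pt not in preferred]
    lines[video_line] = " ".join([media, port, proto, *reordered])
    return "\r\n".join(lines)


sample = "\r\n".join([
    "v=0", "o=- 1 2 IN IP4 127.0.0.1", "s=-", "t=0 0",
    "m=audio 9 UDP/TLS/RTP/SAVPF 111 0",
    "a=rtpmap:111 opus/48000/2", "a=rtpmap:0 PCMU/8000",
    "m=video 9 UDP/TLS/RTP/SAVPF 96 97 98 99",
    "a=rtpmap:96 VP8/90000", "a=rtpmap:97 rtx/90000",
    "a=rtpmap:98 H264/90000", "a=rtpmap:99 rtx/90000",
])
print(prefer_video_codec(sample, "H264").split("\r\n")[7])
# m=video 9 UDP/TLS/RTP/SAVPF 98 96 97 99
```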

For audio, WebRTC has Opus and G.711. Opus usually just works, and you don't need to do anything with it.

Below are temperature measurements after 10 minutes of use.

Obviously, we tested different devices. This example is an iPhone. On it, the OK app uses the least battery, judging by the fact that the device temperature is the lowest.

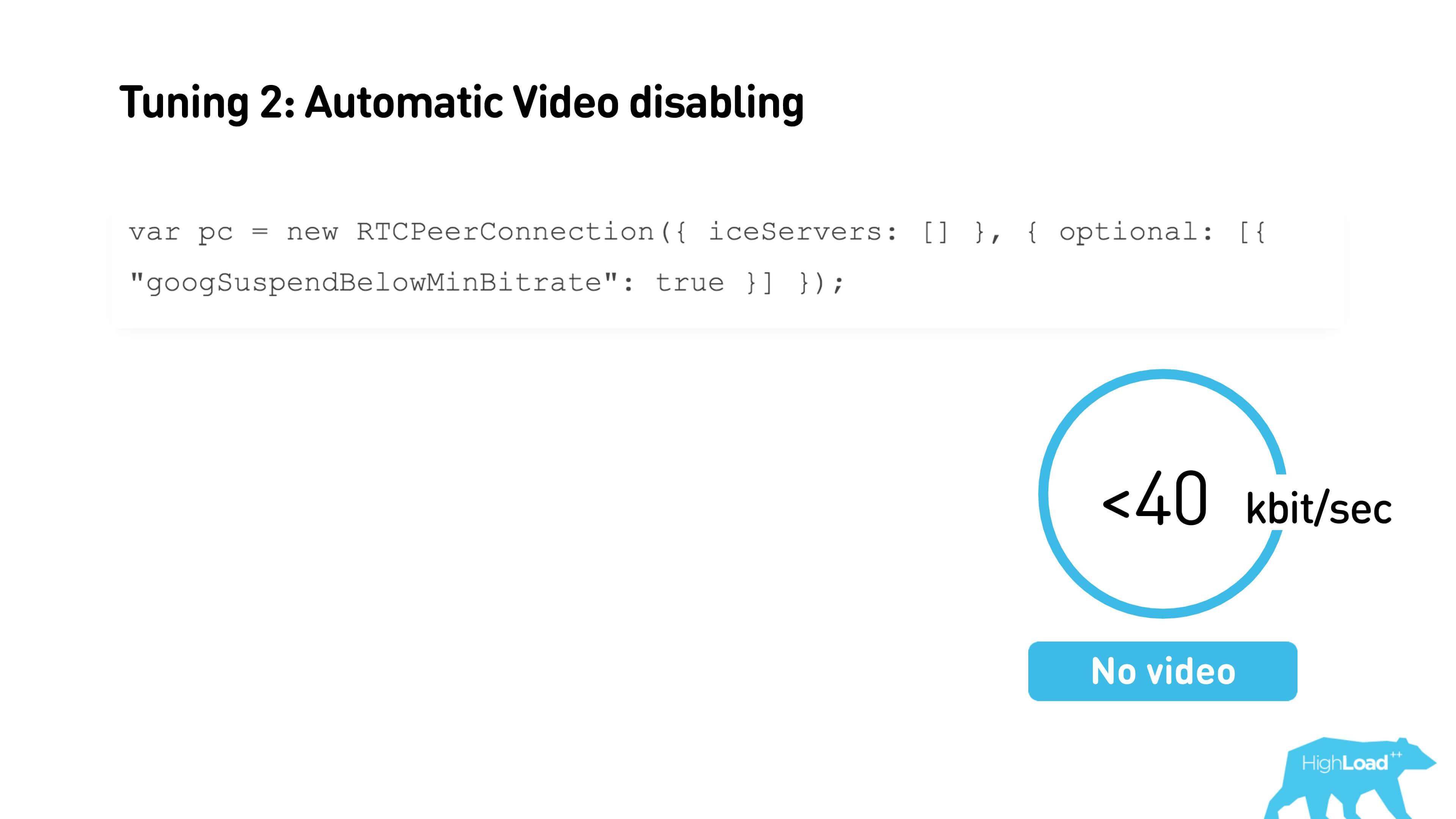

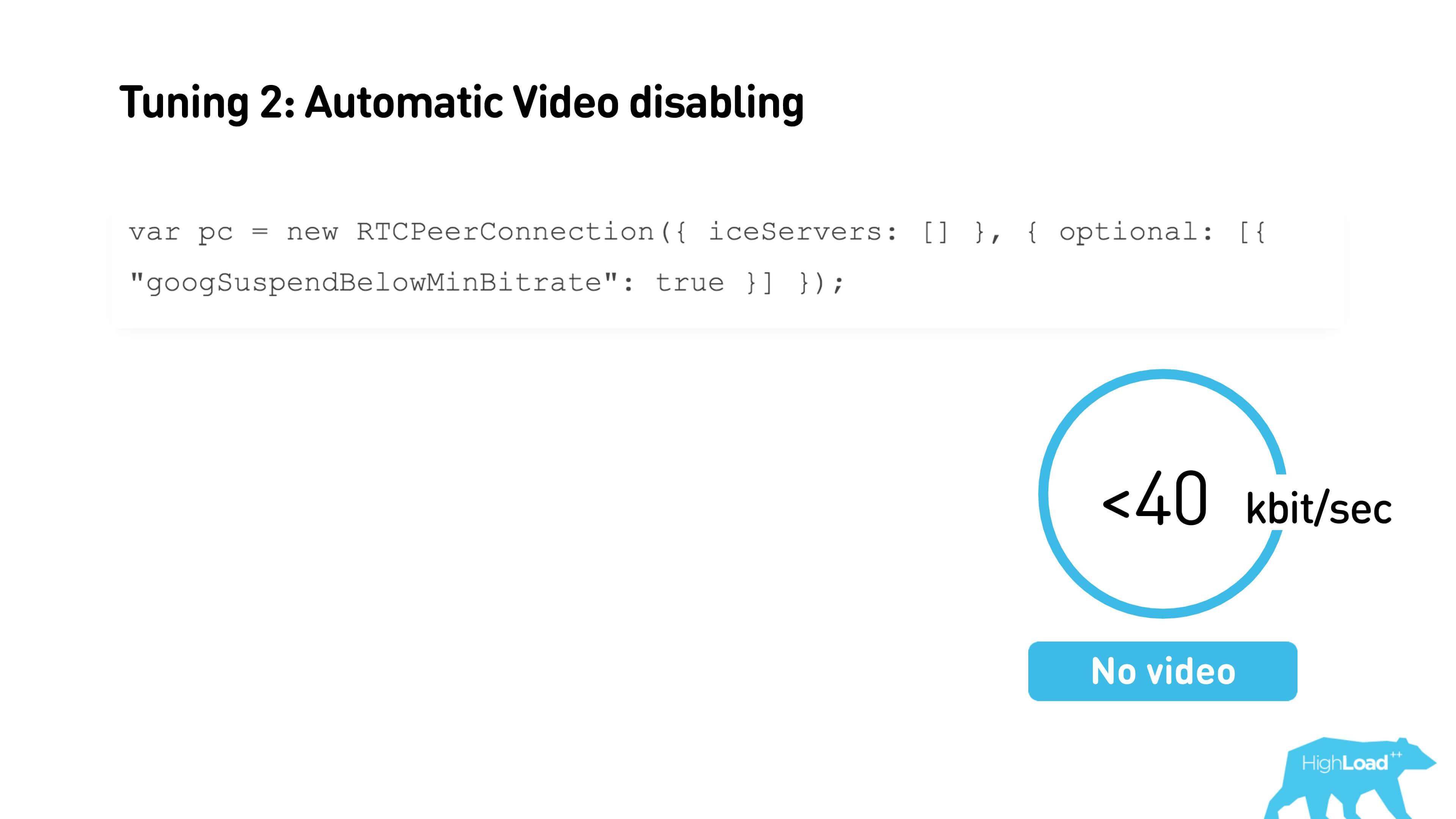

The second thing you can enable if you use WebRTC is automatically turning off video when the connection is very bad.

If you have less than 40 kbit/s, video is turned off. You just need to set a flag when creating the connection, and the threshold can be configured through the API. You can also set the minimum, maximum, and starting bitrate.

This is very useful. If you know in advance what bitrate to expect when setting up a connection, you can pass it in, and the call will start at that bitrate without having to adapt. Also, if you know your channel often has packet loss or bandwidth drops, you can cap the maximum as well.
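The real logic lives inside the WebRTC library. The sketch below only illustrates the behavior just described, using a hypothetical controller:

- The target bitrate starts from a configured value instead of ramping up.
- Bandwidth estimates are clamped to [min, max].
- Video is suspended when the estimate drops below 40 kbit/s, the threshold from the text.

The resume threshold, a hysteresis that keeps video from flapping on and off, and all names and default values are assumptions rather than WebRTC's API.

```python
from dataclasses import dataclass


@dataclass
class VideoBitrateController:
    min_kbps: int = 150
    start_kbps: int = 600
    max_kbps: int = 1500
    suspend_below_kbps: int = 40  # from the talk
    resume_above_kbps: int = 60   # assumption: hysteresis to avoid flapping

    def __post_init__(self) -> None:
        self.target_kbps = self.start_kbps  # start from the known value, no ramp-up
        self.video_on = True

    def on_estimate(self, available_kbps: float) -> None:
        """Feed a new bandwidth estimate (e.g. from congestion control)."""
        if self.video_on and available_kbps < self.suspend_below_kbps:
            self.video_on = False  # fall back to audio only
        elif not self.video_on and available_kbps > self.resume_above_kbps:
            self.video_on = True
        self.target_kbps = int(min(self.max_kbps, max(self.min_kbps, available_kbps)))


ctl = VideoBitrateController()
for estimate in [800, 2500, 35, 50, 70]:
    ctl.on_estimate(estimate)
    print(estimate, ctl.video_on, ctl.target_kbps)
```

Capping `max_kbps` on a channel known to suffer bandwidth drops trades sharpness for stability, which matches the WhatsApp behavior described next.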

WhatsApp has very blurry video but low latency, because it aggressively caps the bitrate from above.

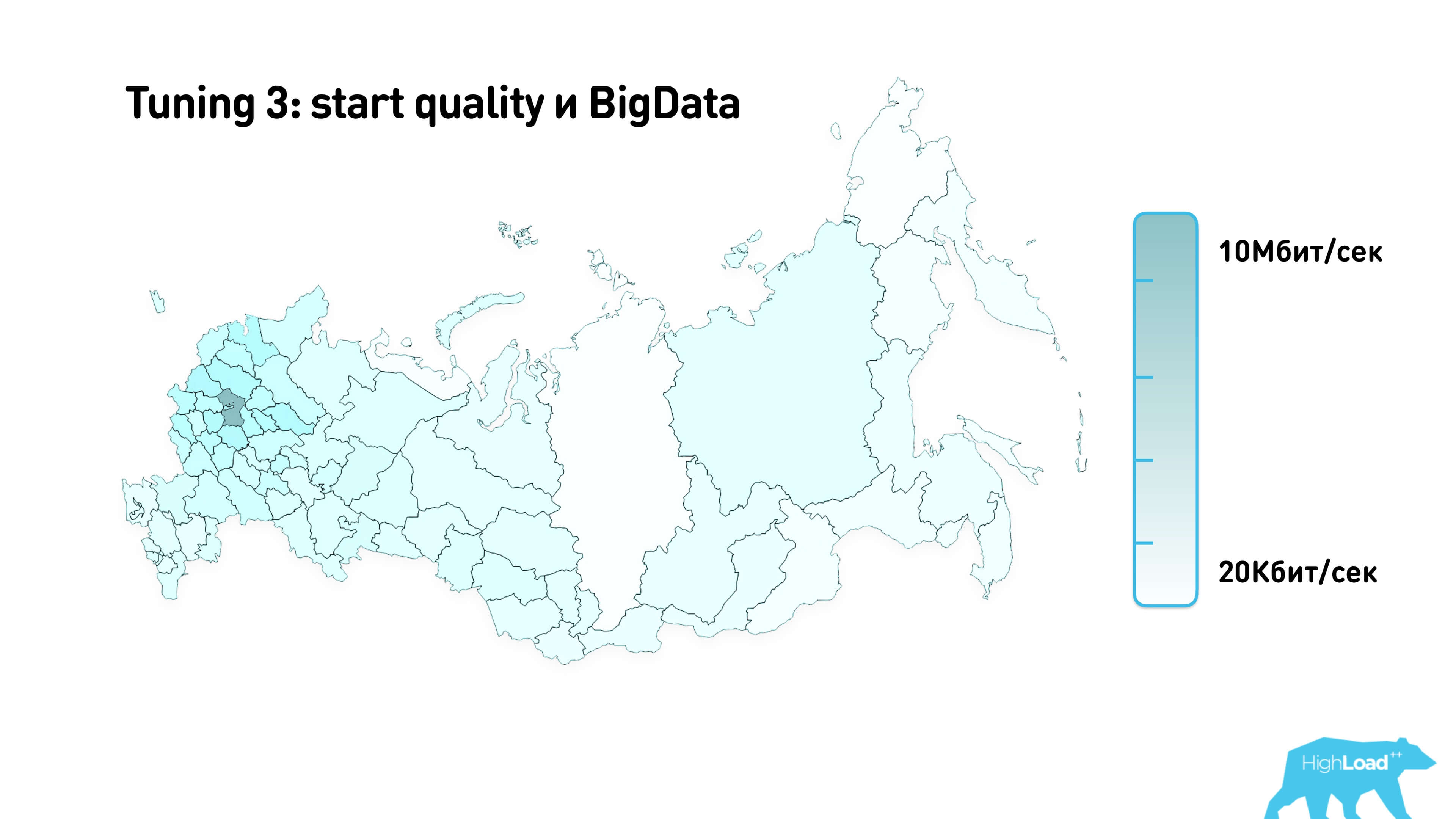

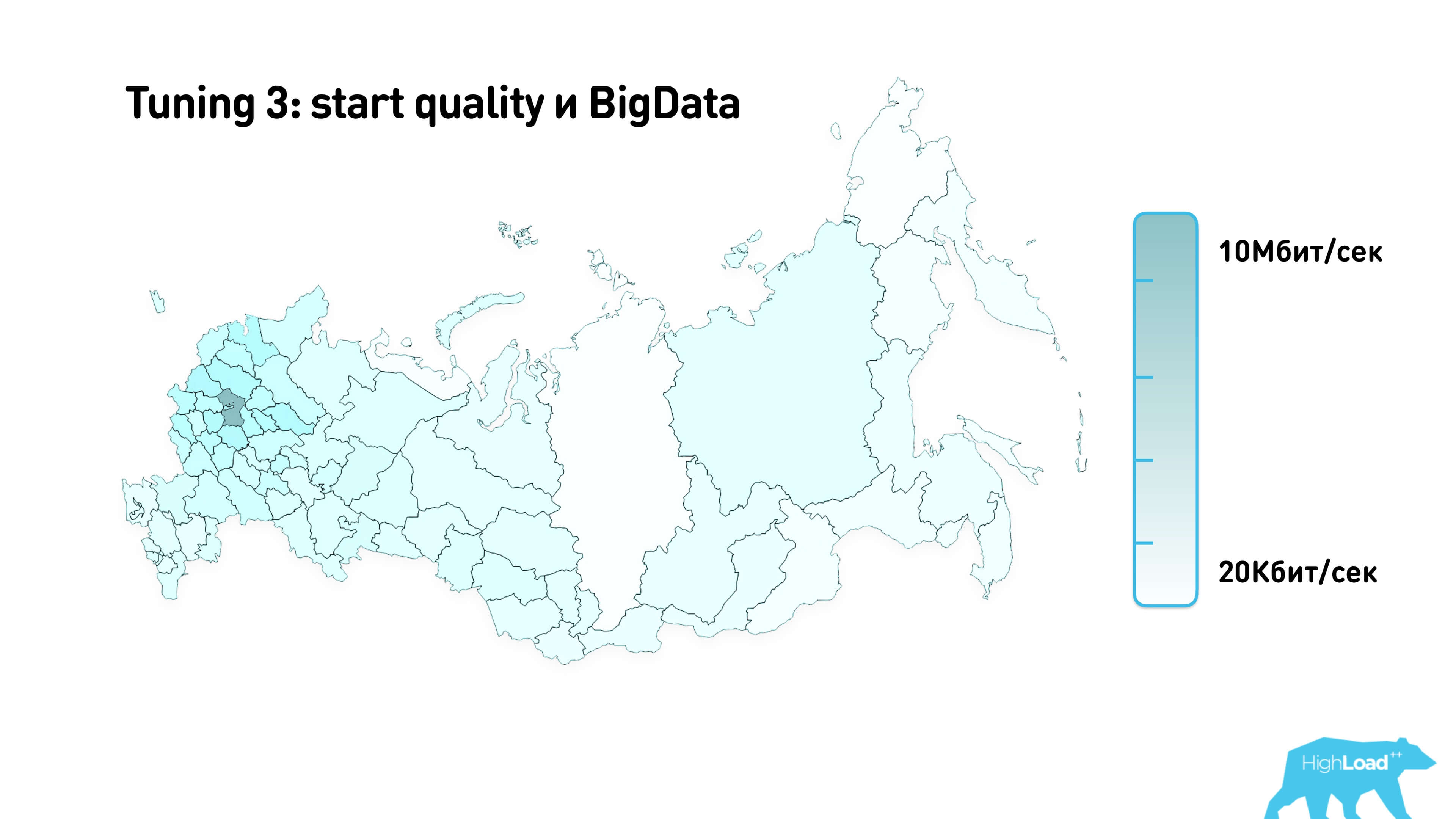

We collected statistics using MaxMind and plotted them on a map.

This is the approximate starting quality we use for calls in different regions of Russia.

Signaling

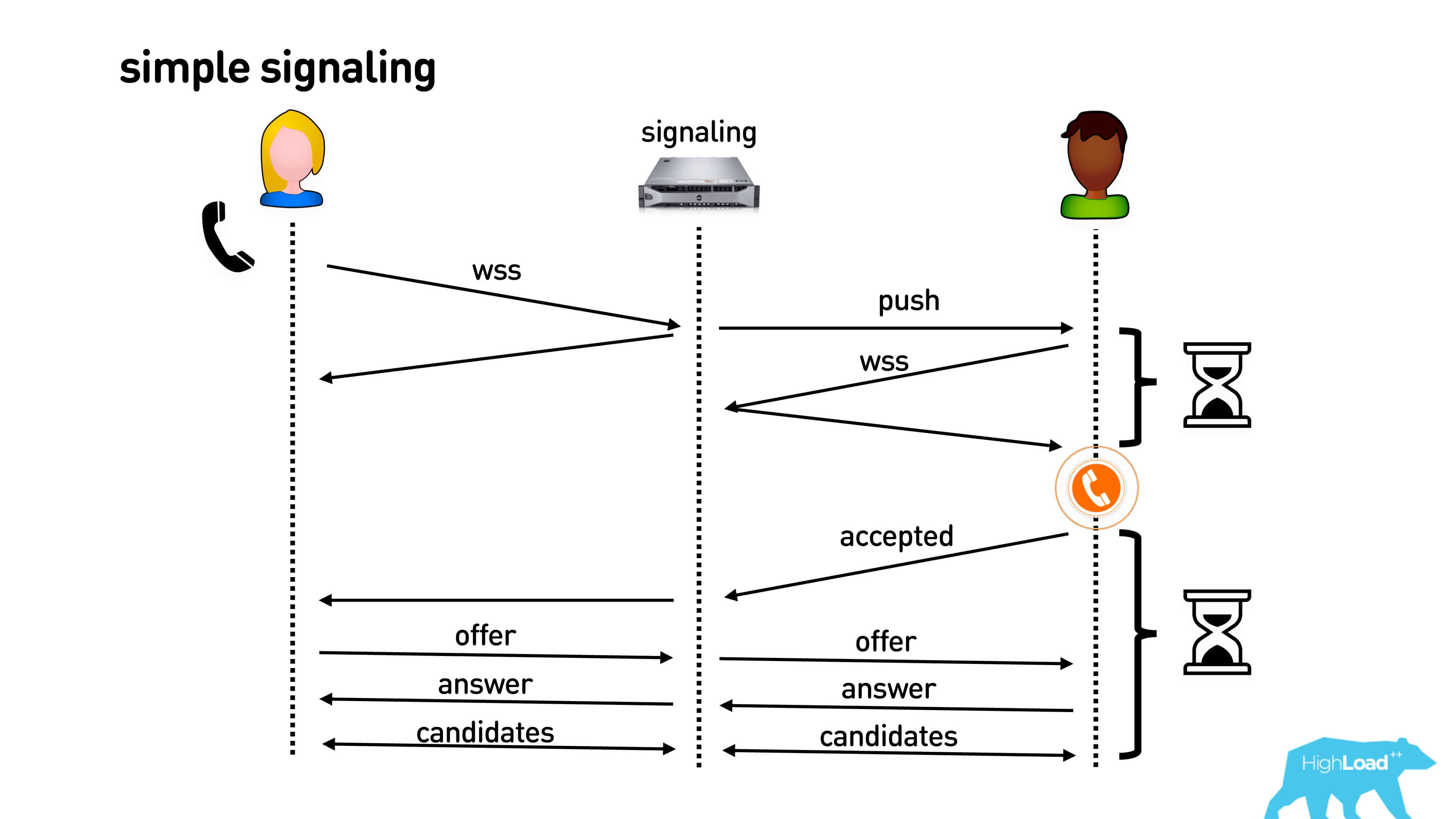

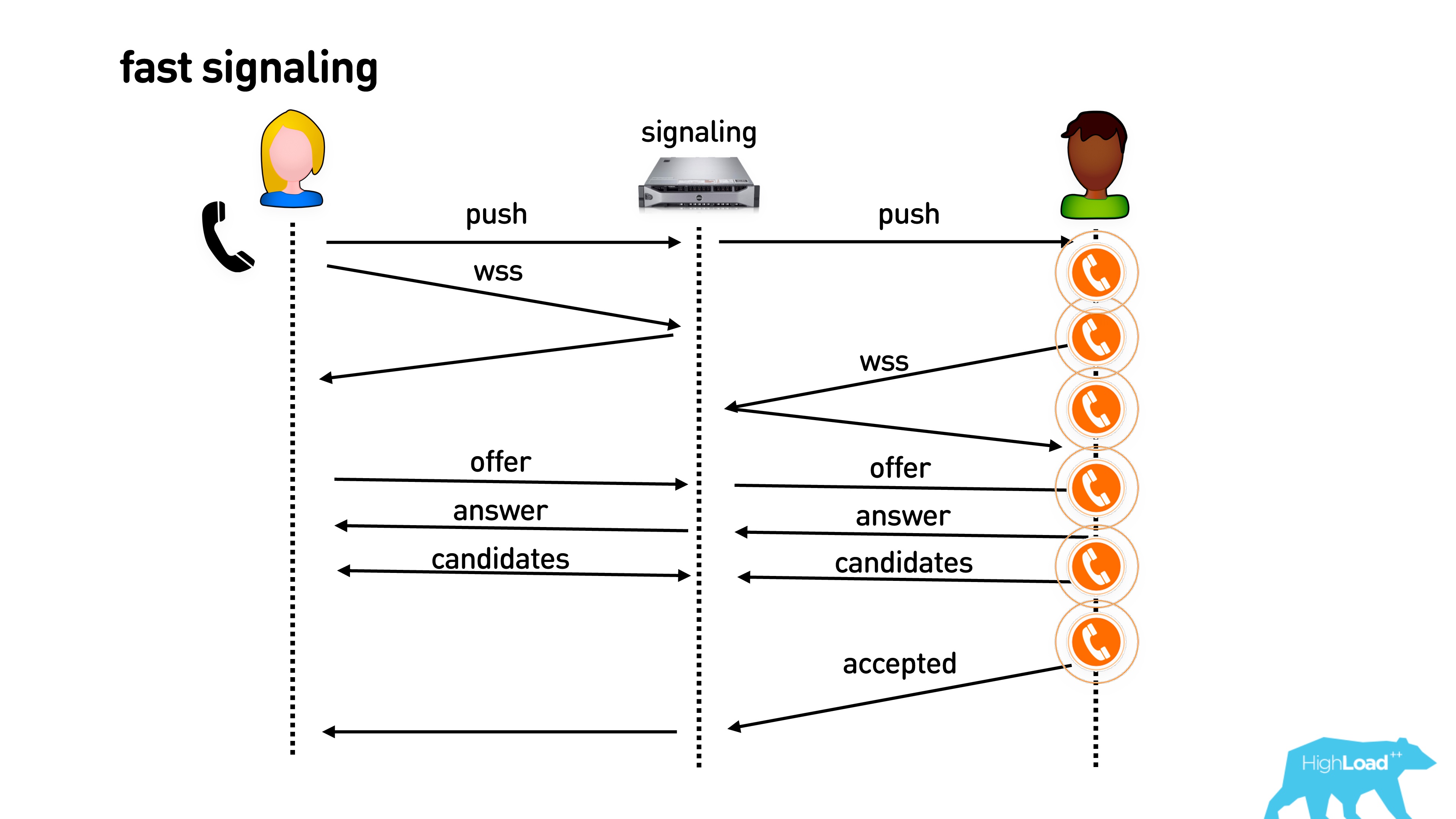

You will most likely have to write this part yourself if you want to build calls, and it has all sorts of pitfalls. Let's recall what it looks like.

There is an application with signaling that connects the parties and exchanges SDP. Below it, SDP serves as the interface to WebRTC.

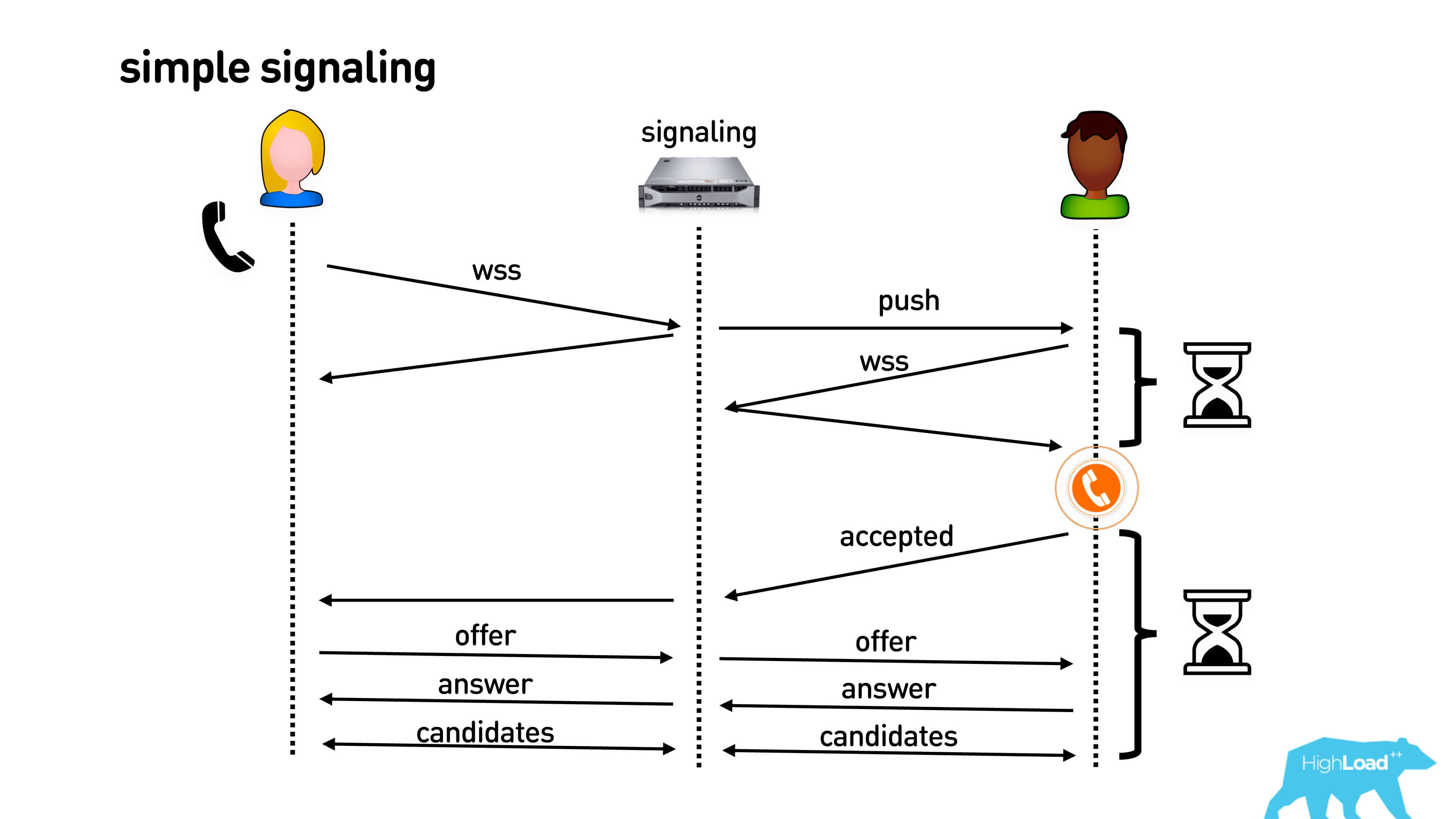

This is simple signaling:

Alice calls Bob. She connects, for example, via a WebSocket. Bob gets a push on his mobile phone, in the browser, or over some other open connection. He connects via WebSocket, and only then does the phone in his pocket start ringing. Bob answers, and Alice sends him her codecs and the other WebRTC features she supports. Bob replies in the same way, and then they exchange the candidates they see. Hooray, a call!

It all takes quite a while. First, Bob's phone won't ring until the WebSocket connection is set up, the push arrives, and so on. Alice waits all this time, wondering where Bob is and why he doesn't answer. After he answers, the rest takes seconds: even on good connections it can be 3-5 seconds, and on bad ones up to 10.

We have to do something about it! You might say this can all be done very simply.

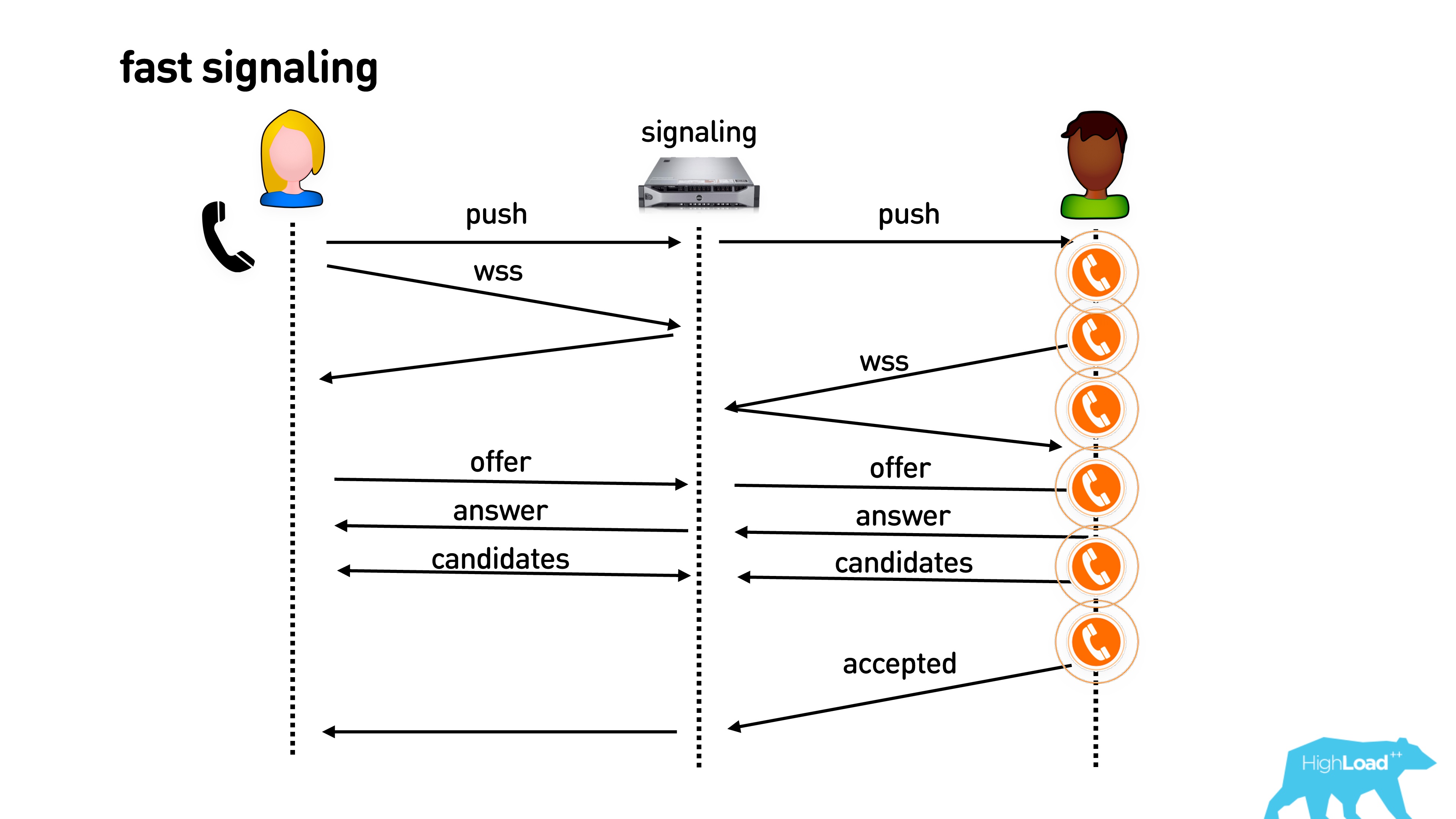

If you already have an open connection to your application, you can immediately send a push to set up the connection. The device then connects to the right signaling server and starts ringing right away.

Then there's another optimization. Even while the phone is still ringing and the user hasn't answered, the devices can already exchange supported codecs and external IP addresses. They can start sending empty video packets and generally have everything warmed up. As soon as the user answers, everything is great.

We did that, and it seemed great. But no.

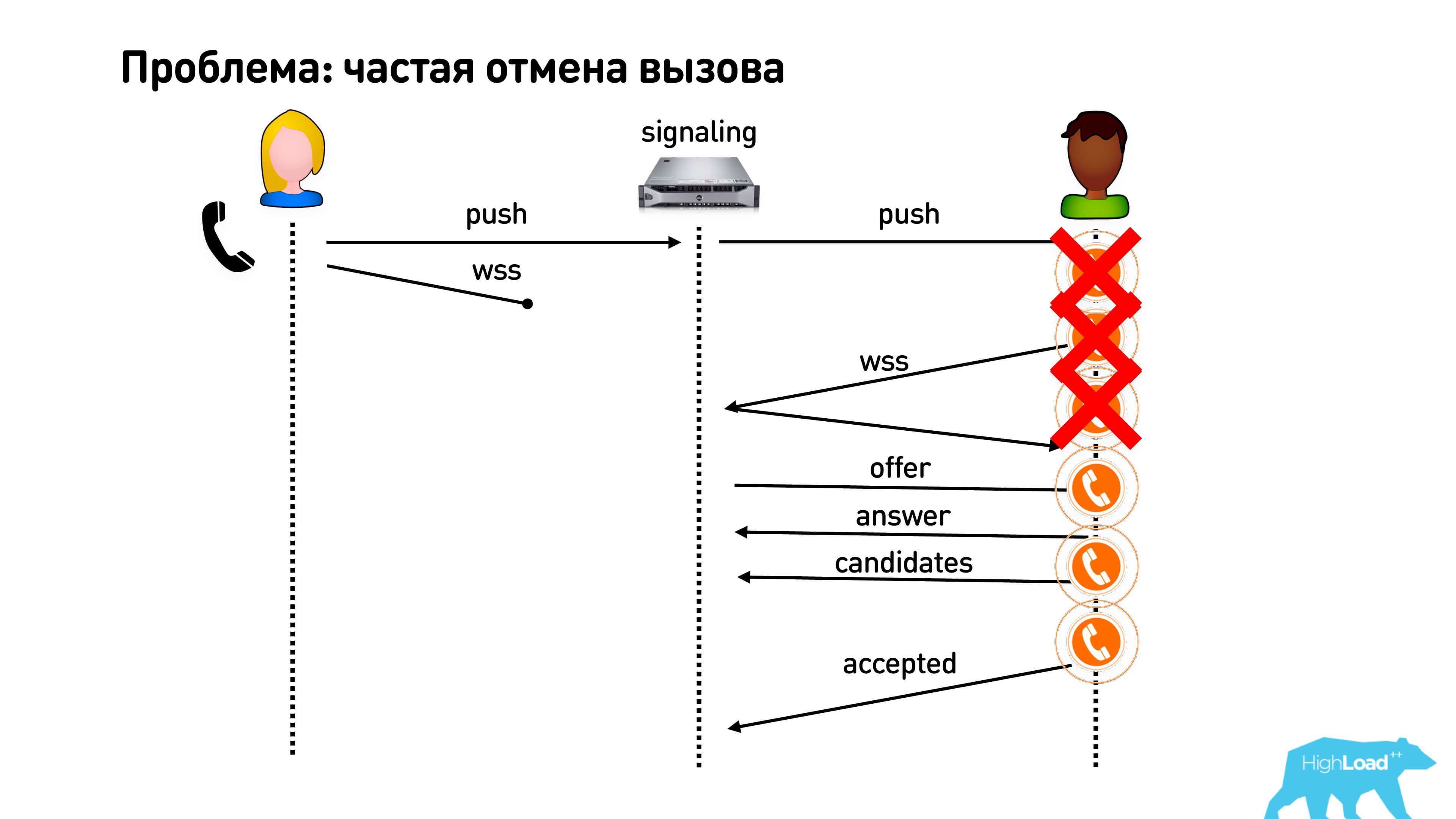

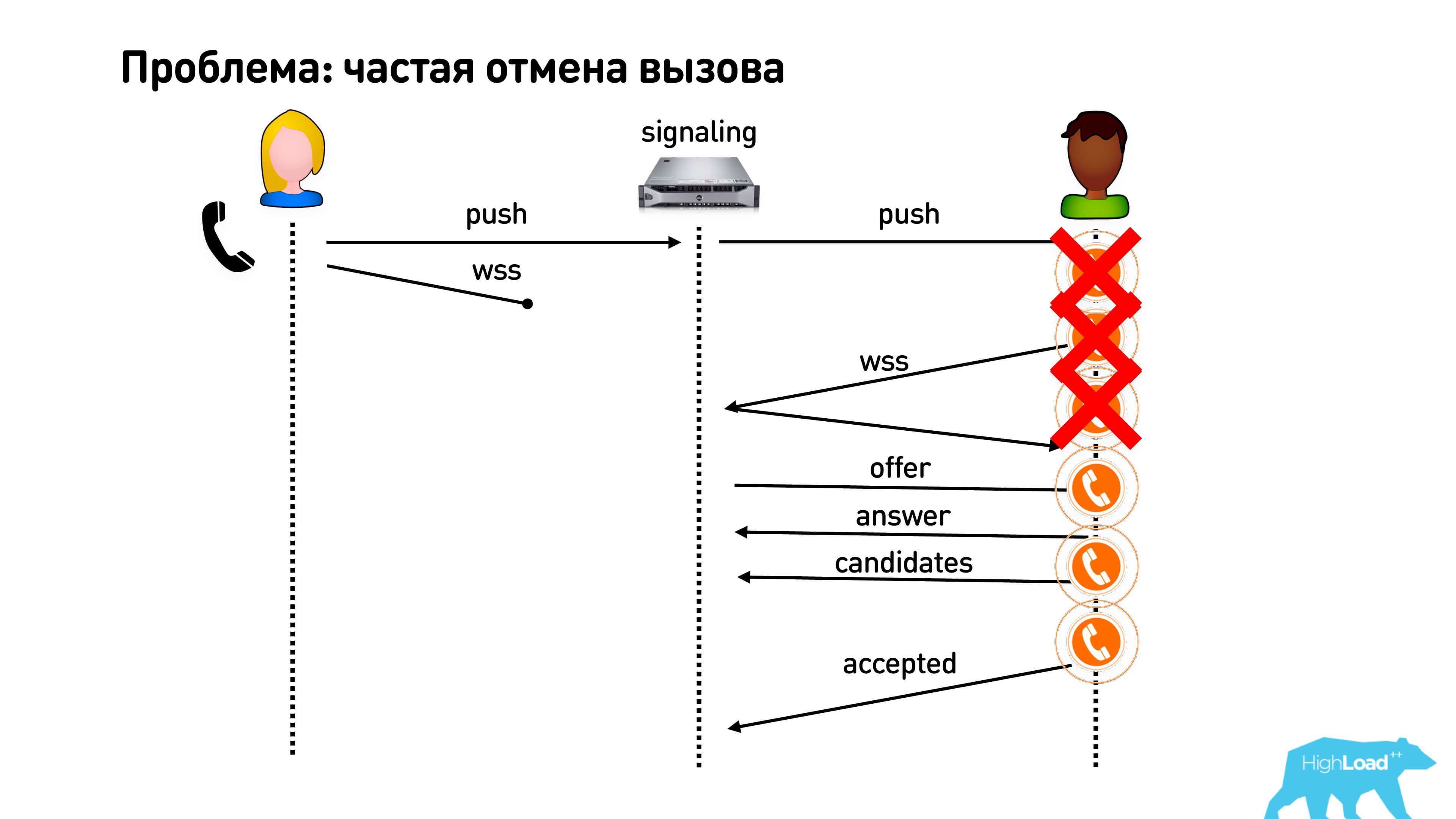

The first problem is that users often cancel the call: they tap "Call" and immediately cancel. So the push for the call goes out, and then the caller disappears (loses Internet, or something else happens). Meanwhile, someone's phone is ringing; they answer, and nobody is waiting on the other end. So our primitive optimization of starting to ring as soon as possible doesn't really work.

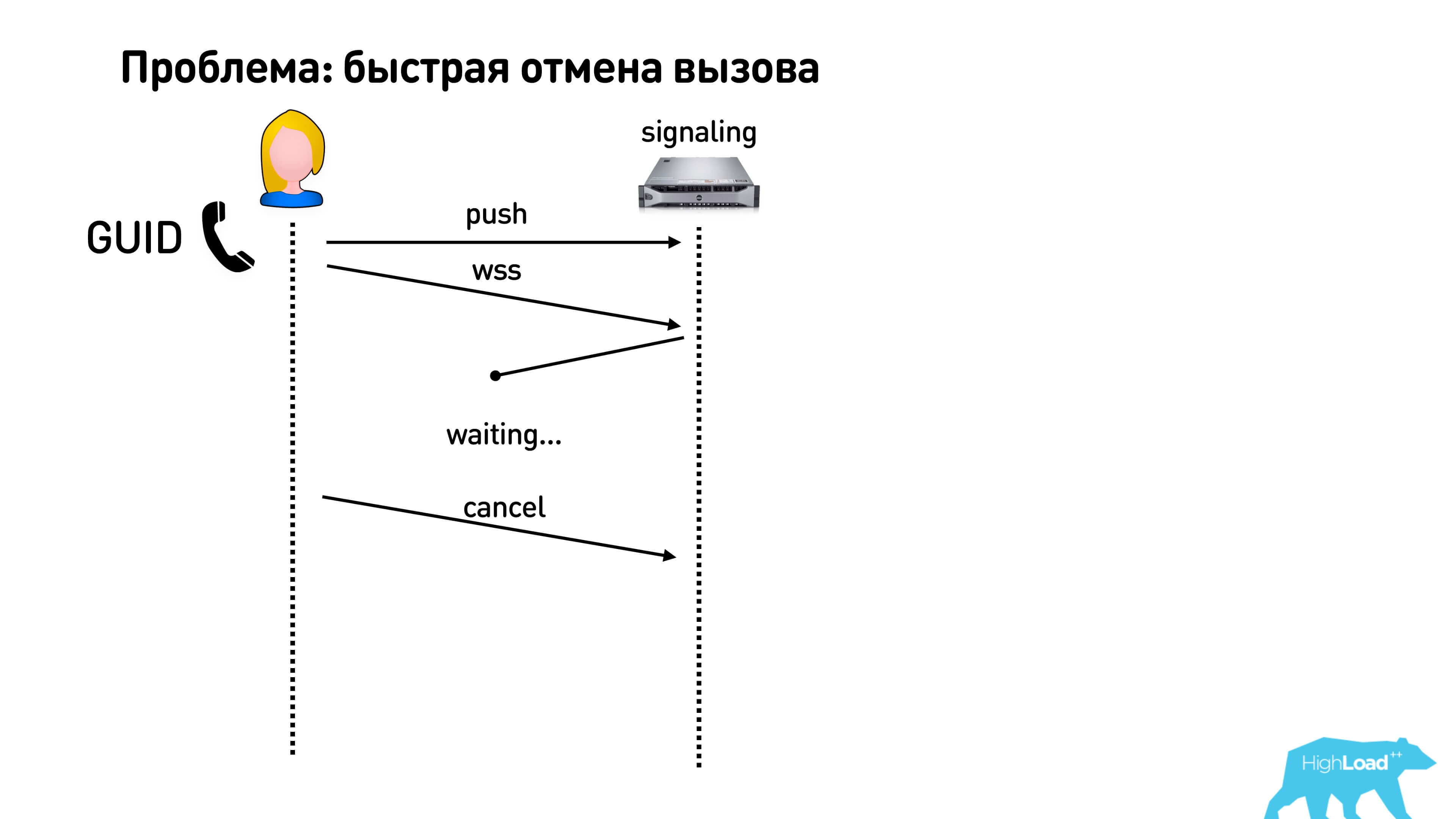

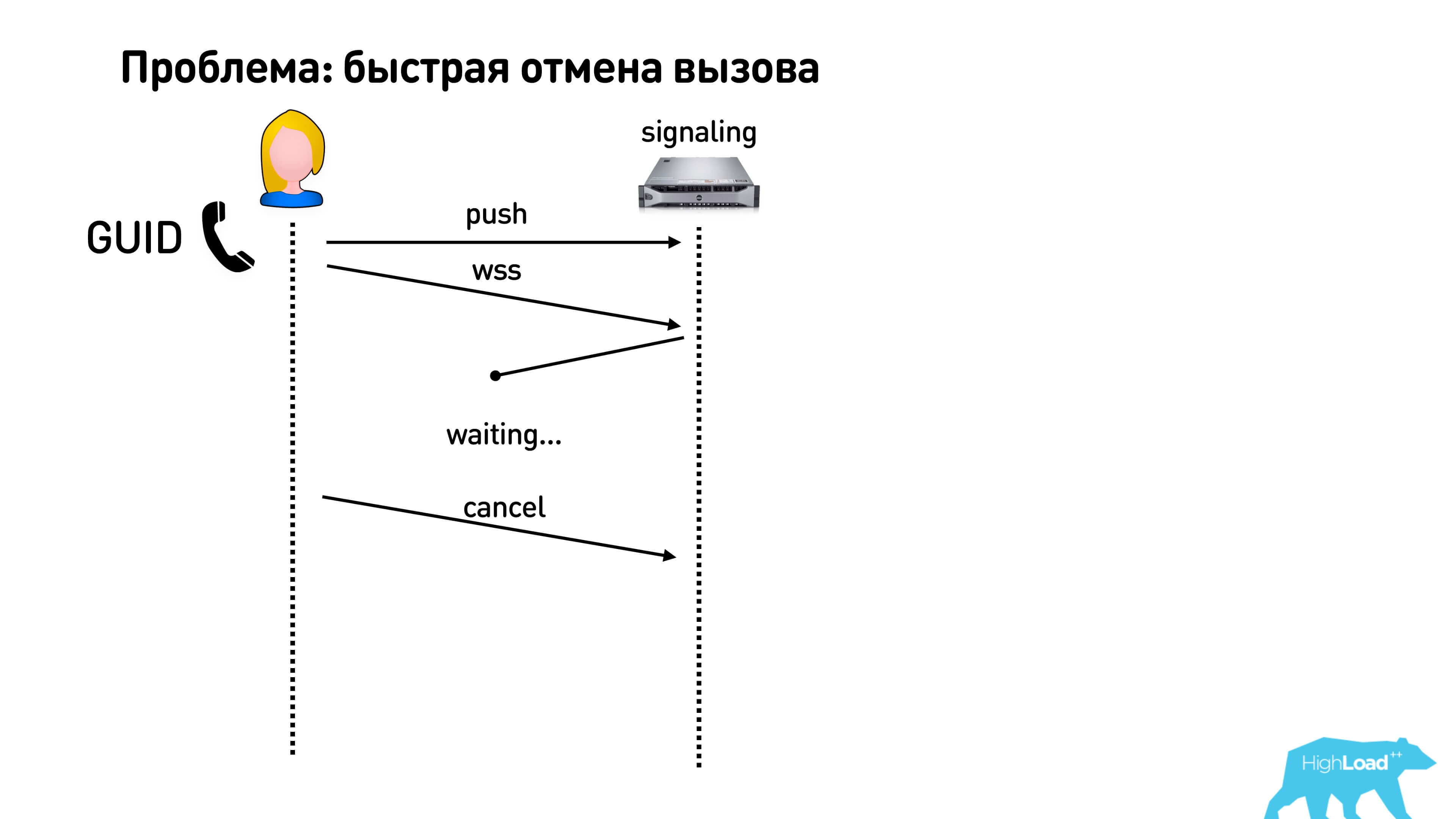

A quick cancel causes a second nasty problem. If you generate the conversation ID on the server, you have to wait for the response. You create a call, get an ID, and only then can you do anything else: send packets, exchange data, or cancel the call. This is very bad, because until the response arrives, the client effectively cannot cancel anything. So it's best to generate the ID on the client, for example as a GUID, and announce that you have started the call. People also often call, cancel, and immediately call again. To avoid confusion, generate a GUID and send it.

That seems fine, but there's another problem. Bob may have two phones, or a browser left open somewhere else. If he then answers on a different device, our whole magic scheme of exchanging packets and establishing the connection early doesn't work.

What to do? Let's go back to our basic, slow signaling scheme and optimize it by sending the push a little earlier. The user will start connecting sooner, but that saves only a little.

What about the longest part, after he answers and the exchange starts?

You can do the following. Clearly, Alice already knows all her codecs and can send them to both of Bob's phones. She can also gather all her IP addresses and send them to signaling. Signaling keeps them in her queue but does not forward them to any client yet, so that the devices don't start connecting to her prematurely.

What can Bob do? Having received the offer, each device can look at the codecs, generate its own answer, and send it too. But Bob has two phones, and codec negotiation may differ between them. So signaling keeps all of this in a queue until it learns which device he answered on. Both devices also generate their own candidates and send them to signaling.

So signaling ends up with one message queue from Alice and several message queues from Bob's different devices. It stores all of this, and as soon as the call is answered on one of those devices, it simply forwards the whole set of prepared messages.
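The buffering scheme above can be sketched as a small in-memory class. The sketch assumes one caller and several callee devices. Persistence, timeouts, and cancellation are left out, and the class, method, and message-type names are hypothetical.

- The offer is broadcast to every device at once.
- The caller's candidates and each device's answer and candidates are held back.
- When one device answers, the matching queues are released in both directions, and the other devices are told to stop ringing.

```python
from collections import defaultdict
from typing import Optional


class PendingCall:
    def __init__(self, call_id: str, caller: str, callee_devices: list[str]):
        self.call_id = call_id
        self.caller = caller
        self.devices = set(callee_devices)
        self.caller_queue: list[dict] = []                              # caller's candidates
        self.device_queues: dict[str, list[dict]] = defaultdict(list)  # answers + candidates
        self.answered_by: Optional[str] = None
        self.outbox: list[tuple[str, dict]] = []                        # (recipient, message)

    def from_caller(self, msg: dict) -> None:
        if self.answered_by:
            self.outbox.append((self.answered_by, msg))
        elif msg["type"] == "offer":
            # every device may prepare its answer in advance
            self.outbox += [(d, msg) for d in sorted(self.devices)]
        else:
            self.caller_queue.append(msg)  # held so devices don't connect prematurely

    def from_device(self, device: str, msg: dict) -> None:
        if device not in self.devices:
            raise ValueError(f"unknown device {device}")
        if self.answered_by == device:
            self.outbox.append((self.caller, msg))
        elif self.answered_by is None:
            self.device_queues[device].append(msg)
        # messages from devices that did not answer are dropped

    def answer(self, device: str) -> None:
        if self.answered_by is not None:
            return  # someone already picked up
        self.answered_by = device
        self.outbox += [(device, m) for m in self.caller_queue]
        self.outbox += [(self.caller, m) for m in self.device_queues[device]]
        self.outbox += [(d, {"type": "stop-ringing"}) for d in sorted(self.devices - {device})]
        self.caller_queue.clear()
        self.device_queues.clear()


call = PendingCall("c1", "alice", ["bob-phone", "bob-tablet"])
call.from_caller({"type": "offer", "sdp": "<offer sdp>"})
call.from_caller({"type": "candidate", "candidate": "a1"})
call.from_device("bob-phone", {"type": "answer", "sdp": "<phone answer>"})
call.from_device("bob-tablet", {"type": "answer", "sdp": "<tablet answer>"})
call.from_device("bob-tablet", {"type": "candidate", "candidate": "t1"})
call.answer("bob-tablet")
for recipient, msg in call.outbox:
    print(recipient, msg["type"])
```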

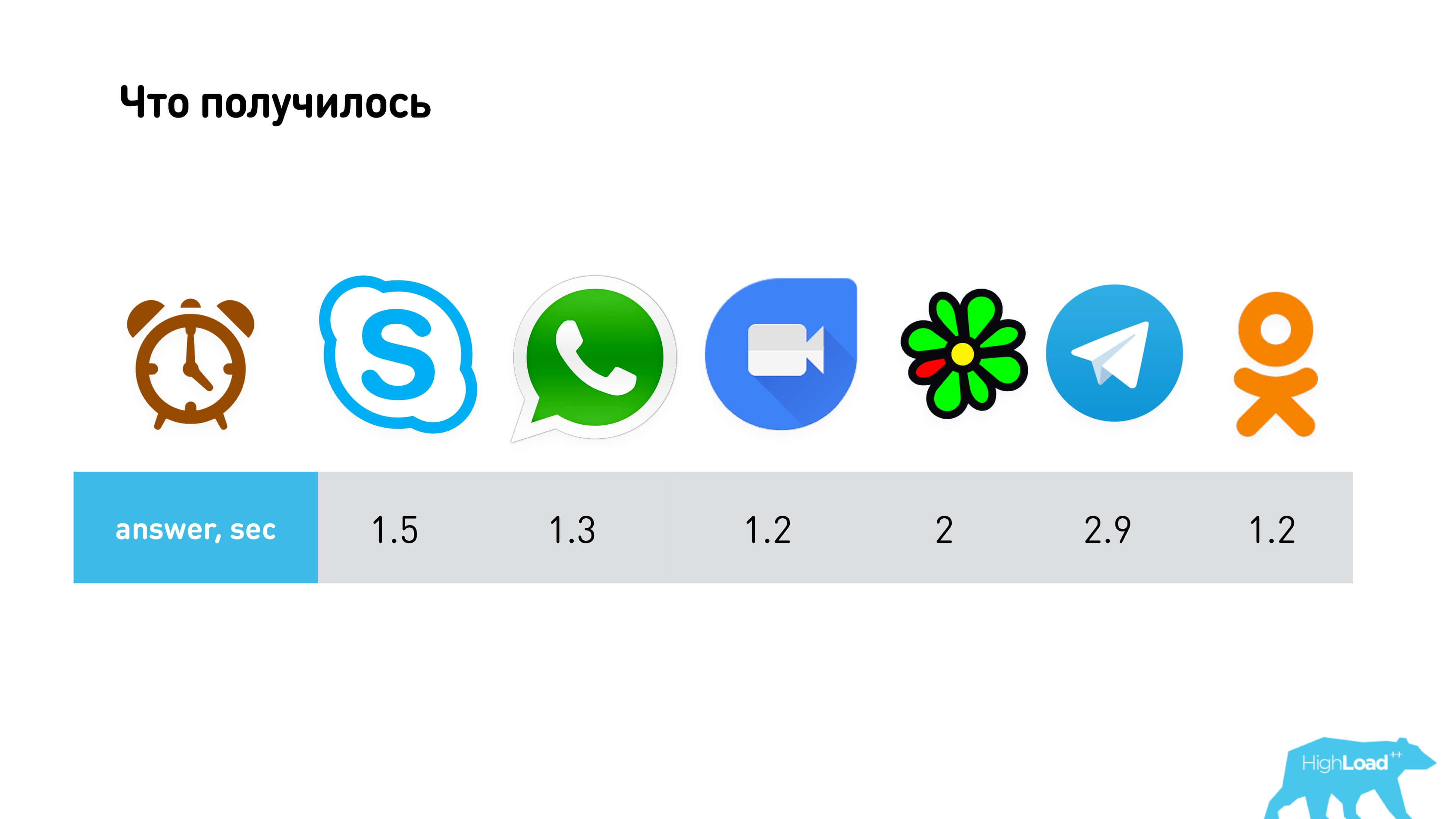

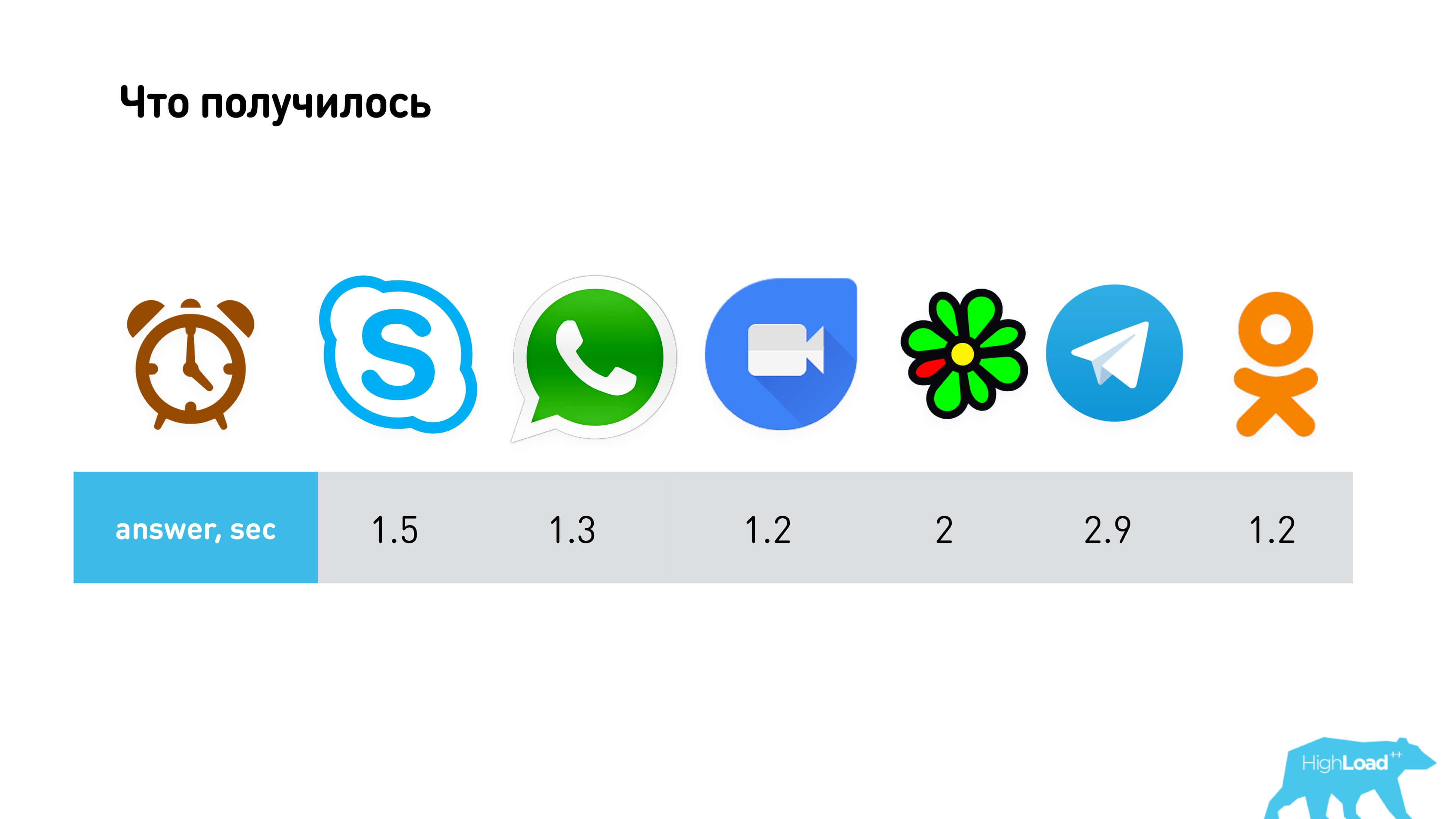

It works quite fast. With this algorithm, we reached characteristics similar to Google Duo and WhatsApp.

Perhaps you can come up with something better. For example, instead of keeping several queues on signaling, you could send them all to the client and then tell it which one to use. Most likely, though, the gain would be very small. We decided to stop here.

What other problems await you?

There is also the counter call: one person calls the other, and the other calls back at the same moment. Ideally, the two calls should not compete. At the signaling level, add a command saying that whoever came second switches to a mode where they simply accept the incoming call and immediately pick up.
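Here is a minimal sketch of that rule on the signaling side. It assumes signaling keeps a map of active outgoing calls keyed by (caller, callee), and that both requests reach the same signaling instance, so the check-and-insert is not racy. The class and event names are hypothetical. If a call arrives while the reverse call already exists, no second call is started; instead, the existing call is answered automatically on behalf of the second caller.

```python
class Signaling:
    def __init__(self) -> None:
        self.outgoing: dict[tuple[str, str], str] = {}  # (caller, callee) -> call id
        self.events: list[tuple[str, str]] = []         # what signaling tells clients to do

    def start_call(self, call_id: str, caller: str, callee: str) -> str:
        """Return the id of the call the caller ends up in."""
        existing = self.outgoing.get((callee, caller))
        if existing is not None:
            # Counter call: the callee is already calling us, so join that call.
            self.events.append(("auto-answer", existing))
            return existing
        self.outgoing[(caller, callee)] = call_id
        self.events.append(("ring", call_id))
        return call_id


s = Signaling()
print(s.start_call("c1", "alice", "bob"))  # c1
print(s.start_call("c2", "bob", "alice"))  # c1 (Bob's call becomes an answer)
print(s.events)  # [('ring', 'c1'), ('auto-answer', 'c1')]
```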

The network can drop out and messages can get lost, so everything must go through a queue. That is, you need a send queue on the client. Messages sent from the client are removed from the queue only after the server has confirmed that it processed them. The server also has a send queue, also with confirmations.
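Here is a client-side sketch of such a queue. It assumes each message carries a per-sender sequence number and that the server acknowledges by number (the talk says every message gets a unique number). The transport is a plain callable, and the class names are hypothetical. Messages stay queued until acknowledged and are resent after a reconnect, while the receiver drops duplicates by sequence number. Following the earlier advice, the usage generates the call ID on the client with `uuid4`, so a cancel can be queued immediately without waiting for the server.

```python
import uuid
from typing import Callable


class ReliableOutbox:
    def __init__(self, send: Callable[[dict], None]):
        self._send = send
        self._next_seq = 1
        self.pending: dict[int, dict] = {}  # seq -> message, kept until acknowledged

    def push(self, payload: dict) -> int:
        seq = self._next_seq
        self._next_seq += 1
        msg = {"seq": seq, **payload}
        self.pending[seq] = msg
        self._send(msg)
        return seq

    def on_ack(self, seq: int) -> None:
        self.pending.pop(seq, None)  # only now is the message forgotten

    def on_reconnect(self) -> None:
        for seq in sorted(self.pending):  # resend everything unacknowledged, in order
            self._send(self.pending[seq])


class DedupReceiver:
    def __init__(self) -> None:
        self.seen: set[int] = set()
        self.processed: list[dict] = []

    def receive(self, msg: dict) -> int:
        if msg["seq"] not in self.seen:
            self.seen.add(msg["seq"])
            self.processed.append(msg)
        return msg["seq"]  # ack duplicates too, so the sender can drop them


wire: list[dict] = []
outbox = ReliableOutbox(wire.append)
call_id = str(uuid.uuid4())  # generated on the client: no round trip before cancelling
outbox.push({"type": "call", "call_id": call_id})
outbox.push({"type": "cancel", "call_id": call_id})
server = DedupReceiver()
outbox.on_ack(server.receive(wire[0]))  # the first message gets through; the second is lost
outbox.on_reconnect()                   # resends only the unacknowledged cancel
for msg in wire[2:]:
    outbox.on_ack(server.receive(msg))
print([m["type"] for m in server.processed])  # ['call', 'cancel']
print(outbox.pending)                         # {}
```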

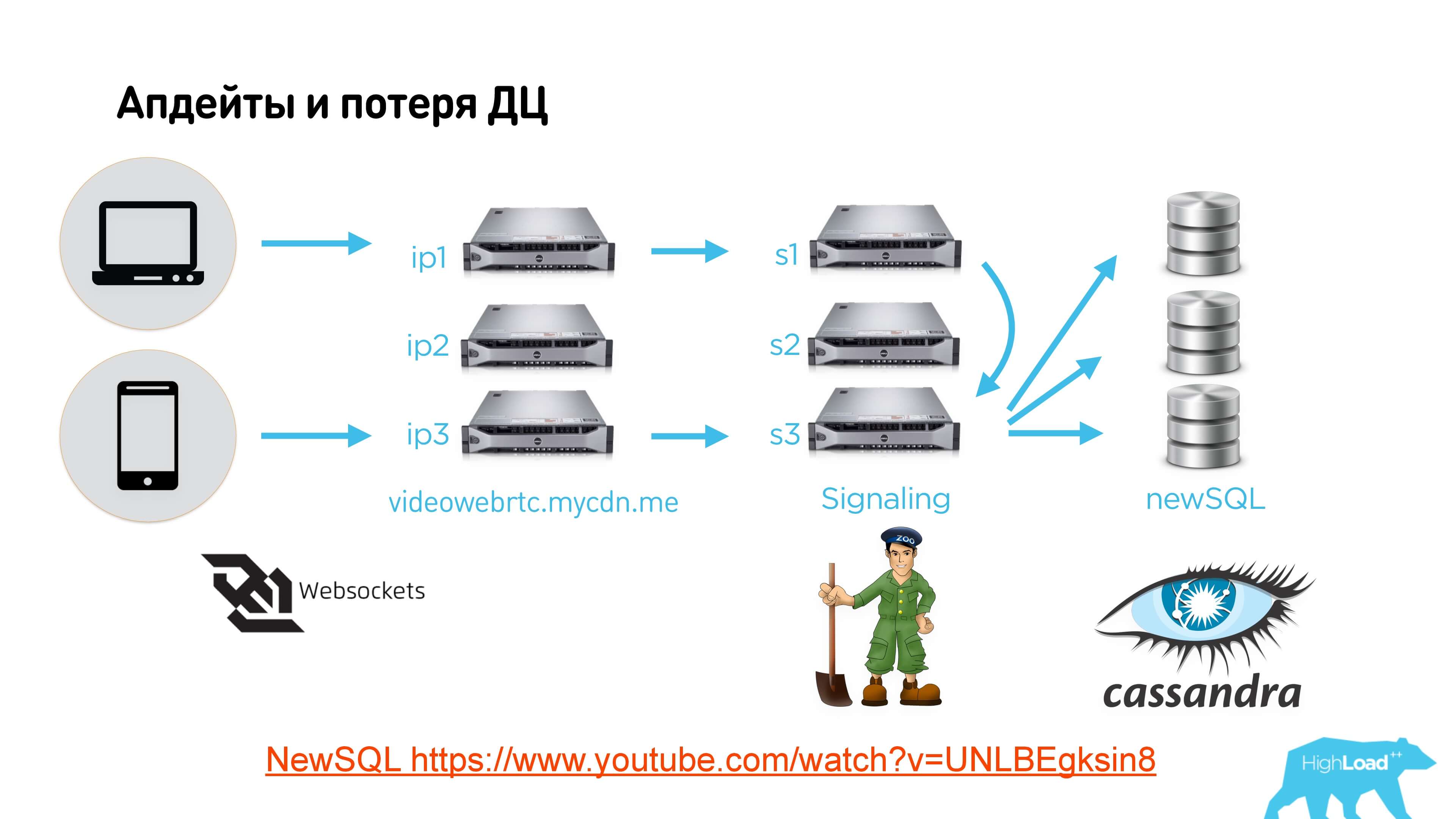

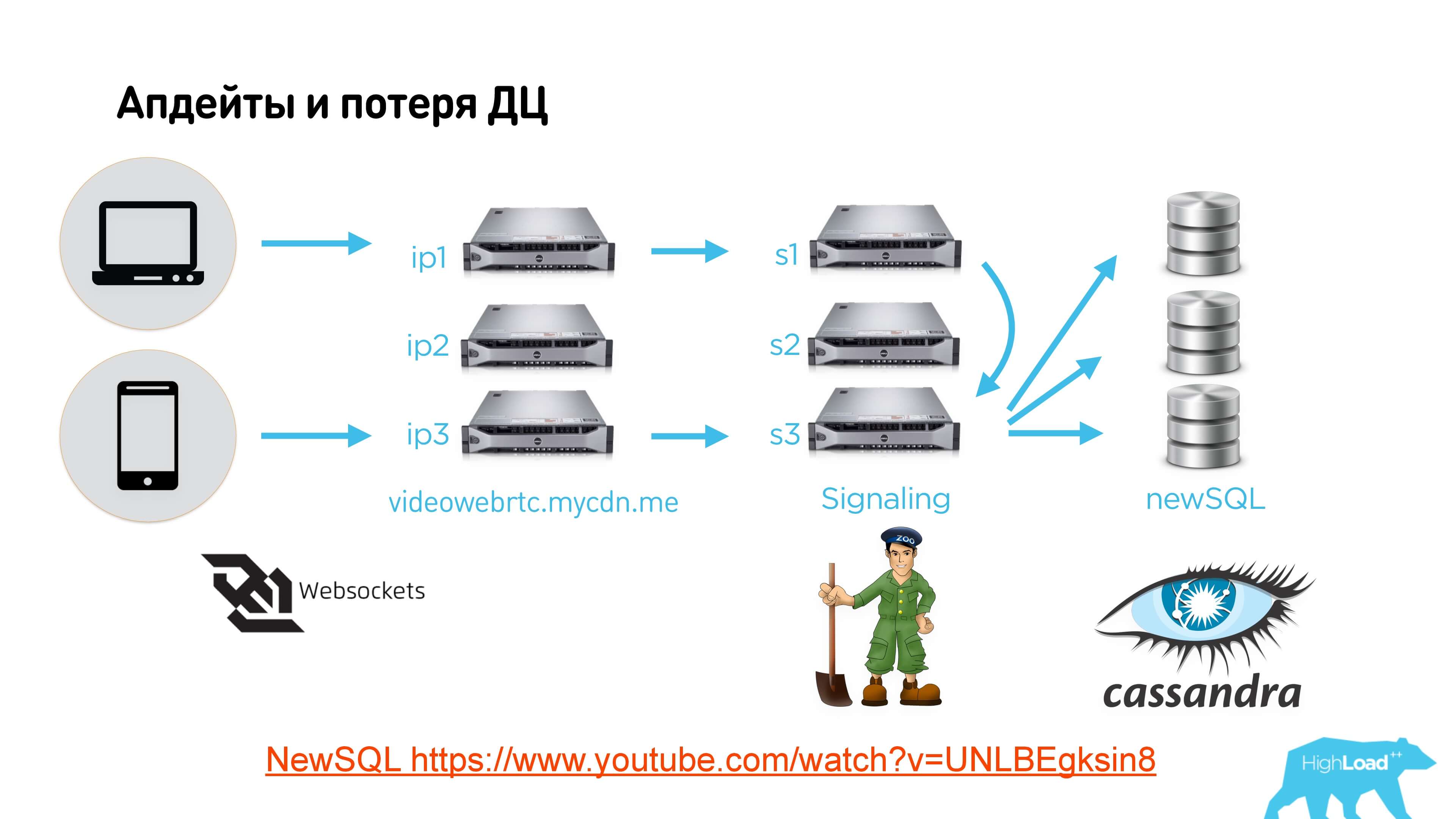

Here is how all of this is implemented on our side. Ours is a 24/7 service, so we want to be able to lose data centers, move load around, and update our software.

Link on the slide to the video and link to the text version

Clients connect via WebSocket to a load balancer, which forwards them to signaling servers in different data centers; different clients may land on different servers. We use ZooKeeper for leader election, which determines the signaling server currently managing a given conversation. If a server is not the leader for this conversation, it simply forwards all messages to the leader.

Next, we use some distributed storage. In our case it's NewSQL on top of Cassandra, but it really doesn't matter what you use. You store the state of all signaling queues somewhere so that the system survives a signaling server going down, losing power, or some other failure. When that happens, leader election in ZooKeeper kicks in and another server becomes the leader. It restores all the queues from the database and starts sending messages.

The algorithm looks like this:

All messages are given unique numbers so they don't get mixed up.

On the database side, we use a layer on top of Cassandra that lets you run transactions on it (that's exactly what the video is about).

So you found out:

We got:

Great!

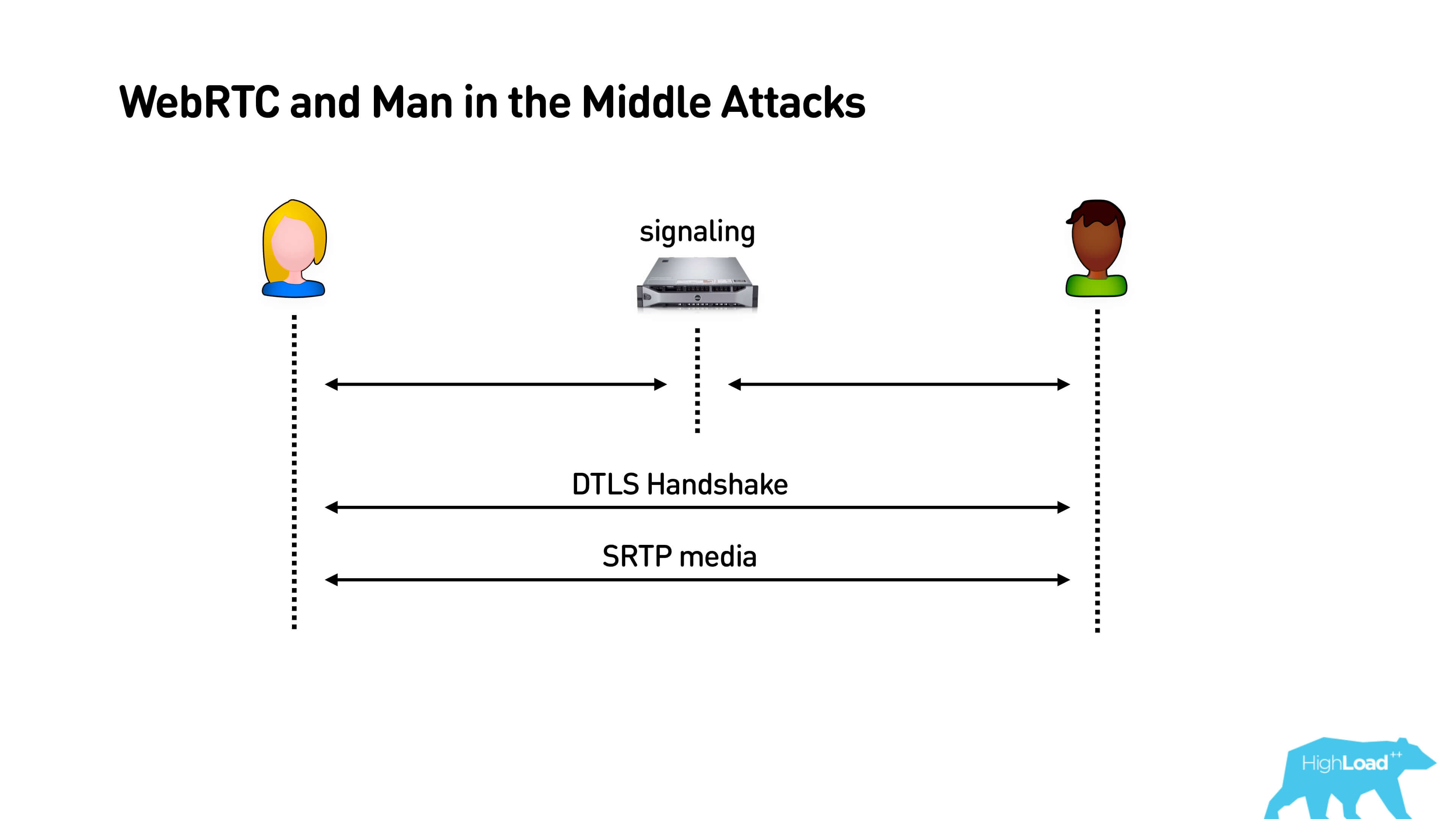

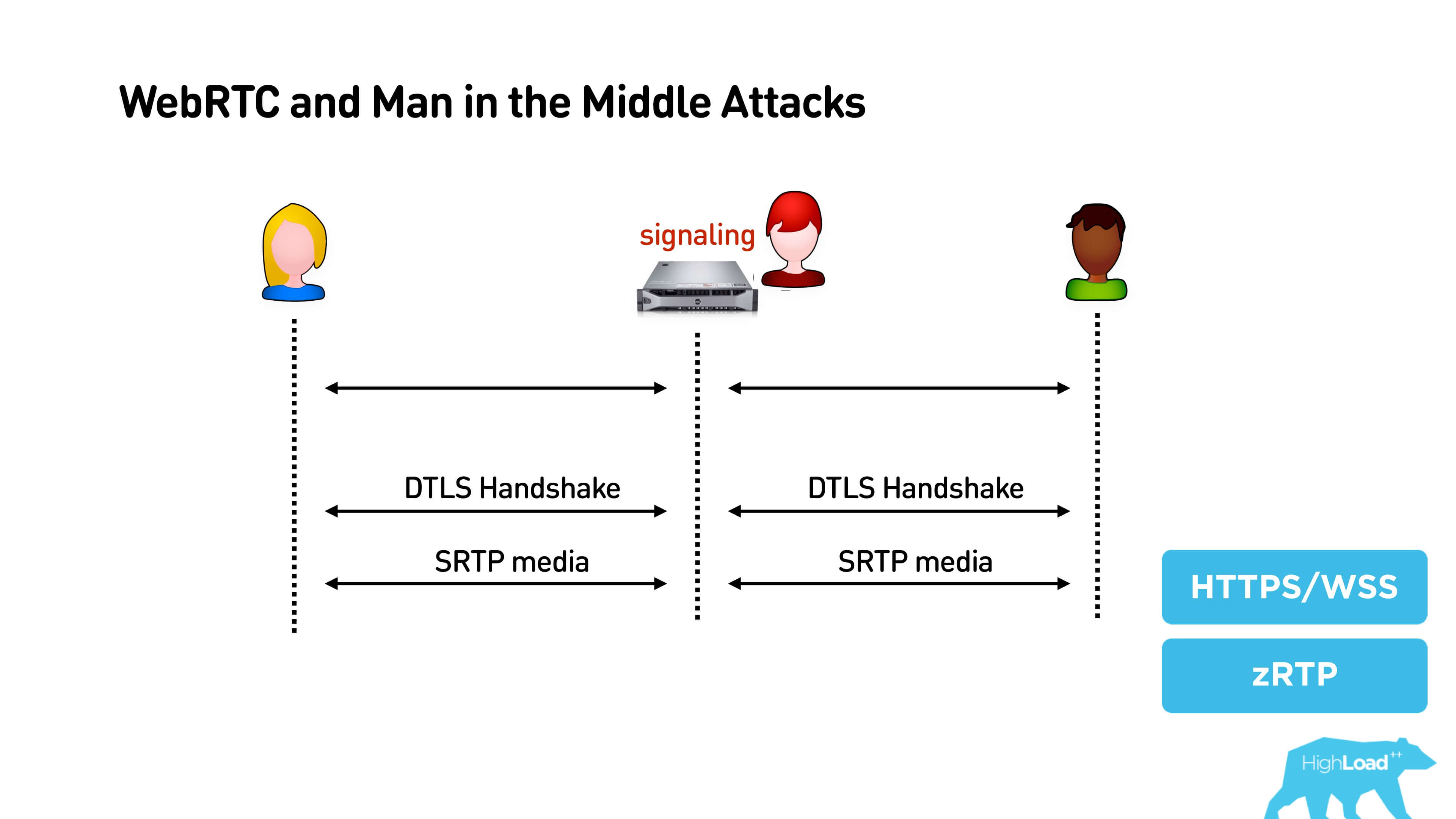

Security. Man in the middle attack for WebRTC

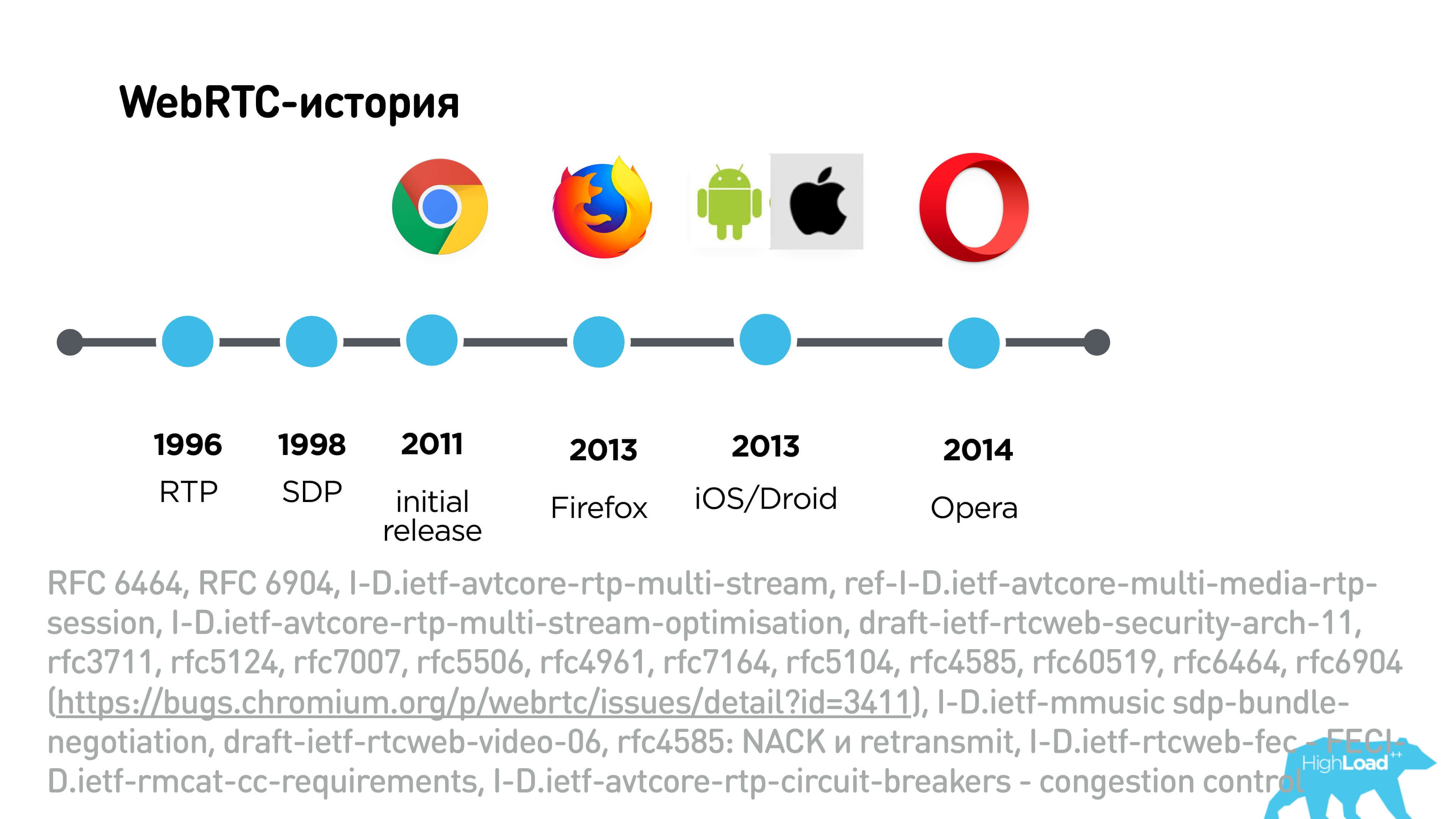

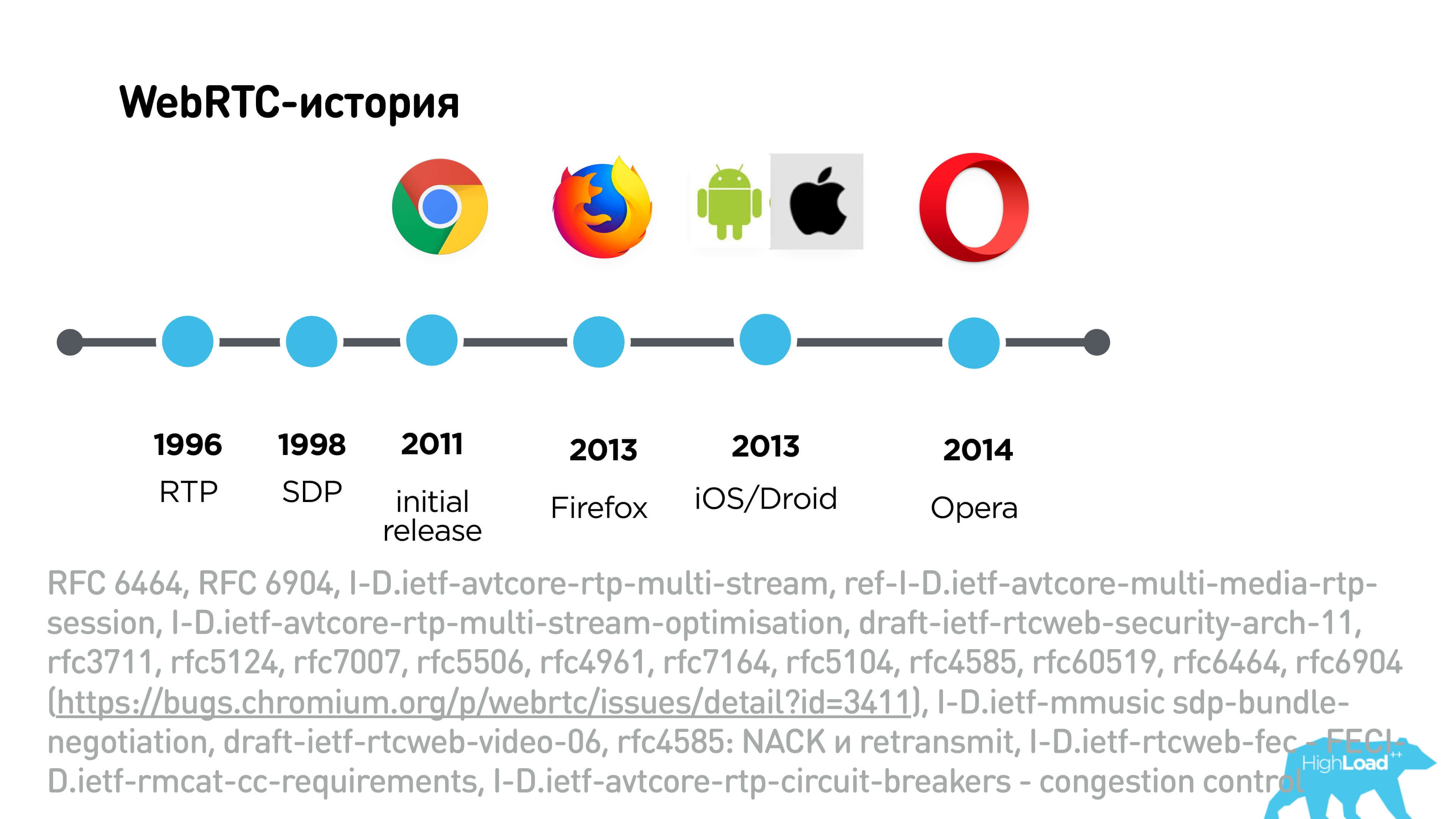

Let's talk about man-in-the-middle attacks on WebRTC. WebRTC is a very complex protocol, because it is built on RTP, which dates back to 1996, and on SDP, which came from SIP in 1998.

The huge list below is a bunch of RFCs and other RTP extensions that turn RTP into WebRTC.

The first two on the list are interesting. One adds audio levels to packets, and the other says that transmitting audio levels in the clear is unsafe and encrypts them. So when you exchange SDP, it is important to know which set of extensions each client supports. There are even several congestion control algorithms, several algorithms for recovering lost packets, and so on.

WebRTC's history was complicated. The first draft came out in 2011. Firefox added support in 2013, then WebRTC started being built for iOS/Android, and Opera added it in 2014. It has been developing for many years, but it still does not solve one interesting problem.

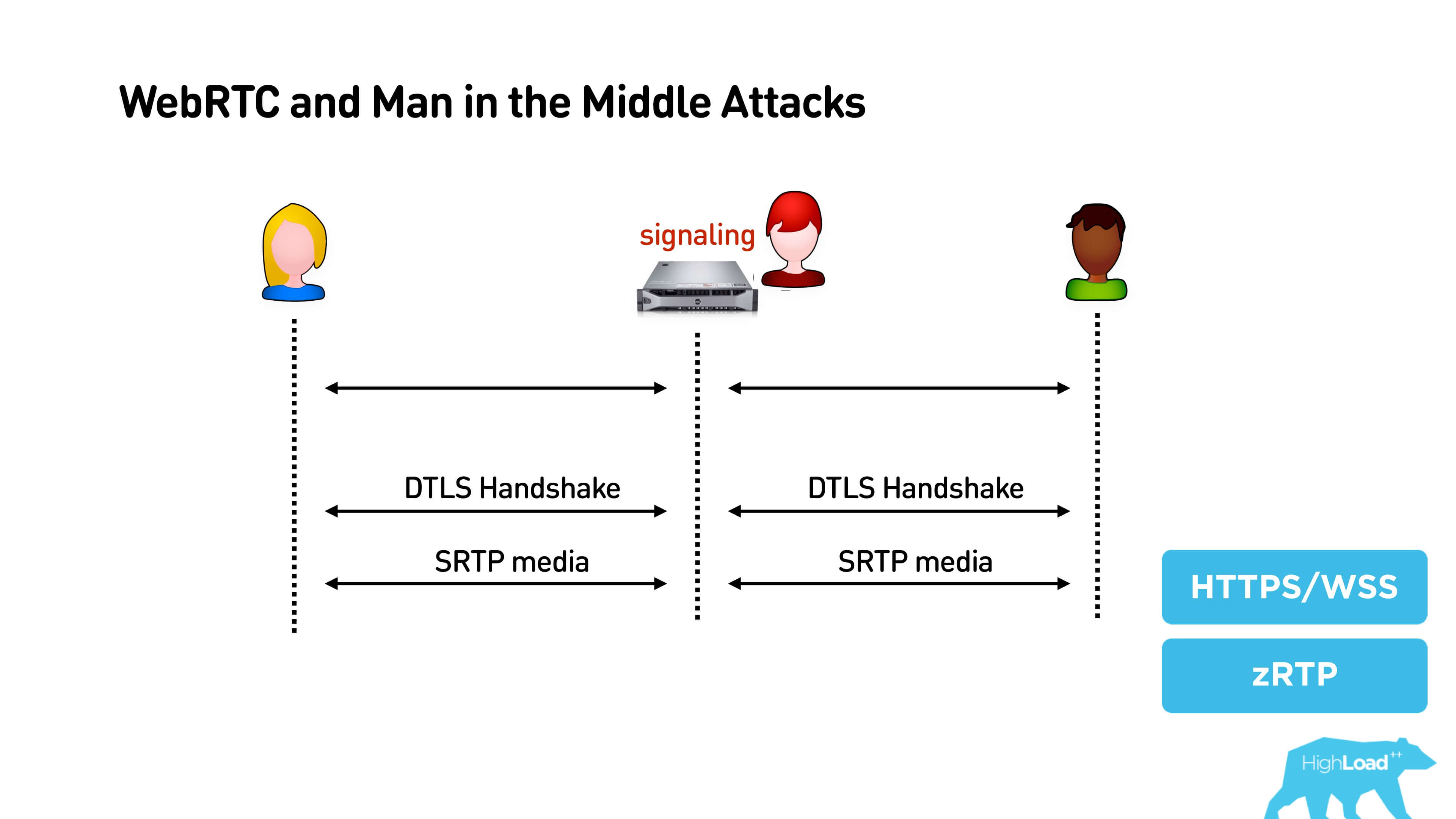

When Alice and Bob connect to signaling, they use that channel to perform a DTLS handshake and set up a secure connection. Everything is great, except that the DTLS fingerprints used to authenticate that handshake are themselves exchanged over signaling. So if the signaling is not ours, a man in the middle can, in principle, set up one connection with Alice and another with Bob. He can then relay all the traffic and listen in on what's going on.

If you run a high-trust service, you should of course use HTTPS, WSS, and so on. There is another interesting solution, ZRTP; it is used, for example, by Telegram.

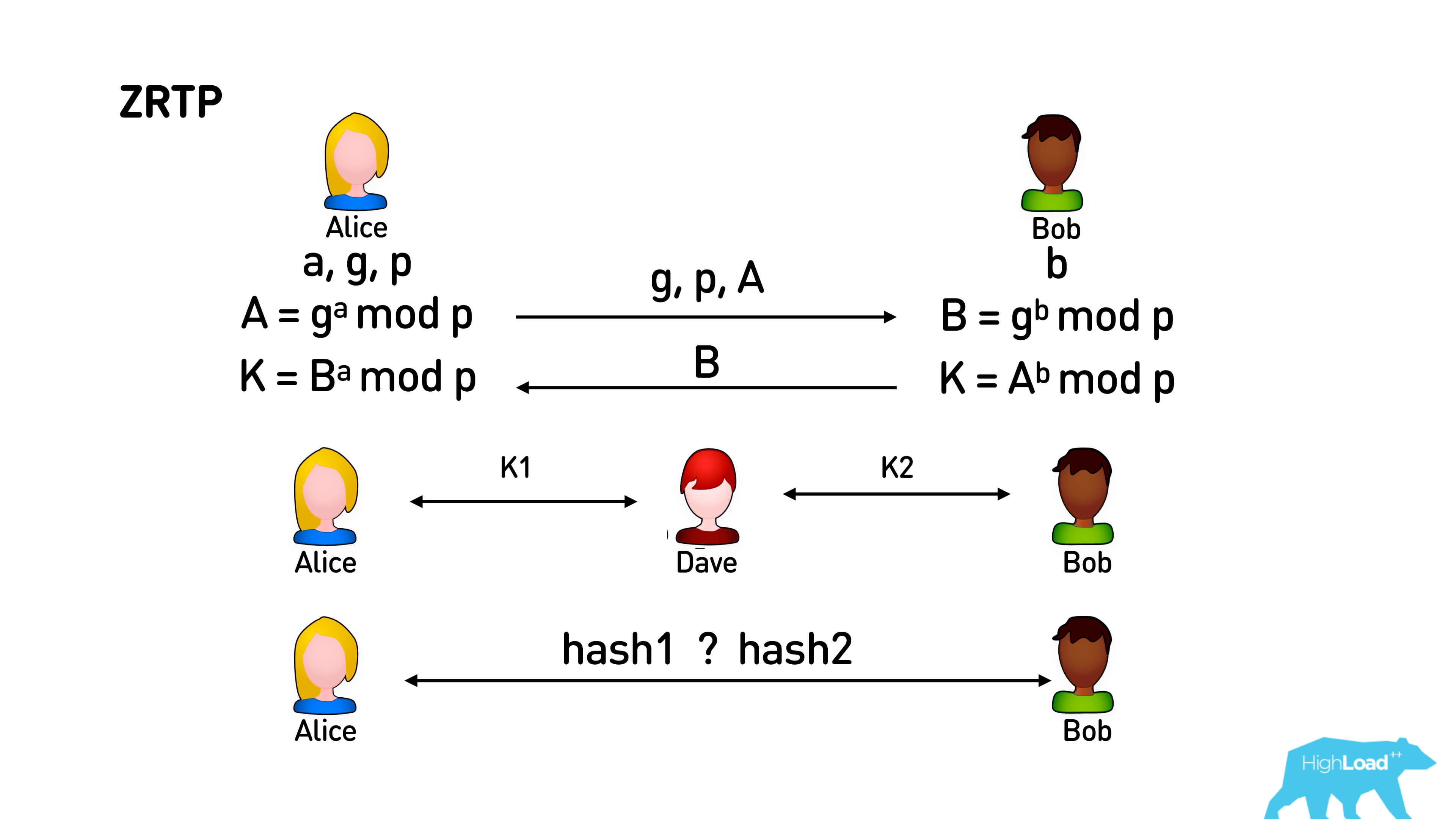

Many people have seen the emoji that Telegram shows when a call is established, but few actually use them. If you tell your friend which emoji you see and they confirm that theirs are exactly the same, your p2p connection is guaranteed to be secure.

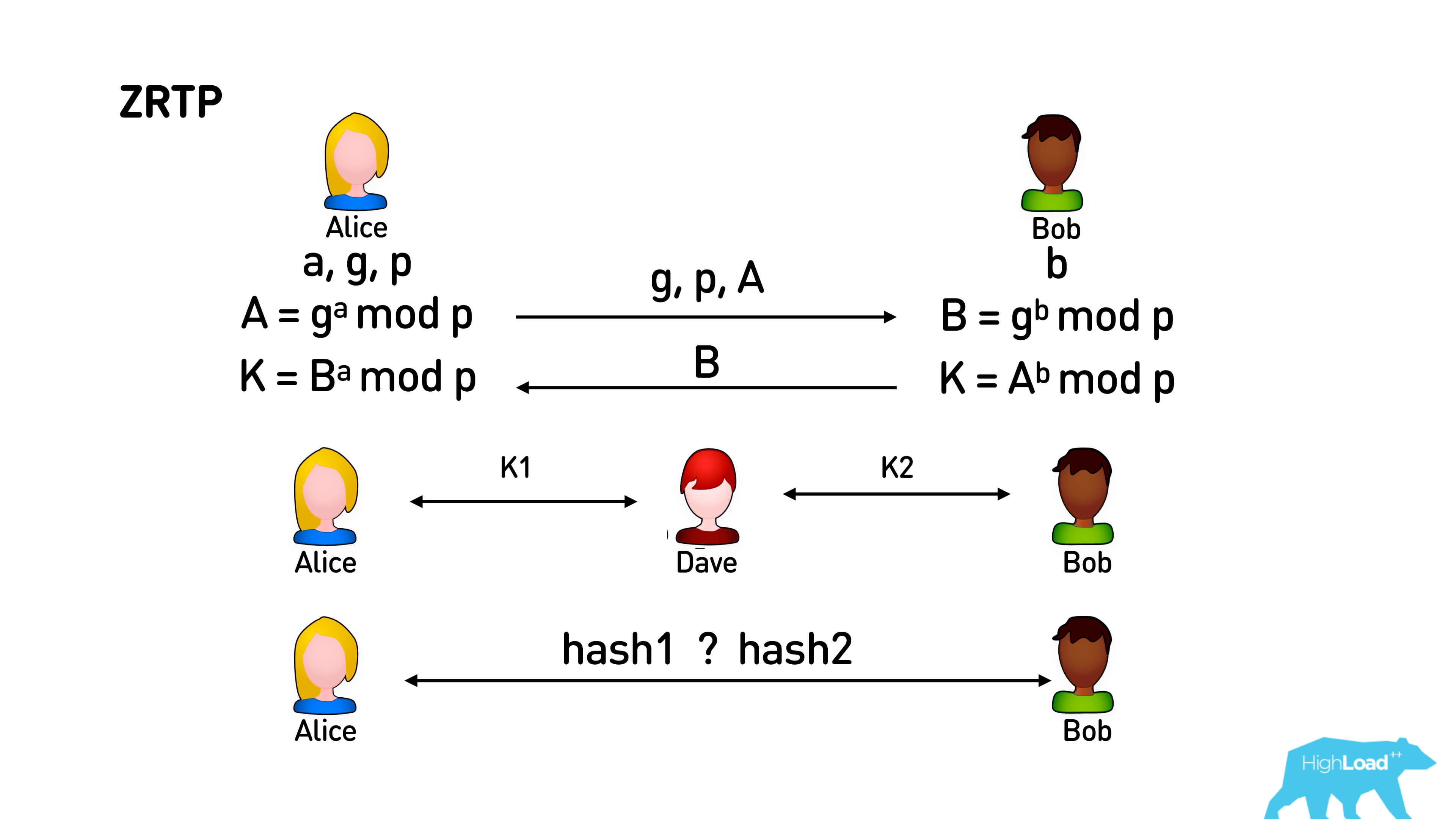

How does it work?

At the core of all these protocols is the ordinary Diffie-Hellman algorithm. Alice generates several numbers and sends all of them to Bob except one, which she keeps secret. Bob also generates a random secret number and sends Alice a value derived from it. As a result of this exchange, Alice and Bob both obtain the same large number K, which a man in the middle who has overheard the entire channel cannot compute.
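The exchange above can be checked with a toy Diffie-Hellman in JavaScript using BigInt. The prime, the base and the fixed secrets are illustrative assumptions chosen for reproducibility and are far too weak for real use; an eavesdropper sees `p`, `g`, `A` and `B`, but never the secrets.

```javascript
// Square-and-multiply modular exponentiation on BigInt values.
function modPow(base, exponent, modulus) {
  let result = 1n;
  base %= modulus;
  while (exponent > 0n) {
    if (exponent & 1n) result = (result * base) % modulus;
    base = (base * base) % modulus;
    exponent >>= 1n;
  }
  return result;
}

// Public parameters (illustrative): a prime modulus and a base.
const p = 2n ** 127n - 1n; // Mersenne prime 2^127 - 1
const g = 3n;

// Secrets stay local; real implementations draw them at random.
const aliceSecret = 123456789n;
const bobSecret = 987654321n;

const A = modPow(g, aliceSecret, p); // Alice -> Bob: g^a mod p
const B = modPow(g, bobSecret, p);   // Bob -> Alice: g^b mod p

const kAlice = modPow(B, aliceSecret, p); // (g^b)^a mod p
const kBob = modPow(A, bobSecret, p);     // (g^a)^b mod p
console.log(kAlice === kBob); // true
```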

When Dave sits between Alice and Bob, each of them performs the same key exchange with him, so Alice ends up with key K1 and Bob with key K2. The protocol alone gives no way to detect this man in the middle, so the following trick is used. The keys K1 and K2 that Dave shares with Alice and with Bob will differ, because Alice and Bob generate their secrets randomly. We take a hash of the key and display it as emoji (an apple, a pear, anything at all), and the users simply read out loud the pictures they see. Since they recognize each other's voices, if the pictures differ, someone is sitting between them and may be listening in.
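The emoji trick can be sketched as hashing the shared key and mapping a few bytes of the digest to pictures. The picture set, the use of SHA-256 and the four-picture length are assumptions for illustration, not the derivation ZRTP or Telegram actually use. A real protocol must also stop Dave from searching for keys that happen to produce matching pictures (ZRTP does this with a hash commitment); this sketch does not model that.

```javascript
const { createHash } = require("crypto");

// Hypothetical 16-picture alphabet.
const PICTURES = ["🍎", "🍐", "🍌", "🍇", "🍒", "🍋", "🥕", "🌽",
                  "🐱", "🐶", "🦊", "🐼", "🚗", "🚀", "⚽", "🎸"];

// Map a shared key (hex string) to a few pictures people can read aloud.
function shortAuthString(sharedKeyHex, count = 4) {
  const digest = createHash("sha256").update(sharedKeyHex, "hex").digest();
  const pictures = [];
  for (let i = 0; i < count; i++) {
    // Uses the low 4 bits of each digest byte to pick one of 16 pictures.
    pictures.push(PICTURES[digest[i] % PICTURES.length]);
  }
  return pictures.join(" ");
}

// No man in the middle: both sides hold the same K, so the pictures match.
const K = "9f2c4a7e1b3d5f60718293a4b5c6d7e8";
console.log(shortAuthString(K) === shortAuthString(K)); // true

// Dave in the middle: Alice holds K1, Bob holds K2, and the pictures
// almost certainly differ, which the users notice when reading them aloud.
const K1 = "0123456789abcdef0123456789abcdef";
const K2 = "fedcba9876543210fedcba9876543210";
console.log(shortAuthString(K1), "|", shortAuthString(K2));
```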

Results

The graph first shows the old RTMFP calls; when we switched to WebRTC there is a small dip, and then the curve rises. Not everything worked out right away! In the end, the number of completed calls has increased fourfold.

Simple instruction

If you don't need all of this, here is a very simple set of instructions:

With that, calls will work pretty well.

Further, the text version of the report on HighLoad ++ Siberia, from which you will learn:

')

- how video call services work under the hood;

- how beautiful it is to break through NAT - it will be interesting also for specialists from the gaming industry, who need a peer-to-peer connection;

- how WebRTC works, what protocols it includes;

- How can you tying WebRTC through BigData.

About the speaker: Alexander Tobol leads the development of Video and Tapes platforms at ok.ru.

Video call history

The first video call device appeared in 1960, it was called a picture chip, used dedicated networks and was extremely expensive. In 2006, Skype added video calls to its application. In 2010, Flash supported the RTMFP protocol, and we started video calls to Flash on Odnoklassniki. In 2016, Chrome stopped support for Flash, and in August 2017 we restarted calls on a new technology, which I will discuss today. Having finished the service, in half a year we received a significant increase in successfully made calls. Recently, we also have masks in calls.

Architecture and TK

Since we work in a social network, we have no technical tasks, and we do not know what TK is. Usually the whole idea fits on one page and looks like this.

The user wants to call other users using web or iOS / Android applications. Another user may have several devices. The call comes to all devices, the user picks up the phone on one of them, they talk. It's simple.

Specifications

In order to make a quality call service, we need to understand what characteristics we want to track. We decided to start by looking for what is most annoying to the user.

The user is exactly annoyed if he picks up the phone and has to wait for the connection to be established.

The user is annoyed if the quality of the call is low - something is interrupted, the video crumbles, the sound gurgles.

But the most annoying to the user is the delay in calls. Latency is one of the important characteristics of calls. When latency in a conversation of about 5 seconds is absolutely impossible to conduct a dialogue.

We have defined acceptable characteristics:

- Start - we decided it was good to start a call in a second. Those. connecting after a user has answered should take no more than 1 second.

- Quality is a very subjective indicator. You can measure, for example, the signal-to-noise ratio (SNR), but there are still missing frames and other artifacts. We measured the quality rather subjectively and then evaluated the happiness of users.

- Latency should be less than 0.5 seconds. If Latency is more than 0.5 seconds, then you already hear delays and start interrupting each other.

Polycom is a conferencing system installed in our offices. The average polycom delay is about 1.3 seconds. With such a delay is not always understand each other. If the delay increases to 2 seconds, then the dialogue will be impossible.

Since we already had a platform running, we roughly expected that we would have a million calls a day. This is a thousand calls in parallel. If all calls are sent through the server, there will be a thousand megabit calls per call. This is just 1 gigabit / s of one iron server will be enough.

Internet vs TTX

What can prevent to achieve such class performance? The Internet!

On the Internet, there are things like round-trip time (RTT), which cannot be overcome, there is variable bandwidth, there is NAT.

Previously, we measured the transmission speed in the networks of our users.

We smashed by the type of connection, looked at the average RTT, packet loss, speed, and decided that we would test calls on the average values of each of these networks.

There are other troubles on the Internet:

- Packet loss - we measured 0.6% random packet loss (we do not take into account congestion packet loss with an excess number of packets).

- Reordering - you send packets in the same order, and the network re-sorts them.

- Jitter - you give a video or audio stream at a certain interval, and on the client side packets come glued together in bundles, for example, due to buffering on network devices.

- NAT - we got that more than 97% of users are behind NAT. Let's talk further, why, what and how.

Consider the above network settings for a simple example.

I pinged the site of Novosibirsk State University from my office and received such a strange ping.

The average jitter in this example is 30 ms, that is, the average interval between adjacent ping times is about 30 ms, and the average ping is 105 ms.

What is important in calls, why will we fight for p2p?

Obviously, if between our users who are trying to talk to each other in St. Petersburg, we managed to establish a p2p connection, and not through a server that is located in Novosibirsk, we will save about 100 ms round-trip and traffic to this service.

Therefore, most of the article is devoted to how to make a good p2p.

History or legacy

As I said, we had a call service from 2010, and now we have restarted it.

In 2006, when Skype was launched, Flash bought Amicima, which made RTMFP. Flash already had the RTMP protocol, which in principle can be used for calls, and it is often used for streaming. Flash later opened the RTMP specification. I wonder why they needed RTMFP? In 2010 we used exactly RTMFP.

Let's compare the requirements for call protocols and real streaming protocols and see where this border is.

RTMP is more like a video streaming protocol. It uses TCP, it has an accumulating delay. If you have a good internet connection, calls to RTMP will work.

The RTMFP protocol , despite the difference in just one letter, is the UDP protocol. It is free from buffering problems — those on TCP; devoid of head-of-line locks - this is when you have lost one packet, and TCP does not give up the following packets until it is time to send the lost one again. RTMFP was able to cope with NAT and was experiencing a change in the IP address of clients. Therefore, we launched the web on RTMFP in 2010.

Then, only in 2011, the initial draft WebRTC appeared, which was not yet fully operational. In 2012, we started supporting calls to iOS / Android, then something else happened, and in 2016, Chrome stopped supporting Flash. We had to do something.

We looked at all the VoIP protocols: as always, in order to do something, we start by studying the competitors.

Competitors or where to start

We chose the most popular competitors: Skype, WhatsApp, Google Duo (similar to Hangouts) and ICQ.

To start, measure the delay.

Make it easy. Above is a picture in which:

- Stopwatch (see phone at the top left), which shows the time (03:08).

- The middle phone makes a call and takes the first phone as a video. From the moment the image got into the camera of the phone, and you saw it, it took about 100 ms.

- Call to another phone (white) and one more time. Here the delay is about 310 ms with Google Duo.

I will not reveal all the cards so far, but we did it so that these devices could not establish p2p connections. Of course, the measurements were carried out in different networks, and this is just an example.

Skype still interrupts a bit. It turned out that Skype, in case it fails to connect p2p, has a delay of 1.1 s.

The test environment was complicated. We tested in different conditions (EDGE, 3G, LTE, WiFi), we took into account that the channels are asymmetric, and I give the averaged values of all measurements.

In order to estimate the battery consumption, the load on the processor and everything else, we decided that we can just measure the temperature of the phone with a pyrometer and assume that this is some average load on the GPU of the phone on the processor, on the battery. In principle, it is very unpleasant to bring a hot phone to your ear, and hold it in your hands too. It seems to the user that now the application will use up his entire battery.

The result was:

- ICQ and Skype were the slowest in delay , and Telegram was the fastest. This is not a completely correct comparison, since Telegram has no video calls, but on audio, they have minimal latency. WhatsApp works fine (about 200 ms) and Hangouts - 390 ms.

- In terms of temperature, Telegram eats the least without video, and most of all by Skype.

- In terms of response time, Telegram establishes the longest connection, and the fastest is WhatsApp and Google Duo.

Great, we got some metrics!

We tested the quality of video and voice in different networks, with different drops and everything else. As a result, we came to the conclusion that the highest quality video on Google Duo, and the voice - on Skype , but this is in “bad” networks, when there is already a distortion. In general, all work about mediocre. WhatsApp has the most blurred picture.

Let's see what all this is implemented on.

Skype has its own proprietary protocol, and all others use either a modification of WebRTC, or WebRTC directly. Hangouts, Google Duo, WhatsApp, Facebook Messenger can work with the web, and they all have WebRTC under the hood. They are all so different, with different characteristics, and they all have the same WebRTC! So, you need to be able to cook it properly. Plus there is a Telegram, which has some parts of WebRTC responsible for the audio part, there is ICQ, which forks WebRTC a long time ago and went to develop its own way.

WebRTC. Architecture

WebRTC implies a signaling server, an intermediary between clients, which is used to exchange messages during the installation of a p2p connection between them. After installing a direct connection, clients begin to exchange media with each other.

WebRTC. Demo

Let's start with a simple demo. There are simple 5 steps how to establish a WebRTC connection.

Detailed example code

1. // Step #1: Getting local video stream and initializing a peer connection with it (both caller and callee) 2. 3. var localStream = null; 4. var localVideo = document.getElementById('localVideo'); 5. 6. navigator 7. .mediaDevices 8. .getUserMedia({ audio: true, video: true }) 9. .then(stream => { 10. localVideo.srcObject = stream; 11. localStream = stream; 12. }); 13. 14. var pc = new RTCPeerConnection({ iceServers: [...] }); 15. 16. localStream 17. .getTracks() 18. .forEach(track => pc.addTrack(track, localStream)); 19. 20. // Step #2: Creating SDP offer (caller) 21. 22. pc.createOffer({ offerToReceiveAudio: true, offerToReceiveVideo: true }) 23. .then(offer => signaling.send('offer', offer)); 24. 25. // Step #3: Handling SDP offer and sending SDP answer (callee) 26. 27. signaling.on('offer', offer => { 28. pc.setRemoteDescription(offer) 29. .then(() => pc.createAnswer()) 30. .then(answer => signaling.send('answer', answer)) 31. }); 32. 33. // Step #4: Handling SDP answer (calleer) 34. 35. signaling.on('answer', answer => pc.setRemoteDescription(answer)); 36. 37. // Step #5: Exchanging ICE candidates 38. 39. pc.onicecandidate = event => signaling.send('candidate', event.candidate); 40. 41. signaling.on('candidate', candidate => pc.addIceCandidate(candidate)); 42. 43. // Step #6: Getting remote video stream (both caller and callee) 44. 45. var remoteVideo = document.getElementById('remoteVideo'); 46. 47. pc.onaddstream = event => remoteVideo.srcObject = event.streams[0]; It says the following:

- Take a video and set a peer connection, transfer some iceServers (it is not immediately clear what it is).

- Create an SDP offer and send it to signaling, and signaling WebRTC does not implement it for you.

- Then you need to make a wrapper for coming from signaling, and this is not included in WebRTC either.

- Further exchange some candidates.

- Finally get the remote video stream.

Let's still understand what is happening there and what we need to implement ourselves.

See the picture upwards. There is a WebRTC library, which is already built into the browser, supported by Chrome, Firefox, etc. You can build it under Android / iOS and communicate with it through the API and SDP (Session Description Protocol), which describes the session itself. Below I will tell you what is included in it. To use this library in your application, you must establish a connection between subscribers via signaling. Signaling is also your service that you have to write yourself, WebRTC does not provide it.

Further in the article we will discuss the network in order, then video / audio, and in the end we will write our own signaling.

WebRTC network or p2p (actually c2s2c)

It seems that installing a p2p connection is quite simple.

We have Alice and Bob, who want to establish a p2p connection. They take their IP addresses, they have a signaling server to which they both are connected, and through which they can exchange these addresses. They exchange addresses, and oh! Their addresses are the same, something went wrong!

In fact, both users are most likely sitting behind Wi-Fi routers and these are their local gray IP addresses. A router provides them with a feature such as Network Address Translation (NAT). How does she work?

You have a gray subnet and an external IP address. You send a packet to the Internet from your gray address, NAT replaces your gray address with a white one and remembers the mapping: which port it sent from, to which user and which port it corresponds to. When the return packet arrives, it resolves the map for this map and sends it to the sender. It's simple.

Below is an illustration of how it looks in my home.

This is my internal IP-address and the address of the router (by the way, also gray). If I trace and see the route, we will see my Wi-Fi router: a pack of gray provider addresses and an external white IP. Thus, in fact, I will have two NATs: one, on which I am on Wi-Fi, and the other from the provider, if I, of course, have not bought a dedicated external IP address.

NAT is so popular because:

- still many IPv4, and addresses are missing;

- NAT seems to protect the network;

- This is the standard function of the router: connect to Wi-Fi, there is NAT right there, it works.

Therefore, only 3% of users sit with an external IP, and all others go through NAT.

NAT allows you to safely go to any white addresses. But if you did not go anywhere, then no one can come to you.

To establish a p2p connection this is the problem. In fact, Alice and Bob cannot send each other packets if they are both behind NAT.

There is a STUN protocol in WebRTC to solve this problem. It is proposed to deploy a STUN server. Then Alice connects to the STUN server, gets her IP address, sends it to Bob via signaling. Bob also gets his IP address and sends it to Alice. They send packets to each other and thus break through NAT.

Question : Alice has a certain port open, NAT / Firewall is already pierced to this port, and Bob is open. They know each other's addresses. Alice tries to send the package to Bob, he sends the package to Alice. Do you think they can talk or not?

In fact, you are right in any case, the result depends on the type of NAT pair that users have.

Network Address Translation

There are 4 types of NAT:

- Full cone NAT;

- Restricted cone NAT;

- Port restricted cone NAT;

- Symmetric NAT.

In the basic version, Alice sends a packet to the server STUN, she opens a port. Bob somehow finds out about her port and sends a reverse packet. If this is Full cone NAT — the simplest one that simply maps an external port to an internal one, then Bob will immediately be able to send a packet to Alice, establish a connection, and they will talk.

Below is the interaction scheme: Alice sends a packet to the STUN port from some port, STUN responds with its external address. STUN can reply from any address, if it is a Full cone NAT, it still breaks through NAT, and Bob can reply to the same address.

In the case of Restricted cone, NAT is a bit more complicated. He remembers not just the port from which you want to map to the internal address, but also the external address to which you went. That is, if you have established a connection only to the IP of the STUN server, then no one else on the network can answer you, and then the package of Bob will not reach.

How is this problem solved? In a simple scheme (see illustration below), so: Alice sends a packet to STUN, he answers her IP. STUN can respond to it from any port while it is Restricted cone NAT. Bob cannot answer Alice because he has a different address. Alice responds with a package, knowing Bob’s IP address. She opens NAT to Bob, Bob answers her. Hooray, they talked.

A slightly more complicated option is Port restricted cone NAT . All the same, only STUN must respond exactly from the port to which it was addressed. Everything will work too.

The most harmful thing is Symmetric NAT .

Initially, everything works exactly the same - Alice sends a packet to the STUN server, he responds from the same port. Bob cannot answer Alice, but she sends the package to Bob. And here, despite the fact that Alice sends a packet to port 4444, the mapping allocates a new port to it. Symmetric NAT is different in that when each new connection is established, it issues a new port on the router every time. Accordingly, Bob fights at the port from which Alice went to STUN, and they can’t connect.

In the opposite direction, if Bob is with an open IP address, Alice can just come to him, and they will establish a connection.

All options are collected in one table below.

It shows that almost everything is possible except when we try to establish connections through Symmetric NAT with Port restricted cone NAT or Symmetric NAT at the other end.

As we found out, p2p is invaluable for us in terms of latency, but if we failed to install it, then WebRTC offers us a TURN server. When we realized that p2p is not established, we can simply connect to the TURN, which will proxy all traffic. True, in this case you will pay for traffic, and users may have some additional delays.

Practice

Free STUN servers are at Google. You can put them in the library will work.

TURN servers have credential (login and password). Most likely, you will have to raise your own, it is quite difficult to find free.

Examples of free STUN servers from Google:

- stun: stun.l.google.com: 19302

- stun: stun1.l.google.com: 19302

- stun: stun2.l.google.com: 19302

- stun: stun3.l.google.com: 19302

And the free TURN server with passwords: url: 'turn: 192.158.29.39: 3478? Transport = udp', credential: 'JZEOEt2V3Qb0y27GRntt2u2PAYA =', username: '28224511: 1379330808 ′.

We use coturn .

As a result, 34% of traffic passes through the p2p connection, everything else is proxied through the TURN server.

What else is interesting about the STUN protocol?

STUN allows you to determine the type of NAT.

Link on the slide

When sending a packet, you can indicate that you want to receive a response from the same port or ask STUN to respond from another port, from another IP, or from another IP and port in general. Thus, for 4 requests to the STUN-server, you can determine the type of NAT .

We considered NAT types and got that almost all users have either Symmetric NAT or Port restricted cone NAT. Hence, it turns out that only a third of users can establish a p2p connection.

You may ask why I am telling all this, if you could just take STUN from Google, stick it in WebRTC, and it seems like everything will work.

Because you can actually define the type of NAT yourself.

This is a link to a Java application that doesn't do anything tricky: it just pings different ports and different STUN servers, and looks at what port it sees in the end. If you have an open Full cone NAT, then the STUN server answers will have the same port. With Restricted cone NAT, you will receive different ports for each STUN request.

With Symmetric NAT, my office turns out like this. There are completely different ports.

But sometimes there is an interesting pattern that for each connection the port number is increased by one.

That is, many NATs are configured so that they increase or decrease the port by a constant. You can find this constant and thus break through Symmetric NAT.

Thus, we break through NAT - we go to one STUN-server, to another, we look at the difference, compare and try again to give our port with this increment or decrement. That is, Alice is trying to give Bob his port, which has already been adjusted for a constant, knowing that next time it will be exactly like that.

So we managed to make another 12% peer-to-peer .

In fact, sometimes external routers with the same IP behave the same way. Therefore, if you compile statistics and if Symmetric NAT is a feature of the provider, and not a feature of the user's Wi-Fi router, then the delta can be predicted, immediately sent to the user, so that he uses it and does not spend too much time on its determination.

CDN Relay or what to do if a p2p connection failed

If we still use the TURN server and work not in p2p, but in real mode, transferring all the traffic through the server, we can also add a CDN. Unless, of course, you have a playground. We have our own CDN sites, so for us it was quite simple. But it was necessary to determine where it is better to send a person: to the CDN site or, say, to the channel to Moscow. This is not a very trivial task, so we did this:

- The Moscow sites were randomly given out to some users, some to the remote ones.

- Collected statistics on user's IP, on servers and on network characteristics.

- By maxMind, we grouped the subnets, looked at the statistics, and were able to understand by IP what the nearest TURN server was for the user to connect to.

There is a CDN in Novosibirsk. If everything works for you through Moscow, then 99 percentile RTT - 1.3 seconds. Through the CDN, everything works much faster (0.4 seconds).

Is it always better to use a p2p connection and not use a server? An interesting example is the two Krasnoyarsk providers Optibyte and Mobra (perhaps the names have been changed). For some reason, the connection between them on p2p is much worse than through MSK. Probably, they are not friends with each other.

We analyzed all such cases, randomly sending users to p2p or via MSK, collected statistics and built predictions. We know that statistics need to be updated, so we specifically set up different connections for some users to check if something has changed in the networks.

We measured such simple characteristics as round time, packet loss, bandwidth - it remains to learn how to compare them correctly.

How to understand which is better: 2 Mbit / s of the Internet, 400 ms RTT and 5% packet Loss or 100 Kbit / s, 100 ms delay and meager packet loss?

There is no exact answer, the video call quality assessment is very subjective. Therefore, after the end of the call, we asked users to evaluate the quality in asterisks and set up constants based on the results. It turned out that, for example, RTT is less than 300 ms - it does not matter anymore, bitrate is more important.

Higher average user ratings on Android and iOS. It can be seen that iOS users often put the unit and more often the top five. I do not know why, probably, the specificity of the platform. But with them we adjusted the constants so that we have, as it seems to us, good.

Back to our article plan, we are still discussing the network.

What is the connection setup?

Sent to PeerConnection () STUN and TURN servers, a connection is being established. Alice finds out her IP, sends it to signaling; Bob learns about Alice's IP. Alice gets Bob's IP. They exchange packets, perhaps ping NAT, perhaps set TURN and communicate.

In the 5 steps of the connection setup, which we discussed earlier, we sorted out the servers, understood where to get them, and that the ICE candidates are external IP addresses that we exchange via signaling. The internal IP addresses of clients, if they are within the range of one Wi-Fi, you can also try to punch.

We turn to the video.

Video and audio

WebRTC supports a certain set of video and audio codecs, but you can add your own codec there. Basicly supported are H.264 and VP8 for video . VP8 is a software codec, so it consumes a lot of battery. H.264 is not available on all devices (usually it is native), so the default priority is set to VP8.

Inside SDP (Session Description Protocol) there is a codec negotiation: when one client sends a list of their codecs, another - their own with priority, and they agree on which codecs they will use to communicate. If desired, you can change the priority of the VP8 and H.264 codecs, and thus you can save battery on some devices, where 264 is native. Here is an example of how this can be done. We did it, it seemed to us that users did not complain about the quality, but at the same time, the battery charge is much less.

For audio, WebRTC has OPUS or G711 , usually all OPUS always works, you don’t need to do anything with it.

Lower temperature measurements after 10 minutes of use.

It is clear that we tested different devices. This is an example of an iPhone, and on it, the OK application spends the least battery on it, because the temperature of the device is the least.

The second thing you can turn on if you use WebRTC is to automatically turn off the video during a very bad connection .

If you have less than 40 Kbps, the video will turn off. You just need to check the box when creating a connection, the threshold value can be configured through the interface. You can also set the minimum and maximum starting current bitrate.

This is a very useful thing. If you set up a connection and you know in advance what bitrate you expect, you can transfer it, the call will start from it, and you will not need to adapt the bitrate. Plus, if you know that you often have packet loss or bandwidth subsidence on your channel, then the maximum value can also be limited.

WhatsApp works with a very soapy video, but with small delays, because the bitrate is aggressively pressing upward.

We collected statistics with the help of MaxMind and mapped it.

This is an approximate starting quality that we use for calls in different regions of Russia.

Signaling

You will most likely have to write this part if you want to make calls. There are all sorts of pitfalls. Recall what it looks like.

There is an application with signaling that connects and exchanges with SDP, and the SDP below is an interface to WebRTC.

This is simple signaling:

Alice calls Bob. It connects, for example, via a web-socket connection. Bob gets a push to his mobile phone or to the browser, or to some kind of open connection, connects via web-socket and after that he starts to ring the phone in his pocket. Bob picks up the phone, Alice sends him his codecs and other features of WebRTC that she supports. Bob answers her the same way, and after that they exchange the candidates they see. Hooray, call!

It all looks pretty long. First, until you set up a web-socket connection, until a push comes in and everything else, Bob will not ring the phone in his pocket. Alice will be waiting all the time, thinking where is Bob, why he doesn’t pick up the phone. After confirmation, it all takes seconds, and even on good connections it can be 3-5 seconds, and on bad connections, all 10.

We must do something about it! You will tell me that everything can be done very simply.

If you already have an open connection to your application, you can immediately send a push to establish a connection, connect to the signaling server you need and immediately start calling.

Then another optimization. Even if the phone is still ringing in your pocket and you didn’t pick up the phone, you can actually exchange information about supported codecs, external IP addresses, start sending empty video packets, and in general everything will be warm with you. As soon as you pick up the phone, everything will be great.

We did it, and it seemed that everything was cool. But no.

The first problem - users often cancel the call. They click "Call" and immediately make the cancellation. Accordingly, the push goes to the call, and the user disappears (the Internet or something else disappears from him). In the meantime, someone has a phone ringing, he picks up the phone, and they don’t wait for him there. Therefore, our primitive optimization in order to start calling as soon as possible does not really work.

With a quick cancel call there is a second harmful thing. If you generate your conversation ID on the server, then you need to wait for a response. That is, you create a call, get an ID, and only after that you can do whatever you want: send packets, exchange, including, cancel the call. This is a very bad story, because it turns out that as long as your response has not come, you cannot actually cancel anything from the client. Therefore, it is best to generate some kind of ID on a client like GUID and say that you started the call. People still often do this: he called, canceled and immediately called again. To avoid confusion, make a GUID and send it.

It seems to be nothing, but there is another problem. If Bob has two phones, or somewhere else the browser remains open, then our entire magic scheme in order to exchange packets, establish a connection, does not work if he suddenly responded from another device.

What to do? Let us return to our basic simple signaling slow scheme and optimize it, send a push a little bit earlier. The user will start to connect faster, but it will save some pennies.

What to do with the longest part after he picked up the phone and started the exchange?

You can do the following. It’s clear that Alice already knows all her codecs and can send them to both Bob’s phones. She can cut all her IP addresses and send them to the signaling too, which will keep them in her queue, but will not send to any of the clients so that they start to establish a connection with her ahead of time.

What can bob?Having received the offer, he can see what codecs were there, generate his own, write what he has, and send it too. But Bob has two phones, and there are different codec negotiation, so signaling will keep it all on himself and will keep in line until he finds out on which device he picked up the phone. Candidates of their own will also generate both devices and send them to signaling.

So signaling ends up with one message queue from Alice and several message queues from Bob, one per device. It stores all of them. As soon as Bob picks up on one of the devices, it simply forwards the whole set of already prepared packets.
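The buffering described above can be sketched as follows. This is a hypothetical in-memory model, not the production signaling code. It keeps Alice's held-back messages in one queue and one queue per Bob device, and releases the right pair when Bob answers.

```python
from collections import defaultdict


class PendingCall:
    """Signaling-side buffers for one call that has not been answered yet."""

    def __init__(self):
        self.from_caller = []                 # Alice's candidates, held back
        self.from_callee = defaultdict(list)  # Bob's device -> its answer and candidates
        self.answered_by = None

    def on_caller_message(self, msg):
        self.from_caller.append(msg)

    def on_callee_message(self, device, msg):
        self.from_callee[device].append(msg)

    def pick_up(self, device):
        """Bob answered on `device`; return (messages for that device, messages for Alice)."""
        self.answered_by = device
        to_callee = list(self.from_caller)
        to_caller = list(self.from_callee[device])
        # Whatever the other devices prepared is discarded.
        self.from_callee.clear()
        return to_callee, to_caller


call = PendingCall()
call.on_caller_message({"type": "candidate", "ip": "203.0.113.5"})
call.on_callee_message("bob-phone", {"type": "answer", "sdp": "sdp-phone"})
call.on_callee_message("bob-tablet", {"type": "answer", "sdp": "sdp-tablet"})
to_bob, to_alice = call.pick_up("bob-tablet")
```

Each side receives a single batch of already prepared messages, so the connection can start as soon as the answering device is known.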

This works quite fast. With this algorithm, our connection setup reached characteristics similar to Google Duo and WhatsApp.

Perhaps you can come up with something better. For example, instead of keeping several queues on signaling, you could send all of them to the client and then just tell it which one to use. Most likely, though, the gain would be very small. We decided to stop here.

What other problems await you?

There is also the counter call: one user calls the other while the other is calling back. It is best not to let the two calls compete. Add a command at the signaling level so that whoever came second switches into a mode where the incoming call is simply accepted and picked up immediately.
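One way to express the counter-call rule at the signaling level is sketched below, assuming a hypothetical `CallRegistry` that tracks ringing calls per (caller, callee) pair. When the second call arrives in the opposite direction, signaling tells the second caller to accept the first call instead of starting a competing one.

```python
class CallRegistry:
    """Tracks ringing calls per (caller, callee) pair (hypothetical signaling state)."""

    def __init__(self):
        self.ringing = {}  # (caller, callee) -> call_id

    def place_call(self, caller, callee, call_id):
        existing = self.ringing.pop((callee, caller), None)
        if existing is not None:
            # Counter call: the callee is already calling us. Instead of two
            # competing calls, the second caller simply accepts the first one.
            return {"action": "accept", "call_id": existing}
        self.ringing[(caller, callee)] = call_id
        return {"action": "ring", "call_id": call_id}


registry = CallRegistry()
registry.place_call("alice", "bob", "c1")  # -> {"action": "ring", "call_id": "c1"}
registry.place_call("bob", "alice", "c2")  # -> {"action": "accept", "call_id": "c1"}
```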

Sometimes the network disappears and messages get lost, so everything has to go through queues. You need a send queue on the client. A message sent from the client is removed from the queue only after the server confirms that it has processed it. The server also has a send queue, likewise with confirmations.

Here is how this is implemented on our side. We run a 24/7 service, and we want to be able to lose data centers, move traffic between them, and roll out new versions of our software.

The slide includes links to the video and to the text version.

Clients connect via WebSocket to a load balancer, which forwards them to signaling servers in different data centers, so different clients may end up on different servers. We run leader election in ZooKeeper to determine which signaling server currently manages a given conversation. A server that is not the leader for that conversation simply passes all its messages on to the leader.
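A minimal sketch of the "forward to the leader" rule. A plain dict stands in for the per-conversation leader record that ZooKeeper leader election would maintain, and another dict stands in for the network. `SignalingServer` and the server names are hypothetical.

```python
class SignalingServer:
    """One signaling node. `leaders` stands in for the leader record that
    ZooKeeper leader election maintains; `cluster` for the network."""

    def __init__(self, name, leaders, cluster):
        self.name = name
        self.leaders = leaders   # conversation_id -> name of the leading server
        self.cluster = cluster   # server name -> SignalingServer
        self.handled = []
        cluster[name] = self

    def receive(self, conversation_id, msg):
        leader = self.leaders[conversation_id]
        if leader != self.name:
            # Not the leader for this conversation: pass the message on unchanged.
            return self.cluster[leader].receive(conversation_id, msg)
        self.handled.append((conversation_id, msg))
        return self.name


leaders, cluster = {"call-42": "dc2-sig1"}, {}
dc1 = SignalingServer("dc1-sig1", leaders, cluster)
dc2 = SignalingServer("dc2-sig1", leaders, cluster)
# Alice's WebSocket landed in dc1, but dc2 leads this conversation.
handled_by = dc1.receive("call-42", {"type": "candidate"})
```

If the leader record changes after a failover, the same code starts routing to the new leader without any client-side change.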

Next, we use distributed storage. In our case it is a NewSQL layer on top of Cassandra, but what you use does not really matter. You can save the state of all the signaling queues anywhere. Then, if a signaling server goes down, loses power, or something else happens, ZooKeeper leader election kicks in and another server becomes the leader. It restores all the queues from the database and starts sending messages.
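The failover path can be sketched as follows, with an in-memory `QueueStore` standing in for the distributed database. Both classes are hypothetical. Every queued message is persisted before it is kept in memory and deleted once delivered, so a newly elected leader can rebuild the pending queue from the store alone.

```python
class QueueStore:
    """Stand-in for the distributed database (NewSQL over Cassandra in the talk)."""

    def __init__(self):
        self.rows = {}  # conversation_id -> {seq: (recipient, msg)}

    def put(self, conversation_id, seq, recipient, msg):
        self.rows.setdefault(conversation_id, {})[seq] = (recipient, msg)

    def delete(self, conversation_id, seq):
        self.rows.get(conversation_id, {}).pop(seq, None)

    def load(self, conversation_id):
        return sorted(self.rows.get(conversation_id, {}).items())


class SignalingLeader:
    def __init__(self, store):
        self.store = store
        self.queues = {}  # in-memory copy of the pending messages

    def enqueue(self, conversation_id, seq, recipient, msg):
        # Persist before keeping it in memory, so a crash cannot lose it.
        self.store.put(conversation_id, seq, recipient, msg)
        self.queues.setdefault(conversation_id, {})[seq] = (recipient, msg)

    def on_delivered(self, conversation_id, seq):
        # The recipient confirmed the message: remove it everywhere.
        self.store.delete(conversation_id, seq)
        self.queues.get(conversation_id, {}).pop(seq, None)

    def take_over(self, conversation_id):
        # Called on the server that has just won leader election.
        self.queues[conversation_id] = dict(self.store.load(conversation_id))
        return self.store.load(conversation_id)


store = QueueStore()
old = SignalingLeader(store)
old.enqueue("call-42", 1, "bob", {"type": "offer"})
old.enqueue("call-42", 2, "bob", {"type": "candidate"})
old.on_delivered("call-42", 1)
# The old leader dies here; a new one is elected and reloads the queue.
new = SignalingLeader(store)
pending = new.take_over("call-42")
```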

The algorithm looks like this:

- The client sends a message, say, its external IP, to signaling.

- Signaling accepts it and writes it to the database.

- Once the write has succeeded, signaling replies that it has received the message.

- The client removes the message from its queue.

Every message carries a unique number, so that nothing gets mixed up.
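The four steps and the message numbering can be sketched for a single client, assuming hypothetical `ClientOutbox` and `SignalingInbox` classes, with a dict standing in for the database write. The client drops a message only when its number is acknowledged. The server stores a retransmitted number once but acknowledges it again.

```python
import itertools


class ClientOutbox:
    """Client send queue: a message leaves it only after the server acks it."""

    def __init__(self):
        self._next_seq = itertools.count(1)
        self.unacked = {}  # seq -> message

    def send(self, msg):
        seq = next(self._next_seq)
        self.unacked[seq] = msg
        return seq, msg

    def on_ack(self, seq):
        self.unacked.pop(seq, None)

    def resend_all(self):
        # After a reconnect, everything still unconfirmed goes out again, in order.
        return sorted(self.unacked.items())


class SignalingInbox:
    """Server side for one client: write to the database, then acknowledge."""

    def __init__(self):
        self.db = {}  # seq -> message; stand-in for the database write

    def receive(self, seq, msg):
        # A retransmitted seq is not stored twice, but it is acknowledged again.
        self.db.setdefault(seq, msg)
        return seq  # the acknowledgement carries the message number


client, server = ClientOutbox(), SignalingInbox()
lost = client.send({"type": "candidate", "ip": "203.0.113.5"})  # never arrives
delivered = client.send({"type": "candidate", "ip": "198.51.100.7"})
client.on_ack(server.receive(*delivered))
for seq, msg in client.resend_all():  # reconnect: retry what is left
    client.on_ack(server.receive(seq, msg))
```

The same pattern applies in the other direction, for the server's own send queue.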

On the database side, we use an add-on over Cassandra that allows transactions on top of it (the video covers exactly that).

So you have learned:

- what iceServers are and how to pass them;

- what the Session Description Protocol is;

- that an SDP must be generated and sent to the other side;

- that it must be received from signaling and passed to WebRTC on the other side, and that external IP addresses must be exchanged;

- and then you can start streaming video!

We got:

- call setup delay below the market average;

- phones that do not heat up much;

- response time in our application among the best.

Great!

Security: man-in-the-middle attacks on WebRTC

Let's talk about man-in-the-middle attacks on WebRTC. WebRTC is actually a very complicated protocol, partly because it is built on RTP, which dates back to 1996, and on SDP, which came over from SIP in 1998.

The huge list on the slide is a pile of RFCs and other RTP extensions that turn RTP into WebRTC.

The first two entries on the list are interesting RFCs. One adds audio levels to packets, and the other says that transmitting audio levels in the clear is unsafe and encrypts them. So when you exchange SDP, you need to know which set of extensions each client supports. There are even several congestion control algorithms, several algorithms for recovering lost packets, and so on.
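The extensions a client supports are announced in `a=extmap` lines of its SDP. The two RFCs described above match RFC 6464 (audio level header extension) and RFC 6904 (encrypted header extensions), and we assume those are the ones meant. Below is a simplified sketch that reads the extmap lines of an offer and an answer and intersects them. It ignores media sections and real negotiation rules.

```python
ENCRYPT_URI = "urn:ietf:params:rtp-hdrext:encrypt"  # RFC 6904 prefix


def parse_extmaps(sdp):
    """Return {extension URI: (id, encrypted)} from the a=extmap lines of an SDP.

    Simplification: media sections (m= lines) are ignored and everything
    is collected into one dict.
    """
    exts = {}
    for line in sdp.splitlines():
        if not line.startswith("a=extmap:"):
            continue
        # a=extmap:<id>[/<direction>] [encrypt-URI] <URI> [attributes]
        tokens = line[len("a=extmap:"):].split()
        ext_id = int(tokens[0].split("/")[0])
        encrypted = tokens[1] == ENCRYPT_URI
        uri = tokens[2] if encrypted else tokens[1]
        exts[uri] = (ext_id, encrypted)
    return exts


def common_extensions(offer_sdp, answer_sdp):
    """Header extensions both sides list, i.e. the ones safe to use."""
    return sorted(parse_extmaps(offer_sdp).keys() & parse_extmaps(answer_sdp).keys())


AUDIO_LEVEL = "urn:ietf:params:rtp-hdrext:ssrc-audio-level"  # RFC 6464
offer = "\r\n".join([
    "m=audio 9 UDP/TLS/RTP/SAVPF 111",
    f"a=extmap:1 {ENCRYPT_URI} {AUDIO_LEVEL}",
    "a=extmap:2 urn:ietf:params:rtp-hdrext:toffset",
])
answer = "\r\n".join([
    "m=audio 9 UDP/TLS/RTP/SAVPF 111",
    f"a=extmap:1 {ENCRYPT_URI} {AUDIO_LEVEL}",
])
```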

WebRTC has a complicated history. The first draft was published in 2011. Firefox supported the protocol in 2013, then it began to be built for iOS/Android, and Opera added it in 2014. It has been developing for many years, but it still does not solve one interesting problem.

When Alice and Bob connect to signaling, they use this channel to perform a DTLS handshake and establish a secure connection. All good, but if the signaling is not ours, a man in the middle can, in principle, perform a separate handshake with both Alice and Bob, relay all the traffic, and listen in on everything.

If your service needs high trust, you should of course use HTTPS, WSS, and so on. There is another interesting solution, ZRTP, which is used, for example, by Telegram.

Many people have seen the emoji Telegram shows when a call connects, but few actually use them. If you tell your friend which emoji you see and they confirm they see exactly the same ones, you can be sure your p2p connection is secure.

How does it work?

Under the hood, all of these protocols start with the usual Diffie-Hellman algorithm. Alice generates a few numbers and sends all of them except one secret number to Bob. Bob also generates a random secret number and sends Alice a value derived from it. As a result of this exchange, Alice and Bob obtain the same large number K. A man in the middle who listened to the whole channel cannot compute it.
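A toy Diffie-Hellman exchange makes the paragraph above concrete. Alice's public numbers are the modulus `P`, the base `G`, and `G^a mod P`, while the exponent `a` stays secret. The parameters here (the Mersenne prime 2**127 - 1 and base 3) are chosen only for readability and are far too weak for real use. DTLS and ZRTP use standardized groups or elliptic curves.

```python
import secrets

# Toy public parameters, for illustration only: not a secure group.
P = 2**127 - 1
G = 3


def make_keypair():
    secret = secrets.randbelow(P - 3) + 2   # the one number that is never sent
    public = pow(G, secret, P)              # sent in the clear along with P and G
    return secret, public


def shared_key(my_secret, their_public):
    # (G^b)^a mod P == (G^a)^b mod P, so both sides get the same K.
    return pow(their_public, my_secret, P)


alice_secret, alice_public = make_keypair()
bob_secret, bob_public = make_keypair()
k_alice = shared_key(alice_secret, bob_public)
k_bob = shared_key(bob_secret, alice_public)
```

An eavesdropper sees `P`, `G`, `alice_public`, and `bob_public`. Recovering K from those is the Diffie-Hellman problem, which is believed to be hard for properly sized groups.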

When Dave sits between Alice and Bob, each of them runs the same key exchange with him, and they end up with K1 and K2, respectively. Neither Alice nor Bob can detect the person in the middle directly. So the following trick is used. Dave's keys K1 and K2 will almost certainly differ, because Alice and Bob generate their secrets randomly. Each side takes a hash of its key and displays it as emoji (an apple, a pear, anything at all), and the users name the pictures they see out loud. They recognize each other's voices, so if the pictures differ, someone is sitting between them and may be listening.
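Here is a sketch of the emoji check under a man-in-the-middle attack. It uses toy Diffie-Hellman parameters (2**127 - 1, base 3) and an arbitrary 16-emoji table with SHA-256, all assumptions for illustration. Telegram's actual emoji set and hashing differ, and ZRTP itself defines its own short authentication string (SAS) rendering. Small fixed secrets keep the demo reproducible. Real secrets are random.

```python
import hashlib

# Toy public parameters, for illustration only: not a secure group.
P = 2**127 - 1
G = 3
EMOJI = ["🍎", "🍐", "🍌", "🍇", "🐱", "🐶", "🚗", "🎸",
         "🎩", "🌵", "🔑", "🐢", "🚀", "🌙", "🍄", "🎲"]


def public(secret):
    return pow(G, secret, P)


def shared_key(my_secret, their_public):
    return pow(their_public, my_secret, P)


def emoji_fingerprint(key, n=4):
    """Hash the shared key and render the first n bytes as pictures."""
    digest = hashlib.sha256(key.to_bytes(16, "big")).digest()
    return [EMOJI[b % len(EMOJI)] for b in digest[:n]]


# Dave sits in the middle and runs one exchange with each side.
alice, bob, dave_1, dave_2 = 5, 13, 7, 11      # fixed toy secrets
k1 = shared_key(alice, public(dave_1))          # what Alice ends up with
k2 = shared_key(bob, public(dave_2))            # what Bob ends up with
assert k1 == shared_key(dave_1, public(alice))  # Dave knows K1 ...
assert k2 == shared_key(dave_2, public(bob))    # ... and K2, so he can relay and listen
alice_sees = emoji_fingerprint(k1)              # read aloud and compared:
bob_sees = emoji_fingerprint(k2)                # a mismatch reveals Dave
```

With 4 pictures out of 16, a random mismatch goes unnoticed with probability 1/65536. Real schemes tune the length of the displayed string for this trade-off.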

Results

- We learned to determine the NAT type and to punch through symmetric NAT.

- We estimated statistically which gives better quality: p2p, relay, or CDN. We also improved the star ratings that users give to call quality.

- We changed codec priorities and saved a bit of battery.

- We minimized signaling delay.

The graph shows the old RTMFP calls first. After we switched to WebRTC there is a small dip, and then the curve goes up. Not everything worked right away! As a result, the number of completed calls has grown fourfold.

Simple instructions

If you do not need all of this, here are very simple instructions:

- download the WebRTC native code ( https://webrtc.org/native-code/development/ ) and compile it for iOS / Android (browsers already have it built in);

- deploy coturn ( https://github.com/coturn/coturn );

- write your own signaling.
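When coturn is deployed, the signaling server usually also hands TURN credentials to clients as part of iceServers. Below is a sketch of time-limited credentials in the "TURN REST API" style that coturn verifies when run with `use-auth-secret` and `static-auth-secret`. The username is `expiry:user_id`, and the password is the Base64 of HMAC-SHA1 over it. The host name and secret are hypothetical.

```python
import base64
import hashlib
import hmac
import time


def turn_credentials(shared_secret, user_id, ttl_seconds=3600, now=None):
    """Time-limited TURN credentials in the "TURN REST API" style that coturn
    checks when run with use-auth-secret and static-auth-secret."""
    expiry = int(time.time() if now is None else now) + ttl_seconds
    username = f"{expiry}:{user_id}"
    digest = hmac.new(shared_secret.encode(), username.encode(), hashlib.sha1).digest()
    return username, base64.b64encode(digest).decode()


def ice_servers(turn_host, shared_secret, user_id):
    """iceServers entries in the shape RTCPeerConnection expects."""
    username, credential = turn_credentials(shared_secret, user_id)
    return [
        {"urls": f"stun:{turn_host}:3478"},
        {"urls": f"turn:{turn_host}:3478", "username": username, "credential": credential},
    ]


servers = ice_servers("turn.example.com", "change-me", "alice")
```

The secret never leaves the server side. Clients only see short-lived credentials, which coturn rejects once the embedded expiry time has passed.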

Calls will then work, and work pretty well.

Listen to the answers to the audience's questions after the talk.


Source: https://habr.com/ru/post/428217/
