Moral Machine: merciless or meaningless?

I decided to write this article in the wake of this post.

To recap briefly: the journal Nature published the results of a study conducted using this test.

What do I want to write about?

First, why this study, in the form in which it was carried out, is completely useless for solving the very problem it sets out to solve.

Second, what priorities such a study should have set.

Third, I will try to simulate various accident scenarios within the conditions specified by the test.

It is a pity that the author of that post did not link to the test right at the beginning: that would have helped avoid meaningless comments from readers who missed the original point of the study.

Please take the test at least a couple of times to get a feel for the subject.

What the study promised to show

The discussion of the first post, which turned epic by Habr standards, showed that people en masse cannot reason within the given conditions and instead start to fantasize: "Why do people have to die in the test? After all, you could swerve around both the pedestrians and the block / scrape sideways along the crash barrier / brake with the handbrake / downshift? Now if it were me...". Understand: this is a simplified example for developing action algorithms and policies that govern an autopilot's behavior on the road! From the standpoint of developing safety rules, such extremes and simplifications are entirely justified. We are talking about potential consequences that people should be prepared for. Soldiers stay out of minefields not because every square centimeter is mined, but because of the mere probability of dying. That probability is usually not very high, but nobody will take the risk. Therefore the test's choice between the certain death of all passengers or all pedestrians is adequate: this is how we designate risk, bearing in mind that risking lives is unacceptable in our society.

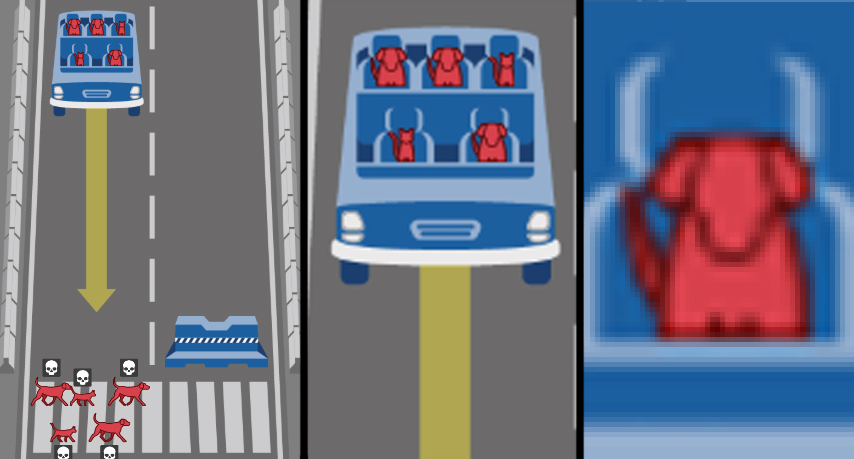

The message of the research is this: you, the people living now, will have to live in a future world full of self-driving cars. And for us, the developers of cars, AI and so on, it is important to know: how do you think robot cars should behave? Yes, yes, you: tell us what the robot should do if it carries an executive and a cat and is about to run over a homeless man and a dog? What ethics should robocars have when they have to choose?

Having outlined the original motive of the study, I want to discuss several of its aspects.

What the study actually showed

The first thing that clearly catches the eye is that the design of the study does not serve its stated goal. Tasks in the form of dilemmas are not suitable for creating the "ethics" of a robot car's behavior. Or rather, not all of them are. Here are the dilemmas that do fit the task of "developing rules of robot car behavior to reduce the severity of an accident":

- "Passenger / pedestrian" - choose whom to save;

- "Violation of traffic rules" - choose whether to sacrifice pedestrians who break the rules;

- "Number of potential victims" - choose whether the number of victims takes priority.

Then come the parameters that, as it turns out, matter a great deal when passing sentence in our civilized society. People were asked, honestly and innocently: what is your level of sexism, lookism, ageism? Are you in favor of discriminating against fat people or social outcasts? And hundreds of thousands of people answered honestly...

Bravo!

Right in the best traditions of entertainment films and TV shows, where the world of the main characters turns out to be a big sandbox behind a high fence and their behavior is the actual experiment! Under the guise of super-important research in robotics and AI, sociologists, psychologists and cultural scientists have obtained a sample on the trolley problem more powerful than anyone had ever dreamed of! Well, at least they did not add skin color to the survey, otherwise the racist undertones of the study would be showing, white on black... oops.

Seriously, this gender-and-phenotype part of the study can be cut off with categorical arguments. The first argument is humanism: as civilized people, we must put the value of human life above any individual differences. That is, the question itself is outrageous, because it is discriminatory. The second argument: accidents happen in enormous numbers, and in the limit the distribution of victims by appearance, education, sex and age will tend toward their proportions in society, so regulating it additionally is strange, to say the least. The third argument: it hardly seems expedient to create an artificial intelligence that tells a Brioni suit from a Bershka pullover in order to decide whether a person is of high status and whether it is worth running him over. Moreover, I would not trust an AI to judge whether a pedestrian is homeless or a scientist (greetings to the hairstyles of the respected scientists Perelman and Gelfand :)).

Besides these unnecessary parameters, we can easily discard the remaining two: species and intervention. Yes, we will run over small animals to save people; who would have thought. As for the "intervention / non-intervention" parameter, i.e. whether to maneuver: this essential part of the trolley problem simply does not constrain a car, because the machine acts not according to ethics but according to the algorithms built into it. And since we set the task as "how should a car act in an accident in which people are run over", the very wording assumes that we must act somehow. Rail transport already copes with straight-line collisions nowadays; we are developing a policy to minimize the victims of road accidents.

So we have separated the rational kernels of the research from the socio-psychological experiment on the level of intolerance in world society. We continue with the first three dilemmas mentioned above. And there is plenty to take apart: on closer inspection, they turn out to be incomplete...

Three dilemmas of robot cars

Pedestrians breaking traffic rules. Here most respondents show either outright social Darwinism or tacit, regretful agreement: the violators should rather be sacrificed for the innocent. Sensible people do not walk on railway tracks, knowing the train will not stop, so let them know the robocar will not stop either. It is all logical, if cynical. But this tricky dilemma hides one-sidedness and incompleteness. Pedestrians breaking the rules is just one of many possible situations. What if the robocar is the one breaking them? This situation was not considered, although in theory it (the car's cameras missing a sign or a traffic light) is much more likely than a sudden brake failure. But I am drifting into fantasies and particulars again. What matters more is that the test should simply show the situation with equal reciprocity. That is, imagine this: if pedestrians break the rules, they die, and that is "logical" and "right"; and if the robocar breaks them, does it have to commit suicide for its mistake? Do not forget that the executive, the tramp and the cat sitting inside die along with it! This is an important correction, and the test lacks this aspect.
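The test only lets pedestrians break the rules. A symmetric variant, in which the robocar may also be the violator, can be sketched in the same spirit as the simulation later in this article. Everything here is an assumption for illustration: the function `simulate_reciprocal`, the violation probabilities, and the tie-breaking when both or neither side broke the rules (falling back to "save the larger group" with a strict `>`, as in the first simulation).

```python
import random


def simulate_reciprocal(n_accidents, p_ped_violates, p_car_violates, seed=None):
    """Hypothetical symmetric rule: whoever broke the traffic rules bears the risk.

    - only the pedestrians violated -> the pedestrians die
    - only the car violated         -> the passengers die
    - both or neither violated      -> assumed fallback: save the larger group
    Returns (share of pedestrians killed, share of passengers killed).
    """
    rng = random.Random(seed)  # seeded generator so runs are reproducible
    dead_ped = dead_pass = total_ped = total_pass = 0
    for _ in range(n_accidents):
        nped = rng.randint(1, 5)   # 1 to 5 pedestrians, as in the test
        npass = rng.randint(1, 5)  # 1 to 5 passengers
        total_ped += nped
        total_pass += npass
        ped_violates = rng.random() < p_ped_violates
        car_violates = rng.random() < p_car_violates
        if ped_violates and not car_violates:
            dead_ped += nped
        elif car_violates and not ped_violates:
            dead_pass += npass
        elif nped > npass:  # fallback: passengers die only if pedestrians outnumber them
            dead_pass += npass
        else:
            dead_ped += nped
    return dead_ped / total_ped, dead_pass / total_pass


# Usage: both sides violate half the time (an arbitrary, illustrative choice).
print(simulate_reciprocal(10_000, 0.5, 0.5, seed=1))
```

Setting `p_car_violates=0` reproduces the one-sided setup of the test; raising it shows how much of the risk shifts back onto the passengers once the car can also be at fault.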

Next: the number of potential victims. This is not so simple either. We discard gender stereotypes, respect for old age, contempt for fat and homeless people, and assume that people die in accidents in proportion to how often they occur in the population. Now decide: is it better for three to die than for five? Sounds logical? Fine. Let's take this to the point of absurdity; it is an abstract simulation anyway. Which is better: to kill 50 people or 51? 1051 or 1052? Not so important anymore? Then which is better: to kill 1 pedestrian or 50 people on a bus? Now it matters again? So where is the boundary? Is every extra person valuable? Does it still matter when thousands will die in road accidents in the future anyway? As with appearance, in reality an adequate AI estimate of the number of potential victims would be an extremely difficult task. The only thing that makes sense is a rule of non-intervention (no maneuvering) when the number of victims is the same.

The third aspect caused a lot of controversy in the comments to that first article on Habr. Judging by the results of the study, it is also quite ambiguous for society, and here lies the main problem that needs to be solved: whom to put at risk, pedestrians or passengers?
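The non-intervention rule for equal victim counts fits in a few lines. This is a hypothetical sketch: `choose_maneuver` is an illustrative name, it combines the "fewer victims" priority used in the simulation below with the tie rule, and it assumes both victim counts are already known, which, as noted, is exactly the hard part in reality.

```python
def choose_maneuver(victims_if_straight: int, victims_if_swerve: int) -> str:
    """Hypothetical rule: swerve only if it strictly reduces the number of victims.

    On a tie the car does not intervene and keeps its course.
    """
    if victims_if_swerve < victims_if_straight:
        return "swerve"
    return "straight"


print(choose_maneuver(5, 3))   # swerve
print(choose_maneuver(3, 3))   # straight: equal counts, no maneuver
print(choose_maneuver(1, 50))  # straight
```

The strict `<` is the whole design choice: it is what turns "save more lives" into "do not maneuver unless it saves at least one more life".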

Some say that pedestrians are not to blame for anything, which means they should be saved first. Caring for pedestrians is generally fashionable among urbanists now: turning whole streets into pedestrian zones, building crossings with traffic lights every 50 meters, lowering speed limits in the center, giving pedestrians priority. So they have to be protected somehow, and a robotic car hurtling without brakes toward a crowd should self-destruct to save the most vulnerable road users. In my view they are right about one thing: pedestrians never signed up for the behavior rules of someone else's robot car. They may have been against introducing such cars altogether. Meanwhile, it is impossible to imagine a passenger of such a car who has not agreed to the conditions of its use. So the situation is a conflict of interest: "It is more convenient for me to kill you, so I will kill you," as one person says to another.

Others say that everyone who buys, or simply gets into, a robotic car should be guaranteed that it will save them in an accident rather than kill them to save a doctor, a child or two cats crossing the road. On the one hand, this looks logical and justified; on the other, the passenger in this case deliberately puts his own life above everyone else's. When buying a car with an autopilot, every owner gets a perfect weapon: a silver bullet, an unguided missile, a machine that can quite legally kill anyone in its path.

Both sides keep invoking, foaming at the mouth, the "First Law of Robotics" borrowed from science fiction. It sounds demagogically beautiful, but nobody even tries to think it through or challenge it in relation to this problem. And it does not apply to this problem statement, because it substitutes one concept for another: the heuristics / AI of a machine do not choose between the values of human lives but act strictly according to algorithms built on subjective priorities invented by people. And here it makes absolutely no difference which social construct is taken as the priority when choosing "kill / spare": body mass, age and status, which we have already rejected, or the social Darwinist selfishness of the car's owner.

The second approach, being a one-sided encroachment on the lives of pedestrians, turns the study from a trolley problem into a classic prisoner's dilemma. If the parties reach a compromise, everyone can gain (robotic vehicles get introduced) with minimal losses for some (the number of inevitable deaths caused by robotic vehicles is minimized), which amounts to striving for a Pareto optimum. However, there is always an egoist who bets only on his own interests. "He will get 20 years, but I will go free." "He will die crossing the road, even though it was my car whose brakes failed." Perhaps this approach is justified when such events are rare and there are only two players. When there are tens or hundreds of thousands of players and trips happen daily, such a one-sided game turns into discrimination against pedestrians.
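The structure of the prisoner's dilemma behind this argument can be checked with a tiny payoff table. A hedged sketch: only the 0 and 20 years echo the quote in the text; the 1 and 5 years for mutual cooperation and mutual defection are hypothetical textbook-style values, and the function names are illustrative.

```python
# Years in prison for (my choice, the other prisoner's choice); lower is better.
# The 0 / 20 pair echoes "He will get 20 years, but I will go free";
# the 1 and 5 values are hypothetical, textbook-style numbers.
YEARS = {
    ("cooperate", "cooperate"): 1,
    ("cooperate", "defect"): 20,
    ("defect", "cooperate"): 0,
    ("defect", "defect"): 5,
}
CHOICES = ("cooperate", "defect")


def best_response(other_choice: str) -> str:
    """The choice that minimizes my own sentence, given the other player's choice."""
    return min(CHOICES, key=lambda mine: YEARS[(mine, other_choice)])


def outcome(a: str, b: str) -> tuple:
    """Sentences of both players when player A plays a and player B plays b."""
    return YEARS[(a, b)], YEARS[(b, a)]


# Defecting is the best response whatever the other player does...
print([best_response(c) for c in CHOICES])  # ['defect', 'defect']
# ...yet mutual cooperation leaves both better off than mutual defection.
print(outcome("cooperate", "cooperate"), outcome("defect", "defect"))  # (1, 1) (5, 5)
```

Each player's individually rational move leads both to 5 years instead of 1; that gap is what a compromise toward the Pareto optimum is meant to close.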

Personally, I believe that within the formulated task, the passenger / pedestrian dilemma leads to a dead end. A car that may kill its own occupants is absurd from a common-sense point of view and, naturally, will find no buyers on the market. A car that knowingly kills pedestrians is impossible in a civilized society, both as a form of discrimination in favor of passengers and as a threat to people's lives.

Let's go further. The article does not really discuss or outline a policy for minimizing the tragic consequences of accidents involving robot vehicles. The final data are split into "regions" that differ quite significantly in their priorities and are formed rather ambiguously (there are explanations about "religious characteristics and colonial influence", but... well, greetings to Iraq and Afghanistan in the "Western" sector and to France and the Czech Republic in the "Southern" one). And so the question is on the tip of one's tongue: will you build robocars with different "ethics" for each country?

In the discussion, the authors of the article name the three "basic building blocks" they identified: sparing humans (not animals), sparing more lives, and sparing younger lives. But the charts clearly show that people in the Eastern sector care little about either the number or the youth of the victims. So the priority policies chosen as a result will go against the opinion of the overwhelming majority of people there? Then why survey people at all?

Maybe just count?

But let's move on to the entertainment part of this post.

Instead of asking for robotics advice from people of different sociocultural backgrounds, 99% of whom probably have no relevant education, let's turn to an impartial tool. Take the dilemmas selected at the beginning of the article, build the simplest possible computer simulation under the test's conditions, and estimate the number of road users killed.

And remember: our task, as policymakers in transport safety, is to reduce the total number of victims. We will work within the framework and conventions of the original Moral Machine test, which focuses on the risk to the lives of those involved in a crash rather than on difficult realistic assessments of a car hitting an obstacle or people. We don't have EuroNCAP, but we do have Python.

First, let's write code for the dilemma "save the larger group". As in the Moral Machine test, we randomly generate from 1 to 5 passengers and from 1 to 5 pedestrians and set the condition: if pedestrians > passengers, the car immediately crashes itself into the concrete block. We run, say, 10,000 such accidents.

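```python
import random

npedtotal = 0   # total number of pedestrians in all accidents
npasstotal = 0  # total number of passengers in all accidents
ndeadped = 0    # pedestrians killed
ndeadpass = 0   # passengers killed

n = 0
while n < 10000:  # simulate 10,000 accidents
    # random number of pedestrians and passengers, from 1 to 5
    nped = random.randint(1, 5)
    npass = random.randint(1, 5)
    npedtotal += nped
    npasstotal += npass
    # if there are more pedestrians than passengers, the car crashes
    # into the concrete block and the passengers die;
    # otherwise the car keeps going and the pedestrians die
    if nped > npass:
        ndeadpass += npass
    else:
        ndeadped += nped
    n += 1

print("Total pedestrians:", npedtotal)
print("Total passengers:", npasstotal)
print("Pedestrians killed:", ndeadped, "(", 100 * ndeadped / npedtotal, "%)")
print("Passengers killed:", ndeadpass, "(", 100 * ndeadpass / npasstotal, "%)")
print("Total killed:", ndeadped + ndeadpass, "(",
      100 * (ndeadped + ndeadpass) / (npasstotal + npedtotal), "%)")
```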

```
Total pedestrians: 29960
Total passengers: 29924
Pedestrians killed: 13903 (46.4052069426 %)
Passengers killed: 8030 (26.8346477744 %)
Total killed: 21933 (36.6258098991 %)
```


Now replace the > comparison with >=, so that when the numbers of pedestrians and passengers are equal (==), the passengers die instead of the pedestrians:

```
Total pedestrians: 29981
Total passengers: 29865
Pedestrians killed: 7859 (26.2132684033 %)
Passengers killed: 14069 (47.1086556169 %)
Total killed: 21928 (36.6407111586 %)
```
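The simulated shares can be checked exactly, without random sampling. With 1 to 5 pedestrians and 1 to 5 passengers drawn uniformly and independently (as `random.randint(1, 5)` does), all 25 combinations are equally likely, so enumerating them gives the values the simulation converges to. A minimal sketch; `exact_rates` and its `passengers_die_on_tie` flag are hypothetical names introduced for illustration.

```python
from fractions import Fraction
from itertools import product


def exact_rates(passengers_die_on_tie: bool):
    """Exact shares killed under the "save the larger group" rule.

    Enumerates all 25 equally likely (pedestrians, passengers) pairs, 1..5 each.
    passengers_die_on_tie=False corresponds to '>', True to '>='.
    Returns (pedestrian share, passenger share, overall share) as fractions.
    """
    dead_ped = dead_pass = total_ped = total_pass = 0
    for nped, npass in product(range(1, 6), repeat=2):
        total_ped += nped
        total_pass += npass
        car_hits_block = nped >= npass if passengers_die_on_tie else nped > npass
        if car_hits_block:
            dead_pass += npass
        else:
            dead_ped += nped
    return (Fraction(dead_ped, total_ped),
            Fraction(dead_pass, total_pass),
            Fraction(dead_ped + dead_pass, total_ped + total_pass))


print(exact_rates(False))  # (Fraction(7, 15), Fraction(4, 15), Fraction(11, 30))
print(exact_rates(True))   # (Fraction(4, 15), Fraction(7, 15), Fraction(11, 30))
```

7/15, 4/15 and 11/30 are about 46.7 %, 26.7 % and 36.7 %, in line with both runs above. Switching between > and >= only swaps who dies; the overall share stays at 11/30.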


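Now let's add the traffic-rule dilemma: each accident gets a random traffic light, and when the pedestrians are crossing against the signal, they die whatever their number. Otherwise, the "save the larger group" rule applies as before: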

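```python
import random

npedtotal = 0   # total number of pedestrians in all accidents
npasstotal = 0  # total number of passengers in all accidents
ndeadped = 0    # pedestrians killed
ndeadpass = 0   # passengers killed

n = 0
while n < 10000:  # simulate 10,000 accidents
    # random number of pedestrians and passengers, from 1 to 5
    nped = random.randint(1, 5)
    npass = random.randint(1, 5)
    # random traffic light: 0 means the pedestrians are crossing against the signal
    trafficlight = random.randint(0, 1)
    npedtotal += nped
    npasstotal += npass
    if trafficlight == 0:
        # the pedestrians broke the rules, so they are not spared
        ndeadped += nped
    else:
        # otherwise save the larger group, as in the first simulation
        if nped > npass:
            ndeadpass += npass
        else:
            ndeadped += nped
    n += 1

print("Total pedestrians:", npedtotal)
print("Total passengers:", npasstotal)
print("Pedestrians killed:", ndeadped, "(", 100 * ndeadped / npedtotal, "%)")
print("Passengers killed:", ndeadpass, "(", 100 * ndeadpass / npasstotal, "%)")
print("Total killed:", ndeadped + ndeadpass, "(",
      100 * (ndeadped + ndeadpass) / (npasstotal + npedtotal), "%)")
```

Result with the ">" condition:

```
Total pedestrians: 29978
Total passengers: 29899
Pedestrians killed: 21869 (72.9501634532 %)
Passengers killed: 4042 (13.5188467842 %)
Total killed: 25911 (43.2737111078 %)
```

Result with the ">=" condition:

```
Total pedestrians: 30152
Total passengers: 30138
Pedestrians killed: 19297 (63.9990713717 %)
Passengers killed: 6780 (22.4965160263 %)
Total killed: 26077 (43.2526123735 %)
```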
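The same exact enumeration extends to the traffic-light version, assuming, as in the code above, that `trafficlight == 0` (probability 1/2) means the pedestrians die regardless of numbers. Again a hedged sketch; `exact_rates_with_light` is a hypothetical name introduced for illustration.

```python
from fractions import Fraction
from itertools import product


def exact_rates_with_light(passengers_die_on_tie: bool):
    """Exact shares killed when pedestrians crossing on red are never spared.

    Enumerates all 50 equally likely (pedestrians, passengers, light) triples:
    1..5 pedestrians, 1..5 passengers, light 0 (pedestrians violate) or 1.
    Returns (pedestrian share, passenger share, overall share) as fractions.
    """
    dead_ped = dead_pass = total_ped = total_pass = 0
    for nped, npass, light in product(range(1, 6), range(1, 6), (0, 1)):
        total_ped += nped
        total_pass += npass
        if light == 0:
            dead_ped += nped  # violators are sacrificed regardless of numbers
            continue
        car_hits_block = nped >= npass if passengers_die_on_tie else nped > npass
        if car_hits_block:
            dead_pass += npass
        else:
            dead_ped += nped
    return (Fraction(dead_ped, total_ped),
            Fraction(dead_pass, total_pass),
            Fraction(dead_ped + dead_pass, total_ped + total_pass))


print(exact_rates_with_light(False))  # (Fraction(11, 15), Fraction(2, 15), Fraction(13, 30))
print(exact_rates_with_light(True))   # (Fraction(19, 30), Fraction(7, 30), Fraction(13, 30))
```

With > this gives about 73.3 %, 13.3 % and 43.3 %; with >= about 63.3 %, 23.3 % and 43.3 %, matching the simulated runs. Compared with 11/30 (about 36.7 %) without the traffic-light rule, sacrificing violators raises the overall share killed to 13/30.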


Source: https://habr.com/ru/post/428181/
