WSGI server performance analysis: putting uWSGI back in its place

Last week, a translation of a two-year-old article on the performance analysis of WSGI servers was published (Part Two), in which uWSGI was undeservedly denied its glory.

The tests urgently need double-checking!

Goals

I have been using uWSGI for a long time, and I want to show that it is not as bad as described in the 2016 tests.

Initially, I just wanted to reproduce the tests and figure out what was wrong with uWSGI.

The code does not record the versions of the packages and modules that were used.

So simply running the tests and getting similar results does not work.

What follows is my quest to run the tests and compare the results on charts.

At readers' request, I added NginX Unit.

Steps

wrk 4.1.0

Find the specs, patch, build.

The details were added to readme.rd.

docker stats

In two years, the output of docker stats has changed.

A second column, NAME, was added, and this broke the statistics parser.

To avoid running into a similar problem two years from now, we will use formatted output:

    - docker stats > "$BASE/$1.$2.stats" &
    + docker stats --format "{{.CPUPerc}} {{.MemUsage}}" > "$BASE/$1.$2.stats" &

Accordingly, let's slightly simplify the parser code.
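The simplified parser itself is not shown in the article. A minimal sketch of what parsing one line of the `{{.CPUPerc}} {{.MemUsage}}` output might look like is given below; the function name `parse_stats_line` and the unit table are assumptions for illustration, not the code from the benchmark repository.

```python
import re

# Binary multipliers for the unit prefixes seen in MemUsage (assumed: B, KiB, MiB, GiB).
_FACTORS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}

# Matches e.g. "0.15% 12.5MiB / 1.952GiB"; search() is used so that any
# leading terminal control characters in streamed output are skipped.
_LINE = re.compile(r"([\d.]+)%\s+([\d.]+)\s*([KMG]?)i?B\s*/", re.IGNORECASE)


def parse_stats_line(line):
    """Return (cpu_percent, memory_bytes) for one stats line, or None if it does not match."""
    match = _LINE.search(line)
    if match is None:
        return None
    cpu, used, prefix = match.groups()
    return float(cpu), float(used) * _FACTORS[prefix.upper()]


if __name__ == "__main__":
    # Hypothetical sample line in the format requested via --format.
    print(parse_stats_line("0.15% 12.5MiB / 1.952GiB"))
```

Because the format string names the fields explicitly, a new column in the default table layout would not affect this kind of parser.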

debian

The latest debian image is now version 9.5, so pin it in the Dockerfile:

    - FROM debian
    + FROM debian:9.5

In April 2016, latest corresponded to version 8.4.

Even so, Apache has stayed almost the same: it is now Apache 2.4.25, and in 2016 it was Apache 2.4.10.

cherrypy tornado uwsgi gunicorn bjoern meinheld mod_wsgi

It goes without saying that the modules have changed.

Pin the current versions:

    - RUN pip install cherrypy tornado uwsgi gunicorn bjoern meinheld mod_wsgi
    + RUN pip install cherrypy==17.4.0 \
    +     uwsgi==2.0.17.1 \
    +     gunicorn==19.9.0 \
    +     bjoern==2.2.3 \
    +     meinheld==0.6.1 \
    +     mod_wsgi==4.6.5

What is tornado doing there, and where is the WSGI file that would run it? Remove the artifact.

It would be nice to move these into a separate requirements.txt, but for now let's leave it as is.

cherrypy -> cheroot.wsgi

As shown above, the current version is 17.4.0.

In April 2016, version v5.1.0 was probably used.

In 2017, version 9.0 brought changes that affected how the server is imported:

    - from cherrypy import wsgiserver
    - server = wsgiserver.CherryPyWSGIServer(
    + from cheroot.wsgi import Server as WSGIServer
    + server = WSGIServer(

Socket errors: read 100500

After the edits described above, I launched the first full test run.

The results were good: uwsgi delivered not 3...200 but 7500...5000 requests per second.

But a closer look at the graphs showed that wrk had counted all the responses as read errors.
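A situation like this is easy to miss if only the requests-per-second figure is collected. As a rough sketch, a wrk report could be checked for socket errors as follows; the function name `summarize_wrk` and the sample numbers are made up for illustration, and only the report layout follows wrk's usual text output.

```python
import re

_REQUESTS = re.compile(r"^\s*(\d+) requests in", re.MULTILINE)
_RPS = re.compile(r"^Requests/sec:\s*([\d.]+)", re.MULTILINE)
_ERRORS = re.compile(
    r"Socket errors: connect (\d+), read (\d+), write (\d+), timeout (\d+)"
)


def summarize_wrk(output):
    """Extract request count, requests/sec and socket error counters from wrk text output."""
    requests = _REQUESTS.search(output)
    rps = _RPS.search(output)
    errors = _ERRORS.search(output)
    names = ("connect", "read", "write", "timeout")
    # wrk prints the "Socket errors" line only when there were errors.
    counts = [int(value) for value in errors.groups()] if errors else [0, 0, 0, 0]
    return {
        "requests": int(requests.group(1)) if requests else None,
        "requests_per_sec": float(rps.group(1)) if rps else None,
        "socket_errors": dict(zip(names, counts)),
    }


# Illustrative report with invented numbers, not data from the benchmark.
SAMPLE = """\
  1000 requests in 10.00s, 150.00KB read
  Socket errors: connect 0, read 1000, write 0, timeout 0
Requests/sec:   7500.00
Transfer/sec:     15.00KB
"""

if __name__ == "__main__":
    summary = summarize_wrk(SAMPLE)
    # A read-error count equal to the request count is the pattern described above.
    print(summary["socket_errors"]["read"] == summary["requests"])
```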

After trying a dozen uwsgi launch options, it turned out that the errors disappear when HTTP/1.1 is enabled with --http-keepalive or --http11-socket.

Moreover, the first gives 7500...5000 requests per second, while the second gives a stable 29 thousand!
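The protocol difference behind these options can be illustrated without uWSGI or wrk. The sketch below uses Python's standard `http.server` as a stand-in server (an assumption made purely for illustration) and checks whether a response leaves the connection open for reuse. The helper names `make_handler` and `connection_closes_after_response` are hypothetical.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def make_handler(protocol):
    """Build a handler class that answers with the given protocol version."""

    class Handler(BaseHTTPRequestHandler):
        protocol_version = protocol  # "HTTP/1.0" or "HTTP/1.1"

        def do_GET(self):
            body = b"ok"
            self.send_response(200)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the demo quiet

    return Handler


def connection_closes_after_response(protocol):
    """Return True if the response signals that the connection will not be reused."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), make_handler(protocol))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
        conn.request("GET", "/")
        response = conn.getresponse()
        response.read()
        closes = response.will_close
        conn.close()
        return closes
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    for version in ("HTTP/1.0", "HTTP/1.1"):
        print(version, "closes connection:", connection_closes_after_response(version))
```

A load generator that holds connections open would have to reconnect after every response in the HTTP/1.0 case. Whether that is exactly what wrk reported as read errors here is an assumption, not something the measurements above prove.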

What has changed in uWSGI since then

Most likely, uWSGI 2.0.12 (20151230) was used in August 2016.

Later, in May, uWSGI 2.0.13 was released.

That was a significant release, but the performance problem seen by wrk was not solved until 2018, with the release of uWSGI 2.0.16:

Back-ported HTTP/1.1 support (--http11-socket) from 2.1

This is why the recommendation was to run uWSGI behind NginX.

Why this matters in the context of this article can be understood from this 2012 ticket.

Why were the results like that back then

I tried these versions:

- 2.0.12 on debian 8.4; on debian 9 it does not build because of the newer openssl.

- 2.0.13 ... 2.0.17 on debian 8.4 and 9.5

But I could not reproduce results as bad as 3...200 requests per second.

The application launch line caught my attention:

uwsgi --http :9808 --plugin python2 --wsgi-file app.py --processes ...

Specifying the plugin suggests that uwsgi was installed from the distribution repository, not via pip.

This may indicate that the author lacks the necessary experience with this stack.

So I can see several possibilities:

- each of the WSGI servers was tested separately, at different times;

- there was some conflict between the system version of uwsgi and the one installed via pip;

- the wrk version the author used in 2016 had some peculiarities when working over HTTP/1.0;

- uwsgi was started with a different set of parameters;

- the article was commissioned, and the numbers were pulled out of thin air.

What are the results now

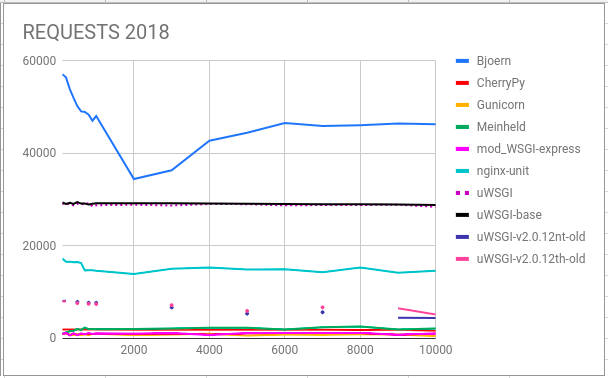

There are several uWSGI series on the charts.

The first two, uWSGI and uWSGIbase (v2.0.17.1), were run in the long test alongside their competitors, with these parameters:

    --http11 :9808 --processes 5 --threads 2 --enable-threads
    --http11 :9808 --processes 5

As practice has shown, there is NO qualitative difference between them when testing a dummy application.

And, separately, the 2016-era uWSGI versions:

uWSGI-v2.0.12th-old and uWSGI-v2.0.12nt-old are, respectively, v2.0.12 with and without threads in a debian 8.4 container:

    --http :9808 --http-keepalive --processes 5 --threads 2 --enable-threads
    --http :9808 --http-keepalive --processes 5

uWSGI takes second place, and its results do not degrade as the load increases.

NginX Unit is in third place.

In the lower segment, uWSGI-v2.0.12 is the best, even as the load increases.

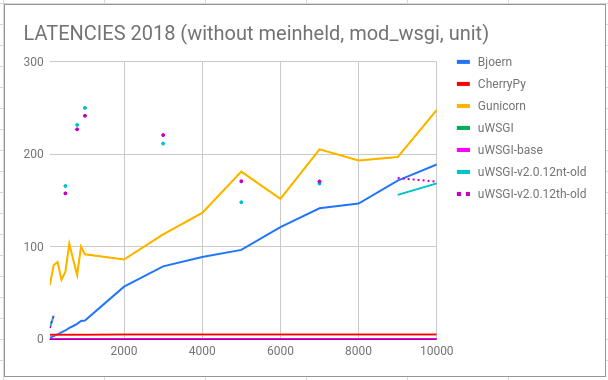

Here Unit did not show its best side.

uWSGI and CherryPy are the undoubted latency winners.

This picture is similar to the one from 2016.

Here we see how the old uWSGI eats up memory as the load increases.

Unit also increases its memory consumption linearly.

The new uWSGI, gunicorn, and mod_wsgi confidently "hold the line", and that says a lot.

The old uWSGI versions start producing errors after 300 open connections.

Unit and CherryPy: no errors!

Bjoern starts to give way at 1000 connections.

But the new uWSGI behaves oddly: starting at 200 connections we get 50 errors, and the number does not grow any further. This needs a closer look.

All data is collected here.

All code changes can be seen in the PR.

Findings

uWSGI is not so bad, or even very good!

If you do not like the results of the wrk tests, try using other tools.


Source: https://habr.com/ru/post/428047/
