How I tried to make a static analyzer GLSL (and what went wrong)

Once I was preparing for Ludum Dare and made a simple game where I used pixel shaders (I didn't bring others into the Phaser engine).

Shaders are programs on the SI-like language GLSL, which are executed on a video card. There are two types of shaders, in this article we are talking about pixel (they are also "fragment", fragment shaders), which can be very roughly represented in this form:

color = pixelShader(x, y, ...other attributes) Those. the shader is executed for each pixel of the displayed image, determining or specifying its color.

Introductory can be read on another article on Habré - https://habr.com/post/333002/

Having tested it, I threw the link to a friend, and I received a screenshot from him with the question "is this normal?"

No, it was not normal. Looking carefully at the shader code, I found an error in the calculations:

if (t < M) { realColor = mix(color1,color2, pow(1. - t / R1, 0.5)); } Since Since the constant R1 was less than M, in some cases in the first argument of pow, a number less than zero was obtained. The square root of a negative number is a mysterious thing, at least for the GLSL standard. My video card was not embarrassed, and it somehow got out of this position (it seems, by returning from pow 0), but at a friend it turned out to be more discriminating.

And then I wondered: can I avoid such problems in the future? No one is immune from mistakes, especially those that are not reproduced locally. You cannot write unit tests on GLSL. At the same time, the transformations inside the shader are quite simple - multiplications, divisions, sines, cosines ... Is it really impossible to track the values of each variable and make sure that under no circumstances does it go beyond the permissible limits of the values?

So I decided to try static analysis for GLSL. What came out of it - you can read under the cut.

Immediately I will warn you: it was not possible to get some finished product, only a training prototype.

Preliminary analysis

After a bit of study of the existing articles on this topic (and at the same time finding out that the topic is called Value Range Analysis), I was glad that I had GLSL, and not some other language. Judge for yourself:

- no "dynamics" - references to functions, interfaces, automatically deducible types, etc.

- no direct work with memory

- no modules, linking, late binding - the source code of the shader is available entirely

ranges for incoming values are generally well known. - some data types, and even those revolve around float. int / bool is rarely used, and it’s not so important to keep track of them

- Ifs and cycles are rarely used (due to performance issues). if cycles are used, then more often simple counters for traversing an array or repeating a certain effect several times. Nobody will write such horror in GLSL (I hope).

// - https://homepages.dcc.ufmg.br/~fernando/classes/dcc888/ementa/slides/RangeAnalysis.pdf k = 0 while k < 100: i = 0 j = k while i < j: i = i + 1 j = j – 1 k = k + 1 In general, given the limitations of GLSL, the problem seems to be solved. The basic algorithm is as follows:

- parse the shader code and build a sequence of commands that change the values of any variables

- knowing the initial ranges for variables, go through the sequence, updating the ranges when they change

- if the range violates any given boundaries (for example, a negative number may come to pow, or gl_FragColor will come to a red component in the red color, something more than 1. will come in), a warning should be shown

Used technologies

Here I had a long and painful choice. On the one hand, my main scop is to check WebGL shaders, so why not javascript - to run everything in the browser during development. On the other hand, I have been planning to get off the Phaser for a long time and try another engine like Unity or LibGDX. There will also be shaders, but there will be no javascript anymore.

And on the third hand, the task was done primarily for entertainment. And the best entertainment in the world is a zoo. Therefore:

- parsing GLSL-code is made on javascript. It's just that I quickly found the GLSL parsing library in AST on it, and the test UI seems to be as usual web-based. AST turns into a sequence of commands that is sent to ...

- ... the second part, which is written in C ++ and compiled into WebAssembly. I decided this way: if I suddenly want to fasten this analyzer to some other engine, with the C ++ library this should be done the easiest way.

- as the main IDE, I took the Visual Studio Code and in general I am satisfied with it. I need a little bit of happiness - the main thing is that Ctrl + Click works and autocomplete when typing. Both functions work fine in both C ++ and JS parts. Well, the ability to not switch between different IDEs among themselves is also great.

- to compile C ++ in WebAssembly, the cheerp tool is used (it is paid, but free for open-source projects). I have not encountered any problems with its use, except that the code has optimized it is rather strange, but here I am not sure whose fault it is - of the cheerp itself or of the clang compiler it uses.

- for unit tests in C ++ took the good old gtest

- for the assembly of js in bundle took a certain microbundle. He met my requirements for “I want 1 npm-package and a couple of command line flags”, but not without problems, alas. Let's say watch falls on any error while parsing incoming javascript with the message

[Object object], which does not help much.

Everything, now it is possible to go.

Briefly about the model

The analyzer keeps in memory a list of variables that occur in the shader, and for each stores the current possible range of values (such as [0,1] or [1,∞) ).

The analyzer accepts the following type of workflow:

cmdId: 10 opCode: sin arguments: [1,2,-,-,3,4,-,-] Here, we call the sin function, input variables with id = 3 and 4, and the result is written to variables 1 and 2. This call corresponds to the GLSL function:

vec2 a = sin(b); Note the empty arguments (marked with "-"). In GLSL, almost all built-in functions are overloaded for different sets of input types, i.e. there are sin(float) , sin(vec2) , sin(vec3) , sin(vec4) . For convenience, I bring all the overloaded versions to one form - in this case, sin(vec4) .

The output of the analyzer produces a list of changes for each variable, like

cmdId: 10 branchId: 1 variable: 2 range: [-1,1] What does "variable 2 in line 10 in branch 1 have a range from -1 to 1 inclusive" (what is a branch (branch) we will talk about later). Now you can beautifully highlight the ranges of values in the source code.

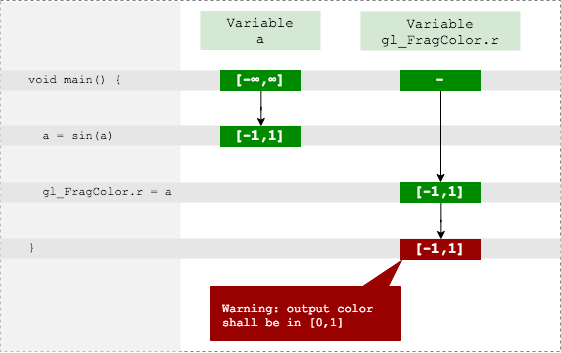

Good start

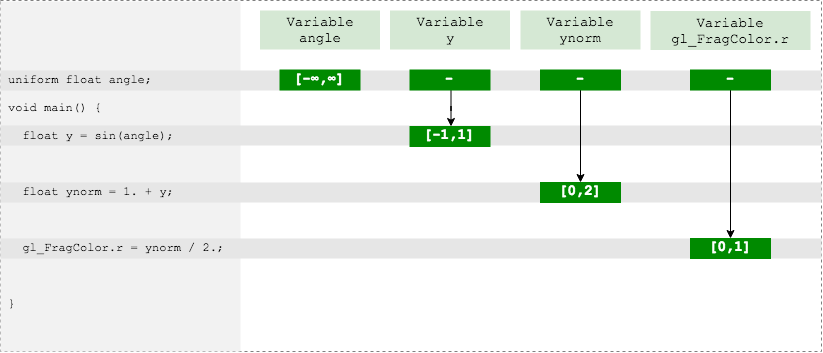

When the AST-tree has already started somehow turning into a list of commands, it is time to implement the standard functions and methods. There are quite a few of them (and they still have a lot of overloads, as I wrote above), but in general they have predictable range conversions. Let's say that for such an example everything is pretty obvious:

uniform float angle; // -> (-∞,∞) //... float y = sin(angle); // -> [-1,1] float ynorm = 1 + y; // -> [0,2] gl_FragColor.r = ynorm / 2.; // -> [0,1]

The red channel of the output color is in the acceptable range, there are no errors.

If you cover more built-in functions, then for half of the shaders, such an analysis is enough. But what about the second half - with the conditions, cycles and functions?

Branching

Take for example such a shader.

uniform sampler2D uSampler; uniform vec2 uv; // [0,1] void main() { float a = texture2D(uSampler, uv).a; // -> [0,1] float k; // -> ? if (a < 0.5) { k = a * 2.; } else { k = 1. - a; } gl_FragColor = vec4(1.) * k; } The variable a is taken from the texture, and therefore the value of this variable is from 0 to 1. But what values can k take?

You can go on a simple path and "merge branches" - count the range in each case and give the total. For the if branch, we get k = [0,2] , and for the else branch, k = [0,1] . If you combine, it turns out [0,2] , and you need to give an error, because values greater than 1 fall into the output color gl_FragColor .

However, this is an obvious false alarm, and for a static analyzer there is nothing worse than false positives - if it is not disabled after the first shout of "wolves", then after the tenth exactly.

It means that we need to process both branches separately, and in both branches we should specify the range of the variable a (although nobody has formally changed it). Here is what it might look like:

Branch 1:

if (a < 0.5) { //a = [0, 0.5) k = a * 2.; //k = [0, 1) gl_FragColor = vec4(1.) * k; } Branch 2:

if (a >= 0.5) { //a = [0.5, 1] k = 1. - a; //k = [0, 0.5] gl_FragColor = vec4(1.) * k; } Thus, when the analyzer encounters a certain condition that behaves differently depending on the range, it creates branches (branches) for each of the cases. In each case, he refines the range of the source variable and moves on through the list of commands.

It should be clarified that the branches in this case are not related to the if-else construct. Branches are created when a variable is divided into sub-ranges by a range, and the cause may be an optional conditional statement. For example, the step function also creates branches. The next GLSL shader does the same thing as the previous one, but does not use branching (which, by the way, is better in terms of performance).

float a = texture2D(uSampler, uv).a; float k = mix(a * 2., 1. - a, step(0.5, a)); gl_FragColor = vec4(1.) * k; The step function should return 0 if a <0.5 and 1 otherwise. Therefore, branches will be created here too - similar to the previous example.

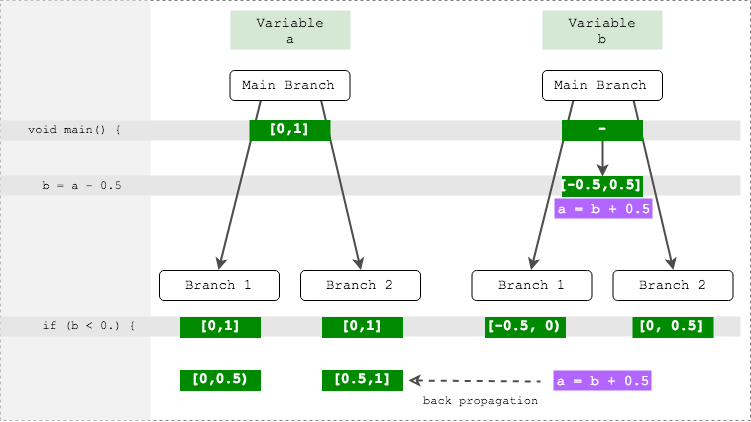

Refinement of other variables

Consider the slightly modified previous example:

float a = texture2D(uSampler, uv).a; // -> [0,1] float b = a - 0.5; // -> [-0.5, 0.5] if (b < 0.) { k = a * 2.; // k,a -> ? } else { k = 1. - a; } Here the nuance is as follows: the branching occurs in the variable b , and the calculations take place with the variable a . That is, within each branch there will be a correct value of the range b , but completely unnecessary, and the original value of the range a is completely incorrect.

However, the analyzer saw that the range b was obtained by calculating from a . If this information is memorized, then with branching, the analyzer can go through all the initial variables and refine their range by performing the reverse calculation.

Functions and Loops

In GLSL, there are no virtual methods, function pointers, or even recursive calls, so each function call is unique. Therefore, the easiest way is to insert the body of the function at the call (zainlaynit, in other words). This will fully comply with the sequence of command execution.

With cycles all the more difficult, because Formally, GLSL fully supports a C-like for loop. However, most often cycles are used in the simplest version, like this:

for (int i = 0; i < 12; i++) {} Such cycles are easy to "deploy", i.e. insert the loop body 12 times in succession. As a result, thinking, I decided so far to support only this option.

The advantage of this approach is that commands can be issued by the stream to the analyzer, without requiring it to memorize some fragments (such as function bodies or cycles) for further reuse.

Emerging issues

Problem # 1: Difficulty or Impossibility of Clarification

Above, we considered cases when, when specifying the value for one variable, we made conclusions about the values of another variable. And this problem is solved when addition / subtraction operations are involved. But, say, what about trigonometry? For example, the following condition:

float a = getSomeValue(); if (sin(a) > 0.) { // a? } How to count a range inside if? It turns out an endless set of ranges with step pi, with which then it will be very inconvenient to work.

And maybe this situation:

float a = getSomeValue(); // [-10,10] float b = getAnotherValue(); //[-20, 30] float k = a + b; if (k > 0) { //a? b? } Refine the ranges of a and b in the general case will be unrealistic. And, therefore, false positives are possible.

Issue # 2: Dependent ranges

Consider this example:

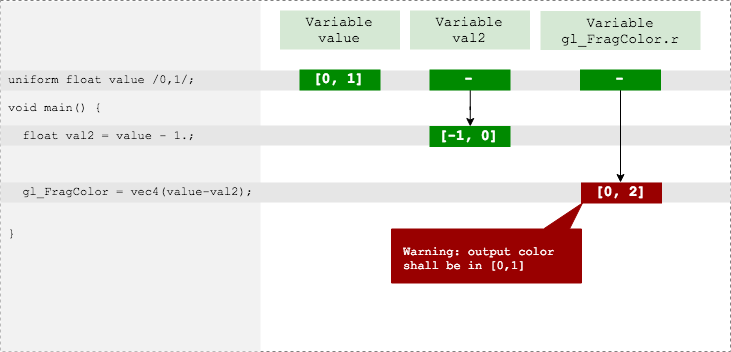

uniform float value //-> [0,1]; void main() { float val2 = value - 1.; gl_FragColor = vec4(value - val2); }

To begin with, the analyzer considers the range of the variable val2 - and it is expected to be [0,1] - 1 == [-1, 0]

However, then, considering the value - val2 , the analyzer does not take into account that val2 was derived from value , and works with ranges as if they are independent of each other. Receives [0,1] - [-1,0] = [0,2] , and reports an error. Although in reality he was supposed to get a constant of 1.

A possible solution: to store for each variable not just a history of ranges, but also the whole “pedigree” - from which variables was dependency, which operations, and so on. Another thing is that "expanding" this genealogy will be difficult.

Problem # 3: Dependent implicit ranges

Here is an example:

float k = sin(a) + cos(a); Here the analyzer will assume that the range is k = [-1,1] + [-1,1] = [-2,2] . What is wrong, because sin(a) + cos(a) for any a lies in the range [-√2, √2] .

The result of the calculation of sin(a) formally independent of the result of the calculation of cos(a) . However, they depend on the same range a .

Results and conclusions

As it turned out, making value range analysis even for such a simple and highly specialized language as GLSL is not an easy task. Covering the features of the language can still be enhanced: support for arrays, matrices and all embedded operations is a purely technical task, just requiring time-consuming. But how to solve situations with dependencies between variables is still an unclear question for me. Without solving these problems, false alarms are inevitable, the noise from which may ultimately outweigh the benefits of static analysis.

Given what I’ve encountered, I’m not particularly surprised by the absence of any well-known tools for value range analysis in other languages - they have obviously more problems than in relatively simple GLSL. At the same time in other languages, you can at least write unit tests, and then - no way.

An alternative solution could be compiling from other languages in GLSL - here recently there was an article about the compilation from kotlin . Then you can write unit tests on the source code and cover all boundary conditions. Or make a “dynamic analyzer”, which will drive the same data that goes to the shader, through the original code on kotlin and warn about possible problems.

So at this stage I stopped. Alas, the libraries did not work out, but maybe this prototype will be useful to someone.

Repository on github, for review:

Try:

Bonus: features of webassembly assembly with different compiler flags

Initially, I did the analyzer without using stdlib - in the old way, with arrays and pointers. At that moment I was very worried about the size of the output wasm file, I wanted it to be small. But starting from a certain moment I began to experience discomfort and therefore I decided to transfer everything to stdlib - smart pointers, normal collections, that's all.

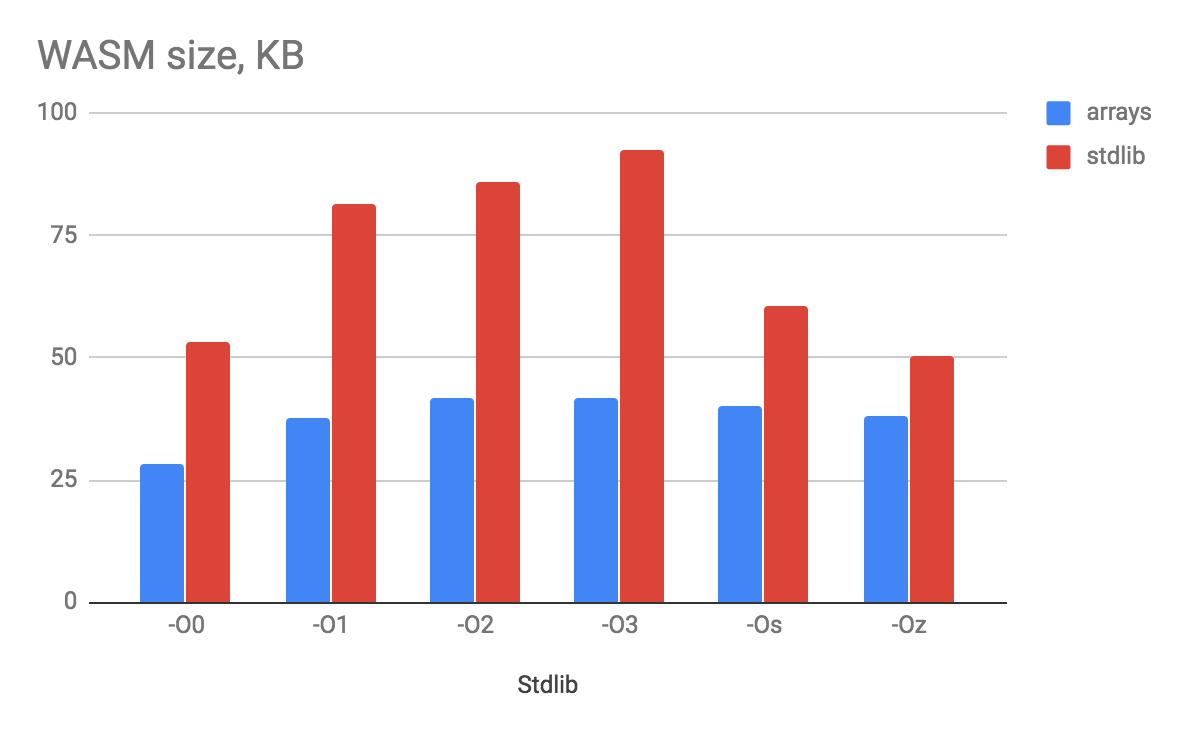

Accordingly, I was able to compare the results of building two versions of the library — with and without stdlib. Well and still to look, how good / badly cheerp (and clang used by it) optimizes the code.

Therefore, I compiled both versions with different sets of optimization flags ( -O0 , -O1 , -O2 , -O3 , -Os and -Oz ), and for some of these versions I measured 3000 analysis speeds with 1000 branches. It agree, not the biggest example, but for the comparative analysis it is enough.

What happened to the size of the wasm file:

Surprisingly, the size of the option with "zero" optimization is better than almost everyone else. I will assume that there is an aggressive inline of everything in the world in O3 , which inflates the binary. The expected version without stdlib turned out to be more compact, but not so much that endure such humiliation deprive yourself of the pleasure of working with convenient collections.

By execution speed:

Now I see that -O3 knowingly eats its bread, when compared with -O0 . At the same time, the difference between the versions with and without stdlib is practically absent (I did 10 measurements each time, I think the difference would have disappeared altogether).

It is worth noting 2 points:

- The graph shows the average values of 10 consecutive analysis runs, but on all tests the very first analysis lasted 2 times longer than the others (i.e., say, 120ms, and the next ones are already around 60ms). Some kind of initialization of WebAssembly probably occurred.

- With the

-O3flag, I grabbed off some horribly strange bugs that I did not catch for other flags. For example, the min and max functions suddenly started working in the same way - like min.

Conclusion

Thank you all for your attention.

Let the values of your variables never go beyond the allotted frame.

But you - go out.

')

Source: https://habr.com/ru/post/428027/

All Articles