How linear algebra is applied in machine learning

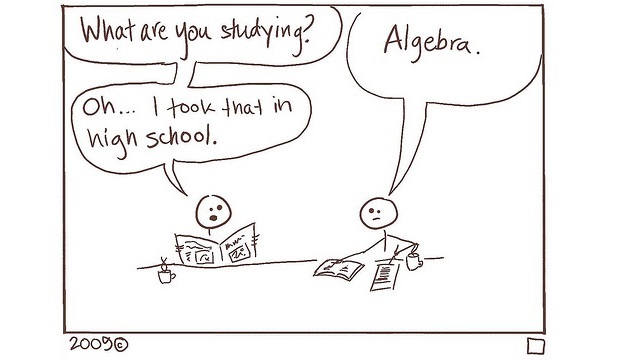

When you study linear algebra, you may wonder why you need it. How are you going to apply all these inversions, transpositions, eigenvectors and eigenvalues for practical purposes?

Well, if you are learning linear algebra in order to do machine learning, this is the answer for you.

In brief, you can use linear algebra in machine learning on 3 different levels:

- application of a model to data;

- training the model;

- understanding how it works or why it does not work.

I assume that you have at least a vague idea of linear algebra concepts (such as vectors, matrices, their products, inverse matrices, eigenvectors and eigenvalues) and of machine learning problems (such as regression, classification and dimensionality reduction). If not, consider signing up for a MOOC on these subjects.

Application

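What machine learning usually does is fit some function $f(x, \theta) = h$, where $x$ is the input data, $h$ is some useful representation of this data, and $\theta$ are additional parameters on which our function depends and which have to be learned. When we have this representation $h$, we can use it, e.g., to reconstruct the original data (as in unsupervised learning), or to predict some value of interest $y$ (as in supervised learning).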

All of $x$, $h$, $\theta$ and $y$ are usually numeric arrays, and can at least be stored as vectors and matrices. But storage alone is not important. The important thing is that our function $f$ is often linear, that is, $f(x, \theta) = x\theta$. Examples of such linear algorithms are:

- linear regression, where $y = h$. It is a common baseline for regression problems and a popular tool for answering questions like "how does $x$ affect $y$, other things being equal?"

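- logistic regression, where $y = \sigma(h) = \sigma(x\theta)$. It is a good baseline for classification problems, and sometimes this baseline is difficult to beat.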

- principal component analysis (PCA), where $h$ is just a low-dimensional representation of high-dimensional $x$, from which $x$ can be restored with high precision as $x \approx h\theta^T$. You can think of it as a compression algorithm.

- other PCA-like algorithms (matrix decompositions), which are widely used in recommender systems to compress a large, sparse matrix of "user-item" transactions.
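As a minimal sketch of the "application" level, assuming the row-vector convention $f(x, \theta) = x\theta$ used above, applying an already-trained linear or logistic regression to a batch of inputs is a single matrix-vector product. The data, the parameter values and the helper names `representation` and `sigmoid` are hypothetical, chosen only for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def representation(X, theta):
    """Hypothetical helper: the linear map f(x, theta) = x @ theta, applied to every row of X."""
    return X @ theta

# Hypothetical data: 4 observations (rows) with 3 features each.
X = np.array([[1.0, 2.0, 0.5],
              [0.0, 1.0, 1.0],
              [2.0, 0.0, 1.0],
              [1.0, 1.0, 1.0]])
# Hypothetical parameters, assumed to be already learned.
theta = np.array([0.5, -1.0, 2.0])

h = representation(X, theta)   # one matrix-vector product for the whole batch
y_linear = h                   # linear regression: y = h
y_logistic = sigmoid(h)        # logistic regression: y = sigma(h), a probability in (0, 1)
print(y_linear)                # [-0.5  1.   3.   1.5]
```

Both models share the same linear core; they differ only in what is done with $h$ afterwards.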

Other algorithms, like neural networks, learn nonlinear transformations, but still rely heavily on linear operations (that is, matrix-matrix or matrix-vector multiplication). A simple neural network may look like $y = \sigma(x W_1)\, W_2$: it uses two matrix multiplications and a nonlinear transformation $\sigma$ between them.
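The two-layer network just described can be sketched as follows, assuming a sigmoid nonlinearity and randomly initialized weights; the function name `forward` and the layer sizes are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, W1, W2):
    """Hypothetical two-layer network y = sigma(X W1) W2."""
    hidden = sigmoid(X @ W1)   # first matrix multiplication, then elementwise nonlinearity
    return hidden @ W2         # second matrix multiplication

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))    # 5 inputs with 3 features each
W1 = rng.normal(size=(3, 4))   # hypothetical weights: 3 features -> 4 hidden units
W2 = rng.normal(size=(4, 2))   # hypothetical weights: 4 hidden units -> 2 outputs

Y = forward(X, W1, W2)
print(Y.shape)  # (5, 2)
```

Without `sigmoid` the two products would collapse into the single matrix `W1 @ W2`, so the nonlinearity in between is what makes the network more expressive than a linear model.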

Training

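To train a model, you usually define a loss function and try to optimize it. The loss itself is sometimes convenient to write in terms of linear algebra. For example, the quadratic loss (used in the least squares method) can be written as the dot product $(y - \hat{y})^T (y - \hat{y})$, where $\hat{y}$ is the vector of your predictions and $y$ is the ground truth you try to predict. This form is useful because it lets you derive how to minimize the loss. For example, if you fit linear regression by least squares, with the inputs stacked as rows of a matrix $X$ so that $\hat{y} = X\theta$, the optimal solution is $\theta = (X^T X)^{-1} X^T y$. Lots of linear operations in one place!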
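A minimal sketch of the least-squares solution $\theta = (X^T X)^{-1} X^T y$, assuming synthetic data; the helper names and `theta_true` are hypothetical. Instead of forming the inverse explicitly, it solves the linear system $X^T X \theta = X^T y$, which is the usual numerically safer choice, and compares the result with NumPy's `np.linalg.lstsq`.

```python
import numpy as np

def quadratic_loss(y, y_hat):
    """(y - y_hat)^T (y - y_hat): the squared-error loss written as a dot product."""
    r = y - y_hat
    return r @ r

def least_squares(X, y):
    """theta = (X^T X)^{-1} X^T y, computed by solving X^T X theta = X^T y."""
    return np.linalg.solve(X.T @ X, X.T @ y)

rng = np.random.default_rng(42)
X = rng.normal(size=(100, 3))              # 100 observations as rows
theta_true = np.array([1.0, -2.0, 0.5])    # hypothetical ground-truth parameters
y = X @ theta_true + 0.1 * rng.normal(size=100)

theta = least_squares(X, y)
theta_ref, *_ = np.linalg.lstsq(X, y, rcond=None)  # NumPy's dedicated least-squares solver
print(np.allclose(theta, theta_ref))  # True
```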

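Another example of a closed-form solution is PCA, where the parameters of interest $\theta$ are the first $k$ eigenvectors of the matrix $X^T X$ (where $X$ is the centered data matrix, one observation per row), corresponding to its $k$ largest eigenvalues.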
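A minimal sketch of PCA done this way, assuming synthetic 5-dimensional data that mostly varies along 2 directions; the helper `pca` and the data-generating parameters are hypothetical. It takes the top-$k$ eigenvectors of $X^T X$ as $\theta$, compresses with $h = X\theta$ and restores with $X \approx h\theta^T$.

```python
import numpy as np

def pca(X, k):
    """Hypothetical helper: top-k eigenvectors of X^T X (X assumed centered) as columns."""
    eigvals, eigvecs = np.linalg.eigh(X.T @ X)  # eigh handles symmetric matrices
    order = np.argsort(eigvals)[::-1]           # sort eigenvalues in descending order
    return eigvecs[:, order[:k]]

rng = np.random.default_rng(1)
latent = rng.normal(size=(200, 2))              # hypothetical 2-D hidden structure
mixing = rng.normal(size=(2, 5))                # embedded into 5-D
X = latent @ mixing + 0.01 * rng.normal(size=(200, 5))
X = X - X.mean(axis=0)                          # center the data

theta = pca(X, k=2)        # 5 x 2
h = X @ theta              # 200 x 2: the low-dimensional representation
X_restored = h @ theta.T   # x ≈ h theta^T
rel_error = np.linalg.norm(X - X_restored) / np.linalg.norm(X)
```

The columns of `theta` are orthonormal, which is why $\theta^T$ acts as the inverse mapping back to the original space.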

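If you train neural networks, there is usually no analytical solution for the optimal parameters, and you have to use gradient descent. To do so, you need to differentiate the loss with respect to the parameters, and again you have to multiply matrices: if $\text{loss} = f_3(f_2(f_1(w)))$ is a composite function, then $\frac{\partial\,\text{loss}}{\partial w} = f_3' \cdot f_2' \cdot f_1'$, and all these derivatives are matrices or vectors, because $f_1$ and $f_2$ are multidimensional.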
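To illustrate the chain rule on the simplest case, here is a sketch of gradient descent for the quadratic loss, assuming synthetic data. With $r = X\theta - y$ and $\text{loss} = r^T r$, the chain rule gives $\partial\,\text{loss}/\partial\theta = 2X^T r$, a product of a matrix and a vector. The function names and the step-size rule (1 divided by the largest eigenvalue of the Hessian $2X^T X$) are choices made for this example; the analytic gradient is checked against finite differences.

```python
import numpy as np

def loss(theta, X, y):
    """Quadratic loss r^T r with residual r = X theta - y."""
    r = X @ theta - y
    return r @ r

def grad(theta, X, y):
    """Chain rule: d loss/d r = 2 r^T and d r/d theta = X, so the gradient is 2 X^T r."""
    r = X @ theta - y
    return 2.0 * X.T @ r

def gradient_descent(X, y, n_iter=500):
    # Step size 1/L, with L the largest eigenvalue of the Hessian 2 X^T X;
    # for this quadratic loss it makes the loss decrease at every step.
    lr = 1.0 / np.linalg.eigvalsh(2.0 * X.T @ X).max()
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        theta = theta - lr * grad(theta, X, y)
    return theta

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=100)  # hypothetical data

# Compare the analytic gradient with central finite differences.
theta0 = rng.normal(size=3)
eps = 1e-6
numeric = np.array([(loss(theta0 + eps * e, X, y) - loss(theta0 - eps * e, X, y)) / (2 * eps)
                    for e in np.eye(3)])
theta_gd = gradient_descent(X, y)
```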

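Simple gradient descent is OK, but it is slow. You can speed it up with Newtonian optimization methods. The basic method is $\theta := \theta - A^{-1} \nabla \text{loss}$, where $\nabla \text{loss}$ and $A$ are the vector of first derivatives and the matrix of second derivatives of your loss with respect to the parameters $\theta$. But it can be unstable and/or computationally expensive, so in practice you may need its approximations (for example, L-BFGS), which use even more involved linear algebra to optimize quickly and cheaply.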
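A sketch of the Newton update $\theta := \theta - A^{-1}\nabla\text{loss}$ applied to logistic regression, assuming the negative log-likelihood loss, synthetic data and a fixed number of iterations; the function name `newton_logistic` and `theta_true` are hypothetical. For this loss the gradient is $X^T(p - y)$ and the matrix of second derivatives is $X^T \mathrm{diag}(p(1-p)) X$, where $p = \sigma(X\theta)$.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def newton_logistic(X, y, n_iter=25):
    """Hypothetical helper: Newton's method for the logistic-regression log-loss."""
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ theta)                  # predicted probabilities
        g = X.T @ (p - y)                       # vector of first derivatives
        A = X.T @ (X * (p * (1 - p))[:, None])  # second derivatives: X^T diag(p(1-p)) X
        theta = theta - np.linalg.solve(A, g)   # solve A d = g instead of forming A^{-1}
    return theta

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 3))
theta_true = np.array([1.0, -2.0, 0.5])         # hypothetical parameters to simulate labels
y = (rng.random(300) < sigmoid(X @ theta_true)).astype(float)

theta = newton_logistic(X, y)
```

Plain Newton steps like these assume the data are not linearly separable; otherwise the optimum lies at infinity. For L-BFGS, SciPy offers `scipy.optimize.minimize(..., method="L-BFGS-B")`, which needs only the loss and its gradient.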

Analysis

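So linear algebra helps you apply and train your models. But the real science (or magic) starts when your model refuses to train or to predict well. The learning may get stuck at a bad point, or suddenly go wild. In deep learning, this often happens due to vanishing or exploding gradients: when you calculate the gradient, you multiply lots of matrices, and strange things can happen. One way to inspect what is going on is to keep track of the eigenvalues of the matrices you are trying to invert. If they are close to 0, the inversion can lead to unstable results. If you multiply many matrices with large eigenvalues, the product explodes. When the eigenvalues are small, the result fades to zero.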
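Both effects can be reproduced in a few lines. This sketch assumes a hypothetical "layer" matrix $W = sQ$ with $Q$ a random orthogonal matrix, so every eigenvalue of $W$ has absolute value $s$ and 50 repeated products scale a vector's norm by exactly $s^{50}$. It also solves a system with a nearly singular matrix, whose smallest eigenvalue is close to 0, to show how a tiny change in the input causes a huge change in the solution. The matrix sizes and perturbations are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.normal(size=(4, 4)))  # random orthogonal matrix
v = rng.normal(size=4)
v = v / np.linalg.norm(v)                     # unit vector

def repeated_product_norm(scale, n_layers=50):
    """Norm of W^n v for W = scale * Q; every eigenvalue of W has absolute value `scale`."""
    W = scale * Q
    out = v
    for _ in range(n_layers):
        out = W @ out
    return np.linalg.norm(out)

exploding = repeated_product_norm(1.1)   # about 1.1**50 ≈ 117
vanishing = repeated_product_norm(0.9)   # about 0.9**50 ≈ 0.005

# Nearly singular matrix: one eigenvalue is about 5e-11, so inverting it amplifies errors.
A = np.array([[1.0, 1.0],
              [1.0, 1.0 + 1e-10]])
b = np.array([2.0, 2.0])
x1 = np.linalg.solve(A, b)                            # close to [2, 0]
x2 = np.linalg.solve(A, b + np.array([0.0, 1e-8]))    # close to [-98, 100]
```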

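Different techniques, like L1/L2 regularization, batch normalization, and LSTM, were invented to fight these convergence problems. If you want to apply any of them, you need a way to measure whether they help for your particular problem. And if you want to invent such a technique yourself, you need a way to prove that it can work at all. This again involves lots of manipulation with vectors, matrices, their decompositions, etc.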
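As one concrete case, here is a sketch of how L2 regularization (ridge regression) can be analyzed with eigenvalues, assuming two almost collinear synthetic features and a hypothetical penalty `lam = 1.0`. Adding $\lambda\|\theta\|^2$ to the least-squares loss turns $X^T X$ into $X^T X + \lambda I$, which shifts every eigenvalue up by $\lambda$ and keeps the matrix far from singular.

```python
import numpy as np

rng = np.random.default_rng(0)
x1 = rng.normal(size=100)
X = np.column_stack([x1, x1 + 1e-4 * rng.normal(size=100)])  # two almost collinear features
y = x1 + 0.1 * rng.normal(size=100)                          # hypothetical target

lam = 1.0                        # hypothetical L2 penalty strength
gram = X.T @ X
ridge_gram = gram + lam * np.eye(2)

eig_plain = np.linalg.eigvalsh(gram)        # one eigenvalue is close to 0
eig_ridge = np.linalg.eigvalsh(ridge_gram)  # every eigenvalue shifted up by lam

# Ridge solution: theta = (X^T X + lam I)^{-1} X^T y, computed via a linear solve.
theta_ridge = np.linalg.solve(ridge_gram, X.T @ y)
print(np.linalg.cond(gram), np.linalg.cond(ridge_gram))
```

The condition number drops by several orders of magnitude, which is one way to quantify why the regularized problem is better behaved.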

Conclusion

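You can see that the deeper you dive into machine learning, the more linear algebra you encounter. To apply pre-trained models, you have to at least convert your data into a format compatible with linear algebra (e.g., numpy.array in Python). If you need to implement a training algorithm, or even to invent a new one, be prepared to multiply, invert, and decompose lots of matrices.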

In this text, I have referenced some concepts you may be unfamiliar with. It's okay. This article encourages you to look up the unfamiliar terms and broaden your horizons.

You are welcome to share in the comments how you have encountered applications of linear algebra in your own work or study.

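P.S. In one of my articles, I argued that you do not have to learn maths in order to become successful, even if you work in IT. However, I never said that maths is useless (otherwise, I wouldn't be teaching it all the time). In some cases, such as developing deep learning models, it is essential.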

P.P.S. Why in English?! Well, just because I can. I was asked the original question in this language, and I answered it in English. Then I decided that the answer could be brought up to the level of a small public article.

Why Habr, then, and not, for example, Medium? Firstly, unlike Medium, Habr supports formulas properly. Secondly, Habr itself was planning to enter international markets, so why not try to place a piece of English-language content here?

Let's see what happens.


Source: https://habr.com/ru/post/427185/
