Using offensive techniques to enrich Threat Intelligence

Today, Threat Intelligence (TI), the active collection of information about information security threats, is an essential tool for detecting information security incidents. Typical TI sources include free feeds of malicious indicators, hardware and software vendors' bulletins describing vulnerabilities, security researchers' reports with detailed threat descriptions, and commercial subscriptions from TI vendors. However, the information obtained from these sources is often incomplete or not sufficiently relevant. The efficiency and quality of TI can be improved with OSINT (open source intelligence) and offensive methods (that is, methods characteristic of the attacker rather than the defender), which are the subject of this article.

DISCLAIMER

This article is for informational purposes only. The author is not responsible for any harm to, or disruption of, third-party networks and/or services resulting from the actions described in the article. The author also encourages readers to comply with the law.

Not instead, but together

I want to make it clear up front that this is not about abandoning or replacing Threat Intelligence obtained from traditional paid and free sources, but about supplementing and enriching it.

Using additional TI methods can make TI more effective and help solve a number of problems. For example, during yet another IoT outbreak targeting specific vulnerable firmware versions, how quickly can you obtain a feed of IP addresses of potentially vulnerable devices in order to promptly detect their activity on the perimeter? Or, if a monitoring rule fires on an indicator (an IP address or domain name) that was flagged as malicious a year or more ago, how do you determine whether it is still malicious, given that re-checking the indicator "here and now" is usually impossible?

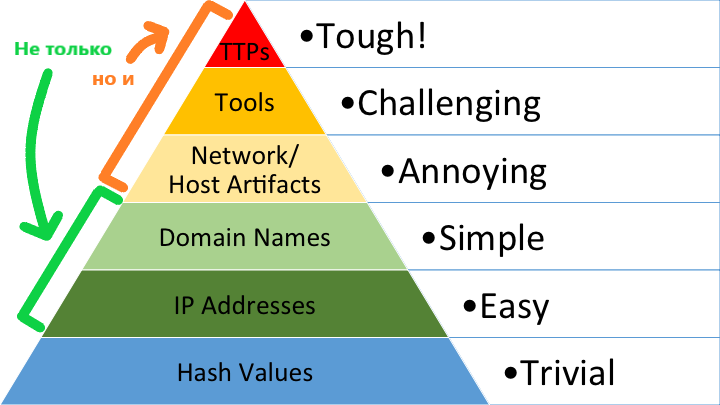

In other words, supplementing and enriching TI is especially useful for the middle levels of David Bianco's Pyramid of Pain [0] (IP addresses, domain names, network artifacts), but in some circumstances appropriate analysis can also yield new, longer-lived indicators higher up the pyramid.

Internet scanning

One method useful for obtaining Threat Intelligence is network scanning.

What is usually collected when scanning

The most interesting scan results usually come from collecting so-called "banners": the responses that application software on the scanned system returns to the scanner's requests. Banners contain a fair amount of data identifying the application software and its various parameters on the "other side", and such a check is fairly quick.
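A minimal banner-grabbing sketch in Python, assuming a plain TCP service that speaks first (SSH, FTP and SMTP servers send a greeting on connect). `grab_banner` and `parse_ssh_banner` are hypothetical helpers for illustration, not part of the scanners discussed below; the SSH format parsed is the `SSH-protoversion-softwareversion comments` identification string defined in RFC 4253.

```python
import re
import socket


def grab_banner(host, port, timeout=3.0, max_bytes=1024):
    """Connect to host:port and return whatever the service sends first."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        try:
            data = sock.recv(max_bytes)
        except socket.timeout:
            # Protocols such as HTTP wait for the client to speak first.
            return ""
    return data.decode("utf-8", errors="replace").strip()


# SSH identification string: "SSH-protoversion-softwareversion [comments]"
SSH_BANNER = re.compile(
    r"^SSH-(?P<proto>[\d.]+)-(?P<software>\S+)(?:\s+(?P<comment>.*))?$"
)


def parse_ssh_banner(banner):
    """Split an SSH banner into protocol, software/version and comment fields."""
    match = SSH_BANNER.match(banner)
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(parse_ssh_banner("SSH-2.0-OpenSSH_7.4p1 Debian-10+deb9u4"))
```

The extracted software field (here `OpenSSH_7.4p1`) is what a version check against known-vulnerable releases would match on.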

When the goal is to broaden Threat Intelligence coverage, the scan target is the entire Internet. Scanning the public address space (about 3.7 billion IPv4 addresses, excluding reserved ones) can yield the following useful information:

- Which hosts are affected by vulnerabilities widely exploited in current malicious campaigns, and are therefore potential sources of malicious activity.

- Which hosts are botnet command-and-control (C2) servers [1]; contacts with them can reveal compromised hosts inside the protected perimeter.

- Which hosts belong to the non-public part of distributed anonymity networks, which can be used to leave the protected perimeter covertly and without control.

- Additional information about hosts that show up in monitoring system alerts.
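The figure of roughly 3.7 billion public addresses can be reproduced with Python's standard `ipaddress` module. The reserved list in this sketch is an assumption: a simplified selection from the IANA special-purpose registry plus the multicast and former class E ranges. Real scanners such as Zmap ship their own, more detailed exclusion configuration.

```python
import ipaddress

# ASSUMPTION: simplified list of non-public IPv4 blocks (IANA special-purpose
# registry entries, multicast 224.0.0.0/4 and reserved 240.0.0.0/4).
RESERVED_BLOCKS = [
    "0.0.0.0/8", "10.0.0.0/8", "100.64.0.0/10", "127.0.0.0/8",
    "169.254.0.0/16", "172.16.0.0/12", "192.0.0.0/24", "192.0.2.0/24",
    "192.88.99.0/24", "192.168.0.0/16", "198.18.0.0/15", "198.51.100.0/24",
    "203.0.113.0/24", "224.0.0.0/4", "240.0.0.0/4",
]


def public_ipv4_count(reserved=RESERVED_BLOCKS):
    """Number of IPv4 addresses left after removing the reserved blocks."""
    # collapse_addresses merges overlapping/adjacent blocks, so nothing is counted twice.
    blocks = ipaddress.collapse_addresses(ipaddress.ip_network(b) for b in reserved)
    return 2**32 - sum(block.num_addresses for block in blocks)


if __name__ == "__main__":
    print(f"{public_ipv4_count():,}")  # 3,702,258,432
```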

Tool selection

Over the years, a large number of network scanning tools have been developed. Current ones include the following scanners:

- Nmap [2]

- Zmap [3]

- Masscan [4]

Let us briefly consider the main advantages and disadvantages of these tools for enriching TI at Internet scale.

Nmap

Perhaps the best-known network scanner, created 20 years ago. Thanks to custom scripts (via the Nmap Scripting Engine, NSE), it is an extremely flexible tool that is not limited to collecting application banners. Quite a lot of NSE scripts have been written to date, many of them freely available. Because Nmap tracks the state of every connection (in other words, it works synchronously), it is too slow for large networks such as the Internet. It is, however, well worth running on small sets of addresses obtained with faster tools, thanks to the power of NSE.
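The two-stage approach (a fast asynchronous scanner first, then Nmap with NSE on the short list) can be sketched as follows. The sketch assumes Masscan's list output (`-oL`), whose records look like `open tcp 443 192.0.2.10 1539000000`; the helper names, file names and the choice of the `ssl-cert` NSE script are illustrative. The Nmap options used (`-n`, `-Pn`, `-p`, `--script`, `-iL`, `-oX`) are standard ones, but the command is only printed here, not executed.

```python
import shlex


def parse_masscan_list(lines):
    """Collect (ip, port) pairs of open TCP ports from Masscan -oL output."""
    hits = set()
    for line in lines:
        fields = line.split()
        # Skip comments ("#masscan", "# end") and anything that is not an open TCP record.
        if len(fields) < 5 or fields[0] != "open" or fields[1] != "tcp":
            continue
        hits.add((fields[3], int(fields[2])))
    return hits


def build_nmap_argv(targets_file, port, scripts):
    """Nmap command line for a targeted NSE run over a precomputed host list."""
    return [
        "nmap", "-n", "-Pn",            # no DNS resolution, no host discovery
        "-p", str(port),
        "--script", ",".join(scripts),
        "-iL", targets_file,            # read targets from a file
        "-oX", "nmap-nse.xml",          # XML output for later parsing
    ]


if __name__ == "__main__":
    sample = ["#masscan", "open tcp 443 192.0.2.10 1539000000", "# end"]
    hosts = sorted(ip for ip, port in parse_masscan_list(sample) if port == 443)
    print("\n".join(hosts))  # contents for targets-443.txt
    print(shlex.join(build_nmap_argv("targets-443.txt", 443, ["ssl-cert"])))
```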

About Nmap speed

Despite its initially modest performance, Nmap has since gained a lot of functionality that improves scanning speed, including parallel modes of operation and setting the rate in packets per second. However, even with aggressively tuned settings, Nmap lags behind its asynchronous competitors. Scanning a /15 subnet on port 443/tcp from a virtual machine with one Xeon Gold 6140 vCPU and a gigabit link, we get:

- 71.08 seconds for Nmap with -T5 -n -Pn -p 443 --open --min-rate 1000000 --randomize-hosts --defeat-rst-ratelimit -oG nmap.txt

- 9.15 seconds for Zmap with -p 443 -o zmap.txt

Zmap

A well-known, though not the first, asynchronous network scanner, released in 2013. According to the developers' paper presented at the 22nd USENIX Security Symposium [5], the tool can scan the entire public address range (one port) in under 45 minutes from an ordinary computer with a gigabit Internet connection. Zmap's advantages include:

- High speed: with the PF_RING framework and a 10-gigabit link, the theoretical time to scan the public IPv4 address space is about 5 minutes (one port).

- Randomized ordering of scanned addresses.

- Probe modules for TCP SYN scans, ICMP, DNS queries, UPnP, BACnet [6] and UDP probes.

- Packages in the repositories of common GNU/Linux distributions (macOS and BSD are supported as well).

- A family of related utility projects that extend the scanner's functionality.
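Randomizing the address order without storing a shuffled list of billions of addresses is, according to the Zmap paper [5], done by iterating over a cyclic multiplicative group modulo a prime slightly larger than the address space. Below is a simplified Python sketch of that idea, not Zmap's actual code; the function names are hypothetical, and the demo uses a small prime so it runs instantly.

```python
import random


def prime_factors(n):
    """Distinct prime factors of n by trial division (fast enough for n around 2**32)."""
    factors, d = set(), 2
    while d * d <= n:
        while n % d == 0:
            factors.add(d)
            n //= d
        d += 1
    if n > 1:
        factors.add(n)
    return factors


def find_generator(p, rng):
    """Pick a random primitive root g modulo the prime p (p >= 3)."""
    factors = prime_factors(p - 1)
    while True:
        g = rng.randrange(2, p)
        # g generates the whole group iff g**((p-1)/q) != 1 mod p for every prime q | p-1
        if all(pow(g, (p - 1) // q, p) != 1 for q in factors):
            return g


def cyclic_permutation(n, p, rng=None):
    """Yield every integer in [0, n) exactly once, in pseudo-random order.

    p must be a prime greater than n. Only the generator and the current
    element are kept in memory, however large n is.
    """
    rng = rng or random.Random()
    g = find_generator(p, rng)
    start = x = rng.randrange(1, p)    # random starting element of the group
    while True:
        if x <= n:                      # elements are 1..p-1; keep those mapping into [0, n)
            yield x - 1
        x = x * g % p
        if x == start:                  # the full cycle of length p-1 has been walked
            return


if __name__ == "__main__":
    print(list(cyclic_permutation(10, 11, random.Random(1))))
```

For the real IPv4 space, n would be 2**32 and p the prime 2**32 + 15 described in the Zmap paper; each yielded integer would then be converted to an address and checked against the exclusion list before a probe is sent.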

As for disadvantages, Zmap can currently scan only one port per run.

Among the Zmap-related projects that extend its functionality for the tasks discussed here, the following stand out:

- ZGrab is an application-protocol scanner (an advanced banner grabber) supporting HTTP, HTTPS, SSH, Telnet, FTP, SMTP, POP3, IMAP, Modbus, BACnet, Siemens S7 and Tridium Fox.

- ZDNS is a utility for fast DNS queries.

- ZTag is a utility for tagging the scan results produced by ZGrab.

- ZBrowse is a headless-Chrome-based utility for tracking changes in website content.

About ZGrab's capabilities

ZGrab, the advanced application-protocol scanner, has already reached version 2.0 (it does not yet have full feature parity with 1.x, but once parity is reached the new version can fully replace the old one). Thanks to its structured output format (JSON), it lets you filter scan results flexibly. For example, collecting only the issuer and subject Distinguished Names from HTTPS certificates takes a fairly simple command:

zmap --target-port=443 --output-fields=saddr | ./zgrab2 tls -o - | jq -r '.ip + "|" + .data.tls.result.handshake_log.server_certificates.certificate.parsed.issuer_dn + "|" + .data.tls.result.handshake_log.server_certificates.certificate.parsed.subject_dn' > zmap-dn-443.txt

Masscan

An asynchronous scanner [4] released in the same year as Zmap, with command syntax similar to Nmap's.

Its creator, Robert David Graham, claimed higher performance than any asynchronous scanner of the time, Zmap included, achieved by using a custom TCP/IP stack.

Advantages of the scanner:

- High performance: theoretically up to 10 million packets per second, enough to scan the entire public IPv4 space (one port) in a few minutes over a 10-gigabit link, on par with Zmap using PF_RING.

- Support for TCP, SCTP and UDP (UDP probes use payloads borrowed from Nmap).

- Randomized ordering of scanned addresses.

- Both an IP range and a port range can be specified in a single scan.

- Multiple output formats (XML, grepable, JSON, binary, plain list).

At the same time, the scanner has several disadvantages:

- Because it uses its own TCP/IP stack, it is recommended to scan from a dedicated IP address to avoid conflicts with the OS network stack.

- Its banner-grabbing options are quite limited compared with ZGrab.
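The "few minutes on a 10-gigabit link" figures quoted for both Zmap and Masscan follow from Ethernet line-rate arithmetic: each minimum-size 64-byte frame occupies 20 more bytes on the wire (preamble, start-of-frame delimiter and inter-frame gap), so a link of B bit/s carries at most B / 672 such frames per second. A back-of-the-envelope sketch, assuming one minimum-size probe per address and about 3.7 billion public addresses:

```python
PUBLIC_IPV4 = 3_700_000_000  # approximate size of the public IPv4 space


def max_pps(link_bps, frame_bytes=64):
    """Theoretical Ethernet frame rate: each frame also costs 8 B preamble/SFD + 12 B gap."""
    return link_bps / ((frame_bytes + 20) * 8)


def sweep_minutes(pps, addresses=PUBLIC_IPV4, probes_per_address=1):
    """Time to send one probe to every address at a constant packet rate."""
    return addresses * probes_per_address / pps / 60


if __name__ == "__main__":
    for label, bps in (("1 Gbit/s", 1e9), ("10 Gbit/s", 10e9)):
        pps = max_pps(bps)
        print(f"{label}: {pps / 1e6:.2f} Mpps, ~{sweep_minutes(pps):.0f} min")
    print(f"Masscan at 10 Mpps: ~{sweep_minutes(10e6):.1f} min")
```

This gives about 41 minutes at 1 Gbit/s and about 4 minutes at 10 Gbit/s, in line with the published estimates; real scans spend some additional time, for example waiting for late responses.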

Scanner Comparison Chart

The summary table below presents a comparison of the functionality of the scanners considered.

Values in table rows

"++" - very well implemented

"+" - implemented

"±" - implemented with restrictions

"-" - not implemented

Choosing targets and scanning methods

Once the tools are chosen, you need to decide on the object and the purpose of the scan, that is, what to scan and why. The first is usually clear (the public IPv4 address space minus a number of exclusions), while the second depends entirely on the desired end result. For example:

- To identify hosts affected by a particular vulnerability, it makes sense to first sweep the target range with a fast tool (Zmap, Masscan) to find hosts with open ports of the vulnerable service; once the address list is final, run Nmap with the appropriate NSE script, if one exists, or write a custom script based on what security researchers have published about the vulnerability. Sometimes a simple grep over the collected application banners is enough, since many services report their version in them.

- To identify hidden infrastructure, both anonymity networks and C2 nodes (that is, botnet command-and-control servers), you will need to create custom payloads/probes.

- For a wide range of tasks, such as identifying compromised hosts or parts of anonymity-network infrastructure, a great deal can be learned by collecting and parsing the certificates presented during the TLS handshake.
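The jq filter shown earlier for ZGrab2 output can also be expressed in Python when further processing is needed, for example keeping only self-signed certificates (issuer DN equal to subject DN). The JSON path is taken from that jq command; the helper names and the self-signed filter are illustrative assumptions, and records without a completed TLS handshake are simply skipped.

```python
import json


def cert_dns(record):
    """Return (ip, issuer_dn, subject_dn) from one ZGrab2 tls record, or None."""
    try:
        parsed = (record["data"]["tls"]["result"]["handshake_log"]
                  ["server_certificates"]["certificate"]["parsed"])
    except (KeyError, TypeError):
        return None  # connection failed or no certificate in the handshake
    return record.get("ip"), parsed.get("issuer_dn", ""), parsed.get("subject_dn", "")


def self_signed(lines):
    """Yield 'ip|issuer|subject' for certificates whose issuer DN equals the subject DN."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        dns = cert_dns(json.loads(line))
        if dns and dns[1] and dns[1] == dns[2]:
            yield "|".join(str(part) for part in dns)
```

In a pipeline, `self_signed(sys.stdin)` could consume ZGrab2 output directly in place of the jq step.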

Choosing the infrastructure

The fact that modern fast scanners deliver impressive results on the modest resources of an average processor does not mean the load on the network infrastructure will be negligible. Scanning traffic consists of many small packets, so the packet rate is high. In our experience, a single core of an average processor (a CPU from recent years, in a virtual environment, under any OS, with no TCP/IP stack tuning) can generate about 100-150 thousand packets per second, which occupies only about 50 Mbps of bandwidth yet places a serious load on software routers. Hardware network equipment can also run into trouble as its ASICs hit their performance limits. At the same time, at 100-150 Kpps (thousands of packets per second), a sweep of the public IPv4 range takes roughly 7 to 10 hours or more, which is a considerable amount of time. For a truly fast scan, use a distributed network of scanning nodes that split the target pool between them. Randomizing the scan order is also necessary, so that the load on Internet links and on providers' last-mile equipment stays low.
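The scan-time arithmetic above, and the idea of splitting one sweep across several nodes, can be sketched in Python. This is an illustration under simplifying assumptions: every node derives the same pseudo-random order from a shared seed and takes every N-th target, which is similar in spirit to Zmap's sharding options but is not their implementation. `shard_targets` and `sweep_hours` are hypothetical helpers, and the in-memory shuffle only suits small target lists.

```python
import random


def shard_targets(targets, num_shards, shard_id, seed):
    """Targets assigned to one node: a shared shuffled order, every num_shards-th element."""
    order = list(targets)
    random.Random(seed).shuffle(order)  # same seed -> same order on every node
    return order[shard_id::num_shards]


def sweep_hours(addresses, pps_per_node, num_nodes=1):
    """Wall-clock hours for one probe per address, with nodes sending in parallel."""
    return addresses / (pps_per_node * num_nodes) / 3600


if __name__ == "__main__":
    space = 3_700_000_000  # approximate public IPv4 space
    for pps in (100_000, 150_000):
        print(f"{pps // 1000} Kpps: 1 node {sweep_hours(space, pps):.1f} h, "
              f"10 nodes {sweep_hours(space, pps, 10):.1f} h")
```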

Difficulties and subtleties

In practice, mass network scanning can run into a number of difficulties, both technical and organizational.

Performance and Availability Issues

As mentioned earlier, a mass network scan generates traffic with a high packets-per-second (PPS) rate, putting a heavy load on both the local infrastructure and the network of the provider the scanning nodes are connected to. Before running any scans for research purposes, it is essential to coordinate all actions with local administrators and provider representatives. Conversely, performance problems can arise on the scanning nodes themselves when they are virtual servers rented from hosting providers.

Not all VPSs are equally useful

With VPSs rented outside the mainstream hosting providers, you may find that even on well-provisioned VMs (several vCPUs and a couple of gigabytes of memory) Zmap and Masscan packet rates do not rise above a couple of dozen Kpps. The usual culprit is obsolete hardware combined with a poorly matched virtualization stack. In the author's experience, reasonably solid performance guarantees can be obtained only from the market leaders.

Also, when identifying vulnerable hosts, keep in mind that certain NSE checks can cause a denial of service on the scanned hosts. Such an outcome is not only unethical but clearly illegal, and may result in liability, including criminal liability.

What are we looking for and how?

To find something, you need to know how to look for it. Threats evolve constantly, and identifying them requires ongoing analytical work. Analytics published in research papers sometimes become outdated, which forces you to conduct your own research.

One famous example

As an example, consider the 2013 study [5] by the Zmap authors on identifying Tor network nodes. At the time of publication, by analyzing certificate chains and spotting the specially generated subject names in self-signed certificates, they were able to find about 67 thousand Tor nodes on port tcp/443 and about 2.9 thousand on tcp/9001. Today this method detects far fewer Tor nodes (relays use a wider variety of ports, obfs transports are in use, and relays are moving to Let's Encrypt certificates), which forces us to use other analytical methods to solve this problem.
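A name-based heuristic of this kind can be sketched as a pattern match on certificate Distinguished Names (for example, those extracted from ZGrab2 output). The pattern is an assumption made for illustration, not taken from the Zmap paper: it supposes that the generated names have the form `www.` + 8 to 20 random lowercase base32 characters + `.net` or `.com`. Check it against current Tor sources and real data before relying on it; as noted above, such a check will miss relays using obfs transports or ordinary certificates.

```python
import re

# ASSUMPTION: a randomly generated Tor-style certificate name looks like
# "www.<8-20 lowercase base32 characters>.net" or ".com". Verify before use.
RANDOM_CN = re.compile(r"(?:^|,\s*)CN=www\.[a-z2-7]{8,20}\.(?:net|com)(?:,|$)")


def looks_like_tor_cert(subject_dn, issuer_dn):
    """Heuristic: both subject and issuer CNs match the random-hostname pattern."""
    return bool(RANDOM_CN.search(subject_dn)) and bool(RANDOM_CN.search(issuer_dn))


if __name__ == "__main__":
    print(looks_like_tor_cert("CN=www.abcdefgh23.net", "CN=www.qrstuvwxyz.com"))  # True
    print(looks_like_tor_cert("CN=www.example.com", "CN=R3, O=Let's Encrypt, C=US"))  # False
```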

Abuse complaints

Internet scanning is almost certain to generate a stream of abuse complaints. Particularly annoying are automatically generated complaints triggered by a couple of dozen SYN packets. If scans are repeated regularly, manually written complaints may follow as well.

Who complains most often

The main sources of complaints (mostly automated) are educational institutions in the .edu zone. From experience, you should especially avoid scanning addresses from ASNs belonging to Indiana University (USA). The presentations by the authors of Zmap [5] and Masscan [7] also contain several curious examples of inappropriate reactions to network scanning.

These complaints should not be taken lightly, because:

- In response to complaints, the hosting provider will most often block traffic to or from the rented virtual machines, or even suspend them.

- The ISP may disconnect the uplink to avoid the risk of its autonomous system being blocked.

Best practices for minimizing complaints include:

- Read the terms of service of your Internet or hosting provider.

- Publish an information page on the scanning source addresses explaining the purpose of the scans, and add corresponding explanations to DNS TXT records.

- Explain the scans when responding to abuse complaints.

- Maintain a scan exclusion list, add subnets to it on request, and explain on the information page how to request an exclusion.

- Scan no longer and no more often than the task requires.

- Spread scanning traffic across source and destination addresses, and over time, where possible.
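Honoring an exclusion list is easy to automate. A minimal sketch with Python's `ipaddress` module, assuming a plain-text IPv4 list with one address or CIDR block per line and `#` comments (Zmap's blocklist file uses a similar layout); the function names are hypothetical.

```python
import ipaddress


def load_exclusions(lines):
    """Parse exclusion entries (one IPv4 address or CIDR per line, '#' starts a comment)."""
    networks = []
    for line in lines:
        entry = line.split("#", 1)[0].strip()
        if entry:
            networks.append(ipaddress.ip_network(entry, strict=False))
    # Merge overlapping and adjacent blocks to keep lookups short.
    return list(ipaddress.collapse_addresses(networks))


def is_excluded(ip, exclusions):
    """True if the address falls inside any excluded block."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in exclusions)


if __name__ == "__main__":
    optout = load_exclusions([
        "# opt-out requests",
        "198.51.100.0/24   # requested by the network owner",
        "203.0.113.7",
    ])
    targets = ["198.51.100.42", "203.0.113.7", "192.0.2.1"]
    print([t for t in targets if not is_excluded(t, optout)])  # ['192.0.2.1']
```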

Network Scan Outsourcing

Running mass scans on your own requires a careful approach (the risks are described above), and you should be aware that if the analytical processing of the results is not deep enough, the end result may be unsatisfactory. At a minimum, the technical part can be simplified by using existing commercial services, such as:

- Shodan [8]

- Censys [9]

- ZoomEye [10]

More services

Besides the services mentioned, there are quite a few other projects related in one way or another to Internet-wide scanning. For example:

- Punk.sh (formerly the PunkSPIDER web search engine)

- Project Sonar (dataset with the results of network scans from Rapid7)

- Thingful (another search engine for IoT)

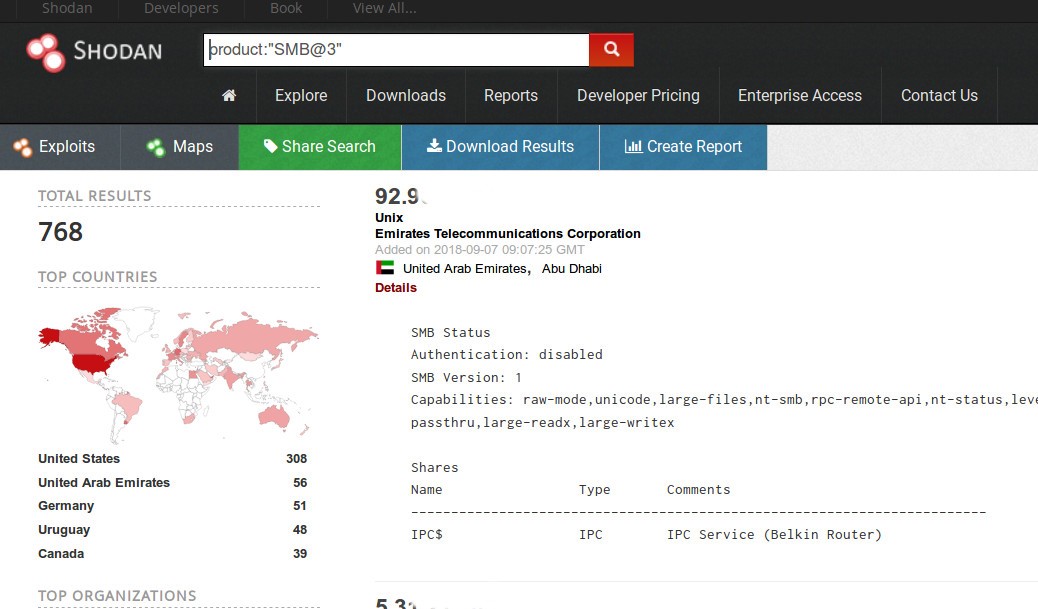

Shodan

The first and best-known search engine for services exposed on the Internet [8], originally created as a search engine for IoT and launched in 2009 by John Matherly [11]. The search engine currently offers several levels of access. Without registration, its capabilities are very basic. Registering and purchasing a membership unlocks the following functionality:

- Search with basic filters.

- Detailed host information ("raw data").

- A "basic" API that integrates with common tools such as Maltego, Metasploit, Nmap and others.

- Export of search results (paid for with "credits", the service's internal currency).

- On-demand scanning of individual addresses and ranges (also paid for with credits).
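As an example of the API, the official `shodan` Python library (installed with `pip install shodan`) provides a `Shodan(api_key).search(query)` call that returns a dictionary with a `matches` list, where each match carries fields such as `ip_str`, `port` and the raw banner in `data`. The sketch below separates the network call from the processing so the latter can be tried on canned data; the query and helper names are illustrative, and filtered searches need a membership as described above.

```python
def shodan_search(api_key, query):
    """Run a search through the official library (needs an API key and network access)."""
    import shodan  # imported lazily so the parsing helper works without the package

    return shodan.Shodan(api_key).search(query)


def summarize(results):
    """Reduce a Shodan search result to (ip, port, first banner line) tuples."""
    rows = []
    for match in results.get("matches", []):
        banner = match.get("data", "")
        first_line = banner.splitlines()[0] if banner else ""
        rows.append((match.get("ip_str"), match.get("port"), first_line))
    return rows


if __name__ == "__main__":
    canned = {"total": 1, "matches": [
        {"ip_str": "192.0.2.5", "port": 22, "data": "SSH-2.0-OpenSSH_7.4\r\nKey type: ssh-rsa"},
    ]}
    print(summarize(canned))
    # With a real key: summarize(shodan_search("YOUR_API_KEY", 'product:"OpenSSH" port:22'))
```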

These capabilities are already sufficient for basic OSINT [12], but the search engine reveals its full potential only with an Enterprise subscription, which adds:

- On-demand scanning of the entire public IPv4 range, including a specified range of ports and protocol-specific banner grabbers.

- Real-time notifications of scan results.

- The ability to download raw scan results for further analysis (without limits; the entire database is available).

- The ability to develop custom collectors for specific tasks (non-standard protocols, etc.).

- Access to historical scan results (going back to October 2015).

Several related projects have been built around Shodan, such as Malware Hunter [1], a honeypot detector (Honeyscore) and Exploits, that enrich the scan results.

Censys

A search engine for IoT and more [9], created by Zmap author Zakir Durumeric and publicly presented in 2015. It uses Zmap technology and a number of related projects (ZGrab and ZTag). Unlike Shodan, without registration it is limited to 5 queries per day from a single IP address. Registration expands the options: API access becomes available and the number of results per query increases (up to 1000), but the fullest access, including downloading historical data, is available only on the Enterprise plan.

Its advantages over Shodan include:

- No restrictions on search filters in the basic plans.

- Fast refresh of automatic scan results (daily, according to the authors; Shodan updates considerably more slowly).

The disadvantages include:

- Fewer ports scanned than Shodan.

- No on-demand scanning (of either IP ranges or ports).

- Shallower out-of-the-box enrichment of results (unsurprising, given the many Shodan tools implemented as related projects).

If the ports Censys covers are sufficient for the task at hand, the lack of on-demand scanning should not be a serious obstacle, thanks to the frequent refresh of scan results.

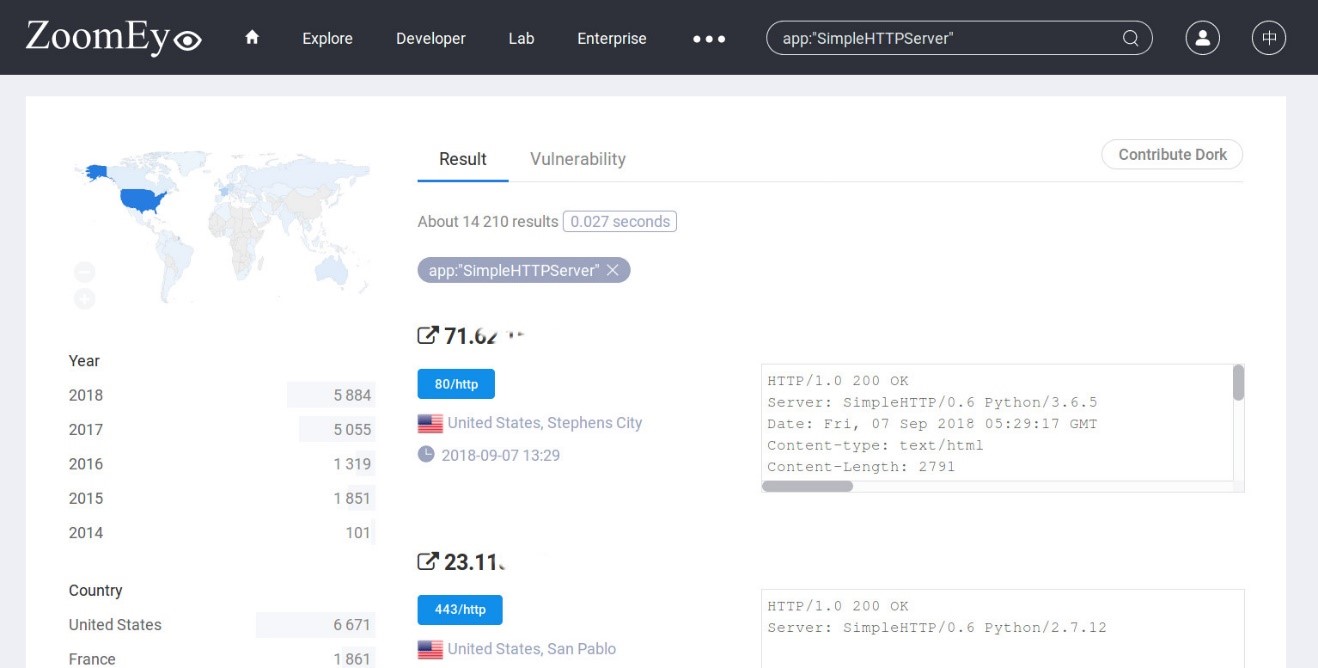

ZoomEye

An IoT search engine created in 2013 by the Chinese information security company Knowsec Inc [10]. It currently runs on the company's own tools, Xmap (a host scanner) and Wmap (a web scanner). The search engine collects information on a fairly wide range of ports, and results can be categorized by many criteria (ports, services, operating systems, applications), with details for each host (the contents of application banners). Search results also list possible vulnerabilities in the identified software, taken from the related SeeBug project (without checking whether they actually apply). Registered accounts get an API and web search with the full set of filters, but with a limit on the number of results displayed; removing the restrictions requires purchasing an Enterprise plan. Advantages of the search engine include:

- A large number of scanned ports (roughly comparable to Shodan).

- Extended web support.

- Identification of application software and operating systems.

The disadvantages include:

- Infrequent updates of scan results.

- No on-demand scanning.

- Enrichment of scan results is limited to identifying services, application software, operating systems and potential vulnerabilities.

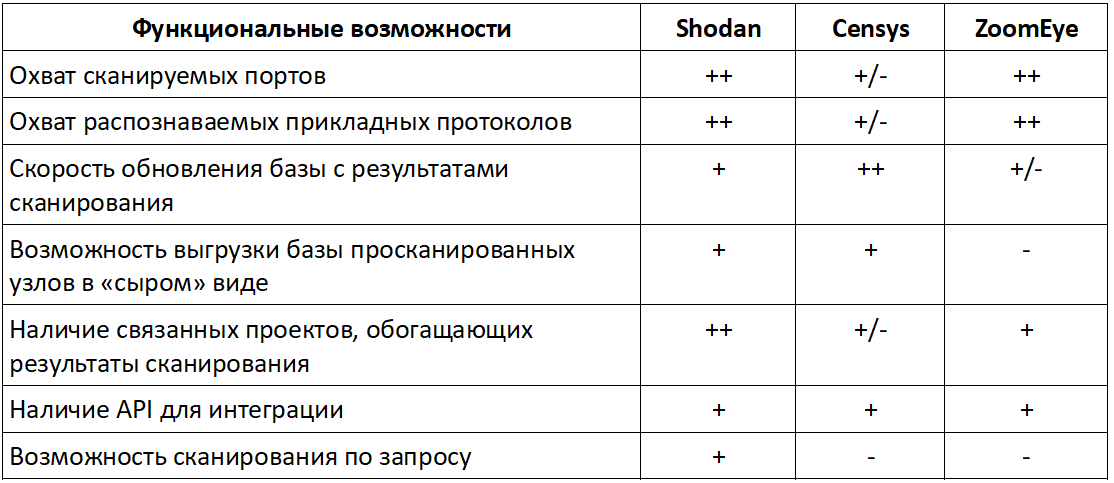

Comparative table of services

The summary table below provides a comparison of the functionality of the services considered.

Values in table rows

"++" - very well implemented

"+" - implemented

"±" - implemented with restrictions

"-" - not implemented

Scanning ourselves

Your own infrastructure can also be a useful source of TI, showing which new hosts and services have appeared on the network and whether they are vulnerable or even malicious. While the perimeter can be scanned with external services (Shodan, for example, officially supports this use case), everything inside the perimeter has to be done in-house. The range of network analysis tools here is quite broad: passive analyzers such as Bro [13], Argus [14], Nfdump [15] and p0f [16], and active scanners: Nmap, Zmap, Masscan and their commercial competitors. The IVRE framework [17] can help interpret the collected results, effectively giving you your own Shodan/Censys-like tool.

The framework was developed by a group of information security researchers; one of its authors is Pierre Lalet [19], an active developer of Scapy [18]. The framework's features include:

- Visual analytics for identifying patterns and abnormal deviations.

- An advanced search engine with detailed parsing of scan results.

- Integration with third-party tools through a Python API.

IVRE is also well suited for analyzing large scans of the Internet.
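At its core, tracking "which new hosts and services have appeared" is a set difference between successive scan snapshots. A minimal sketch, assuming each scan has already been reduced to (ip, port) pairs, for example from Nmap or Masscan output; `diff_scans` is a hypothetical helper and not part of IVRE.

```python
def diff_scans(previous, current):
    """Compare two snapshots of (ip, port) pairs from successive internal scans."""
    prev, cur = set(previous), set(current)
    prev_hosts = {ip for ip, _ in prev}
    return {
        "new_hosts": sorted({ip for ip, _ in cur} - prev_hosts),
        "new_services": sorted(cur - prev),
        "closed_services": sorted(prev - cur),
    }


if __name__ == "__main__":
    monday = [("10.0.0.5", 22), ("10.0.0.5", 443), ("10.0.0.9", 80)]
    tuesday = [("10.0.0.5", 22), ("10.0.0.9", 80), ("10.0.0.9", 8080), ("10.0.0.23", 3389)]
    print(diff_scans(monday, tuesday))
```

New entries are natural candidates for the vulnerability checks and banner analysis described earlier.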

Conclusions

Scanning and active network reconnaissance are excellent tools that information security researchers have long used. For the "classic" security practitioner, however, these methods are still new. Combining OSINT and offensive methods with classic defensive tools can significantly strengthen protection and make it proactive.

Links

[0] https://detect-respond.blogspot.com/2013/03/the-pyramid-of-pain.html

[1] https://malware-hunter.shodan.io/

[2] https://nmap.org

[3] https://zmap.io

[4] https://github.com/robertdavidgraham/masscan

[5] https://zmap.io/paper.pdf

[6] https://en.wikipedia.org/wiki/BACnet

[7] https://www.defcon.org/images/defcon-22/dc-22-presentations/Graham-McMillan-Tentler/DEFCON-22-Graham-McMillan-Tentler-Masscaning-the-Internet.pdf

[8] https://shodan.io

[9] https://censys.io

[10] https://www.zoomeye.org

[11] https://twitter.com/achillean

[12] https://en.wikipedia.org/wiki/Open-source_intelligence

[13] https://www.bro.org/

[14] http://qosient.com/argus/

[15] http://nfdump.sourceforge.net/

[16] http://lcamtuf.coredump.cx/p0f/

[17] https://github.com/cea-sec/ivre

[18] https://scapy.net/

[19] https://github.com/pl-

Mikhail Larin, expert at the Jet CSIRT Information Security and Response Center, Jet Infosystems.

Source: https://habr.com/ru/post/426025/
