A/B Tests on Android from A to Z

Most articles on A/B testing focus on web development, and although the tool is just as relevant for other platforms, mobile development has been unfairly left out. We will try to correct this by describing the main steps and the specifics of implementing and running A/B tests on mobile platforms.

The A/B testing concept

An A/B test is used to verify hypotheses aimed at improving an application's key metrics. In the simplest case, users are divided into 2 groups: control (A) and experimental (B). The feature that implements the hypothesis is rolled out only to the experimental group. Then, based on a comparison of the metrics for each group, we draw a conclusion about whether the feature is effective.

Implementation

1. Splitting users into groups

First, we need to decide how to split users into groups in the desired proportion, with the ability to change that proportion dynamically. This is especially useful if it suddenly turns out that a new feature increases conversion by 146% but has been rolled out to, say, only 5% of users! We will surely want to roll it out to all users right away, without publishing an app update to the store and waiting for it to reach users.
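Firebase performs the split for us, but the idea behind a dynamically adjustable split is worth a quick sketch. A common approach, assumed here and not necessarily what Firebase does internally, is deterministic bucketing: hash the user ID together with an experiment ID into one of 100 buckets and put the user into the experimental group if the bucket is below the current rollout percentage. Raising the percentage from 5 to 100 then only adds users to the experimental group and never moves anyone back. The names `assignBucket` and `isInExperimentalGroup` are hypothetical.

```kotlin
// Hypothetical helper: deterministic bucketing of users into 100 buckets.
// String.hashCode() is stable on the JVM; production code would usually use a
// stronger hash (e.g. MurmurHash) for a more uniform spread.
fun assignBucket(userId: String, experimentId: String): Int {
    val hash = "$experimentId:$userId".hashCode()
    // Normalize negative hash codes into the 0..99 range
    return ((hash % 100) + 100) % 100
}

// A user is in the experimental group (B) if their bucket is below the rollout percentage.
// The percentage itself can be changed remotely without an app update.
fun isInExperimentalGroup(userId: String, experimentId: String, rolloutPercent: Int): Boolean {
    require(rolloutPercent in 0..100) { "rolloutPercent must be in 0..100" }
    return assignBucket(userId, experimentId) < rolloutPercent
}
```

Because the bucket depends only on the IDs, a user stays in the same group across launches, which is exactly what the experiment needs.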


Of course, the split could be implemented on the server, pulling in backend developers whenever something needs to change. In practice, however, the backend is often developed on the customer's side or by a third-party company, and the server developers already have enough work, so it is rarely (if ever) possible to adjust the split quickly. This option does not suit us. This is where Firebase Remote Config comes to the rescue!

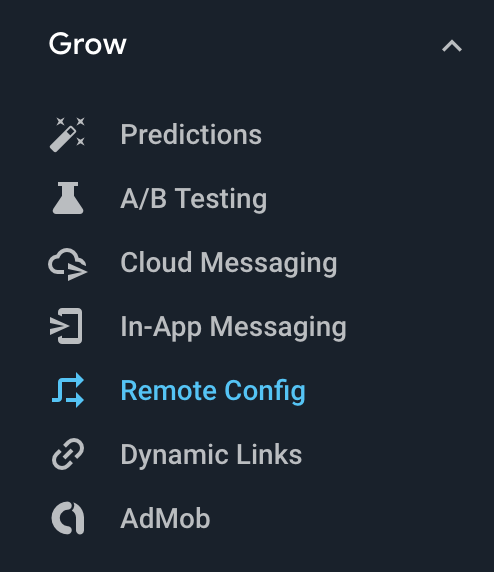

In the Firebase Console, the Grow section contains a Remote Config tab where you can create your own config, which Firebase delivers to your application's users.

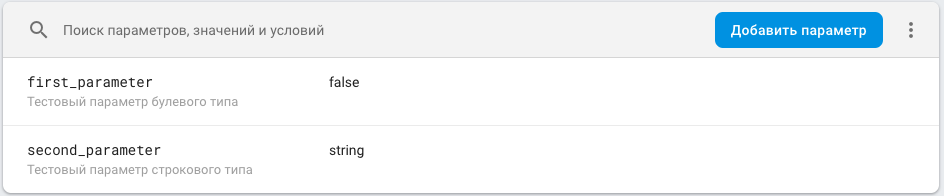

The config is a map of <parameter key, parameter value> pairs, where a parameter's value can depend on conditions. For example, users with a specific application version get the value X, and everyone else gets Y. For more information about the config, see the corresponding section of the documentation.
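To make the "map with conditions" idea concrete, here is a toy model of how a parameter with conditional values could be resolved. It is an illustration only: in reality Firebase evaluates the conditions on its side and the app simply receives the resulting values. `AppInfo`, `ConditionalValue`, `ConfigParameter` and `resolveConfig` are hypothetical types and functions; as in the console, conditional values are checked in order and the first match wins.

```kotlin
// Hypothetical description of the app instance the config is evaluated for
data class AppInfo(val versionName: String)

// A value that applies only when its condition holds
data class ConditionalValue(val condition: (AppInfo) -> Boolean, val value: String)

// One config parameter: conditional values are checked in order, otherwise the default applies
data class ConfigParameter(
    val defaultValue: String,
    val conditionalValues: List<ConditionalValue> = emptyList()
) {
    fun resolve(app: AppInfo): String =
        conditionalValues.firstOrNull { it.condition(app) }?.value ?: defaultValue
}

// The config itself: parameter key -> parameter, resolved into key -> value for one app
fun resolveConfig(config: Map<String, ConfigParameter>, app: AppInfo): Map<String, String> =
    config.mapValues { (_, parameter) -> parameter.resolve(app) }
```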

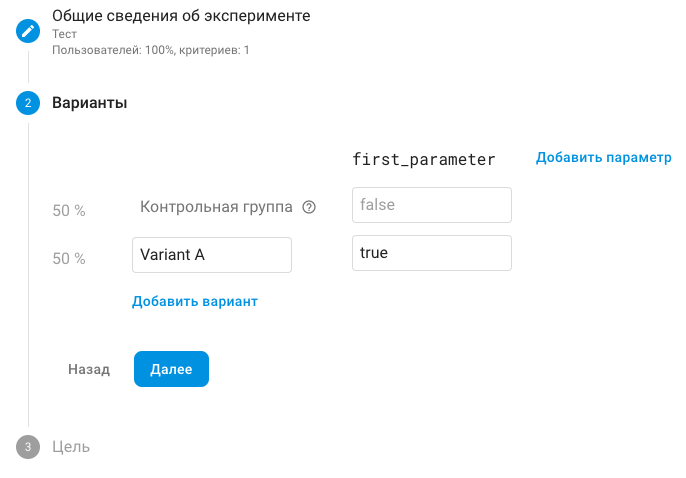

The Grow section also has an A/B Testing tab, where we can run tests with all the perks described above. The test parameters are keys from our Remote Config. In theory, you can create new parameters directly in the A/B test, but this only adds unnecessary confusion, so it is better to add the corresponding parameter to the config. The parameter's default value in the config traditionally corresponds to the control group, and a non-default value corresponds to the experimental group.

Note: the control group is usually called group A and the experimental group is called group B. As the screenshot shows, Firebase names the experimental group “Variant A” by default, which causes some confusion, but nothing prevents you from renaming it.

Next, we start the A/B test, and Firebase splits users into groups that receive different parameter values. Once the client receives the config, we extract the required parameter from it and enable or disable the new feature based on its value. Traditionally, the parameter is named after the feature and has 2 values: true means the feature is enabled, false means it is not. Read more about A/B test settings in the corresponding section of the documentation.
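On the client this boils down to reading one boolean. The sketch below hides Remote Config behind a small interface so the logic can be shown (and tested) without the Firebase SDK; in a real app the implementation would delegate to `FirebaseRemoteConfig.getBoolean(key)`. The `FeatureConfig` interface, the `new_order_status_screen` key and the in-app defaults map are assumptions for illustration.

```kotlin
// Hypothetical abstraction over Remote Config; a Firebase-backed implementation
// would call FirebaseRemoteConfig.getInstance().getBoolean(key).
interface FeatureConfig {
    /** Returns the fetched value, or null if the key was not delivered. */
    fun getBooleanOrNull(key: String): Boolean?
}

// In-app defaults: false means "feature off", i.e. the control group (A)
val inAppDefaults = mapOf("new_order_status_screen" to false)

// Fetched value wins; otherwise fall back to the in-app default, then to "off"
fun isFeatureEnabled(config: FeatureConfig, key: String): Boolean =
    config.getBooleanOrNull(key) ?: inAppDefaults[key] ?: false

// Map-backed test double standing in for a fetched config
class MapFeatureConfig(private val values: Map<String, Boolean>) : FeatureConfig {
    override fun getBooleanOrNull(key: String): Boolean? = values[key]
}
```

Keeping the default equal to the control value means that any failure to get the config quietly puts the user into group A.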

2. Code

We will not dwell on integrating Firebase Remote Config itself; it is described in detail here.

Let us look at how to organize the code for A/B testing. If we are only changing the color of a button, there is nothing to organize. Instead, we will consider a case where, depending on a Remote Config parameter, either the current screen (for the control group) or a new screen (for the experimental group) is displayed.

Keep in mind that once the A/B test ends, one of the screen variants will have to be removed, so the code should be organized to minimize changes to the current implementation. All files related to the new screen should be named with the AB prefix and placed in folders with the same prefix.

With MVP in the presentation layer, it will look something like this:

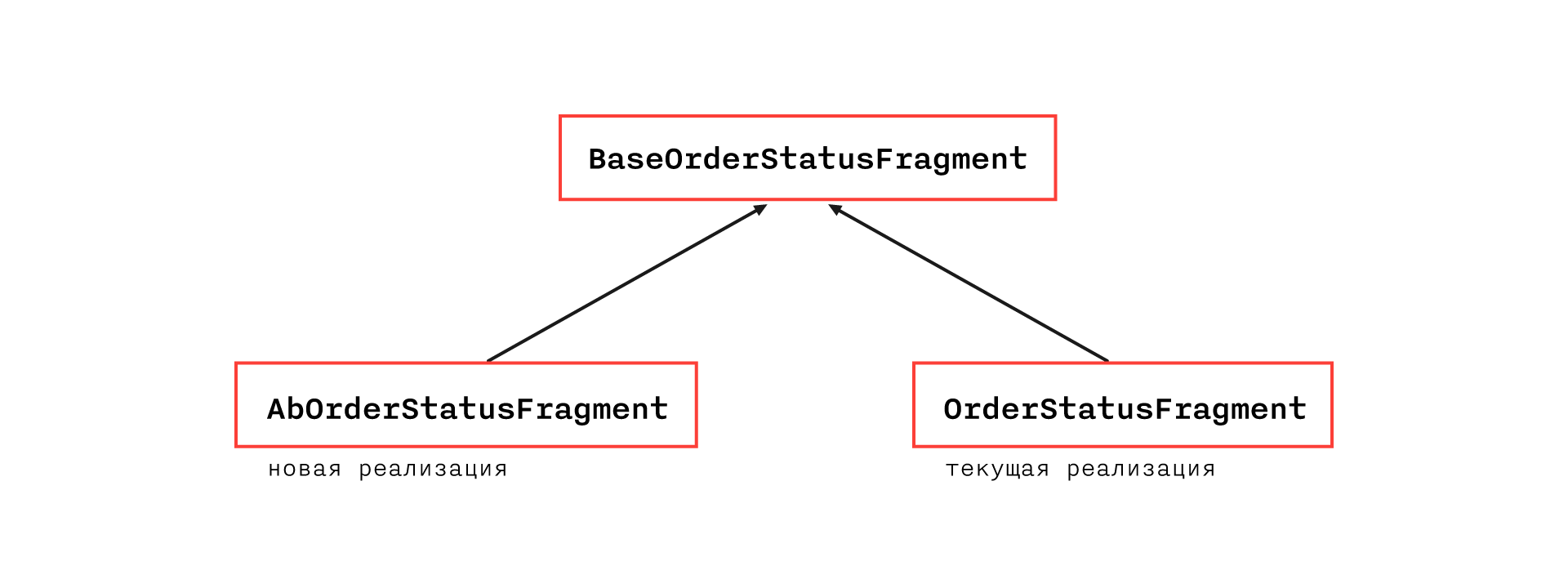

The following class hierarchy seems to be the most flexible and transparent:

BaseOrderStatusFragment contains all the functionality of the current implementation, except for methods that cannot be placed in an abstract class because of architectural restrictions; those go into OrderStatusFragment.

AbOrderStatusFragment overrides the methods whose implementation differs and adds any new ones it needs. As a result, the only changes to the current implementation are splitting one class into two and making some methods of the base class protected open instead of private.
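A stripped-down sketch of this hierarchy, with the Android `Fragment` and MVP plumbing replaced by plain classes so that it compiles on its own. The methods `title`, `extraContent` and `render` and the `createOrderStatusScreen` factory are hypothetical.

```kotlin
// Base class: everything the current screen does; methods that differ are protected open
abstract class BaseOrderStatusFragment {
    protected open fun title(): String = "Order status"

    protected open fun extraContent(): List<String> = emptyList()

    fun render(): List<String> = listOf(title()) + extraContent()
}

// Current implementation (control group A); architecture-specific methods would live here
class OrderStatusFragment : BaseOrderStatusFragment()

// New implementation (experimental group B): overrides only what differs.
// AB test: remove, or merge into OrderStatusFragment, when the test is over.
class AbOrderStatusFragment : BaseOrderStatusFragment() {
    override fun title(): String = "Your order is on its way"

    override fun extraContent(): List<String> = listOf("Rate this order")
}

// Hypothetical factory: the only place that knows about the experiment
fun createOrderStatusScreen(isAbEnabled: Boolean): BaseOrderStatusFragment =
    if (isAbEnabled) AbOrderStatusFragment() else OrderStatusFragment()
```

Confining the branch to a single factory keeps the cleanup after the test down to deleting one class and one `if`.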

Note: if the architecture and the specific case allow, you can skip the base class and inherit AbOrderStatusFragment directly from OrderStatusFragment.

With this organization, you will most likely have to deviate from the accepted code style. That is acceptable here, because the corresponding code will be removed or refactored when the A/B test ends (but, of course, you should leave comments wherever the code style was violated).

This organization lets us remove the new feature quickly and painlessly if it turns out to be ineffective, since all of its files are easy to find by prefix and its implementation does not affect the current functionality. If, however, the feature improves the key metric and we decide to keep it, we will still have to cut out the current functionality, and that work will also touch the new feature's code.

To get the config, create a separate repository and provide it at the application level so that it is accessible everywhere, since we do not know which parts of the application future A/B tests will affect. For the same reason, the config should be requested as early as possible, for example together with the basic data the application needs to work (such requests usually run while the splash screen is shown; this is a debatable topic, but what matters is that they happen somewhere).
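One possible shape for such a repository, assuming a simple key-value source: a single instance is created at the application level (for example, held by the `Application` class or a DI singleton), loaded during startup, and then read by any feature. `AbConfigRepository` and its `loader` parameter are hypothetical; in a real app the loader would wrap the Firebase fetch.

```kotlin
// Single application-wide instance, so that any screen can take part in future A/B tests.
// `loader` is a hypothetical stand-in for fetching and activating Firebase Remote Config.
class AbConfigRepository(
    private val loader: () -> Map<String, String>,
    private val defaults: Map<String, String>
) {
    @Volatile
    private var values: Map<String, String> = defaults

    // Call as early as possible, e.g. together with the other startup requests.
    // On failure the defaults (control group values) stay in effect.
    fun preload() {
        values = defaults + runCatching { loader() }.getOrDefault(emptyMap())
    }

    fun getBoolean(key: String): Boolean = values[key] == "true"
}
```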

And, of course, do not forget to pass the parameter value from the config into the parameters of your analytics events, so that the metrics can be compared between groups.
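For example, every analytics event can carry the active experiment parameters, so that the analytics system can group metrics by variant. The helper below only builds the parameter map; sending it (for instance with `FirebaseAnalytics.logEvent` and a `Bundle`) is left out, and both the `withAbParams` name and the `ab_` prefix are assumptions.

```kotlin
// Hypothetical helper: adds the active A/B parameters (prefixed with "ab_") to an event's parameters
fun withAbParams(eventParams: Map<String, Any>, abParams: Map<String, Boolean>): Map<String, Any> =
    eventParams + abParams.map { (key, value) -> "ab_$key" to value }
```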

Analyzing the results

There are quite a few articles that describe in detail how to analyze A/B test results, so we will only outline the essence here. The difference between the metrics of the control and experimental groups is a random variable, so we cannot conclude that a feature is effective just because the metric is better in the experimental group. Instead, we need to build a confidence interval for this random variable (the confidence level is best chosen by analysts) and run the experiment until the interval lies entirely above or below zero; only then can a statistically reliable conclusion be drawn.
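A minimal sketch for the common case where the metric is a conversion rate: the confidence interval for the difference of two proportions under the normal (Wald) approximation. Choosing this particular interval and the default `z = 1.96` (a 95% confidence level) are assumptions; analysts may prefer other methods. `GroupStats`, `diffConfidenceInterval` and `verdict` are hypothetical names.

```kotlin
import kotlin.math.sqrt

// Users in a group and how many of them converted
data class GroupStats(val users: Long, val conversions: Long) {
    val rate: Double get() = conversions.toDouble() / users
}

// Wald interval for rate(B) - rate(A); z = 1.96 corresponds to a 95% confidence level
fun diffConfidenceInterval(a: GroupStats, b: GroupStats, z: Double = 1.96): Pair<Double, Double> {
    val diff = b.rate - a.rate
    val standardError = sqrt(a.rate * (1 - a.rate) / a.users + b.rate * (1 - b.rate) / b.users)
    return Pair(diff - z * standardError, diff + z * standardError)
}

// A conclusion is possible only when the whole interval lies on one side of zero
fun verdict(ci: Pair<Double, Double>): String = when {
    ci.first > 0 -> "B is better"
    ci.second < 0 -> "A is better"
    else -> "inconclusive, keep collecting data"
}
```

With 10,000 users per group and conversion rates of 10% (A) and 12% (B), the interval is roughly (0.011, 0.029), entirely above zero, so the verdict is that B is better.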

Pitfalls

1. Errors fetching Remote Config

The comparison is made on new users, because only users with the same user experience, who have seen just one implementation option, should take part in the experiment. Recall that receiving the config is a network request that may fail, in which case the default value is applied, which traditionally equals the control group's value.

Consider the following case: Firebase has assigned a user to the experimental group. The user launches the application for the first time, the Remote Config request fails, and the user sees the old screen. On the next launch, the Remote Config request succeeds and the user sees the new screen. Such a user is not valid for the experiment, so you need to either find a way to filter such users out in the analytics system or show that their number is negligible.
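On the analytics side, one way to sift out such users, assuming every relevant event records the variant the user actually saw, is to keep only users whose events all report the same variant. `ExposureEvent` and `consistentUsers` are hypothetical; comparing the size of the result with the total number of users also shows whether the dropped share is negligible.

```kotlin
// One analytics event: which variant the user actually saw
data class ExposureEvent(val userId: String, val variant: String)

// Returns userId -> variant for users who saw exactly one variant; mixed users are dropped
fun consistentUsers(events: List<ExposureEvent>): Map<String, String> =
    events.groupBy { it.userId }
        .mapValues { (_, userEvents) -> userEvents.map { it.variant }.toSet() }
        .filterValues { it.size == 1 }
        .mapValues { (_, variants) -> variants.single() }
```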

In practice, such errors are rare, and the last option will most likely work for you. There is, however, a similar but much more pressing problem: the time it takes to get the config. As mentioned above, it is better to issue the Remote Config request at the beginning of the session, but if the request takes too long, the user will get tired of waiting and leave the application. So we need to solve a nontrivial task: choosing the timeout after which the Remote Config request is abandoned. If the timeout is too short, a large percentage of users may end up invalid for the test; if it is too long, we risk annoying users. We collected statistics on the time it takes to get the config:

Note: data for the last 30 days, 673,529 requests in total. In addition to network requests, the first row includes configs served from the cache, which is why it stands out from the overall shape of the distribution.

| Time, ms | Number of requests |
|---|---|
| 200 | 227485 |
| 400 | 51038 |
| 600 | 59249 |
| 800 | 84516 |
| 1000 | 63891 |
| 1200 | 39115 |
| 1400 | 24889 |
| 1600 | 16763 |
| 1800 | 12410 |
| 2000 | 9502 |
| 2200 | 7636 |
| 2400 | 6357 |
| 2600 | 5409 |
| 2800 | 4545 |
| 3000 | 3963 |
| 3200 | 2699 |
| 3400 | 3184 |
| 3600 | 2755 |
| 3800 | 2431 |
| 4000 | 2176 |
| 4200 | 1950 |
| 4400 | 1804 |
| 4600 | 1607 |
| 4800 | 1470 |
| 5000 | 1310 |
| > 5000 | 35375 |
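To weigh a candidate timeout against this distribution, it helps to compute the share of requests that would finish within it. The sketch below encodes the table's counts, assuming each row counts requests that took up to the given number of milliseconds (200 ms wide buckets); `ConfigFetchStats` is a hypothetical name.

```kotlin
// Request counts from the table; bucket i is assumed to cover times up to (i + 1) * 200 ms
object ConfigFetchStats {
    private const val BUCKET_MS = 200
    private const val OVER_5000_MS = 35375
    private val countsPerBucket = intArrayOf(
        227485, 51038, 59249, 84516, 63891, 39115, 24889, 16763, 12410, 9502,
        7636, 6357, 5409, 4545, 3963, 2699, 3184, 2755, 2431, 2176,
        1950, 1804, 1607, 1470, 1310
    )

    val total: Int = countsPerBucket.sum() + OVER_5000_MS

    /** Share of requests that completed within [timeoutMs] (a multiple of 200, at most 5000). */
    fun shareWithin(timeoutMs: Int): Double {
        require(timeoutMs % BUCKET_MS == 0 && timeoutMs in BUCKET_MS..BUCKET_MS * countsPerBucket.size)
        val completed = countsPerBucket.take(timeoutMs / BUCKET_MS).sum()
        return completed.toDouble() / total
    }
}
```

For example, `ConfigFetchStats.shareWithin(2000)` is about 0.87: in this sample roughly 87% of requests completed within 2 seconds, keeping in mind that the first row also contains cache hits.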

2. Rolling out Remote Config updates

Keep in mind that Firebase caches the Remote Config response. The default cache lifetime is 12 hours. This lifetime can be adjusted, but Firebase limits how often the config can be requested, and if you exceed the limit, Firebase will throttle the app and return an error for config requests. (Note: for testing, you can enable the setDeveloperModeEnabled setting, in which case the limit does not apply, but this is only possible for a limited number of devices.)
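Conceptually, a fetch with a given cache expiration only goes to the network when the cached config is older than that expiration; otherwise the cached values are reused. A minimal model of that rule follows; `shouldFetchFromNetwork` is a hypothetical function, not the SDK's code.

```kotlin
import java.util.concurrent.TimeUnit

val DEFAULT_CACHE_EXPIRATION_SECONDS: Long = TimeUnit.HOURS.toSeconds(12)

// Hypothetical model: go to the network, or reuse the cached config?
fun shouldFetchFromNetwork(lastFetchMillis: Long?, nowMillis: Long, cacheExpirationSeconds: Long): Boolean {
    if (lastFetchMillis == null) return true // nothing cached yet
    val ageSeconds = TimeUnit.MILLISECONDS.toSeconds(nowMillis - lastFetchMillis)
    // The cache is stale once it is at least as old as the expiration
    return ageSeconds >= cacheExpirationSeconds
}
```

With the default 12 hours, a config fetched 5 hours ago is reused, which is exactly why parameter changes reach users with a delay; an expiration of 0 always forces a network request.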

So, for example, if we want to finish the A/B test and roll out the new feature to 100% of users, we need to understand that the switch will take up to 12 hours, but this is not the main problem. Consider the following case: we ran an A/B test, finished it, and prepared a new release containing another A/B test with the corresponding config. We publish the new version of the application, but our users still have the config cached from the previous A/B test. If the cache has not expired yet, the config request will not fetch the new parameters. As a result, users assigned to the new experimental group will first receive the default config values and only later the experimental ones, which will spoil the data of the new experiment.

The solution to this problem is simple: force a config fetch after an application update by resetting the cache lifetime:

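```kotlin
import com.google.firebase.remoteconfig.FirebaseRemoteConfig

// After an app update, expire the cache (0 s) so the new parameters are fetched;
// otherwise reuse the cached config for 12 hours.
// isAppNewVersion and TWELVE_HOURS_IN_SECONDS are defined elsewhere in the app.
val cacheExpiration = if (isAppNewVersion) 0L else TWELVE_HOURS_IN_SECONDS
FirebaseRemoteConfig.getInstance().fetch(cacheExpiration)
```

Since updates are not released very often, we will not exceed the limits.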
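The original snippet does not show where `isAppNewVersion` comes from. One way to compute it, sketched under the assumption that the last seen version code is persisted somewhere (typically SharedPreferences on Android; a hypothetical `VersionStore` interface here):

```kotlin
// Hypothetical persistence abstraction; on Android this would typically be SharedPreferences
interface VersionStore {
    var lastSeenVersionCode: Long?
}

class InMemoryVersionStore : VersionStore {
    override var lastSeenVersionCode: Long? = null
}

val TWELVE_HOURS_IN_SECONDS = 12 * 60 * 60L

// Returns true on the first launch after an install or update, and remembers the current version
fun checkAndStoreNewVersion(store: VersionStore, currentVersionCode: Long): Boolean {
    val isNew = store.lastSeenVersionCode != currentVersionCode
    store.lastSeenVersionCode = currentVersionCode
    return isNew
}

fun cacheExpirationSeconds(isAppNewVersion: Boolean): Long =
    if (isAppNewVersion) 0L else TWELVE_HOURS_IN_SECONDS
```

A stricter variant would store the new version code only after the fetch succeeds, so that a failed first fetch is retried with a zero expiration on the next launch.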

Read more about these issues here.

Conclusions

Firebase provides a very convenient and simple tool for A/B testing that is well worth using, as long as you pay particular attention to the pitfalls described above. The proposed code organization minimizes the number of errors when making changes related to the A/B testing cycle.

Good luck with your A/B tests, and may your conversions grow by 100,500%!

Source: https://habr.com/ru/post/425501/
