Evolution of decomposition: from Linux servers to Kubernetes

What is it about microservices that attracts developers so much? There is no revolutionary technology behind them, and their advantages over a monolith are debatable. What they do offer is the ease with which modern development and deployment tools let you build systems that run on thousands of servers. Let's trace the path to the present day, when a single developer can build and deploy such a distributed system. Alexander Trekhlebov (holonavt), enterprise architect at Promsvyazbank, explains how virtualization technologies evolved and what role Linux containers, Docker, and Kubernetes played. He has been developing software for more than 15 years: he started with C++, then switched to Java, and most recently built a banking backend on the Spring Cloud platform.

Recall the first implementations of scripting (JavaScript, VBScript) used to render pages in the browser: they were single-threaded sequences of instructions. JavaScript itself executes on a single thread. If JS runs inside a web page and one of its instructions hangs or fails, the whole page freezes along with all of its code. Nothing can be done except close or reload the page, and sometimes restart the browser or even the entire operating system.
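To see why one stuck instruction freezes everything, here is a minimal sketch that uses Python's `asyncio` as a stand-in for a browser's single-threaded JavaScript event loop (an analogy, not how browsers are actually implemented). The names `ticker` and `frozen_handler` are hypothetical: one simulates periodic page updates, the other a script call that blocks without yielding control.

```python
import asyncio
import time

# Assumption: asyncio's event loop stands in for the browser's JS event loop.
# Every task below runs on ONE thread, so a blocking call stalls all of them.

events: list[str] = []

async def ticker(n: int) -> None:
    """Hypothetical UI updates that should happen regularly."""
    for i in range(n):
        events.append(f"tick {i}")
        await asyncio.sleep(0.01)  # yields control back to the event loop

async def frozen_handler() -> None:
    """Hypothetical script instruction that hangs without yielding."""
    events.append("handler start")
    time.sleep(0.1)  # blocking call: nothing else on this thread can run
    events.append("handler end")

async def main() -> None:
    await asyncio.gather(ticker(3), frozen_handler())

asyncio.run(main())
print(events)
```

When run, the second tick appears only after `handler end`: while the blocking call is in progress, the single thread cannot service anything else on the "page".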

Clearly, this was inconvenient, especially since multitasking and multithreading were already everywhere: in processors, operating systems, and applications (except perhaps the first mobile operating systems, which were single-tasking), while JS remained essentially single-threaded. What happened next? One framework after another appeared, each solving this problem in its own way. Facebook created React, and Google released Angular.

Multitasking takes the front end and back end by storm

How do you get multitasking out of a single-tasking system? You split the instructions into independent flows and, of course, supervise those flows. You probably remember how, in one version of Facebook, you suddenly became able to write a message and follow updates in your news feed at the same time. And if the feed crashed, messaging kept working. That was when the first UI built on React's modular interface appeared. With the framework, this kind of multitasking worked out of the box.
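The isolation described above (the feed failing while messaging keeps working) can be sketched by running each widget as an independent task and collecting failures per widget, so that one exception does not take down the whole page. This again uses Python's `asyncio` as an assumed stand-in rather than React itself; `news_feed`, `messenger`, and `render_page` are hypothetical names, and React's own mechanism for containing component failures is different (for example, error boundaries).

```python
import asyncio

async def news_feed() -> str:
    """Hypothetical feed widget whose backend fails while loading."""
    await asyncio.sleep(0)
    raise RuntimeError("feed backend unavailable")

async def messenger() -> str:
    """Hypothetical messaging widget that keeps working."""
    await asyncio.sleep(0)
    return "message sent"

async def render_page() -> dict[str, str]:
    widgets = {"feed": news_feed(), "messages": messenger()}
    # Each widget runs as its own task; return_exceptions=True turns a failure
    # into a result value instead of propagating it to the page as a whole.
    results = await asyncio.gather(*widgets.values(), return_exceptions=True)
    page: dict[str, str] = {}
    for name, result in zip(widgets, results):
        if isinstance(result, BaseException):
            page[name] = f"unavailable ({result})"  # failure stays in its widget
        else:
            page[name] = result
    return page

page = asyncio.run(render_page())
print(page)
```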

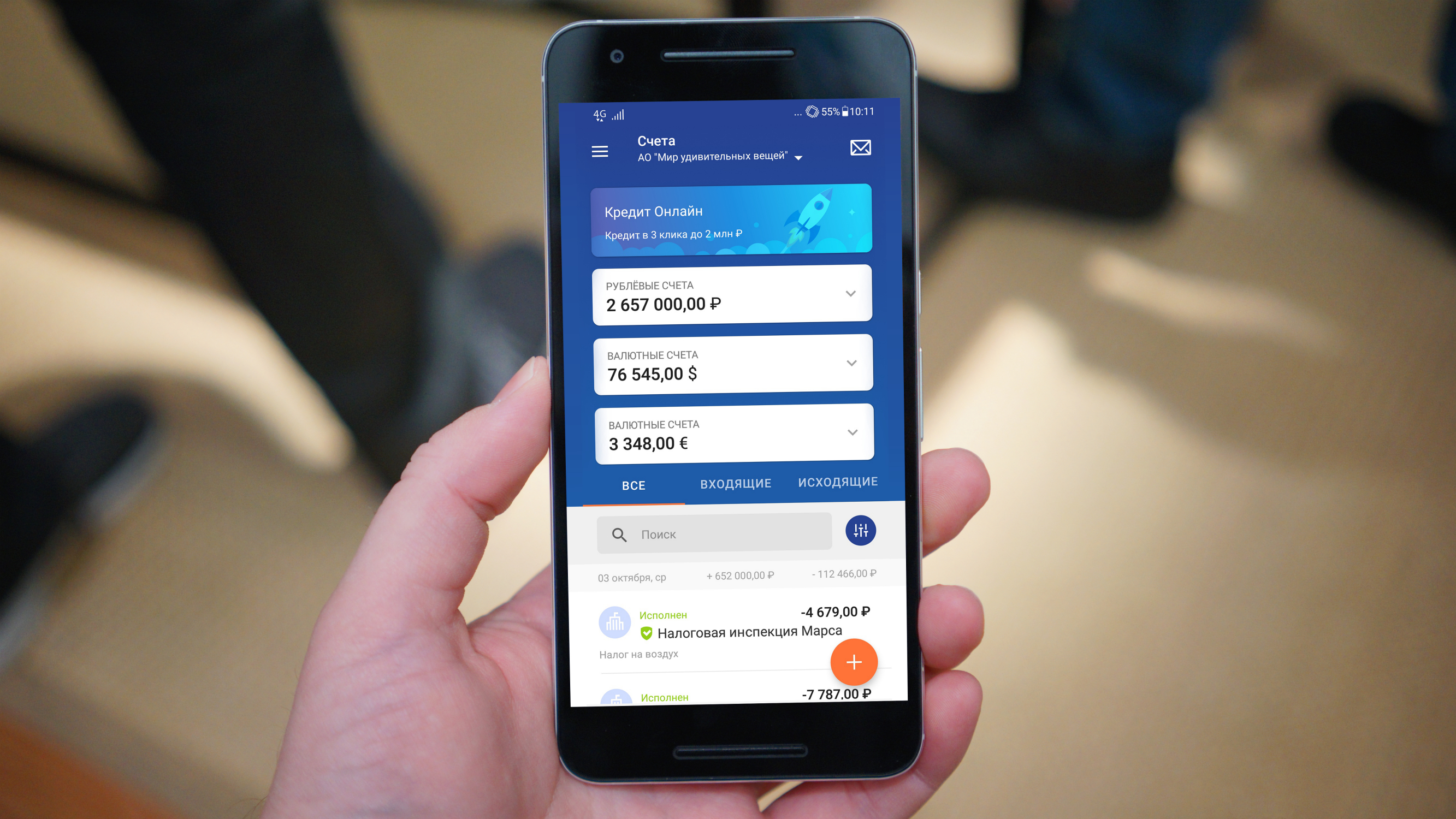

What does all this have to do with microservices? As the UIs of online banks grew more feature-rich, a freeze, let alone a crash, of the application became what one might call a shocking event for users. It is one thing when Facebook gets bogged down; it is quite another when you have just made a mortgage payment and the funds in your account are not displayed because the account balance form failed.

The idea was simple: bind the independent UI elements that Angular and React make possible to equally independent backend elements. Each independent backend element is a microservice that can be scaled, restarted after a failure, and so on.

Here it is important to build the user interface so that it adapts to whichever backend components are available. If something on the backend is not working, you either hide the corresponding functionality in the UI or show it in some default form: you can gray out the text or display an empty panel saying "Account balance information is unavailable. Come back tomorrow." In practice, this pairing of UI elements with microservices improves the overall reliability and scalability of a banking application.
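A minimal sketch of this degradation logic, assuming each panel is backed by one microservice reached through a plain callable (`fetch`) and that an outage surfaces as `ConnectionError` or `TimeoutError`. `Panel`, `render_panel`, and the fake service functions are hypothetical illustrations, not code from any bank's system.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Panel:
    title: str
    body: str
    greyed_out: bool = False

def render_panel(
    title: str,
    fetch: Callable[[], str],
    fallback: Optional[str] = None,
) -> Optional[Panel]:
    """Render one UI panel backed by one (hypothetical) microservice.

    If the backend call fails, show a greyed-out placeholder when a
    fallback text is given, otherwise hide the panel by returning None.
    """
    try:
        body = fetch()
    except (ConnectionError, TimeoutError):
        if fallback is None:
            return None  # option 1: do not show the functionality at all
        return Panel(title, fallback, greyed_out=True)  # option 2: default view
    return Panel(title, body)

def balance_service_down() -> str:
    raise ConnectionError("balance service unreachable")

def payments_service_ok() -> str:
    return "Mortgage payment: completed"

panels = [
    render_panel(
        "Balance",
        balance_service_down,
        fallback="Account balance information is unavailable. Come back tomorrow.",
    ),
    render_panel("Payments", payments_service_ok),
    render_panel("Offers", balance_service_down),  # no fallback: hidden
]
visible = [p for p in panels if p is not None]
for p in visible:
    print(p)
```

The payments panel renders normally even though the balance service is down, which is the point of binding each UI element to its own backend component.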

From Titanic to Docker

In my opinion, the main reason microservices have become so popular, despite their significant memory consumption and computing overhead, is decomposition. Beyond that, by and large, microservices have no great advantages over a monolith. By decomposition I mean dividing functionality into independent blocks that are launched and deployed separately. This means that while the remaining blocks keep running, you can update one of them, often without stopping it (blue-green deployment), or bring up an additional instance.
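Blue-green deployment can be sketched as a router that keeps two slots for one service: traffic goes to the live slot while the new version is installed and checked in the idle one, and then traffic is switched in one step. This is a toy in-process model under stated assumptions (`BlueGreenRouter`, `smoke_test`, and the handler functions are hypothetical); in practice the switch usually happens at a load balancer or service router.

```python
from dataclasses import dataclass, field
from typing import Callable

Handler = Callable[[str], str]

@dataclass
class BlueGreenRouter:
    """Toy blue-green switch for a single microservice (illustrative only)."""
    slots: dict[str, Handler] = field(default_factory=dict)
    live: str = "blue"

    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def handle(self, request: str) -> str:
        # All traffic goes to the live slot; other services are unaffected.
        return self.slots[self.live](request)

    def deploy(self, new_version: Handler, health_check: Callable[[Handler], bool]) -> bool:
        target = self.idle()
        self.slots[target] = new_version  # installed next to the running version
        if not health_check(new_version):
            return False  # keep serving from the current live slot
        self.live = target  # switch traffic; the old version stays for rollback
        return True

def v1(req: str) -> str:
    return f"v1:{req}"

def v2(req: str) -> str:
    return f"v2:{req}"

def broken(req: str) -> str:
    raise RuntimeError("boom")

def smoke_test(handler: Handler) -> bool:
    try:
        handler("ping")
        return True
    except Exception:
        return False

router = BlueGreenRouter(slots={"blue": v1})
print(router.handle("balance"))  # v1:balance
deployed_broken = router.deploy(broken, smoke_test)  # fails the check, v1 stays live
deployed_v2 = router.deploy(v2, smoke_test)
print(router.handle("balance"))  # v2:balance
```

Keeping the previous version in the idle slot means a rollback is just switching `live` back.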

None of these technologies appeared yesterday or the day before. Solutions for distributed computing were already being developed in the mainframe era, because a shortage of computing resources arose almost as soon as those resources appeared.

People began thinking about how to distribute such work rationally, for example graphics computations on Silicon Graphics workstations. All of this was very expensive, and such solutions were available only to large organizations, let alone individual developers. The workstations themselves and their server software cost a great deal, so corresponding capabilities were developed for the Linux kernel. For example, the computer graphics for scenes in the film "Titanic", released back in 1997, were rendered on Alpha-based servers running Linux. Most of the solutions needed to run distributed systems had already been developed and proven by then. But it was still hard for a single specialist to use all these technologies: building, delivering, and supporting such a system required a lot of effort.

At first there were just physical servers that had to be managed somehow. Then the era of virtualization began and virtual machines appeared. Work went smoothly, but starting and stopping a virtual machine was still a fairly resource-intensive operation, and developers wanted it to be as fast as launching a process inside an operating system. A big step toward bringing this technology "to the masses" came with Linux containers.

A Linux container is almost an ordinary system process, but it has its own network interface and many other things that make it look almost like a virtual machine. Why almost? Because a virtual machine runs in a strongly isolated environment with its own kernel. A Linux container uses the host operating system: each container has its own Linux userland, but its system calls are handled by the host OS kernel.

This has its advantages: when you create an LXC container, you do not need to boot a new kernel. However, working with LXC containers in their raw form was laborious and inconvenient. At some point Docker appeared. It took over the deployment and management of Linux containers and put a more user-friendly interface on top of them.

The emergence of Docker gave the impetus for the widespread adoption of microservice architecture. Yes, the underlying technologies had been invented long before, but convenient ways to use them appeared only at this point. Now, with Docker, a developer can literally spin up a couple of containers with a couple of commands, organize a distributed computing system, and then update and scale it dynamically.

Thus, decomposition allowed a wide range of developers to turn a monolith into a set of microservices running in containers. However, new difficulties arose. When there are several dozen containers spread across several servers, they need to be managed, maintained, and orchestrated. This is where solutions such as Docker Swarm and Kubernetes came in, giving the individual developer a powerful new tool.
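The core idea of orchestration can be sketched as a reconciliation loop: declare how many replicas each service should have, observe what is actually running, and start or stop containers until the two match. Kubernetes controllers work on this desired-state principle, but the code below is only a toy model; `Container`, `start`, and `reconcile` are hypothetical and do not correspond to the Kubernetes or Docker Swarm APIs.

```python
import itertools
from dataclasses import dataclass

@dataclass
class Container:
    id: str
    service: str
    healthy: bool = True

_ids = itertools.count(1)

def start(service: str) -> Container:
    """Hypothetical stand-in for 'start a container on some server'."""
    return Container(id=f"{service}-{next(_ids)}", service=service)

def reconcile(desired: dict[str, int], running: list[Container]) -> list[Container]:
    """One pass of a toy control loop: make observed state match desired state."""
    # 1. Forget containers that crashed (a real orchestrator would also clean them up).
    alive = [c for c in running if c.healthy]
    result: list[Container] = []
    for service, replicas in desired.items():
        current = [c for c in alive if c.service == service]
        # 2. Scale down: keep only as many replicas as requested.
        current = current[:replicas]
        # 3. Scale up or heal: start replacements for the missing replicas.
        current += [start(service) for _ in range(replicas - len(current))]
        result.extend(current)
    # Containers of services absent from `desired` are not kept, i.e. stopped.
    return result

running = [
    Container("accounts-a", "accounts"),
    Container("accounts-b", "accounts", healthy=False),  # crashed
    Container("payments-a", "payments"),
    Container("payments-b", "payments"),
    Container("payments-c", "payments"),
]
desired = {"accounts": 2, "payments": 1, "cards": 1}
running = reconcile(desired, running)
counts = {s: sum(c.service == s for c in running) for s in desired}
print(counts)  # {'accounts': 2, 'payments': 1, 'cards': 1}
```

A real orchestrator runs such a pass continuously, so a crashed container is replaced without anyone issuing a command.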

Microservices in banks

What is the situation with microservices in banking? How many of them are needed, for example, to support online banking? There is a good example: Monzo, a fully digital bank in the UK. It has no back office, no branches, and no website; everything essentially lives in a mobile app. It started with 40 microservices, then grew to 300, and now has even more.

At Promsvyazbank, we have a system with up to 40 deployed microservices.

At the same time, development frameworks keep emerging that let you build the main components of a microservice system, easy to scale and update, with just a few lines of code. These capabilities are in high demand for building machine learning systems and analyzing large volumes of data in real time (Cloud Streaming, etc.).

Alexander Trekhlebov will share his experience of developing with a microservice architecture in the talk "Microservices: fault tolerance based on end-to-end modularity" at the 404 Internet Figures Festival, held on October 6-7, 2018 in Samara.


Source: https://habr.com/ru/post/425301/
