How we kept the MegaFon.TV CDN from falling over during the 2018 World Cup

In 2016 we described how MegaFon.TV coped with everyone who wanted to watch the new season of Game of Thrones. The service kept developing after that, and by mid-2017 we were dealing with loads several times higher. In this post we describe how such rapid growth pushed us to rethink how the CDN is organized, and how the new approach was tested by the World Cup.

Briefly about MegaFon.TV

MegaFon.TV is an OTT service for watching all kinds of video content: movies, TV series, TV channels, and recorded programmes. MegaFon.TV content is available on almost any device: iOS and Android phones and tablets, smart TVs from LG, Samsung, Philips and Panasonic of various model years with a whole zoo of operating systems, Apple TV and Android TV, desktop browsers on Windows, macOS and Linux, mobile browsers on iOS and Android, and even exotic devices such as set-top boxes and children's Android projectors. There are practically no restrictions on devices; the only requirement is an Internet connection with a bandwidth of 700 Kbps. How we organized support for so many devices will be covered in a separate article.

Most users of the service are MegaFon subscribers, which is explained by the attractive (and most often free) offers included in the subscriber's tariff plan, although we also see significant growth in users from other operators. In line with this distribution, 80% of MegaFon.TV traffic is consumed inside the MegaFon network.

Architecturally, content has been distributed through a CDN ever since the service launched. We have a separate post about how this CDN works. In it we described how the CDN handled the peak traffic that hit the service at the end of 2016, during the release of the new season of Game of Thrones. In this post we talk about the further development of MegaFon.TV and the new adventures that befell the service along with the 2018 World Cup.

Service growth. And problems

Compared with the events of the previous post, by the end of 2017 the number of MegaFon.TV users had grown several times over, and the catalogue of films and TV series had grown by an order of magnitude. New functionality was launched and new subscription packages appeared. Traffic peaks on the scale of the Game of Thrones days were now an everyday occurrence, and the share of films and TV series in the overall stream grew steadily.

At the same time, problems began with traffic delivery. Our monitoring, which is set up to download chunks for different types of traffic in different formats, increasingly reported chunk download errors caused by timeouts. In MegaFon.TV a chunk is 8 seconds long. If a chunk does not finish downloading within 8 seconds, errors may occur.
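The timeout check that such monitoring performs can be sketched roughly as follows. This is a minimal illustration in Python, not our actual monitoring: the `classify_download` and `timeout_ratio` helpers and their labels are hypothetical, and only the 8-second chunk length comes from the service.

```python
CHUNK_DURATION_S = 8.0  # chunk length in MegaFon.TV, taken from the text


def classify_download(elapsed_s: float,
                      chunk_duration_s: float = CHUNK_DURATION_S) -> str:
    """Classify one chunk download by how long it took (hypothetical labels)."""
    if elapsed_s < 0:
        raise ValueError("elapsed time cannot be negative")
    # A chunk that takes longer to download than it takes to play
    # cannot keep the player's buffer filled.
    return "ok" if elapsed_s <= chunk_duration_s else "timeout"


def timeout_ratio(samples: list[float]) -> float:
    """Share of downloads in a sample that exceeded the chunk duration."""
    if not samples:
        return 0.0
    timeouts = sum(1 for s in samples if classify_download(s) == "timeout")
    return timeouts / len(samples)
```

A rising `timeout_ratio` at peak hours is the kind of signal described here.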

As expected, the errors peaked during the hours of maximum load. How did this affect users? At a minimum, they could see a drop in video quality. That is not always visible to the naked eye, thanks to the fairly large number of multi-bitrate profiles. In the worst case, the video froze.

The search for the cause began. Almost immediately it became clear that the delivery errors were occurring on the CDN's EDGE servers. Here we need a small digression to explain how the servers handle live and VOD traffic, because the two schemes differ slightly.

A user comes to an EDGE server for content (a playlist or a chunk) and, if the content is in the cache, gets it from there. Otherwise the EDGE server fetches the content from Origin, loading the backbone link. Along with the playlist or chunk, a Cache-Control: max-age header is sent that tells the EDGE server how long to cache that unit of content.

The difference between live and VOD lies precisely in how long chunks are cached. Live chunks get a short caching time, usually from 30 seconds to several minutes, because live content stays relevant only briefly. This cache is kept in RAM, since chunks are constantly being served and the cache constantly rewritten. VOD chunks get a longer time, from several hours to weeks or even months, depending on the size of the content library and how views are distributed across it. Playlists are usually cached for no more than two seconds, or not cached at all.

Note that all of this applies only to the so-called PULL mode of the CDN, which is how our servers worked. Using PUSH mode would not have been entirely justified in our case.
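The caching rules above can be summarized in a short sketch. It is an illustration only: the `cache_control` function and the `"playlist"`/`"chunk"` labels are hypothetical, and the concrete TTL values are assumptions picked from the ranges mentioned in the text, not our production settings.

```python
# Illustrative TTLs picked from the ranges mentioned in the text;
# the exact values are assumptions.
LIVE_CHUNK_TTL_S = 60             # "30 seconds to several minutes"
VOD_CHUNK_TTL_S = 7 * 24 * 3600   # "several hours to weeks and even months"
PLAYLIST_TTL_S = 2                # "no more than two seconds"


def cache_control(kind: str, is_live: bool, cache_playlists: bool = True) -> str:
    """Return a Cache-Control header value for one content unit.

    kind is "playlist" or "chunk" (hypothetical labels).
    """
    if kind == "playlist":
        # Playlists change constantly, so they are cached very briefly
        # or not at all.
        return f"max-age={PLAYLIST_TTL_S}" if cache_playlists else "no-store"
    if kind == "chunk":
        ttl = LIVE_CHUNK_TTL_S if is_live else VOD_CHUNK_TTL_S
        return f"max-age={ttl}"
    raise ValueError(f"unknown content kind: {kind!r}")
```

The gap between the two chunk TTLs is what makes the workloads so different: short-lived live chunks fit in RAM, while long-lived VOD chunks accumulate on disk.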

But back to the search for the cause. As already noted, every server was serving both types of content at once, while the servers themselves had different configurations. As a result, some machines were overloaded on IOPS: chunks could not be written and read fast enough because of the low performance, number, and capacity of the disks and the large content library. Meanwhile the more powerful machines, which received more traffic, started failing on CPU usage. Processor resources went on handling SSL traffic and chunks delivered over HTTPS, while IOPS on the disks barely reached 35%.

We needed a scheme that would make optimal use of the available capacity at minimal cost. On top of that, the World Cup was due to start in six months, and preliminary estimates had live traffic peaks growing sixfold...

New approach to CDN

After analyzing the problem, we decided to split VOD and live traffic across different PADs made up of servers with different configurations, and to build a function for distributing traffic and balancing it across the different server groups. In total there were three such groups:

- Servers with many high-performance disks, best suited to caching VOD content. Ideally we would have used read-intensive (RI) SSDs of maximum capacity, but none were available, and buying the required number would have taken too large a budget. So we decided to use the best of what we had. Each server held eight 1 TB SAS 10k disks in RAID5. These servers made up VOD_PAD.

- Servers with a large amount of RAM for caching every possible delivery format of live chunks, with processors capable of handling SSL traffic and with "fat" network interfaces. We used the following configuration: two 8-core processors, 192 GB of RAM, and four 10 Gbit/s interfaces. These servers made up EDGE_PAD.

- The remaining servers, which cannot handle VOD traffic but are suitable for small volumes of live content. They can be used as a reserve. These servers made up RESERVE_PAD.

The distribution was as follows:

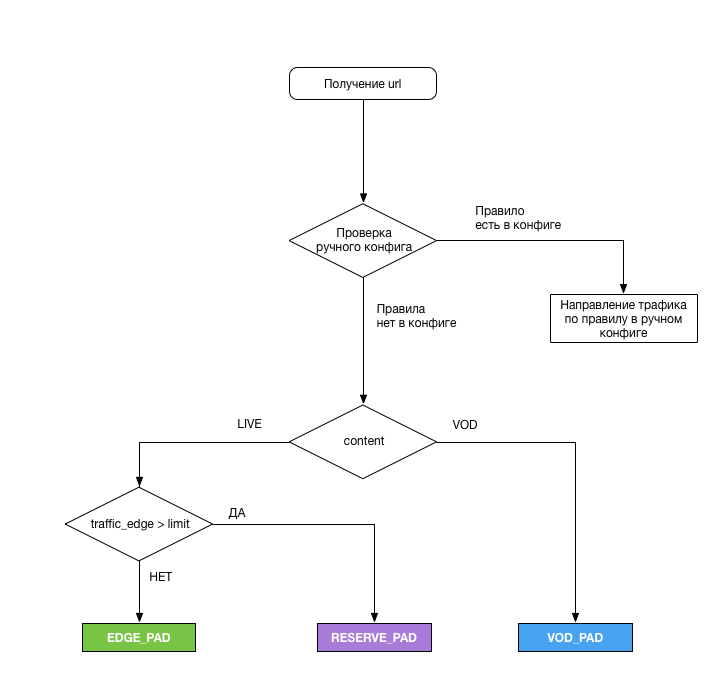

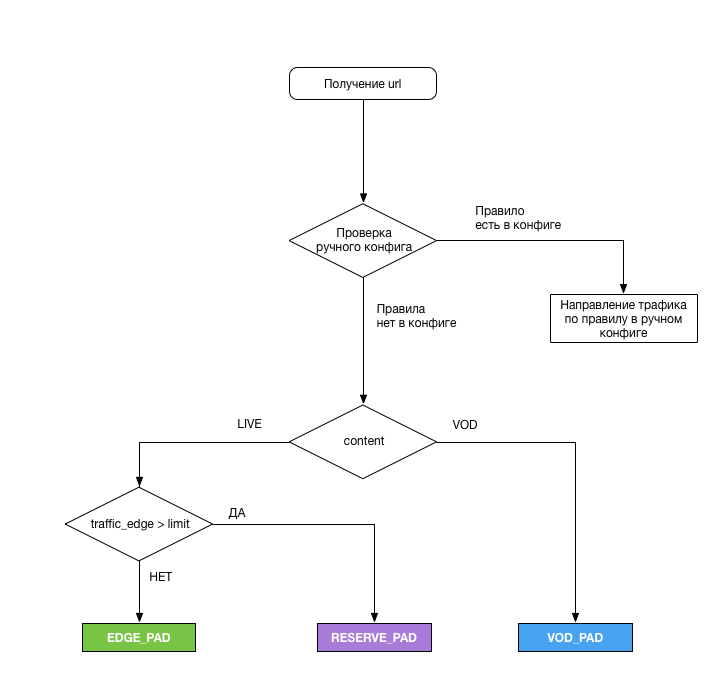

A dedicated logic module was responsible for choosing the PAD from which a user would receive content. Its tasks were:

- Analyze the URL, apply the scheme above to each request stream, and return the appropriate PAD.

- Poll the load on the EDGE_PAD interfaces every 5 minutes (and this was our mistake) and, when the limit is reached, switch the excess traffic to RESERVE_PAD. To collect the load we wrote a small Perl script that returned the following data:

  - timestamp: the date and time when the load data was updated (in RFC 3339 format);

  - total_bandwidth: current interface load (total), Kbps;

  - rx_bandwidth: current interface load (incoming traffic), Kbps;

  - tx_bandwidth: current interface load (outgoing traffic), Kbps.

- Direct traffic manually to any PAD or Origin server in unforeseen situations or when maintenance is needed on one of the PADs. The config was stored on the server in YAML format and could route either all traffic, or only traffic matching the following criteria, to the required PAD:

  - Content type

  - Traffic encryption

  - Paid or free traffic

  - Device type

  - Playlist type

  - Region
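The three tasks above can be sketched roughly as follows. This is a simplified illustration, not our module: the `Request` and `Override` types, the `/live/` URL convention, and the `choose_pad` function are all hypothetical, and only three of the six override criteria are modelled.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Request:
    url: str
    region: str
    device_type: str


@dataclass(frozen=True)
class Override:
    """Manual rule: send matching traffic to `target`. None matches anything."""
    target: str
    content_type: Optional[str] = None
    region: Optional[str] = None
    device_type: Optional[str] = None


def content_type(url: str) -> str:
    # Assumed URL layout: live streams sit under /live/, the rest is VOD.
    return "live" if "/live/" in url else "vod"


def choose_pad(req: Request, edge_load_kbps: float, edge_limit_kbps: float,
               overrides: Sequence[Override] = ()) -> str:
    ctype = content_type(req.url)
    # 1. Manual overrides win; the first matching rule is used.
    for rule in overrides:
        if (rule.content_type in (None, ctype)
                and rule.region in (None, req.region)
                and rule.device_type in (None, req.device_type)):
            return rule.target
    # 2. VOD always goes to the disk-heavy group.
    if ctype == "vod":
        return "VOD_PAD"
    # 3. Live goes to EDGE_PAD until the measured load reaches the limit.
    if edge_load_kbps >= edge_limit_kbps:
        return "RESERVE_PAD"
    return "EDGE_PAD"
```

An override with no criteria set matches every request, which corresponds to routing all traffic to one PAD or to Origin.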

The Origin servers were equipped with SSDs. Unfortunately, the HIT_RATE for VOD chunks when traffic was switched to Origin left much to be desired (about 30%), but the servers did their job, so we saw no problems with the packagers at peak hours.

Since there were few servers with the EDGE_PAD configuration, we decided to place them in the regions with the largest share of traffic: Moscow and the Volga region. Using GeoDNS, traffic from the regions of the Volga and Ural federal districts was directed to the Volga node. The Moscow node served everything else. We did not much like the idea of delivering traffic to Siberia and the Far East from Moscow, but together these regions account for about 1/20 of all traffic, and MegaFon's links had enough capacity for such volumes.
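The region-to-node rule can be written down in a few lines. This is only a sketch: it assumes the user's federal district has already been resolved (GeoDNS itself decides by the resolver's address), and the district labels, node names, and `edge_node_for` function are hypothetical.

```python
# Federal districts served by the Volga node, as described in the text.
VOLGA_NODE_DISTRICTS = frozenset({"Volga", "Ural"})


def edge_node_for(federal_district: str) -> str:
    """Pick the EDGE_PAD node for a user's federal district."""
    if federal_district in VOLGA_NODE_DISTRICTS:
        return "volga"
    # Everything else, including Siberia and the Far East, goes to Moscow.
    return "moscow"
```

Extending the scheme to more districts would mean adding more node sets rather than changing the lookup.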

After developing the plan, the following work was carried out:

- Developing the CDN switching functionality took two weeks.

- Installing and configuring the EDGE_PAD servers, and expanding the links for them, took a month.

- Splitting the existing server group into two parts took two weeks, plus another two weeks to roll the settings out to all network and server equipment.

- Finally, a week was spent on testing (unfortunately not under load, which came back to bite us later).

Some of the work could be done in parallel, so in the end everything took six weeks.

First results and future plans

After tuning, the overall capacity of the system was 250 Gbit/s. Moving VOD traffic to dedicated servers proved its worth as soon as it was rolled out to production. Since the start of the World Cup there have been no problems with VOD traffic. Several times, for various reasons, we had to switch VOD traffic to Origin, and on the whole the Origin servers coped as well. This scheme is perhaps not very efficient, because the cache is used so little and we force the SSDs to rewrite content constantly. But the scheme works.

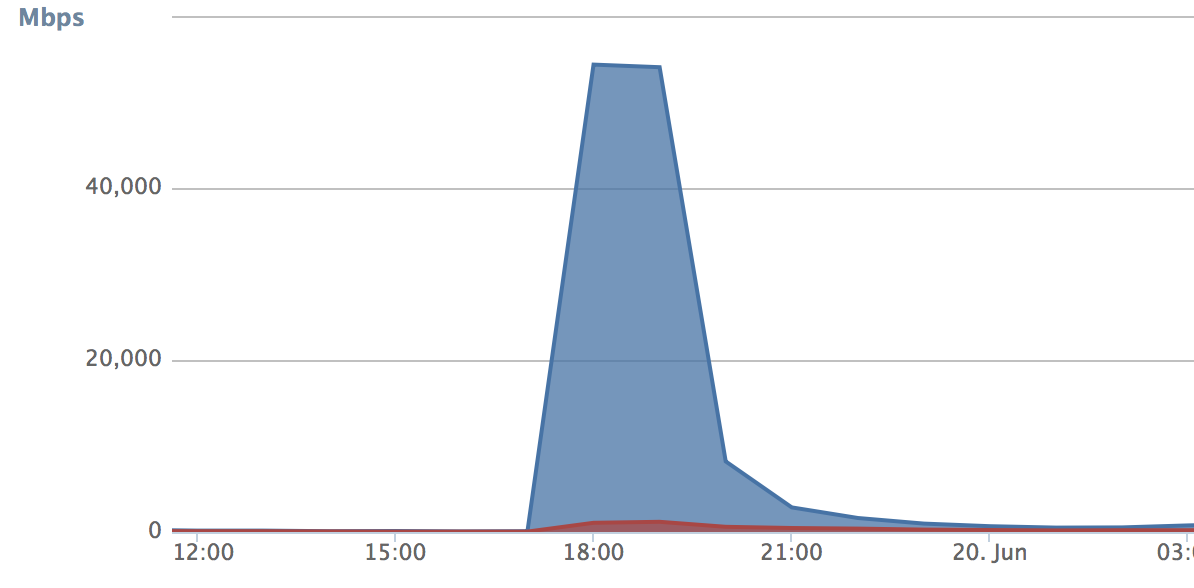

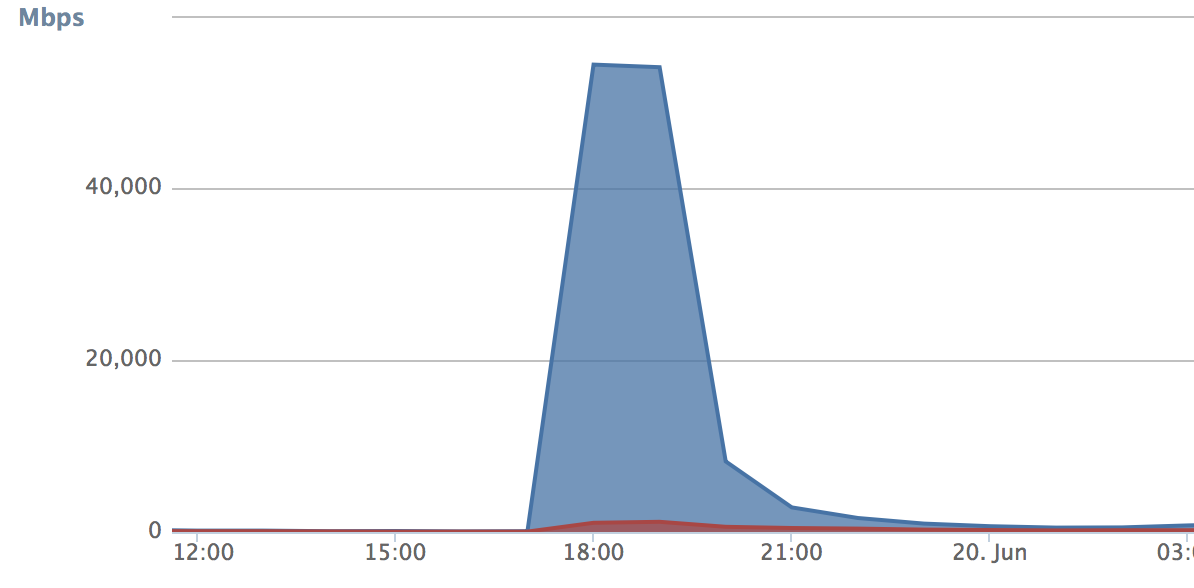

As for live traffic, volumes large enough to test our solution arrived with the start of the World Cup. Problems began the second time that traffic switching was triggered by reaching the limit, during the Russia-Egypt match. When switching kicked in, all the traffic poured onto the backup PAD. In those five minutes the number of requests (the slope of the growth curve) was so great that the backup CDN was completely saturated and started returning errors. Meanwhile the main PAD was freed up and sat partly idle.

We drew three conclusions from this:

- Five minutes is far too long. We reduced the load polling period to 30 seconds. As a result, traffic on the backup PAD stopped growing in sudden jumps.

- Users should be moved between PADs as little as possible each time switching is triggered. This makes switching smoother still. We decided to assign a cookie to each user (or rather, each device), which tells the distribution module whether to leave the user on the current PAD or switch. The exact technology is up to whoever implements it. As a result, traffic on the main PAD no longer drops.

- The switching threshold was set too low, so traffic on the backup PAD grew like an avalanche. In our case this was overcaution: we were not completely sure we had tuned the servers correctly (the idea for the tuning, by the way, was taken from Habr). The threshold was raised to the physical capacity of the network interfaces.
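The cookie idea can be sketched as a sticky assignment. This is one possible reading, not our implementation: the `assign_pad` function and the cookie contents are hypothetical, and how devices return from the reserve (for example, through cookie expiry) is deliberately left out.

```python
from typing import Optional

KNOWN_PADS = ("EDGE_PAD", "RESERVE_PAD")


def assign_pad(cookie_pad: Optional[str],
               edge_load_kbps: float,
               edge_limit_kbps: float) -> str:
    """Decide which PAD serves a device, honouring its existing assignment.

    cookie_pad is the PAD stored in the device's cookie, or None for a
    device that has not been assigned yet.
    """
    if cookie_pad in KNOWN_PADS:
        # Sticky: a device already being served keeps its PAD, so the main
        # PAD does not drain and the reserve is not flooded all at once.
        return cookie_pad
    # Only devices without an assignment are steered by the current load.
    over_limit = edge_load_kbps >= edge_limit_kbps
    return "RESERVE_PAD" if over_limit else "EDGE_PAD"
```

With this rule only new sessions spill over when the limit is hit, so the load on the reserve grows gradually instead of arriving in one step.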

The improvements took three days, and by the Russia-Croatia match we were already checking whether our optimization worked. On the whole we are pleased with the result. At the peak, the system handled 215 Gbit/s of mixed traffic. That was not the theoretical limit of the system; we still had a solid margin. If necessary, we can now connect any external CDN and "dump" the excess traffic there. This model is a good fit when you do not want to pay substantial money every month for someone else's CDN.

We have plans for developing the CDN further. First of all, I would like to extend the EDGE_PAD scheme to all federal districts, which will reduce the load on the links. We are also testing a redundancy scheme for VOD_PAD, and some of the results already look pretty impressive.

Overall, everything done over the past year makes me think that, for a service that distributes video content, having its own CDN is a must. Not so much because it saves a lot of money, but because the CDN becomes part of the service itself and directly affects its quality and functionality. In such circumstances, handing it over to someone else is unwise, to say the least.

Source: https://habr.com/ru/post/425229/
