Docker + Laravel = ❤

In this article, I will share my experience of wrapping a Laravel application in Docker containers so that frontend and backend developers can work with it locally and launching it in production is as simple as possible. In addition, CI automatically runs static code analyzers and phpunit tests and builds the images.

"So what is the difficulty?" you may ask, and you will be partly right. Quite a lot of discussion has been devoted to this topic in the Russian-speaking and English-speaking communities, and I would roughly divide almost all the threads I studied into the following categories:

- "I use docker for local development. I installed laradock and have no troubles." Cool, but what about automation and launching in production?

- "I build one container (a monolith) based on `fedora:latest` (~230 MB), put all the services in it (nginx, database, cache, etc.) and run everything inside under a supervisor." Great too, and easy to run, but what about the ideology of "one container - one process"? How are things with balancing and process control? What about the size of the image?

- "Here are some pieces of configs, seasoned with excerpts from sh scripts, add magical env values, and use it." Thank you, but how about at least one living example that I could fork and fully play with?

Everything you read below is subjective experience that does not claim to be the ultimate truth. If you have additions or spot inaccuracies, welcome to the comments.

For the impatient - a link to the repository, which you can clone to run the Laravel application with a single command. It is also not difficult to run it on Rancher, properly linking the containers, or to use the production version of `docker-compose.yml` as a starting point.

Part theoretical

Which tools will we use in our work, and where will we place the emphasis? First of all, we will need the following installed on the host:

- `docker` - at the time of writing, I used version `18.06.1-ce`
- `docker-compose` - it does an excellent job of linking containers and storing the necessary environment values; version `1.22.0`
- `make` - you might be surprised, but it fits perfectly into the context of working with docker

You can install `docker` on Debian-like systems with the command `curl -fsSL get.docker.com | sudo sh`, but `docker-compose` is better installed with `pip`, since the most recent versions live in its repositories (`apt` lags far behind, as a rule).

This completes the list of dependencies. What you use to work with the source code - phpstorm, netbeans or vim - is up to you.

Next comes an improvised Q&A on (I am not afraid of the word) designing images:

Q: Base image - which one is better to choose?

A: The "thinnest" one, without excess. On the basis of `alpine` (~5 MB) you can build anything your heart desires, but you will most likely have to tinker with building services from source. Alternatively, `jessie-slim` (~30 MB). Or use the one that is most often used on your projects.

Q: Why is image size important?

A: Less traffic, a lower probability of an error when downloading (less data - less probability), less disk space consumed. The rule "Heavy is good, heavy is reliable" (© "Snatch") does not work here.

Q: But my friend `%friend_name%` says that a "monolithic" image with all dependencies is the best way.

A: Let's just count. The application has 3 dependencies - PG, Redis, PHP. And you want to test how it behaves with combinations of different versions of these dependencies. PG - versions 9.6 and 10, Redis - 3.2 and 4.0, PHP - 7.0 and 7.2. If each dependency is a separate image, you need 6 of them, and you don't even have to build them - everything is ready and waiting on `hub.docker.com`. If, for ideological reasons, all the dependencies are "packed" into one container, you will have to rebuild it by hand ... 8 times? Now add the condition that you also want to play with `opcache`. With decomposition, it is simply a change of the tags of the images used. A monolith is easier to run and maintain, but it is a road to nowhere.

Q: Why is a supervisor in a container evil?

A: Because of `PID 1`. If you do not want an abundance of problems with zombie processes and want to be able to flexibly "add capacity" where it is needed, try to run one process per container. The exceptions are `nginx` with its workers and `php-fpm`, which tend to spawn processes, but you have to put up with that (moreover, they respond well to `SIGTERM`, quite correctly "killing" their workers). By running all the daemons under a supervisor you will probably doom yourself to problems. In some cases it is difficult to manage without one, but these are exceptions.
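The arithmetic from the "monolith" question above can be sketched in a few lines of shell. This is only an illustration: the version lists are copied from the answer, while the function names `count_separate` and `count_monolith` are made up for this example.

```shell
#!/bin/sh
# Versions taken from the example in the text.
PG_VERSIONS="9.6 10"
REDIS_VERSIONS="3.2 4.0"
PHP_VERSIONS="7.0 7.2"

# Separate images: one ready-made image per dependency version.
count_separate() {
  # Word splitting is intentional: every version becomes one argument.
  set -- $PG_VERSIONS $REDIS_VERSIONS $PHP_VERSIONS
  echo "$#"
}

# Monolith: one hand-built image per combination of versions.
count_monolith() {
  n=0
  for pg in $PG_VERSIONS; do
    for redis in $REDIS_VERSIONS; do
      for php in $PHP_VERSIONS; do
        n=$((n + 1))
      done
    done
  done
  echo "$n"
}

echo "separate images to pull: $(count_separate)"   # 6
echo "monolith images to build: $(count_monolith)"  # 8
```

Adding one more variable, such as `opcache` on or off, doubles the monolith count, while the number of separate images grows only with the number of versions added.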

Having decided on the main approaches, let's move on to our application. It should be able to:

- `web|api` - serve static files with `nginx` and generate dynamic content with `fpm`
- `scheduler` - run the native task scheduler
- `queue` - process jobs from queues

This is the basic set, which can be expanded if necessary. Now let's move on to the images that we have to build for our application to "take off" (their code names are given in brackets):

- `PHP + PHP-FPM` (app) - the environment in which our code will run. Since the PHP and FPM versions will be the same for us, we build them in one image. This makes the configs easier to manage, and the set of packages will be identical. Of course, FPM and the application processes will run in different containers.
- `nginx` (nginx) - so as not to bother with delivering configs and optional modules for `nginx`, we build a separate image with it. Since it is a separate service, it has its own Dockerfile and its own context.
- Application sources (sources) - the sources are delivered in a separate image, whose `volume` is mounted into the container with the app. Base image - `alpine`, inside - only the source code with installed dependencies and assets built with webpack (build artifacts).

In development, the rest of the services are launched in containers pulled from hub.docker.com; in production, they run on separate servers, clustered together. All that remains for us is to tell the application (via the environment) at which addresses/ports and with which credentials to reach them. Even cooler is to use service discovery for this purpose, but that is a topic for another time.

Having settled the theoretical part, I suggest moving on to the practical one.

Part practical

I propose to organize the files in the repository as follows:

    .
    ├── docker
    │   ├── app
    │   │   ├── Dockerfile
    │   │   └── ...
    │   ├── nginx
    │   │   ├── Dockerfile
    │   │   └── ...
    │   └── sources
    │       ├── Dockerfile
    │       └── ...
    ├── src
    │   ├── app
    │   ├── bootstrap
    │   ├── config
    │   ├── artisan
    │   └── ...
    ├── docker-compose.yml
    ├── Makefile
    ├── CHANGELOG.md
    └── README.md

You can view the structure and the files in the repository.

To build a service, you can use the command:

    $ docker build \
      --tag %local_image_name% \
      -f ./docker/%service_directory%/Dockerfile ./docker/%service_directory%

The only exception is the build of the image with the source code - it needs the build context (the last argument) to be `./src`.

I recommend naming images in the local registry the way docker-compose does by default, namely `%root_directory_name%_%service_name%`. If the project directory is called `my-awesome-project` and the service is called `redis`, then the (local) image is best named `my-awesome-project_redis`.
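The naming rule above is easy to express as a small helper. This is a minimal sketch: the function name `local_image_name` and the example path are hypothetical, and it does plain concatenation without any of the normalization docker-compose itself may apply to project names.

```shell
#!/bin/sh
# local_image_name DIR SERVICE
# Prints "<basename of DIR>_<SERVICE>", i.e. %root_directory_name%_%service_name%.
local_image_name() {
  project_dir=$1
  service=$2
  printf '%s_%s\n' "$(basename "$project_dir")" "$service"
}

local_image_name "/home/user/my-awesome-project" redis
# my-awesome-project_redis
```

In a real project the first argument would typically be `"$(pwd)"`, run from the repository root.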

To speed up the build, you can tell docker to use a previously built image as a cache; the `--cache-from %full_registry_name%` option is used for this. Before executing each instruction in the Dockerfile, the docker daemon checks whether it has changed. If not (the hashes match), it skips the instruction and uses the ready-made layer from the image that you told it to use as a cache. This speeds up rebuilds considerably, especially if nothing has changed :)
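Put together, the build command and the cache option might look like the sketch below. It is not the repository's actual Makefile logic: the function name `build_service`, the `DOCKER` override variable and the registry name in the usage comment are assumptions made for this example. The cache image is pulled first because `--cache-from` can only reuse layers of an image that is available locally.

```shell
#!/bin/sh
# build_service SERVICE LOCAL_IMAGE CACHE_IMAGE
#   SERVICE     - directory name under ./docker (app, nginx, sources)
#   LOCAL_IMAGE - tag for the resulting local image
#   CACHE_IMAGE - full registry name of a previously built image
# DOCKER may be overridden (e.g. DOCKER=echo) to preview the commands.
build_service() {
  service=$1
  local_image=$2
  cache_image=$3

  # The sources image is the only one whose build context is ./src.
  if [ "$service" = "sources" ]; then
    context="./src"
  else
    context="./docker/$service"
  fi

  # A failed pull (for example on the very first build) is not fatal.
  ${DOCKER:-docker} pull "$cache_image" || true

  ${DOCKER:-docker} build \
    --cache-from "$cache_image" \
    --tag "$local_image" \
    -f "./docker/$service/Dockerfile" \
    "$context"
}

# Usage (hypothetical registry name):
#   build_service app my-awesome-project_app registry.example.com/group/project/app:latest
```

Running it with `DOCKER=echo` prints the two commands instead of executing them, which is a cheap way to check the arguments before a real build.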

Pay attention to the `ENTRYPOINT` launch scripts of the application containers.

The image of the environment for running the application (app) was built with the fact in mind that it will work not only in production but also locally, where developers need to interact with it effectively. Installing and removing composer dependencies, running unit tests, tailing logs and using familiar aliases (`php /app/artisan` → `art`, `composer` → `c`) should cause no discomfort. Moreover, it is also used to run unit tests and static code analyzers (phpstan in our case) on CI. That is why its Dockerfile, for example, contains a line that installs xdebug, but the module itself is not enabled (it is enabled only on CI).

Also, the `hirak/prestissimo` package is installed globally for `composer`, which greatly speeds up the installation of all dependencies.

In production, we mount the contents of the `/src` directory from the sources image into its `/app` directory. For development, we mount the local directory with the application source code instead (`-v "$(pwd)/src:/app:rw"`).

And here lies one difficulty: access rights to files created from inside the container. By default, processes inside the container run as root (`root:root`), so the files they create (cache, logs, sessions, etc.) belong to root as well, and as a result you cannot do anything with them without running `sudo chown -R $(id -u):$(id -g) /path/to/sources`.

One of the solutions is to use fixuid, but that solution is frankly "so-so". The best way, in my view, is to pass the local `USER_ID` and `GROUP_ID` into the container and start the processes with these values. Substituting `1000:1000` by default (the default values for the first local user) got rid of the `$(id -u):$(id -g)` call, and if necessary you can always override the values (`$ USER_ID=666 docker-compose up -d`) or put them in the docker-compose `.env` file.
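The default-with-override behaviour described above can be sketched with plain shell parameter expansion. The helper name `resolve_user` and the image name in the usage comment are hypothetical; how the values actually reach the containers is defined in the repository's docker-compose.yml, which this sketch does not reproduce.

```shell
#!/bin/sh
# Prints "uid:gid" for the container processes.
# Values already set in the environment win; otherwise 1000:1000 is used.
resolve_user() {
  echo "${USER_ID:-1000}:${GROUP_ID:-1000}"
}

# Hypothetical use with a plain container run (image name is an assumption):
#   docker run --rm --user "$(resolve_user)" -v "$(pwd)/src:/app:rw" my-awesome-project_app id
#
# To use the real local ids instead of the defaults:
#   USER_ID=$(id -u) GROUP_ID=$(id -g) docker-compose up -d
resolve_user
```

Files created through the mounted directory then belong to the chosen uid and gid on the host, so the `sudo chown` step is no longer needed.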

Also, when running `php-fpm` locally, do not forget to disable `opcache` in it - otherwise plenty of "what the hell is this!" moments are guaranteed.

For a "direct" connection to redis and postgres, I have published additional ports on the host (`15432` for postgres; the redis port is listed in docker-compose.yml), so there is no problem in principle to "connect and see what is really going on".

I keep the container with the code name app running ( --command keep-alive.sh ) for convenient access to the application.

Here are some examples of solving "everyday" tasks with docker-compose :

| Operation | Command |
|---|---|
| Installing a composer package | `$ docker-compose exec app composer require package/name` |
| Running phpunit | `$ docker-compose exec app php ./vendor/bin/phpunit --no-coverage` |
| Installing all node dependencies | `$ docker-compose run --rm node npm install` |
| Installing a node package | `$ docker-compose run --rm node npm i package_name` |
| Starting live asset rebuilding | `$ docker-compose run --rm node npm run watch` |

All startup details can be found in the `docker-compose.yml` file.

make is alive!

Typing the same commands every time gets boring after the second time, and since programmers are by nature lazy creatures, let's take care of "automating" them. Keeping a set of sh scripts is an option, but not as attractive as a `Makefile`, especially since its applicability in modern development is greatly underestimated.

You can find a complete Russian-language manual on it at this link.

Let's see what running `make` in the root of the repository looks like:

    [user@host ~/projects/app] $ make
    help            Show this help
    app-pull        Application - pull latest Docker image (from remote registry)
    app             Application - build Docker image locally
    app-push        Application - tag and push Docker image into remote registry
    sources-pull    Sources - pull latest Docker image (from remote registry)
    sources         Sources - build Docker image locally
    sources-push    Sources - tag and push Docker image into remote registry
    nginx-pull      Nginx - pull latest Docker image (from remote registry)
    nginx           Nginx - build Docker image locally
    nginx-push      Nginx - tag and push Docker image into remote registry
    pull            Pull all Docker images (from remote registry)
    build           Build all Docker images
    push            Tag and push all Docker images into remote registry
    login           Log in to a remote Docker registry
    clean           Remove images from local registry
    --------------- ---------------
    up              Start all containers (in background) for development
    down            Stop all started for development containers
    restart         Restart all started for development containers
    shell           Start shell into application container
    install         Install application dependencies into application container
    watch           Start watching assets for changes (node)
    init            Make full application initialization (install, seed, build assets)
    test            Execute application tests

    Allowed for overriding next properties:

      PULL_TAG - Tag for pulling images before building own ('latest' by default)
      PUBLISH_TAGS - Tags list for building and pushing into remote registry (delimiter - single space, 'latest' by default)

    Usage example:
      make PULL_TAG='v1.2.3' PUBLISH_TAGS='latest v1.2.3 test-tag' app-push

make is very good at target dependencies. For example, to start `watch` (`docker-compose run --rm node npm run watch`) the application has to be "up" - all you have to do is declare the `up` target as a prerequisite, and you need not worry about forgetting something before calling `watch` - make will do everything for you.
The same applies to running tests and static analyzers, for example before committing changes - run `make test` and all the magic will happen for you!

Needless to say, you no longer have to worry about building images, pulling them, specifying `--cache-from` and everything else.

You can view the contents of the `Makefile` in the repository.

Part automatic

Let's move on to the final part of this article: automating the process of updating images in the Docker Registry. Although my example uses GitLab CI, I think transferring the idea to another CI service is quite possible.

First of all, we define and name the image tags used:

| Tag name | Purpose |
|---|---|
| `latest` | Images built from the `master` branch. The state of the code is the "freshest", but not yet ready for release. |
| `some-branch-name` | Images built from the branch `some-branch-name`. This lets us "roll out" to any environment changes that were implemented only within a specific branch, before they are merged into `master` - it is enough to pull the images with this tag. And yes, the changes can affect both the code and the images of all services in general! |
| `vX.XX` | The actual release of the application (use it to deploy a specific version) |
| `stable` | Alias for the tag of the most recent release (use it to deploy the most recent stable version) |

The release is done by posting a tag in the vX.XX format.
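The tagging scheme above can be summarized as a small mapping from a git ref to a list of image tags. This is a sketch under assumptions, not the repository's .gitlab-ci.yml: the function name `image_tags` and its `branch`/`tag` argument are hypothetical, and publishing non-release git tags under their own name is a guess. The `master` case yields both `latest` and `master`, since `master` is itself a branch name.

```shell
#!/bin/sh
# image_tags REF_TYPE REF_NAME
#   REF_TYPE - "branch" or "tag" (hypothetical argument, supplied by the CI job)
#   REF_NAME - branch name or git tag name
# Prints a space-separated list of image tags.
image_tags() {
  ref_type=$1
  ref_name=$2

  if [ "$ref_type" = "tag" ]; then
    case "$ref_name" in
      # Release: the version tag itself plus the "stable" alias.
      v[0-9]*) echo "$ref_name stable" ;;
      # Assumption: any other git tag is published under its own name.
      *)       echo "$ref_name" ;;
    esac
  elif [ "$ref_name" = "master" ]; then
    echo "latest master"
  else
    echo "$ref_name"
  fi
}

image_tags branch master            # latest master
image_tags branch some-branch-name  # some-branch-name
image_tags tag v1.23                # v1.23 stable
```

The space-separated output matches the format of the `PUBLISH_TAGS` property shown in the make help, for example `make PUBLISH_TAGS="$(image_tags tag v1.23)" app-push`.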

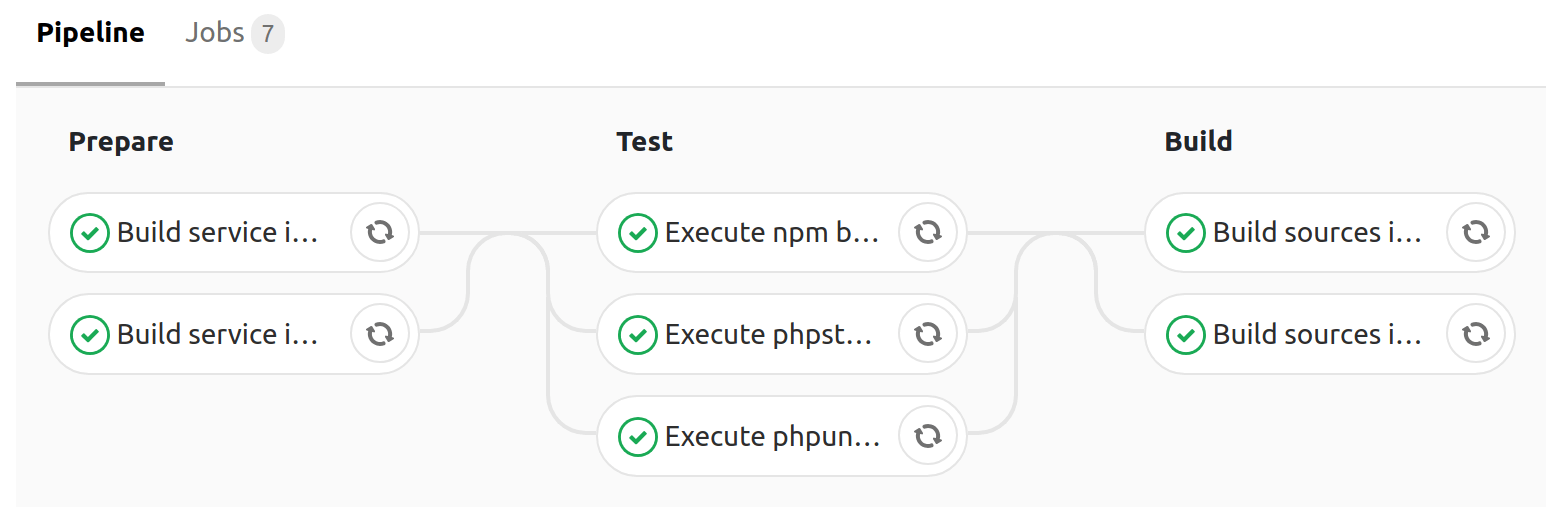

To speed up the build, the `./src/vendor` and `./src/node_modules` directories are cached and `--cache-from` is used for `docker build`. The build consists of the following stages:

| Stage name | Purpose |
|---|---|
| `prepare` | Preparatory stage - building the images of all services except the sources image |
| `test` | Testing the application (running phpunit and static code analyzers) using the images built at the `prepare` stage |
| `build` | Installing all composer dependencies (`--no-dev`), building assets with webpack, and building the image with the source code including the resulting artifacts (`vendor/*`, `app.js`, `app.css`) |

Build on the `master` branch, producing a `push` with the `latest` and `master` tags

On average, all the build stages take 4 minutes, which is a pretty good result (parallel execution of jobs is our everything).

You can find the build configuration (`.gitlab-ci.yml`) at this link.

Instead of a conclusion

As you can see, organizing work with a PHP application (using Laravel as an example) with Docker is not that difficult. As a test, you can fork the repository and, after replacing all occurrences of `tarampampam/laravel-in-docker` with your own, try everything "live" yourself.

To start locally - execute only 2 commands:

    $ git clone https://gitlab.com/tarampampam/laravel-in-docker.git ./laravel-in-docker && cd $_
    $ make init

Then open http://127.0.0.1:9999 in your favorite browser.

Taking this opportunity

At the moment I am working as a TL on the Avtocod project, and we are looking for talented PHP developers and system administrators (the development office is located in Yekaterinburg). If you consider yourself one or the other, write to our HR with the text "I want to join the development team, resume: %link_on_resume%" at hr@avtocod.ru; we help with relocation.


Source: https://habr.com/ru/post/425101/
