Another particle system: a postmortem

In September of this year, the mobile game Titan World by Unstoppable, the Minsk office of Glu Mobile, was supposed to be released. The project was canceled right before the worldwide launch, but the technology we built remains. With the kind permission of the studio heads, Dennis Zdonov and Alex Paley, I would like to share the most interesting parts of it.

In March 2018, the team lead and I met to discuss what to do next: the rendering code was finished, and no new features or special effects were planned. The logical choice was to rewrite the particle system from scratch. In every test it caused the biggest performance drops, and it drove the designers crazy with its interface (a text config file) and extremely meager feature set.

It should be noted that most of the time the team worked on the game in “release tomorrow” mode, so I wrote all the subsystems, first, trying not to break what already worked, and second, with a short development cycle. In particular, most effects that the standard system could not handle were done in the fragment shader, without touching the main code.


The limit on the number of particles (the transformation matrix of each particle was built on the CPU, and rendering went through the iOS instancing GL extension) forced us, among other things, to write a shader that “emulated” a large array of particles from an analytical representation of the objects' shape and composited it with the scene by writing fake data into the depth buffer.

The z-coordinate of the fragment was computed for a flat particle as if we were drawing a sphere, and the radius of this sphere was modulated over time by the sine of Perlin noise: `r = .5 + .5 * sin(perlin(specialUV) + time)`.

A full description of reconstructing the depth of a sphere can be found in Íñigo Quílez's articles; I used simplified, faster code. It was, of course, a rough approximation, but on complex geometric shapes (smoke, explosions) it gave quite a decent picture.
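To make the depth trick concrete, here is a CPU-side C++ sketch of the per-fragment math; it is not the shipped shader. It assumes the quad UV is remapped to [-1, 1], that the returned value is the sphere's height above the quad plane (to be scaled by the particle size and added to the fragment depth), and that the Perlin noise value is passed in rather than computed. `sphereRadius` and `sphereHeight` are hypothetical names.

```cpp
#include <cmath>

// Radius modulation from the text: r = 0.5 + 0.5 * sin(perlin(specialUV) + time).
// `noise` stands in for perlin(specialUV); the result lies in [0, 1].
float sphereRadius(float noise, float time) {
    return 0.5f + 0.5f * std::sin(noise + time);
}

// Height of a sphere of radius r above the quad plane at quad UV (u, v).
// UV is remapped to [-1, 1]; returns -1 outside the sphere's silhouette,
// where the fragment shader would discard the fragment.
float sphereHeight(float u, float v, float r) {
    float x = u * 2.0f - 1.0f;
    float y = v * 2.0f - 1.0f;
    float d2 = r * r - (x * x + y * y);  // r^2 - |p|^2
    if (d2 < 0.0f) return -1.0f;         // outside the circle
    return std::sqrt(d2);                // z = sqrt(r^2 - x^2 - y^2)
}
```

Because the radius changes with noise and time, the silhouette and the depth written for it “boil”, which is what lets one flat quad intersect buildings and units like a lumpy volume.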

Gameplay screenshot. The smoke “skirt” was made with a single particle, and a few more were spent on the main body of the explosion. It looked most effective, of course, “from the ground”, when the smoke gently enveloped buildings and units, but the proposals to change the camera position during explosions never made it into production.

Problem statement

What did I want to end up with? We started, rather, from the limitations we had run into with the previous particle system. Things were made worse by the frame budget being almost exhausted: on weak devices (such as the iPad Air), both the pixel and the vertex pipelines were fully loaded. So I wanted the result to be as fast as possible, even at the cost of somewhat limited functionality.

The designers put together a feature list and sketched a UI based on their own experience with Unity, Unreal and After Effects.

Available technologies

Because of legacy code and restrictions imposed by the head office, we were limited to OpenGL ES 2.0. As a result, technologies such as transform feedback, used in modern particle systems, were not available.

What was left? Use vertex texture fetch and store positions/accelerations in textures? That would work, but memory was also nearly exhausted, the performance of such a solution is far from optimal, and architecturally the result is anything but elegant.

By then I had read many articles on implementing particle systems on the GPU. Most of them had a flashy headline (“millions of particles on a mobile GPU, with all the bells and whistles”), but the implementation came down to simple, if entertaining, emitters/attractors and was almost useless for real use in a game.

This article was the most useful: its author solved a real problem instead of doing “spherical particles in a vacuum”. The benchmark figures from it, together with our profiling results, saved a lot of time at the design stage.

Search for approaches

I started by classifying the problems a particle system solves and looking for special cases. The result was roughly the following (an excerpt from the actual concept doc, taken from my correspondence with the team lead):

“- Particle/mesh arrays with cyclic motion. No position processing; everything goes through the equation of motion. Applications: smoke from chimneys, steam above water, snow/rain, volumetric fog, swaying trees; partial use for non-cyclic effects such as explosions is possible.

- Ribbons. The VB is formed on an event, processing is GPU-only (beam shots, projectiles flying along a fixed (?) trajectory with a trail). A possible option: pass the start/end coordinates as uniforms and build the ribbon from vertexID. Rendering-wise: a cross with Fresnel, as on the direct ones, + UV scroll.

- Particles with velocity generation and processing. The most versatile and the most complex/slowest option; see the technical notes on processing traffic.”

In short: there are different kinds of particle effects, and some of them can be implemented more simply than others.

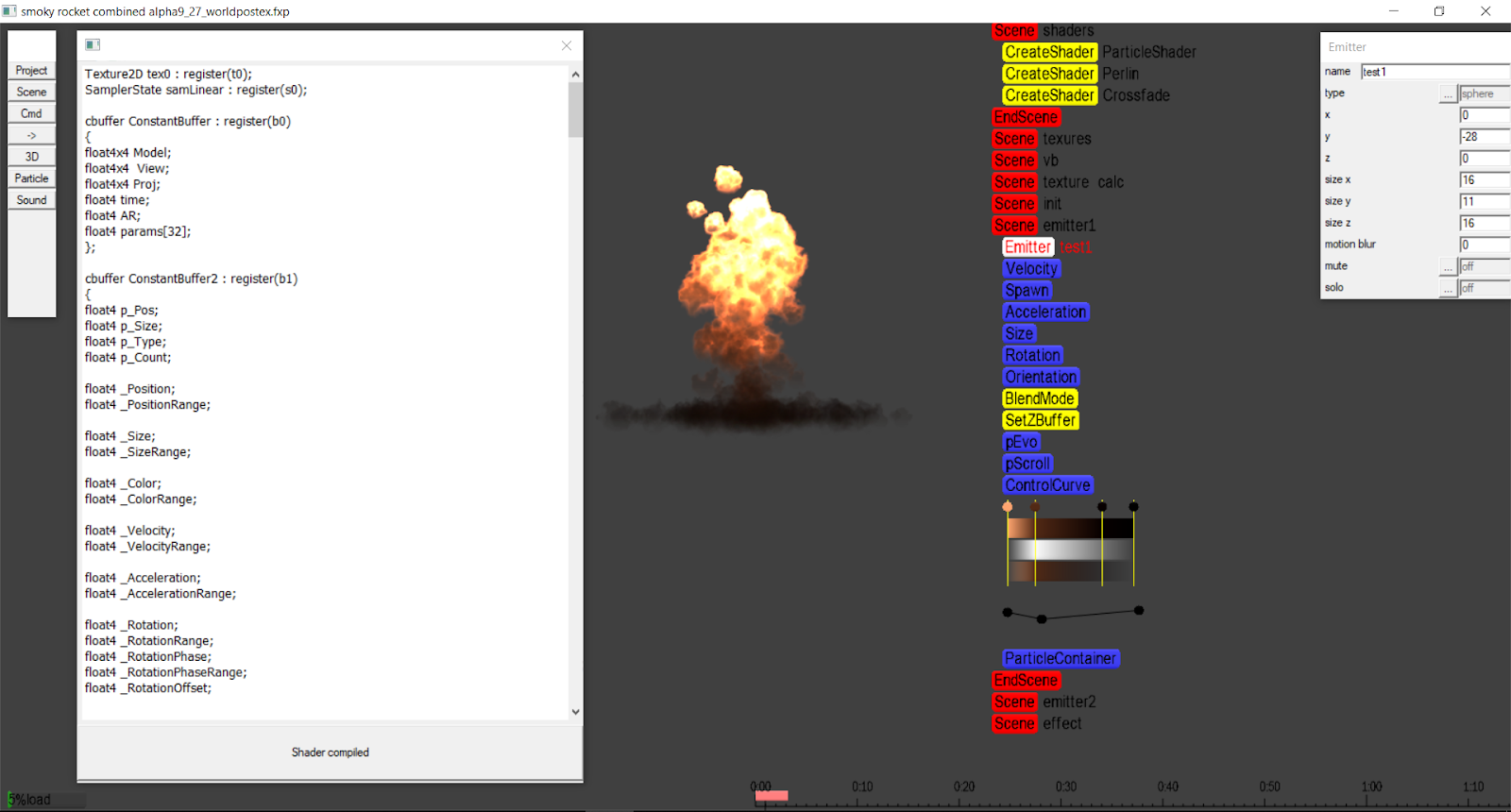

We decided to split the task into several iterations, from simple to complex. Prototyping was done in my own engine/editor on Windows/DirectX 11, because development there was several orders of magnitude faster: the project compiled in a couple of seconds, and shaders were edited entirely “on the fly” and compiled in the background, showing the result in real time without any extra actions such as pressing buttons. Anyone who has built big projects on a MacBook/Xcode combo will, I think, understand the reasons for this decision.

All code samples are taken from the Windows prototype.

The development environment on Windows.

Implementation

The first stage is static output of an array of particles. Nothing complicated: we create a vertex buffer, fill it with quads (writing the correct UVs for each quad), and bake the vertex ID into an “additional” UV channel. Then, in the shader, we use the vertex ID and the emitter settings to compute each particle's position, and we use the UVs to restore the corner's screen coordinates.
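For the GLES2 path, the static buffer could be filled roughly as follows. This is a sketch, not the production code: the vertex layout, `ParticleVertex` and `buildParticleBuffer` are hypothetical, and the corner order matches the `map` table in the shader shown in this section.

```cpp
#include <cstddef>
#include <vector>

// One vertex of the static particle buffer (hypothetical layout).
struct ParticleVertex {
    float u, v;  // corner UV of the quad, used to expand the billboard
    float id;    // vertex index baked into an "additional" UV channel
};

// Builds `count` quads as two triangles each (6 vertices, no index buffer).
// The shader recovers the particle index as floor(id / 6).
std::vector<ParticleVertex> buildParticleBuffer(std::size_t count) {
    static const float corners[6][2] = {
        {0, 0}, {1, 0}, {1, 1},   // first triangle
        {0, 0}, {1, 1}, {0, 1}};  // second triangle
    std::vector<ParticleVertex> vb;
    vb.reserve(count * 6);
    for (std::size_t i = 0; i < count * 6; ++i) {
        vb.push_back({corners[i % 6][0], corners[i % 6][1], static_cast<float>(i)});
    }
    return vb;
}
```

The buffer never changes after creation, so it can be shared by every emitter. Storing the index as a float is exact only up to 2^24 vertices, which is far beyond what a single emitter needs.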

If vertex_id is available natively, you can do without both the buffer and the UVs for restoring screen coordinates (which is what was eventually done in the Windows version).

Shader:

```hlsl
struct VS_INPUT
{
    // …
    uint v_id : SV_VertexID;   // native vertex index (DX11)
    // …
};

// Particle index: every quad consists of 6 vertices (two triangles).
// GLES2 path, with the vertex index baked into the extra UV channel:
// float index = floor(input.uv2.x / 6.0);
float index = floor(input.v_id / 6.0);

// Corner UVs of the two triangles that make up a quad.
static const float2 map[6] = {
    float2(0, 0), float2(1, 0), float2(1, 1),
    float2(0, 0), float2(1, 1), float2(0, 1)
};
// Integer modulo avoids the rounding error of frac(v_id / 6.0) * 6.
float2 quaduv = map[input.v_id % 6];
```

With this in place, simple scenarios can be implemented with very little code; for example, cyclic motion with small deviations works for a snow effect. However, our goal was to hand control of particle behavior over to the artists, and, as is well known, artists rarely write shaders. Behavior presets with parameters edited through sliders did not appeal to us either: that means switching shaders or branching inside them, an ever-growing number of preset variants, and no full control.
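To illustrate how little code the snow case takes, here is a C++ sketch of a snow-like emitter evaluated the way the vertex shader would evaluate it: from the vertex index and the time alone. The hash function, box size, fall speed and sway amplitude are all made up for this example.

```cpp
#include <cmath>
#include <cstdint>

struct Vec3 { float x, y, z; };

// Cheap deterministic integer hash -> [0, 1), standing in for per-particle randomness.
float hash01(std::uint32_t n) {
    n = (n << 13) ^ n;
    n = n * (n * n * 15731u + 789221u) + 1376312589u;
    return static_cast<float>(n >> 8) * (1.0f / 16777216.0f);  // top 24 bits
}

// Snowflake position for a given vertex index at time t (seconds).
// Flakes fall through a box of height h and wrap around: cyclic motion.
Vec3 snowflake(std::uint32_t vertexId, float t) {
    const float h = 10.0f, fallSpeed = 1.0f, sway = 0.2f;
    std::uint32_t index = vertexId / 6;              // 6 vertices per quad
    float x0 = hash01(index * 3u) * 10.0f;           // random start inside the box
    float z0 = hash01(index * 3u + 1u) * 10.0f;
    float phase = hash01(index * 3u + 2u);           // random offset along the fall
    float fall = phase * h + fallSpeed * t;
    float y = h - std::fmod(fall, h);                // wraps every h / fallSpeed seconds
    float dx = sway * std::sin(t + phase * 6.2831853f);  // small deviation
    return {x0 + dx, y, z0};
}
```

Every flake is a pure function of (vertex index, time), so nothing is stored or updated on the CPU; all six vertices of a quad land on the same center and are then expanded into corners.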

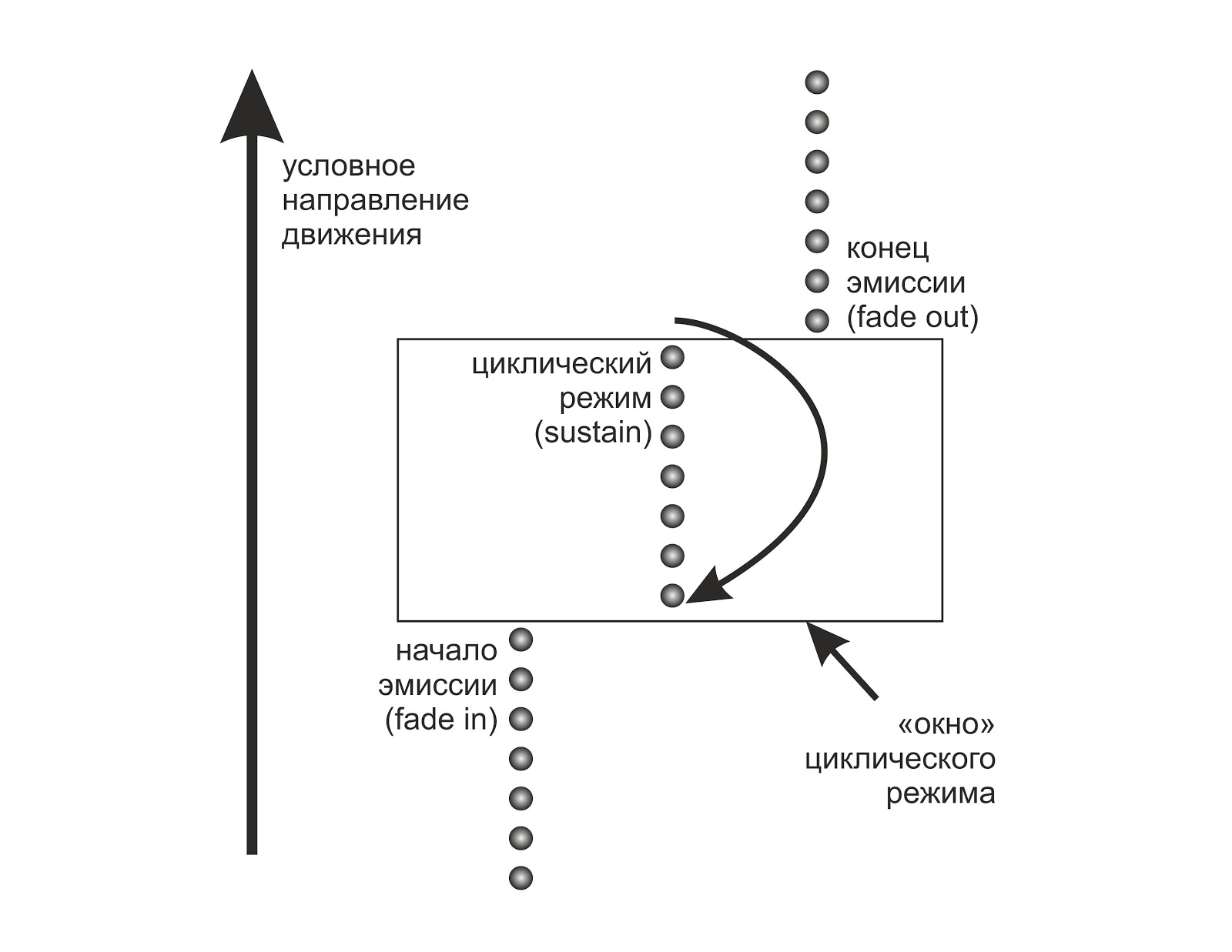

The next task was implementing fade-in/fade-out for such a system: particles should not pop out of nowhere or vanish into nowhere. In a classic particle system, the CPU processes the buffer, creating new particles and removing old ones, and getting good performance out of that effectively requires writing a smart memory manager. But what happens if we simply do not draw the “dead” particles?

Suppose, to begin with, that the emission interval and the particle lifetime are constant within a single emitter.

Then we can treat our buffer (which contains only the vertex IDs) as a ring and determine its maximum size as follows:

```
pCount  = round(prtPerSec * LifeTime / 60.0);          // bound from the particle lifetime
pCountT = floor(prtPerSec * EmissionEndTime / 60.0);   // bound from the emission duration
pCount  = min(pCount, pCountT);                        // ring size
```

In the shader, each particle's time is then computed from its index and `time` (the time elapsed since the effect started):

```hlsl
pTime = time - index / prtPerSec;   // time since this particle was first emitted
```

If the emitter is in its cyclic phase (all particles have been emitted and now die and are reborn in sync), we wrap the particle time with frac and thus get a loop.

There is no need to draw particles whose pTime is less than zero: they have not been born yet. The same applies to particles for which the sum of the lifetime and the current time exceeds the emission end time. In both cases we draw nothing, by setting the particle size to zero and/or moving it off screen. This approach adds a small overhead during the fade-in/fade-out phases while keeping maximum performance in the sustain phase.
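The ring bookkeeping can be checked with a CPU-side C++ sketch; it is meant for verifying the logic, not as the prototype's code. `EmitterParams`, `ringSize` and `particleAge` are hypothetical names, and all times are assumed to be in seconds, so the `/ 60.0` scale from the ring-size snippet does not appear. Instead of the end-of-emission test described in the text, the sketch counts loop cycles and draws only cycles that were actually emitted.

```cpp
#include <algorithm>
#include <cmath>

struct EmitterParams {
    double prtPerSec;    // emission rate, particles per second
    double lifeTime;     // particle lifetime, seconds
    double emissionEnd;  // when emission stops, seconds since the effect started
};

// Ring size: at most one lifetime's worth of particles, and at most
// the total number of particles the emitter will ever emit.
int ringSize(const EmitterParams& e) {
    long live  = std::lround(e.prtPerSec * e.lifeTime);
    long total = static_cast<long>(std::floor(e.prtPerSec * e.emissionEnd));
    return static_cast<int>(std::min(live, total));
}

// Age of the particle currently occupying ring slot `index` at time t,
// or -1 if nothing should be drawn there (not born yet, or already dead).
double particleAge(const EmitterParams& e, int pCount, int index, double t) {
    if (pCount <= 0) return -1.0;
    double pTime = t - index / e.prtPerSec;            // time since the slot's first birth
    if (pTime < 0.0) return -1.0;                      // not born yet
    long total = static_cast<long>(std::floor(e.prtPerSec * e.emissionEnd));
    double period = pCount / e.prtPerSec;              // how often a slot is reused
    long cycle = static_cast<long>(std::floor(pTime / period));  // the "frac" wrap
    long lastCycle = (total - 1 - index) / pCount;     // last cycle emitted for this slot
    cycle = std::min(cycle, lastCycle);
    double age = pTime - cycle * period;
    return age < e.lifeTime ? age : -1.0;              // dead particles are not drawn
}
```

For a 10 particles/s emitter with a 1 s lifetime that stops at 5 s, the ring holds 10 slots; slot 3 loops until its last emitted particle (born at 4.3 s) dies at 5.3 s.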

The algorithm can be improved somewhat by submitting for drawing only the part of the vertex buffer that contains live particles. Since emission is sequential, the live particles are split into at most two segments of the ring, i.e. at most two draw calls are needed.
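Which parts of the ring to submit can be computed on the CPU each frame. A C++ sketch, assuming the timing model of the text (particle k is born at k / prtPerSec) and that particle k occupies slot k % pCount; `liveRanges` and `SlotRange` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>
#include <utility>
#include <vector>

using SlotRange = std::pair<int, int>;  // (first slot, slot count)

// Ring slots that hold live particles at time t. Because emission is
// sequential, the live particles wrap around the ring at most once,
// so the result has at most two ranges, i.e. at most two draw calls.
std::vector<SlotRange> liveRanges(double rate, double life, long totalEmitted,
                                  int pCount, double t) {
    std::vector<SlotRange> ranges;
    if (pCount <= 0) return ranges;
    // Newest particle already born, but never beyond the last one emitted.
    long newest = std::min(static_cast<long>(std::floor(t * rate)), totalEmitted - 1);
    // Oldest particle still alive (age < life).
    long oldest = std::max(static_cast<long>(std::floor((t - life) * rate)) + 1, 0L);
    oldest = std::max(oldest, newest - pCount + 1);  // the ring holds at most pCount
    long count = newest - oldest + 1;
    if (count <= 0) return ranges;                   // nothing alive
    int first = static_cast<int>(oldest % pCount);
    int tail = static_cast<int>(std::min(count, static_cast<long>(pCount - first)));
    ranges.push_back(SlotRange(first, tail));        // up to the end of the ring
    if (tail < count) ranges.push_back(SlotRange(0, static_cast<int>(count) - tail));  // wrapped part
    return ranges;
}
```

During warm-up and fade-out the live particles form a single range; only in the sustain phase, when the oldest particle sits somewhere in the middle of the ring, is a second draw call needed.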

Now that we know the current time of each particle, we can specify the velocity, acceleration (and, in general, any other parameters) and write the equation of motion, obtaining world-space coordinates as the result.

Using the UVs restored from vertex_id, we get four points (more precisely, we move each corner of the quad in the required direction), after which the vertex shader finishes its work by applying the projection:

```hlsl
p.xy += (quaduv - .5);   // move the vertex from the particle center to its quad corner
```

As a free bonus, we could not only pause the emitter but also scrub time backward and forward with frame accuracy. This turned out to be very useful when designing complex effects.
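Put together, the vertex shader evaluates a closed-form function of the particle's age. A C++ sketch, assuming the simplest constant-acceleration model (the real shader had more terms) and a view-space particle center for the corner offset; `particlePosition` and `billboardCorner` are hypothetical names.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Position `age` seconds after birth from the closed-form equation of motion
// p(t) = p0 + v0 * t + a * t^2 / 2. No state is stored between frames.
Vec3 particlePosition(Vec3 p0, Vec3 v0, Vec3 a, float age) {
    float h = 0.5f * age * age;
    return {p0.x + v0.x * age + a.x * h,
            p0.y + v0.y * age + a.y * h,
            p0.z + v0.z * age + a.z * h};
}

// Billboard corner: offset the view-space center by the quad UV,
// as in p.xy += (quaduv - .5), scaled by the particle size.
Vec3 billboardCorner(Vec3 centerView, float qu, float qv, float size) {
    return {centerView.x + (qu - 0.5f) * size,
            centerView.y + (qv - 0.5f) * size,
            centerView.z};
}
```

Because `particlePosition` depends only on `age`, pausing or rewinding is just a matter of passing a different time; there is no simulation state to roll back.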

Extending the functionality

The next iteration was to solve the problem of a moving emitter. Our particle system knew nothing about the emitter's position, so when the emitter moved, the whole effect moved rigidly along with it. For smoke from an exhaust pipe and similar effects, this looked more than strange.

The idea was to write the emitter position into the vertex buffer whenever a new particle is born. Since only a few particles are born per frame, the overhead should be minimal.

A colleague mentioned that when developing his own UI he used map/unmap on only part of a vertex buffer and was quite happy with the performance. I ran tests, and this approach indeed works well on both desktop and mobile platforms.

The difficulty was synchronizing the CPU and GPU. The buffer update had to happen exactly when the “new”, looped particle is at its starting position. In terms of the ring buffer, the boundaries of the updated region must be synchronized with the emitter's running time.

I ported the HLSL code to C++, made the emitter move along a Lissajous curve for testing, and, surprisingly, it all worked. However, from time to time the system “spat out” one or more particles: it shot them off in arbitrary directions, failed to remove them in time, or created new ones in arbitrary places.

The problem was solved by auditing the precision of time keeping in the engine and, in addition, checking the time delta when writing the new emitter position, so that the entire portion of the buffer not touched on the previous iteration gets updated. The system also had to tolerate forced desynchronization: a sudden fps drop must not break the effect, especially since our game ran at different frame rates on different devices depending on their performance (60/30/20 fps).
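The “update everything not touched last time” rule can be sketched in C++, with a `std::vector` standing in for the mapped region of the vertex buffer. `writeBirthPositions` is a hypothetical name, and the sketch assumes the same timing model as the text (particle k is born at k / prtPerSec, slot k % ringSize).

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Each ring slot stores the emitter position at the birth of its current
// particle. Every particle born in (prevTime, curTime] gets written, however
// long the frame was, so an fps drop cannot leave stale slots behind.
// Pass a negative prevTime on the first update so particle 0 is included.
void writeBirthPositions(std::vector<Vec3>& slots, double rate,
                         double prevTime, double curTime, Vec3 emitterPos) {
    const long n = static_cast<long>(slots.size());
    if (n == 0) return;
    long first = static_cast<long>(std::floor(prevTime * rate)) + 1;  // first new particle
    long last  = static_cast<long>(std::floor(curTime * rate));       // newest particle
    first = std::max(first, last - n + 1);  // long stall: each slot is written once
    first = std::max(first, 0L);            // nothing is born before t = 0
    for (long k = first; k <= last; ++k) {
        slots[static_cast<std::size_t>(k % n)] = emitterPos;          // slot of particle k
    }
}
```

On the GPU side the written range can wrap around the end of the ring, which is exactly the case that makes the real code grow: it becomes up to two map/unmap calls instead of one.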

The code of this method grew quite a bit (a ring buffer is hard to handle elegantly), but once all the cases were accounted for, the system worked correctly and reliably.

Around this time, my partner had already built a rough skeleton of the editor, good enough for testing the system, and drafted the stubs/API for integrating the system into our engine.

I ported all the code to iOS/OpenGL, integrated it, and finally tested real effects on a real device. It became clear that the system not only works but is also fit for production. What remained was to finish the editor UI and polish the code to a “not scary to ship tomorrow” state.

We were even about to write a memory manager, so as not to allocate/destroy a buffer (which by then stored the vertex_id, uv, position and initial vector of each particle) for every new effect with a moving emitter, when another idea came to mind.

The very existence of a vertex buffer in this system kept bothering me. It clearly looked like an anachronism there, “a legacy of the dark ages of the fixed-function pipeline.” While making test effects in the Windows prototype, I noticed that the emitter always moves smoothly and always much more slowly than the particles. Moreover, with a large number of particles, a position update writes the same data into hundreds of particles. The solution turned out to be simple: keep a fixed-size array holding the “history” of the emitter position, normalized by the particle lifetime, and interpolate it on the GPU. After that, the iOS/GLES2 version no longer needed dynamic buffers (only one shared static buffer remained, to provide vertex_id), and in the Windows/DX11 version the buffers disappeared altogether, thanks to native vertex_id and the ability of the D3D API to accept null instead of a vertex buffer when drawing.
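The GPU-side lookup into the emitter history can be sketched in C++ as follows. Assumptions: the history stores only positions (the text mentions pairs of vectors, positions and bases), sample 0 is “now” and the last sample is one particle lifetime ago, and the CPU code that resamples the array each frame is left out. `History`, `kHistorySize` and `birthPosition` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

struct Vec3 { float x, y, z; };

// Emitter positions spread evenly over the last `lifeTime` seconds:
// sample 0 is "now", sample kHistorySize - 1 is one lifetime ago.
// On the GPU this would be a small constant-buffer array.
constexpr int kHistorySize = 60;
using History = std::array<Vec3, kHistorySize>;

// Emitter position at the moment a particle of the given age was born,
// linearly interpolated between the two nearest samples.
Vec3 birthPosition(const History& h, float age, float lifeTime) {
    float s = std::min(std::max(age / lifeTime, 0.0f), 1.0f) * (kHistorySize - 1);
    int i = std::min(static_cast<int>(s), kHistorySize - 2);
    float f = s - i;  // blend factor between samples i and i + 1
    const Vec3& a = h[i];
    const Vec3& b = h[i + 1];
    return {a.x + (b.x - a.x) * f, a.y + (b.y - a.y) * f, a.z + (b.z - a.z) * f};
}
```

Since every particle reads a continuous function of its age instead of a per-frame snapshot, neighboring particles get slightly different birth positions even within one frame, which is what smooths out the trails.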

Thus, by modern standards, the Windows version of the system consumes practically no memory, no matter how many particles we display: only a small constant buffer with parameters, a buffer of positions/bases (60 pairs of vectors was enough for all cases, with room to spare) and, if needed, a texture. Performance measurements show speeds close to those of synthetic tests.

In addition, the “tail” in effects such as sparks began to look much more natural: interpolation removed the per-frame sampling, so the emitter position changed smoothly, as if draw calls were issued at hundreds of hertz.

Features

Beyond the basic particle flight functionality (velocity, acceleration, aggression, drag), we needed a certain amount of functional “fat”.

In the end we implemented motion blur (stretching a particle along its motion vector), orienting particles perpendicular to the motion vector (which lets you build, for example, a sphere out of particles), changing particle size over its lifetime, and dozens of other small things.
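The motion-blur stretch can be sketched in screen space as below. This is a hypothetical C++ version: `stretchedCorner` and its `stretch` factor are not from the original, and the production shader may well have parameterized it differently.

```cpp
#include <cmath>

struct Vec2 { float x, y; };

// Screen-space corner offset for a particle stretched along its motion vector.
// (qu, qv) is the quad UV in [0, 1]; `vel` is the particle velocity projected
// to screen space. The quad's v axis is aligned with the velocity and
// lengthened by stretch * speed; the u axis keeps the base size.
Vec2 stretchedCorner(float qu, float qv, Vec2 vel, float size, float stretch) {
    float speed = std::sqrt(vel.x * vel.x + vel.y * vel.y);
    Vec2 along = speed > 1e-6f ? Vec2{vel.x / speed, vel.y / speed} : Vec2{0.0f, 1.0f};
    Vec2 across{-along.y, along.x};    // perpendicular axis
    float len = size + stretch * speed;  // length along the motion
    float a = (qv - 0.5f) * len;
    float c = (qu - 0.5f) * size;
    return {along.x * a + across.x * c, along.y * a + across.y * c};
}
```

A particle at rest keeps its square shape, while a fast one becomes a streak whose length grows linearly with its screen-space speed.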

Vector fields were a problem: since the system does not store per-particle state (position, acceleration, etc.) but recomputes it every time from the equation of motion, some effects (such as foam swirling when you stir coffee) were impossible in principle. However, simply modulating velocity and acceleration with Perlin noise gave results that look quite modern. Computing the noise in real time for so many particles turned out to be too expensive (even when limited to five octaves), so we generated a noise texture, which the vertex shader then sampled. To strengthen the illusion of a vector field, the sampling coordinates were shifted slightly depending on the current emitter time.

Cigarette smoke test: the effect is built by distributing the initial velocity and the acceleration through Perlin noise.
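The precomputed-noise approach can be sketched in C++ as a tiled texture sampled with wrap addressing and bilinear filtering, the way a vertex texture fetch would. Assumptions: the texture contents (baked Perlin noise in the real system) are filled by the caller, the noise values lie in [-1, 1], and the lookup coordinates are derived from something per-particle, such as its index. `NoiseTexture` and `modulatedSpeed` are hypothetical names.

```cpp
#include <cmath>
#include <vector>

// Tiled 2D noise texture, sampled with wrap-around and bilinear filtering.
struct NoiseTexture {
    int size;                   // width == height, in texels
    std::vector<float> texels;  // size * size values

    float at(int x, int y) const {  // wrap addressing
        x = ((x % size) + size) % size;
        y = ((y % size) + size) % size;
        return texels[y * size + x];
    }

    float sample(float u, float v) const {  // u, v in texels
        float fx = std::floor(u), fy = std::floor(v);
        int x = static_cast<int>(fx), y = static_cast<int>(fy);
        float tx = u - fx, ty = v - fy;
        float top = at(x, y) + (at(x + 1, y) - at(x, y)) * tx;
        float bot = at(x, y + 1) + (at(x + 1, y + 1) - at(x, y + 1)) * tx;
        return top + (bot - top) * ty;
    }
};

// Fake vector field: scale a speed by noise, with the lookup coordinates
// drifting over emitter time so the pattern appears to flow.
float modulatedSpeed(const NoiseTexture& tex, float baseSpeed, float px, float py,
                     float emitterTime, float amount, float drift) {
    float n = tex.sample(px + drift * emitterTime, py);
    return baseSpeed * (1.0f + amount * n);
}
```

One texture fetch per particle replaces several octaves of procedural noise, which is the trade the text describes.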

Pixel pipeline

Initially we planned only to change the particle's color/transparency over its lifetime, but I added several algorithms to the pixel shader.

Texture color rotation, simplified to sin(color + time). To some extent, it emulates the permutation effect from After Effects.

Fake lighting: modulating the particle's color with a gradient in world coordinates, regardless of the particle's rotation angle.
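Both color tricks fit in a few lines. A C++ sketch: the remap of `sin` into [0, 1] and the vertical gradient bounds `y0`/`y1` with its `dark`/`lit` colors are assumptions for illustration, since the text gives only `sin(color + time)` and “a gradient in world coordinates”.

```cpp
#include <algorithm>
#include <cmath>

struct Color { float r, g, b; };

// Color "rotation", simplified as sin(color + time); remapped to [0, 1]
// here so the result stays a valid color.
Color rotateColor(Color c, float time) {
    auto f = [time](float x) { return 0.5f + 0.5f * std::sin(x + time); };
    return {f(c.r), f(c.g), f(c.b)};
}

// Fake lighting: tint by a vertical gradient in world space, independent of
// how the particle quad is rotated. y0 and y1 bound the gradient.
Color fakeLight(Color c, float worldY, float y0, float y1, Color dark, Color lit) {
    float t = std::min(std::max((worldY - y0) / (y1 - y0), 0.0f), 1.0f);
    return {c.r * (dark.r + (lit.r - dark.r) * t),
            c.g * (dark.g + (lit.g - dark.g) * t),
            c.b * (dark.b + (lit.b - dark.b) * t)};
}
```

Because the gradient is indexed by world position rather than by the quad's orientation, a rotating smoke puff stays lit from the same side.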

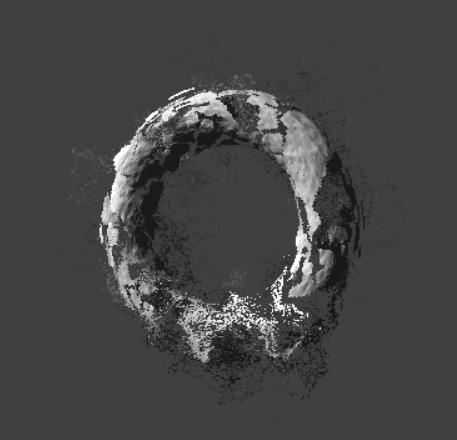

Boundary evolution: as a particle moves through space, its boundaries (alpha channel) are modulated by a combination of a radial spot and Perlin noise, which makes them flow in a way very similar to clouds, smoke and other fluid effects.

Shader pseudocode:

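```hlsl
b = perlin(uv);                                  // Perlin noise at the noise coordinates uv
a = saturate(1 - length(input.uv.xy - .5) * 2);  // radial spot: 1 at the center, 0 at the edge
a -= abs(a - b);                                 // erode the boundary with the noise
```

In a slightly more elaborate version, this shader could draw borders of arbitrary softness with a highlighted outline, which added realism to “explosive” effects.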
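A CPU transcription of this alpha computation, assuming the last step is `a -= abs(a - b)`, with the noise value passed in. `evolvingAlpha` is a hypothetical name, and the final clamp is added here; the pseudocode leaves it to blending.

```cpp
#include <algorithm>
#include <cmath>

// Boundary-evolution alpha. (u, v) is the particle UV in [0, 1];
// `noise` stands in for perlin(uv) at the (time-shifted) noise coordinates.
float evolvingAlpha(float u, float v, float noise) {
    float du = u - 0.5f, dv = v - 0.5f;
    float a = 1.0f - std::sqrt(du * du + dv * dv) * 2.0f;  // radial spot
    a = std::min(std::max(a, 0.0f), 1.0f);                 // saturate
    a -= std::fabs(a - noise);                             // eat into the edge
    return std::min(std::max(a, 0.0f), 1.0f);              // final clamp (added)
}
```

Where the noise roughly matches the spot's falloff the alpha survives, and elsewhere it is eaten away, so as the noise coordinates drift, the edge appears to flow.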

The first experiments with the evolution of boundaries.

What's next?

Although the editor was ready and integrated into the engine, the designers never got to make a single effect with it: the project was closed. However, nothing prevents using these techniques elsewhere, for example in demos for Revision.

From a technology standpoint there is also room to grow; for example, several effects for destroying frame objects are currently in the works.

Sorting particles for alpha blending is still an open question: since everything is computed analytically in the shader, there is not even any input data to sort. But it is a wide field for experiments!

During the development of Titan World, many more tricks were used in the game's graphics, but that is a story for another time.

P.S. You can dig into the source code of the engine's alpha version here. Examples are in the release/samples folder; the main controls are space and alt|control + mouse. Shaders live right in the fxp files, and their code is also available through the editor window.

Source: https://habr.com/ru/post/424995/
