Machine Learning vs. the Elephant in the Room

Score one for the human brain. In a new study, computer scientists have found that artificial intelligence systems fail a visual object-recognition test that any child can pass with ease.

“This is a high-quality and important study that reminds us that ‘deep learning’ can’t really boast the depth attributed to it,” says Gary Marcus, a neuroscientist at New York University who was not involved in the work.

The study concerns computer vision, the field in which artificial intelligence systems try to detect and categorize objects. For example, they may be asked to find all the pedestrians in a street scene, or simply to tell a bird from a bicycle, a task that has become famous for its difficulty.


The stakes are high: computers are gradually taking over important tasks from people, such as automated video surveillance and autonomous driving. To do this work well, AI’s visual processing must be at least as good as a human’s.

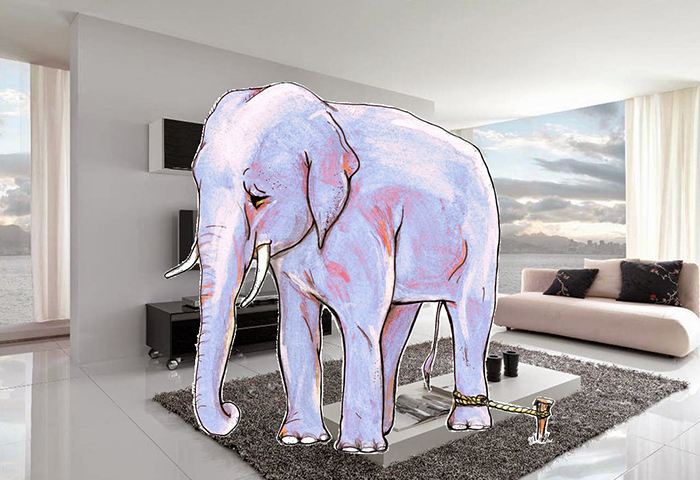

The task is not easy. The new study highlights the sophistication of human vision and the difficulty of building systems that mimic it. The scientists tested a computer vision system on a picture of a living room. The AI did well, correctly identifying the chair, the person and the book on the shelf. But when the scientists added an unusual object to the scene, an image of an elephant, its mere appearance made the system lose track of what it had already recognized. Suddenly it began calling the chair a sofa and the elephant a chair, and it ignored all the other objects.

“There were all sorts of oddities that show how fragile modern object detection systems are,” says Amir Rosenfeld, a scientist at York University in Toronto and co-author of the study, which he conducted with his colleagues John Tsotsos, also of York, and Richard Zemel of the University of Toronto.

The researchers are still working out why the computer vision system is so easily confused, but they already have a good guess. The key is a human skill that AI lacks: the ability to realize that a scene is confusing and that it deserves a second, closer look.

Elephant in the room

Looking at the world, we take in a staggering amount of visual information, and the human brain processes it on the fly. “We open our eyes, and everything happens by itself,” says Tsotsos.

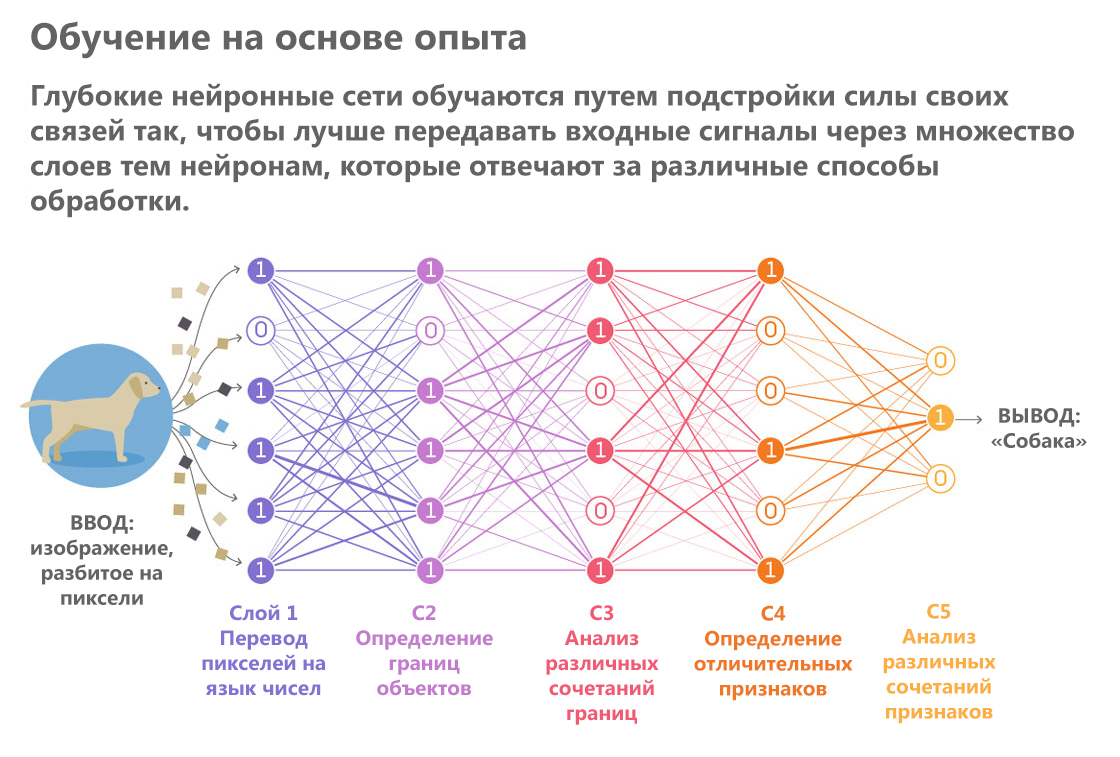

Artificial intelligence, by contrast, builds up visual impressions painstakingly, as if reading a description in Braille. It runs its algorithmic fingertips over the pixels, gradually assembling them into more and more complex representations. The kind of AI system that does this is the neural network, which passes an image through a series of “layers”. At each layer, parts of the image are processed, such as the color and brightness of individual pixels, and this analysis is used to form an increasingly abstract description of the object.

“The results of processing in one layer are passed on to the next, and so on, like on a conveyor belt,” explains Tsotsos.
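The conveyor-belt idea can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions: the four input “pixels”, the layer sizes, the hand-picked weights and the two class names are all hypothetical, and a real object detector has millions of learned parameters rather than a dozen chosen by hand. What it does show is the structure Tsotsos describes: each layer’s output becomes the next layer’s input, and only the last layer’s output is turned into class probabilities.

```python
import math

# Hypothetical toy network: 4 input "pixels" -> 3 units -> 2 units -> 2 classes.
# Each layer is a (weights, biases) pair; weights[j][i] links input i to unit j.
LAYERS = [
    ([[0.5, -0.2, 0.1, 0.0],
      [0.3, 0.8, -0.5, 0.2],
      [-0.4, 0.1, 0.9, 0.6]], [0.0, 0.1, -0.1]),
    ([[1.0, -0.5, 0.3],
      [0.2, 0.7, -0.6]], [0.0, 0.0]),
    ([[1.2, -0.8],
      [-0.3, 0.9]], [0.0, 0.0]),
]


def dense(x, weights, biases):
    """Fully connected layer: every output unit sees every input value."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(v):
    """Nonlinearity applied between layers."""
    return [max(0.0, x) for x in v]


def softmax(v):
    """Turn final scores into probabilities that sum to 1."""
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def forward(pixels, layers):
    """Pass the input through the layers in order, like a conveyor belt."""
    x = pixels
    for i, (w, b) in enumerate(layers):
        x = dense(x, w, b)
        if i < len(layers) - 1:
            x = relu(x)  # hidden layers only
    return softmax(x)


probs = forward([0.2, 0.7, 0.1, 0.9], LAYERS)
print(dict(zip(["chair", "sofa"], probs)))  # hypothetical class names
```

Nothing in `forward` ever sends information back to an earlier layer; that one-way flow is the property the article returns to later.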

By Lucy Reading-Ikkanda / Quanta Magazine

Neural networks excel at specific, routine visual-processing tasks. On highly specialized jobs, such as identifying dog breeds and other kinds of sorting objects into categories, they outperform people. These successes raised hopes that computer vision systems would soon become capable enough to drive a car through crowded city streets.

They also prompted experts to probe the networks’ vulnerabilities. Over the past few years, researchers have made a whole series of attempts to simulate adversarial attacks, devising scenarios that force neural networks to make mistakes. In one experiment, computer scientists tricked a network into mistaking a turtle for a gun. In another, researchers led a network astray by placing a psychedelically colored toaster next to ordinary objects, such as a banana, in an image.
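The article does not say how the turtle and toaster attacks were built, so the sketch below is not a reconstruction of those experiments. It illustrates one well-known adversarial technique, the fast gradient sign method (FGSM), on a deliberately tiny stand-in: a logistic-regression classifier with made-up weights. FGSM nudges every input feature by a small amount `eps` in whichever direction increases the classifier’s loss for the true label.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability that x belongs to class 1 under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method for logistic regression.

    With cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i, so each feature
    is moved by eps in the direction that makes the true label y less likely.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical weights and input; class 1 is the correct label.
W = [2.0, -3.0, 1.5, 0.5]
B = 0.0
x = [0.4, 0.1, 0.3, 0.2]
x_adv = fgsm(W, B, x, y=1, eps=0.25)
print(round(predict(W, B, x), 3), round(predict(W, B, x_adv), 3))  # prints 0.741 0.332
```

No feature moves by more than 0.25, yet the prediction flips from class 1 to class 0. Attacks on real image classifiers rely on the same kind of gradient information, spread across thousands of pixels.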

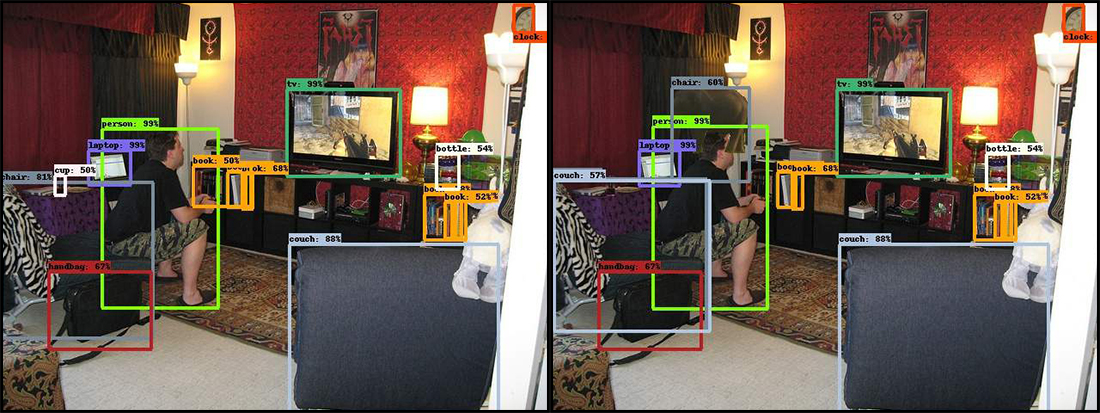

The new study took the same approach. The three researchers showed a neural network a photograph of a living room. It shows a man playing a video game, sitting on the edge of an old chair and leaning forward. After “digesting” the scene, the AI quickly recognized several objects: a person, a sofa, a TV, a chair and a couple of books.

Then the researchers added an object that is unusual for such scenes: an image of an elephant in half-profile. The neural network got confused. In some cases, the elephant’s appearance made it mistake the chair for a sofa, and sometimes the system stopped seeing objects it had previously recognized without any trouble, such as a row of books. These misses occurred even for objects far away from the elephant.

In the original image (left), the neural network correctly and confidently identified many of the objects in a cluttered living room. But adding an elephant (right) was enough to make the program fail: the chair in the lower left corner turned into a sofa, the cup next to it disappeared, and the elephant became a chair.

Errors like these are unacceptable in autonomous driving, for example. A computer cannot drive a car if it fails to notice pedestrians simply because, a few seconds earlier, it saw a turkey by the side of the road.

As for the elephant itself, the recognition results varied from attempt to attempt. Sometimes the system identified it correctly, sometimes it called it a sheep, and sometimes it did not notice it at all.

“If an elephant really does appear in the room, anyone will probably notice it,” says Rosenfeld. “And the system didn’t even register its presence.”

A closer look

When people see something unexpected, they take a second look. Simple as “take a closer look” sounds, it has real cognitive consequences, and it explains why AI makes mistakes when something unusual appears.

Today’s best neural networks for object recognition pass information in only one direction: forward. They start with pixels at the input, move on to curves, shapes and scenes, and make their most likely guess at each stage. Any mistake early in the process carries through to the end, when the network pulls its “thoughts” together to decide what it is looking at.

“In neural networks, everything is tightly interconnected, so there is always a chance that any feature anywhere can affect any possible outcome,” says Tsotsos.
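Tsotsos’s point can be seen in a single fully connected layer, where every output score is a weighted sum of every input. The sketch below is a hypothetical toy, not the network from the study: the three class scores, the weights and the four-value “scene” are invented for illustration. Changing only the last input, a corner that was empty and now contains something new, shifts all three scores, flips the top label from chair to sofa, and pushes the book score down.

```python
LABELS = ["chair", "sofa", "book"]  # hypothetical class units

# Hypothetical weights; column 4 is how each unit reacts to the image corner.
WEIGHTS = [
    [0.2, 0.5, -0.1, -0.3],  # chair
    [0.4, -0.2, 0.6, 0.1],   # sofa
    [-0.3, 0.7, 0.2, -0.4],  # book
]
BIASES = [0.0, 0.0, 0.0]


def dense(x, weights, biases):
    """Every output depends on every input, however far apart they are."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def top_label(scores):
    return LABELS[max(range(len(scores)), key=scores.__getitem__)]


scene = [1.0, 0.8, 0.5, 0.0]          # last input: empty corner
with_elephant = [1.0, 0.8, 0.5, 1.0]  # same scene, something new in the corner

before = dense(scene, WEIGHTS, BIASES)
after = dense(with_elephant, WEIGHTS, BIASES)
changes = [a - b for a, b in zip(after, before)]

print(top_label(before), "->", top_label(after))  # prints chair -> sofa
print([round(c, 2) for c in changes])              # prints [-0.3, 0.1, -0.4]
```

Real detectors are convolutional and far deeper, but in many architectures the later layers still combine information from large parts of the image, so an unexpected object can shift scores for objects that are nowhere near it.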

The human approach is better. Imagine you are given a brief glimpse of an image containing a circle and a square, one red and the other blue. Then you are asked to name the color of the square. A single quick glance may not be enough to remember the colors correctly. You immediately realize that you are not sure and need to look again. And, crucially, on the second look you already know what to focus on.

“The human visual system says, ‘I can’t give the right answer yet, so I’ll go back and check where the error could have happened,’” explains Tsotsos, who is developing a theory called Selective Tuning to explain this feature of visual perception.

Most neural networks cannot go back, and this ability is very hard to build in. One advantage of one-directional networks is that they are relatively easy to train: you just pass images through the six layers shown in the illustration above and get a result. But if neural networks are to “take a closer look”, they also need to judge the fine line between when it is better to go back and when to keep going. The human brain switches between these very different processes easily and naturally. Neural networks need a new theoretical foundation to do the same.
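To make the idea of a second look concrete, here is a deliberately crude sketch. It is not Tsotsos’s Selective Tuning theory or any published method. Every name in it (`classify_mean`, `focus_center`, `classify_with_second_look`) and the fixed confidence threshold are hypothetical. The “image” is a one-dimensional list of brightness values, the toy classifier’s confidence that the image is bright is simply the mean brightness, and the focusing step just crops to the central half of the current region. If the top confidence falls below the threshold, the loop narrows its attention and classifies again, up to a fixed number of looks.

```python
def classify_mean(region):
    """Hypothetical toy classifier: P(bright) is the mean pixel value."""
    m = sum(region) / len(region)
    return [1.0 - m, m]  # [P(dark), P(bright)]


def focus_center(region):
    """Hypothetical attention step: keep the central half of the region."""
    n = len(region)
    k = n // 4
    return region[k:n - k] if n - 2 * k > 0 else region


def classify_with_second_look(image, classify, focus, threshold=0.8, max_looks=3):
    """Classify; if unsure, narrow attention and look again.

    Returns (best_class, confidence, number_of_looks).
    """
    region = image
    for look in range(1, max_looks + 1):
        probs = classify(region)
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= threshold or look == max_looks:
            return best, probs[best], look
        region = focus(region)  # go back and examine a smaller area


# Bright object in the middle, cluttered dark edges.
image = [0.1, 0.9, 0.95, 0.9, 0.85, 0.2]
label, conf, looks = classify_with_second_look(image, classify_mean, focus_center)
print(label, round(conf, 2), looks)  # prints 1 0.9 2
```

The first pass is only 65% confident, so the loop looks again at the center and reaches 90%. The fixed threshold stands in for exactly the judgment the article calls hard: deciding when going back is worth it.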

Leading researchers around the world are working in this direction, but they need help. Recently, Google AI announced a crowdsourced competition for image classifiers that are not fooled by deliberately distorted images. The winner will be a solution that can unambiguously tell an image of a bird from an image of a bicycle. This would be a modest but very important first step.

Source: https://habr.com/ru/post/424855/
