How to Make Video Cards, or Why Critics of the 20X0 Series Are Not Quite Right

Unfortunately, not every review site runs synthetic benchmarks, yet they are very important for understanding what is happening in the video card market. Let me prove it.

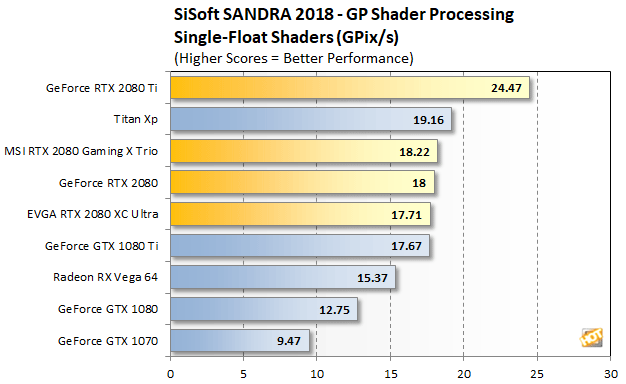

Consider the first chart:

')

We see that the RTX 2080 ti leads in this synthetic test, followed by Titan XP, RTX 2080, GTX 1080 ti, RX Vega 64, GTX 1080 and GTX 1070.

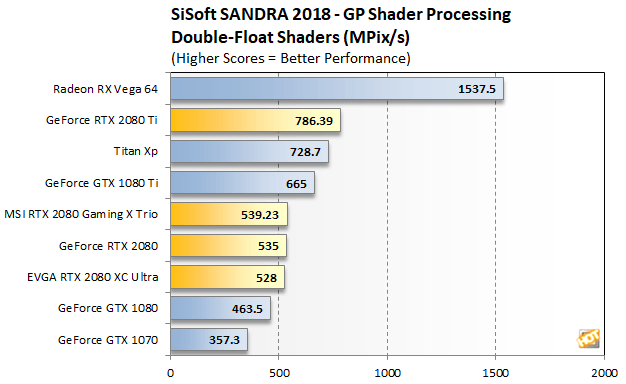

Consider the second graph:

We see that in the second synthetic test the RX Vega 64 leads by a large margin, followed by the RTX 2080 ti Titan XP, GTX 1080 ti, RTX 2080, GTX 1080 and GTX 1070.

And what do you ask? And the fact that on the first graph 2080ti is in the lead, and on the second it is literally breaking the competitors of the RX Vega 64. This means that the 2080ti has no graphical calculations to save space is minimized, while the RX Vega 64 has exactly a turn of the chip for non-graphical calculations . RX Vega 64 is a server card, which is thrown on the gaming market. I think now it is not necessary to explain why the RX Vega 64 accelerates so badly, why it has such a large TDP and such a large chip. And why did she perform so modestly? I think that when AMD released this card, she was well aware of what was happening and that she deliberately sacrificed the user card market in favor of the server market and professional calculations.

I realize that my argument about the RX Vega 64 is not 100% and yet:

In fact, everything is even worse (this is my opinion, which I do not impose on anyone) the entire line of AMD cards starting with the 7000 series and GCN is more server-oriented. Therefore, when many journalists enthusiastically wrote about the merits of GCN architecture, I still wrote that it was the sunset of the AMD graphic division and turned out to be right. Well, the RX Vega 64 is the cherry on the cake of this madness and the last straw for me - I was very disappointed in the actions of the company.

The proof is another chart:

Now there is a lot of talk that NVIDIA is bad, that prices are high, that there is no competition. Well, and who is to blame for the fact that there is no competition?

There is also a lot of talk that they say AMD invested little in R and D and therefore ... What AMD will switch to 7-nm and then show everyone ... I declare with all responsibility - all this is nonsense. The current situation in the user market does not have anything to do with AMD’s investments in development; in the same Vega there were enough funds and they did it well for a very long time, and as a result, complete zilch. And 7 nm. Well, will go AMD with this approach at 7-nm and then what? What NV on 7-nm can not go? And all happen again. So 7-nm give nothing without philosophy.

Can AMD make a normal gaming card right now? Yes, maybe it’s enough to throw out a maximum of non-graphical calculations from GCN and a decent card will turn out, maybe it will not be able to fully compete with GTX, but this will be a good card, although this recipe completely contradicts the philosophy of AMD. Moreover, if AMD would have taken the 5000 or 6000 line and added DX12 there, it would have also become a leader. The fact is that according to my calculations, VLIW4 is approximately 30% more efficient than GCN per area * frequency. And there is nothing surprising here because the superscalar architecture always loses in vector efficiency. The secret to closing the VLIW architecture is simple - it is somewhat worse for non-graphical calculations, so it was closed and replaced with Buldozer-GCN. AMD is interested in fat about the market, and not poor gamers who can not pay 50,000 bucks for a video card.

Having dealt with the efficiency of the architectures and the reason for the current situation on the video card market, let's move on to the announcement of 20X0 video cards, on which tons of dirt have been poured on the forums from some people. Basically, everything revolved around charges of high prices and low productivity growth compared to the 10X0 series. But it got the same "unnecessary" reytresingu, but about DLSS in every way silent.

The reason for the small increase in performance is trivial - about 1/4 of the chip is occupied by RT cores, and about 1/4 of the tensor and only about 1/2 of the graphics chip remains on the graphics chip itself. Hence the high cost - the chip turned out to be very large, almost like that of titanium.

To this it is worth adding that the 12-nm process technology for compactness is almost the same 16-nm. The announcement of the RTX to some extent strongly resembles the announcement of the 6000 series from AMD: maximum optimization and a small increase in performance with a small increase in computing resources.

As for the reitressing, in my opinion, 4K60fps can not wait for another two generations. Since for a number of information with shadows, you can play no higher than 60 fps 1080p, and shadow + reflections about 30 fps at maximum settings. The fact is that in fact, reissing is calculated on the same cores as shaders and so far either the number of transistors does not increase significantly or a separate hardware unit appears - the performance situation does not change, although there is hope for optimization, there are different photon maps and so on. d. Contrary to unnecessary claims, in my opinion, raytracing is at least a step more than a step from DX8 to DX9.

Why is everyone silent about DLSS?

As you can see, the inclusion of the DLSS mode literally breaks the cards of the previous generation. Yes, the 2080 becomes quite playable in 4K.

What is DLSS? This is in a sense, hardware anti-aliasing, where anti-aliasing occurs without or almost without the participation of the GPU, in a separate block. Those. The GPU works at about the same speed as if it were working without anti-aliasing. The difference between the old and the new smoothing is big, but without smoothing, the difference will drop to raw performance.

DLSS has two drawbacks: firstly, DLSS requires support from the game (at least as far as this is clear now), and not just can be turned on for any game and now how many games NV will turn on DLSS and the average performance of 20X0 and this is a big drawback. Secondly, anti-aliasing could be implemented with smaller forces through a separate hardware block, and it would be more compact and would not require support from the games. Why did NV implement hardware anti-aliasing through less efficient tensor kernels? Because NV wants to sell these cards and pro and gamers, well, a universal solution is almost always less effective than a specialized one.

Findings.

Is the conclusion 20X0 a complete disaster as shout forum "analysts"? No, but in many respects the weight of this will not depend on how many games, including those already released, DLSS will appear and what will be its average efficiency.

Secondly, it would be very nice if reitressing appeared with a superresolution on tensor cores from 1080p to 4K. (the super resolution on the tensor cores of NV has already been demonstrated, it remains only to find out whether it is compatible with reitressing).

I would also like to add that there is no competition and it will not be at least until the release of the new architecture from AMD, and what kind of architecture it will be is still a big question.

Buy cheap 10X0? It depends on whether you believe in new technologies. If not, then buy without a doubt, but if we say NV in many games introduces DLSS, then you can be foolish in hoping for a “raw” power of 10x0.

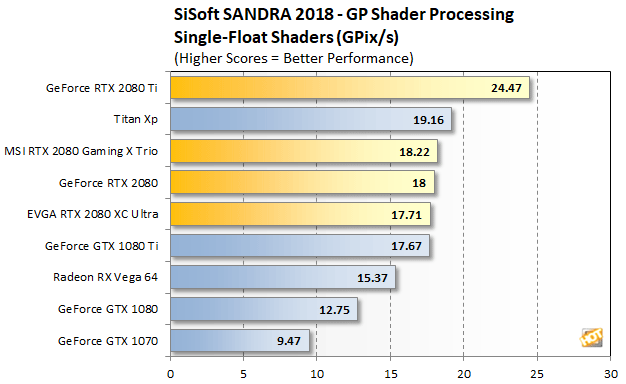

Consider the first chart:


We see that the RTX 2080 Ti leads this synthetic test, followed by the Titan XP, RTX 2080, GTX 1080 Ti, RX Vega 64, GTX 1080, and GTX 1070.

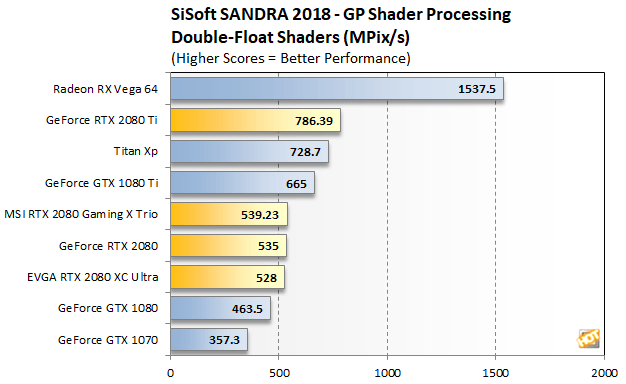

Consider the second chart:

We see that in the second synthetic test the RX Vega 64 leads by a large margin, followed by the RTX 2080 Ti, Titan XP, GTX 1080 Ti, RTX 2080, GTX 1080, and GTX 1070.

So what, you ask? The point is that in the first chart the 2080 Ti is in the lead, while in the second the RX Vega 64 simply crushes its competitors. This means that on the 2080 Ti non-graphical computation has been cut to a minimum to save die area, whereas the RX Vega 64 devotes a large share of its chip to non-graphical computation. The RX Vega 64 is a server card that was thrown onto the gaming market. I think there is no longer any need to explain why the RX Vega 64 overclocks so poorly, why its TDP is so high and its die so large, or why it performs so modestly. I believe that when AMD released this card, the company was well aware of what was happening and deliberately sacrificed the consumer card market in favor of the server market and professional computing.

I realize that my argument about the RX Vega 64 is not airtight, and yet:

- AMD has always led in compute, yet here, in single-precision calculations, we see a lag, and a significant one: Vega lands between the 1080 Ti and the 1080, even though the RX Vega 64 die is 3% larger than the 1080 Ti's.

- RX Vega 64 was in development for a very long time and is not simply an enlarged core from previous GPUs, yet it lags badly in energy efficiency. That means the resources went into something other than optimization. Hence my conclusion: both the developers' effort and the chip's transistor budget were invested in these 64-bit (double-precision) calculations.

- And now the ironclad argument. Fury X has 8.9 billion transistors; Vega 64 has 12.5 billion. Fury X has 4096 unified shader processors; Vega 64 has ... 4096! Where did the extra 3.6 billion transistors go, if not into non-graphical computation? AMD had the chance to simply port Fury X to the 14 nm process, with or without HBM (preferably without), but instead, like a gambler going all in, they poured the entire transistor budget into compute.
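The arithmetic behind the bullets above can be checked in a few lines. This is a minimal Python sketch: the transistor and shader counts come from the article, while the die areas (486 mm² for Vega 10 and 471 mm² for the GTX 1080 Ti's GP102) are publicly listed figures that the article itself does not state.

```python
# Transistor counts (billions) and shader counts are the figures quoted in the article.
# Die areas (mm^2) are publicly listed values added for illustration, not from the article.
FURY_X = {"transistors_bn": 8.9, "shaders": 4096}
VEGA_64 = {"transistors_bn": 12.5, "shaders": 4096, "die_mm2": 486.0}
GTX_1080_TI = {"die_mm2": 471.0}


def extra_transistors_bn(old: dict, new: dict) -> float:
    """Transistors added between two chips, in billions (rounded to 0.1)."""
    return round(new["transistors_bn"] - old["transistors_bn"], 1)


def transistors_per_shader_m(chip: dict) -> float:
    """Millions of transistors per unified shader processor."""
    return chip["transistors_bn"] * 1000 / chip["shaders"]


def relative_die_size_pct(a: dict, b: dict) -> float:
    """How much larger die `a` is than die `b`, in percent."""
    return (a["die_mm2"] / b["die_mm2"] - 1) * 100


print(extra_transistors_bn(FURY_X, VEGA_64))                     # 3.6
print(round(transistors_per_shader_m(FURY_X), 2))                # 2.17
print(round(transistors_per_shader_m(VEGA_64), 2))               # 3.05
print(round(relative_die_size_pct(VEGA_64, GTX_1080_TI), 1))     # 3.2
```

With the same shader count, Vega 64 spends roughly 40% more transistors per shader than Fury X, which is the gap the author attributes to non-graphical computation.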

In fact, things are even worse (this is my opinion, and I do not impose it on anyone): the entire line of AMD cards, starting with the HD 7000 series and GCN, is server-oriented. That is why, back when many journalists were enthusiastically praising the GCN architecture, I was already writing that it marked the sunset of AMD's graphics division, and I turned out to be right. The RX Vega 64 is the cherry on top of this madness and, for me, the last straw: I was very disappointed in the company's actions.

Further proof comes from another chart:

There is now a lot of talk that NVIDIA is bad, that prices are high, that there is no competition. But who is to blame for the lack of competition?

There is also a lot of talk that AMD invested too little in R&D, and that once AMD moves to 7 nm it will show everyone... I state with full responsibility: this is all nonsense. The current situation in the consumer market has nothing to do with AMD's R&D spending. Vega had enough funding and was developed carefully for a very long time, and the result was a complete flop. As for 7 nm: suppose AMD moves to 7 nm with this same approach. Then what? Can't NVIDIA move to 7 nm too? Everything will simply repeat itself. So 7 nm gives nothing without a change in philosophy.

Can AMD make a proper gaming card right now? Yes: it may be enough to strip as much non-graphical computation out of GCN as possible, and a decent card will result. It may not be able to compete fully with the GTX line, but it will be a good card, although this recipe completely contradicts AMD's philosophy. Moreover, if AMD had taken the HD 5000 or HD 6000 line and added DX12 support, it would also have become a leader. According to my calculations, VLIW4 is roughly 30% more efficient than GCN per unit of die area times clock frequency. There is nothing surprising about that, because a superscalar architecture always loses out on vector efficiency. The reason VLIW was abandoned is simple: it is somewhat worse at non-graphical computation, so it was dropped and replaced with GCN, a Bulldozer among GPU architectures. AMD is interested in the lucrative server market, not in poor gamers who cannot pay 50,000 rubles for a video card.
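The efficiency metric in the paragraph above (performance per die area times clock frequency) can be written out as a short calculation. This is a minimal sketch, assuming "performance" means any consistent benchmark score; the chip values below are hypothetical placeholders chosen only to show how the normalization works, not the author's data or real measurements.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    perf: float       # benchmark score, any consistent unit
    die_mm2: float    # die area in mm^2
    clock_ghz: float  # core clock in GHz


def efficiency(gpu: Gpu) -> float:
    """Performance per (area x frequency), the normalization described in the text."""
    return gpu.perf / (gpu.die_mm2 * gpu.clock_ghz)


def advantage_pct(a: Gpu, b: Gpu) -> float:
    """How much more efficient chip `a` is than chip `b`, in percent."""
    return (efficiency(a) / efficiency(b) - 1) * 100


# Hypothetical placeholder chips, NOT measurements: a larger, slower-clocked chip
# can still come out ahead once area and clock are normalized away.
vliw4 = Gpu("hypothetical VLIW4 chip", perf=117.0, die_mm2=180.0, clock_ghz=0.5)
gcn = Gpu("hypothetical GCN chip", perf=100.0, die_mm2=100.0, clock_ghz=1.0)

print(f"{advantage_pct(vliw4, gcn):.0f}%")  # 30%
```

Dividing by both area and clock lets chips built on different processes and clocked differently be compared on architecture alone, which is what the author's 30% figure is meant to capture.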

Having dealt with the efficiency of the architectures and the reasons for the current situation in the video card market, let's move on to the announcement of the 20X0 cards, which some people on the forums have buried under tons of mud. The complaints mostly revolved around high prices and small performance gains over the 10X0 series. The "unnecessary" ray tracing also got its share, yet everyone is strangely silent about DLSS.

The reason for the small performance gain is trivial: roughly 1/4 of the die is occupied by RT cores and about 1/4 by tensor cores, leaving only about half for the graphics pipeline itself. Hence the high cost: the die turned out very large, almost the size of a Titan's.
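Taking the author's rough fractions at face value, the area left for conventional graphics can be estimated directly. This is a minimal sketch: the 1/4, 1/4, 1/2 split comes from the article, while the die areas (754 mm² for TU102 in the RTX 2080 Ti, 545 mm² for TU104 in the RTX 2080) are publicly listed figures that the article does not state.

```python
# The author's rough split of a Turing die (fractions taken from the article).
SPLIT = {"rt_cores": 0.25, "tensor_cores": 0.25, "graphics": 0.50}


def area_budget(die_mm2: float, split: dict[str, float]) -> dict[str, float]:
    """Distribute a die's area (mm^2) according to fractional shares."""
    if abs(sum(split.values()) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    return {part: die_mm2 * frac for part, frac in split.items()}


# Publicly listed die areas in mm^2, added for illustration (not from the article).
DIES = {"TU102 (RTX 2080 Ti)": 754.0, "TU104 (RTX 2080)": 545.0}

for chip, die in DIES.items():
    budget = area_budget(die, SPLIT)
    print(chip, {part: round(mm2, 1) for part, mm2 in budget.items()})
```

Under this split, a very large die leaves only about half its area for traditional rasterization work, which is the author's explanation for both the high price and the modest gain over the 10X0 series.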

It is also worth adding that, in terms of density, the 12 nm process is practically the same as 16 nm. In some ways the RTX announcement strongly resembles AMD's launch of the HD 6000 series: maximum optimization and a modest performance gain from a modest increase in compute resources.

As for ray tracing, in my opinion we should not expect 4K at 60 fps for another two generations. According to a number of reports, with ray-traced shadows you can play at no more than 60 fps at 1080p, and with shadows plus reflections at about 30 fps on maximum settings. The point is that ray tracing is largely computed on the same cores as the shaders, and until the transistor count grows significantly or a dedicated hardware unit appears, the performance situation will not change, although there is hope for optimizations such as photon maps and the like. Contrary to claims that it is unnecessary, I think ray tracing is at least as big a step as the one from DX8 to DX9, if not bigger.
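The "two generations" estimate above can be reproduced with a back-of-the-envelope calculation. This is a minimal sketch under two stated assumptions that are not in the article: frame cost scales linearly with pixel count (same rays per pixel), and each GPU generation brings a fixed 2× speedup in ray-tracing throughput. The 60 fps and 30 fps at 1080p starting points are the figures quoted in the text.

```python
def pixels(width: int, height: int) -> int:
    return width * height


def required_speedup(fps_now: float, res_now: tuple[int, int],
                     fps_target: float, res_target: tuple[int, int]) -> float:
    """Speedup needed, assuming frame cost scales linearly with pixel count."""
    pixel_ratio = pixels(*res_target) / pixels(*res_now)
    return (fps_target / fps_now) * pixel_ratio


def generations_needed(speedup: float, per_generation: float = 2.0) -> int:
    """Count generations of a fixed per-generation gain until `speedup` is reached."""
    gens, capability = 0, 1.0
    while capability < speedup:
        capability *= per_generation
        gens += 1
    return gens


FULL_HD, UHD_4K = (1920, 1080), (3840, 2160)

shadows = required_speedup(60, FULL_HD, 60, UHD_4K)              # 4K has 4x the pixels
shadows_reflections = required_speedup(30, FULL_HD, 60, UHD_4K)  # plus 2x the frame rate

print(shadows, generations_needed(shadows))                          # 4.0 2
print(shadows_reflections, generations_needed(shadows_reflections))  # 8.0 3
```

Under these assumptions, shadows alone need a 4× speedup (two generations at 2× each), and shadows plus reflections need 8× (three generations), which is consistent with the author's "at least two generations".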

Why is everyone silent about DLSS?

As you can see, enabling DLSS lets the new cards simply crush the previous generation. The 2080 even becomes quite playable in 4K.

What is DLSS? In a sense, it is hardware anti-aliasing: the anti-aliasing is done in a separate block, with little or no involvement of the GPU's shader cores. That is, the GPU runs at about the same speed as it would with anti-aliasing turned off. Compared with conventional anti-aliasing the difference is large, but with anti-aliasing disabled the gap shrinks back to the difference in raw performance.
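The claim that offloaded anti-aliasing leaves the frame rate close to the no-AA case can be illustrated with a toy frame-time model. This is a minimal sketch of the author's description only, not of what DLSS actually computes; it assumes the separate block works in parallel with rendering (pipelined across frames), and the millisecond timings are hypothetical placeholders.

```python
def fps(frame_ms: float) -> float:
    """Frames per second for a given frame time in milliseconds."""
    return 1000.0 / frame_ms


def fps_with_shader_aa(render_ms: float, aa_ms: float) -> float:
    """AA runs on the same shader cores, so its cost adds to every frame."""
    return fps(render_ms + aa_ms)


def fps_with_offloaded_aa(render_ms: float, aa_ms: float) -> float:
    """AA runs on a separate unit in parallel (pipelined), so throughput is
    limited only by the slower of the two stages."""
    return fps(max(render_ms, aa_ms))


# Hypothetical timings, not measurements.
render_ms, aa_ms = 20.0, 5.0

print(fps_with_shader_aa(render_ms, aa_ms))     # 40.0
print(fps_with_offloaded_aa(render_ms, aa_ms))  # 50.0
print(fps(render_ms))                           # 50.0 (no AA at all)
```

As long as the separate block is faster than the shader cores' rendering work, the offloaded case matches the no-AA frame rate, which is the effect the author describes.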

DLSS has two drawbacks. First, it requires support from the game (at least as far as we can tell now) and cannot simply be switched on for any title, so the average performance of the 20X0 series will depend on how many games NVIDIA brings DLSS to; this is a big drawback. Second, anti-aliasing could have been implemented with fewer resources in a dedicated hardware block, which would be more compact and would not require support from games. Why did NVIDIA implement hardware anti-aliasing on the less efficient tensor cores? Because NVIDIA wants to sell these cards to both professionals and gamers, and a universal solution is almost always less efficient than a specialized one.

Conclusions.

Is the 20X0 launch a complete disaster, as forum "analysts" shout? No, but a great deal will depend on how many games, including those already released, get DLSS and how effective it turns out to be on average.

Second, it would be very nice if ray tracing could be combined with tensor-core super-resolution from 1080p to 4K. (NVIDIA has already demonstrated super-resolution on tensor cores; it remains to be seen whether it is compatible with ray tracing.)

I would also add that there is no competition, and there will be none at least until AMD releases a new architecture; what kind of architecture that will be is still a big question.

Should you buy a cheap 10X0 card? It depends on whether you believe in the new technologies. If not, buy without hesitation; but if, say, NVIDIA brings DLSS to many games, you may end up regretting that you relied on the "raw" power of the 10X0.

Source: https://habr.com/ru/post/424667/
