Let's process sound in Go

Disclaimer: I do not cover algorithms or APIs for audio processing and speech recognition here. This article is about the problems of working with audio and how to solve them with Go.

phono is an application framework for working with sound. Its main purpose is to build a pipeline out of different technologies that processes sound the way you need.

Why a pipeline, why out of different technologies, and why yet another framework? Let's figure it out.

Where does the sound come from?

By 2018, sound had become a standard way for people to interact with technology. Most IT giants have built their own voice assistant or are building one right now. Voice control is already part of most operating systems, and voice messaging is a typical feature of any messenger. Around the world, about a thousand startups work on natural language processing and about two hundred on speech recognition.

The story with music is similar. It plays from any device, and sound recording is available to anyone who has a computer. Hundreds of companies and thousands of enthusiasts around the world develop music software.

Common tasks

If you have ever had to work with sound, the following requirements should sound familiar:

- Audio must be obtained from a file, device, network, etc.

- Audio needs to be processed: add effects, transcode, analyze, etc.

- Audio must be transferred to a file, device, network, etc.

- Data is transmitted in small buffers.

The result is an ordinary pipeline: a data stream that passes through several processing stages.

Solutions

For clarity, let's take a real-life problem. Say you need to convert voice to text:

- Record audio from a device

- Remove noise

- Equalize

- Pass the signal to a speech recognition API

Like any other problem, this one has several solutions.

Head-on

Only for hardcore programmers who enjoy reinventing the wheel. You record sound directly through the sound card driver, then write your own smart noise suppressor and multiband equalizer. This is very interesting, but you can forget about your original task for a few months.

Long and very difficult.

The normal way

The alternative is to use existing APIs. You can record audio using ASIO, CoreAudio, PortAudio, ALSA and others. For processing, there are also several plugin formats: AAX, VST2, VST3, AU.

A rich choice does not mean that you can use everything at once. The following restrictions usually apply:

- Operating system. Not all APIs are available on all operating systems. For example, AU is a native OS X technology and is only available there.

- Programming language. Most audio libraries are written in C or C++. In 1996, Steinberg released the first version of the VST SDK, which is still the most popular plugin standard. More than 20 years later, you no longer have to write in C/C++: there are VST wrappers for Java, Python, C#, Rust and who knows what else. The language is still a limitation, but these days sound is processed even in JavaScript.

- Functionality. If the task is simple and clear, there is no need to write a new application. FFmpeg alone can do a lot.

In this situation, the complexity depends on your choice. In the worst case, you will have to deal with several libraries. And if you are really unlucky, they will come with complex abstractions and completely different interfaces.

What is the result?

You have to choose between very complex and complex:

- either deal with several low-level APIs and reinvent the wheel

- or deal with several APIs and try to make them work together

No matter which method you choose, the task always comes down to a pipeline. The technologies may vary, but the essence is the same. The problem is that, once again, instead of solving the real problem you have to write your own homegrown pipeline.

But there is a way out.

phono

phono is designed to solve the common problem: to "receive, process and transmit" sound. For this, it uses the pipeline as the most natural abstraction. The official Go blog has an article that describes the pipeline pattern. The main idea is that there are several data processing stages that run independently of each other and exchange data through channels. That is exactly what is needed.

Why Go?

First, most audio programs and libraries are written in C, and Go is often mentioned as its successor. In addition, there is cgo and quite a lot of bindings for existing audio libraries. You can just take them and use them.

Secondly, in my personal opinion, Go is a good language. I will not go deep, but I will note its concurrency. Channels and goroutines greatly simplify the implementation of the pipeline.
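To make the pattern concrete, here is a minimal sketch of such a pipeline in plain Go. It does not use phono; the stage names `pump`, `gain` and `sink` and the choice of `[]float64` buffers are assumptions made only for illustration.

```go
package main

import "fmt"

// pump is the first stage: it emits samples as buffers of at most size elements.
func pump(samples []float64, size int) <-chan []float64 {
	out := make(chan []float64)
	go func() {
		defer close(out)
		for start := 0; start < len(samples); start += size {
			end := start + size
			if end > len(samples) {
				end = len(samples)
			}
			out <- samples[start:end]
		}
	}()
	return out
}

// gain is a processing stage: it scales every sample by factor.
func gain(in <-chan []float64, factor float64) <-chan []float64 {
	out := make(chan []float64)
	go func() {
		defer close(out)
		for buf := range in {
			scaled := make([]float64, len(buf))
			for i, s := range buf {
				scaled[i] = s * factor
			}
			out <- scaled
		}
	}()
	return out
}

// sink is the last stage: it collects all buffers into one slice.
func sink(in <-chan []float64) []float64 {
	var result []float64
	for buf := range in {
		result = append(result, buf...)
	}
	return result
}

func main() {
	samples := []float64{0.1, 0.2, 0.3, 0.4, 0.5}
	fmt.Println(sink(gain(pump(samples, 2), 2)))
}
```

Each stage runs in its own goroutine and knows nothing about its neighbours except the channel between them, so stages can be swapped or added without touching the rest.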

Abstractions

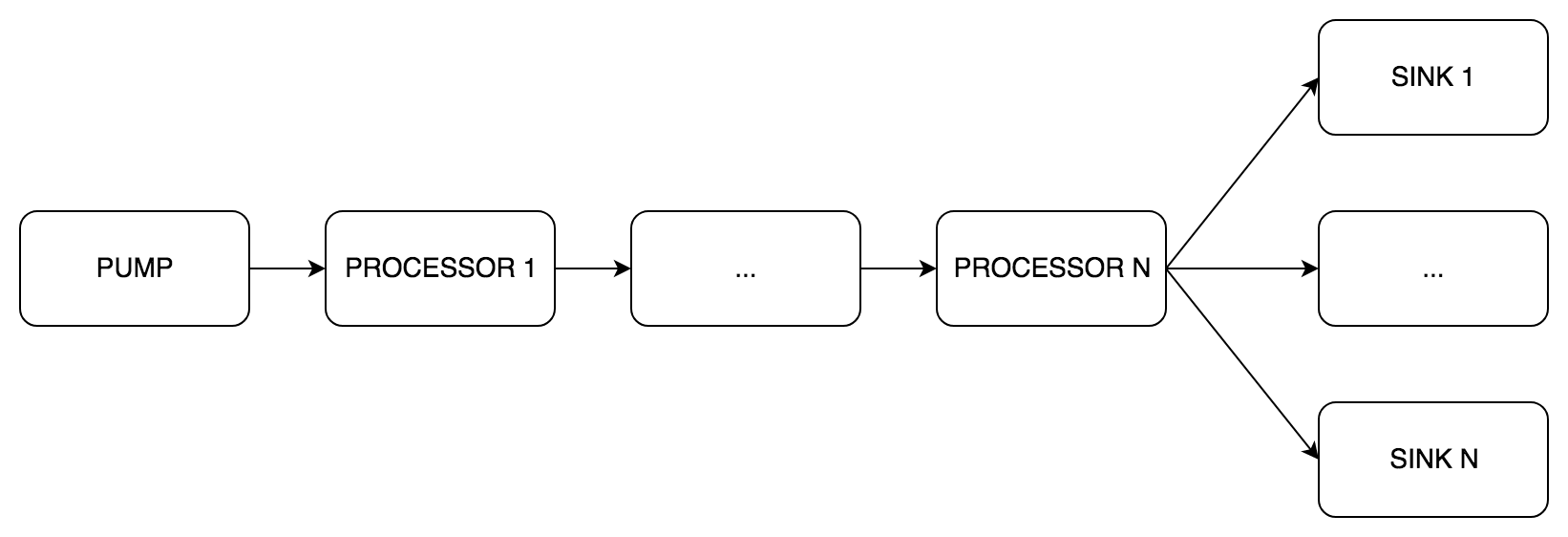

The heart of phono is the pipe.Pipe type. It is what implements the pipeline. As in the example from the Go blog, there are three types of stages:

- pipe.Pump: receives sound, output channels only

- pipe.Processor: processes sound, input and output channels

- pipe.Sink: transmits sound, input channels only

Inside pipe.Pipe, data is passed in buffers. The rules for building a pipeline:

- One pipe.Pump

- Several pipe.Processor, placed one after another

- One or more pipe.Sink, placed in parallel

- All pipe.Pipe components must have the same:

  - Buffer size (messages)

  - Sampling rate

  - Number of channels

The minimum configuration is one Pump and one Sink; the rest is optional.
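The rules above are simple enough to check mechanically. The following sketch shows one way to do that; `Params`, `Layout` and `Validate` are hypothetical names invented for this illustration and are not part of phono.

```go
package main

import (
	"errors"
	"fmt"
)

// Params are the properties every stage of one pipeline must share.
// Hypothetical type for illustration; not part of phono.
type Params struct {
	BufferSize  int
	SampleRate  int
	NumChannels int
}

// Layout describes a pipeline: one pump, any number of processors, and sinks.
type Layout struct {
	Pump       *Params
	Processors []Params
	Sinks      []Params
}

// Validate checks a layout against the rules: exactly one pump,
// at least one sink, and identical parameters for every stage.
func Validate(l Layout) error {
	if l.Pump == nil {
		return errors.New("pipeline needs exactly one pump")
	}
	if len(l.Sinks) == 0 {
		return errors.New("pipeline needs at least one sink")
	}
	stages := append(append([]Params{}, l.Processors...), l.Sinks...)
	for _, s := range stages {
		if s != *l.Pump {
			return fmt.Errorf("stage params %+v differ from pump params %+v", s, *l.Pump)
		}
	}
	return nil
}

func main() {
	p := Params{BufferSize: 512, SampleRate: 44100, NumChannels: 2}
	fmt.Println(Validate(Layout{Pump: &p, Sinks: []Params{p}}))
	fmt.Println(Validate(Layout{Pump: &p}))
}
```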

Let us examine a few examples.

Simple

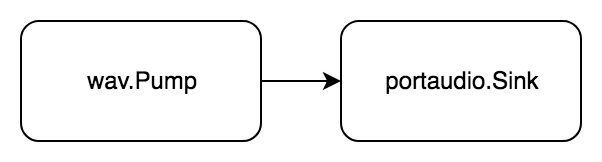

Task: play a wav file.

Let's reduce it to the "receive, process, transmit" form:

- Receive audio from a wav file

- Send audio to a portaudio device

Audio is read and immediately played.

```go
package example

import (
	"github.com/dudk/phono"
	"github.com/dudk/phono/pipe"
	"github.com/dudk/phono/portaudio"
	"github.com/dudk/phono/wav"
)

// Example:
//		Read .wav file
//		Play it with portaudio
func easy() {
	wavPath := "_testdata/sample1.wav"
	bufferSize := phono.BufferSize(512)

	// wav pump
	wavPump, err := wav.NewPump(
		wavPath,
		bufferSize,
	)
	check(err)

	// portaudio sink
	paSink := portaudio.NewSink(
		bufferSize,
		wavPump.WavSampleRate(),
		wavPump.WavNumChannels(),
	)

	// build pipe
	p := pipe.New(
		pipe.WithPump(wavPump),
		pipe.WithSinks(paSink),
	)
	defer p.Close()

	// run pipe
	err = p.Do(pipe.Run)
	check(err)
}
```

First, we create the elements of the future pipeline, wav.Pump and portaudio.Sink, and pass them to the pipe.New constructor. The function p.Do(pipe.actionFn) error starts the pipeline and waits for it to complete.

More difficult

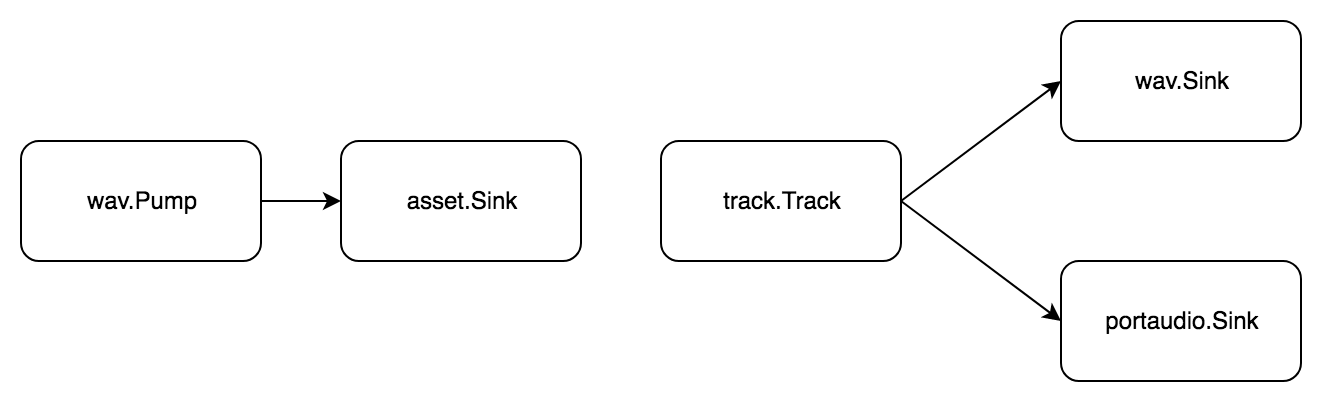

Task: split a wav file into samples, compose a track from them, save the result and play it back at the same time.

A track is a sequence of samples, and a sample is a small piece of audio. To cut audio, you must first load it into memory. To do this, use the asset.Asset type from the phono/asset package. We split the task into the standard steps:

- Receive audio from a wav file

- Send audio to memory

Now we cut the samples by hand, add them to the track and finish the task:

- Receive audio from the track

- Send audio to:

  - a wav file

  - a portaudio device

Again there is no processing stage, but there are two pipelines!

```go
package example

import (
	"github.com/dudk/phono"
	"github.com/dudk/phono/asset"
	"github.com/dudk/phono/pipe"
	"github.com/dudk/phono/portaudio"
	"github.com/dudk/phono/track"
	"github.com/dudk/phono/wav"
)

// Example:
//		Read .wav file
//		Split it into samples
//		Put samples into a track
//		Save track into .wav and play it with portaudio
func normal() {
	bufferSize := phono.BufferSize(512)
	inPath := "_testdata/sample1.wav"
	outPath := "_testdata/example4_out.wav"

	// wav pump
	wavPump, err := wav.NewPump(inPath, bufferSize)
	check(err)

	// asset sink
	asset := &asset.Asset{
		SampleRate: wavPump.WavSampleRate(),
	}

	// import pipe
	importAsset := pipe.New(
		pipe.WithPump(wavPump),
		pipe.WithSinks(asset),
	)
	defer importAsset.Close()
	err = importAsset.Do(pipe.Run)
	check(err)

	// track pump
	track := track.New(bufferSize, asset.NumChannels())

	// add samples to track
	track.AddFrame(198450, asset.Frame(0, 44100))
	track.AddFrame(66150, asset.Frame(44100, 44100))
	track.AddFrame(132300, asset.Frame(0, 44100))

	// wav sink
	wavSink, err := wav.NewSink(
		outPath,
		wavPump.WavSampleRate(),
		wavPump.WavNumChannels(),
		wavPump.WavBitDepth(),
		wavPump.WavAudioFormat(),
	)
	check(err)

	// portaudio sink
	paSink := portaudio.NewSink(
		bufferSize,
		wavPump.WavSampleRate(),
		wavPump.WavNumChannels(),
	)

	// final pipe
	p := pipe.New(
		pipe.WithPump(track),
		pipe.WithSinks(wavSink, paSink),
	)
	defer p.Close()
	err = p.Do(pipe.Run)
	check(err)
}
```

Compared to the previous example, there are two pipe.Pipe here. The first loads the data into memory so that samples can be cut. The second has two recipients at the end: wav.Sink and portaudio.Sink. With this scheme, the sound is written to a wav file and played at the same time.
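The numbers passed to AddFrame and Frame are easier to read as time. The sketch below assumes that they are counted in samples and that the file is sampled at 44100 Hz; neither is stated explicitly in the example, and `samplesAt` is a hypothetical helper, not part of phono.

```go
package main

import (
	"fmt"
	"math"
)

// samplesAt converts a time in seconds to a sample offset at the given sample rate.
func samplesAt(seconds float64, sampleRate int) int64 {
	return int64(math.Round(seconds * float64(sampleRate)))
}

func main() {
	const sampleRate = 44100 // assumption: the rate of the example wav file
	for _, sec := range []float64{1, 1.5, 3, 4.5} {
		fmt.Printf("%.1f s = %d samples\n", sec, samplesAt(sec, sampleRate))
	}
}
```

Under those assumptions, 44100 is one second of audio, and the values 198450, 66150 and 132300 correspond to 4.5 s, 1.5 s and 3 s.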

Even harder

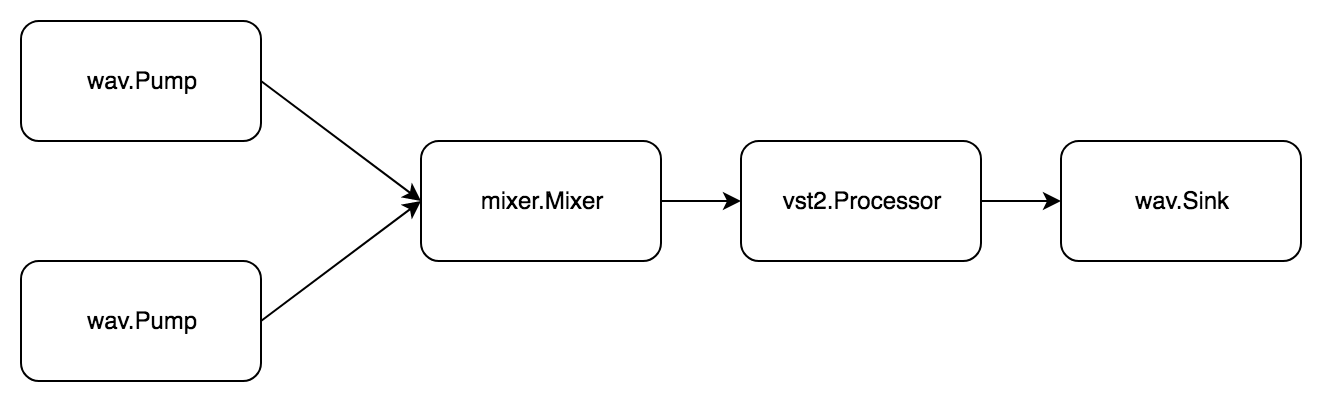

Task: read two wav files, mix them, process the result with a vst2 plugin and save it to a new wav file.

The phono/mixer package has a simple mixer, mixer.Mixer. You can send signals from multiple sources to it and get one mixed signal back. To make this possible, it implements both pipe.Pump and pipe.Sink.
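At its simplest, mixing means adding the signals sample by sample. Here is a minimal sketch of that idea; the `mix` function and the `[]float64` buffers are assumptions for illustration and do not reflect how mixer.Mixer is implemented.

```go
package main

import "fmt"

// mix sums equally sized buffers sample by sample.
// No balance or volume is applied, so the result can leave the [-1, 1] range.
func mix(inputs ...[]float64) ([]float64, error) {
	if len(inputs) == 0 {
		return nil, nil
	}
	out := make([]float64, len(inputs[0]))
	for _, in := range inputs {
		if len(in) != len(out) {
			return nil, fmt.Errorf("buffer size mismatch: %d != %d", len(in), len(out))
		}
		for i, s := range in {
			out[i] += s
		}
	}
	return out, nil
}

func main() {
	a := []float64{0.25, -0.5, 0.5}
	b := []float64{0.25, 0.25, -0.5}
	mixed, err := mix(a, b)
	fmt.Println(mixed, err)
}
```

The size check mirrors the pipeline rule that all components must share the same buffer size.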

Again the task consists of two subtasks. The first one looks like this:

- Receive audio from a wav file

- Send audio to the mixer

The second:

- Receive audio from the mixer

- Process audio with the plugin

- Send audio to a wav file

```go
package example

import (
	"github.com/dudk/phono"
	"github.com/dudk/phono/mixer"
	"github.com/dudk/phono/pipe"
	"github.com/dudk/phono/vst2"
	"github.com/dudk/phono/wav"
	vst2sdk "github.com/dudk/vst2"
)

// Example:
//		Read two .wav files
//		Mix them
//		Process with vst2
//		Save result into new .wav file
//
// NOTE: For the example, both wav files have the same characteristics,
// i.e. sample rate, bit depth and number of channels.
// In real life, conversion will be needed.
func hard() {
	bs := phono.BufferSize(512)
	inPath1 := "../_testdata/sample1.wav"
	inPath2 := "../_testdata/sample2.wav"
	outPath := "../_testdata/out/example5.wav"

	// wav pump 1
	wavPump1, err := wav.NewPump(inPath1, bs)
	check(err)

	// wav pump 2
	wavPump2, err := wav.NewPump(inPath2, bs)
	check(err)

	// mixer
	mixer := mixer.New(bs, wavPump1.WavNumChannels())

	// track 1
	track1 := pipe.New(
		pipe.WithPump(wavPump1),
		pipe.WithSinks(mixer),
	)
	defer track1.Close()

	// track 2
	track2 := pipe.New(
		pipe.WithPump(wavPump2),
		pipe.WithSinks(mixer),
	)
	defer track2.Close()

	// vst2 processor
	vst2path := "../_testdata/Krush.vst"
	vst2lib, err := vst2sdk.Open(vst2path)
	check(err)
	defer vst2lib.Close()

	vst2plugin, err := vst2lib.Open()
	check(err)
	defer vst2plugin.Close()

	vst2processor := vst2.NewProcessor(
		vst2plugin,
		bs,
		wavPump1.WavSampleRate(),
		wavPump1.WavNumChannels(),
	)

	// wav sink
	wavSink, err := wav.NewSink(
		outPath,
		wavPump1.WavSampleRate(),
		wavPump1.WavNumChannels(),
		wavPump1.WavBitDepth(),
		wavPump1.WavAudioFormat(),
	)
	check(err)

	// out pipe
	out := pipe.New(
		pipe.WithPump(mixer),
		pipe.WithProcessors(vst2processor),
		pipe.WithSinks(wavSink),
	)
	defer out.Close()

	// run all
	track1Done, err := track1.Begin(pipe.Run)
	check(err)
	track2Done, err := track2.Begin(pipe.Run)
	check(err)
	outDone, err := out.Begin(pipe.Run)
	check(err)

	// wait results
	err = track1.Wait(track1Done)
	check(err)
	err = track2.Wait(track2Done)
	check(err)
	err = out.Wait(outDone)
	check(err)
}
```

There are already three pipe.Pipe here, all connected to each other through the mixer. To run them, use the function p.Begin(pipe.actionFn) (pipe.State, error). Unlike p.Do(pipe.actionFn) error, it does not block the caller; it simply returns a state that you can then wait on with p.Wait(pipe.State) error.
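The difference between a blocking and a non-blocking start can be sketched in plain Go. The `begin` and `wait` functions below are hypothetical stand-ins written for this illustration; they only mimic the shape of the calls and say nothing about how phono implements them.

```go
package main

import (
	"errors"
	"fmt"
)

// errSinkFailed is a sample error used to show how failures are reported.
var errSinkFailed = errors.New("sink failed")

// begin starts job in its own goroutine and returns immediately.
// The returned channel delivers the job's error (or nil) exactly once.
func begin(job func() error) <-chan error {
	done := make(chan error, 1)
	go func() {
		done <- job()
	}()
	return done
}

// wait blocks until the job started by begin has finished.
func wait(done <-chan error) error {
	return <-done
}

func main() {
	first := begin(func() error { return nil })
	second := begin(func() error { return errSinkFailed })

	// Both jobs are already running; now collect the results.
	fmt.Println(wait(first))
	fmt.Println(wait(second))
}
```

Starting everything first and waiting afterwards matters when the jobs depend on each other, as the three pipes connected through the mixer do: all of them have to be running before any of them can finish.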

What's next?

I want phono to become the most convenient application framework for sound. If you have a problem that involves sound, you should not need to understand complex APIs or spend time studying standards. All you need is to build a pipeline from suitable elements and start it.

Over half a year, the following packages have been built:

- phono/wav: reads and writes wav files

- phono/vst2: incomplete bindings for the VST2 SDK; for now you can only open a plugin and call its methods, and not all structures are covered

- phono/mixer: mixer, sums N signals, without balance and volume

- phono/asset: buffers samples

- phono/track: sequential reading of samples (layered)

- phono/portaudio: signal playback, still experimental

In addition to this list, there is a constantly growing backlog of new ideas, including:

- Countdown

- Pipeline that can be changed on the fly

- HTTP pump / sink

- Automation of parameters

- Ramping processor

- Balance and volume in the mixer

- Real-time pump

- Synchronized pump for multiple tracks

- Full vst2

In the following articles I will analyze:

- the pipe.Pipe life cycle: because of its complex structure, its state is managed by a finite state machine

- how to write your own pipeline stages

This is my first open-source project, so I will be grateful for any help and recommendations. Contributions are welcome.


Source: https://habr.com/ru/post/424623/
