Fast JPEG resizing on a video card

Resizing JPEG images (pictures compressed with the JPEG algorithm) is a common task in image-processing applications. A JPEG cannot be resized directly: the compressed data must first be decoded. There is nothing new or complicated about this, but when it has to be done many millions of times a day, the performance of the solution becomes critically important.

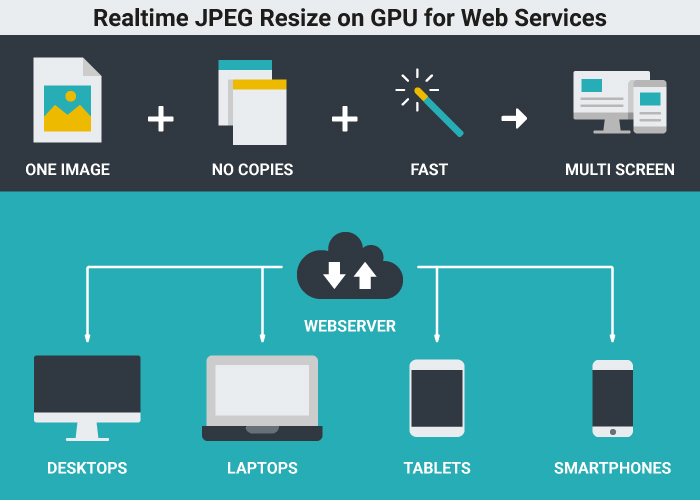

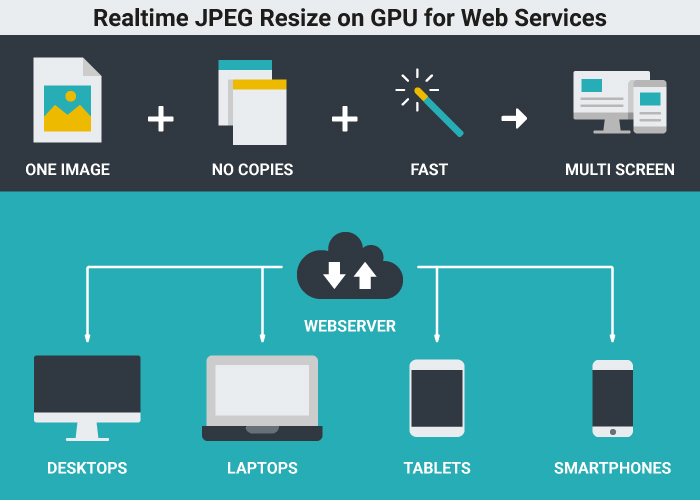

This task often arises in image-hosting services, since most cameras and phones save photos as JPEG. Every day the photo archives of major web services (social networks, forums, photo hosting sites and many others) receive a huge number of such images, so how to store them is an important question. To reduce outgoing traffic and improve response time, many web services store several dozen copies of each image at different resolutions. Responses are fast, but the copies take up a lot of space. This is the main problem, although the approach has other drawbacks as well.

The idea is not to store many variants of the original image at different resolutions on the server, but to create an image with the requested dimensions on the fly, as quickly as possible, from a previously prepared original. The image at the required resolution is generated in real time and sent to the user immediately. It is important that its resolution exactly matches what the user's device needs, so that the device does not have to resize it for the screen.


Using formats other than JPEG as the basis for such an image repository does not seem justified. There are standard, widely used formats that give better compression at similar quality (JPEG 2000, WebP), but encoding and decoding them is much slower than JPEG. It therefore makes sense to store the original photos as JPEG and scale them in real time when a user request arrives.

Besides JPEGs, most sites also host PNG and GIF images, but their share is usually small and photos are rarely stored in these formats. In most cases, therefore, these formats do not noticeably affect the task at hand.

Description of the on-the-fly resize algorithm

So, the input data are JPEG files. To achieve fast decoding (on both the CPU and the GPU), the compressed images must contain restart markers. These markers are defined in the JPEG standard; some codecs make use of them, while others simply skip over them. If a JPEG has no restart markers, they can be added in advance with the jpegtran utility. Adding markers does not change the image, although the file becomes slightly larger. The resulting processing scheme is as follows:

- Get the image data from CPU memory

- If the file has an embedded color profile, extract it from the metadata and save it

- Copy the image to the video card

- Decode the JPEG

- Resize (downscale) with the Lanczos algorithm

- Apply sharpening

- Encode the JPEG

- Copy the image back to the host

- Add the original color profile to the resulting file
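The first and last steps of this scheme, saving the color profile and attaching it to the result, run on the CPU and only need the JPEG marker structure. Below is a minimal sketch, not Fastvideo SDK code: it assumes the profile is stored in the usual way for JPEG, as one or more APP2 segments tagged `ICC_PROFILE`, and the helper names `extract_icc` and `insert_icc` are hypothetical.

```python
import struct

ICC_TAG = b"ICC_PROFILE\x00"   # 12-byte identifier of an ICC chunk in APP2
MAX_CHUNK = 65519              # 65535 - 2 (length) - 12 (tag) - 2 (seq, count)


def _segments(jpeg: bytes):
    """Yield (marker, payload_start, segment_end) for header segments, up to and including SOS."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI)")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("corrupt marker stream")
        marker = jpeg[pos + 1]
        if marker == 0xFF:                      # fill byte before a marker
            pos += 1
            continue
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        yield marker, pos + 4, pos + 2 + length
        if marker == 0xDA:                      # SOS: entropy-coded data follows
            return
        pos += 2 + length


def extract_icc(jpeg: bytes):
    """Reassemble the ICC profile from APP2 chunks; None if the file has none."""
    chunks = {}
    for marker, start, end in _segments(jpeg):
        payload = jpeg[start:end]
        if marker == 0xE2 and payload.startswith(ICC_TAG):
            chunks[payload[12]] = payload[14:]  # byte 12: 1-based chunk number
    if not chunks:
        return None
    return b"".join(chunks[n] for n in sorted(chunks))


def insert_icc(jpeg: bytes, profile: bytes) -> bytes:
    """Return a copy of the JPEG with the profile stored in APP2 chunks."""
    if not profile:
        return jpeg
    insert_at = 2
    for marker, _start, end in _segments(jpeg):
        if marker not in (0xE0, 0xE1):          # keep JFIF/EXIF segments first
            break
        insert_at = end
    parts = [profile[i:i + MAX_CHUNK] for i in range(0, len(profile), MAX_CHUNK)]
    if len(parts) > 255:
        raise ValueError("profile too large for APP2 chunking")
    segs = b""
    for seq, part in enumerate(parts, start=1):
        body = ICC_TAG + bytes([seq, len(parts)]) + part
        segs += b"\xff\xe2" + struct.pack(">H", len(body) + 2) + body
    return jpeg[:insert_at] + segs + jpeg[insert_at:]
```

Inserting after any leading APP0/APP1 segment keeps the JFIF header first, which many readers expect. The sketch assumes the freshly encoded output carries no profile of its own; otherwise its APP2 chunks should be dropped first.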

A more accurate variant applies the inverse gamma to each pixel component before resizing, so that the resize is done in linear space, and then reapplies the gamma after sharpening. The visible difference for the user is small but real, and the computational cost of this modification is minimal: it only requires inserting the inverse and forward gamma transforms into the overall processing scheme.
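A minimal sketch of the linear-light variant for one row of 8-bit samples follows. Assumptions on our side: the "gamma" is the standard sRGB transfer curve, the resampler is Lanczos-3 with the kernel stretched by the scale factor when downscaling (the usual way to make Lanczos act as a low-pass filter), borders are handled by clamping, and sharpening is left out. `resize_row` is a hypothetical name; a real implementation would apply the same 1D pass to rows and then columns on the GPU.

```python
import math


def srgb_to_linear(v: float) -> float:
    """8-bit sRGB code value -> linear light in [0, 1] (assumed sRGB curve)."""
    c = v / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(x: float) -> int:
    """Linear light -> 8-bit sRGB code value, clamped to [0, 255]."""
    x = min(max(x, 0.0), 1.0)
    c = 12.92 * x if x <= 0.0031308 else 1.055 * x ** (1 / 2.4) - 0.055
    return round(c * 255.0)


def lanczos(x: float, a: int = 3) -> float:
    """Lanczos-a kernel: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def resize_row(row, out_len, a=3, linear=True):
    """Resample a row of 8-bit samples to out_len samples with Lanczos-a.
    linear=True resizes in linear light; linear=False works on gamma-encoded values."""
    src = [srgb_to_linear(v) if linear else v / 255.0 for v in row]
    scale = len(row) / out_len            # > 1 when downscaling
    support = max(scale, 1.0)             # stretch the kernel to low-pass when shrinking
    out = []
    for i in range(out_len):
        center = (i + 0.5) * scale - 0.5  # output pixel center in source coordinates
        lo = math.floor(center - a * support) + 1
        hi = math.floor(center + a * support)
        acc = wsum = 0.0
        for j in range(lo, hi + 1):
            w = lanczos((j - center) / support, a)
            acc += w * src[min(max(j, 0), len(row) - 1)]  # clamp at the borders
            wsum += w
        y = acc / wsum                    # normalize so the weights sum to 1
        out.append(linear_to_srgb(y) if linear else round(min(max(y, 0.0), 1.0) * 255.0))
    return out
```

For a row of alternating black and white pixels halved in size, the gamma-space result is about 128, while the linear-light result is about 188, the sRGB value that matches the average light of the original pattern. That gap is what the extra gamma step buys on fine detail.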

Another possible variant decodes the JPEGs on a multi-core CPU with the libjpeg-turbo library. Each image is decoded in a separate CPU thread, and all other steps are performed on the video card. With many CPU cores the throughput can be even higher, but latency suffers badly. If the latency of decoding a JPEG on a single CPU core is acceptable, this option can be very fast, especially when the source JPEGs are small. As the source resolution grows, so does the single-thread decoding time, so this option is probably suitable only for small resolutions.
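A back-of-the-envelope model shows the trade-off. Suppose, hypothetically, that decoding one JPEG on one CPU core takes `t_dec_ms` and the remaining GPU stages take `t_gpu_ms`. With `cores` cores each decoding its own image, the CPU side delivers `cores / t_dec_ms` images per millisecond, yet every image still waits for a full single-core decode. The function and the numbers below are illustrations, not measurements.

```python
def cpu_decode_model(t_dec_ms: float, t_gpu_ms: float, cores: int):
    """Steady-state throughput (images/s) and per-image latency (ms) when each
    image is decoded on its own CPU core and then finished on the GPU.
    Ignores transfers, queuing and contention (a deliberate simplification)."""
    cpu_rate = cores * 1000.0 / t_dec_ms   # all cores decoding in parallel
    gpu_rate = 1000.0 / t_gpu_ms           # GPU stages treated as sequential
    throughput = min(cpu_rate, gpu_rate)   # the slower side limits the pipeline
    latency = t_dec_ms + t_gpu_ms          # each image still needs a full single-core decode
    return throughput, latency
```

For example, with a hypothetical 8 ms decode and 0.5 ms of GPU work, 16 cores keep pace with the GPU at 2000 images/s, but every image takes 8.5 ms, several times the 1.2 ms per frame measured below for the all-GPU path.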

Basic requirements for the resize task on the web

- It is better not to keep dozens of copies of each image at different resolutions on the server, but to create the image at the correct resolution quickly, as soon as a request arrives. This is important for reducing storage size, since otherwise many copies of every image have to be stored.

- The task must be solved as quickly as possible. This is a matter of service quality, since it determines the response time to a user request.

- The image sent must be of high quality.

- The file sent should be as small as possible, and its resolution should exactly match the size of the window in which it is displayed. The following points matter here:

a) If the image size does not match the window size, the user's device (phone, tablet, laptop) performs a hardware resize after decoding, before showing the picture on screen. In OpenGL this hardware resize is done only with bilinear interpolation, which often produces moiré and other artifacts on images with fine detail.

b) Resizing on screen consumes additional energy on the device.

c) With a set of pre-scaled images, it is not always possible to get exactly the right size, so a higher-resolution picture has to be sent. The larger image means more traffic, which we would also like to avoid.
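Points a) and c) reduce to simple arithmetic. The sketch computes the exact aspect-preserving size that fits a window, and the width the client would receive from a fixed ladder of pre-scaled copies. The helper names and the ladder values are invented for illustration.

```python
def fit_to_window(src_w: int, src_h: int, win_w: int, win_h: int):
    """Largest size with the source aspect ratio that fits inside the window."""
    scale = min(win_w / src_w, win_h / src_h)
    return max(1, round(src_w * scale)), max(1, round(src_h * scale))


def from_ladder(target_w: int, ladder_widths):
    """Smallest pre-scaled width that is not narrower than the target
    (falls back to the largest copy if none is wide enough)."""
    wide_enough = [w for w in sorted(ladder_widths) if w >= target_w]
    return wide_enough[0] if wide_enough else max(ladder_widths)
```

For a 1920x1080 source shown in a 700x700 window, the exact size is 700x394; a hypothetical ladder of 320/640/800/1280-pixel widths forces the 800-pixel copy, about (800/700)^2 ≈ 1.31 times as many pixels to transfer and then downscale on the device.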

Description of the overall scheme

- We receive images from users in any format and at any resolution. The originals are stored in a separate database (if necessary).

- Offline, using ImageMagick or similar software, we save the color profile, convert the originals to a standard BMP or PPM format, resize them to 1K or 2K and compress them to JPEG, and then add restart markers at a given fixed interval with jpegtran.

- We build a database of these 1K or 2K images.

- When a user request arrives, we obtain information about the image and the size of the window in which it should be shown.

- We find the image in the database and pass it to the resizer.

- The resizer receives the image file, decodes, resizes, sharpens and encodes it, inserts the original color profile into the resulting JPEG, and then hands the picture over to the external program.

- Several streams can run on each video card, and several video cards can be installed in one computer; this is how performance is scaled.

- All this can be built on NVIDIA Tesla video cards (for example, P40 or V100), since NVIDIA GeForce cards are not designed for continuous long-term operation, and NVIDIA Quadro cards have many video outputs that are not needed here. The GPU memory requirements for this task are minimal.

- A cache for frequently requested files can also be allocated dynamically from the database of prepared images. It makes sense to decide which images to cache based on request statistics from the previous period.
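One straightforward way to pick the cached set from the previous period's statistics is to count requests per image in that period's log and keep the most popular images that fit a byte budget. This is only a sketch of the idea; the log format, `choose_cache` and the budget are assumptions, not part of the described system.

```python
from collections import Counter


def choose_cache(request_log, file_sizes, budget_bytes):
    """Pick the most requested image IDs from the previous period
    whose total file size fits into the cache budget (greedy by popularity)."""
    counts = Counter(request_log)              # requests per image ID
    chosen, used = [], 0
    for image_id, _hits in counts.most_common():
        size = file_sizes[image_id]
        if used + size <= budget_bytes:        # skip files that no longer fit
            chosen.append(image_id)
            used += size
    return chosen
```

Greedy selection by popularity is the simplest policy; weighting hits by file size would favor many small popular files instead.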

Program parameters

- The width and height of the new image. They can be arbitrary, and it is better to set them explicitly.

- JPEG chroma subsampling mode. There are three options, 4:2:0, 4:2:2 and 4:4:4, but 4:4:4 or 4:2:0 is usually used: 4:4:4 gives the highest quality, 4:2:0 the smallest file size. Subsampling is applied to the color-difference (chroma) components, which human vision perceives less sharply than brightness. Each subsampling mode has its own optimal restart-marker interval for maximum encoding or decoding speed.

- JPEG compression quality and subsampling mode used when building the image database.

- Sharpening is done in a 3x3 window; sigma (radius) is adjustable.

- JPEG compression quality and subsampling mode for encoding the final image. A quality of at least 90% is usually considered "visually lossless", i.e. an untrained viewer should not see JPEG artifacts under standard viewing conditions. A trained viewer is estimated to need 93-95%. The higher this value, the larger the file sent to the user and the longer the decoding and encoding time.
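The article does not say which sharpening filter is used beyond "3x3 window, adjustable sigma". A common choice that fits that description is unsharp masking with a 3x3 Gaussian blur, sketched below on a grayscale image stored as a list of rows. The filter choice, the `amount` parameter and the edge handling are our assumptions.

```python
import math


def gaussian3x3(sigma: float):
    """Normalized 3x3 Gaussian kernel for the given sigma."""
    g = [math.exp(-(d * d) / (2 * sigma * sigma)) for d in (-1, 0, 1)]
    k = [[a * b for b in g] for a in g]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def unsharp3x3(img, sigma=1.0, amount=1.0):
    """Sharpen an 8-bit grayscale image: out = img + amount * (img - blur(img))."""
    h, w = len(img), len(img[0])
    k = gaussian3x3(sigma)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            blur = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)   # replicate edge pixels
                    xx = min(max(x + dx, 0), w - 1)
                    blur += k[dy + 1][dx + 1] * img[yy][xx]
            v = img[y][x] + amount * (img[y][x] - blur)
            row.append(min(max(round(v), 0), 255))    # back to the 8-bit range
        out.append(row)
    return out
```

A larger sigma spreads the 3x3 weights more evenly and strengthens the effect; `amount` scales it independently.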

Important limitations

Restart markers. JPEG images can be decoded quickly on a video card only if they contain restart markers. These markers are defined in the official JPEG standard and are a standard parameter. Without restart markers, decoding of a picture cannot be parallelized on the video card, and the decoder will be very slow. Therefore we need a database of prepared images that contain these markers.
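Whether a file already meets this requirement can be checked before it goes into the database. A restart interval is declared by a DRI segment (marker 0xFFDD) before the scan, and the entropy-coded data then contain RST0-RST7 markers (0xFFD0-0xFFD7). The sketch below, with the hypothetical helper `restart_info`, reports both for a baseline JPEG; for progressive files it only looks at the first scan.

```python
import struct


def restart_info(jpeg: bytes):
    """Return (restart_interval_in_MCUs, number_of_RST_markers) for a baseline JPEG.
    restart_interval is 0 when no DRI segment is present."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI)")
    pos, interval = 2, 0
    # 1) Walk the header segments until the start of scan (SOS).
    while True:
        if pos + 4 > len(jpeg) or jpeg[pos] != 0xFF:
            raise ValueError("corrupt or truncated header")
        marker = jpeg[pos + 1]
        if marker == 0xFF:              # fill byte before a marker
            pos += 1
            continue
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if marker == 0xDD:              # DRI: 2-byte restart interval
            (interval,) = struct.unpack(">H", jpeg[pos + 4:pos + 6])
        pos += 2 + length
        if marker == 0xDA:              # SOS: entropy-coded data starts here
            break
    # 2) Count RSTn markers in the entropy-coded data.
    rst, i = 0, pos
    while i + 1 < len(jpeg):
        if jpeg[i] != 0xFF:
            i += 1
            continue
        nxt = jpeg[i + 1]
        if nxt == 0xFF:                 # fill byte, look at the next one
            i += 1
        elif nxt == 0x00:               # stuffed 0xFF data byte, not a marker
            i += 2
        elif 0xD0 <= nxt <= 0xD7:       # RST0..RST7
            rst += 1
            i += 2
        else:                           # EOI or another marker ends the scan
            break
    return interval, rst
```

Files that come back as `(0, 0)` can be rewritten losslessly with jpegtran, for example `jpegtran -restart 1 -copy all -outfile out.jpg in.jpg`, where `-restart N` emits a marker every N MCU rows (or every N MCU blocks when written as `NB`). The interval of one row is only an example; the best value depends on the subsampling mode.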

Fixed image codec. Decoding and encoding with the JPEG algorithm is by far the fastest option.

The images in the prepared database can have any resolution; we consider 1K and 2K (4K is also possible). Images can be enlarged as well as reduced during the resize.

Fast resize performance

We tested the fast resize application from the Fastvideo SDK on an NVIDIA Tesla V100 video card (Windows Server 2016, 64-bit, driver 24.21.13.9826) with the 24-bit images 1k_wild.ppm and 2k_wild.ppm at 1K and 2K resolution (1280x720 and 1920x1080). The tests were run with different numbers of streams on a single video card. One stream needs no more than 110 MB of GPU memory; 4 streams need no more than 440 MB.

First, the original image is compressed to JPEG at 90% quality with 4:2:0 or 4:4:4 subsampling. Then we decode it, downscale it by a factor of 2 in width and height, sharpen it, and encode it again at 90% quality in 4:2:0 or 4:4:4. The source data are in RAM, and the final image is placed there as well.

The runtime is measured from the start of loading the original image from RAM to the moment the processed image is saved to RAM. Program initialization and GPU memory allocation are not included in the measurements.
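This measurement rule can be mirrored in a small harness: one-time setup and a warm-up call are excluded, and only the in-memory input-to-output call is timed. `setup` and `process` are placeholders for a resizer's initialization and per-image call; this is our sketch, not the SDK's benchmarking code.

```python
import statistics
import time


def benchmark(setup, process, data: bytes, repeat: int = 200):
    """Return (mean_ms, min_ms) of process(ctx, data) over `repeat` timed calls.
    setup() and one warm-up call are excluded, mirroring the rule that
    initialization and GPU memory allocation are not measured."""
    ctx = setup()                        # initialization / allocation: not timed
    process(ctx, data)                   # warm-up call: not timed
    times_ms = []
    for _ in range(repeat):
        t0 = time.perf_counter()
        process(ctx, data)               # source bytes in RAM -> result in RAM
        times_ms.append((time.perf_counter() - t0) * 1000.0)
    return statistics.mean(times_ms), min(times_ms)
```

The single-stream frame rate then follows as `1000 / mean_ms`.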

Command line example for a 24-bit 1K image

PhotoHostingSample.exe -i 1k_wild.90.444.jpg -o 1k_wild.640.jpg -outputWidth 640 -q 90 -s 444 -sharp_after 0.95 -repeat 200

Benchmark for processing one 1K image in one stream

Decoding (including transfer to the video card): 0.70 ms

Resize by 2x (in width and height): 0.27 ms

Sharpening: 0.02 ms

JPEG encoding (including transfer from the video card): 0.20 ms

Total time per frame: 1.2 ms
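As a quick consistency check, the stage times add up to the total, and the reciprocal gives the single-stream frame rate this breakdown implies, in the same range as the one-stream 4:4:4 figure in the 1K table:

$$0.70 + 0.27 + 0.02 + 0.20 = 1.19\ \text{ms} \approx 1.2\ \text{ms}, \qquad \frac{1000\ \text{ms/s}}{1.19\ \text{ms}} \approx 840\ \text{frames/s}$$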

Performance for 1K

| # | Quality | Subsampling | Resize | Streams | Frame rate, Hz (4:4:4 / 4:2:0) |
|---|---|---|---|---|---|
| 1 | 90% | 4:4:4 / 4:2:0 | 2x | 1 | 868 / 682 |
| 2 | 90% | 4:4:4 / 4:2:0 | 2x | 2 | 1039 / 790 |
| 3 | 90% | 4:4:4 / 4:2:0 | 2x | 3 | 993 / 831 |
| 4 | 90% | 4:4:4 / 4:2:0 | 2x | 4 | 1003 / 740 |

Performance for 2K

| # | Quality | Subsampling | Resize | Streams | Frame rate, Hz (4:4:4 / 4:2:0) |
|---|---|---|---|---|---|
| 1 | 90% | 4:4:4 / 4:2:0 | 2x | 1 | 732 / 643 |
| 2 | 90% | 4:4:4 / 4:2:0 | 2x | 2 | 913 / 762 |
| 3 | 90% | 4:4:4 / 4:2:0 | 2x | 3 | 891 / 742 |
| 4 | 90% | 4:4:4 / 4:2:0 | 2x | 4 | 923 / 763 |

4:2:0 subsampling of the original image lowers processing speed, but makes the source and output files smaller. With 4:2:0 the degree of parallelism drops by a factor of 4, because a 16x16 block is now treated as one unit, so in this mode processing is slower than with 4:4:4.
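The factor of 4 follows from the MCU (minimum coded unit) size: an MCU covers 8x8 pixels with 4:4:4 and 16x16 pixels with 4:2:0, so the same frame contains four times fewer MCUs and, with a fixed restart interval, four times fewer independently decodable pieces. The helper names and the 16-MCU interval used in the check are illustrative assumptions.

```python
import math

# MCU size in pixels (width, height) for the usual subsampling modes.
MCU_SIZE = {"444": (8, 8), "422": (16, 8), "420": (16, 16)}


def mcu_grid(width: int, height: int, subsampling: str):
    """MCUs across, down and in total (partial MCUs at the edges count)."""
    mw, mh = MCU_SIZE[subsampling]
    cols, rows = math.ceil(width / mw), math.ceil(height / mh)
    return cols, rows, cols * rows


def restart_segments(total_mcus: int, interval_mcus: int) -> int:
    """Independently decodable pieces when a restart marker follows every interval_mcus MCUs."""
    return math.ceil(total_mcus / interval_mcus)
```

For the 1280x720 test frame this gives 14400 MCUs (900 restart intervals of 16 MCUs) in 4:4:4 versus 3600 MCUs (225 intervals) in 4:2:0.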

Performance is determined mainly by the JPEG decoding stage, since at this stage the image has its maximum resolution, and this stage is computationally more expensive than all the others.

Summary

The tests showed that on the NVIDIA Tesla V100, processing speed for 1K and 2K images peaks with 2-4 streams running simultaneously and ranges from 800 to 1000 frames per second per video card. 1K images are processed faster than 2K, and 4:2:0 images are always slower than 4:4:4. To get a definitive performance figure, all program parameters must be specified precisely and the program optimized for the specific video card model.

A latency on the order of one millisecond is a good result. As far as we know, such latency cannot be achieved for a similar resize task on the CPU (even without JPEG decoding and encoding), which is another strong argument for using video cards in high-performance image processing.

Handling one billion JPEGs per day at 1K or 2K resolution may require up to 16 NVIDIA Tesla V100 video cards. Some of our customers already use this solution, and others are testing it for their tasks.
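The order of magnitude follows from the per-card rates in the summary. Spread evenly over a day, one billion images means:

$$\frac{10^9}{86\,400\ \text{s}} \approx 11\,574\ \text{images/s}, \qquad \frac{11\,574}{1000} \approx 11.6, \qquad \frac{11\,574}{800} \approx 14.5\ \text{cards}$$

So 12 to 15 cards cover the average load at 800-1000 frames/s per card. Reading the remaining margin up to 16 as headroom for daily peaks is our interpretation, not a figure from the article.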

Resizing JPEGs on a video card is useful not only for web services. Many high-performance image processing applications need this functionality. For example, fast resizing is required in almost any pipeline that processes images from cameras before showing them on a monitor. The solution works on Windows and Linux on any NVIDIA video card: Tegra K1/X1/X2/Xavier, GeForce GT/GTX/RTX, Quadro, Tesla.

Advantages of fast resizing on the video card

- Significant reduction in storage size for source images

- Lower upfront infrastructure costs (hardware and software)

- Improved service quality due to low response time

- Reduced outgoing traffic

- Lower power consumption on user devices

- Reliability and speed of the solution, which has already been tested on huge data sets

- Shorter time to market for such applications on Linux and Windows

- Scalability of the solution, which can work both on a single video card and as part of a cluster

- Quick return on investment for such projects.

Who might be interested

The library for fast JPEG resizing can be used in high-load web services, large online stores, social networks, online photo management systems, e-commerce, and almost any large-enterprise management software.

Software developers can use this library, which provides latency on the order of a few milliseconds when resizing 1K, 2K and 4K JPEGs on the video card.

This approach may well be faster than NVIDIA DALI for fast JPEG decoding, resizing and image preparation during neural-network training for deep learning.

What else can you do

- In addition to resizing and sharpening, crop, rotation by 90/180/270 degrees, watermark overlay, and brightness and contrast control can be added to the existing algorithm.

- Optimizing the solution for NVIDIA Tesla P40 and V100 graphics cards.

- Additional optimization of JPEG decoder performance.

- Batch mode for decoding JPEGs on the video card.

Source: https://habr.com/ru/post/424575/
