MIT course "Computer Systems Security". Lecture 9: "Web Application Security", part 2

Massachusetts Institute of Technology. Lecture course # 6.858. "Security of computer systems". Nikolai Zeldovich, James Mykens. year 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: "Introduction: threat models" Part 1 / Part 2 / Part 3

Lecture 2: "Control of hacker attacks" Part 1 / Part 2 / Part 3

Lecture 3: "Buffer overflow: exploits and protection" Part 1 / Part 2 / Part 3

Lecture 4: "Separation of privileges" Part 1 / Part 2 / Part 3

Lecture 5: "Where Security Errors Come From" Part 1 / Part 2

Lecture 6: "Opportunities" Part 1 / Part 2 / Part 3

Lecture 7: "Sandbox Native Client" Part 1 / Part 2 / Part 3

Lecture 8: "Model of network security" Part 1 / Part 2 / Part 3

Lecture 9: "Web Application Security" Part 1 / Part 2 / Part 3

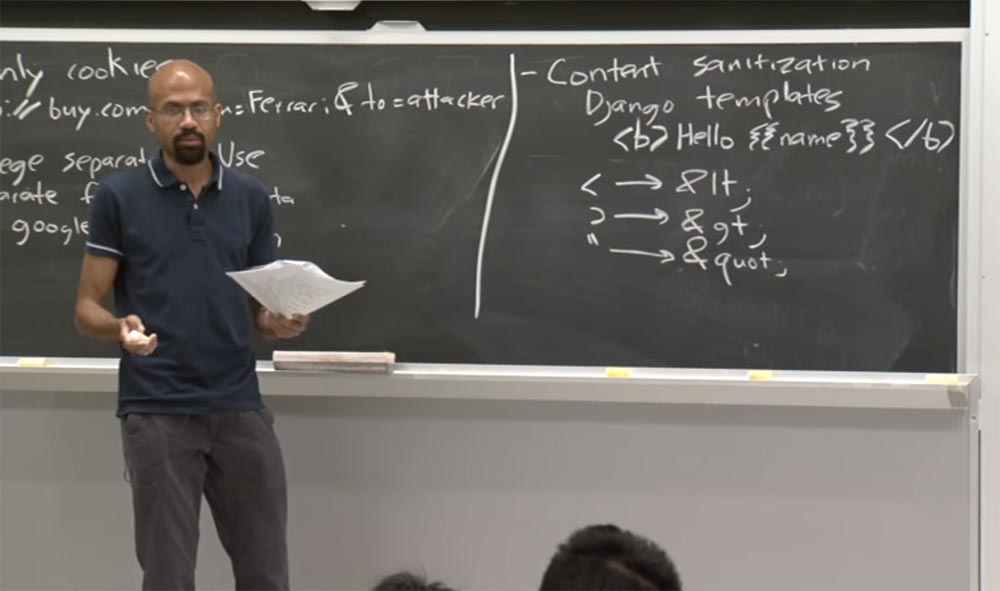

For example, Django will take these angle brackets, translate them into HTML form and remake the rest of the characters. That is, if the custom value of the name contains angle brackets, double quotes, and the like, all these characters will be excluded. It will make the content not be interpreted as HTML code on the client’s browser side.

')

So now we know that this is not quite reliable protection against some cross-site scripting attacks. The reason, as we showed in the example, is that these grammars are for HTML, and CSS and JavaScript are so complex that they can easily confuse the browser's parser.

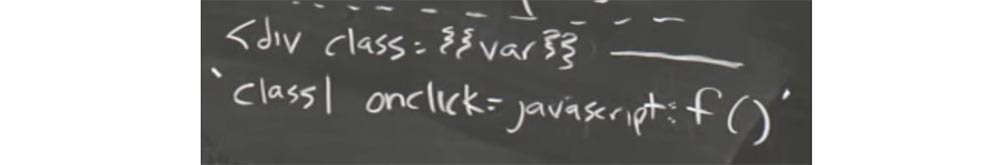

For example, we have a very common thing that is done within Django. So, you have some div function, and we want to set a dynamic class for it. We give the class a value of var, so on and so forth. The idea is that when Django processes this, he has to figure out what the current style is, and then insert it here.

In this case, the attacker can create a string that defines this class, for example, writes “class 1”. Up to this point, everything is going well with us, because it looks like a valid CSS expression.

But then the attacker places an onclick statement here, equal to the JavaScript code that makes the system call.

Since this is wrong, the browser would simply have to stop here. But the problem is that if you have ever seen the HTML of a real web page, everything here is broken and confused, even for legitimate, “friendly” sites. So if the browser stops before every erroneous HTML expression, not one site that you like will simply never work. If you ever want to give up on the world, and I didn’t help you enough, just open the JavaScript console in your browser while browsing the site to see how many errors it gives you.

You can, for example, go to the CNN website and just see how many errors there will be. Yes, mostly CNN works, but very unevenly. For example, to open Acrobat reader, you constantly need to reset the zero pointer exceptions, and at the same time you will feel a little deceived by life. But on the Internet, we have learned to accept this without much disturbance.

Therefore, since browsers must be very tolerant of such things, they will try to turn malicious code into something that seems reasonable to them. And therein lies a security vulnerability.

This is how content disinfection works, and it's still better than nothing. She can catch a lot of bad stuff, but she can't defend against everything.

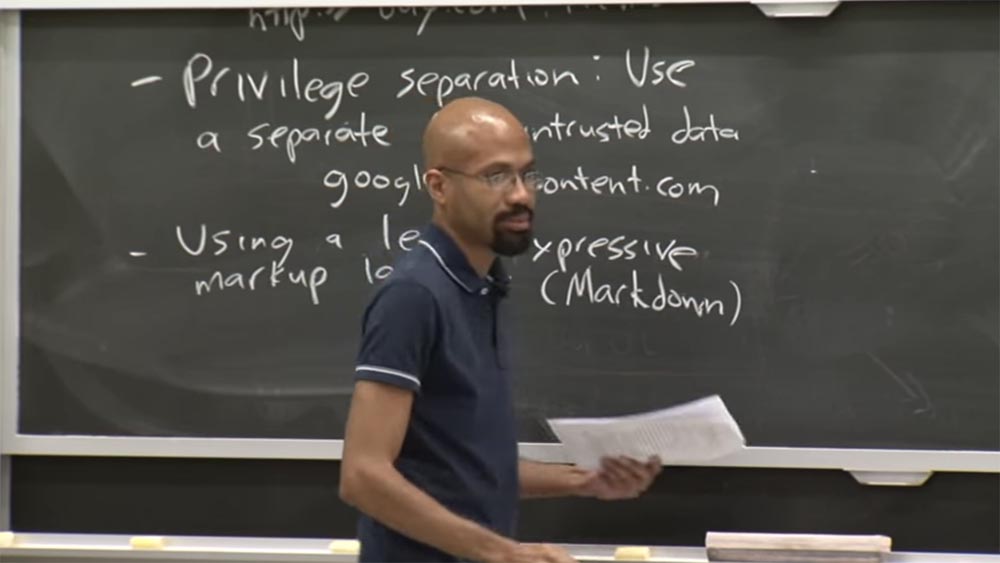

Another thing to think about is the use of a less expressive markup language. Let's see what is meant.

Audience: what to do if content clearing does not work?

Professor: yes, this is possible, for example, in this case Django will not be able to statically determine that this is bad. For example, in this particular case. But in the case when I insert a malicious image tag ...

Audience: in this particular case, I would expect the class assignment to be in quotes and in this case should have no effect ...

Professor: well, you see, there are some tricks there. If we assume that the grammar of HTML and CSS are carefully defined, then we can imagine a world in which ideal parsers could somehow grasp these problems or somehow transform them into normal things. But in fact, HTML grammars and CSS grammars suffer from inaccuracies. In addition, browsers do not implement specifications. Therefore, if we use a less expressive grammar, it will be much easier for us to disinfect the content.

Here the term Markdown is used - “easy-to-read markup” instead of the term Markup — the usual markup. The main idea of Markdown is that it is designed as a language, which, for example, allows users to send comments, but does not contain the ability to use an empty tag, applet support, and the like. Therefore, in Markdown, it is actually much easier to unambiguously identify a grammar and then simply apply it.

It is much easier to do disinfection in a simple language than in full-scale HTML, CSS, and JavaScript. And in a sense, it’s like the difference between understanding C code and Python code. There is actually a big difference in understanding a more expressive language. Therefore, by limiting expressiveness, you often improve security.

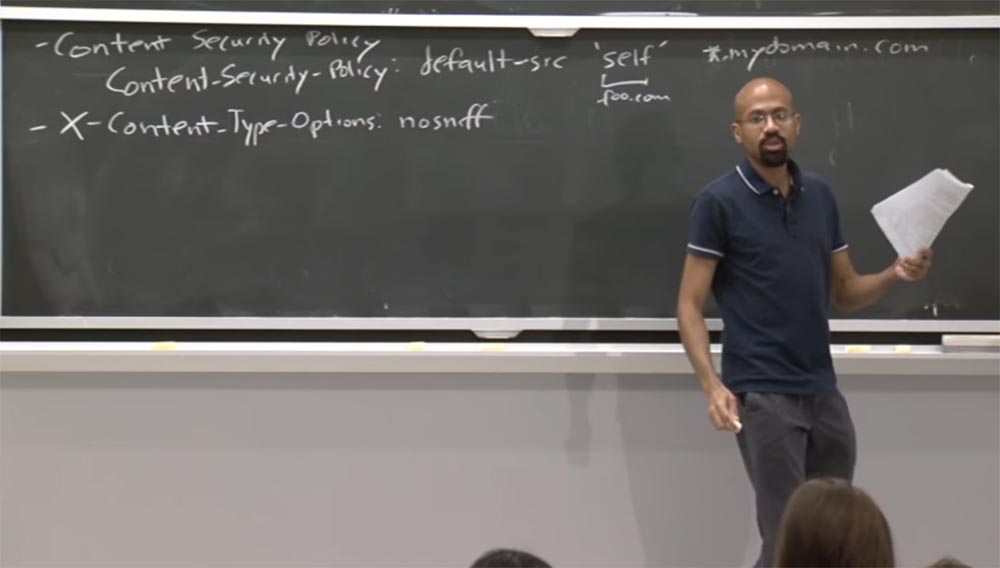

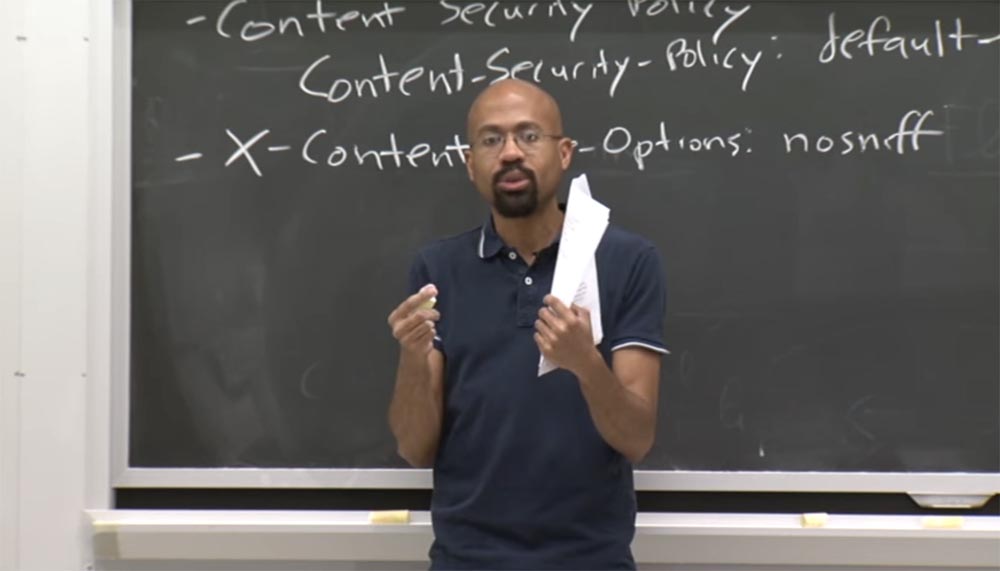

CSP, the Content Security Policy, is also used to protect against cross-site scripting attacks. The idea of CSP is that it allows the web server ...

Audience: I'm just curious to find out about this Markdown language. Do all browsers know how to parse a language?

Professor: no, no, no. You can simply convert different types of languages to HTML, but in their original form, browsers do not understand them. In other words, you have a commenting system, and it uses Markdown. That is, comments, before being displayed on the page, go to the Markdown compiler, which translates them into HTML format.

Audience: so why not always use Markdown?

Professor: Markdown allows you to use inline HTML, and as far as I know, there is a way to disable it in the compiler. But I could be wrong about that. The fact is that it is not always possible to use a limited language, and not everyone wants to do that.

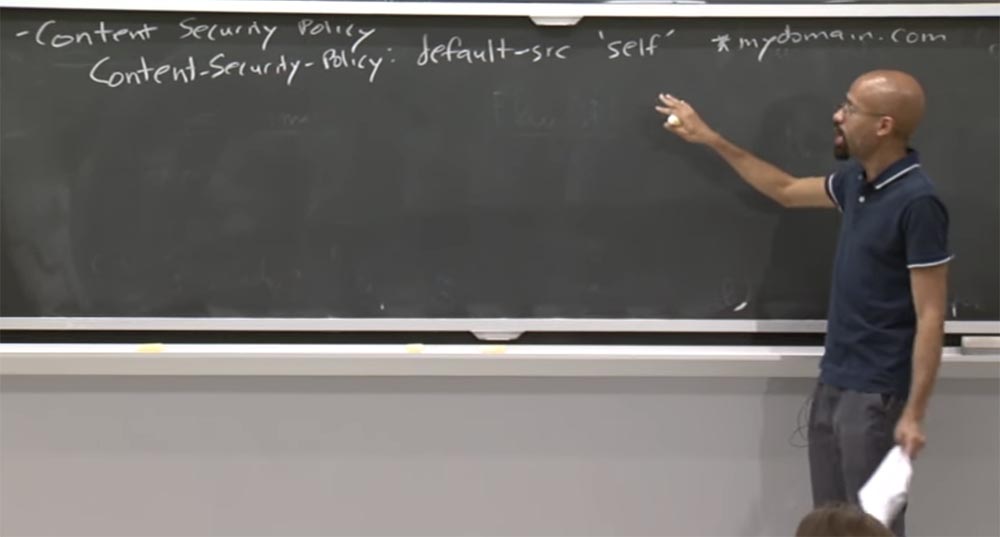

So let's continue the conversation on how to increase security with the Content Security Policy. This policy allows the server to tell the web browser what content types can be loaded on the page that it sends back, as well as where the content should come from.

For example, in an HTTP response, the server might use something like this: it includes the Content - Security - Policy header, the default source is self, and it will accept data from * .mydomain.com.

The self operator indicates that the content from this site should only come from the domain of a specific page or any subdomain mydomain.com. This means that if we had a self binding to foo.com, the server would send this page back to the browser.

Suppose a cross-site scripting attack attempts to create a link to bar.com. In this case, the browser will see that bar.com is not self and is not the domain mydomain.com, and will not miss this request further. This is a fairly powerful mechanism in which you can specify more detailed controls. You set the parameters indicating that your images should come from such a source, scripts from such and so on. It is actually convenient.

In addition, this policy actually prevents embedded JavaScript, so you can't open the tag, write some script and close the tag, because everything that can go to the browser should come only from a conditional source. CSP prevents such dangerous things as the use of an argument to the eval () function, which allows a web page to execute dynamically generated JavaScript code. So if the CSP header is set, the browser will not perform eval ().

Audience: Is this all that CSP protects against?

Professor: no. There is a whole list of resources that it actually protects, and you can set up protection against a lot of unwanted things, for example, specify where you can receive outgoing CSS and a bunch of other things.

Audience: but there are other things besides eval () that threaten security?

Professor: yes, they do. Therefore, the question always arises about the completeness of protection. For example, not only eval can dynamically generate JavaScript code. There is also a constructor of functions, there are certain ways to cause a specified waiting time, you go to a string and you can analyze the code in this way. CSP can disable all these dangerous attack vectors. But this is not a panacea for the complete isolation of malicious exploits.

Audience: Is it true that CSP can be configured to disable checking all internal scripts on a page?

Professor: yes, it helps to prevent the execution of dynamically generated code, and the embedded code should be ignored. The browser should always get the code from the source attribute. In fact, I do not know if all browsers do this. Personal experience shows that browsers exhibit different behaviors.

In general, Internet security is akin to the natural sciences, so people just put forward theories about how browsers work. And then you see how it actually happens. And the real picture may disappoint, because we are taught that there are algorithms, proofs and the like. But these browsers behave so badly that the results of their work are unpredictable.

Browser developers try to be one step ahead of the attackers, and you will see examples of this in the lecture. In fact, CSP is a pretty cool thing.

Another useful thing is that the server can set an HTTP header called X-Content-Type-Options, the value of which is nosniff.

This header prevents MIME from discarding the response from the declared content type, since the header tells the browser not to override the content type of the response. With the nosniff option, if the server says the content is text / html, the browser will display it as text / html.

Simply put, this header prevents the browser from “sniffing” the response from the declared content type, so that the browser doesn’t say: “aha, I sniffed the discrepancy between the file extension and the actual content, so I’ll turn this content into some other, understandable me a thing. " In this case, it turns out that you suddenly gave the keys to the kingdom to the barbarians.

Therefore, by setting this header, you are telling the browser not to do anything like this. This can significantly mitigate the effects of certain types of attacks. Here is a brief overview of some of the vulnerability factors for cross-site scripting attacks.

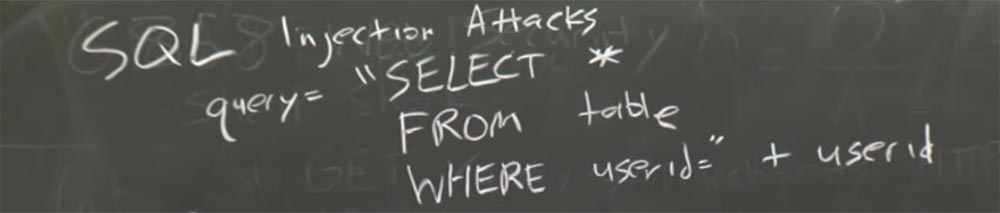

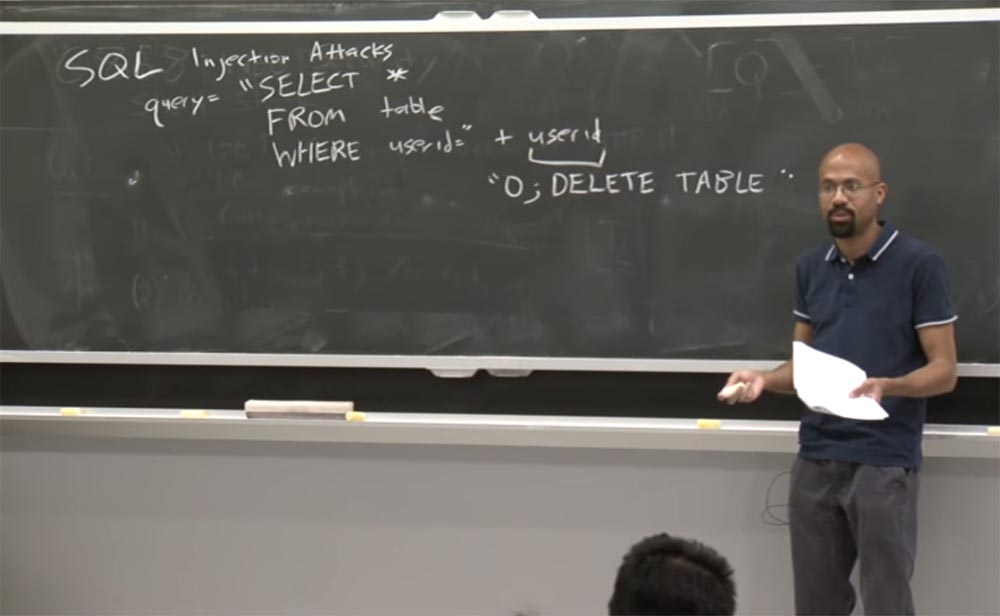

Now let's look at another popular attack vector, SQL. You've probably heard about the attacks called “SQL injection”, or SQL-injection attack. The essence of these attacks is to use the website database. To dynamically build the page shown to the user, database queries are required that are issued to this internal server. Imagine that you have a request to fetch all the values from a particular table, where the User ID field is equal to what is determined on the Internet from a potentially unreliable source.

We all know how this story will end - it will end very badly, there will be no survivors here. Because what comes from an unverified source can cause a lot of trouble. Alternatively, you can give the user id string the following value: user id = “0; DELETE TABLE “.

So what will happen here? Basically, the server database will say: “OK, I will set the user ID to zero, and then execute the“ delete table ”command. And everything is finished with you!

They say that a couple of years ago a kind of viral image appeared. Some people in Germany installed license plates on which 0 was written; DELETE TABLE. The idea was that traffic cameras use OCR to recognize your number, and then put this number into the database. In general, the people of "Volkswagen" decided to use this vulnerability, placing the malicious code on their numbers.

I don’t know if it worked because it sounds funny. But I would like to believe that this is true. So I repeat once again - the idea of disinfection is to prevent the execution of content from untrusted sources on your site.

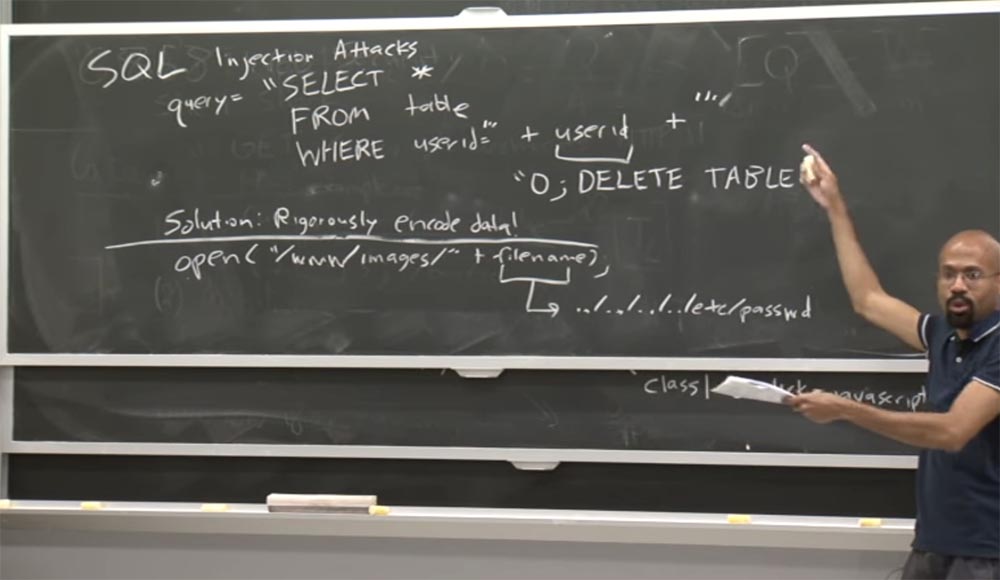

So pay attention to the fact that there may be some simple things that do not work as they should. So, you might think: “well, why can't I just put one more quote at the beginning of a line and another at the end, to thereby eliminate the execution of the malicious code of the intruder between the triple quotes”?

user id = "" + user id + ""

But it does not work, because the attacker can always just place the quotes inside the attacking line. So in most cases such a “half-hack” will not bring you as much security as you expect.

The solution here is that you need to carefully encrypt your data. And I repeat once more that when you receive information from an unreliable source, do not insert it into the system as it is. Make sure that it will not be able to jump out of the sandbox if you put it there in order to execute a malicious exploit.

For example, you want to insert the escape function to prevent the comma from being used in raw form. To do this, many of the web frameworks, such as Django, have built-in libraries that allow you to avoid SQL queries in order to prevent the execution of such things. These frameworks encourage developers to never directly interact with the database. For example, Django itself provides a high-level interface that performs disinfection for you.

But people always care about performance, and sometimes people think that these web frameworks are too slow. So, as you will soon see, people will still do raw SQL queries, which can lead to problems.

Problems can arise if the web server accepts path names from untrusted images. Imagine that somewhere on your server you do something like this: open with “www / images /” + filename, where filename is represented as something like ... / ... / ... / ... / etc / password.

That is, you give the command to open an image at this address from an untrusted user file, which in fact can seriously harm you. Thus, if you want to use a web server or web framework, then you should be able to detect these dangerous characters and avoid them in order to prevent these raw commands from being executed.

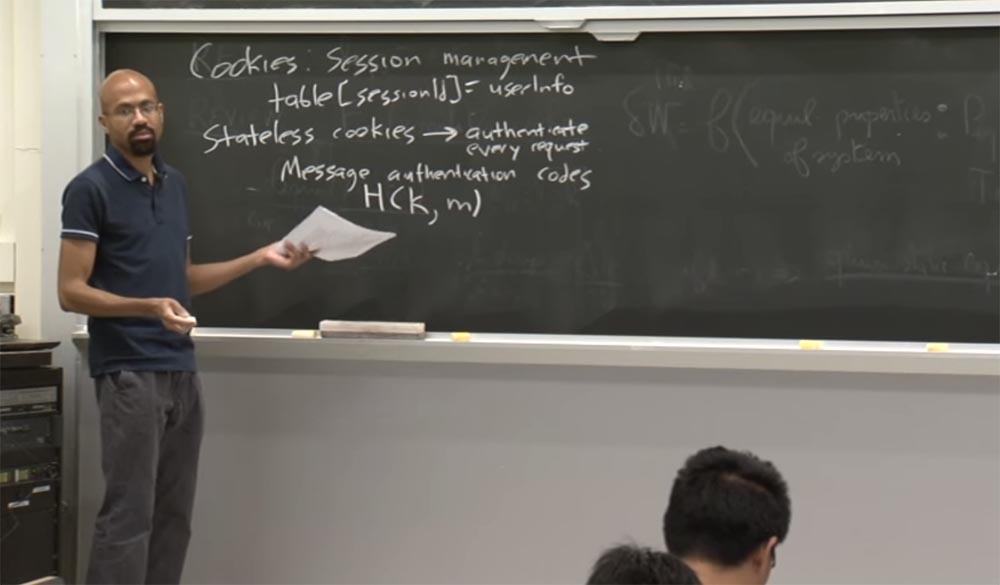

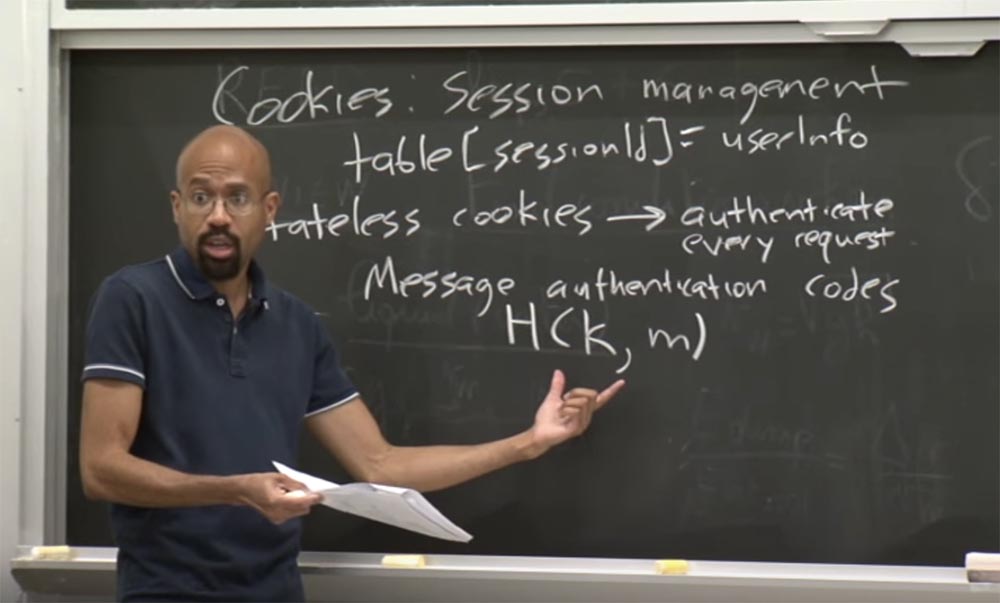

Let's take a break from discussing content disinfection and talk a little about cookies. Cookies are a very popular way to manage sessions in order to bind a user to some set of resources that exist on the server side. A lot of frameworks like Django or Zoobar, which you will get to know later, actually place a random session ID inside the cookie. The idea is that this session identifier is an index in some server-side table:

table [session ID] = user info.

That is, the session ID is equal to some user information. As a result, this session ID and cookies are very sensitive in their extension. Many attacks include theft of cookies to get this session ID. As we discussed in the last lecture, the same policy of the same source of origin can help you, to a certain extent, against some of these cookie theft attacks. Because there are rules based on the same origin policy that prevent arbitrary cookie changes.

The subtlety is that you don’t have to share a domain or subdomain with someone you don’t trust. Because, as we said in the last lecture, there are rules that allow two domains or subdomains of the same origin to access each other’s cookies. And therefore, if you trust a domain that you don’t have to trust, it may be able to directly set the session ID in these cookies to which you both have access. This will allow the attacker to force the user to use the session identifier chosen by the attacker.

Suppose an attacker sets a Gmail user's cookie. A user logs in to Gmail and types in several emails. The attacker can then use this cookie, in particular, use this session identifier, download Gmail and then access Gmail as if he or she were a victim user. Thus, there are many subtleties that can be done using these cookies to manage sessions. We will discuss some of them today and in subsequent lectures.

Maybe you think you can just get rid of cookies? After all, they bring more problems than good. Why can not they be abandoned?

stateless cookie, « », - , , , .

, , , . , . , , . , , , , .

— MA — Message Authentication Codes, . , . HCK - m. , , K. , , . , , .

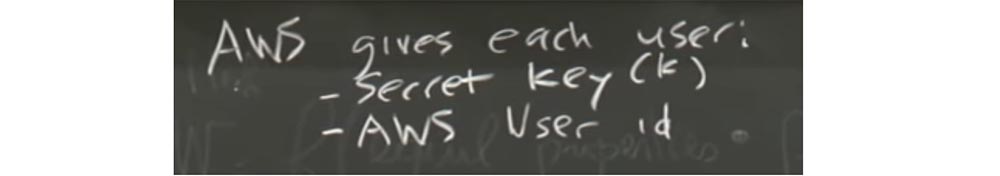

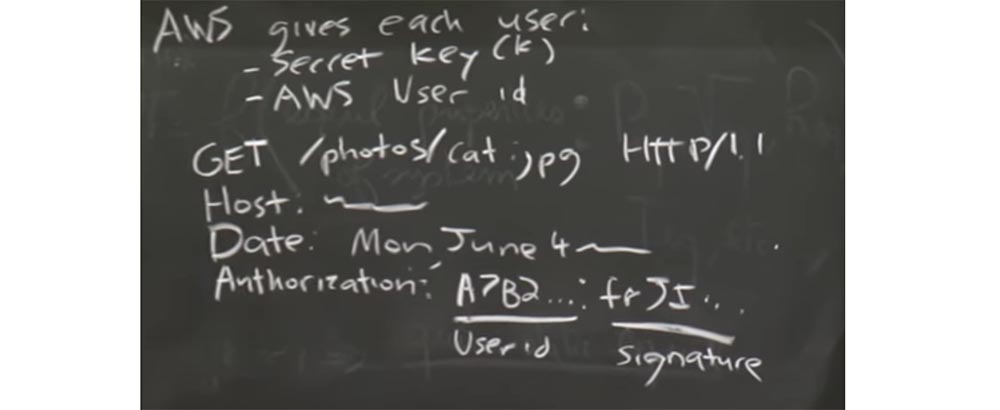

, . , stateless cookie, Amazon, , x3. - Amazon, AWS, . – K, – AWS, .

, AWS HTTP, .

, , , :

GET /photos/ cat; .jpg HTTP/1.1, - AWS:

HOST: — - — - — , , :

DATE: Mon, June 4, , , . , ID , , , .

? , 3- .

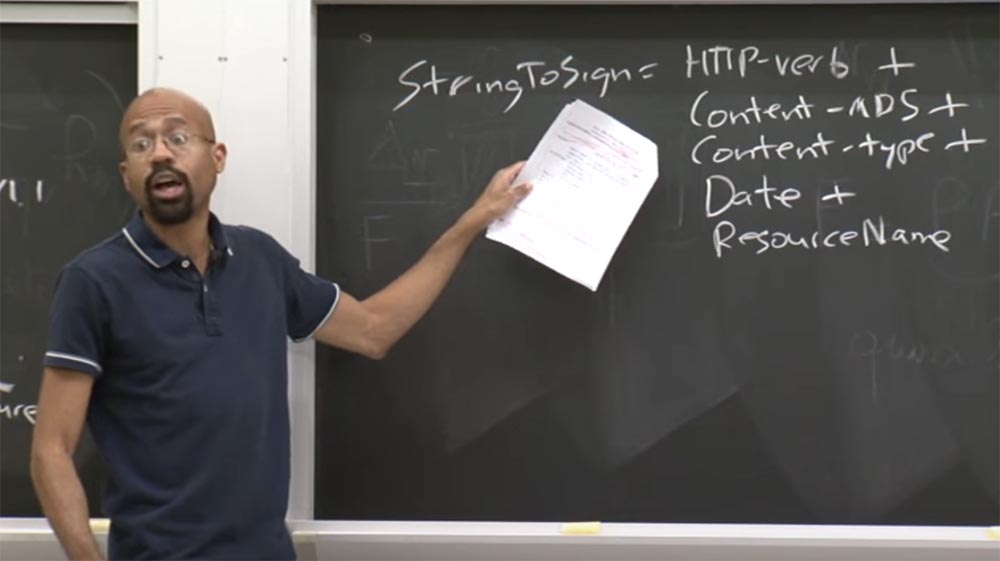

, String To Sign :

— HTTP, GET;

— MDS;

— , html jpg;

— ;

— , , .

, , HCK MAC. , . , . , . ?

, , , - . Amazon , stateless cookie, MD5 .

, , , cookie, . – , , .

, . , , “HCK, m”.

. GET /photos/ cat; .jpg HTTP/1.1 , , . , , . , ? : «, , , ».

56:15

MIT « ». 9: « Web-», 3

.

Thank you for staying with us. Do you like our articles? Want to see more interesting materials? Support us by placing an order or recommending to friends, 30% discount for Habr users on a unique analogue of the entry-level servers that we invented for you: The whole truth about VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps from $ 20 or how to share the server? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4).

VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps until December for free if you pay for a period of six months, you can order here .

Dell R730xd 2 times cheaper? Only we have 2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TV from $ 249 in the Netherlands and the USA! Read about How to build an infrastructure building. class c using servers Dell R730xd E5-2650 v4 worth 9000 euros for a penny?

Source: https://habr.com/ru/post/424295/

All Articles