Mobile watchman on Raspberry Pi (H.264)

Using a Raspberry Pi for FPV control and for motion detection based on H.264 motion vectors is not a new topic. This project makes no claim to originality, and relatively little time went into it (weekends since July, and not all of them).

Still, my experience (and the source code) may turn out to be useful to someone.

The idea of setting up video surveillance in the apartment came after a neighbor mentioned that someone had been tampering with our door lock.

The first quick fix was to install the well-known motion program on a Raspberry Pi Zero with a v1.3 camera. In principle, that solves the problem, as long as email notifications and 4-5 fps are good enough.

But that did not seem interesting. I had a wheeled platform with its electronics left over from earlier experiments, plus 18650 cells from old laptops.

The result was a fun mix of mobile video surveillance and motion detection.

Since I rent a VPS, accessing the robot from outside was no problem, even though the home network is behind NAT. Battery life is about 4 days if you don't overdo the driving and the headlight.

You can drive around the apartment, remotely controlling both the camera and the platform, and leave it in “watch” mode (motion detection) at any spot you like.

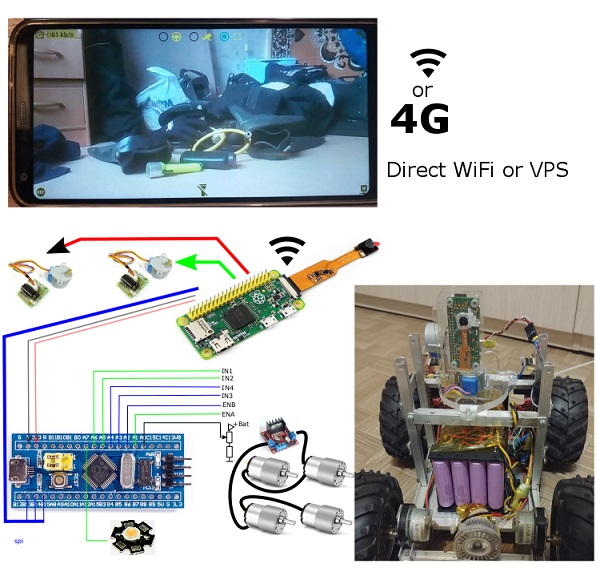

Hardware

The hardware consists of two parts; the first does not depend on the second and can be used on its own:

Video surveillance module

- Raspberry Pi Zero

- USB Wi-Fi dongle

- Raspberry Pi camera v1.3

- 2x 28BYJ-48 stepper motors + ULN2003 drivers

Mobile platform, controlled from the Raspberry Pi via SPI

- 4S pack of 16x 18650 Li-ion cells + 4S 30A BMS board for 18650 Li-ion + DC-DC charger with current and voltage regulation

- DC-DC step-down converter (15 V -> 5 V)

- STM32F103C8T6 board

- 4x gear motors + L298N board

- 12 V LED lamp (headlight), switched by an IRF3205 MOSFET (plus SMD PNP and NPN transistors)

The Raspbian image is configured as read-only. In this configuration the Raspberry Pi easily survives unexpected power loss, since nothing is ever written to the SD card.
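One way to confirm that the root filesystem really is mounted read-only is to inspect `/proc/mounts`. The sketch below assumes the classic setup where `/` is mounted with the `ro` option (for example via `/etc/fstab`). Overlay-based read-only setups mount `/` as a writable RAM-backed overlay and would need a different check. The function name is illustrative and is not taken from the project sources.

```python
def root_is_read_only(mounts_text: str) -> bool:
    """Return True if the root filesystem ("/") is mounted with "ro".

    `mounts_text` is the contents of /proc/mounts; each line looks like
    "device mountpoint fstype options dump pass".
    Assumes a plain ro root mount, not an overlayfs-based setup.
    """
    root_opts = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[1] == "/":
            # A later entry for "/" shadows earlier ones (e.g. an initial
            # "rootfs / rootfs rw" line), so keep the last match.
            root_opts = fields[3].split(",")
    return root_opts is not None and "ro" in root_opts
```

On the Pi itself it would be called as `root_is_read_only(open("/proc/mounts").read())`.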

Software

The software consists of 3 separate components.

Android app (tested on an LG6 with Oreo and an old Samsung S5 with Lollipop)

FPV mode

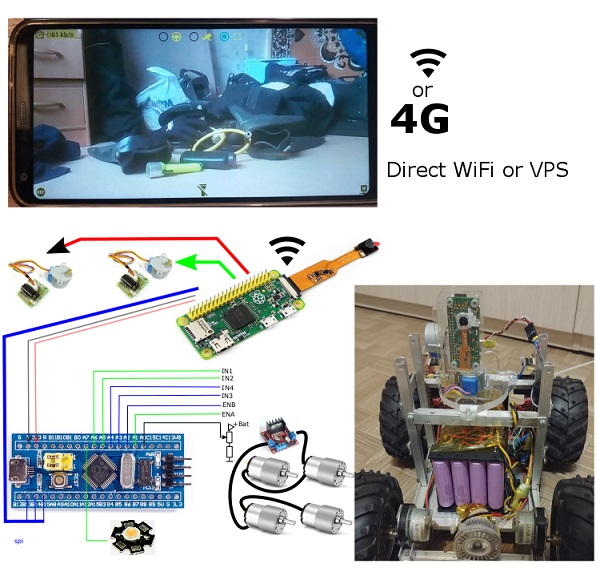

- Display the H.264 video stream from the camera at the specified resolution and bitrate

- Controls for the camera and the platform

- Record video and take photos from the stream

Android service mode

- Poll the host at the specified interval

- Download the photo taken at a "motion in the frame" event

Python host on the Raspberry Pi (picamera + spidev + RPi.GPIO)

FPV mode

- Stream H.264 video (with the parameters set by the Android app)

- Receive commands for the stepper motors and drive them

- Forward platform control commands over SPI (if enabled)

Motion tracking mode

- Detect motion in the frame (using the parameters set by the Android app)

- Answer "is there motion in the frame?" requests and send photos on request

- Send photos of motion in the frame to a remote host (independently of the app)
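In this polling design, the app periodically asks the host whether motion has occurred and then fetches the photo, so the host has to keep recent events somewhere. The following minimal sketch assumes the app remembers the timestamp of the last event it has seen and asks only for newer ones. `MotionEventLog` and its methods are hypothetical names and do not describe the project's actual protocol.

```python
import threading
import time
from collections import deque
from typing import List, Optional, Tuple


class MotionEventLog:
    """Thread-safe ring buffer of (timestamp, jpeg_bytes) motion events.

    The detector thread calls add(); a request handler answers a poll
    ("anything since t?") with since(t). Only the newest `maxlen` events
    are kept, so memory use on the Pi stays bounded.
    """

    def __init__(self, maxlen: int = 20):
        self._events = deque(maxlen=maxlen)
        self._lock = threading.Lock()

    def add(self, jpeg: bytes, timestamp: Optional[float] = None) -> float:
        ts = time.time() if timestamp is None else timestamp
        with self._lock:
            self._events.append((ts, jpeg))
        return ts

    def since(self, timestamp: float) -> List[Tuple[float, bytes]]:
        """Events strictly newer than `timestamp`, oldest first."""
        with self._lock:
            return [event for event in self._events if event[0] > timestamp]
```

Keeping the client's last-seen timestamp on the app side keeps the host stateless with respect to clients, so several phones could poll the same log.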

Minimal firmware for the STM32F103

- Receive SPI commands

- Control wheel rotation direction and motor PWM

- Control the headlight
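The article does not describe the SPI message format. The following is a hypothetical 5-byte frame (start byte, signed left and right duty in percent, a flag byte for the headlight, and an XOR checksum), shown from both the Raspberry Pi side (encode) and the STM32 side (decode). The sign of each duty value selects the direction of rotation, which matches the "direction plus PWM" control the firmware performs.

```python
import struct

START_BYTE = 0xA5      # hypothetical frame marker
FLAG_HEADLIGHT = 0x01  # hypothetical flag bit for the headlight


def _clamp(value: int, lo: int, hi: int) -> int:
    return max(lo, min(hi, value))


def _xor(data: bytes) -> int:
    checksum = 0
    for byte in data:
        checksum ^= byte
    return checksum


def encode_command(left: int, right: int, headlight: bool) -> bytes:
    """Pack one platform command into a 5-byte frame.

    left/right: signed duty cycle in percent (-100..100); the sign is the
    direction of rotation for that side, the magnitude is the PWM duty.
    Layout (hypothetical): start, left (int8), right (int8), flags, XOR.
    """
    flags = FLAG_HEADLIGHT if headlight else 0
    body = struct.pack(">BbbB", START_BYTE,
                       _clamp(left, -100, 100), _clamp(right, -100, 100), flags)
    return body + bytes([_xor(body)])


def decode_command(frame: bytes):
    """Inverse of encode_command, as the MCU side would parse it.

    Returns (left, right, headlight), or None for a malformed frame.
    """
    if len(frame) != 5 or frame[0] != START_BYTE or _xor(frame[:4]) != frame[4]:
        return None
    _, left, right, flags = struct.unpack(">BbbB", frame[:4])
    return left, right, bool(flags & FLAG_HEADLIGHT)
```

On the Pi, a frame could then be sent with the `spidev` package, e.g. `spi = spidev.SpiDev(); spi.open(0, 0); spi.max_speed_hz = 500000; spi.xfer2(list(frame))`. The bus, chip-select, and clock values here are assumptions.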

Motion tracking

Motion tracking based on H.264 motion vectors turned out to be very finicky and prone to false positives. Only a handful of implementations of H.264-based motion detection are floating around online, and I tried them all.

The most primitive approach is to count the vectors whose length exceeds a threshold; if enough vectors exceed it, that signals motion in the frame.
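The naive approach can be sketched with NumPy. The Pi camera's H.264 encoder emits one record per 16x16 macroblock, with signed `x`/`y` components and an unsigned `sad` (sum of absolute differences) value. In picamera, this array is what a `picamera.array.PiMotionAnalysis` subclass receives in its `analyse()` method. The thresholds below are placeholders, not values from the article.

```python
import numpy as np

# Per-macroblock motion record (as exposed by picamera): int8 x, int8 y, uint16 sad.
MOTION_DTYPE = np.dtype([("x", "i1"), ("y", "i1"), ("sad", "u2")])


def naive_motion(vectors: np.ndarray, length_threshold: float, min_count: int) -> bool:
    """Flag motion if at least `min_count` macroblocks have a motion vector
    longer than `length_threshold` (in the encoder's vector units)."""
    # Convert to float before squaring: squaring int8 values would overflow.
    x = vectors["x"].astype(np.float32)
    y = vectors["y"].astype(np.float32)
    lengths = np.sqrt(x * x + y * y)
    return int(np.count_nonzero(lengths > length_threshold)) >= min_count
```

Nothing in this check looks at where the long vectors are or whether they persist, which is why encoder noise on flat, uniform surfaces is enough to trip it.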

Alas, this approach is only good for demonstrating the principle. It produces far too many false positives, especially on surfaces of uniform color and texture.

All the other options either produce just as many false positives or fail the performance requirement: “each frame must be processed within one frame interval”.

In the end I settled on my own variant. It produces practically no false positives, but it requires motion to persist over several consecutive frames. That is still better than false alarms several times a day caused by changes in lighting, or by inexplicable “movements” that the camera's encoder “decides” are present in the frame. Reliable motion detection on H.264 motion vectors is a complex topic in its own right: the algorithms take a lot of time to debug in practice, and I have a tight per-frame time budget.
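The article does not spell out the final algorithm. The "motion in several consecutive frames" requirement can, however, be expressed on its own as a simple streak counter on top of any per-frame detector. This is an illustrative sketch of that idea, not the author's implementation, and `PersistenceFilter` is a hypothetical name.

```python
class PersistenceFilter:
    """Report motion only after it has been seen in `required` consecutive
    frames; a single quiet frame resets the streak."""

    def __init__(self, required: int):
        if required < 1:
            raise ValueError("required must be >= 1")
        self.required = required
        self.streak = 0

    def update(self, frame_has_motion: bool) -> bool:
        """Feed one per-frame decision; return the filtered decision."""
        self.streak = self.streak + 1 if frame_has_motion else 0
        return self.streak >= self.required
```

The cost is latency: an alarm fires only `required` frames after motion starts, which is the trade-off the text accepts in exchange for fewer false alarms.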

An example of motion vectors (a snapshot from the service mode):

Notifications are generated on "motion in the frame" events.

For my purposes, it turned out to be enough to have guaranteed triggering when a human figure occupying more than 15% of the frame moves for at least 2 seconds. With sensitivity reduced this much, I have simply not seen any false positives.
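These two numbers translate into concrete thresholds. The sketch assumes picamera's layout of the motion-vector array: one element per 16x16 macroblock, plus one extra column per row, which is treated here as padding. Under that assumption, a 640x480 stream gives a 30x41 array, so 15% of the 30x40 real macroblocks is 180 blocks, and 2 seconds at 25 fps is 50 frames. The resolution and frame rate are example values, and the helper names are hypothetical.

```python
import math

import numpy as np


def macroblock_grid(width: int, height: int):
    """(rows, cols) of the motion-vector array for a given resolution:
    one element per 16x16 macroblock plus one extra column."""
    return (height + 15) // 16, (width + 15) // 16 + 1


def moving_fraction(lengths: np.ndarray, length_threshold: float) -> float:
    """Share of real macroblocks whose vector length exceeds the threshold.
    `lengths` has the grid shape above; the last column is ignored."""
    blocks = lengths[:, :-1]
    return float(np.count_nonzero(blocks > length_threshold)) / blocks.size


def frames_for_duration(seconds: float, fps: float) -> int:
    """Number of consecutive frames covering `seconds` at `fps`."""
    return math.ceil(seconds * fps)
```

A trigger could then require `moving_fraction(...) > 0.15` on `frames_for_duration(2, fps)` consecutive frames.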

Motion control

The platform is driven tank-style ("like a tractor"): PWM duty and direction of rotation are controlled separately for the left and right sides. The controls (strips) sit under the thumbs of both hands. This turned out to feel the most natural to me.
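The tank-style scheme maps each thumb strip to one side of the platform independently. The following minimal sketch assumes each strip reports a position in [-1, 1], with 0 at its center and positive meaning forward. The dead-zone width is an arbitrary illustrative value.

```python
def strip_to_side(position: float, dead_zone: float = 0.05):
    """Map a thumb-strip position in [-1, 1] (0 = strip center) to
    (direction, duty_percent) for one side of the platform.

    direction: +1 forward, -1 reverse, 0 stop. The dead zone around the
    center keeps a resting thumb from slowly creeping the motors.
    """
    position = max(-1.0, min(1.0, position))
    if abs(position) < dead_zone:
        return 0, 0
    direction = 1 if position > 0 else -1
    duty = round(abs(position) * 100)
    return direction, duty


def tank_drive(left_pos: float, right_pos: float):
    """Tank-style ("tractor") control: each side is driven independently."""
    return strip_to_side(left_pos), strip_to_side(right_pos)
```

Turning in place is simply opposite directions on the two sides, e.g. `tank_drive(0.5, -0.5)`.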

Camera control uses two strips; touching one sends a command to rotate the camera by a certain angle (the farther from the center of the strip, the larger the angle). Continuous control, as used for the motors, turned out to be inconvenient here (again, subjectively for me).
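For the camera strips, the touch offset from the center becomes a one-off turn command for the stepper. This sketch rests on two assumptions. First, the maximum turn per touch is 90 degrees, which is a placeholder. Second, the 28BYJ-48 needs 2048 full steps per output-shaft revolution, the figure commonly quoted for this gear motor (the exact ratio varies slightly between units).

```python
def strip_to_angle(position: float, max_angle_deg: float = 90.0) -> float:
    """Touch position in [-1, 1] (0 = strip center) -> signed turn angle.
    The farther from the center, the larger the angle."""
    position = max(-1.0, min(1.0, position))
    return position * max_angle_deg


def angle_to_steps(angle_deg: float, steps_per_rev: int = 2048) -> int:
    """Convert a turn angle to a whole number of stepper steps.
    2048 full steps per revolution is an assumed 28BYJ-48 figure
    (roughly 4096 if the driver runs in half-step mode)."""
    return round(angle_deg / 360.0 * steps_per_rev)
```

Sending a discrete step count per touch, rather than a continuous speed, matches the "turn by a given angle" behavior described above.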

FPV lag

Video lag turned out to be relatively small (< 1 s).

For driving a wheeled platform with a top speed of 3-4 km/h at 100% PWM, a lag of 0.6 s is perfectly acceptable and barely noticeable.
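A quick check of why 0.6 s is tolerable at this speed is to compute the distance the platform covers before the operator sees the effect of a command:

$$
v = 4\ \text{km/h} = \frac{4000\ \text{m}}{3600\ \text{s}} \approx 1.11\ \text{m/s}, \qquad
d = v \cdot t_{\text{lag}} \approx 1.11\ \text{m/s} \times 0.6\ \text{s} \approx 0.67\ \text{m}
$$

At 3 km/h (about 0.83 m/s), the same lag corresponds to about 0.5 m of "blind" travel.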

For something like a quadcopter, however, I think even 0.3 s of lag would be a lot.

Tests showed that streaming from Python adds roughly 50-70 ms of lag compared with serving the same H.264 stream via raspivid. Those 70 ms don't matter to me, but anyone who wants to squeeze out every millisecond should keep this in mind.

Over “local Wi-Fi” (the phone acting as an access point), the lag is 350-600 ms. It never exceeds 0.6 s and stays stable within this range. Then again, 50-70 meters of range in open space is only good for playing around, and at greater distances the phone's Wi-Fi does not work at all.

It is worth noting that this was measured in a very “RF-noisy” environment of apartment buildings, with many Wi-Fi networks around.

I was surprised by the results with the chain “Wi-Fi router -> ISP over twisted pair -> VPS -> MTS 4G on the phone”, using SSH port forwarding from the Raspberry Pi to the VPS.
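SSH port forwarding from the Pi to the VPS is a reverse tunnel (`ssh -R`). The Pi opens an outbound connection, so NAT at home does not matter, and a port on the VPS is forwarded back to the Pi. The sketch below builds such a command; the host name, user, and ports are hypothetical. By default, `sshd` binds remote forwards to the loopback interface only. For the phone to reach the forwarded port directly, the VPS therefore needs `GatewayPorts` enabled in `sshd_config`.

```python
def reverse_tunnel_cmd(vps_host: str, vps_user: str, remote_port: int,
                       local_port: int, keepalive_s: int = 30) -> list:
    """Build an ssh command that exposes the Pi's `local_port` as
    `remote_port` on the VPS (reverse tunnel, `ssh -R`).

    -N                    no remote command, forwarding only
    ExitOnForwardFailure  exit (so a supervisor can restart ssh) if the
                          remote port cannot be bound
    ServerAliveInterval   send keepalives so a dead link is detected
    """
    return [
        "ssh", "-N",
        "-o", "ExitOnForwardFailure=yes",
        "-o", f"ServerAliveInterval={keepalive_s}",
        "-R", f"{remote_port}:localhost:{local_port}",
        f"{vps_user}@{vps_host}",
    ]
```

Running the result with `subprocess.run(cmd)` inside a retry loop (or under a service manager) restarts the tunnel whenever the link drops.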

The typical lag turned out to be even slightly lower than over local Wi-Fi (!)

Even from a moving car or near a large hypermarket, the lag is only 300 ms.

Occasionally, though (quite rarely and unpredictably), the lag grew to several seconds. Reconnecting helps. This is probably down to quirks of 4G, MTS, or one of the other providers in the chain.

Once everything worked, I was tempted to add a two-way audio channel. It is technically possible and not even very difficult, but I no longer feel like messing around with a soldering iron.

If I hadn't had a spare Raspberry Pi on hand, it would have been easier to use an old phone as the video surveillance and platform control host. The only advantages of the Raspberry Pi over an old phone are lower weight and perhaps lower power consumption (I did not compare).

All sources

Source: https://habr.com/ru/post/424191/