8 stories that influenced the development of artificial intelligence

Today, hardly any announcement of a new smartphone or app is complete without a mention of artificial intelligence. AI is in vogue, and you might think it has only recently arrived, but that is not the case. Or rather, not quite: yes, AI tools have become widespread only now, but their development began more than half a century ago. The history of AI contains many interesting episodes that are little known to the general public, and we decided to tell Habr's readers about them.

At the origins of AI

So many articles have been written about artificial intelligence that it seems paradoxical that the field still has no single definition. Some experts believe it is impossible to talk about creating an artificial counterpart of human intelligence before we understand the nature of that intelligence. Others argue that a machine's ability to perform tasks that would require intelligence from a human already counts as artificial intelligence. AI can also be understood as a computer's ability to operate not only with the clear-cut concepts of “yes” and “no” but also with “maybe”, and to ask “what if?”. In other words, to learn, draw conclusions, and make decisions based on experience.


Setting aside ancient legends about artificial beings, the first literary depiction of a thinking machine can be found in the Czech writer Karel Čapek's 1920 play “R.U.R.” (Rossum's Universal Robots). The play describes a factory that produces "artificial people" called robots. If you don't plan to read it (especially in Czech), here is a spoiler: at first the robots worked for people, but then they organized a revolt that led to the near-extinction of the human race. Today the idea hardly seems new, since it has become widespread in modern literature and film.

Officially, the term “artificial intelligence” was first used by the young scientist John McCarthy in 1956 at the Dartmouth Conference. McCarthy defined AI as the science and engineering of making intelligent computer programs. Despite differing interpretations of the term, the participants reached the following conclusion at the end of the meeting: “any aspect of human intelligent activity can be described so precisely that a machine can be made to imitate it”.

As early as the 1920s, nothing good was expected of artificial intelligence. A scene from Karel Čapek's play R.U.R. Source: Wikimedia

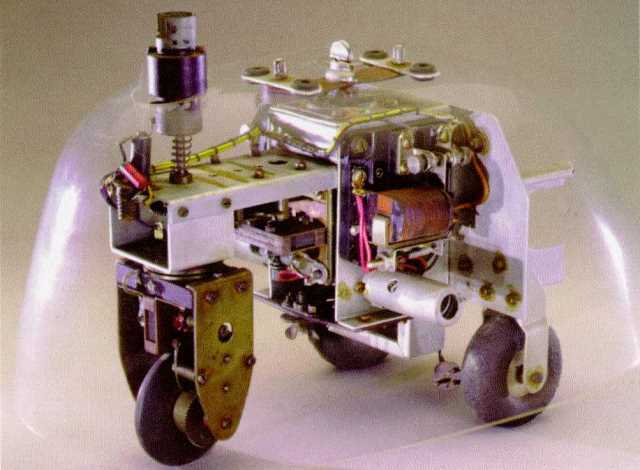

Episode 1: Walter's Mechanical Tortoise

The first to build a robot with the rudiments of intelligence was the American-born British cybernetician Grey Walter. His mechanical “tortoises”, built in 1948–49, moved toward a light source and, on bumping into an obstacle, backed up and steered around it. This was the first full-scale display of intelligence by a machine: instead of pushing helplessly against a table leg, the robot “concluded” that it could not go on and chose a detour by itself. Remarkably, the robot was built entirely from analog components.

Grey Walter's light-loving robot tortoise, built from analog components. Source: extremenxt.com

Episode 2: The First Machine Translator

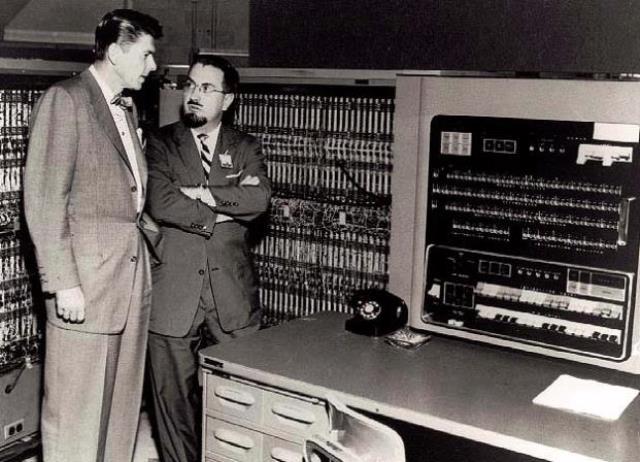

In 1954, IBM demonstrated an unfinished Russian-to-English automatic translator that used only six rules and a vocabulary of 250 words from the field of organic chemistry. The military needed such a machine to translate Soviet documents.

The demonstration made a splash in the press, which only spurred further funding for AI research. The potential benefits of automatic translators were obvious: by one estimate, of the 4,000 full-time translators of various languages on the staff of the government's Joint Publications Research Service, only 300 had work in any given month. Better recognition and machine translation promised huge savings by cutting this bloated staff.

IBM's Russian-to-English translator with future US president Ronald Reagan and one of the fathers of computer science, Herbert Grosch, 1954. Source: Columbia.edu

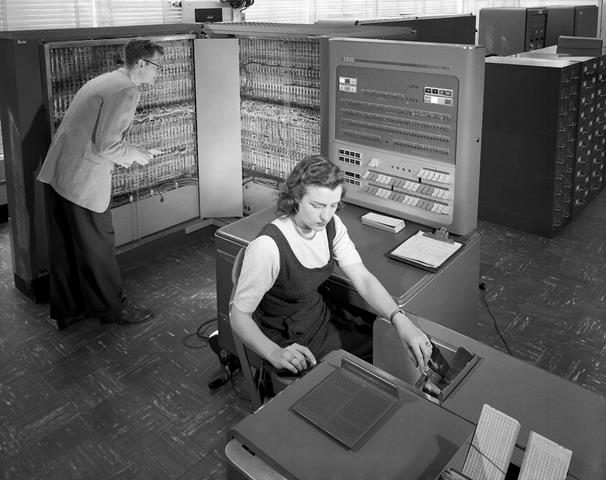

Episode 3: The First Computer Chess Player

On May 11, 1997, in New York, a computer for the first time in history defeated the reigning world chess champion in a match played under standard “human” rules. This was, of course, the rematch between Garry Kasparov and Deep Blue. It came 40 years after Alex Bernstein, a programmer at the Service Bureau Corporation (a division of IBM) and a part-time chess player, wrote the Bernstein Chess Program with his colleagues: the first program to play chess, which ran the minimax algorithm on an IBM 704 mainframe. The computer played slowly: each move took 8 minutes, during which it evaluated 2,800 possible positions. Bernstein himself never lost to his own program, although he admitted: “In theory, the 704 can't surprise me, but sometimes it does. A couple of times it played so well that it even confused me.”
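The paragraph above says Bernstein's program chose its moves with the minimax algorithm. The sketch below illustrates only the core idea on a tiny hypothetical game tree; it is not Bernstein's code and not a chess engine. The nested-list tree format, its leaf scores, and the names `minimax` and `best_move` are assumptions made for illustration. Each leaf stands in for the score an evaluation function would assign to a position where the search stops, seen from the side that moves first.

```python
from typing import Union

# Hypothetical game tree: an inner node is a list of child positions,
# a leaf is an int scoring the position for the maximizing player.
Tree = Union[int, list]


def minimax(node: Tree, maximizing: bool) -> int:
    """Return the minimax value of `node`.

    The players alternate: the maximizer assumes the minimizer will always
    reply with the move that is worst for the maximizer, and vice versa.
    """
    if isinstance(node, int):  # leaf: use its static score as-is
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def best_move(root: list) -> int:
    """Index of the root move with the highest minimax value (maximizer to move)."""
    # After our move it is the opponent's turn, so each child is a minimizing node.
    scores = [minimax(child, maximizing=False) for child in root]
    return scores.index(max(scores))


if __name__ == "__main__":
    # Two plies: we pick one of three moves, then the opponent replies.
    tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
    print(minimax(tree, maximizing=True))  # -> 3
    print(best_move(tree))                 # -> 0
```

Here the side to move picks the first branch, because its worst-case outcome (3) beats the worst cases of the other two branches (2 each). A real chess program would also have to generate legal moves and stop the search at some fixed depth, which is why a machine as slow as the IBM 704 could examine only a limited number of positions per move.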

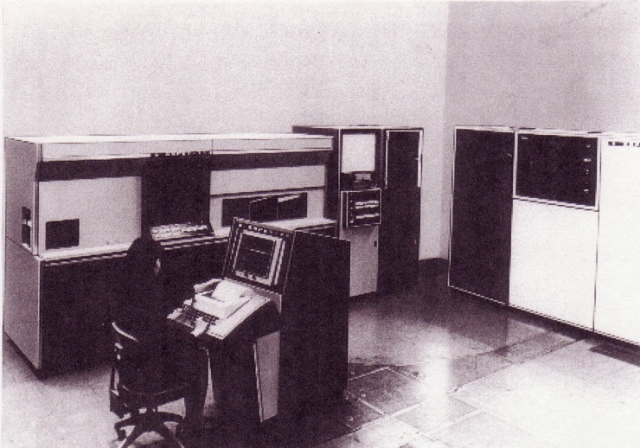

The IBM 704 mainframe, which played chess at an amateur level. Source: NASA

But the person the IBM 704 truly surprised (not this machine at the Service Bureau Corporation, but another one installed at Bell Labs) was the writer Arthur C. Clarke. He happened to visit Bell Labs just as the engineers there had connected a vocoder to the mainframe and taught the 704 to sing the song “Daisy Bell” to demonstrate computer speech synthesis. Clarke was impressed and wrote a scene into 2001: A Space Odyssey in which the computer HAL 9000 sings the same song.

But that's another story. Back in 1957, the patriarchs of artificial intelligence, Herbert Simon and Allen Newell, predicted that within 10 years a computer would be able to beat humans at chess. And indeed, in 1967 the Mac Hack program began competing successfully against people in tournaments.

Episode 4: Shakey the Brooding Robot and the Arrival of the AI Winter

As shown above, computer technology, and AI in particular, developed very confidently in the 1950s and 60s and at first delivered impressive results. Scientists also began making bold claims about the technology's brilliant prospects. In 1970, for example, Marvin Minsky, one of the fathers of AI theory, predicted that computers with human-level intelligence would appear “in three to eight years.” With such bold claims about the near future, AI researchers raised expectations very high.

However, in the following years researchers failed to deliver the promised breakthroughs. The basic concepts and algorithms of artificial intelligence had been worked out, but real products were still far away, progress was extremely slow, and the vast unexplored field scattered researchers' efforts. One example of unmet expectations was the robot Shakey, which was of great importance to science but meaningless from an investor's point of view. Work on building and improving it went on in 1966–77; Shakey was a mobile robot with a camera that independently worked out how to carry out the tasks it was given. Unfortunately, it remained a test bed for technologies and algorithms with no practical use: it could function properly only in an artificially constructed test space, and it took more than an hour to solve an elementary task such as “find a block on a raised platform in the room and push it.”

The legendary robot Shakey, with its very pensive artificial intelligence. Source: SRI International

Investors' growing skepticism about artificial intelligence (and the investors were largely military) peaked with a 1973 report by the mathematician Sir James Lighthill, who stated that machines would never play chess better than an experienced amateur and that complex tasks such as face recognition would always be beyond them. Besides emotional reasoning, the report contained sound arguments against AI, among them the insufficient computing power of the computers of the time and the lack of genuine machine learning, which meant that an AI system had to be loaded with a huge amount of data every time in order to work effectively.

The dramatic cut in funding for artificial intelligence research that followed the report marked the beginning of the AI winter.

Episode 6: Toshiba's AI at the Post Office (and Beyond)

Despite investors' cooling interest in artificial intelligence, engineers kept working on narrowly specialized machines with AI elements. They did not aim to create an “artificial mind”; instead, they devised solutions to specific problems. And unlike the robot builders, the creators of these machines achieved real success.

For a good half of the 20th century, all mail at post offices was sorted by hand: operators read postal codes and addresses and placed envelopes and postcards into the appropriate slots. Attempts to build an automatic sorter began in the 1920s, but the first mass-produced semi-automatic machines appeared only in the 1950s.

In the 1960s, post offices used sorters that could read postal codes on envelopes only if the codes were written strictly according to a fixed template. Any deviation from the template made the code unreadable to the machine, all the more so if it was written in ordinary cursive handwriting, which even a person cannot always make out on the first try.

Even the simplest mail-sorting machines can recognize these plain template digits.

In 1965, a project to create a new generation of automatic sorting machines was launched under the supervision of Japan's Ministry of Posts and Telecommunications. A year later, Toshiba (then Tokyo Shibaura Electric Co.) had a prototype ready that could recognize hand-printed digits. In 1967, Toshiba unveiled a sorter with optical character recognition (OCR). The machine scanned each envelope with a Visicon digital camera and sent the resulting image to the recognition unit, which discarded everything except the digits of the postal code. Once the handwritten digits were recognized, the letter was sent to the correct sorting tray.

Toshiba's mail-sorting machine, with AI that was advanced for 1967. Source: Toshiba Science Museum

While working with these first machines, the engineers collected a database of 300,000 handwriting samples, which significantly improved the AI's accuracy. In today's terms, they gathered big data, because the machine itself was unable to learn. On July 1, 1968, an updated sorter, the Toshiba TR-4, went into service at Tokyo's central post office.

This work later helped engineers build the ASPET/71 computer, a system that recognized text from printed media at a rate of 2,000 characters per second. Today an OCR scanner or a smartphone OCR app hardly seems remarkable, but in 1971 the ASPET/71 looked like a tremendous breakthrough.

The ASPET/71 computer, which recognized typed text. Source: 電子情報通信学会 (IEICE)

During the second AI boom of the mid-1980s, Toshiba introduced an improved version of its AS-TRANSAC translator, whose AI could translate whole texts from Japanese into English and back while preserving their meaning. Without AI to choose the right word senses, machine translation would have produced an incoherent jumble of words.

In 1998, Toshiba put AI to work in EUROPA, a framework for building voice systems that recognize keywords in spoken queries and respond with synthesized speech. Together with EUROPA, the company introduced MINOS, a car navigation platform with voice command and address recognition.

Episode 7: AI in the 21st Century

AI truly made itself heard in 1997, when IBM's Deep Blue chess computer beat Garry Kasparov, although a year earlier the machine had lost to him. A human losing to a computer was not surprising in itself, but the media hype gave the event extra significance.

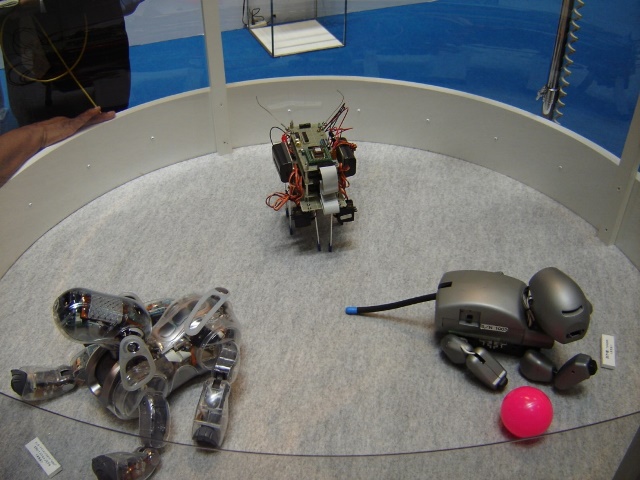

The release of Sony's Aibo home robot in 1999 raised false hopes that the era of talking robots and all-powerful artificial intelligence was about to arrive, but once again the revolution did not happen: seven years later Sony shut the project down, reviving it only in 2017.

Aibo prototypes. Besides robot dogs, Sony also produced astronauts and lion cubs under this brand, albeit in much smaller numbers. Source: Alex / Flickr: DSC00193

A new AI boom came in the 2010s, when computers and mobile devices became powerful enough to run AI in consumer devices and apps. For AI, the stars had finally aligned: total digitalization produced the huge datasets needed to analyze and train AI, and outdated neural network training algorithms gave way to far more efficient ones.

In 2017, the IBM Watson Explorer AI system successfully replaced 34 employees of the Japanese insurance company Fukoku Mutual Insurance who had analyzed customers' health to create individual insurance plans. The AI processes thousands of records with ease, calculating the insurance program that is most profitable for both parties.

The Google Brain algorithm running on YouTube fills the sidebar with recommended videos, picking the ones each user is most likely to find interesting. Think of how many interesting videos you have discovered thanks to YouTube's recommendations alone.

The arrival of AI on online marketplaces gave e-commerce a powerful boost: Amazon's recommendation AI generates 35% of total sales by analyzing the products a visitor has viewed and offering the ones they are most likely to buy.

Artificial intelligence is already used in many creative mobile apps, from image stylization (Prisma) to face masks (Snapchat), as well as in all recommender systems, speech recognition systems, most monitoring systems, smart homes, household appliances, robots of every kind, and so on.

In the next decade, the world will see affordable mass-produced self-driving cars that rely on AI and machine learning. Last year, Toshiba introduced the new Visconti 4 image recognition processor, whose first version appeared in the early 1990s. The AI in this chip can process huge amounts of visual data on the fly, accurately identifying lane markings, traffic lights, pedestrians, other cars, and obstacles in camera video.

Episode 8: Machines Learn to Talk

By talking we mean not reciting pre-recorded words, as the IBM 704 did in front of an astonished Arthur C. Clarke, but holding a conversation with a person and responding sensibly to what they say. The empirical test proposed by the English mathematician Alan Turing in 1950 was meant to answer a question that occupied him at the time: can a machine think like a human? In the test, a judge converses blindly with two participants, a human and a machine. According to Turing, a thinking computer should be able to mislead the judge during the conversation by convincingly passing itself off as a person.

The test found practical application in the Loebner Prize competition, held since 1990. Contestants enter chatbots that pose as human interlocutors. Besides the text-based contest, the Loebner Prize also includes an audiovisual category; an AI victory there would automatically end the competition for good, marking the start of an era of complex AI. So far, however, there has been no progress in this category, and none is likely in the near future.

Here is a vivid example of the difference between human intelligence and what passes for AI at the Loebner Prize (a translated excerpt from the 2005 competition transcripts):

Dialogue between the judge and a human

Judge: Przhshchevvich parzhishshch krvahoch, right?

Participant: Is it Polish?

Judge: What?

Participant: What you just wrote.

Judge: This is a scenario, isn't it?

Participant: I'm a little concerned about your typing skills.

And now a dialogue between the judge and Jabberwacky, the chatbot that won that year

Judge: OK, that's enough. I will talk to the other entity.

Participant: Ok, it was nice to use your comb.

Judge: What are you talking about? How did you use my comb?

Participant: Good.

Judge: Ok?

Participant: Yes

Judge: Very meditative conversation.

Participant: Moreover, I donated it to charity.

Judge: What? What did you donate to charity? This conversation?

Participant: Um ... 1 million dollars?

Judge: What kind of charity?

Participant: Are we talking about The Simpsons or Unreal?

Judge: Honestly, I don't understand a damn thing we are discussing here.

Since 2005, chatbots have taken a big step forward. In 2014, at a Turing test event held at the Royal Society in London, the St. Petersburg bot Eugene Goostman, created by Vladimir Veselov, fooled the judges in 33% of cases; under the rules of that event, clearing the 30% mark counted as passing the test. In other words, from 1990 to 2014, despite the Loebner Prize rewarding the best chatbots every year, humanity's test for artificial intelligence formally remained unpassed.

Still, chatbots remain far from the kind of artificial intelligence science fiction writers dream of: a machine cannot yet sustain a conversation with replies that precisely fit the context. Human speech is incredibly complex and varied and therefore still resists deep computer analysis. AI understands individual words and simple phrases but still does not grasp deeper meanings, and even when the words are in the right order, a computer's replies can seem absurd. Even the most popular voice assistants, Siri, Cortana, and Yandex's Alice, sometimes give very clever answers, but whenever they get confused they end the dialogue by falling back to a search engine.

Artificial intelligence is powerful but still far from perfect. Prediction and recognition systems make mistakes, although far less often than humans do. While society debates the ethics of AI solutions, AI has reached a new height that seemed unattainable just a couple of years ago: AlphaGo beat a human at Go, the last strategy game in which the supremacy of human intelligence and its abstract thinking was considered unshakable.

Source: https://habr.com/ru/post/424007/
