Create a simple neural network

Translation Making a Simple Neural Network

What will we do? We will try to create a simple, very small neural network, explain how it works, and teach it to distinguish something. We will not go into the history or the mathematical jungle (that information is very easy to find). Instead, we will try to explain the task to you and to ourselves with pictures and code (no guarantee that we succeed).

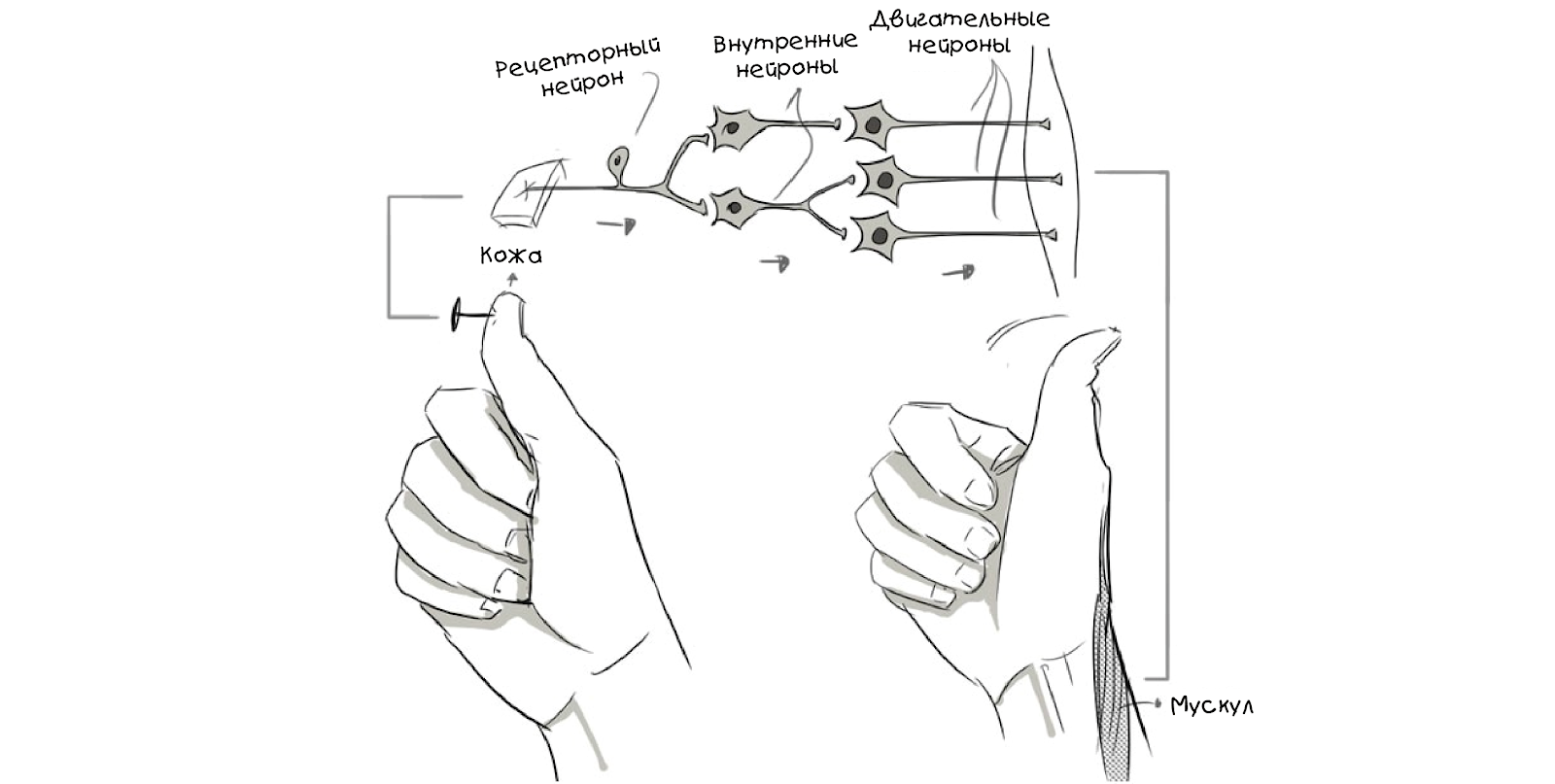

Many of the terms in neural networks are related to biology, so let's start from the beginning:


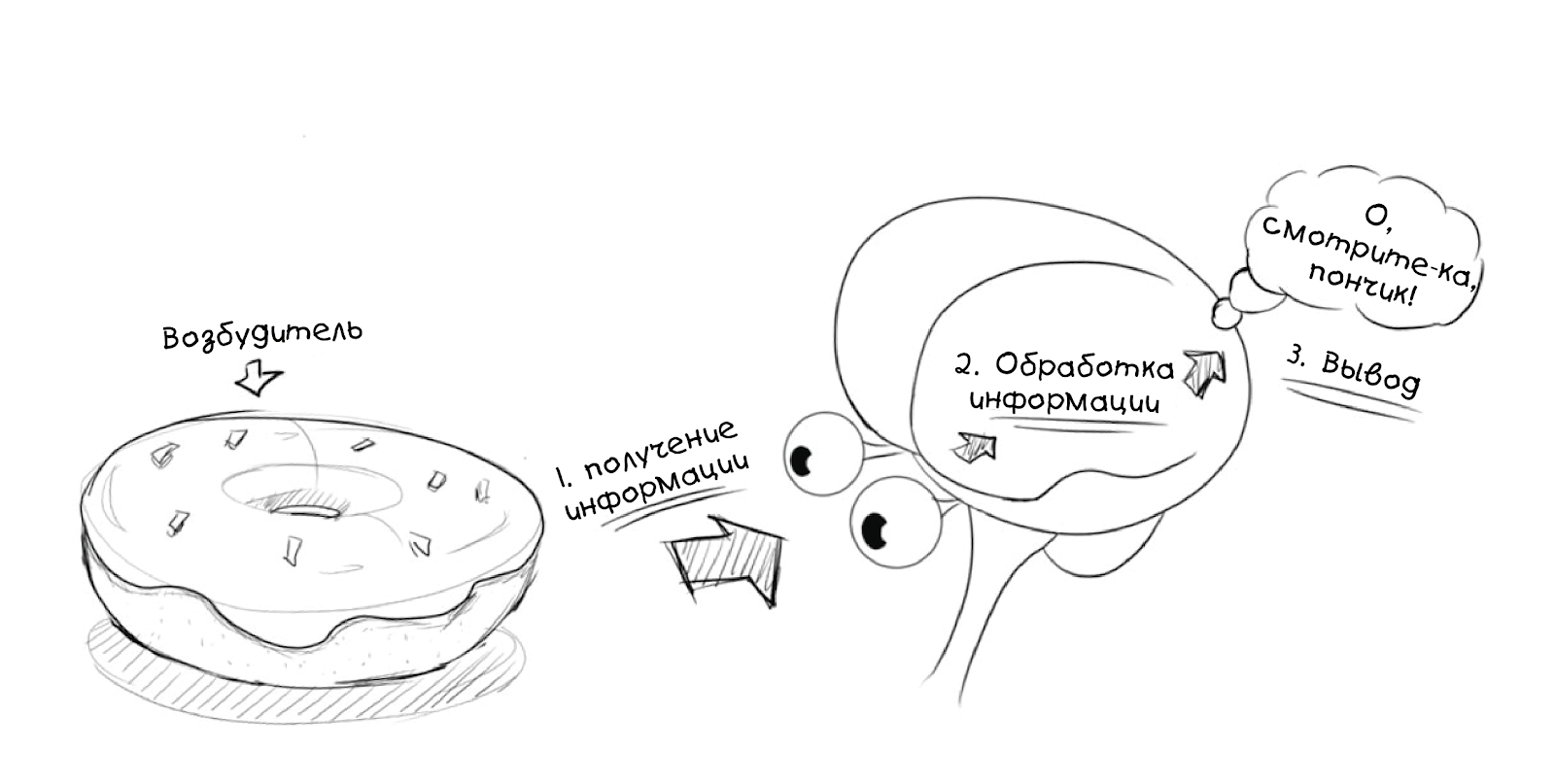

The brain is a complicated thing, but it can be divided into several main parts and operations:

The stimulus may also be internal (for example, an image or an idea):

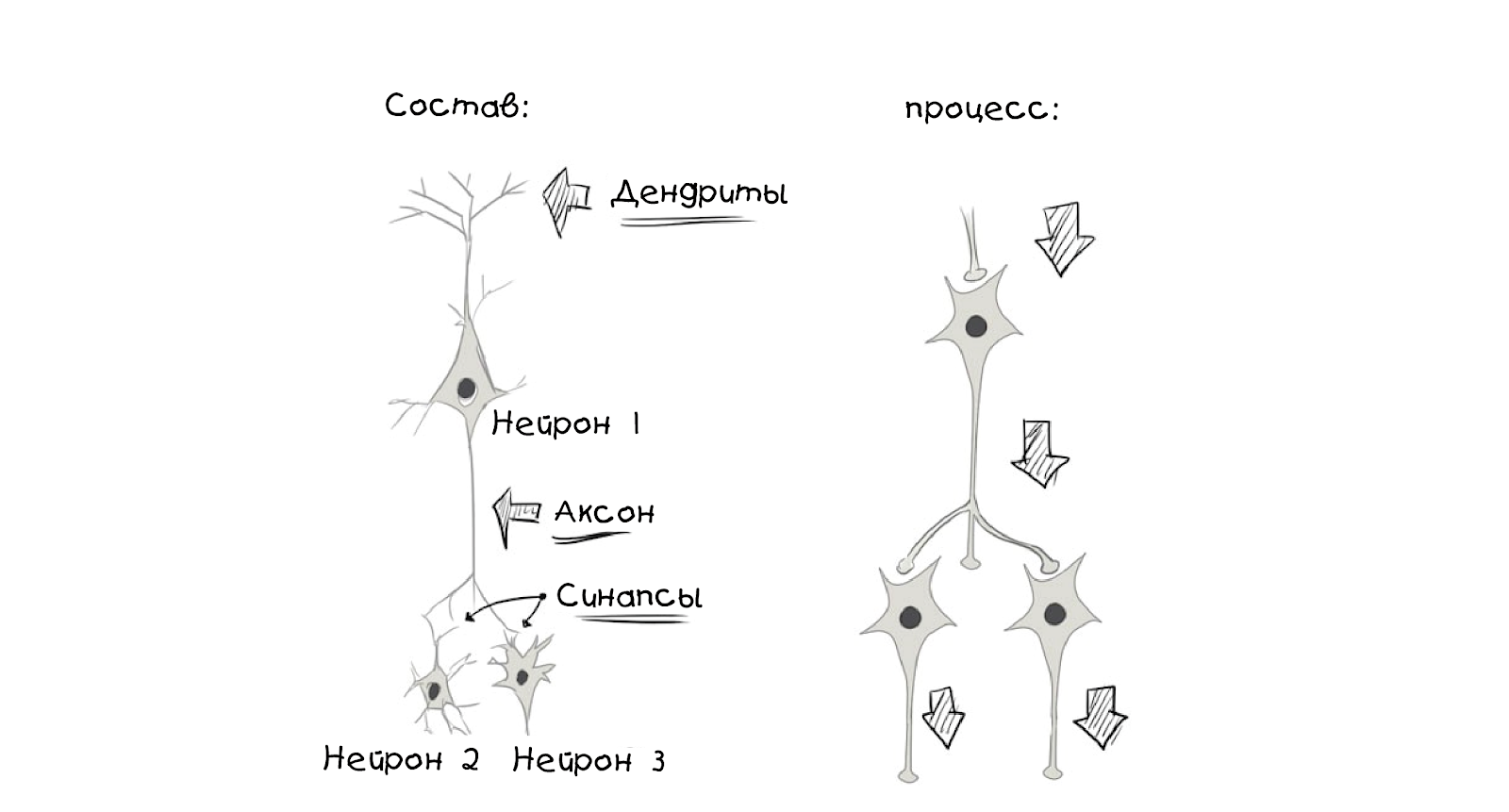

And now let's take a look at the basic and simplified parts of the brain:

The brain is, in general, similar to a cable network.

The neuron is the basic unit of computation in the brain. It receives and processes the chemical signals of other neurons and, depending on a number of factors, either does nothing or generates an electrical impulse (an action potential), which then sends signals through the synapses to neighboring neurons:

Dreams, memories, self-regulated movements, reflexes, and in general everything that you think or do happens through this process: millions, or even billions, of neurons work at different levels and form connections that create various parallel subsystems and constitute a biological neural network.

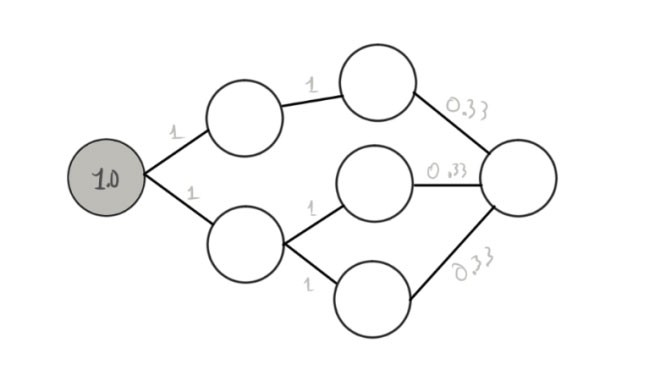

Of course, these are all simplifications and generalizations, but thanks to them we can describe a simple neural network:

And describe it formally using a graph:

This requires some explanation. Circles are neurons, and lines are the connections between them. To keep things simple at this stage, the connections represent the direct flow of information from left to right. The first neuron is currently active and highlighted in gray. We also assigned a number to it (1 if it fires, 0 if not). The numbers between neurons show the weight of the connection.

The graphs above show the network at one moment in time; for a more accurate picture, you need to divide it into time segments:

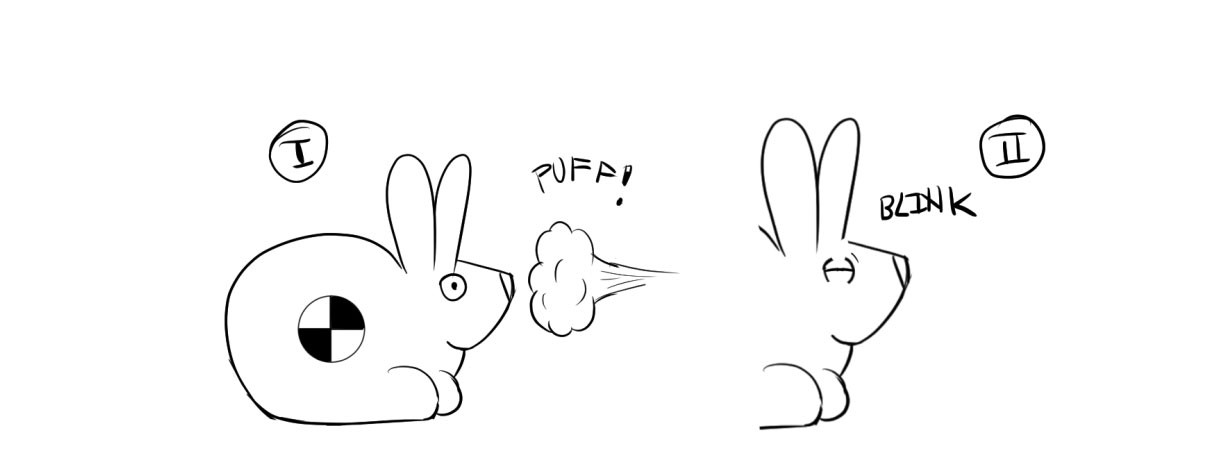

To create your own neural network, you need to understand how weights affect neurons and how neurons are trained. As an example, take a rabbit (a test rabbit) and put it in the conditions of a classic experiment.

When a harmless puff of air is directed at them, rabbits, like people, blink:

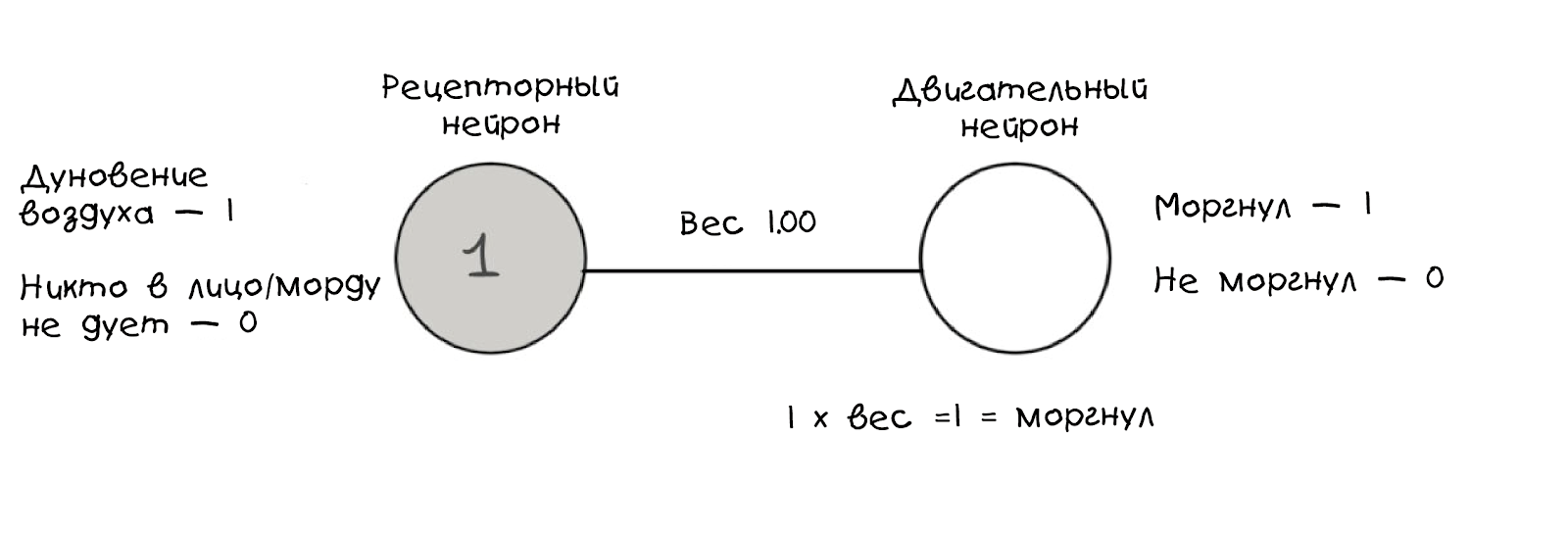

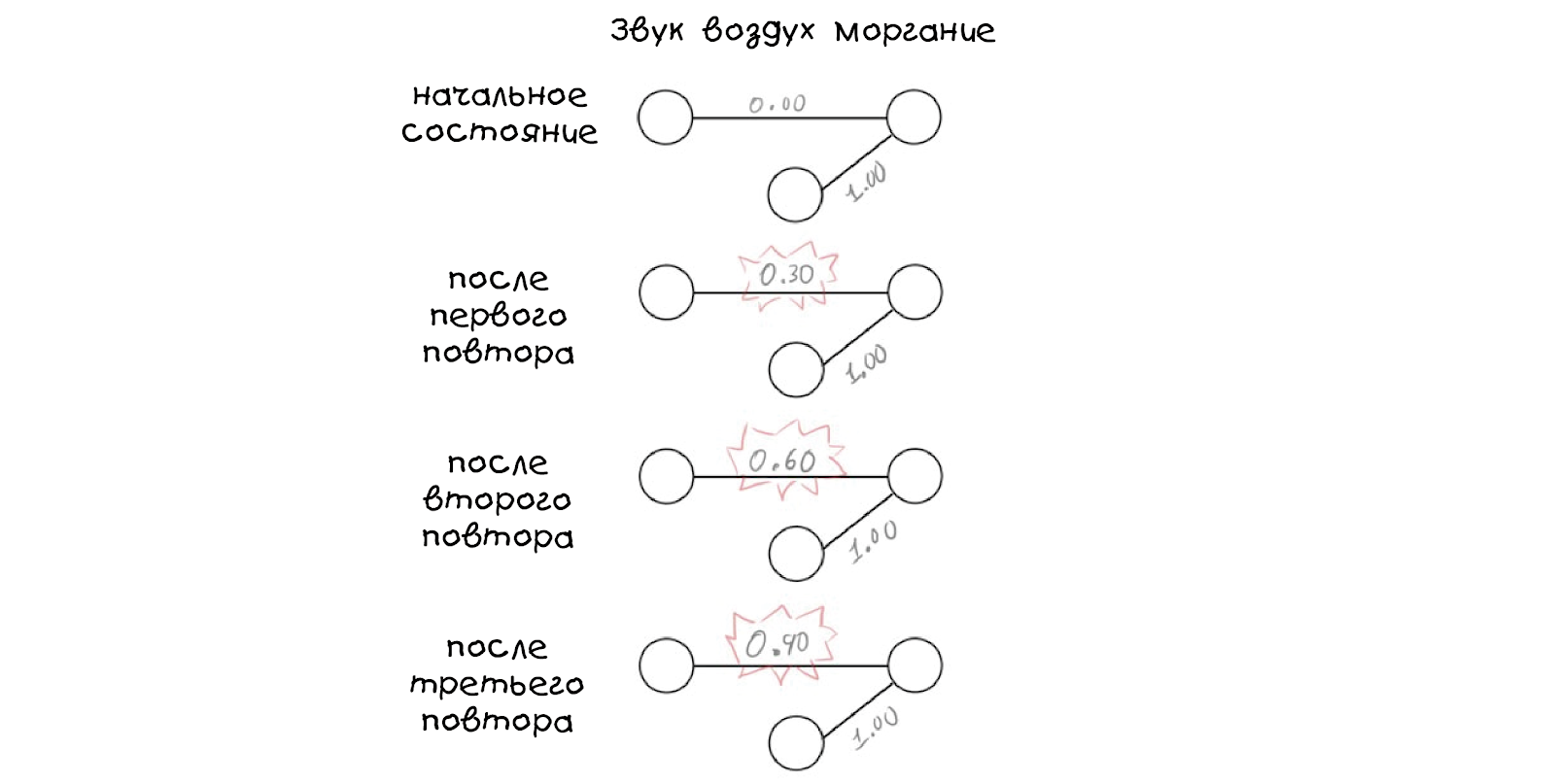

This behavior model can be drawn with graphs:

As in the previous diagram, these graphs show only the moment when the rabbit feels the puff of air, so we encode the puff as a Boolean value. In addition, we calculate whether the second neuron fires, based on the weight value. If the weight is 1, the sensory neuron fires and the rabbit blinks; if the weight is less than 1, it does not blink, because the threshold of the second neuron is 1.
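The threshold rule described above can be sketched in a few lines of JavaScript. This is only an illustration: the names `BLINK_THRESHOLD` and `blinks` are hypothetical and do not come from the original article.

```javascript
// Threshold of the second (blink) neuron, taken from the text above.
const BLINK_THRESHOLD = 1;

// Hypothetical helper: true when the weighted stimulus reaches the threshold.
// stimulus is 1 (puff of air present) or 0 (absent).
function blinks(stimulus, weight) {
  return stimulus * weight >= BLINK_THRESHOLD;
}

console.log(blinks(1, 1));   // puff of air, weight 1: the rabbit blinks
console.log(blinks(1, 0.5)); // puff of air, weight below 1: no blink
console.log(blinks(0, 1));   // no puff of air: no blink
```

The stimulus is multiplied by the weight, and the result is compared with the threshold; nothing else happens at this stage.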

We introduce another element, a harmless sound signal:

We can simulate a rabbit's interest like this:

The main difference is that now the weight is zero, so we do not get a blinking rabbit, at least not yet. Now we teach the rabbit to blink on command by mixing the stimuli (the beep and the puff of air):

It is important that these events take place in different time periods. In the graphs it will look like this:

By itself, the sound does nothing, but the air flow still makes the rabbit blink, and we show this through weights multiplied by stimuli (red).

In simplified terms, learning complex behavior can be expressed as a gradual change in the weights between connected neurons over time.

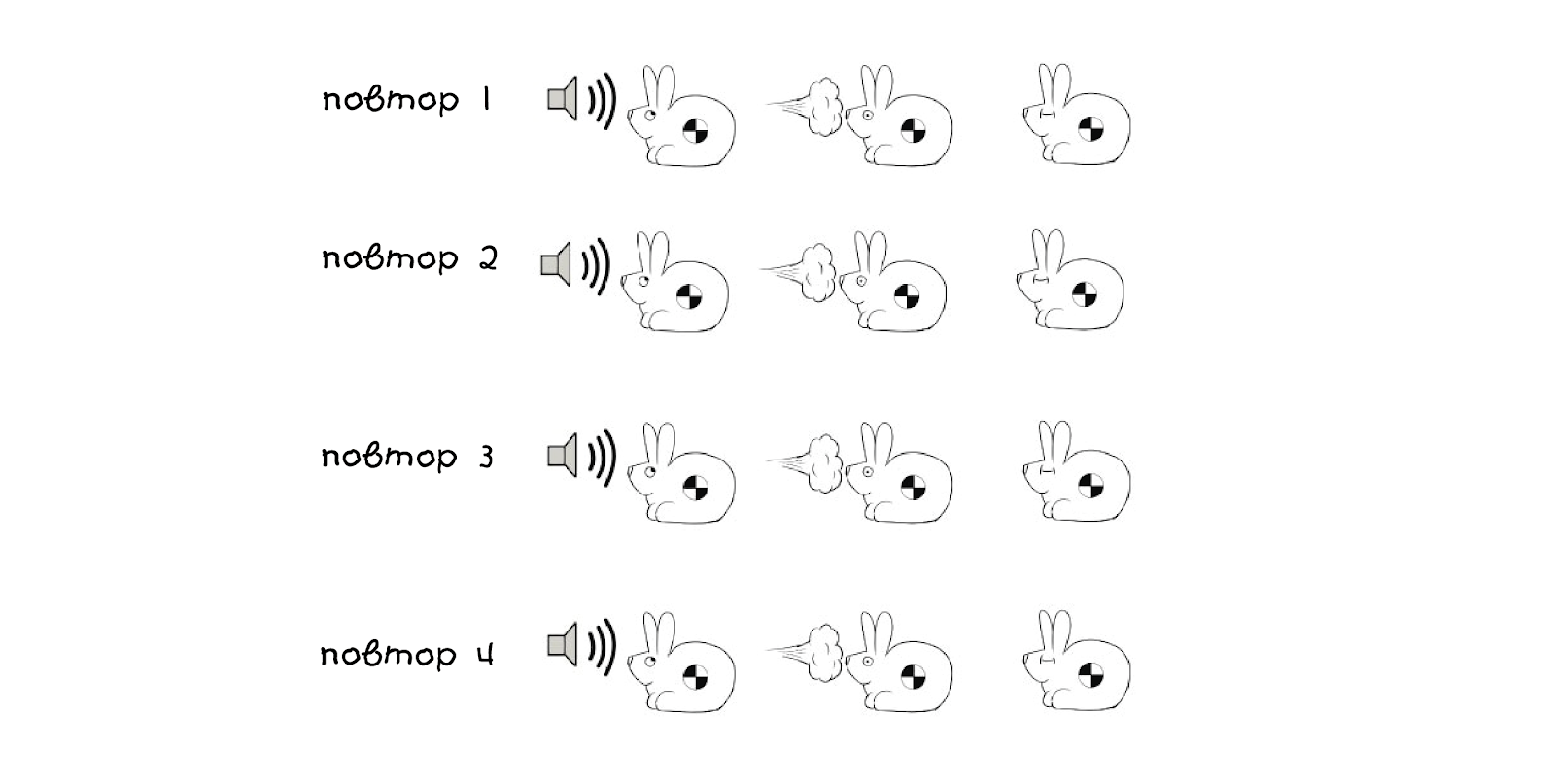

To train the rabbit, repeat the steps:

For the first three attempts, the schemes will look like this:

Please note that the weight for the sound stimulus grows after each repetition (highlighted in red). The increment is arbitrary: we chose 0.30, but the number can be anything, even negative. After the third repetition you will not notice any change in the rabbit's behavior, but after the fourth repetition something amazing happens: the behavior changes.

We removed the puff of air, but the rabbit still blinks when it hears the beep! Our last diagram explains this behavior:

We trained the rabbit to respond to the sound with a blink.

In a real experiment of this kind, it may take more than 60 repetitions to achieve a result.
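The rabbit experiment above can be replayed in code. The sketch below assumes the values from the text (a threshold of 1, a weight increment of 0.30 per repetition) and a fixed weight of 1 for the puff of air; all names (`conditionRabbit`, `rabbitBlinks`, and so on) are hypothetical and chosen for illustration only.

```javascript
const blinkThreshold = 1; // firing limit of the blink neuron
const weightStep = 0.30;  // arbitrary increment chosen in the text
const airWeight = 1;      // assumption: the puff of air always triggers a blink

// Run paired sound + air repetitions; return the sound weight after each one.
function conditionRabbit(repetitions) {
  let soundWeight = 0;
  const history = [];
  for (let i = 0; i < repetitions; i++) {
    soundWeight += weightStep; // sound and air were presented together
    history.push(soundWeight);
  }
  return history;
}

// Does the rabbit blink for the given stimuli (1 = present, 0 = absent)?
function rabbitBlinks(sound, air, soundWeight) {
  return sound * soundWeight + air * airWeight >= blinkThreshold;
}

const soundWeights = conditionRabbit(4);
soundWeights.forEach(function (weight, index) {
  console.log("after repetition " + (index + 1) +
    ": sound alone makes the rabbit blink? " + rabbitBlinks(1, 0, weight));
});
```

With these numbers the sound alone stays below the threshold for the first three repetitions and crosses it on the fourth, which matches the change in behavior described above.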

Now we leave the biological world of the brain and rabbits and try to adapt everything we have learned to create an artificial neural network. To begin, let's try a simple task.

Let's say we have a four-button machine that gives out food when you press the correct button (or energy, if you are a robot). The task is to find out which button gives a reward:

We can draw (schematically) what the button does when pressed as follows:

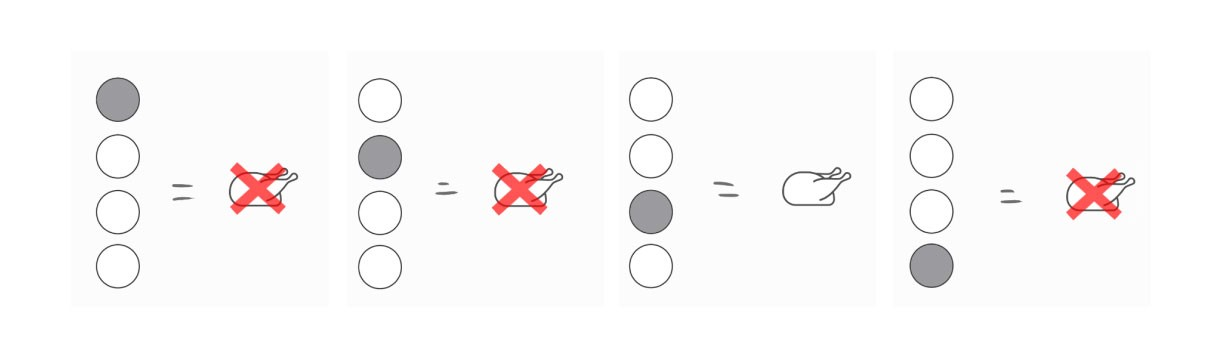

It is better to solve this problem entirely, so let's look at all possible results, including the correct one:

Click on the 3rd button to get your dinner.

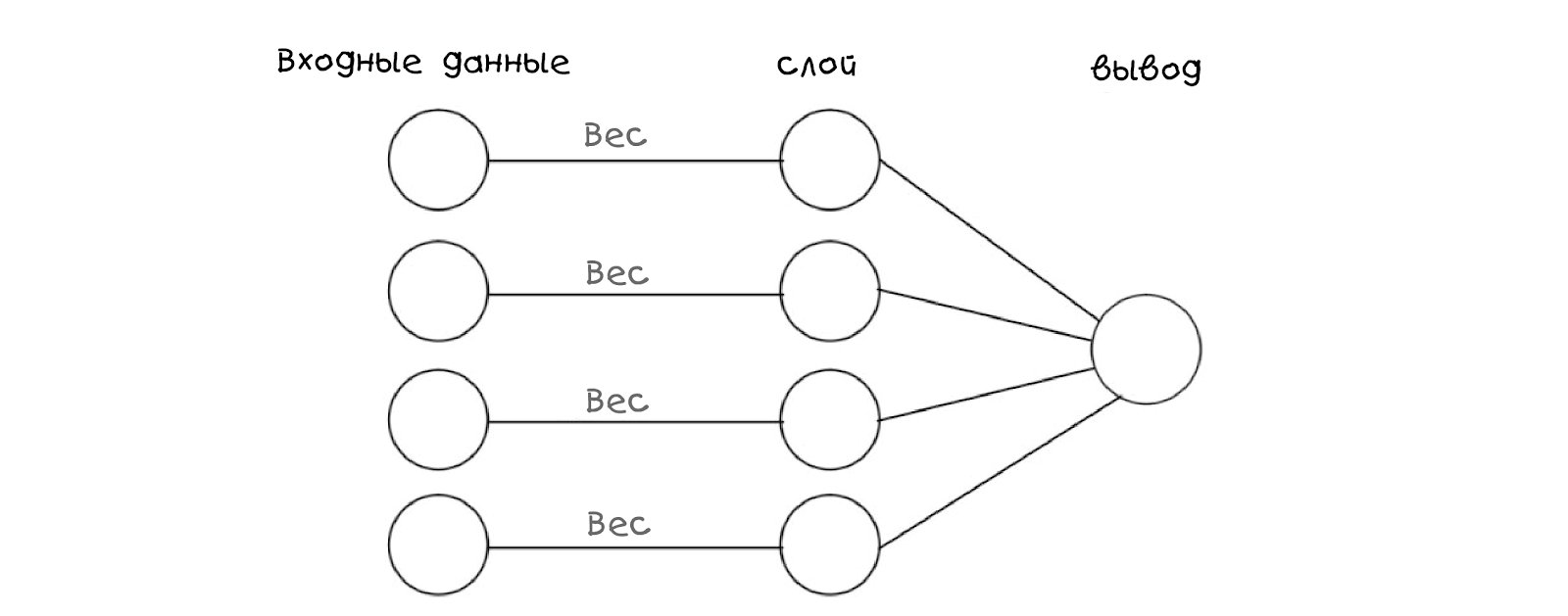

To reproduce a neural network in code, we first need to make a model or a graph with which to associate the network. Here is one graph that suits the task and also reflects its biological analogue well:

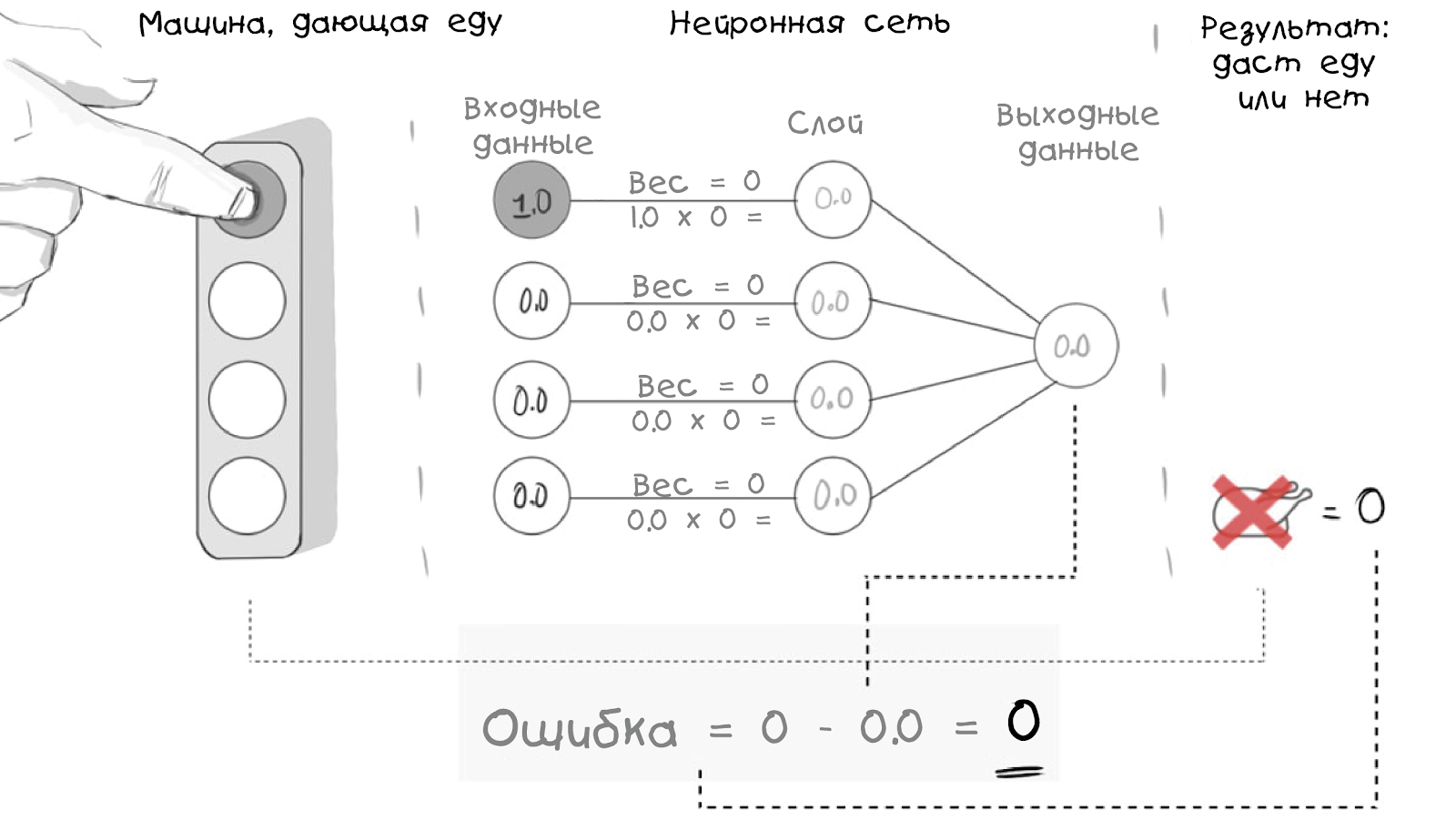

This neural network simply receives incoming information, in this case the perception of which button is pressed. Next, the network multiplies the incoming information by the weights and draws a conclusion by summing over the layer. It sounds a bit confusing, but let's see how the button is represented in our model:

Note that all weights are 0, so the neural network, like a baby, is completely empty, but completely interconnected.

Thus, we associate an external event with the input layer of the neural network and calculate the value at its output. It may or may not coincide with reality, but for the time being we will ignore this and begin to describe the task in a way understandable to the computer. Let's start by entering the weights (we will use JavaScript):

```javascript
var inputs = [0,1,0,0];
var weights = [0,0,0,0];
```

The next step is to create a function that collects the input values and weights and calculates the output value:

```javascript
function evaluateNeuralNetwork(inputVector, weightVector){
  var result = 0;
  inputVector.forEach(function(inputValue, weightIndex) {
    // multiply each input by its weight and add it to the layer sum
    var layerValue = inputValue*weightVector[weightIndex];
    result += layerValue;
  });
  // toFixed returns a string with two decimal places
  return (result.toFixed(2));
}
```

As expected, if we run this code, we will get the same result as in our model or graph:

```javascript
evaluateNeuralNetwork(inputs, weights); // 0.00
```

A live example: Neural Net 001.

The next step in improving our neural network is a way to check whether its own output, or the resulting values, match the actual situation. Let's first encode this particular reality into a variable:

To detect inconsistencies (and how large they are), we add an error function:

Error = Reality - Neural Net Output

With it, we can evaluate the work of our neural network:

But more importantly - how about situations where reality gives a positive result?
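Both situations can be sketched with the error formula given above. The helper name `errorAgainstReality` and the variable `untrainedOutput` are hypothetical; the only assumption is that an untrained network with all weights at 0 always outputs 0.

```javascript
// Hypothetical helper following the formula: Error = Reality - Neural Net Output.
function errorAgainstReality(reality, netOutput) {
  return reality - netOutput;
}

// With all weights at 0, the untrained network outputs 0 for any button.
const untrainedOutput = 0;

// Wrong button, no food: reality is 0, so the network happens to be right.
console.log("Error when reality is 0: " + errorAgainstReality(0, untrainedOutput));

// Correct button, food: reality is 1, so the network is off by the full amount.
console.log("Error when reality is 1: " + errorAgainstReality(1, untrainedOutput));
```

The first case gives an error of 0 and the second an error of 1, so the untrained network is only "right" when nothing happens.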

Now we know that our model of the neural network is not working (and we know by how much). Great! This is great because now we can use the error function to steer the learning. But all this makes sense only if we redefine the error function as follows:

Error = **Desired Output** - Neural Net Output

It is a subtle but important difference, which implicitly shows that we will use previously obtained results for comparison with future actions (and for learning, as we will see later). The same thing exists in real life, which is full of repeating patterns, so it can become an evolutionary strategy (well, in most cases).

Next, we will add a new variable to our sample code:

```javascript
var input = [0,0,1,0];
var weights = [0,0,0,0];
var desiredResult = 1;
```

And a new function:

```javascript
function evaluateNeuralNetError(desired, actual) {
  return (desired - actual);
}
// After evaluating both the Network and the Error we would get:
// "Neural Net output: 0.00 Error: 1"
```

A live example: Neural Net 002.

Let's take stock. We started with a task, built a simple model of it in the form of a biological neural network, and got a way to measure its performance against reality or the desired result. Now we need to find a way to correct the inconsistencies, a process that, for computers as for people, can be regarded as learning.

How to train a neural network?

The basis of learning in both biological and artificial neural networks is repetition and learning algorithms, so we will work with them separately. Let's start with learning algorithms.

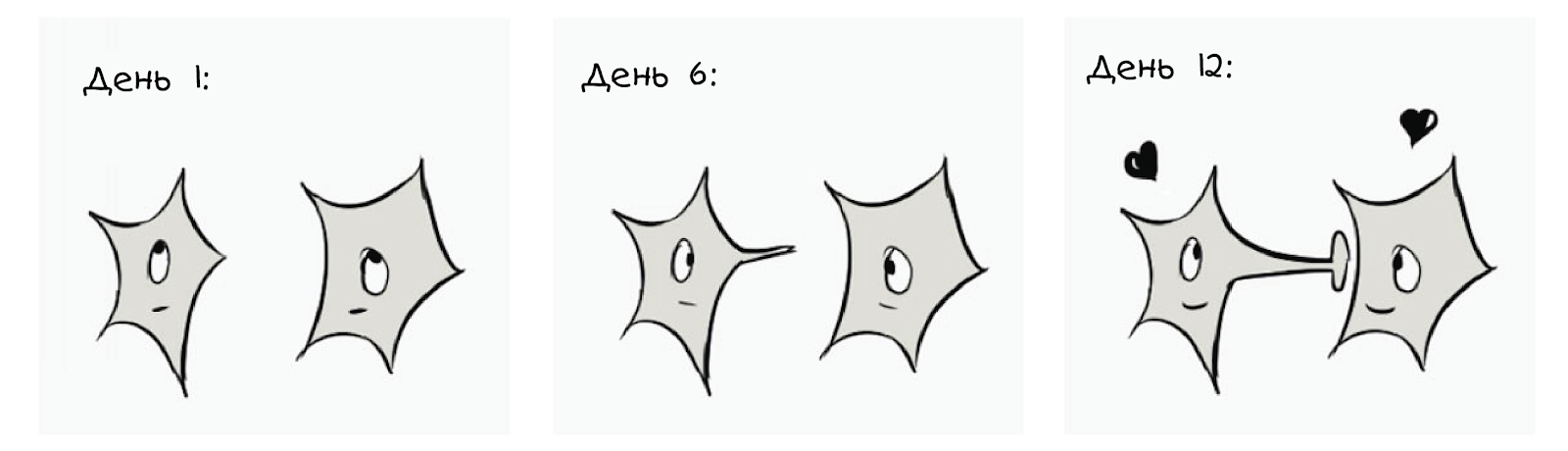

In nature, learning algorithms are understood as changes in the physical or chemical characteristics of neurons as a result of experience:

A dramatic illustration of how two neurons change over time. In code, and in our model, a "learning algorithm" means that we will simply change something over time to make our lives easier. So let's add a variable to denote the degree of life ease:

```javascript
var learningRate = 0.20;
```

And what will it change?

It will change the weights (just like in the rabbit!), especially the weight of the output we want:

How to code such an algorithm is your choice. For simplicity, I add the learning rate to the weight. Here it is as a function:

```javascript
function learn(inputVector, weightVector) {
  weightVector.forEach(function(weight, index, weights) {
    // only the weight of the active input is increased
    if (inputVector[index] > 0) {
      weights[index] = weight + learningRate;
    }
  });
}
```

When used, this learning function simply adds our learning rate to the weight of the active neuron. Before and after one learning cycle (or repetition), the results will be as follows:

```javascript
// Original weight vector: [0,0,0,0]
// Neural Net output: 0.00 Error: 1
learn(input, weights);
// New weight vector: [0,0,0.20,0]
// Neural Net output: 0.20 Error: 0.8
```

A live example: Neural Net 003.

Okay, now that we are moving in the right direction, the last piece of this puzzle is the introduction of repetitions.

It is not that difficult: in nature we just do the same thing over and over again, and in code we just specify the number of repetitions:

```javascript
var trials = 6;
```

And the implementation of the repetitions in our training function will look like this:

```javascript
function train(trials) {
  for (var i = 0; i < trials; i++) {
    var neuralNetResult = evaluateNeuralNetwork(input, weights);
    learn(input, weights);
  }
}
```

And here is our final report:

```
Neural Net output: 0.00 Error: 1.00 Weight Vector: [0,0,0,0]
Neural Net output: 0.20 Error: 0.80 Weight Vector: [0,0,0.2,0]
Neural Net output: 0.40 Error: 0.60 Weight Vector: [0,0,0.4,0]
Neural Net output: 0.60 Error: 0.40 Weight Vector: [0,0,0.6,0]
Neural Net output: 0.80 Error: 0.20 Weight Vector: [0,0,0.8,0]
Neural Net output: 1.00 Error: 0.00 Weight Vector: [0,0,1,0]
// Chicken Dinner !
```

A live example: Neural Net 004.

Now we have a weight vector, which will give only one result (chicken for dinner), if the input vector corresponds to reality (pressing the third button).
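One way to check this claim is to feed every possible button press through the trained weights. The sketch below is an illustration only: `trainedWeights`, `weightedSum`, and `buttonPresses` are hypothetical names, and the weight vector is copied from the last line of the final report.

```javascript
// Weight vector after training, as shown in the final report.
const trainedWeights = [0, 0, 1, 0];

// Weighted sum of one input vector (same idea as evaluateNeuralNetwork,
// but returning a number instead of a formatted string).
function weightedSum(inputVector, weightVector) {
  return inputVector.reduce(function (sum, value, index) {
    return sum + value * weightVector[index];
  }, 0);
}

// One input vector per button, 1 marking the pressed button.
const buttonPresses = [
  [1, 0, 0, 0],
  [0, 1, 0, 0],
  [0, 0, 1, 0],
  [0, 0, 0, 1]
];

const outputs = buttonPresses.map(function (press) {
  return weightedSum(press, trainedWeights);
});
console.log(outputs); // only the third entry is 1
```

Only the third button produces an output of 1; every other button still gives 0.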

So what's the cool thing we just did?

In this particular case, our neural network (after training) can recognize the input data and say what will lead to the desired result (we will still need to program specific situations):

In addition, it is a scalable model, a toy and a tool for our learning. We were able to learn something new about machine learning, neural networks and artificial intelligence.

Caveats:

- There is no mechanism for storing the learned weights, so this neural network will forget everything it knows. When the code is refreshed or re-run, it needs at least six successful repetitions to be fully trained. If a person or a machine presses the buttons in random order, this will take some time.

- For learning important things, biological networks have a learning rate of 1, so a single successful repetition is enough.

- There is a learning algorithm that closely resembles biological neurons, and it has a catchy name: the Widrow-Hoff rule, or Widrow-Hoff learning.

- The thresholds of neurons (1 in our example) and the effects of overtraining (with a large number of repetitions the result will exceed 1) are not taken into account, but they are very important in nature and are responsible for large and complex blocks of behavioral responses. The same goes for negative weights.
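For comparison, here is a minimal sketch of the Widrow-Hoff (delta) rule in its commonly stated form, where each weight changes by the learning rate times the error times the input. This is not the article's code: the function name `learnDelta` and the choice of 50 repetitions are assumptions made for illustration.

```javascript
// Delta-rule update: weight += rate * (desired - output) * input.
// Returns a new weight vector and leaves the old one untouched.
function learnDelta(inputVector, weightVector, desired, rate) {
  const output = inputVector.reduce(function (sum, value, index) {
    return sum + value * weightVector[index];
  }, 0);
  const error = desired - output;
  return weightVector.map(function (weight, index) {
    return weight + rate * error * inputVector[index];
  });
}

let deltaWeights = [0, 0, 0, 0];
for (let trial = 0; trial < 50; trial++) {
  // third button pressed, desired output 1, learning rate 0.20
  deltaWeights = learnDelta([0, 0, 1, 0], deltaWeights, 1, 0.20);
}
console.log(deltaWeights);
```

Because each step is scaled by the remaining error, the third weight approaches 1 and the steps shrink as it gets there, so extra repetitions do not push the output past 1 the way a fixed increment does.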

Notes and references for further reading

I tried to avoid mathematics and strict terms, but if you are interested: we built a perceptron, which is defined as an algorithm for supervised learning (learning with a teacher) of binary classifiers. Heavy stuff.

The biological structure of the brain is not a simple topic, partly because of imprecision, partly because of its complexity. It is best to start with Neuroscience (Purves) and Cognitive Neuroscience (Gazzaniga). I modified and adapted the rabbit example from Gateway to Memory (Gluck), which is also an excellent guide to the world of graphs.

Another excellent resource is An Introduction to Neural Networks (Gurney), suitable for all your AI-related needs.

And now in Python! Thanks to Ilya Undshmidt for providing the version in Python:

```python
inputs = [0, 1, 0, 0]
weights = [0, 0, 0, 0]
desired_result = 1
learning_rate = 0.2
trials = 6


def evaluate_neural_network(input_array, weight_array):
    result = 0
    for i in range(len(input_array)):
        layer_value = input_array[i] * weight_array[i]
        result += layer_value
    print("evaluate_neural_network: " + str(result))
    print("weights: " + str(weights))
    return result


def evaluate_error(desired, actual):
    error = desired - actual
    print("evaluate_error: " + str(error))
    return error


def learn(input_array, weight_array):
    print("learning...")
    for i in range(len(input_array)):
        if input_array[i] > 0:
            weight_array[i] += learning_rate


def train(trials):
    for i in range(trials):
        neural_net_result = evaluate_neural_network(inputs, weights)
        learn(inputs, weights)


train(trials)
```

And now in Go! Thanks to Kieran Maher for this version.

```go
package main

import (
	"fmt"
	"math"
)

func main() {
	fmt.Println("Creating inputs and weights ...")
	inputs := []float64{0.00, 0.00, 1.00, 0.00}
	weights := []float64{0.00, 0.00, 0.00, 0.00}
	desired := 1.00
	learningRate := 0.20
	trials := 6
	train(trials, inputs, weights, desired, learningRate)
}

func train(trials int, inputs []float64, weights []float64, desired float64, learningRate float64) {
	for i := 1; i < trials; i++ {
		weights = learn(inputs, weights, learningRate)
		output := evaluate(inputs, weights)
		errorResult := evaluateError(desired, output)
		fmt.Print("Output: ")
		fmt.Print(math.Round(output*100) / 100)
		fmt.Print("\nError: ")
		fmt.Print(math.Round(errorResult*100) / 100)
		fmt.Print("\n\n")
	}
}

func learn(inputVector []float64, weightVector []float64, learningRate float64) []float64 {
	for index, inputValue := range inputVector {
		if inputValue > 0.00 {
			weightVector[index] = weightVector[index] + learningRate
		}
	}
	return weightVector
}

func evaluate(inputVector []float64, weightVector []float64) float64 {
	result := 0.00
	for index, inputValue := range inputVector {
		layerValue := inputValue * weightVector[index]
		result = result + layerValue
	}
	return result
}

func evaluateError(desired float64, actual float64) float64 {
	return desired - actual
}
```

Source: https://habr.com/ru/post/423647/
