Rule-based neural networks

(This article does not explain the basic concepts of neural network theory. Readers who are not familiar with them are advised to review them first, to avoid confusion later on.)

The purpose of this text is to introduce certain types of neural networks that are covered in the Russian-speaking community rarely, if at all.

- Rule-based neural networks (hereinafter RBNN) are neural networks built on a set of basic rules (such as ordinary implications). Roughly speaking, this gives us a ready-made expert system, except that it can now be trained.

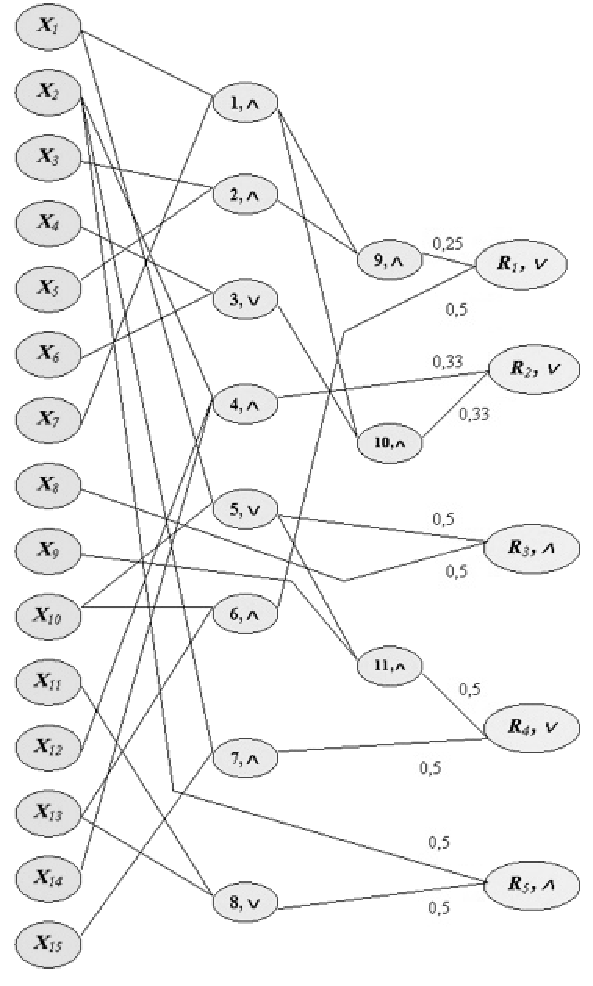

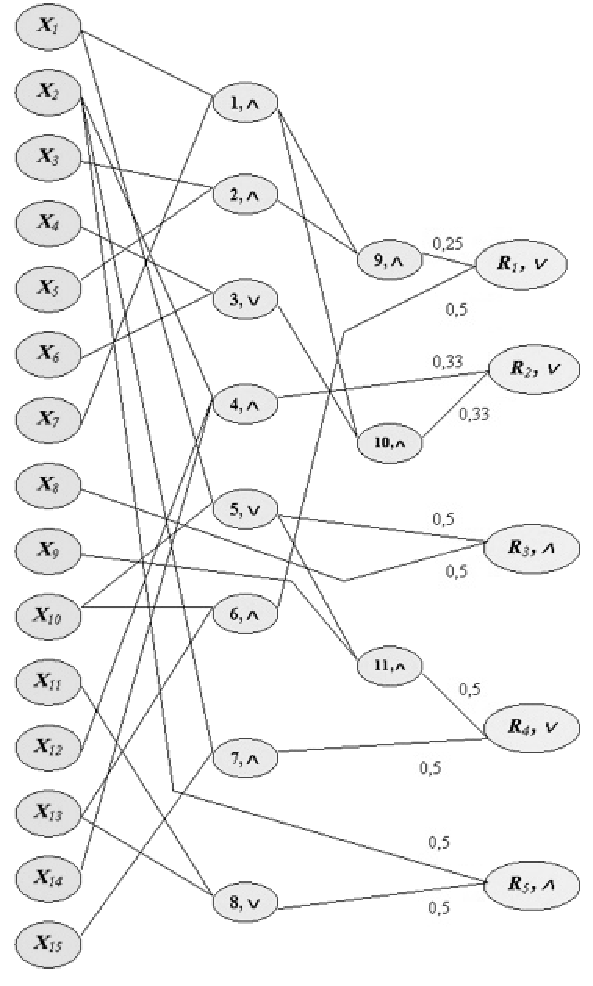

- Logical neural networks could be classified as an entirely separate type, but I propose treating them as one variety of RBNN. The concept of logical neural networks was first described by A. B. Barsky in the book “Logical Neural Networks”.

The idea of logical neural networks is to assign each hidden-layer neuron one of two logical operations: conjunction or disjunction.

Illustration from the book “Logical Neural Networks”, p. 241

Why should this type of neural network be counted among RBNN? Because the logical operations described above are, in effect, rules that express how the input parameters relate to one another.
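To make this concrete, here is a minimal Python sketch of a logical hidden layer. It assumes that inputs are truth degrees in [0, 1] and models conjunction as `min` and disjunction as `max`, a common fuzzy-logic convention that is not necessarily the formulation used in Barsky's book. The neuron names `A_and_B` and `B_or_C` are hypothetical. With crisp 0/1 inputs, `min` and `max` reduce to ordinary AND and OR.

```python
from typing import Callable, Dict, List, Tuple

Op = Callable[[List[float]], float]


def conjunction(values: List[float]) -> float:
    """AND: the neuron is only as active as its weakest input."""
    return min(values)


def disjunction(values: List[float]) -> float:
    """OR: the neuron is as active as its strongest input."""
    return max(values)


# Each hidden neuron: (logical operation, names of the inputs it combines).
# This layout is hypothetical, chosen only for illustration.
HIDDEN_LAYER: Dict[str, Tuple[Op, List[str]]] = {
    "A_and_B": (conjunction, ["A", "B"]),
    "B_or_C": (disjunction, ["B", "C"]),
}


def hidden_activations(inputs: Dict[str, float]) -> Dict[str, float]:
    """Apply each neuron's logical operation to its own inputs."""
    return {
        name: op([inputs[i] for i in args])
        for name, (op, args) in HIDDEN_LAYER.items()
    }


if __name__ == "__main__":
    print(hidden_activations({"A": 1.0, "B": 0.25, "C": 0.75}))
    # {'A_and_B': 0.25, 'B_or_C': 0.75}
```

Each hidden neuron thus reads directly as a rule ("A AND B", "B OR C"), which is what makes this type of network fit the RBNN family.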


The “basic rules” mentioned above can be given a simpler name: the knowledge base. Readers familiar with fuzzy control systems will already know this term.

The knowledge base is where all our rules are stored, as expressions of the form “IF X1 AND/OR X2 THEN Y”. Why mention fuzzy systems? Because building a fuzzy controller can be seen as the first stage of building an RBNN, and because fuzzy systems are what gave me the idea of turning ordinary neural networks into something similar.
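A knowledge base of this kind can be sketched as plain data plus a forward-chaining loop, the classic way a simple expert system applies its rules. The `Rule` class, the `infer` function and the facts X1, X2, X3, Y, Z below are hypothetical illustrations, and the facts are crisp (either known or not), unlike the graded values of a fuzzy controller.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Rule:
    """One knowledge-base entry: IF c1 AND/OR c2 ... THEN conclusion."""
    op: str                      # "AND" or "OR"
    conditions: Tuple[str, ...]  # facts in the IF part
    conclusion: str              # fact in the THEN part

    def fires(self, facts: Set[str]) -> bool:
        present = [c in facts for c in self.conditions]
        return all(present) if self.op == "AND" else any(present)


def infer(rules: List[Rule], facts: Set[str]) -> Set[str]:
    """Forward chaining: apply rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in known and rule.fires(known):
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical knowledge base, for illustration only.
KNOWLEDGE_BASE = [
    Rule("AND", ("X1", "X2"), "Y"),  # IF X1 AND X2 THEN Y
    Rule("OR", ("X3", "Y"), "Z"),    # IF X3 OR Y THEN Z
]

if __name__ == "__main__":
    print(sorted(infer(KNOWLEDGE_BASE, {"X1", "X2"})))  # ['X1', 'X2', 'Y', 'Z']
```

Such a system is strict: every rule either fires or it does not, and nothing in it can be learned.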

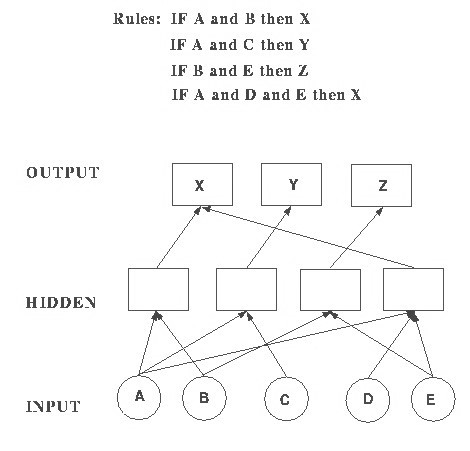

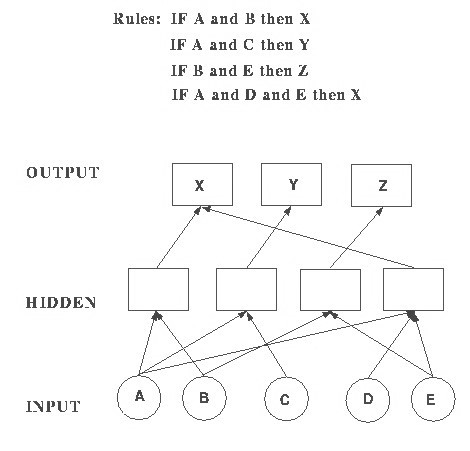

Suppose we have a knowledge base and a small expert system built on top of it. As a graph, it could be represented as follows:

Source: www.lund.irf.se/HeliosHome/rulebased.html

Now the question is: how do we turn this rigid system into a neural network that can learn?

The first and most important step is to assign a weight to each edge of this structure. Each weight reflects the probability that a given element is related to a group of other elements (for example, that input parameter A is related to the first hidden-layer neuron, i.e. belongs to the AB group) or to a response X, Y, Z, and so on.
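One possible reading of these weights is sketched below in Python. It treats each weight as a probability and assumes the links are independent: a hidden group fires with the product of its weighted inputs (a probabilistic AND), and a response combines all groups that point at it with 1 - prod(1 - p) (a probabilistic OR). This combination rule, the node names and all numbers are assumptions for illustration, not part of the original design.

```python
import math
from typing import Dict, Tuple

Edge = Tuple[str, str]  # (source node, destination node)

# Hypothetical weights in [0, 1]: the probability that an input belongs to a
# hidden group, and that a group leads to a given response.
INPUT_TO_GROUP: Dict[Edge, float] = {
    ("A", "AB"): 0.9,
    ("B", "AB"): 0.5,
    ("C", "C"): 1.0,
}
GROUP_TO_RESPONSE: Dict[Edge, float] = {
    ("AB", "X"): 0.8,
    ("C", "X"): 0.5,
    ("C", "Z"): 1.0,
}


def group_activation(group: str, inputs: Dict[str, float]) -> float:
    """Probabilistic AND: product of the group's weighted inputs."""
    return math.prod(
        w * inputs[src] for (src, dst), w in INPUT_TO_GROUP.items() if dst == group
    )


def response_scores(inputs: Dict[str, float]) -> Dict[str, float]:
    """Probabilistic OR over every group that points at the same response."""
    groups = {dst for _, dst in INPUT_TO_GROUP}
    acts = {g: group_activation(g, inputs) for g in groups}
    miss: Dict[str, float] = {}  # probability that no group triggers the response
    for (g, resp), w in GROUP_TO_RESPONSE.items():
        miss[resp] = miss.get(resp, 1.0) * (1.0 - w * acts[g])
    return {resp: 1.0 - m for resp, m in miss.items()}


if __name__ == "__main__":
    print(response_scores({"A": 1.0, "B": 1.0, "C": 0.0}))
```

With every weight set to 1.0 and 0/1 inputs, the product becomes AND and 1 - prod(1 - p) becomes OR, so the sketch falls back to the strict rule system; training then amounts to adjusting the weights.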

It may not be entirely clear to the reader where such neural networks might come in handy, so here is a fairly simple example:

Suppose we have no large data sample, only a “generalized opinion”, and we want to build a neural network that produces a personalized menu for a particular person.

Suppose we know nothing at all about this user's tastes and preferences, but we still need to start somewhere. So we draw up a generic template of a typical menu:

- breakfast: omelet

- lunch: soup

- dinner: porridge

During the first days, the person receives exactly this menu. As the neural network “gets to know” the user's preferences, the weight of the edge connecting breakfast and omelet decreases, while the weight of the edge connecting breakfast and porridge increases. The network now “knows” which dish the user prefers for a given meal (in this case, our user likes porridge for breakfast more than an omelet). Over time the person's preferences may change, and the network will adapt to them again.
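This adaptation can be sketched with a simple update rule: each time the user chooses a dish, its weight moves toward 1 and the weights of the alternatives for that meal move toward 0. The delta-style rule, the `LEARNING_RATE` value and the candidate dishes with their starting weights are assumptions; the article does not specify how the weights are trained.

```python
from typing import Dict

Weights = Dict[str, Dict[str, float]]

LEARNING_RATE = 0.3  # hypothetical step size, not taken from the article


def initial_weights() -> Weights:
    """The 'generalized opinion': meal -> candidate dishes with starting weights.
    The porridge-for-breakfast option and all numbers are illustrative."""
    return {
        "breakfast": {"omelet": 0.6, "porridge": 0.4},
        "lunch": {"soup": 1.0},
        "dinner": {"porridge": 1.0},
    }


def recommend(weights: Weights) -> Dict[str, str]:
    """Pick the dish with the strongest edge for each meal."""
    return {meal: max(dishes, key=dishes.get) for meal, dishes in weights.items()}


def feedback(weights: Weights, meal: str, chosen: str) -> None:
    """Move the chosen dish's weight toward 1 and the others toward 0."""
    for dish, w in weights[meal].items():
        target = 1.0 if dish == chosen else 0.0
        weights[meal][dish] = w + LEARNING_RATE * (target - w)


if __name__ == "__main__":
    w = initial_weights()
    print(recommend(w)["breakfast"])  # omelet
    feedback(w, "breakfast", "porridge")
    print(recommend(w)["breakfast"])  # porridge
```

Because every weight is attached to a readable rule such as “breakfast: porridge”, one can inspect the numbers directly to see why the network suggests a given dish.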

To sum up: at a minimum, RBNNs can be very useful when large data samples are scarce or absent altogether, and when we need a system that fully adapts to a particular person. Moreover, such networks are quite simple, which makes them suitable for teaching others and for explaining how neural networks work.

It has long been customary to call a neural network a “black box” whose inner workings cannot be explained in accessible terms. With the structure presented above, however, it is possible to build a neural network that is not only effective but also easy for others to understand.

Source: https://habr.com/ru/post/423393/
