AI Practical Course: Emotion-Based Musical Transformation

This is another article in a series of educational articles for developers in the field of artificial intelligence. In previous articles we reviewed the collection and preparation of image data; in this article we move on to the collection and study of musical data.

The purpose of this project is:

- Creating an application that accepts a set of images as input.

- Extracting the emotional tone of the images.

- Producing as output a piece of music that reflects the corresponding emotion.

This project uses an algorithm (Emotion-Based Musical Transformation) to generate emotion-modulated music: it changes a base melody according to a specific emotion and then harmonizes and completes the melody using a deep learning model. To complete this task, the following musical data sets are required:

- A data set for training the melody completion algorithm (Bach chorales).

- A set of popular tunes that serve as templates for emotion modulation.

Collection and study of a musical data set

Bach Chorales - Music21 Project

Music21 is a Python-based toolkit for computer-aided musicology. Its corpus includes the complete collection of Bach chorales. The data collection procedure is therefore very simple: you just need to install the music21 package (installation guides for macOS, Windows and Linux are available).

After installation, the Bach chorales can be accessed using the following code:

from music21 import corpus

for score in corpus.chorales.Iterator(numberingSystem='bwv', returnType='stream'):
    pass  # do stuff with scores here

Iterating over all Bach chorales

Alternatively, you can use the following code. It returns a list of file names for all Bach chorales, which can then be processed using the parse function:

from music21 import corpus

chorales = corpus.getBachChorales()
score = corpus.parse(chorales[0])
# do stuff with score

Getting a list of all the Bach chorales

Examine the data

After the data collection has been completed (in this case, after accessing it), the next step is to check and examine the characteristics of this data.

The following code displays the textual representation of the music file:

>>> from music21 import corpus

>>> chorales = corpus.getBachChorales()

>>> score = corpus.parse(chorales[0])

>>> score.show('text')

{0.0} <music21.text.TextBox "BWV 1.6 W...">

{0.0} <music21.text.TextBox "Harmonized...">

{0.0} <music21.text.TextBox "PDF 2004 ...">

{0.0} <music21.metadata.Metadata object at 0x117b78f60>

{0.0} <music21.stream.Part Horn 2>

{0.0} <music21.instrument.Instrument P1: Horn 2: Instrument 7>

{0.0} <music21.stream.Measure 0 offset=0.0>

{0.0} <music21.layout.PageLayout>

{0.0} <music21.clef.TrebleClef>

{0.0} <music21.key.Key of F major>

{0.0} <music21.meter.TimeSignature 4/4>

{0.0} <music21.note.Note F>

{1.0} <music21.stream.Measure 1 offset=1.0>

{0.0} <music21.note.Note G>

{0.5} <music21.note.Note C>

{1.0} <music21.note.Note F>

{1.5} <music21.note.Note F>

{2.0} <music21.note.Note A>

{2.5} <music21.note.Note F>

{3.0} <music21.note.Note A>

{3.5} <music21.note.Note C>

{5.0} <music21.stream.Measure 2 offset=5.0>

{0.0} <music21.note.Note F>

{0.25} <music21.note.Note B->

{0.5} <music21.note.Note A>

{0.75} <music21.note.Note G>

{1.0} <music21.note.Note F>

{1.5} <music21.note.Note G>

{2.0} <music21.note.Note A>

{3.0} <music21.note.Note A>

{9.0} <music21.stream.Measure 3 offset=9.0>

{0.0} <music21.note.Note F>

{0.5} <music21.note.Note G>

.

.

.

>>> print(score)

<music21.stream.Score 0x10bf4d828>

Text representation of the chorale

Above is the text representation of the chorale as a music21.stream.Score object. It is interesting to see how the music21 project represents music in code, but this view is not very useful for studying the important features of the data. We therefore need software that can display the score.

As previously stated in the article "Configuring a Model and Hyper Parameters for Recognizing Emotions in Images", the scores in the music21 corpus are stored in MusicXML files (with the .xml or .mxl extensions). To view these files in music notation, you can use the free application Finale NotePad (a trial version of the professional Finale notation package). Finale NotePad is available for Mac and Windows. After downloading Finale NotePad, run the following code to configure music21 to work with it:

>>> import music21

>>> music21.configure.run()

Now we can run the code above, replacing score.show('text') with score.show(). This opens the MusicXML file in Finale, where it looks something like this:

The first page of a Bach chorale in musical notation

This format gives a much clearer visual presentation of the chorales. After looking through several of them, we can confirm that the data match our expectations: these are short musical pieces containing at least four parts (soprano, alto, tenor and bass), divided into separate phrases by fermatas.

Usually, certain descriptive statistics are calculated as part of data exploration. In this case, we can determine how many times each key occurs in the corpus. The following example code calculates and visualizes the frequency of each key in the data set.

from collections import Counter

import matplotlib.pyplot as plt
from music21 import corpus

chorales = corpus.getBachChorales()
key_counts = Counter()  # avoids shadowing the built-in name dict

for chorale in chorales:
    score = corpus.parse(chorale)
    # Upper case tonic name for major keys, lower case for minor keys
    key = score.analyze('key').tonicPitchNameWithCase
    key_counts[key] += 1

ind = list(range(len(key_counts)))

fig, ax = plt.subplots()
ax.bar(ind, list(key_counts.values()))
ax.set_title('Frequency of Each Key')
ax.set_ylabel('Frequency')
plt.xticks(ind, list(key_counts.keys()), rotation='vertical')
plt.show()

Frequency of each key in the corpus. Minor keys are shown in lower case letters and major keys in upper case letters. Flats are denoted by the sign "-"

Below are some statistics related to the collected works.

The distribution of the used keys in the set of works

The position of the notes, calculated as the offset from the beginning of the measure in quarter notes

Which descriptive statistics are worth calculating will vary from project to project. In most cases, however, they help you understand what type of data you are working with and can even guide certain preprocessing decisions. These statistics can also serve as a baseline for examining the results of data preprocessing.

Musical Transformation - Theoretical Information

There are two main carriers of expression in music: pitch and rhythm. We use these carriers of expression as parameters to rewrite our melody in the chosen mood.

In music theory, the pitch content of a melody is understood as the relationship between the pitches of its notes. A system of musical notes based on a sequence of pitches is called a scale. The intervals, that is, the width of each step in the sequence, may differ from one another. This difference, or the lack of it, creates relationships between tones and melodic tendencies, in which stability and attraction create an expression of mood. In the Western musical tradition, for a simple diatonic scale, the position of a note relative to the first note of the scale is called the scale degree (I-II-III-IV-V-VI-VII). Depending on its stability and attraction, each scale degree gives a tone a particular function within the system. This makes the concept of the scale degree very useful for analyzing a simple melodic pattern and encoding it so that different values can be assigned to it.
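The idea of encoding a melody as scale degrees can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the project's code: the names MAJOR_OFFSETS and degrees_to_midi are hypothetical, and pitches are represented as MIDI note numbers (60 is middle C).

```python
# Semitone distance of each degree above the tonic in a major scale.
MAJOR_OFFSETS = {"I": 0, "II": 2, "III": 4, "IV": 5, "V": 7, "VI": 9, "VII": 11}


def degrees_to_midi(degrees, tonic_midi=60, offsets=MAJOR_OFFSETS):
    """Turn a list of scale-degree numerals into MIDI note numbers.

    Hypothetical helper: the same degree pattern yields different
    pitches depending on the tonic and on the offsets table used.
    """
    return [tonic_midi + offsets[degree] for degree in degrees]


if __name__ == "__main__":
    pattern = ["I", "III", "V"]
    print(degrees_to_midi(pattern))                 # pattern in C major
    print(degrees_to_midi(pattern, tonic_midi=62))  # same pattern in D major
```

Because the pattern is stored as degrees rather than absolute pitches, changing the scale only requires a different offsets table.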

At the basic level, we need to choose scales that are artistically suitable for creating a particular mood. Thus, to change the mood of a melody, we analyze its functional structure using the scale-degree concept described above and then assign new values to the existing pattern of scale degrees. Like a map, this pattern also contains information about the directions and periods in the melody.

For each particular mood, we use some additional parameters to make our new melodies more expressive and harmonious.

Rhythm is a way of organizing sounds in time. It includes the order in which tones appear in a melody, their relative length, the rests between them, and various accents. Together these create the time periods that structure the musical meter. If we need to preserve the original melodic figure, we should preserve its rhythmic structure. From an artistic point of view, it is enough to change only some parameters of the rhythm: the lengths of notes and rests. In addition, to make our modified melody more expressive, we can use some additional ways to emphasize its mood.

For example, ANXIETY can be expressed using a minor key and a more energetic rhythm. The pattern of our source melody looks like this: V-V-VI-VI-VII, V-V-VI-V-II-I, V-V-V (an octave higher)-III-I-VII-VI, IV-IV-III-I-II-I.

Source pattern

The original melody is written in a major key, which differs from the minor by three notes: in a major key, degrees III, VI and VII are major (a semitone higher), and in a minor key they are minor (a semitone lower). Therefore, to change the key, we simply replace the raised degrees with the lowered ones (or vice versa). However, to make the effect of anxiety even more pronounced, we keep the seventh degree major (raised). This increases the instability of this tone and gives the scale a distinctive color.
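The major-to-minor substitution described above can be sketched as a change of the degree-to-semitone table. This is a minimal illustration in plain Python, not the project's implementation: the table names and the retune helper are hypothetical, and pitches are MIDI note numbers. A minor scale that keeps a raised seventh degree is commonly called the harmonic minor.

```python
MAJOR = {"I": 0, "II": 2, "III": 4, "IV": 5, "V": 7, "VI": 9, "VII": 11}

# Natural minor: degrees III, VI and VII are one semitone lower than in major.
NATURAL_MINOR = dict(MAJOR, III=3, VI=8, VII=10)

# Variant for ANXIETY: lowered III and VI, but VII stays raised.
HARMONIC_MINOR = dict(NATURAL_MINOR, VII=11)


def retune(degrees, scale, tonic_midi=60):
    """Render a degree pattern in the given scale (hypothetical helper)."""
    return [tonic_midi + scale[degree] for degree in degrees]


if __name__ == "__main__":
    phrase = ["V", "VI", "VII"]
    print(retune(phrase, MAJOR))
    print(retune(phrase, NATURAL_MINOR))
    print(retune(phrase, HARMONIC_MINOR))
```

Only the table changes between moods; the degree pattern itself, and therefore the contour of the melody, stays the same.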

To make the rhythm more energetic, we can add syncopation, an interruption of the regular flow of the rhythm, by changing the position of some notes. In this case, we move some of the similar notes one beat forward.

ANXIETY transformation

SADNESS can also be expressed with a simple minor key, but its rhythm should be calm. So we replace the major degrees with minor ones, including degree VII. To make the rhythm calmer, we fill the rests by lengthening the notes that precede them.

SADNESS transformation
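The rhythmic part of this transformation, filling rests by lengthening the preceding notes, can be sketched as follows. This is a simplified illustration rather than the project's code: the melody is assumed to be a list of (pitch, duration) pairs with durations in quarter notes, a rest is assumed to be encoded as a pitch of None, and fill_rests is a hypothetical name.

```python
def fill_rests(events):
    """Absorb every rest into the event that precedes it.

    events: list of (pitch, duration) pairs; pitch is None for a rest.
    A rest at the very start has nothing before it and is kept as is.
    """
    result = []
    for pitch, duration in events:
        if pitch is None and result:
            prev_pitch, prev_duration = result[-1]
            # Extend the previous event so the total length is unchanged.
            result[-1] = (prev_pitch, prev_duration + duration)
        else:
            result.append((pitch, duration))
    return result


if __name__ == "__main__":
    melody = [(67, 1.0), (None, 1.0), (69, 0.5), (None, 0.5), (None, 1.0)]
    print(fill_rests(melody))
```

The total duration of the melody is preserved, so the result still fits the original meter.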

To express AWE, we should avoid decisive and strict intonations; this is the guiding principle of the transformation. As noted above, depending on the sequence of pitches used, the scale degrees have different functions and different distances from the first (I) degree. This gives them their meaning in the overall picture. The movement from degree IV to degree I sounds very direct because of its function. The movement from degree V to degree I also sounds very direct. We avoid these two intonations to create an impression of space and uncertainty.

Therefore, wherever in the pattern degree I follows degree V, or degree I follows degree IV, and also where these degrees appear in the reverse order, we replace one of the two notes (or both) with the degrees nearest to them. The rhythm can be changed in the same way as for SADNESS, simply by reducing the tempo.

AWE transformation
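One possible reading of this rule is sketched below. It is an assumption-laden illustration, not the authors' algorithm: degrees are written as integers 1 to 7, only the non-tonic note of each pair is replaced, and the choice of neighbor (V becomes VI, IV becomes III) is a hypothetical one, since the text only says "the degrees nearest to them".

```python
# Adjacent degree pairs that sound too direct, in either order.
DIRECT_PAIRS = {frozenset({1, 5}), frozenset({1, 4})}

# Hypothetical neighbor choice for the non-tonic member of a pair.
NEIGHBOR = {5: 6, 4: 3}


def soften(degrees):
    """Replace V or IV wherever it stands directly next to I."""
    result = list(degrees)
    for i in range(len(result) - 1):
        if frozenset({result[i], result[i + 1]}) in DIRECT_PAIRS:
            # Pick whichever note of the pair is not the tonic.
            j = i if result[i] != 1 else i + 1
            result[j] = NEIGHBOR[result[j]]
    return result


if __name__ == "__main__":
    print(soften([1, 5, 1, 4]))
    print(soften([5, 2, 1]))  # V and I are not adjacent, so nothing changes
```

Because the replacement degrees (III and VI) never form a direct pair with I, a single left-to-right pass is enough.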

DETERMINATION is associated with powerful movement, so the simplest way to convey it is to change the rhythm in the same way as for ANXIETY. It is also necessary to shorten all notes except the last note of each period: V-V-VI-VI-VII, V-V-VI-V-II-I.

DETERMINATION transformation
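The duration rule can be sketched as follows, assuming the phrase boundaries are already known. The tighten name, the (pitch, duration) representation and the shortening factor of 0.5 are all assumptions made for illustration; the text does not say by how much the notes are shortened.

```python
def tighten(phrases, factor=0.5):
    """Shorten every note except the last one of each phrase.

    phrases: list of phrases, each a list of (pitch, duration) pairs.
    factor: hypothetical shortening ratio applied to non-final notes.
    """
    result = []
    for phrase in phrases:
        shortened = [(pitch, duration * factor) for pitch, duration in phrase[:-1]]
        # The final note of the phrase keeps its original length.
        result.append(shortened + list(phrase[-1:]))
    return result


if __name__ == "__main__":
    phrases = [[(67, 1.0), (67, 1.0), (69, 2.0)], [(65, 1.0), (64, 2.0)]]
    print(tighten(phrases))
```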

A major key already sounds positive and joyful, but to emphasize and express HAPPINESS / JOY we use the major pentatonic scale. It consists of the same degrees as the major scale except for two, the fourth and the seventh: I-II-III-V-VI.

Therefore, whenever either of these two degrees occurs in our pattern, we replace it with the nearest remaining degree. To emphasize the simplicity of this scale, we use a descending melodic fragment of five or more notes that walks through the scale step by step.

JOY transformation
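The replacement of degrees IV and VII can be sketched by snapping each pitch to the closest pentatonic tone. This is an illustration under assumptions, not the project's code: "nearest" is measured in semitones, pitches are MIDI note numbers, and snap_to_pentatonic is a hypothetical name.

```python
# Major pentatonic (I, II, III, V, VI) as semitones above the tonic.
PENTATONIC = (0, 2, 4, 7, 9)


def snap_to_pentatonic(midi_pitch, tonic_midi=60):
    """Move a pitch to the nearest pentatonic tone, measured in semitones."""
    relative = (midi_pitch - tonic_midi) % 12
    if relative in PENTATONIC:
        return midi_pitch
    # Include the tonic one octave up so that VII can resolve upward.
    candidates = PENTATONIC + (12,)
    nearest = min(candidates, key=lambda tone: abs(tone - relative))
    return midi_pitch + (nearest - relative)


if __name__ == "__main__":
    c_major_scale = [60, 62, 64, 65, 67, 69, 71]
    print([snap_to_pentatonic(pitch) for pitch in c_major_scale])
```

With this distance measure, IV falls to III and VII rises to the tonic, because each is a single semitone away from that neighbor.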

CALM / SERENITY cannot be expressed by changing the key alone; it also requires transforming the melodic movement. To do this, we analyze the original pattern and identify similar segments in it. The first note of each segment determines the harmonic context of the entire phrase, so these notes matter most to us: V-V-VI-VI-VII, V-V-VI-V-II-I, V-V-III-I-VII-VI, IV-IV-III-I-II-I.

For the first segment, only the following degrees should be used: IV-VI-VII; for the second: V-VII-II-I; for the third: VI-VII-III-II; for the fourth: VII-IV-I-VII.

These sets of allowed degrees are actually another type of musical structure: chords. However, we can still use them as a system for transforming melodies. Each scale degree is replaced by the nearest degree from the indicated chord pattern. If a whole segment begins with a tone that is lower than the original, then all degrees in it must be replaced with the lower degrees available in the specified pattern. To create a delay effect, it is also necessary to split the duration of each note into eighth notes and gradually reduce the speed of these new eighth notes.

CALM / SERENITY transformation
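The degree replacement for a single segment can be sketched as a nearest-neighbor lookup in the allowed set. This is a simplified illustration, not the authors' implementation: degrees are integers 1 to 7, distance is measured in scale steps without octave wrapping, ties are resolved toward the lower degree, and snap_segment is a hypothetical name. Splitting notes into slowing eighth notes is not covered.

```python
# Allowed degrees per segment, taken from the sets listed in the text.
SEGMENT_DEGREES = [
    (4, 6, 7),
    (5, 7, 2, 1),
    (6, 7, 3, 2),
    (7, 4, 1),
]


def snap_segment(segment, allowed):
    """Replace each degree with the nearest allowed one (lower wins ties)."""
    return [
        min(allowed, key=lambda candidate: (abs(candidate - degree), candidate))
        for degree in segment
    ]


if __name__ == "__main__":
    first_segment = [5, 5, 6, 6, 7]  # V-V-VI-VI-VII
    print(snap_segment(first_segment, SEGMENT_DEGREES[0]))
```

Preferring the lower degree on ties loosely follows the remark about segments that start below the original; a fuller version would make that direction depend on the first note of each segment.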

To emphasize GRATITUDE, we express it stylistically in the rhythm by creating an arpeggio effect: we return to the first note at the end of each segment (phrase). To do this, we halve the duration of the last note in each segment and place the first note of that segment in the freed space.

GRATITUDE transformation
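For a single phrase whose boundaries are already known, this rule can be sketched as follows. The add_return name and the (pitch, duration) representation are assumptions made for illustration only.

```python
def add_return(phrase):
    """Halve the last note and fill the freed time with the first pitch.

    phrase: list of (pitch, duration) pairs, durations in quarter notes.
    """
    if not phrase:
        return []
    first_pitch, _ = phrase[0]
    last_pitch, last_duration = phrase[-1]
    half = last_duration / 2
    # The phrase keeps its total length: last note plus the returning note.
    return list(phrase[:-1]) + [(last_pitch, half), (first_pitch, half)]


if __name__ == "__main__":
    phrase = [(67, 1.0), (69, 1.0), (71, 2.0)]
    print(add_return(phrase))
```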

Practical part

Python and music21 toolkit

The transformation script was implemented using the Python language and the music21 toolkit.

Music21 is a versatile, high-level toolkit for manipulating musical concepts such as notes, meter, chords and keys. It lets you work directly in the subject domain instead of performing low-level manipulations on "raw" data from a Musical Instrument Digital Interface (MIDI) file. However, working directly with MIDI files in music21 is not always convenient, especially when it comes to visualizing the score. For visualization and for implementing the algorithm, it is therefore more convenient to convert the original MIDI files to the MusicXML format. In addition, MusicXML is the input format for BachBot, which is the next step in our processing sequence.

The conversion can be performed using MuseScore:

To get the MusicXML file:

musescore input.mid -o output.xml

To get the output MIDI file:

musescore input.xml -o output.mid

Jupyter

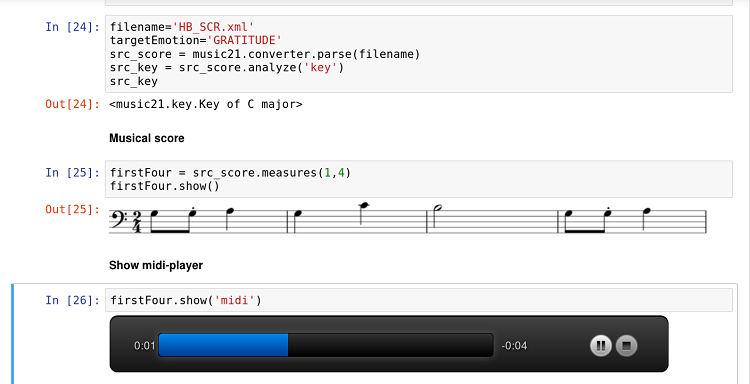

The music21 toolkit integrates well with Jupyter. In addition, integration with MuseScore lets you display the score directly in a Jupyter notebook and listen to the results through the built-in player during development and experimentation.

Jupyter notebook with code, score and player

The ability to display the score is especially useful when a programmer and a music theorist work together. The combination of Jupyter's interactive nature, the domain-specific representation of music21 and the simplicity of the Python language makes this workflow particularly promising for this type of interdisciplinary research.

Implementation

The transformation script was implemented as a Python module, so it can be called directly:

python3 emotransform.py --emotion JOY input.mid

Or you can call it from an external script (or from Jupyter):

from emotransform import transform

transform('input.mid', 'JOY')

In both cases, the result is a file modulated with the chosen emotion.

The transformations that change scale degrees (ANXIETY, SADNESS, AWE and JOY) are based on the transpose method of the music21 Note class, combined with an analysis of the current and the required scale degree. Here we use the music21.scale module and its functions to build the required scale from any tonic. To get the tonic of a particular melody, you can use the analyze('key') method from the music21.stream module.

The phrase-based transformations (DETERMINATION, GRATITUDE, CALM / SERENITY) require additional research. This research should make it possible to accurately detect the beginning and end of musical phrases.

Conclusion

In this article, we presented the main idea behind emotion-based musical transformation: changing the position of an individual note in the scale relative to the tonic (its scale degree), the tempo of the piece, and the musical phrasing. This idea was implemented as a Python script. However, putting theoretical ideas into practice is not always simple, so we ran into some difficulties and identified possible directions for future research. That research mainly concerns the detection of musical phrases and their transformation. The right choice of tools (music21) and research in the field of music information retrieval are key factors in solving such problems.

Emotion-based musical transformation is the first stage in our sequence of processing musical data; the next stage is to deliver the converted and prepared melody to the BachBot input.

Source: https://habr.com/ru/post/423303/
