Managing Microservices with Kubernetes and Istio

A short story about the advantages and disadvantages of microservices, the concept of a Service Mesh, and Google tools that let you run microservice applications without cluttering your head with endless policy, access, and certificate settings, and that help you quickly find errors hiding not in the code but in the logic of how microservices interact.

This article is based on Craig Box's talk at our recent DevOops 2017 conference. A translation of the talk follows.

Craig Box is a developer relations (DevRel) engineer at Google, responsible for microservices and the Kubernetes and Istio tools. This talk is about managing microservices on these platforms.


Let's start with a relatively new concept called a Service Mesh. The term describes the network of interacting microservices that make up an application.

At a high level, we view the network as a pipe that simply moves bits. In our applications we do not want to worry about those bits or, for example, about MAC addresses; we want to think about services and the connections they need. In terms of the OSI model, we have a layer 3 network (routing and logical addressing), but we want to think in terms of layer 7 (the application layer).

What should a real layer 7 network look like? Perhaps we want to route traffic around problem services, and to connect to services while working at a higher level of abstraction than layer 3. We want insight into what is happening in the cluster: to find unintended dependencies and the root causes of failures. We also need to avoid unnecessary overhead, such as high-latency connections or connections to servers whose caches are cold or not yet fully warmed up.

We need to make sure that traffic between services is protected from trivial attacks. We want mutual TLS authentication, but without embedding the corresponding modules into every application we write. It is also important to control what surrounds our applications not only at the connection level but at higher levels as well.

A Service Mesh is a layer that lets us solve these problems in a microservice environment.

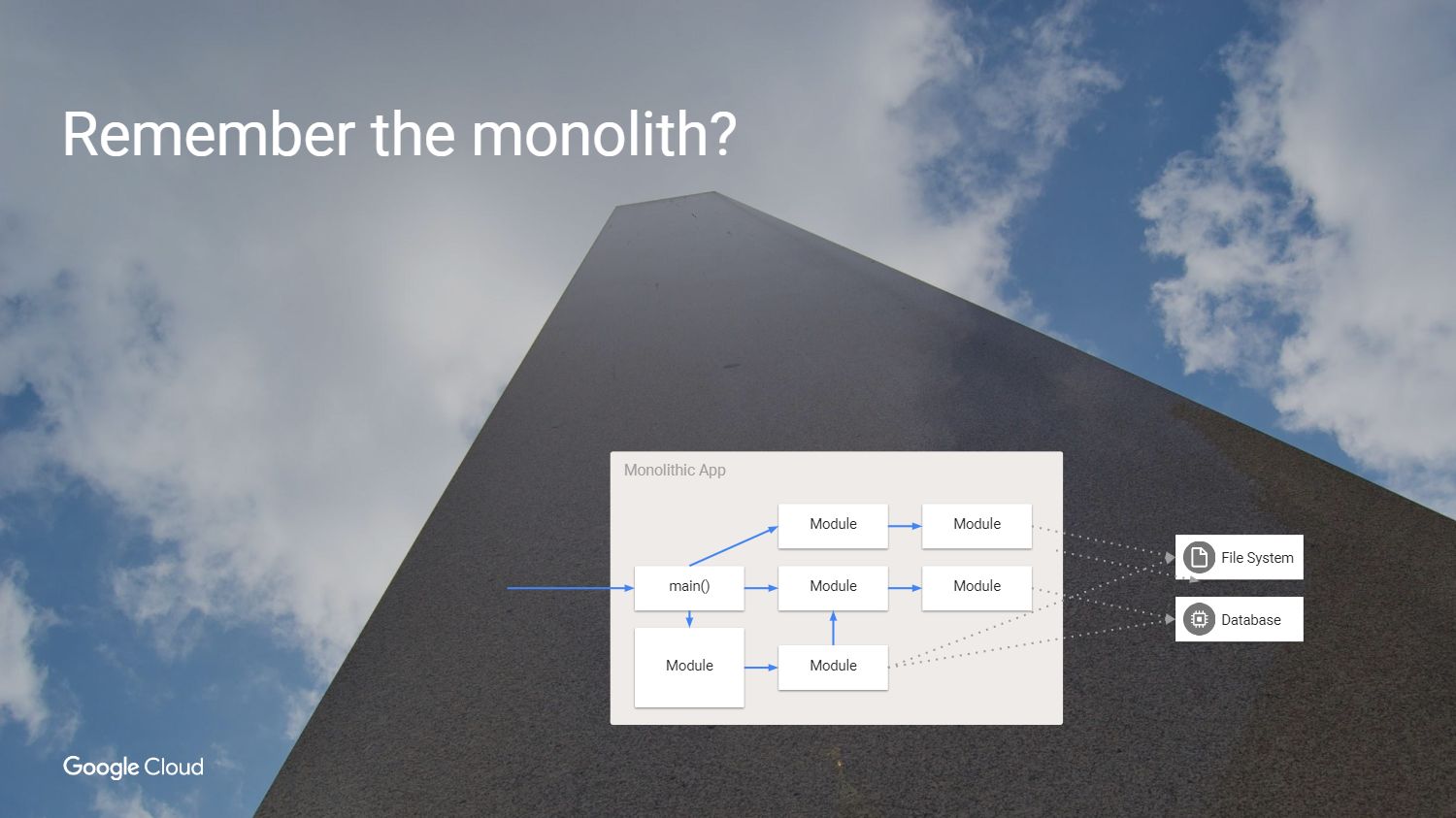

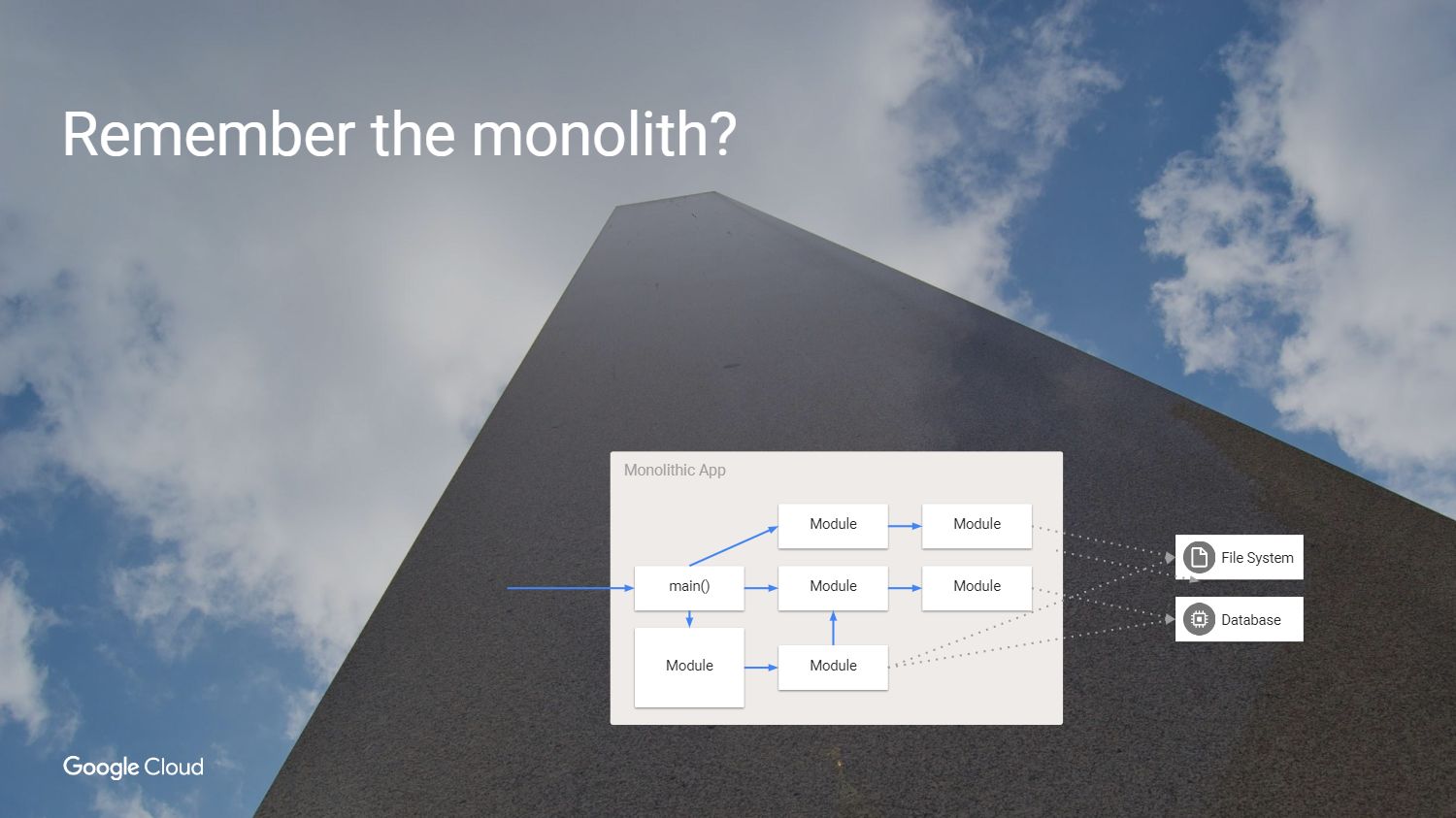

Monolith and microservices: pros and cons

But first, let's ask ourselves why we need to solve these problems at all. How did we develop software before? We had an application that looked something like this: a monolith.

It's great: all the code is right there in front of us. Why not keep using this approach?

Because the monolith has its own problems. The main difficulty is that whenever we rebuild the application, we must redeploy every module, even those that have not changed. We have to write the application in one language, or in compatible languages, even if different teams work on it. In practice, the individual parts cannot be tested independently of each other. It's time for a change: it's time for microservices.

So we split the monolith into pieces. Notice that in this example we have removed some unnecessary dependencies and stopped calling internal methods from other modules. We turned the models we used before into services, creating abstractions where we need to keep state. Each service should own its state, so that when you call it you don't have to worry about what is happening in the rest of the environment.

What was the result?

We left the world of giant applications and got something that really looks great. We sped up development, stopped using internal methods, created services, and can now scale them independently, making one service bigger without having to scale everything else. But what did this change cost us? What did we lose in the process?

We used to have reliable calls inside the application, because we simply called a function or a module. We have replaced that reliable in-process call with an unreliable remote procedure call, and the service on the other end is not always available.

We were safe when everything ran in a single process on a single machine. Now we connect to services that may be on different machines, across an unreliable network.

On a shared network there may also be other users trying to connect to our services. Latency has grown, while our ability to measure it has shrunk. What used to be a single module call is now a chain of calls across many services, and we can no longer just attach a debugger to the application to find out exactly what caused a crash. This problem has to be solved somehow, and clearly we need a new set of tools.

What can be done?

There are several options. We can build it into the application: if an RPC fails the first time, try again, and again, and again, waiting a while between attempts or adding jitter. We can also add entry and exit tracing to record the start and end of each call, which to me is equivalent to debugging. We can add infrastructure to authenticate connections and teach every application to use TLS encryption. But then every team has to carry the burden of maintaining all of this and constantly keep in mind the various problems that can arise in SSL libraries.
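For comparison, here is roughly what the do-it-yourself approach looks like inside each service: a minimal Python sketch of retries with exponential backoff and "full jitter". It assumes the RPC is a plain callable that raises `ConnectionError` on failure; `call_with_retries` and its parameters are hypothetical names, not part of any library.

```python
import random
import time


def call_with_retries(rpc, attempts=3, base_delay=0.1, max_delay=2.0,
                      sleep=time.sleep, rand=random.random):
    """Call `rpc` until it succeeds, making at most `attempts` calls.

    After each failure, wait a random time in [0, backoff) seconds, where
    backoff doubles with every attempt (exponential backoff, "full jitter").
    `sleep` and `rand` are injectable so the logic can be tested without waiting.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return rpc()
        except ConnectionError as err:  # only connection errors are retried
            last_error = err
            if attempt == attempts - 1:
                break  # out of attempts: do not sleep again
            backoff = min(max_delay, base_delay * 2 ** attempt)
            sleep(rand() * backoff)
    raise last_error


# Usage: a hypothetical flaky RPC that fails twice, then succeeds.
outcomes = iter([False, False, True])


def flaky_rpc():
    if not next(outcomes):
        raise ConnectionError("service unavailable")
    return "ok"


print(call_with_retries(flaky_rpc, sleep=lambda seconds: None))  # prints "ok"
```

Every team would have to maintain a copy of this logic in every language it uses; that duplication is what a Service Mesh moves out of the application and into the proxy.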

Keeping all this consistent across multiple platforms is a thankless task. I would like the space between applications to become smart, so that we can observe what happens there. We also need to be able to change configuration at runtime, without recompiling or restarting the application. A Service Mesh delivers exactly this wish list.

Istio

Let's talk about Istio.

Istio is a complete framework for connecting, managing, and monitoring a microservice architecture. Istio is designed to run on top of Kubernetes. It does not deploy software itself and is not concerned with making it available on machines; for that we use containers in Kubernetes.

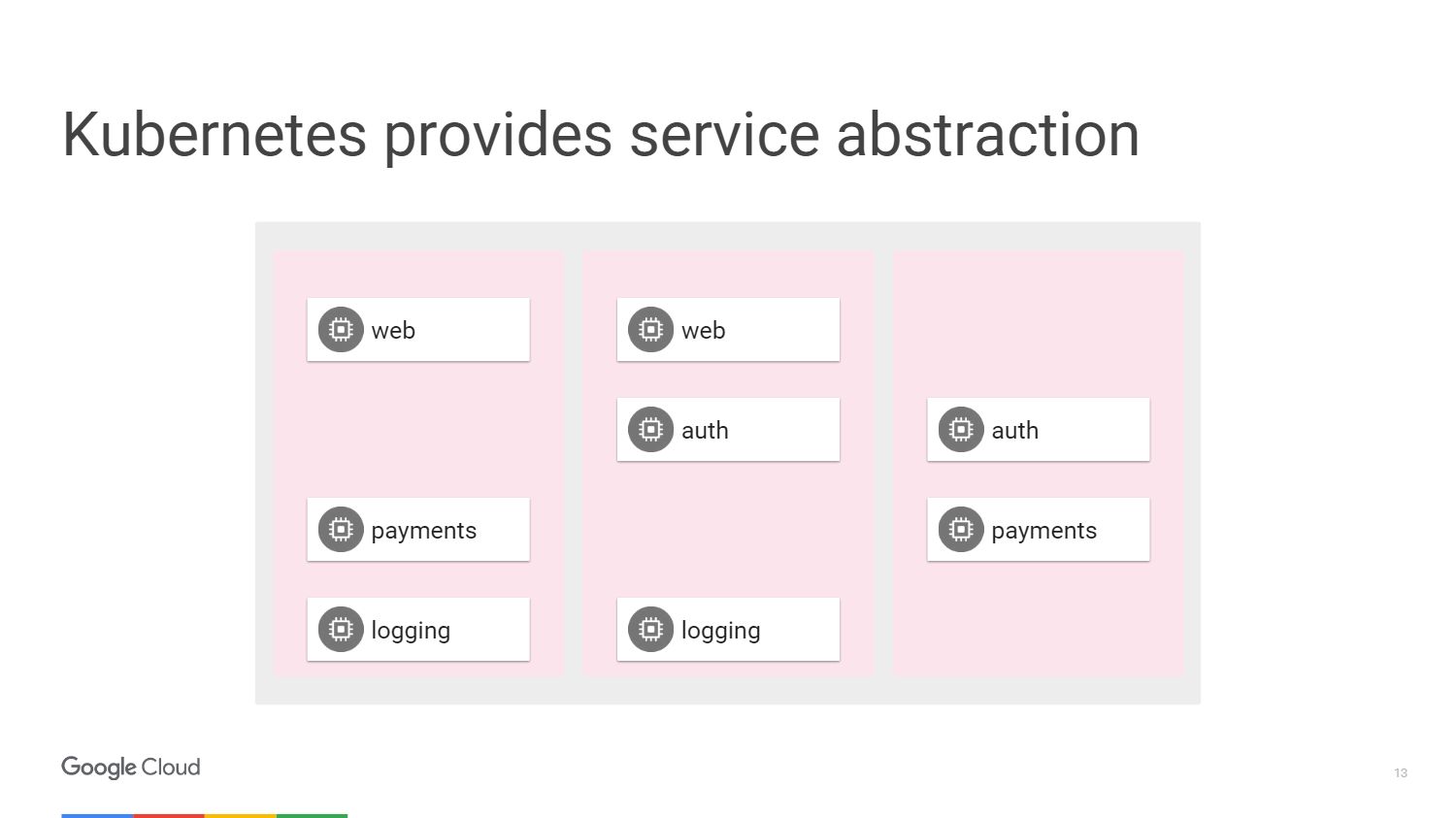

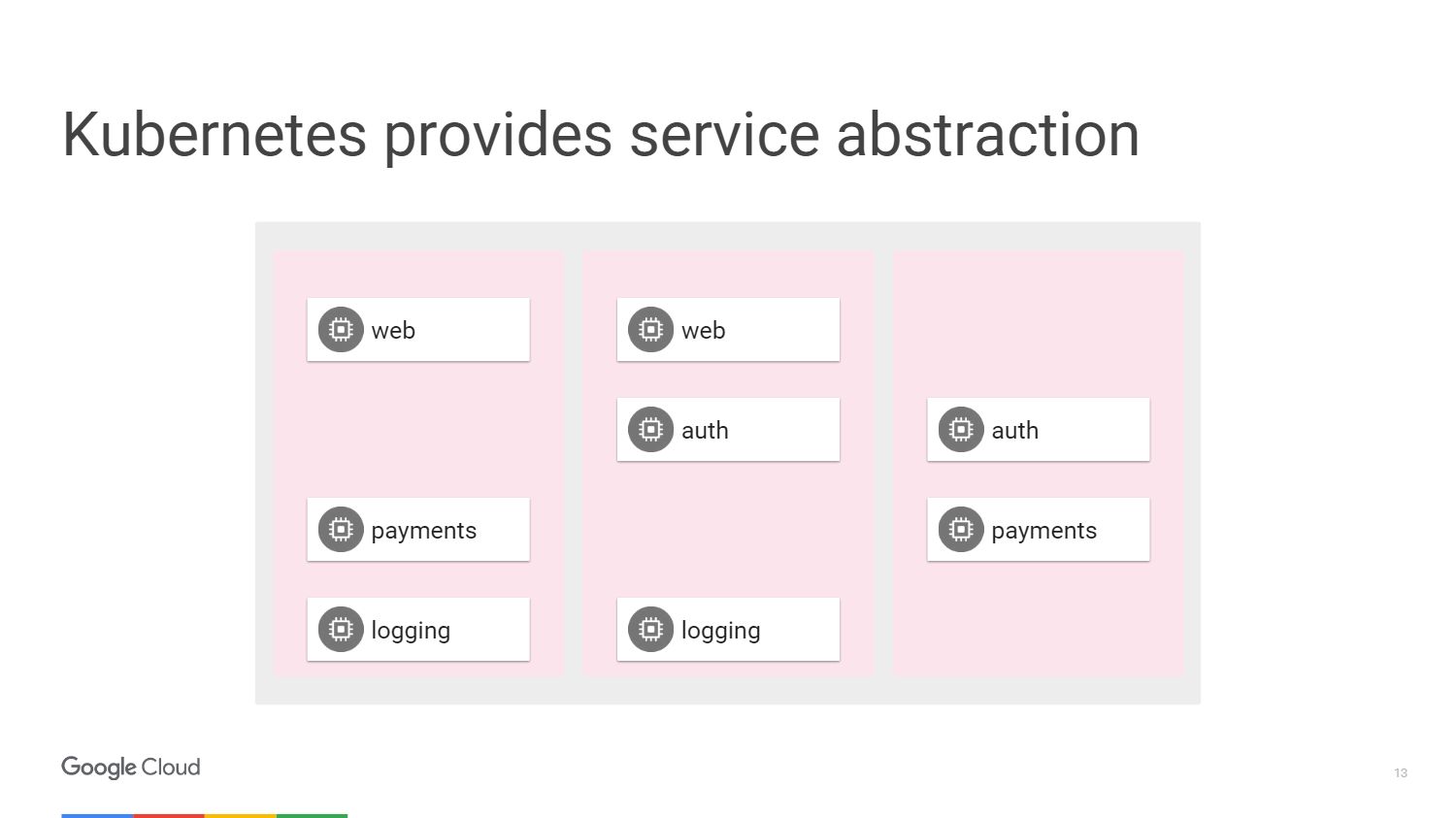

In the figure we see three different groups of machines and the blocks that make up our microservices. Kubernetes gives us mechanisms to group these blocks together. We can say that a particular group, which may be autoscaled or deployed in some other way, will run our web service. We do not need to think about machines; we work in terms of services at the application layer.

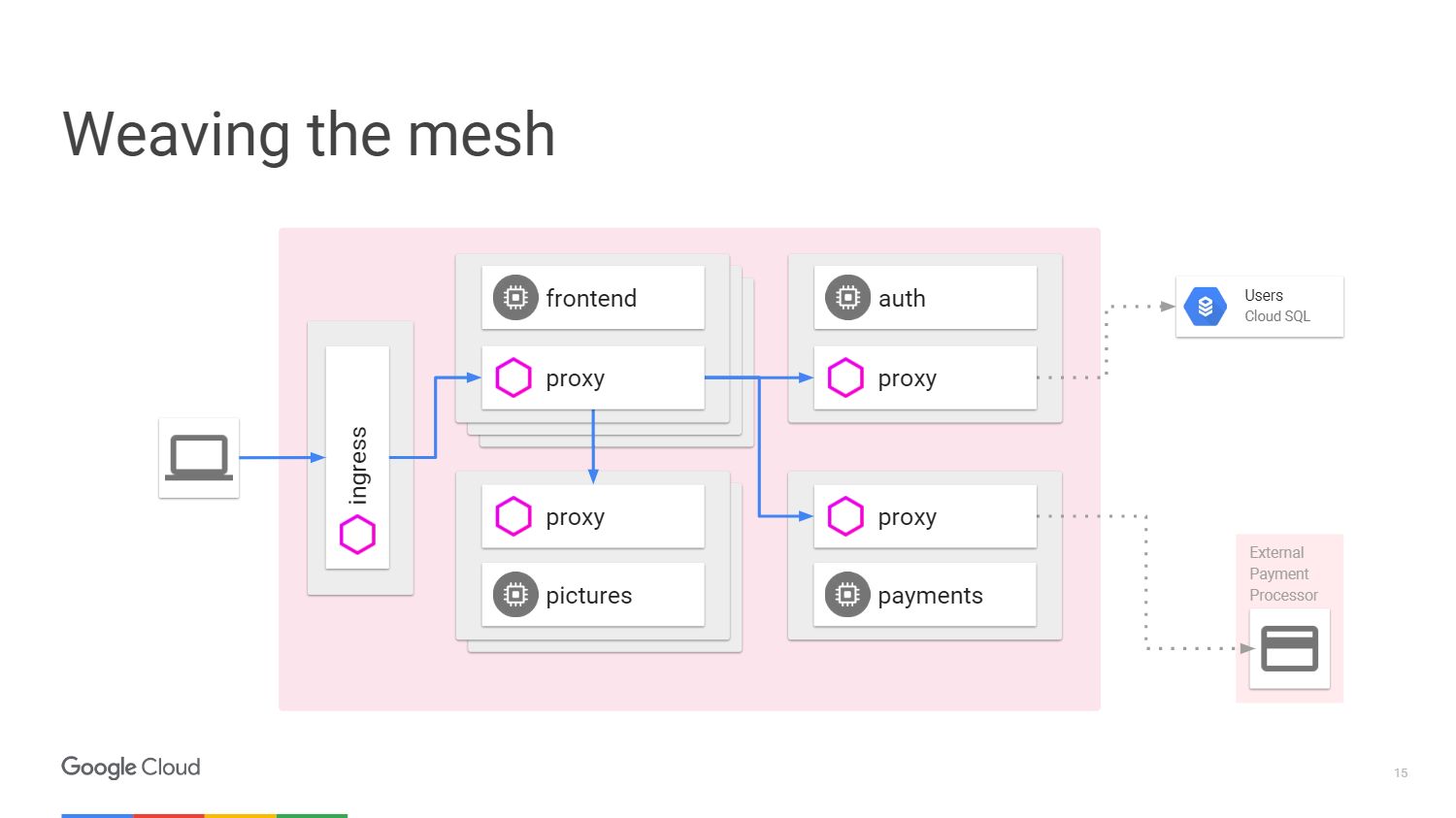

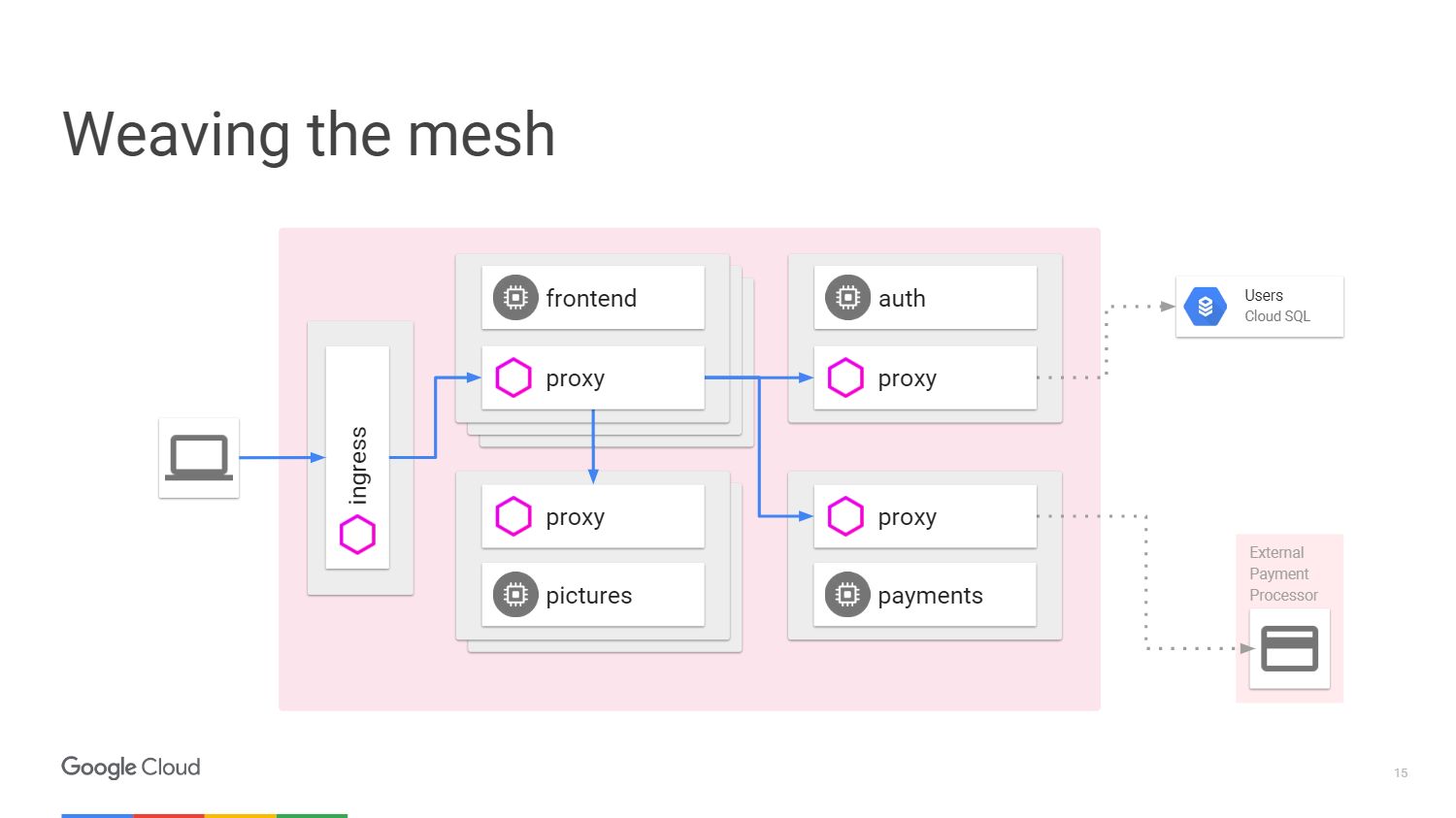

This situation can be represented as a diagram. Consider an example application that does some image processing. On the left is the user, whose traffic comes into our microservice.

To take payments from users, we have a separate payment microservice that calls an external API outside the cluster.

To handle user logins, we have an authentication microservice, which stores its state, again, outside our cluster, in a Cloud SQL database.

What does Istio do? Istio extends Kubernetes. You set it up using an alpha feature of Kubernetes called Initializers. When you deploy software, Kubernetes notices it and asks whether we want to modify the deployment by adding another container to each pod. This container handles paths and routing and is aware of all changes to the application.

This is what the diagram looks like with Istio.

Each service now has a sidecar proxy that handles its inbound and outbound traffic. We can offload to it the functions we talked about earlier: we do not need to teach the application to do telemetry, tracing, or TLS. We can also add other features inside the proxy: circuit breaking, rate limiting, and canary releases.

All traffic now passes through these proxies rather than going directly to the services. In Kubernetes, all containers in a pod share the same IP address, so the proxy can intercept traffic that would otherwise go straight to frontend or backend services.
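Circuit breaking, one of the features the proxy can take over, is illustrated below with a minimal Python sketch of a consecutive-failure circuit breaker. It shows only the general pattern under simplifying assumptions (single-threaded, injectable clock): Envoy's own circuit breaking is configured as limits on connections, pending requests, and retries, and its ejection of failing hosts is a separate feature called outlier detection. `CircuitBreaker` and `CircuitOpenError` are hypothetical names.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the circuit is open and calls are rejected immediately."""


class CircuitBreaker:
    """Minimal consecutive-failure circuit breaker (illustrative, not thread-safe).

    Closed: calls pass through and consecutive failures are counted.
    Open: calls fail fast until `reset_after` seconds have passed.
    Half-open: after that, a trial call is let through; success closes the
    circuit, failure opens it again.
    """

    def __init__(self, max_failures=3, reset_after=5.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise CircuitOpenError("circuit open: failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # Open on too many consecutive failures, or re-open after a failed trial.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None  # a success closes the circuit
        return result
```

Failing fast protects both sides: the caller stops waiting on a service that is known to be down, and the struggling service gets a pause in which to recover.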

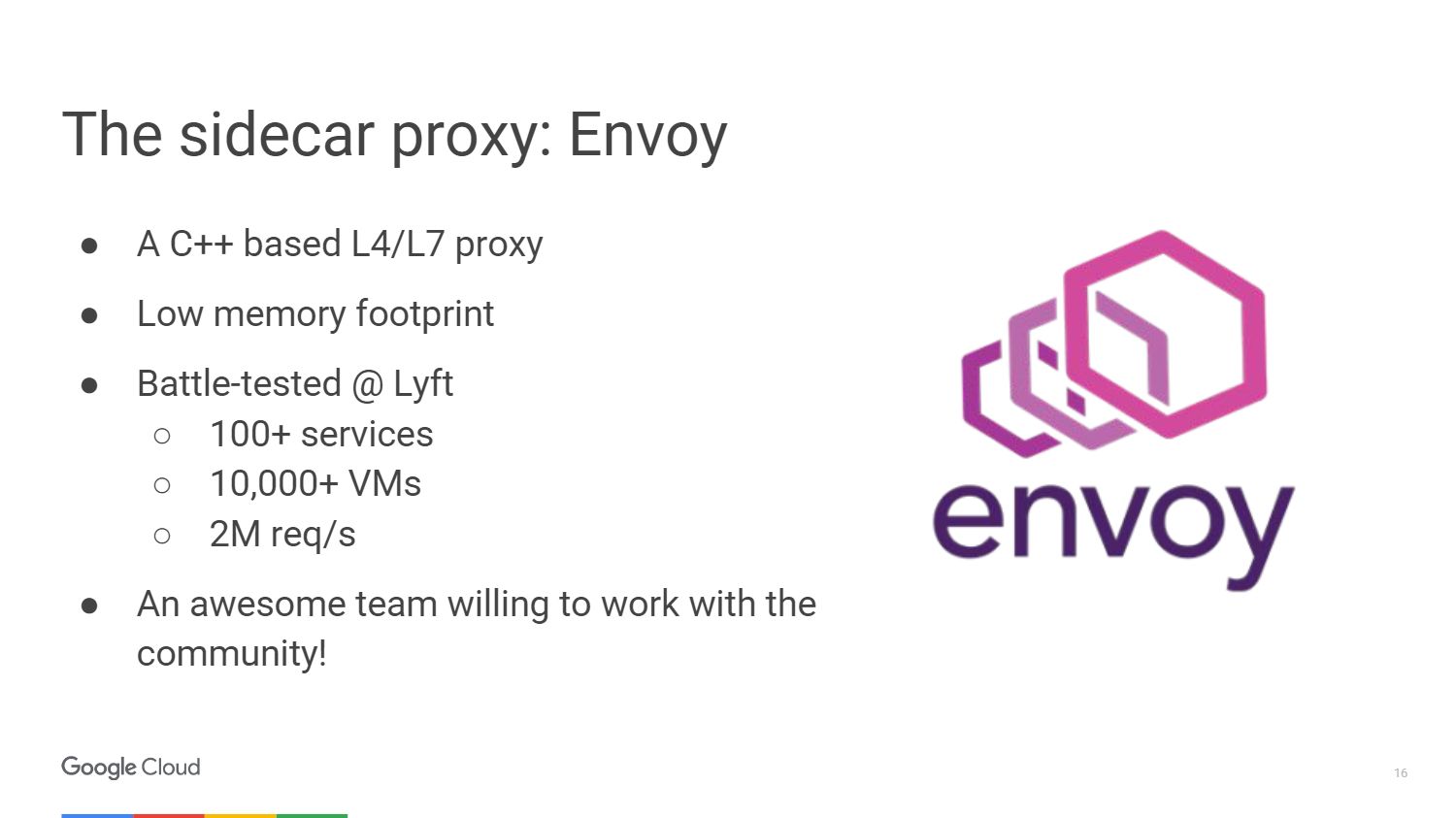

The sidecar proxy that Istio uses is called Envoy.

Envoy is actually older than Istio; it was developed at Lyft, where it has been running in production for over a year and powers their entire microservice infrastructure. We chose Envoy for Istio together with the community, and Google, IBM, and Lyft are the three companies still working on it.

Envoy is written in C++11. It was in production for more than 18 months before it became an open source project. It does not consume many resources when you attach it to your services.

Here are a few things Envoy can do. It is a proxy for HTTP, including HTTP/2 and protocols built on it such as gRPC. It can also proxy other protocols at the byte level. Envoy is aware of your infrastructure's zones, so parts of it can operate autonomously. It can handle a large number of network connections with retries and timeouts: you can set how many attempts to make against a server before giving up on the call and reporting that the service is not responding.

You do not need to restart the proxy to change its configuration. You connect to it through an API, much like the Kubernetes one, and change the configuration at runtime.

The Istio team contributed a lot upstream to Envoy. One example is fault injection, which lets us see how an application behaves when a certain share of requests fail. The team also implemented visualization and traffic-splitting features to support canary deployments.

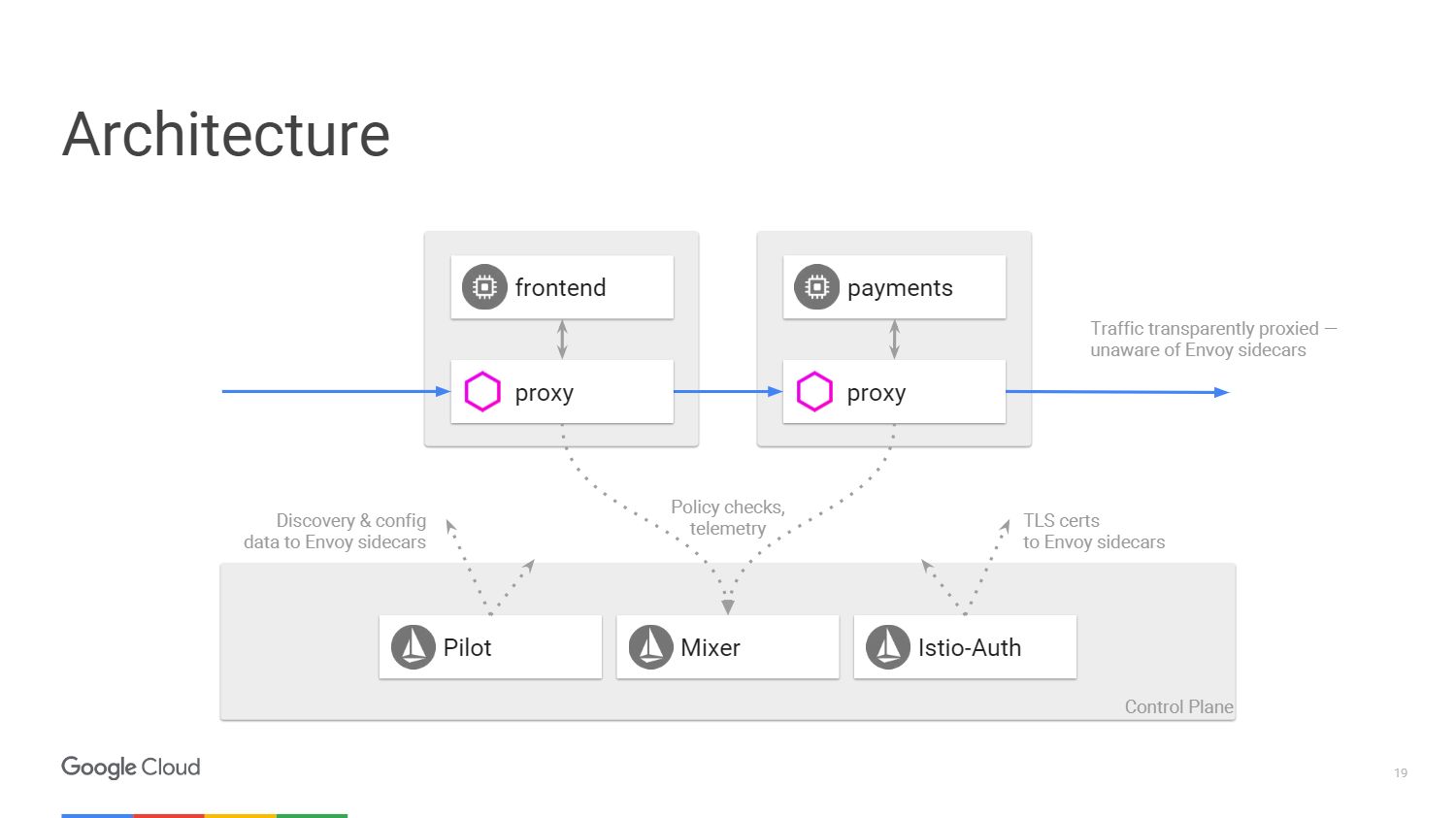

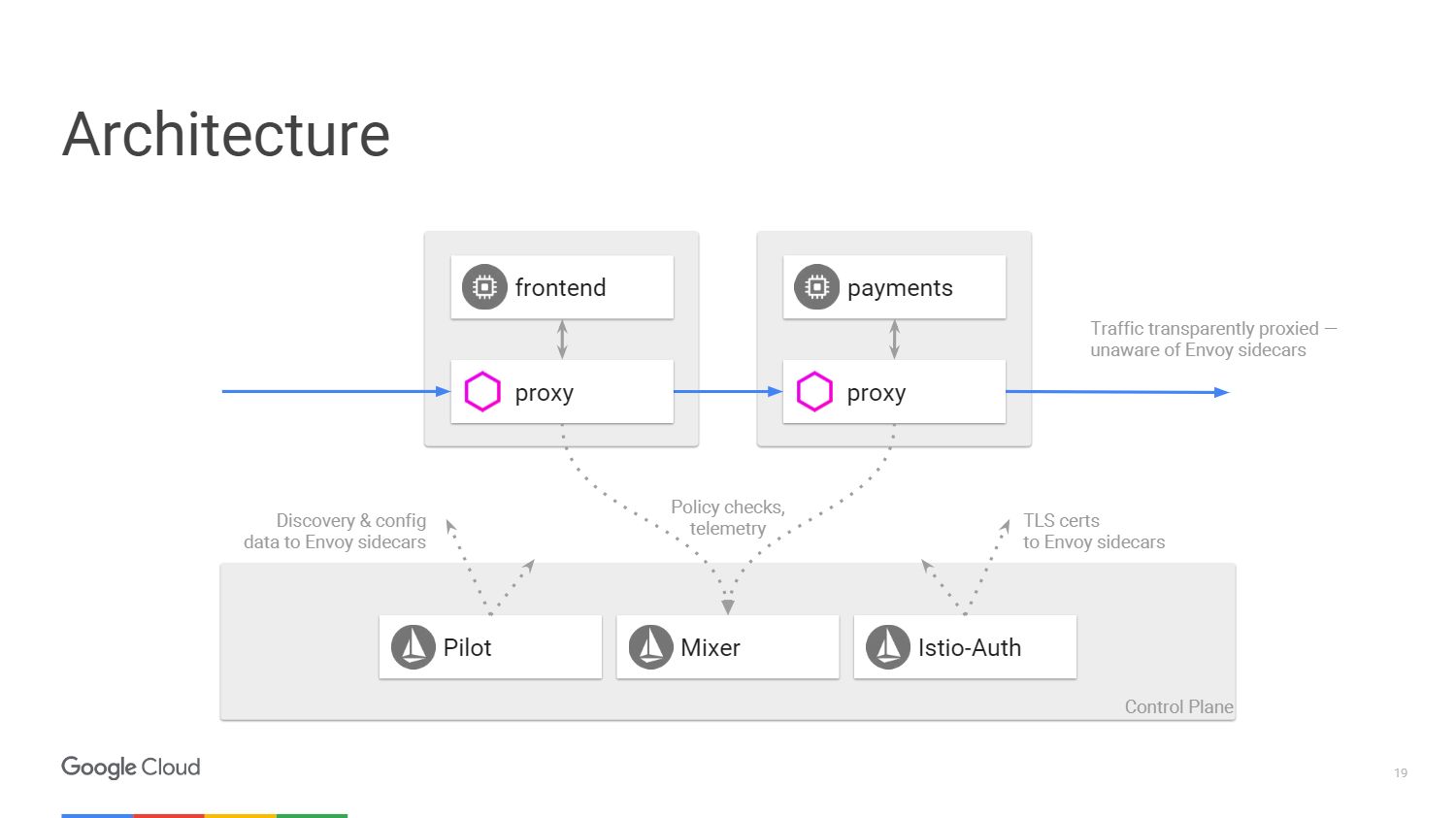

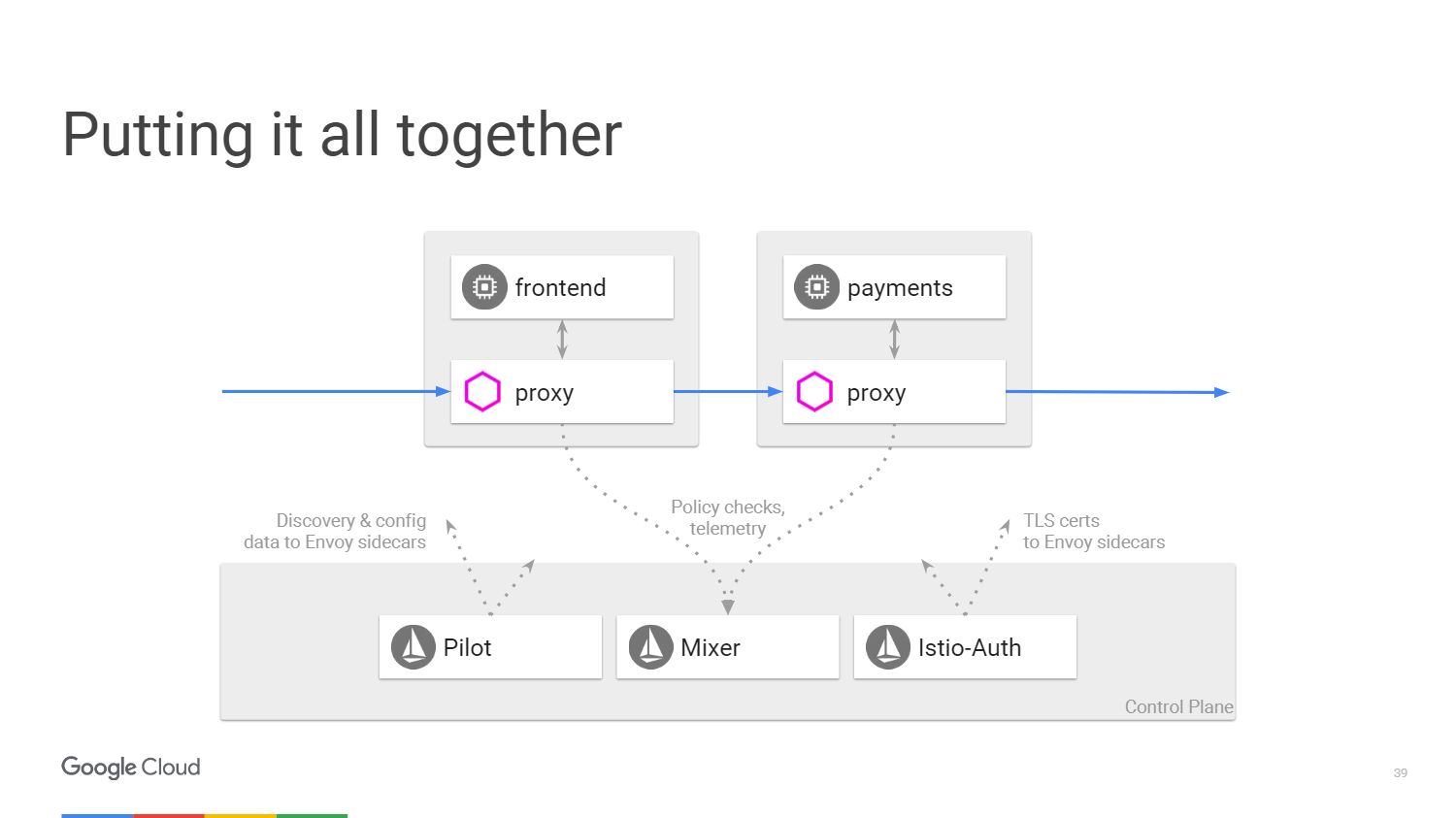

The figure shows the architecture of Istio. We take just two of the microservices mentioned earlier. Ultimately, the scheme is very similar to a software-defined network. The Envoy proxies deployed alongside the applications redirect traffic using iptables rules in the pod's network namespace. The control plane manages the proxies but does not handle traffic itself.

The control plane has three components. Pilot builds the configuration: it watches the rules, which can be changed through the Istio control plane API, and then updates Envoy, acting as its cluster discovery service. Istio-Auth acts as a certificate authority and issues TLS certificates to the proxies. The applications do not need SSL; they can talk plain HTTP, and the proxy handles encryption for them.

Mixer checks requests against policy and then reports telemetry. Without making any changes to the application, we can see everything that happens inside the cluster.

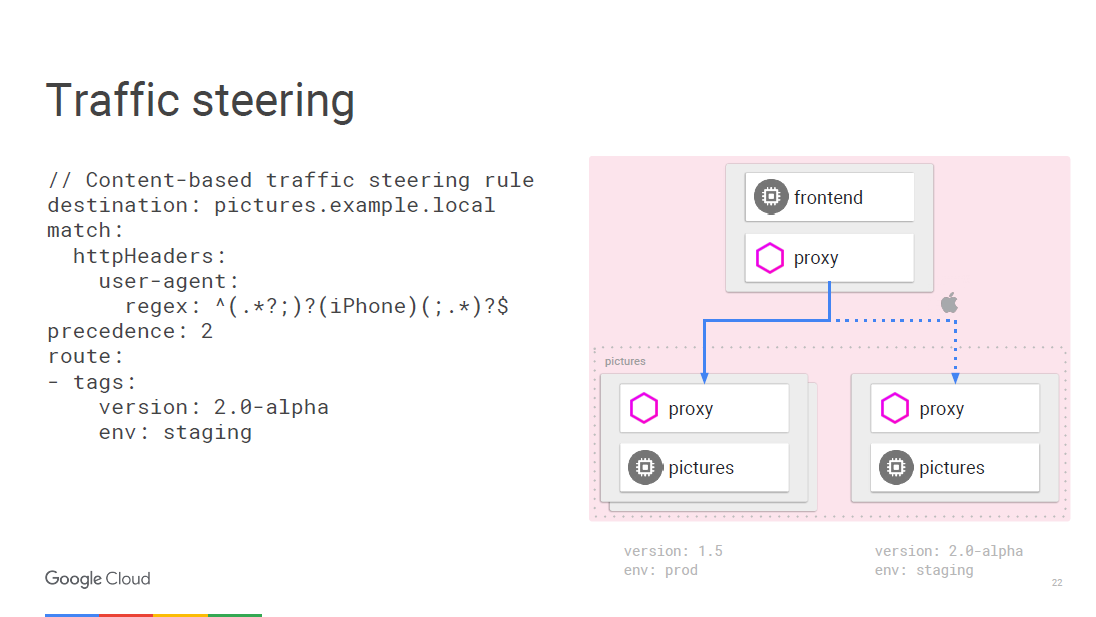

Advantages of Istio

Let's look more closely at the five things we get from Istio. First, traffic management. Istio decouples traffic control from infrastructure scaling. Previously, to send 5% of traffic to a new version, we would run something like 20 instances, 19 on the old version and one on the new (1/20 = 5%). With Istio, we can deploy as many instances as we need and separately specify what percentage of traffic goes to the new version. It is a simple traffic-splitting rule.
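The difference can be shown with a minimal Python sketch of a percentage-based split: the router picks a version by weight, no matter how many instances each version runs. In Istio such a rule is declared in configuration and enforced by Envoy; `make_router` and the version names are hypothetical.

```python
import bisect
import itertools
import random


def make_router(weights, rand=random.random):
    """Build a router that picks a version according to percentage weights.

    `weights` maps version name -> percent of requests; the percents must sum
    to 100. The split does not depend on how many instances each version runs.
    """
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    versions = list(weights)
    # Cumulative upper bounds, e.g. {"v1": 95, "v2": 5} -> [95, 100].
    bounds = list(itertools.accumulate(weights[v] for v in versions))

    def route():
        point = rand() * 100  # uniform in [0, 100)
        index = bisect.bisect_right(bounds, point)
        return versions[min(index, len(versions) - 1)]  # guard against rounding

    return route


# Usage: 95% of requests to v1 and 5% to v2, however many pods each has.
route = make_router({"v1": 95, "v2": 5})
counts = {"v1": 0, "v2": 0}
for _ in range(10_000):
    counts[route()] += 1
print(counts)
```

Over 10,000 requests the counts land close to 9,500 and 500; moving to 50/50 or 100/0 is a change of weights, not of instance counts.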

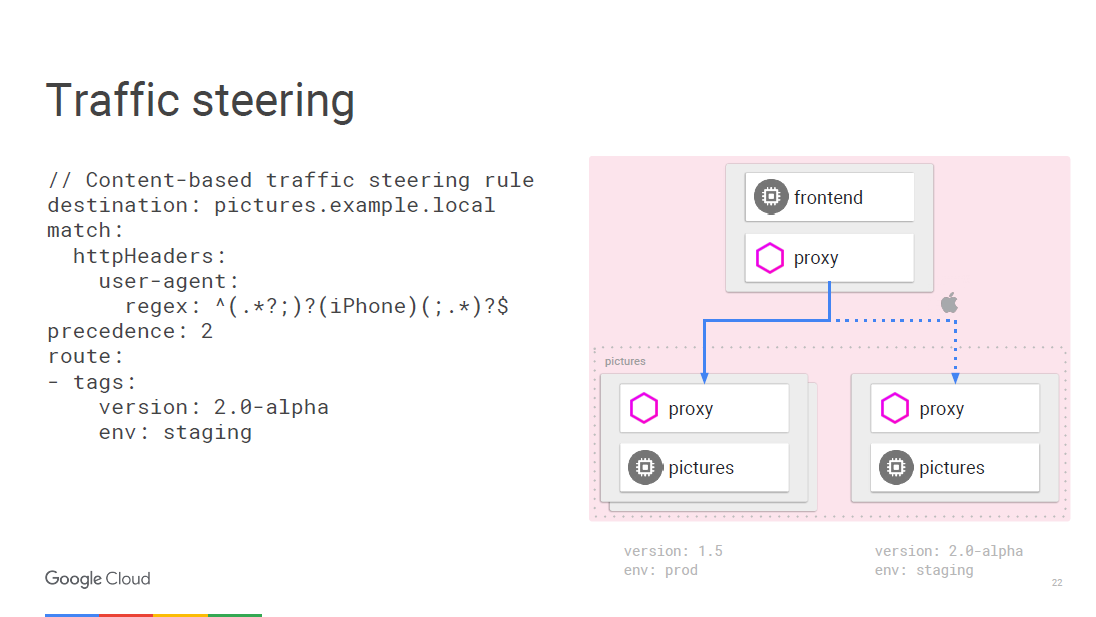

All of this can be programmed on the fly using rules. Envoy is updated as the configuration changes, without causing service failures. Because we work at the application layer, we can inspect requests, for example the User-Agent header, which is a layer 7 attribute.

For example, we can say that all traffic from iPhones follows a different rule, and send a share of that traffic to a new version we want to test on that device. An internal calling microservice can also specify which version it needs to connect to, and you can move it to another version, for example 2.0.
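Matching on layer 7 attributes can be sketched in a few lines of Python. The rules mirror the example above; the `x-version` header used to pin a version is a hypothetical convention, not an Istio built-in, and in Istio such rules live in configuration evaluated by Envoy rather than in application code.

```python
def choose_version(headers, default="v1"):
    """Pick a backend version from request headers (layer 7 attributes).

    Hypothetical rules mirroring the example in the text:
      * an internal caller may pin a version with an "x-version" header;
      * otherwise, requests whose User-Agent mentions "iPhone" go to "v2".
    """
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    pinned = normalized.get("x-version")
    if pinned:
        return pinned
    if "iPhone" in normalized.get("user-agent", ""):
        return "v2"
    return default


print(choose_version({"User-Agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 11_0 like Mac OS X)"}))  # v2
print(choose_version({"User-Agent": "curl/7.55.1"}))  # v1
print(choose_version({"X-Version": "2.0"}))  # 2.0
```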

The second advantage is visibility. When you can see inside the cluster, you can understand how it works. We do not need to build metrics instrumentation during development; metrics are already built into every component.

Some believe that keeping logs is enough for monitoring. In fact, what we need is a universal set of metrics that can be fed into any monitoring system.

This is the Istio dashboard, built with Prometheus. Remember to deploy Prometheus inside the cluster.

The example in the screenshot shows a number of metrics for the whole cluster. You can display more interesting things as well, for example the percentage of requests that return 5xx errors, which indicate failures. Response times are aggregated across all calling and called service instances in the cluster, and this requires no configuration. Istio knows what Prometheus supports and which services exist in your cluster, so Istio-Mixer can send metrics to Prometheus without additional settings.
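The 5xx percentage is simple arithmetic over request records. A minimal Python sketch, assuming each record is a `(service, status_code)` pair; in a real setup the mesh's metrics and a monitoring query would do this, and the record format here is a simplification.

```python
from collections import Counter


def error_ratio_by_service(requests):
    """Share of requests per destination service that returned a 5xx code.

    `requests` is an iterable of (service_name, http_status) pairs, a
    simplified stand-in for the per-request metrics the mesh collects.
    """
    total = Counter()
    errors = Counter()
    for service, status in requests:
        total[service] += 1
        if 500 <= status <= 599:
            errors[service] += 1
    return {service: errors[service] / total[service] for service in total}


sample = [("ratings", 200), ("ratings", 503), ("movies", 200), ("ratings", 200), ("ratings", 500)]
print(error_ratio_by_service(sample))  # {'ratings': 0.5, 'movies': 0.0}
```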

Let's see how this works. When you call a service, the proxy sends information about the call to Mixer, which records attributes such as response latency, status code, and IP address. Mixer normalizes them and sends them to whichever backends you have configured. For the main metrics there are Prometheus and InfluxDB adapters, but you can also write your own adapter and output data in any format for any other application. You do not have to change anything in the infrastructure to add a new metric.
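The normalize-once, fan-out-to-adapters design can be sketched in Python. This is not Mixer's adapter API (Mixer adapters are Go components with their own interfaces); `CallRecord`, the two adapters, and the raw report's field names are hypothetical. The point is that adding a backend means adding an adapter, while proxies and applications stay unchanged.

```python
from dataclasses import dataclass
from typing import Iterable, Protocol


@dataclass(frozen=True)
class CallRecord:
    """Normalized attributes of one proxied call (field names are illustrative)."""
    source: str
    destination: str
    status_code: int
    latency_ms: float
    source_ip: str


class Adapter(Protocol):
    def handle(self, record: CallRecord) -> None: ...


class InMemoryCounterAdapter:
    """Hypothetical backend: counts requests per (destination, status code)."""

    def __init__(self):
        self.counts = {}

    def handle(self, record):
        key = (record.destination, record.status_code)
        self.counts[key] = self.counts.get(key, 0) + 1


class LogLineAdapter:
    """Hypothetical backend: formats each call as a text log line."""

    def __init__(self):
        self.lines = []

    def handle(self, record):
        self.lines.append(
            f"{record.source} -> {record.destination} "
            f"{record.status_code} {record.latency_ms:.1f}ms from {record.source_ip}"
        )


def report(raw: dict, adapters: Iterable[Adapter]) -> CallRecord:
    """Normalize a raw proxy report once, then hand it to every adapter."""
    record = CallRecord(
        source=raw["source"],
        destination=raw["destination"],
        status_code=int(raw["code"]),
        latency_ms=float(raw["latency_ms"]),
        source_ip=raw["ip"],
    )
    for adapter in adapters:
        adapter.handle(record)
    return record
```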

If you want to dig deeper, use the Zipkin distributed tracing system. Information about all calls that go through Istio-Mixer can be sent to Zipkin. There you can see the whole chain of microservice calls involved in answering a user and easily find the service that slows processing down.

At the application level, there is almost no need to worry about tracing. Envoy sends all the necessary information to Mixer, which forwards it to a tracing backend such as Zipkin, Google Stackdriver Trace, or any other custom application.
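Finding the service that slows a request down comes down to comparing each span's own time with the time spent in its children. A minimal Python sketch, assuming spans are simple dictionaries with millisecond start and end times (Zipkin's actual span format differs) and that a span's children do not overlap each other; the trace is hypothetical.

```python
def self_times(spans):
    """Total exclusive time per service: span duration minus its direct children.

    Each span is a dict with "id", "parent" (None for the root), "service",
    "start_ms" and "end_ms". Assumes a span's children do not overlap.
    """
    child_time = {}
    for span in spans:
        if span["parent"] is not None:
            duration = span["end_ms"] - span["start_ms"]
            child_time[span["parent"]] = child_time.get(span["parent"], 0) + duration

    totals = {}
    for span in spans:
        own = (span["end_ms"] - span["start_ms"]) - child_time.get(span["id"], 0)
        totals[span["service"]] = totals.get(span["service"], 0) + own
    return totals


def slowest_service(spans):
    """Service that spent the most time on its own work."""
    totals = self_times(spans)
    return max(totals, key=totals.get)


# Hypothetical trace: frontend -> movies -> ratings.
trace = [
    {"id": 1, "parent": None, "service": "frontend", "start_ms": 0, "end_ms": 250},
    {"id": 2, "parent": 1, "service": "movies", "start_ms": 10, "end_ms": 240},
    {"id": 3, "parent": 2, "service": "ratings", "start_ms": 20, "end_ms": 230},
]
print(self_times(trace))       # {'frontend': 20, 'movies': 20, 'ratings': 210}
print(slowest_service(trace))  # ratings
```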

Let's talk about resilience and efficiency.

Timeouts on calls between services matter first of all for health checking, for example in load balancers. We can inject faults into these connections and see what happens. Consider an example. Suppose service A calls service B, the movie service. A waits 100 milliseconds for a response from the movie service and makes at most 3 attempts if no result arrives. So in the worst case, A spends 300 milliseconds before it reports a failed call.

Next, suppose the movie service has to fetch the movie's rating from another microservice. The ratings call has a timeout of 200 milliseconds and is given two attempts. So a call to the movie service may have to wait 400 milliseconds if the star-rating service is unavailable. But remember that after 300 ms service A will already have declared the movie service broken, and we will never learn the real reason for the failure. Setting timeouts and testing what happens when they fire is a great way to find all sorts of subtle bugs in a microservice architecture.
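The arithmetic in this example is easy to check mechanically. A minimal Python sketch, assuming every attempt runs until its timeout and ignoring any delay between retries; `worst_case_ms` and `inner_fits` are hypothetical helper names.

```python
def worst_case_ms(timeout_ms, attempts):
    """Longest a caller waits before giving up: every attempt hits its timeout.
    Backoff between retries is ignored, as in the example in the text."""
    return timeout_ms * attempts


def inner_fits(outer_timeout_ms, inner_timeout_ms, inner_attempts):
    """True if a downstream call, with all its retries, can finish or fail within
    a single attempt of the upstream caller. If not, the caller times out first
    and never sees the real downstream error."""
    return worst_case_ms(inner_timeout_ms, inner_attempts) <= outer_timeout_ms


# Values from the example: A -> movie service, movie service -> ratings.
a_total = worst_case_ms(100, 3)
ratings_total = worst_case_ms(200, 2)
print(a_total, ratings_total)   # 300 400
print(inner_fits(100, 200, 2))  # False
```

The per-attempt check is stricter than comparing 400 ms with 300 ms: here even a single 200 ms ratings attempt outlasts one of A's 100 ms attempts, so A's retries hide the ratings failure.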

Now let's look at efficiency. Kubernetes' own load balancing works only at layer 4. We created Ingress to bring load balancing up to layer 7 at the edge of the cluster, and Istio acts as a layer 7 (application-layer) load balancer between services.

We also perform TLS offloading: Envoy uses a modern, well-hardened TLS implementation, so applications do not have to worry about SSL library vulnerabilities themselves.

Another advantage of Istio is security.

What are the basic security features in Istio? Istio-Auth works in several ways. It uses SPIFFE, a common framework and standard for service identity. For traffic, the Istio Certificate Authority issues certificates for the service accounts we run inside the cluster. These certificates are SPIFFE-compliant and are delivered to Envoy using Kubernetes secrets. Envoy uses the keys for mutual TLS authentication. As a result, backend applications receive identities on which policies can then be based.

Istio manages the root certificates itself, so you don't have to worry about revocation and expiration. The system responds to autoscaling: each new instance gets its own certificate, with no manual setup. You do not need to configure a firewall by hand; users can rely on Kubernetes network policy to implement firewalls between containers.
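Istio encodes a workload's identity as a SPIFFE URI of the form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`, for example `spiffe://cluster.local/ns/default/sa/default`. A minimal Python sketch of how a backend could base a policy decision on that identity, assuming the peer's ID has already been extracted from a verified mutual-TLS certificate; the allow-list and `may_call_payments` are hypothetical, not an Istio API.

```python
from urllib.parse import urlparse


def parse_spiffe_id(uri):
    """Split an Istio-style SPIFFE ID into (trust_domain, namespace, service_account).

    Expects the form spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>.
    """
    parsed = urlparse(uri)
    parts = parsed.path.strip("/").split("/")
    if parsed.scheme != "spiffe" or len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa":
        raise ValueError(f"not an Istio-style SPIFFE ID: {uri}")
    return parsed.netloc, parts[1], parts[3]


# Hypothetical policy: caller identities allowed to reach the payment service.
ALLOWED_CALLERS = {("cluster.local", "shop", "frontend")}


def may_call_payments(peer_spiffe_id):
    """Allow the call only if the peer's verified identity is on the allow-list."""
    try:
        identity = parse_spiffe_id(peer_spiffe_id)
    except ValueError:
        return False  # unparseable identities are denied
    return identity in ALLOWED_CALLERS
```

The policy refers to service accounts rather than IP addresses, so it keeps working as pods are rescheduled and autoscaled.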

Finally, policy enforcement. Mixer is the integration point for infrastructure backends, through which you can extend the Service Mesh. Services can move easily within a cluster and be deployed in multiple environments, in the cloud or on premises. Everything is designed for operational control of the calls that go through Envoy. We can allow or deny specific calls, set preconditions for calls to be let through, and limit their rate and number. For example, you might allow 20 free requests per day to one of your services; once a user has made 20 requests, subsequent ones are rejected.

Preconditions can include things like passing authentication, an ACL check, or being on a whitelist. Quota management can be used when everyone who uses a service should get the same access rate. Finally, Mixer collects telemetry on requests and responses, which lets both service producers and service consumers examine that telemetry.
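The 20-free-requests-per-day example can be sketched as a per-user daily counter in Python. This is an in-memory, single-process illustration under stated assumptions (days are counted in UTC, and counters for past days are never cleaned up), not Mixer's quota implementation; `DailyQuota` and `utc_today` are hypothetical names.

```python
import datetime


def utc_today():
    """Current calendar date in UTC."""
    return datetime.datetime.now(datetime.timezone.utc).date()


class DailyQuota:
    """Allow at most `limit` requests per user per calendar day.

    In-memory and single-process; `today` is injectable for testing.
    """

    def __init__(self, limit=20, today=utc_today):
        self.limit = limit
        self.today = today
        self.used = {}  # (user, date) -> requests counted so far

    def allow(self, user):
        key = (user, self.today())
        if self.used.get(key, 0) >= self.limit:
            return False  # quota exhausted: reject until the next day
        self.used[key] = self.used.get(key, 0) + 1
        return True


quota = DailyQuota(limit=20)
results = [quota.allow("alice") for _ in range(21)]
print(results.count(True), results[-1])  # 20 False
```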

Remember the first slide, with the photo app where we started our look at Istio? All of the above is hidden behind that simple picture. At the top level, everything you need happens automatically. You deploy the application without worrying about how to define security policies or configure routing rules, and the application works exactly as expected.

Istio supports earlier versions of Kubernetes, but the Initializers feature I mentioned is available only in version 1.7 and later. It is an alpha feature in Kubernetes, so I recommend using Google Container Engine alpha clusters: clusters that you can turn on for a limited number of days, with all features, including alpha ones, enabled.

First of all, Istio is an open source project on GitHub. We have just released version 0.2. In version 0.1, Istio could manage objects only within a single Kubernetes namespace. Starting with 0.2, Istio runs in its own namespace and works across the whole Kubernetes cluster. We also added support for managing services that run on virtual machines: you can deploy Envoy on a virtual machine and secure the services that run on it. In the future, Istio will support other platforms, such as Cloud Foundry.

A quick-start guide to installing the framework is available in the Istio documentation. If you have a Google Container Engine cluster running Kubernetes 1.8 with alpha features enabled, installing Istio takes just one command.

The article is based on the Craig Box report at our last DevOops 2017 conference. The video and translation of the report is under the cut.

Craig Box (Craig Box, Twitter ) - DevRel from Google, responsible for the direction of microservices and tools Kubernetes and Istio. His current story is about managing microservices on these platforms.

')

Let's start with a relatively new concept called Service Mesh. This term is used to describe the network of microservices interacting with each other, of which the application consists.

At a high level, we view the network as a pipe that simply moves bits. We do not want to worry about them or, for example, about MAC addresses in applications, but we strive to think about the services and connections that they need. If you look at it from an OSI point of view, then we have a third-level network (with routing and logical addressing functions), but we want to think in terms of the seventh (with access to network services).

What should a real network of the seventh level look like? Perhaps we want to see something like tracing traffic around problem services. To be able to connect to the service, and at the same time the level of the model was raised up from the third level. We want to get an idea of what is happening in the cluster, find unintended dependencies, find out the root causes of failures. We also need to avoid unnecessary overhead, for example, a connection with a high latency or a connection to servers with cold or not fully heated caches.

We need to ensure that traffic between services is protected from trivial attacks. Mutual TLS authentication is required, but without embedding the appropriate modules in each application we write. It is important to be able to control what surrounds our applications not only at the connection level, but also at a higher level.

Service Mesh is a layer that allows us to solve the above problems in the micro-service environment.

Monolith and microservices: pros and cons

But first we ask ourselves, why should we solve these problems at all? How did we do software development before? We had an application that looks something like this - like a monolith.

It's great: all the code is on our palm. Why not continue to use this approach?

Yes, because the monolith has its own problems. The main difficulty is that if we want to rebuild this application, we must redeploy each module, even if nothing has changed. We have to create an application in the same language or in compatible languages, even if different teams are working on it. In fact, the individual parts cannot be tested independently of each other. It's time to change, it's time microservices.

So, we have divided the monolith into pieces. You may notice that in this example we have removed some of the unnecessary dependencies and stopped using the internal methods called from other modules. We created services from models that were used earlier, creating abstractions in cases where we need to save state. For example, each service must have an independent state so that when you access it you don’t worry about what is happening in the rest of our environment.

What was the result?

We left the world of giant apps, getting what really looks great. We accelerated development, stopped using internal methods, created services and now we can scale them independently, make the service more without the need to consolidate everything else. But what is the price of change that we lost in the process?

We had reliable calls within applications, because you just called a function or module. We have replaced a reliable call inside the module with an unreliable remote procedure call. But not always the service on the other side is available.

We were safe, using the same process inside the same machine. Now we connect to services that can be on different machines and on an unreliable network.

The new approach to the network may be the presence of other users who are trying to connect to services. Delays have increased, and at the same time their measurement capabilities have decreased. Now we have step-by-step connections in all services that create one module call, and we can no longer just look at the application in the debugger and find out exactly what caused the crash. And this problem must somehow be solved. Obviously, we need a new set of tools.

What can be done?

There are several options. We can take our application and say that if RPC does not work the first time, then you should try again, and then again and again. Wait a while and try again or add a jitter. We can also add the entry - exit traces to say that there was a start and end of the call, which for me is equivalent to debugging. You can add infrastructure to provide authentication of connections and teach all of our applications to work with TLS encryption. We will have to take on the burden of maintaining individual commands and constantly keep in mind the various problems that may arise in SSL libraries.

Maintaining consistency across multiple platforms is a thankless task. I would like the space between applications to become reasonable, so that the possibility of tracking appears. We also need the ability to change the configuration at run time in order not to recompile or not to restart the migration application. Here these Wishlist and implements Service Mesh.

Istio

Let's talk about Istio .

Istio is a complete framework for connecting, managing and monitoring the microservice architecture. Istio is designed to work on top of Kubernetes. He himself does not deploy software and does not care to make it available on the machines that we use for this purpose with containers in Kubernetes.

In the figure we see three different slices of machines and the blocks that make up our microservices. We have a way to group them together using the mechanisms provided by Kubernetes. We can target and say that a specific group, which may have automatic scaling, is attached to a web service or may have other deployment methods, will contain our web service. At the same time, we do not need to think about machines, we operate with terms of the level of access to network services.

This situation can be represented as a diagram. Consider an example where we have a mechanism that does some image processing. On the left is the user, the traffic from which comes to us in microservice.

To receive payment from the user, we have a separate payment microservice that calls an external API, which is located outside the cluster.

To handle user logins to the system, we have an authentication microservice, and it has states saved, again, outside of our cluster in the Cloud SQL database .

What does Istio do? Istio improves Kubernetes. You set it up using the alpha function in Kubernetes called Initializer. When deploying software, kubernetes will notice it and ask if we want to change and add another container inside each kubernetes. This container will handle the paths and routing, be aware of all changes to the application.

This is how the scheme looks like with Istio.

We have external machines that provide inbound and outbound proxies for traffic in a particular service. We can unload functions that we already talked about. We do not need to teach the application how to perform telemetry or tracing using TLS. But we can add other things inside: automatic interruption, speed limit, canary release .

All traffic will now pass through proxy servers on external machines, and not directly to services. Kubernetes does everything on the same IP address. We will be able to intercept traffic that would go to the front or end services.

The external proxy that uses Istio is called Envoy .

Envoy is actually older than Istio, it was developed in Lyft. Works in production for over a year, launching the entire infrastructure of microservices. We chose Envoy for the Istio project in collaboration with the community. Thus, Google, IBM and Lyft are the three companies that are still working on it.

Envoy is written in C ++ version 11. It has been in production for more than 18 months before becoming an open source project. It does not take a lot of resources when you connect it to your services.

Here are a few things that Envoy can do. This is the creation of a proxy server for HTTP, including HTTP / 2 and protocols based on it, such as gRPC. It can also redirect to other protocols at the binary level. Envoy controls your infrastructure zone so you can make your part autonomous. It can handle a large number of network connections with retries and waits. You can set a certain number of attempts to connect to the server before stopping the call and send your servers information that the service is not responding.

No need to worry about restarting the application to add configuration to it. You simply connect to it using an API that is very similar to kubernetes, and change the configuration at runtime.

The Istio team contributed a lot to the UpStream Envoy Platform. For example, injection error notification. We made it so that we could see how the application behaves if the number of object requests that failed has been exceeded. They also implemented the graphical display and traffic sharing features to handle cases when canary deployment is used.

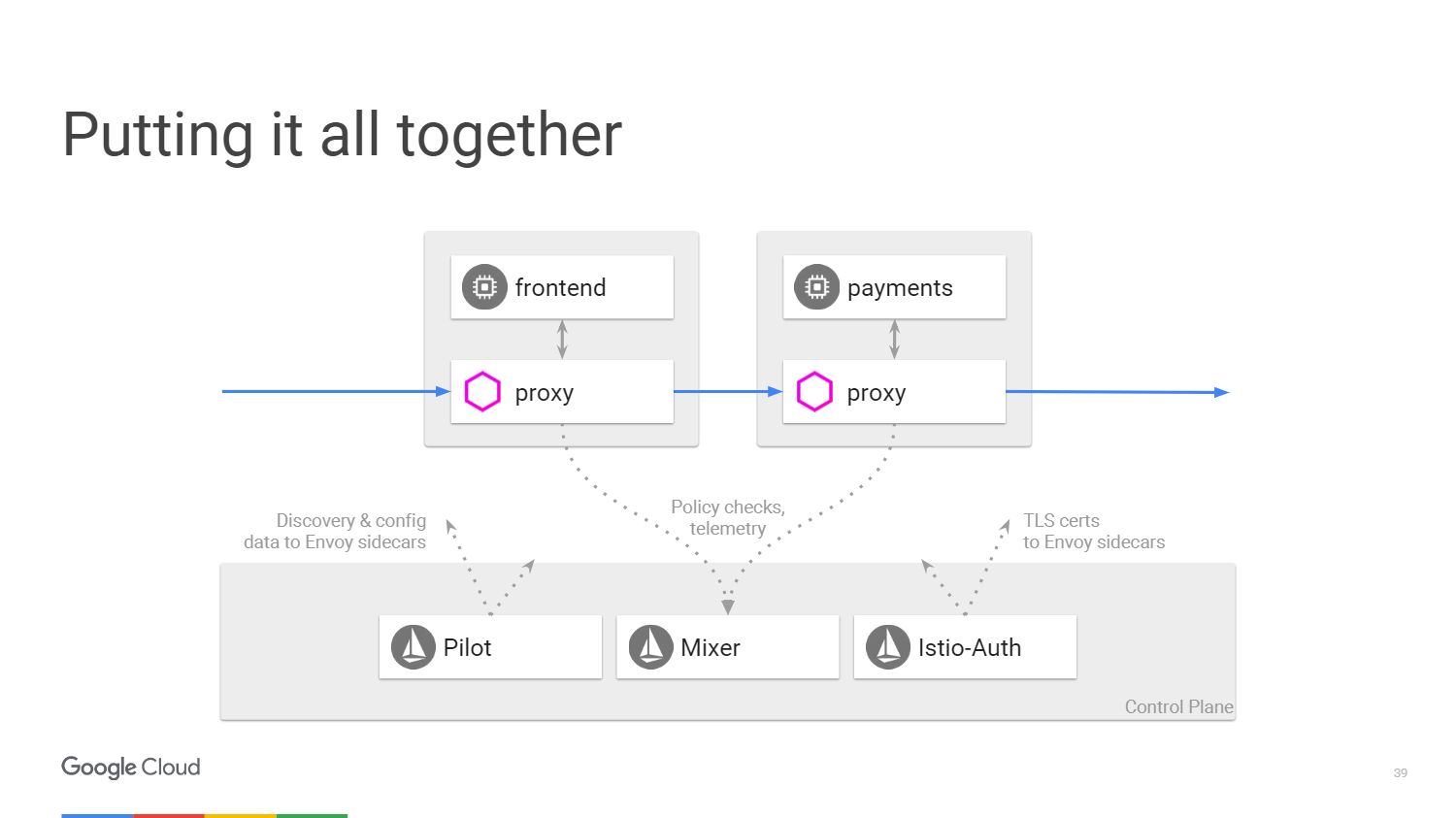

The figure shows what the Istio system architecture looks like. We will take only two microservices that we mentioned earlier. Ultimately, everything in the scheme is very similar to the software-defined network. The Envoy proxy, which we deployed with applications, migrates traffic using IP tables in the namespace. The control panel is responsible for managing the console, but it does not handle the traffic itself.

We have three components. Pilot, who creates the configuration, looks at the rules that can be changed using the Istio control panel API, and then updates Envoy so that it behaves like a cluster discovery service. Istio-Auth serves as a certificate authority and sends TLS certificates to proxy servers. The application does not require SSL, they can connect via HTTP, and the proxy will handle all of this for you.

Mixer processes requests to make sure that you comply with the security policy, and then transmits telemetry information. Without making any changes to the application, we can see everything that happens inside our cluster.

Advantages of Istio

So let's talk more about the five things we get from Istio. First consider the traffic management . We can separate traffic control from infrastructure scaling, so earlier we could do something like 20 application instances and 19 of them will be on the old version, and one on the new, that is, 5% of the traffic will fall on the new version. With Istio, we can deploy any number of instances that we need, and at the same time indicate what percentage of traffic should be sent to new versions. Simple separation rule.

Everything can be programmed on the fly using rules. Envoy will be updated periodically as the configuration changes, and this will not lead to service failures. Since we work at the level of access to network services, we can look at the packages, and in this case it is possible to climb into the user agent, which is located on the third level of the network.

For example, we can say that any traffic from the iPhone follows a different rule, and we are going to send a certain amount of traffic to a new version that we want to test for a specific device. The internal calling microservice can determine which specific version it needs to connect to, and you can transfer it to another version, for example, 2.0.

The second advantage is transparency . When you have a presentation inside a cluster, you can understand how it is done. We do not need to create a toolkit for metrics during the development process. Metrics are already in each component.

Some believe that it is enough to keep a record of logs for monitoring. But in fact, all we need is to have such a universal set of indicators that can be fed to any monitoring service.

This is the Istio toolbar, created using the Prometheus service. Do not forget to deploy it inside the cluster.

The example in the screenshot shows a number of monitored parameters specific to the entire cluster. You can display more interesting things, for example, what percentage of applications gives more than 500 errors, which means failure. The response time is aggregated in all calling and answering instances of services within the cluster; this functionality does not require any configuration. Istio knows that Prometheus supports, and he knows what services are available in your cluster, so Istio-Mixer can send metrics to Prometheus without additional settings.

Let's see how it works. When you call a service, the proxy sends information about that call to Mixer, which captures attributes such as response latency, status code, and IP address. Mixer normalizes them and sends them to whatever backends you have configured. For the core metrics there are ready-made Prometheus and InfluxDB adapters, but you can also write your own adapter and output the data in any format for any other application. And adding a new metric does not require changing anything in your infrastructure.
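The normalize-then-fan-out flow described above can be sketched as a small adapter pattern. Everything here is hypothetical: the Adapter interface, the raw field names (dst, code, duration_ms, src_ip) and the attribute names are invented for illustration and are not Mixer's actual API or attribute vocabulary.

```python
from abc import ABC, abstractmethod
from collections import defaultdict
from typing import Dict, Iterable, List


class Adapter(ABC):
    """A backend that receives normalized call attributes (hypothetical interface)."""

    @abstractmethod
    def handle(self, attributes: Dict[str, object]) -> None: ...


class CounterAdapter(Adapter):
    """Prometheus-style counter keyed by destination and status code."""

    def __init__(self) -> None:
        self.counts: Dict[tuple, int] = defaultdict(int)

    def handle(self, attributes: Dict[str, object]) -> None:
        self.counts[(attributes["destination"], attributes["response_code"])] += 1


class LineAdapter(Adapter):
    """A custom adapter that renders each call as a line in its own format."""

    def __init__(self) -> None:
        self.lines: List[str] = []

    def handle(self, attributes: Dict[str, object]) -> None:
        self.lines.append(
            f'{attributes["source_ip"]} -> {attributes["destination"]} '
            f'{attributes["response_code"]} {attributes["latency_ms"]}ms'
        )


def normalize(raw: Dict[str, str]) -> Dict[str, object]:
    """Map proxy-reported fields (hypothetical names) onto one vocabulary."""
    return {
        "destination": raw["dst"],
        "response_code": int(raw["code"]),
        "latency_ms": float(raw["duration_ms"]),
        "source_ip": raw["src_ip"],
    }


class Dispatcher:
    """Normalizes each report once and hands it to every configured adapter."""

    def __init__(self, adapters: Iterable[Adapter]) -> None:
        self.adapters = list(adapters)

    def report(self, raw: Dict[str, str]) -> None:
        attributes = normalize(raw)
        for adapter in self.adapters:
            adapter.handle(attributes)
```

Adding a new backend means adding one more Adapter to the dispatcher; the services producing the calls are not touched.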

For a more in-depth investigation, use the Zipkin distributed tracing system. Information about all calls routed through Istio-Mixer can be sent to Zipkin. There you will see the entire chain of microservice calls involved in answering a user request, and you can easily find the service that is slowing down processing.
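Finding the service that delays a response comes down to comparing each span's own time with the time spent waiting on its child calls. A minimal sketch, assuming a simplified span model (not Zipkin's data format), a complete trace in which every parent_id refers to a span in the list, and child calls made one after another; parallel child calls would need interval merging instead of a plain sum.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    """Simplified span: one service's handling of one call in the chain."""
    span_id: str
    parent_id: Optional[str]  # None for the root span
    service: str
    duration_ms: float


def self_times(spans: List[Span]) -> Dict[str, float]:
    """Time each span spent itself, excluding time spent in its child calls.

    Assumes children run sequentially, so their durations can be summed.
    """
    child_total = {s.span_id: 0.0 for s in spans}
    for s in spans:
        if s.parent_id is not None:
            child_total[s.parent_id] += s.duration_ms
    return {s.span_id: s.duration_ms - child_total[s.span_id] for s in spans}


def slowest_service(spans: List[Span]) -> str:
    """Name of the service whose own processing time is largest."""
    times = self_times(spans)
    return max(spans, key=lambda s: times[s.span_id]).service
```

In a chain where the frontend takes 250 ms in total, the movie service 220 ms and the rating service 200 ms, the frontend itself accounts for only 30 ms and the movie service for 20 ms, so the rating service is the one to investigate.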

At the application level, there is almost nothing to worry about when it comes to tracing. Envoy itself sends all the necessary information to Mixer, which forwards it to a tracing backend such as Zipkin, Google Stackdriver Trace, or any other application of your choice.
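The "almost" matters: Envoy cannot tell which outgoing call belongs to which incoming request, so Istio's tracing documentation asks applications to copy the tracing headers from each incoming request onto the requests it makes. A minimal sketch of that step; the header list follows the B3 / Zipkin-style set given in Istio's documentation, but check the docs for your Istio version, and the function name is ours.

```python
from typing import Dict

# Tracing headers listed in Istio's documentation for propagation
# (B3 / Zipkin style); verify against the docs for your Istio version.
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "x-ot-span-context",
)


def propagate_trace_headers(incoming: Dict[str, str]) -> Dict[str, str]:
    """Return the tracing headers to attach to an outgoing request.

    HTTP header names are case-insensitive, so they are compared lowercased.
    """
    lowered = {name.lower(): value for name, value in incoming.items()}
    return {name: lowered[name] for name in TRACE_HEADERS if name in lowered}
```

The returned dictionary is merged into the headers of every downstream call made while handling the request; everything else (timing, reporting) is done by the proxy.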

Let's talk about fault tolerance and efficiency.

Timeouts on calls between services are what keeps the system healthy when something fails, so we want to test them: we inject faults into the connection between services and see what happens. Consider an example. Suppose service A calls service B, the movie service. Service A waits 100 milliseconds for a response from the movie service and makes up to 3 attempts if no result arrives. In effect, service A will wait up to 300 milliseconds before reporting a failed call.

Next, suppose the movie service gets the movie's rating from another microservice, with a 200 millisecond timeout and two attempts. If the rating service is unavailable, a call to the movie service can take up to 400 milliseconds. But, as we saw, service A gives up after 300 ms and reports that the movie service is down, so we never learn the real cause of the failure. Setting timeouts and testing what happens when they fire is a great way to find all sorts of subtle bugs in a microservice architecture.
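The arithmetic in this example can be checked mechanically. A minimal sketch, using the talk's numbers and, like the example, ignoring backoff between retries and network time; CallPolicy and callee_failure_is_hidden are names made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CallPolicy:
    """Per-hop timeout and retry settings (hypothetical helper type)."""
    timeout_ms: int  # how long one attempt may take
    attempts: int    # total attempts, including the first one

    def worst_case_ms(self) -> int:
        """Longest the caller waits if every attempt times out.

        Ignores backoff between retries and network time, as the example does.
        """
        return self.timeout_ms * self.attempts


def callee_failure_is_hidden(caller_to_callee: CallPolicy,
                             callee_downstream: CallPolicy) -> bool:
    """True if the caller abandons an attempt before the callee has exhausted
    its own retries, so the caller sees a timeout instead of the real error."""
    return callee_downstream.worst_case_ms() > caller_to_callee.timeout_ms


# Values from the example: A -> movie service, movie service -> rating service.
A_TO_MOVIES = CallPolicy(timeout_ms=100, attempts=3)
MOVIES_TO_RATINGS = CallPolicy(timeout_ms=200, attempts=2)
```

The check compares against the per-attempt timeout because each 100 ms attempt is cut off on its own; comparing whole budgets, 300 ms against 400 ms as in the talk, reaches the same conclusion here.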

Now let's look at efficiency. Kubernetes' own load balancing operates only at layer 4. We built an ingress controller that extends load balancing up to layer 7, and Istio implements load balancing at the application layer (layer 7).
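One practical difference between layer-4 and layer-7 balancing shows up with long-lived connections, such as HTTP/2 or gRPC, that carry many requests: a connection-level balancer can only spread connections, while a proxy that understands requests can spread each request. A minimal simulation with plain round-robin; the function names and backend labels are illustrative.

```python
from collections import Counter
from itertools import cycle
from typing import List


def l4_balance(connections: List[int], backends: List[str]) -> Counter:
    """Connection-level (L4) balancing: each connection is pinned to one
    backend, and every request on it goes there.

    connections[i] is the number of requests sent over connection i.
    """
    rr = cycle(backends)
    load: Counter = Counter()
    for n_requests in connections:
        load[next(rr)] += n_requests
    return load


def l7_balance(connections: List[int], backends: List[str]) -> Counter:
    """Request-level (L7) balancing: the proxy picks a backend per request,
    even when requests share a single long-lived connection."""
    rr = cycle(backends)
    load: Counter = Counter()
    for n_requests in connections:
        for _ in range(n_requests):
            load[next(rr)] += 1
    return load
```

With one connection carrying nine requests and three backends, the layer-4 version sends all nine requests to the first backend, while the layer-7 version gives each backend three.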

We perform TLS offloading in Envoy, which uses a modern, well-tested SSL library, so individual applications don't have to worry about SSL vulnerabilities.

Another advantage of Istio is security.

What are the basic security features in Istio? The Istio-Auth component works in several directions. It uses SPIFFE, a common framework and set of standards for service identity. For traffic, there is the Istio Certificate Authority, which issues certificates for the service accounts we run inside the cluster. These certificates are SPIFFE-compliant and are delivered to Envoy using Kubernetes secrets. Envoy uses the keys for mutual (two-way) TLS authentication. As a result, backend applications receive identities on which policies can then be based.
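In Istio on Kubernetes, the identity in a workload certificate is a SPIFFE URI of the form spiffe://&lt;trust-domain&gt;/ns/&lt;namespace&gt;/sa/&lt;service-account&gt;, which is what makes identity-based policy possible. A minimal sketch of building and checking such IDs; the default trust domain cluster.local is an assumption (it is configurable), and the allow-list policy is a hypothetical example, not Istio's policy engine.

```python
from typing import NamedTuple, Set, Tuple

TRUST_DOMAIN = "cluster.local"  # assumed default; configurable in practice


class ServiceIdentity(NamedTuple):
    trust_domain: str
    namespace: str
    service_account: str


def spiffe_id(namespace: str, service_account: str,
              trust_domain: str = TRUST_DOMAIN) -> str:
    """Build a Kubernetes-style SPIFFE ID for a service account."""
    return f"spiffe://{trust_domain}/ns/{namespace}/sa/{service_account}"


def parse_spiffe_id(uri: str) -> ServiceIdentity:
    """Split a spiffe://<domain>/ns/<ns>/sa/<sa> URI into its parts."""
    prefix = "spiffe://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a SPIFFE ID: {uri!r}")
    trust_domain, _, path = uri[len(prefix):].partition("/")
    parts = path.split("/")
    if len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa":
        raise ValueError(f"unexpected SPIFFE path: {uri!r}")
    return ServiceIdentity(trust_domain, parts[1], parts[3])


def is_allowed(peer_uri: str, allowed: Set[Tuple[str, str]]) -> bool:
    """Hypothetical policy: accept only listed (namespace, account) peers."""
    try:
        ident = parse_spiffe_id(peer_uri)
    except ValueError:
        return False
    return (ident.trust_domain == TRUST_DOMAIN
            and (ident.namespace, ident.service_account) in allowed)
```

The peer URI would come from the client certificate verified during mutual TLS, so the policy decision rests on a cryptographic identity rather than on an IP address.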

Istio manages the root certificates itself, so you don't have to worry about revocation and expiration. The system also keeps up with autoscaling: when a new instance comes up, it gets its own certificate, with no manual configuration. You do not need to configure a firewall by hand either: Kubernetes network policy can be used to implement firewalls between containers.
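Automatic rotation boils down to a periodic check of how much of a certificate's lifetime has elapsed. A minimal sketch of such a check; the function name and the default of renewing at half of the lifetime are assumptions for illustration, not Istio's actual setting.

```python
from datetime import datetime


def should_rotate(not_before: datetime, not_after: datetime, now: datetime,
                  renew_at_fraction: float = 0.5) -> bool:
    """Return True once `renew_at_fraction` of the certificate's lifetime
    has elapsed (hypothetical policy; 0.5 is an illustrative default)."""
    if not 0 < renew_at_fraction <= 1:
        raise ValueError("renew_at_fraction must be in (0, 1]")
    lifetime = not_after - not_before
    return now >= not_before + lifetime * renew_at_fraction
```

Renewing well before expiry leaves room for retries if the certificate authority is briefly unreachable, which is why rotation is usually triggered at a fraction of the lifetime rather than at the deadline.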

Finally, policy enforcement. Mixer is the integration point between the service mesh and infrastructure backends, and you can extend it. Services can easily move within a cluster and be deployed in multiple environments, in the cloud or on premises. Everything is built around operational control of the calls that pass through Envoy. We can allow or deny specific calls, set preconditions for the calls that are let through, and limit their rate and number. For example, you might allow 20 free requests per day to some of your services; once a user has made 20 requests, subsequent ones are not processed.
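The "20 free requests per day" example translates into a per-caller, per-day counter. A minimal in-memory sketch; the DailyQuota class is hypothetical and is not how Mixer's quota adapters are implemented (a real deployment keeps this state in a shared backend, and this sketch never evicts old days).

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Tuple


class DailyQuota:
    """Admit at most `limit` requests per caller per calendar day."""

    def __init__(self, limit: int = 20) -> None:
        self.limit = limit
        # (caller, day) -> requests admitted so far; old days are never evicted.
        self._used: Dict[Tuple[str, date], int] = defaultdict(int)

    def allow(self, caller: str, day: date) -> bool:
        """Count the request and return True, or return False if over quota."""
        key = (caller, day)
        if self._used[key] >= self.limit:
            return False
        self._used[key] += 1
        return True
```

Rejected requests are not counted, so a caller who hits the limit stays at exactly 20 admitted requests for that day and starts fresh the next day.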

Preconditions can include, for example, passing authentication, an ACL check, or being on a whitelist. Quota management is useful when everyone who uses a service should get the same access rate. Finally, Mixer collects telemetry on how requests and responses were processed, which lets service producers and consumers view that telemetry through their monitoring services.
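The three preconditions named above can be sketched as a chain of checks that stops at the first failure and reports why the call was denied. The CallContext and PolicyChecker types, the operation strings and the order of checks are assumptions for illustration, not Mixer's API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class CallContext:
    """Attributes of an incoming call (hypothetical)."""
    user: Optional[str]  # None if the caller did not authenticate
    source_ip: str
    operation: str       # e.g. "GET /ratings"


@dataclass
class PolicyChecker:
    """Hypothetical precondition chain: authentication, ACL, whitelist."""
    acl: Dict[str, Set[str]] = field(default_factory=dict)  # user -> allowed operations
    whitelist: Set[str] = field(default_factory=set)         # allowed source IPs

    def check(self, ctx: CallContext) -> Optional[str]:
        """Return None if the call may proceed, else the reason it is denied."""
        if ctx.user is None:
            return "unauthenticated"
        if ctx.operation not in self.acl.get(ctx.user, set()):
            return "denied by ACL"
        if ctx.source_ip not in self.whitelist:
            return "source not whitelisted"
        return None
```

Returning a reason instead of a bare boolean keeps the denial visible in telemetry, which ties the policy checks back to the monitoring described earlier.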

Remember the photo app on the first slide, where we started looking at Istio? Everything described above is hidden behind that simple picture. At the top level, everything you need happens automatically: you deploy the application without worrying about how to define security policies or configure routing rules, and it works exactly as expected.

How to start working with Istio

Istio supports earlier versions of Kubernetes, but the initializer feature I mentioned requires Kubernetes 1.7 or later, where it is an alpha feature. I recommend using Google Container Engine alpha clusters: you can turn one on for a limited number of days and use all of Kubernetes' alpha features in it.

First of all, Istio is an open source project on GitHub. We have just released version 0.2. In version 0.1, Istio could only manage objects within a single Kubernetes namespace. Starting with version 0.2, Istio runs in its own namespace and works across the whole Kubernetes cluster. We have also added support for managing services that run on virtual machines: you can deploy Envoy on a virtual machine and secure the services running on it. In the future, Istio will support other platforms, such as Cloud Foundry.

A quick guide to installing Istio is available here. If you have a Google Container Engine cluster running Kubernetes 1.8 with alpha features enabled, installing Istio takes just one command.

If you liked this talk, come to the DevOops 2018 conference in St. Petersburg on October 14: there you can not only listen to the talks, but also chat with any speaker in the discussion area.

Source: https://habr.com/ru/post/423011/
