390K websites with an open .git folder

In July, security researcher Vladimir Smitka decided to scan the whole Internet for exposed .git folders, following a similar audit recently conducted for Czech and Slovak domains.

As the saying goes, "this has never happened before, and here it is again." As a reminder, nine years ago the Russian segment of the Internet went through exactly the same story with the "open .svn" syndrome. Below are the Czech researcher's results, tools and methods.

Causes of vulnerability

From a .git directory, an attacker can learn a lot of information that is critical to a site's security. This is what a typical repository tree looks like:

```
.git/
├── HEAD
├── branches
├── config
├── description
├── hooks
│   ├── pre-commit.sample
│   ├── pre-push.sample
│   └── ...
├── info
│   └── exclude
├── objects
│   ├── info
│   └── pack
└── refs
    ├── heads
    └── tags
```

The repository can contain passwords and access keys for various APIs, databases and cloud services.

Often, opening the /.git/ folder returns an HTTP 403 error, as it should. But the only reason may be that there is no index.html/index.php and directory listing is disabled, while individual files inside the folder remain accessible. To make sure a site is not affected, open /.git/HEAD.

This file contains a reference to the project's current branch.

```
$ cat .git/HEAD
ref: refs/heads/master
```

Even if directory listing is disabled, the entire .git folder can easily be restored by downloading individual files and resolving the references between them with regular expressions, because the structure of .git is well defined. There is also a dedicated tool, GitTools, that performs all the necessary steps automatically.
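The reconstruction idea can be sketched in Python. This is a minimal illustration under stated assumptions, not GitTools' actual code: `fetch` is a hypothetical callable that returns the bytes of a file under `/.git/` (or `None` if it is missing), and only loose objects are followed, so packed objects in `objects/pack/` and refs stored in `packed-refs` are ignored. Commits name their tree and parents in hex, which a regular expression can pick out, while tree objects store binary SHA-1s and have to be parsed.

```python
import re
import zlib
from typing import Callable, Dict, Optional, Set

# Hypothetical fetcher: path relative to /.git/ -> file bytes, or None if unavailable.
Fetch = Callable[[str], Optional[bytes]]

# 'tree <sha>' and 'parent <sha>' header lines of a commit object.
COMMIT_LINKS = re.compile(rb"^(?:tree|parent) ([0-9a-f]{40})$", re.MULTILINE)


def object_path(sha: str) -> str:
    """Loose objects are stored at objects/<first 2 hex digits>/<remaining 38>."""
    return f"objects/{sha[:2]}/{sha[2:]}"


def linked_objects(raw: bytes) -> Set[str]:
    """Decompress a loose object and return the object ids it refers to."""
    data = zlib.decompress(raw)
    header, _, body = data.partition(b"\0")
    kind = header.split(b" ")[0]
    if kind == b"commit":
        headers = body.split(b"\n\n", 1)[0]  # skip the commit message
        return {m.decode() for m in COMMIT_LINKS.findall(headers)}
    if kind == b"tree":
        # Each entry is b"<mode> <name>\0" followed by a 20-byte binary SHA-1.
        links, i = set(), 0
        while i < len(body):
            nul = body.index(b"\0", i)
            links.add(body[nul + 1:nul + 21].hex())
            i = nul + 21
        return links
    return set()  # blobs are leaves; tag objects are not followed in this sketch


def recover_objects(fetch: Fetch) -> Dict[str, bytes]:
    """Walk from HEAD through commits and trees, collecting loose objects."""
    head = fetch("HEAD")
    if head is None:
        return {}
    text = head.decode("ascii", "replace").strip()
    if text.startswith("ref: "):  # symbolic ref, e.g. 'ref: refs/heads/master'
        tip = fetch(text[5:].strip())
        text = tip.decode("ascii", "replace").strip() if tip else ""
    found: Dict[str, bytes] = {}
    queue = [text] if re.fullmatch(r"[0-9a-f]{40}", text) else []
    while queue:
        sha = queue.pop()
        if sha in found:
            continue
        raw = fetch(object_path(sha))
        if raw is None:
            continue  # probably packed; not handled here
        found[sha] = raw
        queue.extend(s for s in linked_objects(raw) if s not in found)
    return found
```

Because `fetch` is just a callable, passing `files.get` for a dict of path-to-bytes lets the walk be tried offline before pointing it at an HTTP client.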

Means of production

Despite the complexity and ambition of the task, the monetary cost was modest: the whole project came to $250.

Servers

Smitka rented 18 VPSs and 4 dedicated servers for the project. According to him, he decided against AWS because its total cost was hard to estimate given the expected huge traffic volumes, large disk space requirements and heavy CPU load. The price of the rented VPSs, by contrast, was fixed and known in advance.

Domain List

The list was built from the JSON dumps of Rapid7's OpenData project (the Sonar forward DNS dataset), whose records follow this schema:

```json
{
  "$id": "https://opendata.rapid7.com/sonar.fdns_v2/",
  "type": "object",
  "definitions": {},
  "$schema": "http://json-schema.org/draft-07/schema#",
  "additionalProperties": false,
  "properties": {
    "timestamp": {
      "$id": "/properties/timestamp",
      "type": "string",
      "description": "The time when this response was received in seconds since the epoch"
    },
    "name": {
      "$id": "/properties/name",
      "type": "string",
      "description": "The record name"
    },
    "type": {
      "$id": "/properties/type",
      "type": "string",
      "description": "The record type"
    },
    "value": {
      "$id": "/properties/value",
      "type": "string",
      "description": "The response received for a record of the given name and type"
    }
  }
}
```

After some filtering by TLD and second-level domain, the list still contained over 230 million entries.
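A filtering pass over such a dump might look like the following Python sketch. It assumes one JSON record per line with the `name` and `type` fields from the schema above; the accepted record types and excluded suffixes are illustrative parameters, not the researcher's actual filter.

```python
import json
from typing import Iterable, Iterator, Set


def unique_hostnames(lines: Iterable[str], record_types: Set[str],
                     excluded_suffixes: Set[str]) -> Iterator[str]:
    """Yield each record name once, keeping only the given record types and
    skipping names that end with one of the excluded suffixes."""
    suffixes = tuple(excluded_suffixes)
    seen: Set[str] = set()  # fine for a sample; far too large for the full dataset
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)  # one JSON object per line (assumed)
        if record.get("type") not in record_types:
            continue
        name = record.get("name", "").rstrip(".").lower()
        if not name or name in seen or name.endswith(suffixes):
            continue
        seen.add(name)
        yield name
```

Since the function consumes any iterable of lines, a file opened with `gzip.open(path, "rt")` can be streamed through it without unpacking the dump to disk. The spelling of record types (for example `"a"` or `"cname"`) should be checked against the actual data.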

The database was then split into blocks of 2 million records, and a PHP application distributed these blocks across the servers.
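The splitting step can be illustrated with a short Python sketch. The original distributor was a PHP application whose code is not shown in the article; the static round-robin assignment and the server names used here are hypothetical.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def blocks(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Split an iterable into consecutive blocks of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    it = iter(items)
    while True:
        block = list(islice(it, size))
        if not block:
            return
        yield block


def assign_round_robin(n_blocks: int, servers: List[str]) -> Dict[str, List[int]]:
    """Map each server to the indices of the blocks it should scan."""
    plan: Dict[str, List[int]] = {s: [] for s in servers}
    for i in range(n_blocks):
        plan[servers[i % len(servers)]].append(i)
    return plan
```

With a little over 230 million names and `size=2_000_000`, this yields just over 115 blocks. A pull-based queue, where each server asks for the next block when it finishes, would balance uneven servers better than a fixed round-robin plan.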

Software

The workhorse was Python with the asyncio library and aiohttp. Attempts to use Requests and urllib3 for this purpose were unsuccessful. The former might well have worked, but the researcher could not figure out from the documentation how to configure timeouts. The latter could not cope with domain redirects and, as a result, quickly exhausted the servers' memory.
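A minimal sketch of this kind of scanner, assuming aiohttp 3.x, is shown below. It requests `/.git/HEAD` on each domain over plain HTTP, sets an explicit whole-request timeout with `aiohttp.ClientTimeout`, caps redirects with `max_redirects`, reads at most the first kilobyte of each response and bounds concurrency with a semaphore. The names `scan` and `looks_like_git_head` and all the limits are hypothetical; this is not the researcher's code.

```python
import asyncio
import re
from typing import Dict, Iterable

# .git/HEAD holds either a symbolic ref or a detached 40-hex commit id.
HEAD_RE = re.compile(r"ref: refs/\S+|[0-9a-f]{40}")


def looks_like_git_head(body: str) -> bool:
    """True if the text looks like a .git/HEAD file rather than an error page."""
    return HEAD_RE.fullmatch(body.strip()) is not None


async def scan(domains: Iterable[str], concurrency: int = 500,
               timeout_s: float = 10.0) -> Dict[str, bool]:
    """Check http://<domain>/.git/HEAD for every domain (requires aiohttp 3.x)."""
    import aiohttp  # imported here so looks_like_git_head() works without aiohttp

    domains = list(domains)
    sem = asyncio.Semaphore(concurrency)
    timeout = aiohttp.ClientTimeout(total=timeout_s)  # deadline for the whole request

    async def check(session, domain: str) -> bool:
        async with sem:
            try:
                url = f"http://{domain}/.git/HEAD"
                async with session.get(url, max_redirects=3) as resp:
                    if resp.status != 200:
                        return False
                    chunk = await resp.content.read(1024)  # never buffer whole pages
            except (aiohttp.ClientError, asyncio.TimeoutError):
                return False
        return looks_like_git_head(chunk.decode("utf-8", "replace"))

    async with aiohttp.ClientSession(timeout=timeout) as session:
        results = await asyncio.gather(*(check(session, d) for d in domains))
    return dict(zip(domains, results))
```

It could be run as `asyncio.run(scan(domains))`. For blocks of millions of domains, a fixed pool of worker tasks reading from an `asyncio.Queue` would avoid creating one coroutine per domain up front.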

To identify the platforms and build a profile of the vulnerable sites, Smitka used WAD, a utility built on the database of Wappalyzer, a web browser extension that detects the technologies used on a page.
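Roughly speaking, WAD matches a site's response headers and content against Wappalyzer's per-technology patterns. A toy Python version limited to headers is sketched below; the rules are simplified examples made up for this sketch, not entries from the Wappalyzer database.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical, heavily simplified rules: (technology, header name, pattern).
RULES: List[Tuple[str, str, re.Pattern]] = [
    ("Tengine", "server", re.compile(r"tengine", re.I)),
    ("Nginx", "server", re.compile(r"nginx", re.I)),
    ("Apache", "server", re.compile(r"apache", re.I)),
    ("Ubuntu", "server", re.compile(r"ubuntu", re.I)),
    ("PHP", "x-powered-by", re.compile(r"php", re.I)),
]


def detect(headers: Dict[str, str]) -> List[str]:
    """Return technologies whose pattern matches the named response header."""
    lowered = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    return [tech for tech, name, pattern in RULES
            if pattern.search(lowered.get(name, ""))]
```

Real fingerprint databases also inspect HTML, script URLs, cookies and meta tags, and extract versions, which is why a maintained tool was used rather than hand-written rules.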

Simple command-line utilities such as GNU Parallel were also used to speed up processing and to keep a single hung process from stopping the whole script.

```shell
cat sites.txt | parallel --bar --tmpdir ./wad --files wad -u {} -f csv
```

Results

Scanning took two weeks. As a result, the researcher:

- found 390 thousand vulnerable web sites;

- collected 290 thousand email addresses;

- notified 90 thousand recipients of the found vulnerability.

In response to his efforts, Smitka received:

- 18 thousand message delivery errors;

- about 2,000 thank-you letters;

- 30 false positives caused by honeypot systems;

- 1 threat to call the Canadian police.

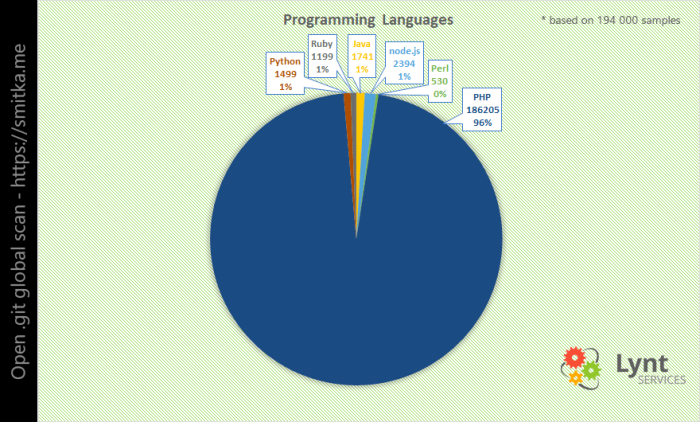

PHP was the most popular programming language. However, if the results are normalized by each language's overall share of the market, PHP falls behind Python and Node.js. It is unclear, though, how reliable such market-share statistics for programming languages are.
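The normalization amounts to dividing each language's share among vulnerable sites by that language's overall share of websites. A minimal Python sketch follows; both input dictionaries are placeholders to be filled from real data, and the article does not say which market-share source was used.

```python
from typing import Dict, List, Tuple


def normalized_ranking(vulnerable_counts: Dict[str, int],
                       market_share: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank languages by (share among vulnerable sites) / (overall market share).

    A ratio above 1 means the language is over-represented among exposed sites.
    """
    total = sum(vulnerable_counts.values())
    if total == 0:
        return []
    ratios = {
        lang: (count / total) / market_share[lang]
        for lang, count in vulnerable_counts.items()
        if market_share.get(lang, 0) > 0  # skip languages with no share data
    }
    return sorted(ratios.items(), key=lambda kv: kv[1], reverse=True)
```

The ranking is only as good as the market-share figures fed into it, which is exactly the reliability concern raised above.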

Apache tops the list of web servers, Nginx is second, and, surprisingly, Tengine, a Chinese fork of Nginx, is third.

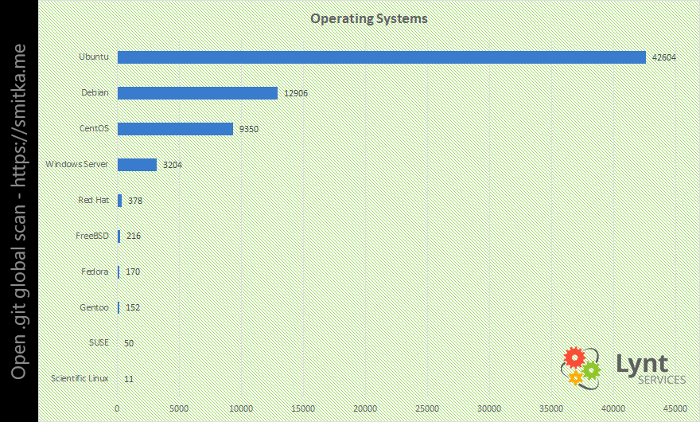

The most popular OS was Ubuntu, followed by Debian, with CentOS in third place.

The CMS category was almost a one-man show: WordPress accounted for 85% of all the platforms found.

What's next

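Fixing the vulnerability is easy: configure the web server to deny requests for any path component that starts with a dot (such as /.git/), except /.well-known/.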

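.htaccess

```apache
RewriteEngine On
RewriteRule "(^|/)\.(?!well-known\/)" - [F]
```

nginx

```nginx
location ~ /\.(?!well-known\/) {
    deny all;
}
```

apache22.conf

```apache
<Directory ~ "/\.(?!well-known\/)">
    Order deny,allow
    Deny from all
</Directory>
```

apache24.conf

```apache
<Directory ~ "/\.(?!well-known\/)">
    Require all denied
</Directory>
```

Caddyfile

```
status 403 /blockdot
rewrite {
    r /\.(?!well-known\/)
    to /blockdot
}
```

Note that Caddy is written in Go, whose regular expression engine does not support lookaheads such as (?!...), so this rule may need to be adapted.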
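All of these rules rely on the same idea: block any path component that starts with a dot, unless it is `.well-known/`, which is needed, for example, for Let's Encrypt ACME challenges under `/.well-known/acme-challenge/`. The `.htaccess` pattern can be tried out with Python's `re` module, whose syntax for this negative lookahead matches PCRE's; the sample paths are made up.

```python
import re

# Same pattern as the .htaccess RewriteRule: a dot at the start of any path component,
# unless it is followed by "well-known/".
DOTFILE = re.compile(r"(^|/)\.(?!well-known\/)")


def is_blocked(path: str) -> bool:
    """True if the rule would deny this URL path."""
    return DOTFILE.search(path) is not None


for p in ["/.git/HEAD", "/app/.env", "/.well-known/acme-challenge/token", "/index.php"]:
    print(p, "blocked" if is_blocked(p) else "allowed")
# /.git/HEAD blocked
# /app/.env blocked
# /.well-known/acme-challenge/token allowed
# /index.php allowed
```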

Source: https://habr.com/ru/post/422725/
