Hybrid camera-lidar extends the capabilities of self-driving cars by supplementing their information about the outside world

Lidars and cameras are standard equipment on almost any self-driving car. Both work with reflected light. Cameras are passive: they capture light from external sources after it reflects off the scene. Lidars are active: they emit laser pulses and then measure the “response” reflected from nearby objects. Cameras produce a two-dimensional image, while lidars produce a three-dimensional “point cloud”.

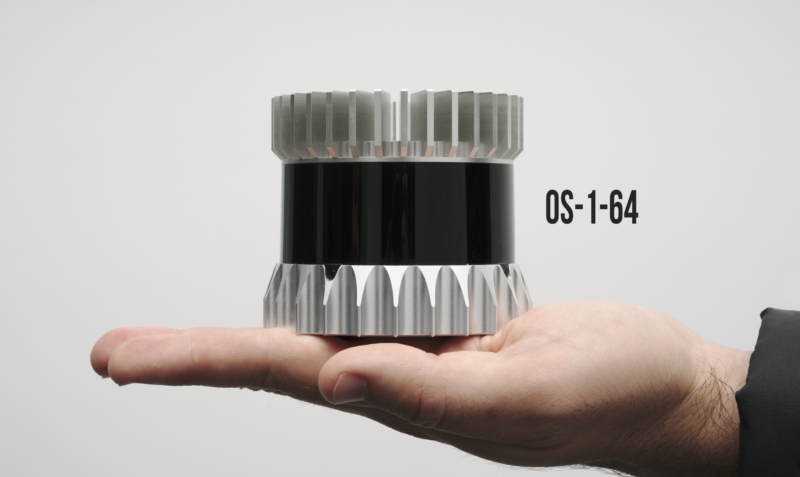

Ouster has developed a hybrid device, the OS-1, that works as both a camera and a lidar. Its aperture is larger than that of most DSLRs, and the sensor the company created is very sensitive.

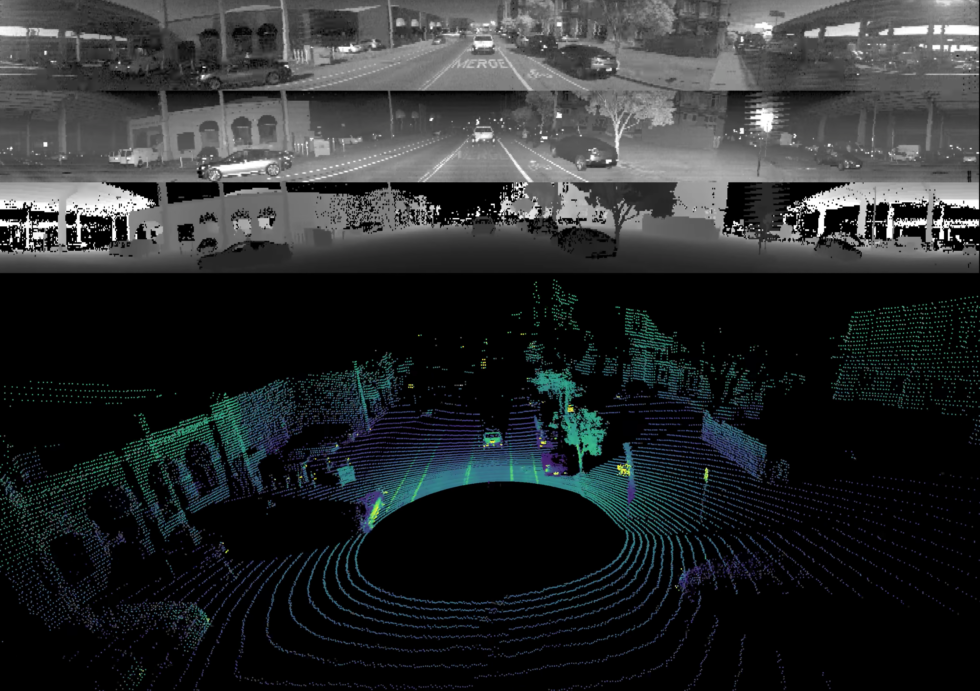

The images produced by the system consist of three layers. The first is an image captured the way a normal camera would capture it. The second is a “laser” layer, built from the reflection of the laser beam. The third is a “depth” layer, which gives a distance estimate for each individual pixel of the first two layers.
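To make the layered image concrete, here is a minimal sketch of how such a frame might be held in memory. The `LayeredFrame` class and its field names are hypothetical and are not Ouster's data format; the only idea taken from the text is that the three layers share one pixel grid, so a single (row, column) index addresses the same spot in all of them.

```python
from dataclasses import dataclass
from typing import List, Tuple

Layer = List[List[float]]  # rows x columns of per-pixel values


@dataclass
class LayeredFrame:
    """Three layers on one shared pixel grid (hypothetical container)."""
    camera: Layer  # passive, camera-like brightness
    laser: Layer   # strength of the reflected laser pulse
    depth: Layer   # distance in metres measured for that pixel

    def __post_init__(self) -> None:
        # Compare each layer's height and first-row width
        shapes = {
            (len(layer), len(layer[0]))
            for layer in (self.camera, self.laser, self.depth)
        }
        if len(shapes) != 1:
            raise ValueError("all layers must have the same height and width")

    def pixel(self, row: int, col: int) -> Tuple[float, float, float]:
        """Return (camera, laser, depth) for one pixel."""
        return (self.camera[row][col], self.laser[row][col], self.depth[row][col])


frame = LayeredFrame(
    camera=[[0.2, 0.4], [0.1, 0.9]],
    laser=[[0.7, 0.3], [0.5, 0.6]],
    depth=[[12.5, 12.7], [3.1, 3.0]],
)
print(frame.pixel(1, 0))  # (0.1, 0.5, 3.1)
```

Because no alignment step sits between the layers, reading all three values for a pixel is a plain lookup.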


It is worth noting that the images still have significant limitations. First, they are low resolution. Second, they are black and white rather than color. Third, the lidar does not work with visible light; it operates in the near-infrared part of the spectrum.

At the moment the lidar is quite expensive, at about $12,000. At first glance there is no point in a system that produces lower-resolution images than standard cameras yet costs a fortune. But the developers say it works on a different principle than the usual setup.

Graphics provided by Ouster: the three image layers and the combined “picture” that results from them.

In a typical setup, self-driving cars combine data from several different sources, which takes time. Cameras and lidars work in different modes and produce different kinds of output. They are also usually mounted at different points on the car body, so the computer has to align their images with one another. On top of that, the sensors need regular recalibration, which is not easy to do.
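As an illustration of the alignment work a conventional setup requires, the sketch below projects one lidar point into the image of a separately mounted camera using a rigid transform followed by a pinhole projection. This is a textbook model and not any specific vendor's pipeline; the function name, the parameters and the numbers in the usage lines are assumptions. The `rotation` and `translation` values are exactly what a calibration procedure has to estimate and keep up to date.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def lidar_point_to_pixel(
    point_lidar: Vec3,
    rotation: Sequence[Sequence[float]],  # 3x3, lidar frame -> camera frame
    translation: Vec3,                    # metres, lidar origin in camera frame
    fx: float, fy: float, cx: float, cy: float,  # pinhole intrinsics, in pixels
) -> Optional[Tuple[float, float]]:
    """Project one lidar point into a separately mounted camera's image."""
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None  # at or behind the camera plane, so it has no pixel
    # Pinhole projection onto the image plane (camera z axis points forward)
    return (fx * x / z + cx, fy * y / z + cy)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Made-up example: the lidar sits 0.5 m to the side of the camera
print(lidar_point_to_pixel((0.0, 0.0, 10.0), identity, (0.5, 0.0, 0.0),
                           fx=400.0, fy=400.0, cx=320.0, cy=240.0))  # (340.0, 240.0)
```

Any error in the estimated offset shifts every projected point, which is why sensors mounted apart need recalibration whenever they move relative to each other.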

Some lidar developers have already tried to combine a camera with a lidar, but the results were not very good. What they built was a “standard camera + lidar” system that differed little from existing setups.

Ouster instead uses a design that lets the OS-1 collect all the data in one format and from one location. All three image layers line up perfectly in both time and space, so the computer knows the distance for each individual pixel of the final image.
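Once every pixel carries its own distance, turning a pixel into a 3D point is simple trigonometry. The sketch below assumes, purely for illustration, that each image row corresponds to a fixed beam elevation angle and that the columns are spread evenly over a full horizontal rotation. Neither assumption comes from the article, and `pixel_to_xyz` is a hypothetical helper, not part of any Ouster API.

```python
import math
from typing import Sequence, Tuple


def pixel_to_xyz(
    row: int,
    col: int,
    depth_m: float,
    elevations_deg: Sequence[float],  # assumed: one beam elevation per image row
    columns: int,                     # assumed: columns evenly cover 360 degrees
) -> Tuple[float, float, float]:
    """Convert one pixel plus its measured distance into x, y, z in metres."""
    elevation = math.radians(elevations_deg[row])
    azimuth = 2.0 * math.pi * col / columns
    # Split the measured range into a horizontal part and a vertical part
    horizontal = depth_m * math.cos(elevation)
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        depth_m * math.sin(elevation),
    )


# A pixel in a level row, a quarter turn around, 10 m away
x, y, z = pixel_to_xyz(row=0, col=1, depth_m=10.0, elevations_deg=[0.0], columns=4)
```

With these made-up inputs the point lands about 10 m along the y axis, up to floating-point rounding.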

According to the project's authors, this scheme is almost ideal for machine learning. Such images are not difficult for computer systems to process. After being “fed” a few hundred frames, a system can be trained to understand exactly what is depicted in the final “picture”.

Some types of neural networks are designed to work seamlessly with multi-layer pixel maps; ordinary color images, for example, consist of red, green and blue layers. Teaching such networks to work with OS-1 output is not particularly difficult, and Ouster has already done it.

They took several neural networks designed to recognize RGB images as a starting point and modified them to fit their needs, teaching them to work with the different layers of their images. The data is processed on hardware with an Nvidia GTX 1060. With the help of these networks, the car's computer was taught to “paint” the road yellow and potential obstacles (other cars) red.
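The “painting” step can be pictured as mapping per-pixel class labels to colors. The sketch below assumes a network has already produced one label per pixel; the class ids, the palette and the `paint` function are hypothetical and only mirror the yellow and red coloring described above, not Ouster's actual code.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical class ids that a segmentation network might output per pixel
BACKGROUND, ROAD, VEHICLE = 0, 1, 2

PALETTE: Dict[int, RGB] = {
    ROAD: (255, 255, 0),   # yellow
    VEHICLE: (255, 0, 0),  # red
}


def paint(gray: List[List[int]], labels: List[List[int]]) -> List[List[RGB]]:
    """Color labeled pixels and leave every other pixel as its gray value."""
    return [
        [
            PALETTE.get(label, (value, value, value))
            for value, label in zip(gray_row, label_row)
        ]
        for gray_row, label_row in zip(gray, labels)
    ]


overlay = paint(gray=[[10, 20, 30]], labels=[[BACKGROUND, ROAD, VEHICLE]])
print(overlay)  # [[(10, 10, 10), (255, 255, 0), (255, 0, 0)]]
```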

According to the developers, their system complements existing ones rather than replacing them. It is best to combine all kinds of sensors, cameras, lidars and hybrid systems to form a clear picture of the environment that helps the car navigate.

Source: https://habr.com/ru/post/422691/
