Organizing efficient interaction between microservices

Microservice architectures have become quite popular recently. The way microservices interact can affect the performance and scalability of the solutions built on them. This interaction can be synchronous or asynchronous. The article we are publishing in translation today discusses synchronous ways for microservices to interact. Specifically, it compares two technologies: HTTP/1.1 and gRPC. The first is represented by standard HTTP calls. The second is based on Google's high-performance RPC framework. The author walks through the code needed to implement communication between microservices with HTTP/1.1 and with gRPC, measures performance, and picks the technology that best organizes data exchange between microservices.

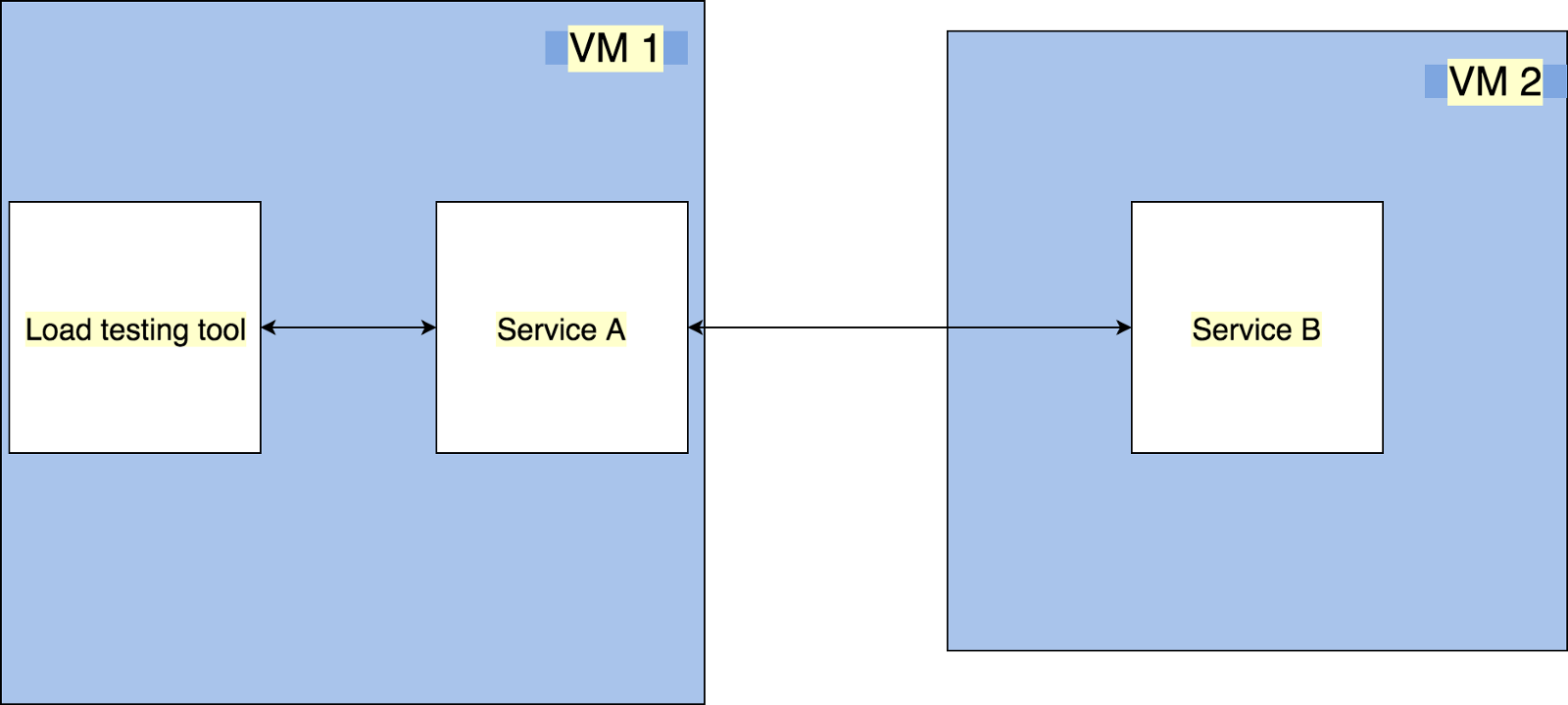

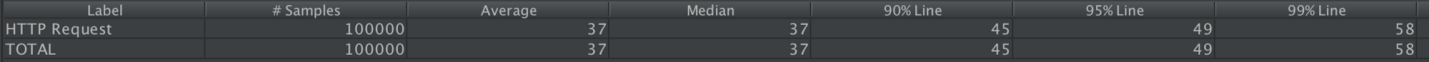

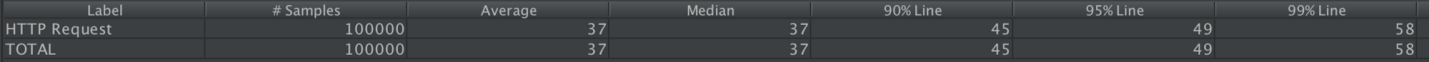

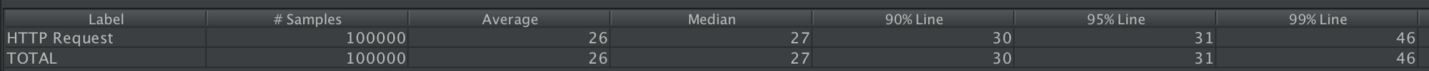

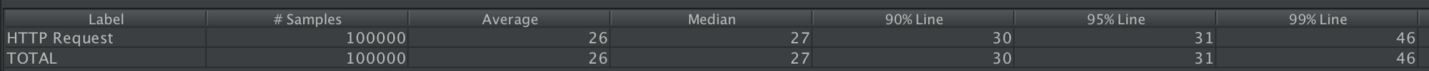

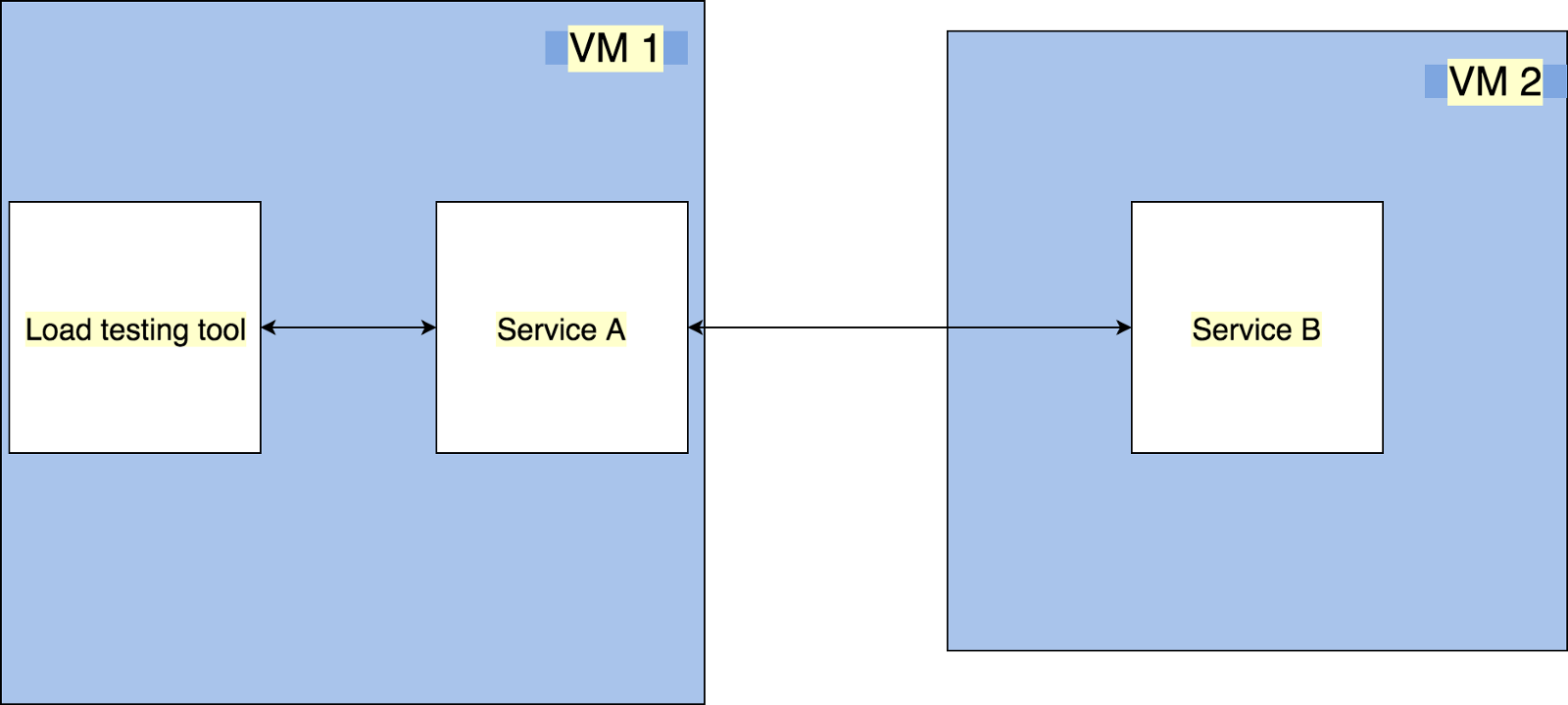

Normal application

Let's start small and create a system of two microservices that can interact with each other. Please note that cluster mode is not used here. Here is the diagram of our application.

The architecture of the application running in normal mode


The application consists of the following components:

- Load testing tool: jMeter.

- Service A: a microservice that sends requests to service B and returns the responses it receives.

- Service B: a microservice that responds to requests with static JSON data after a 10 ms delay, which applies to all of its APIs.

- Virtual machines (VM 1 and VM 2): Amazon EC2 t2.xlarge instances.

▍HTTP/1.1

HTTP/1.1 is the standard technology for microservice communication; it is what you use with any HTTP library, such as axios or superagent.

Here is the code of service B, which implements our system's API:

```javascript
// Service B: responds with static JSON after a 10 ms delay.
// `server` is a hapi server instance (setup not shown).
server.route({
  method: 'GET',
  path: '/',
  handler: async (request, h) => {
    const response = await new Promise((resolve) => {
      setTimeout(() => {
        resolve({
          id: 1,
          name: 'Abhinav Dhasmana',
          enjoys_coding: true,
        });
      }, 10);
    });
    return h.response(response);
  },
});
```

Here is the code of service A, which calls service B over HTTP/1.1:

```javascript
const Axios = require('axios');
const Boom = require('boom');

// Service A: forwards each request to service B over HTTP/1.1.
// `server` is a hapi server instance (setup not shown).
server.route({
  method: 'GET',
  path: '/',
  handler: async (request, h) => {
    try {
      const response = await Axios({
        url: 'http://localhost:8001/',
        method: 'GET',
      });
      return h.response(response.data);
    } catch (err) {
      throw Boom.clientTimeout(err);
    }
  },
});
```

With these microservices running, we can use jMeter to run performance tests. Let's see how the system behaves with 50 users, each sending 2000 requests. As the following figure shows, the median response time is 37 ms.

jMeter results for the system running in normal mode with HTTP/1.1
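The comparisons in this article use the median response time. As a minimal sketch of that statistic (not jMeter's own implementation), the function below computes the median of a list of latencies. The sample values are invented purely for illustration; they show how a single slow request pulls the mean up while the median stays put.

```javascript
// Median of a list of latency samples (in ms).
// For an even number of samples, the two middle values are averaged.
function median(samples) {
  if (samples.length === 0) {
    throw new RangeError('median() needs at least one sample');
  }
  // Copy before sorting so the caller's array is not mutated,
  // and sort numerically (the default sort compares strings).
  const sorted = [...samples].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Illustrative samples only, not measurements from the article.
const latenciesMs = [35, 36, 37, 38, 120];
const mean = latenciesMs.reduce((sum, x) => sum + x, 0) / latenciesMs.length;
console.log(median(latenciesMs)); // 37
console.log(mean); // 53.2
```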

▍gRPC

gRPC uses Protocol Buffers by default. Therefore, with gRPC, in addition to the code of the two services, we need to write a proto file that describes the interface between the system's modules.
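To get a feel for what Protocol Buffers puts on the wire, the following sketch hand-encodes the record that service B returns (`id` = field 1, `name` = field 2, `enjoys_coding` = field 3) in the proto3 wire format and compares its size with the JSON body of the HTTP/1.1 version. It is an illustration, not the protobuf library: it handles only the field types used here, and negative `int32` values (which protobuf encodes in ten bytes) are out of scope. It compares message bodies only, ignoring HTTP headers and gRPC's 5-byte message framing.

```javascript
// Base-128 varint; supports non-negative integers below 2 ** 32 only.
function encodeVarint(value) {
  if (!Number.isInteger(value) || value < 0 || value >= 2 ** 32) {
    throw new RangeError('encodeVarint() expects an integer in [0, 2^32)');
  }
  const bytes = [];
  while (value > 0x7f) {
    bytes.push((value & 0x7f) | 0x80); // low 7 bits, continuation bit set
    value >>>= 7;
  }
  bytes.push(value);
  return bytes;
}

const VARINT = 0;
const LENGTH_DELIMITED = 2;
// Each field starts with a key: (field_number << 3) | wire_type.
const fieldKey = (fieldNumber, wireType) => encodeVarint((fieldNumber << 3) | wireType);

function encodeSampleData({ id, name, enjoys_coding }) {
  const out = [];
  // proto3 omits fields that hold their default value (0, '', false).
  if (id !== 0) out.push(...fieldKey(1, VARINT), ...encodeVarint(id));
  if (name !== '') {
    const nameBytes = [...Buffer.from(name, 'utf8')];
    out.push(...fieldKey(2, LENGTH_DELIMITED), ...encodeVarint(nameBytes.length), ...nameBytes);
  }
  if (enjoys_coding) out.push(...fieldKey(3, VARINT), 1);
  return Buffer.from(out);
}

const record = { id: 1, name: 'Abhinav Dhasmana', enjoys_coding: true };
const protoBytes = encodeSampleData(record).length;
const jsonBytes = Buffer.byteLength(JSON.stringify(record), 'utf8');
console.log(`protobuf: ${protoBytes} bytes, JSON: ${jsonBytes} bytes`); // protobuf: 22 bytes, JSON: 55 bytes
```

Field names never appear in the binary form; only field numbers do, which is why the proto file must be shared by both services.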

```proto
syntax = "proto3";

service SampleDataService {
  rpc GetSampleData (Empty) returns (SampleData) {}
}

message SampleData {
  int32 id = 1;
  string name = 2;
  bool enjoys_coding = 3;
}

message Empty {}
```

Since we are now going to use gRPC, we need to rewrite the code of service B:

```javascript
const grpc = require('grpc');

// Load the service definition from the proto file.
const proto = grpc.load('serviceB.proto');
const server = new grpc.Server();

// Implementation of the GetSampleData RPC: responds after a 10 ms delay.
const GetSampleData = (call, callback) => {
  setTimeout(() => {
    callback(null, {
      id: 1,
      name: 'Abhinav Dhasmana',
      enjoys_coding: true,
    });
  }, 10);
};

server.addService(proto.SampleDataService.service, {
  GetSampleData,
});

const port = process.env.PORT;
console.log('port', port);
server.bind(`0.0.0.0:${port}`, grpc.ServerCredentials.createInsecure());
server.start();
console.log('grpc server is running');
```

Note the following features of this code:

- With `const server = new grpc.Server();` we create a gRPC server.

- With the `server.addService(proto...` command we add the service to the server.

- The ``server.bind(`0.0.0.0:${port}...`` command binds the port and credentials.

Now let's rewrite service A to use gRPC:

```javascript
const grpc = require('grpc');

const protoPath = `${__dirname}/../serviceB/serviceB.proto`;
const proto = grpc.load(protoPath);

// gRPC client for service B (plaintext connection).
const client = new proto.SampleDataService('localhost:8001', grpc.credentials.createInsecure());

// Wrap the callback-style unary call in a Promise.
const getDataViagRPC = () => new Promise((resolve, reject) => {
  client.GetSampleData({}, (err, response) => {
    // Check `err`, not `response.err`: on failure `response` may be undefined.
    if (err) {
      reject(err);
    } else {
      resolve(response);
    }
  });
});

// `server` is a hapi server instance (setup not shown).
server.route({
  method: 'GET',
  path: '/',
  handler: async (request, h) => {
    const allResults = await getDataViagRPC();
    return h.response(allResults);
  },
});
```

This code has the following notable features:

- With `const client = new proto.SampleDataService...` we create a gRPC client.

- The remote call is made with the `client.GetSampleData({}...` command.
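The `getDataViagRPC` wrapper in service A converts gRPC's error-first callback into a Promise. Node's built-in `util.promisify` performs the same conversion. The sketch below uses hypothetical in-memory stand-ins (`fakeClient`, `failingClient`) instead of the generated client, because loading the real one requires the `grpc` package and a running service B. The same pattern should apply to a generated client whose unary method takes a Node-style callback as its last argument; bind the method so `this` still refers to the client.

```javascript
const { promisify } = require('util');

// Hypothetical stand-ins for the generated client, not part of the grpc API.
// Like a unary gRPC method, each takes a request and an error-first callback.
const fakeClient = {
  record: { id: 1, name: 'Abhinav Dhasmana', enjoys_coding: true },
  GetSampleData(request, callback) {
    setTimeout(() => callback(null, this.record), 10);
  },
};
const failingClient = {
  GetSampleData(request, callback) {
    setImmediate(() => callback(new Error('service B unavailable')));
  },
};

// bind() keeps `this` pointing at the client after the method is detached.
const getSampleData = promisify(fakeClient.GetSampleData.bind(fakeClient));
const getFailing = promisify(failingClient.GetSampleData.bind(failingClient));

async function main() {
  const data = await getSampleData({});
  console.log(data.name); // Abhinav Dhasmana
  try {
    await getFailing({});
  } catch (err) {
    console.log(`rejected: ${err.message}`); // rejected: service B unavailable
  }
}

main().catch((err) => {
  console.error(err);
  process.exitCode = 1;
});
```

An error passed to the callback becomes a rejected Promise, so a hapi handler that awaits the call can let the error propagate or map it to an HTTP error.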

Now let's test this version with jMeter.

jMeter results for the system running in normal mode with gRPC

A simple calculation shows that the gRPC solution is 27% faster than the HTTP/1.1 solution.

Clustered mode application

Here is a diagram of an application similar to the one we just studied, but operating in cluster mode.

Cluster Mode Application Architecture

Compared with the previous architecture, this one differs as follows:

- A load balancer has been added; NGINX plays this role.

- Service B now runs as three instances, each listening on its own port.

This architecture is typical of real-world projects.

Let's examine HTTP/1.1 and gRPC in this new environment.
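By default, nginx distributes requests among the servers of an `upstream` group using weighted round-robin. As an illustration of the idea only (not nginx's implementation, which also accounts for weights and servers marked as failed), here is a round-robin picker over the three service B instances. `ip_address` is the same placeholder used in this article's nginx upstream block.

```javascript
// Round-robin selection over a fixed list of backends.
function roundRobin(backends) {
  if (backends.length === 0) {
    throw new RangeError('roundRobin() needs at least one backend');
  }
  let next = 0;
  // Each call returns the next backend, wrapping around at the end.
  return () => {
    const backend = backends[next];
    next = (next + 1) % backends.length;
    return backend;
  };
}

const pick = roundRobin(['ip_address:8001', 'ip_address:8002', 'ip_address:8003']);
for (let i = 0; i < 4; i += 1) {
  console.log(pick()); // ip_address:8001, then :8002, :8003, and :8001 again
}
```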

▍HTTP/1.1

When microservices that communicate over HTTP/1.1 run in a clustered environment, their code does not need to change. We only need to configure nginx to balance traffic across the instances of service B. In our case, this means bringing the file /etc/nginx/sites-available/default into the following form:

```nginx
upstream httpservers {
    server ip_address:8001;
    server ip_address:8002;
    server ip_address:8003;
}

server {
    listen 80;

    location / {
        proxy_pass http://httpservers;
    }
}
```

Now let's run the system and look at the jMeter test results.

jMeter results for the system running in cluster mode with HTTP/1.1

The median in this case is 41 ms.

▍gRPC

gRPC support appeared in nginx 1.13.10. We therefore need a recent version of nginx, and the usual `sudo apt-get install nginx` command is not suitable for installing it, since it may pull in an older release.

Also, we do not use Node.js in cluster mode here, because gRPC is not supported in that mode.
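Because the requirement is a minimum version, it can help to check what is installed before editing the configuration. `nginx -v` prints a line such as `nginx version: nginx/1.14.0` to stderr (`nginx -v 2>&1` captures it in a shell). The helper below is a hypothetical sketch that parses that line and compares it numerically with 1.13.10; comparing version strings as plain text gets this wrong, because "1.9.15" sorts after "1.13.10".

```javascript
// Minimum nginx version with gRPC proxy support, per the text above.
const MIN_GRPC_NGINX = [1, 13, 10];

// Parse `nginx -v` output, e.g. "nginx version: nginx/1.14.0".
// Returns [major, minor, patch], or null if no version is found.
function parseNginxVersion(line) {
  const match = /nginx\/(\d+)\.(\d+)\.(\d+)/.exec(line);
  return match ? match.slice(1, 4).map(Number) : null;
}

// Compare version parts numerically, most significant part first.
function isAtLeast(version, minimum) {
  for (let i = 0; i < minimum.length; i += 1) {
    if (version[i] !== minimum[i]) {
      return version[i] > minimum[i];
    }
  }
  return true; // all parts equal
}

function supportsGrpcProxy(line) {
  const version = parseNginxVersion(line);
  return version !== null && isAtLeast(version, MIN_GRPC_NGINX);
}

console.log(supportsGrpcProxy('nginx version: nginx/1.14.0')); // true
console.log(supportsGrpcProxy('nginx version: nginx/1.9.15')); // false
```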

To install the latest version of nginx, use the following sequence of commands:

```shell
sudo apt-get install -y software-properties-common
sudo add-apt-repository ppa:nginx/stable
sudo apt-get update
sudo apt-get install nginx
```

In addition, we need SSL certificates. You can create a self-signed certificate with OpenSSL:

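```shell
openssl req -x509 -newkey rsa:2048 -nodes -sha256 -subj '/CN=localhost' \
  -keyout localhost-privatekey.pem -out localhost-certificate.pem
```

To use gRPC, edit the /etc/nginx/sites-available/default file. gRPC traffic needs an HTTP/2 listener and the `grpc_pass` directive rather than `proxy_pass`; the listen port and certificate paths below are placeholders:

```nginx
# Assumed listen port and certificate paths; adjust them to your setup.
upstream grpcservers {
    server ip_address:8001;
    server ip_address:8002;
    server ip_address:8003;
}

server {
    listen 443 ssl http2;
    ssl_certificate     /path/to/localhost-certificate.pem;
    ssl_certificate_key /path/to/localhost-privatekey.pem;

    location / {
        # The service B instances themselves listen without TLS.
        grpc_pass grpc://grpcservers;
    }
}
```

Now everything is ready to test the clustered gRPC solution with jMeter.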

jMeter results for the system running in cluster mode with gRPC

In this case the median is 28 ms, which is 31% faster than the same metric for the clustered HTTP/1.1 solution.
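The percentages in this article appear to be the relative reduction in median latency, (HTTP - gRPC) / HTTP. With the cluster medians of 41 ms and 28 ms this gives about 31.7%, which the article reports as 31%. A small helper makes the arithmetic explicit:

```javascript
// Relative reduction in median latency, as a percentage of the baseline:
// (baselineMs - candidateMs) / baselineMs * 100.
function percentFaster(baselineMs, candidateMs) {
  if (baselineMs <= 0) {
    throw new RangeError('baseline must be positive');
  }
  return ((baselineMs - candidateMs) / baselineMs) * 100;
}

// Cluster mode: HTTP/1.1 median 41 ms, gRPC median 28 ms.
console.log(percentFaster(41, 28).toFixed(1)); // 31.7
```

Measured the other way, as the ratio 41 / 28, gRPC comes out about 1.46 times as fast, so it is worth stating which definition a comparison uses.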

Results

The results show that a microservice-based application using gRPC is about 30% faster than a similar application that uses HTTP/1.1 for data exchange between microservices. The source code of the projects discussed in this article can be found here.

Dear readers! If you develop microservices, please tell us how you organize data exchange between them.

Source: https://habr.com/ru/post/421579/
