Learn OpenGL. Lesson 5.10 - Screen Space Ambient Occlusion

SSAO

We touched on ambient lighting in the lesson on the basics of lighting, but only in passing. As a reminder: the ambient component of lighting is a constant value added to every lighting calculation in the scene to simulate the scattering of light. In the real world, light bounces many times with varying intensity, so the indirectly lit parts of a scene are lit unevenly. Lighting them with a constant intensity is clearly not very plausible.

One way of approximating shadowing from indirect lighting is ambient occlusion (AO). It simulates the attenuation of indirect light near corners, creases and other surface irregularities. Such regions are largely occluded by adjacent geometry, so light rays have fewer ways to escape, and these areas appear darker.

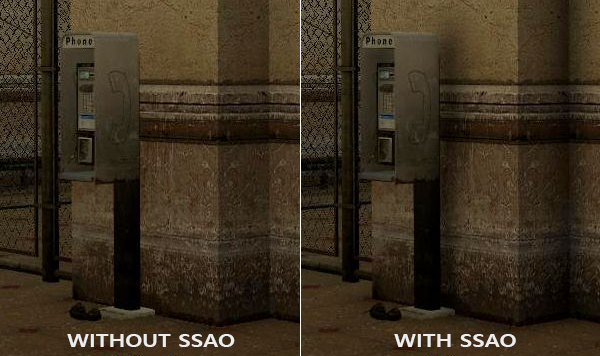

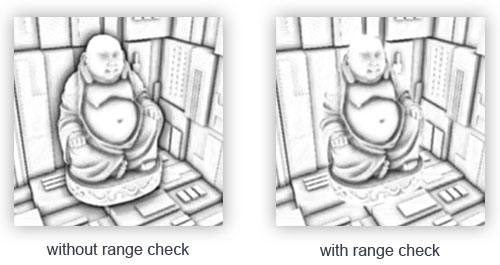

Below is a comparison of rendering without and with AO. Notice how the ambient light intensity drops near the corners of the walls and other sharp creases:


The effect may not be very noticeable on its own. Applied across the whole scene, however, it adds realism through the extra sense of depth created by small self-occlusion details.


It is worth noting that AO algorithms are quite expensive, since they have to analyze the surrounding geometry. A naive implementation could cast a large number of rays from every surface point to determine how occluded it is. However, this quickly becomes too expensive for interactive applications. Fortunately, in 2007 Crytek published a paper describing its own approach, Screen-Space Ambient Occlusion (SSAO), which was used in the release version of Crysis. The approach computes occlusion in screen space using only the current depth buffer, without real data about the surrounding geometry. This optimization made the algorithm dramatically faster than the reference implementation while still giving mostly plausible results. As a result, this way of approximating ambient occlusion became the de facto industry standard.

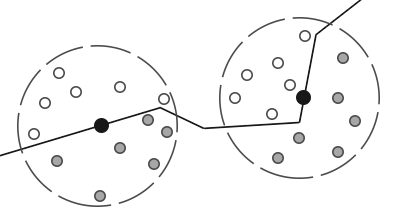

The principle behind the algorithm is quite simple. For each fragment of a full-screen quad, we compute an occlusion factor based on the depth values around the fragment. The occlusion factor is then used to reduce, or completely cancel, the fragment's ambient lighting. To obtain it, we take a number of sample points in a sphere around the fragment. For each of them, we compare the sample's depth with the depth stored in the depth buffer at the sample's screen position. The number of samples that lie behind the stored depth, i.e. inside geometry, determines the occlusion factor. Look at this diagram:

Here, each gray sample lies inside geometry and therefore contributes to the occlusion factor. The more samples end up inside the surrounding geometry, the less ambient light remains in that area.

The quality and realism of the effect depend directly on the number of samples. With too few samples, precision drops and we get an artifact called banding, caused by abrupt transitions between areas with very different occlusion factors. Too many samples, on the other hand, simply kill performance. Randomizing the sample kernel lets us use fewer samples for similar quality: the kernel of each fragment is rotated by a random angle. However, randomness immediately introduces a new problem, a noticeable noise pattern, which has to be smoothed out with a blur filter. Below is an example of the algorithm (by John Chapman) and its typical problems: banding and noise.

As you can see, the noticeable banding caused by the low sample count is largely removed by randomizing the sample orientation.

Crytek's particular SSAO implementation had a recognizable visual style. Because Crytek used a spherical sample kernel, even flat surfaces such as walls appeared occluded: half of the kernel samples ended up below the surface. Below is a screenshot of a Crysis scene rendered in grayscale according to the occlusion factor. The grayish look is clearly visible:

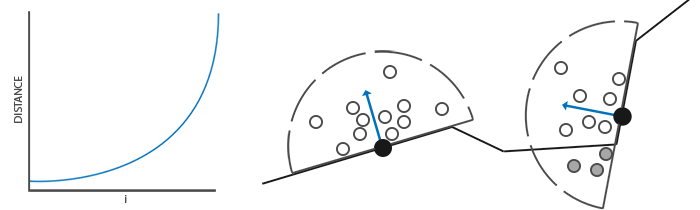

To avoid this effect, we switch from a spherical sample kernel to a hemisphere oriented along the surface normal:

When sampling from this normal-oriented hemisphere, samples lying underneath the surface itself no longer count toward the occlusion factor. This removes the unwanted occlusion and generally gives more realistic results. This lesson uses the hemisphere approach and slightly refined code from John Chapman's excellent SSAO tutorial.
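To see why a spherical kernel darkens flat surfaces, here is a small C++ sketch (not part of the lesson's code; the function name is hypothetical). It draws random kernel points in a unit sphere or in the +Z hemisphere and counts how many fall below a flat surface through the origin with normal +Z. Only the sign of z matters here, so the points are not normalized.

```cpp
#include <cassert>
#include <random>

// Fraction of kernel samples lying below a flat surface through the origin
// with normal +Z (samples with z < 0). On a real flat wall, every such sample
// would be counted as occluded.
//   hemisphere == false: z in [-1, 1), i.e. a spherical (Crytek-style) kernel
//   hemisphere == true:  z in [0, 1),  i.e. a +Z hemispherical kernel
double fractionBelowSurface(bool hemisphere, int count, unsigned seed)
{
    assert(count > 0);
    std::default_random_engine generator(seed);
    std::uniform_real_distribution<double> randomFloats(0.0, 1.0);
    int below = 0;
    for (int i = 0; i < count; ++i)
    {
        double x = randomFloats(generator) * 2.0 - 1.0;  // drawn to mirror the kernel setup
        double y = randomFloats(generator) * 2.0 - 1.0;  // (x and y don't affect the test)
        (void)x;
        (void)y;
        double z = hemisphere ? randomFloats(generator)
                              : randomFloats(generator) * 2.0 - 1.0;
        if (z < 0.0)
            ++below;
    }
    return static_cast<double>(below) / count;
}
```

With the spherical kernel, roughly half of the samples land under a perfectly flat wall. This corresponds to the grayish look in the Crysis screenshot. With the hemisphere, none do.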

Input buffers

Computing the occlusion factor for each fragment requires data about the surrounding geometry. Specifically, we need:

- Position vector for each fragment;

- Normal vector for each fragment;

- Diffuse color for each fragment;

- A sample kernel;

- A per-fragment random rotation vector, used to rotate the sample kernel.

Using the per-fragment view-space position, we can orient the hemispherical sample kernel around the fragment's view-space normal. The kernel is then used to sample the position texture at various offsets. For each kernel sample, we compare its depth with the depth stored in the position buffer to estimate the amount of occlusion. The resulting value is used to limit the ambient contribution in the final lighting calculation. A per-fragment random rotation vector significantly reduces the number of samples needed for a decent result, as we will see shortly.

Since SSAO is a screen-space effect, we could compute it directly while rendering a full-screen quad. But then we would have no information about the scene geometry. To get around this, we render the required information into textures, which the SSAO shader later samples to access geometric and other scene data. If you have followed these lessons carefully, you will recognize this as the deferred shading approach. SSAO fits naturally into a deferred renderer, because the position and normal textures are already available in the G-buffer.

In this lesson, the effect is implemented on top of a slightly simplified version of the code from the deferred shading lesson. If you are not yet familiar with deferred shading, I strongly recommend reading that lesson first.

Since per-fragment positions and normals should already be available in the G-buffer, the fragment shader of the geometry pass is quite simple:

```glsl
#version 330 core
layout (location = 0) out vec3 gPosition;
layout (location = 1) out vec3 gNormal;
layout (location = 2) out vec4 gAlbedoSpec;

in vec2 TexCoords;
in vec3 FragPos;
in vec3 Normal;

void main()
{
    // store the view-space fragment position in the first G-buffer texture
    gPosition = FragPos;
    // store the per-fragment view-space normal
    gNormal = normalize(Normal);
    // and a constant diffuse (albedo) color
    gAlbedoSpec.rgb = vec3(0.95);
}
```

SSAO is a screen-space effect, and the occlusion factor is computed from the visible part of the scene, so it makes sense to do the calculations in view space. Here, the FragPos variable coming from the vertex shader holds the fragment position in view space. To achieve this, the vertex shader transforms positions and normals with the view matrix as well as the model matrix. Make sure that positions and normals are stored in the G-buffer in view space, since all further calculations are carried out there.

It is possible to reconstruct the position vector from the fragment's depth alone, with a bit of math, as described for example in Matt Pettineo's blog. This costs a few extra calculations in the shader. In return, it removes the need to store positions in the G-buffer, which takes a lot of video memory. For the sake of simpler example code, however, we leave this approach for independent study.
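As a rough illustration of such a reconstruction, here is a C++ sketch. It is not taken from Matt Pettineo's blog, and the struct and function names are hypothetical. It assumes a standard symmetric OpenGL perspective projection like the one built by glm::perspective, a right-handed view space looking down -Z, and a depth-buffer value in [0, 1]. It inverts the projection's z row to recover view-space z from depth, then recovers x and y from the texture coordinate. A projection helper is included so the round trip can be checked.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Symmetric OpenGL perspective projection parameters (fovy in radians).
struct Perspective
{
    double fovy, aspect, nearPlane, farPlane;
    double sx() const { return 1.0 / (aspect * std::tan(fovy / 2.0)); }
    double sy() const { return 1.0 / std::tan(fovy / 2.0); }
    double a()  const { return (farPlane + nearPlane) / (nearPlane - farPlane); }
    double b()  const { return 2.0 * farPlane * nearPlane / (nearPlane - farPlane); }
};

// View space -> (u, v) texture coordinates in [0, 1] and depth in [0, 1].
Vec3 projectToScreen(const Perspective& p, const Vec3& v)
{
    double w = -v.z;                          // clip-space w of a perspective projection
    double ndcX = p.sx() * v.x / w;
    double ndcY = p.sy() * v.y / w;
    double ndcZ = (p.a() * v.z + p.b()) / w;
    return { ndcX * 0.5 + 0.5, ndcY * 0.5 + 0.5, ndcZ * 0.5 + 0.5 };
}

// (u, v) texture coordinates and depth in [0, 1] -> view-space position.
Vec3 reconstructViewPos(const Perspective& p, double u, double v, double depth)
{
    double ndcX = u * 2.0 - 1.0;
    double ndcY = v * 2.0 - 1.0;
    double ndcZ = depth * 2.0 - 1.0;
    // invert ndcZ = (a * z + b) / (-z)  =>  z = -b / (ndcZ + a)
    double z = -p.b() / (ndcZ + p.a());
    return { ndcX * -z / p.sx(), ndcY * -z / p.sy(), z };
}
```

In a shader, the same idea is usually expressed with the projection parameters passed as uniforms, or with the inverse projection matrix.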

The gPosition color buffer texture is configured as follows:

```cpp
glGenTextures(1, &gPosition);
glBindTexture(GL_TEXTURE_2D, gPosition);
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, SCR_WIDTH, SCR_HEIGHT, 0, GL_RGB, GL_FLOAT, NULL);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
// clamp, so that samples outside the screen don't wrap around to the other side
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
```

This texture stores the fragment positions and can be used to obtain a depth value for each kernel sample. Note that it uses a floating-point format, so positions are not clamped to [0.0, 1.0]. Also note the GL_CLAMP_TO_EDGE wrap mode. It ensures we don't accidentally sample position and depth values outside the screen. Texture coordinates outside the default range would otherwise return incorrect position and depth data.

Next, we generate the hemispherical sample kernel and a way to rotate it randomly.

Normal-oriented hemisphere

So the task is to generate a set of samples inside a hemisphere oriented along the surface normal. Generating a separate sample kernel for every possible normal direction is impractical. Instead, we generate the kernel in tangent space, where the normal always points in the positive z direction.

Assuming a unit-radius hemisphere, generating a kernel of 64 samples looks like this:

```cpp
#include <random>
#include <vector>
#include <glm/glm.hpp>

// random floats in the range [0.0, 1.0]
std::uniform_real_distribution<float> randomFloats(0.0, 1.0);
std::default_random_engine generator;
std::vector<glm::vec3> ssaoKernel;
for (unsigned int i = 0; i < 64; ++i)
{
    glm::vec3 sample(
        randomFloats(generator) * 2.0 - 1.0,  // x in [-1, 1]
        randomFloats(generator) * 2.0 - 1.0,  // y in [-1, 1]
        randomFloats(generator)               // z in [0, 1]: hemisphere around +Z
    );
    sample  = glm::normalize(sample);
    sample *= randomFloats(generator);        // random length inside the unit hemisphere
    float scale = (float)i / 64.0;            // used below to bias the distribution
    ssaoKernel.push_back(sample);
}
```

Here we pick random x and y coordinates in [-1.0, 1.0] and a random z coordinate in [0.0, 1.0]. If z used the same range as x and y, we would get a spherical kernel. The resulting samples therefore lie in the hemisphere z >= 0. Since the kernel will later be aligned with the surface normal, this hemisphere ends up above the surface.

At the moment, all samples are randomly distributed inside the kernel. For better quality, however, samples closer to the kernel origin should contribute more to the occlusion factor. We can achieve this by changing the distribution so that samples are denser near the origin, which is easy to do with an accelerating interpolation function:

```cpp
    // bias samples toward the kernel origin
    scale   = lerp(0.1f, 1.0f, scale * scale);
    sample *= scale;
    ssaoKernel.push_back(sample);
}
```

The lerp() function is defined as:

```cpp
float lerp(float a, float b, float f)
{
    return a + f * (b - a);
}
```

This gives a distribution in which most samples lie close to the kernel origin.
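For reference, here is the whole kernel generation from above folded into one self-contained C++ function. To avoid external dependencies, it uses a tiny hypothetical Vec3 struct instead of glm::vec3 and takes the kernel size and seed as parameters (also assumptions of this sketch). The steps follow the snippets above: a random direction in the +Z hemisphere, a random length, then the accelerating lerp toward the origin.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

struct Vec3 { float x, y, z; };

float lerp(float a, float b, float f) { return a + f * (b - a); }

// Build a hemispherical SSAO sample kernel oriented along +Z (tangent space).
std::vector<Vec3> makeSsaoKernel(unsigned int kernelSize, unsigned int seed)
{
    assert(kernelSize > 0);
    std::uniform_real_distribution<float> randomFloats(0.0f, 1.0f);
    std::default_random_engine generator(seed);
    std::vector<Vec3> kernel;
    kernel.reserve(kernelSize);
    while (kernel.size() < kernelSize)
    {
        Vec3 s{ randomFloats(generator) * 2.0f - 1.0f,  // x in [-1, 1)
                randomFloats(generator) * 2.0f - 1.0f,  // y in [-1, 1)
                randomFloats(generator) };              // z in [0, 1): +Z hemisphere
        float len = std::sqrt(s.x * s.x + s.y * s.y + s.z * s.z);
        if (len == 0.0f)
            continue;                                   // degenerate direction, draw again
        // normalize, then give the sample a random length inside the unit hemisphere
        float r = randomFloats(generator) / len;
        s = { s.x * r, s.y * r, s.z * r };
        // accelerating interpolation: pull early samples toward the origin
        float scale = static_cast<float>(kernel.size()) / kernelSize;
        scale = lerp(0.1f, 1.0f, scale * scale);
        s = { s.x * scale, s.y * scale, s.z * scale };
        kernel.push_back(s);
    }
    return kernel;
}
```

Every sample has a non-negative z and a length of at most 1. The first samples are additionally scaled down to as little as 0.1 of their length.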

Each kernel sample is used to offset the view-space fragment position in order to probe the surrounding geometry. Getting realistic results this way would require a large number of samples, which would hurt performance. However, adding pseudo-random noise, i.e. randomly rotating the sample kernel per fragment, significantly reduces the number of samples needed for comparable quality.

Random kernel rotations

So, introducing randomness into the sample kernel greatly reduces the number of samples needed for decent quality. We could create a random rotation vector for every fragment of the scene, but that would use too much memory. It is more efficient to create a small texture of random rotation vectors and tile it over the screen using the GL_REPEAT wrap mode.

Create a 4x4 array of random rotation vectors that rotate around the tangent-space normal, i.e. vectors lying in the tangent plane:

```cpp
std::vector<glm::vec3> ssaoNoise;
for (unsigned int i = 0; i < 16; i++)
{
    glm::vec3 noise(
        randomFloats(generator) * 2.0 - 1.0,
        randomFloats(generator) * 2.0 - 1.0,
        0.0f);  // z = 0: rotate only around the tangent-space Z axis (the normal)
    ssaoNoise.push_back(noise);
}
```

Since the sample kernel points along the positive z axis in tangent space, we leave the z component at 0.0. This way, we only rotate around the z axis.

Next, create a 4x4 texture that holds the rotation vectors. Make sure to set the GL_REPEAT wrap mode so that it tiles properly:

```cpp
unsigned int noiseTexture;
glGenTextures(1, &noiseTexture);
glBindTexture(GL_TEXTURE_2D, noiseTexture);
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, 4, 4, 0, GL_RGB, GL_FLOAT, &ssaoNoise[0]);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);
```

Now we have all the input data we need to implement the SSAO algorithm itself!
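To make the tiling concrete: the SSAO shader scales the screen texture coordinates by (screen size / 4) before sampling this 4x4 texture. With GL_REPEAT and GL_NEAREST, only the fractional part of that coordinate matters. The C++ sketch below emulates that lookup. The helper name is hypothetical, and it assumes texel-center coordinates and a screen size divisible by 4. It shows that pixel (px, py) reads noise texel (px mod 4, py mod 4), so the same 16 rotation vectors repeat in every 4x4 block of pixels.

```cpp
#include <cassert>
#include <cmath>

struct TexelIndex { int x, y; };

// Emulate sampling a 4x4 noise texture (GL_REPEAT + GL_NEAREST) at the center
// of screen pixel (px, py) with texture coordinates scaled by screenSize / 4.
TexelIndex noiseTexelForPixel(int px, int py, int screenW, int screenH)
{
    const int noiseSize = 4;
    assert(screenW % noiseSize == 0 && screenH % noiseSize == 0);
    // texture coordinates of the pixel center, in [0, 1]
    double u = (px + 0.5) / screenW;
    double v = (py + 0.5) / screenH;
    // scale by screen size / noise size
    double su = u * (static_cast<double>(screenW) / noiseSize);
    double sv = v * (static_cast<double>(screenH) / noiseSize);
    // GL_REPEAT keeps only the fractional part; GL_NEAREST picks the texel
    double fu = su - std::floor(su);
    double fv = sv - std::floor(sv);
    return { static_cast<int>(std::floor(fu * noiseSize)),
             static_cast<int>(std::floor(fv * noiseSize)) };
}
```

For example, on a 1280x720 screen, pixel (5, 2) reads noise texel (1, 2).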

SSAO Shader

The SSAO shader runs on a full-screen quad and computes the occlusion factor for each fragment. The result is needed in a later rendering stage that computes the final lighting. Therefore, we create another framebuffer object to store it:

```cpp
unsigned int ssaoFBO;
glGenFramebuffers(1, &ssaoFBO);
glBindFramebuffer(GL_FRAMEBUFFER, ssaoFBO);

unsigned int ssaoColorBuffer;
glGenTextures(1, &ssaoColorBuffer);
glBindTexture(GL_TEXTURE_2D, ssaoColorBuffer);
// single-channel texture: the occlusion factor is one scalar per fragment
glTexImage2D(GL_TEXTURE_2D, 0, GL_RED, SCR_WIDTH, SCR_HEIGHT, 0, GL_RED, GL_FLOAT, NULL);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, ssaoColorBuffer, 0);
```

Since the result of the algorithm is a single scalar in [0.0, 1.0], a texture with a single component is enough to store it. That is why GL_RED is used as the internal format of the color buffer.

Overall, rendering the SSAO stage looks like this:

```cpp
// geometry pass: render scene data into the G-buffer
glBindFramebuffer(GL_FRAMEBUFFER, gBuffer);
    [...]
glBindFramebuffer(GL_FRAMEBUFFER, 0);
// use the G-buffer to render the SSAO texture
glBindFramebuffer(GL_FRAMEBUFFER, ssaoFBO);
    glClear(GL_COLOR_BUFFER_BIT);
    glActiveTexture(GL_TEXTURE0);
    glBindTexture(GL_TEXTURE_2D, gPosition);
    glActiveTexture(GL_TEXTURE1);
    glBindTexture(GL_TEXTURE_2D, gNormal);
    glActiveTexture(GL_TEXTURE2);
    glBindTexture(GL_TEXTURE_2D, noiseTexture);
    shaderSSAO.use();
    SendKernelSamplesToShader();
    shaderSSAO.setMat4("projection", projection);
    RenderQuad();
glBindFramebuffer(GL_FRAMEBUFFER, 0);
// lighting pass: render the scene lighting as usual, now with the SSAO texture
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
shaderLightingPass.use();
[...]
glActiveTexture(GL_TEXTURE3);
glBindTexture(GL_TEXTURE_2D, ssaoColorBuffer);
[...]
RenderQuad();
```

The shaderSSAO shader takes the G-buffer textures it needs, the noise texture and the sample kernel as input:

```glsl
#version 330 core
out float FragColor;

in vec2 TexCoords;

uniform sampler2D gPosition;
uniform sampler2D gNormal;
uniform sampler2D texNoise;

uniform vec3 samples[64];
uniform mat4 projection;

// tile the noise texture over the screen: screen size divided by noise size
// (the screen is 1280x720 here)
const vec2 noiseScale = vec2(1280.0/4.0, 720.0/4.0);

void main()
{
    [...]
}
```

Note the noiseScale variable. We want the small noise texture to tile over the whole screen. The TexCoords values, however, lie in [0.0, 1.0], so this won't happen on its own. To fix this, we compute a multiplier for the texture coordinates as the ratio of the screen size to the noise texture size:

```glsl
vec3 fragPos   = texture(gPosition, TexCoords).xyz;
vec3 normal    = texture(gNormal, TexCoords).rgb;
vec3 randomVec = texture(texNoise, TexCoords * noiseScale).xyz;
```

We set the wrap mode of the texNoise texture to GL_REPEAT, so the random values now repeat across the whole screen. With randomVec, fragPos and normal in hand, we can build a TBN matrix that transforms vectors from tangent space to view space:

```glsl
vec3 tangent   = normalize(randomVec - normal * dot(randomVec, normal));
vec3 bitangent = cross(normal, tangent);
mat3 TBN       = mat3(tangent, bitangent, normal);
```

Using the Gram-Schmidt process, we create an orthogonal basis. In each fragment, this basis is randomly rotated around the normal according to randomVec. Note an important point: here, the TBN matrix doesn't need to be exactly aligned with the surface of the triangle, as it had to be for normal mapping and parallax mapping. Therefore, we don't need precomputed per-vertex tangents and bitangents.
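A CPU-side C++ sketch of the same construction may help check the math. It uses a minimal hypothetical Vec3 type in place of GLSL's vec3. The tangent is obtained by removing the normal component from randomVec (one Gram-Schmidt step). The bitangent is cross(normal, tangent). A tangent-space sample is then transformed the same way as the GLSL product TBN * sample: x along the tangent, y along the bitangent, z along the normal. As in the shader, it assumes normal is unit length and randomVec is not parallel to it.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) { return a * (1.0 / std::sqrt(dot(a, a))); }

struct TBN { Vec3 tangent, bitangent, normal; };

// Gram-Schmidt: make randomVec orthogonal to the unit normal, then complete the basis.
TBN makeTBN(Vec3 normal, Vec3 randomVec)
{
    Vec3 tangent = normalize(randomVec - normal * dot(randomVec, normal));
    Vec3 bitangent = cross(normal, tangent);
    return {tangent, bitangent, normal};
}

// Equivalent of `TBN * s` with TBN = mat3(tangent, bitangent, normal) in GLSL.
Vec3 toViewSpace(const TBN& m, Vec3 s)
{
    return m.tangent * s.x + m.bitangent * s.y + m.normal * s.z;
}
```

Any kernel sample with z > 0 ends up on the normal's side of the surface, whatever random vector was used.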

Next, we iterate over the sample kernel, transform each sample from tangent space to view space, and add it to the current fragment position. We then compare the depth of the resulting sample position with the depth stored in the G-buffer position texture.

This sounds confusing, so let's go through it step by step:

```glsl
float occlusion = 0.0;
for (int i = 0; i < kernelSize; ++i)
{
    // transform the kernel sample from tangent space to view space
    // (note: `sample` is a reserved word in newer GLSL versions; if your
    // compiler rejects it, rename the variable, e.g. to samplePos)
    vec3 sample = TBN * samples[i];
    // scale by the kernel radius and offset from the view-space fragment position
    sample = fragPos + sample * radius;
    [...]
}
```

Here, kernelSize and radius are variables that control the characteristics of the effect. In this case, they are 64 and 0.5 respectively, declared in the shader, for example as constants or uniforms. In each iteration, we first transform the kernel sample into view space. Then we multiply this view-space offset by radius, which controls the sampling radius of the SSAO kernel, and add it to the view-space fragment position.

Next, we transform sample to screen space. This way, we can read the G-buffer position texture (and thus its depth) at the sample's projected position, as if we were rendering the sample point directly to the screen. Since sample is in view space, we need the projection matrix:

```glsl
vec4 offset = vec4(sample, 1.0);
offset      = projection * offset;     // view space -> clip space
offset.xyz /= offset.w;                // perspective divide
offset.xyz  = offset.xyz * 0.5 + 0.5;  // NDC [-1, 1] -> [0.0, 1.0]
```

After transforming to clip space, we perform the perspective divide manually by dividing the xyz components by the w component. The resulting normalized device coordinates (NDC) are then mapped to the range [0.0, 1.0], so that they can be used as texture coordinates:

```glsl
float sampleDepth = texture(gPosition, offset.xy).z;
```

We use the xy components of offset to sample the G-buffer position texture. Its z component gives the view-space depth of the first visible (unoccluded) fragment at the sample's screen position, as seen from the viewer. If the visible surface there is closer to the viewer than the sample itself, the sample lies inside geometry, and we increase the occlusion factor:

```glsl
occlusion += (sampleDepth >= sample.z + bias ? 1.0 : 0.0);
```

In OpenGL's view space the camera looks down -Z, so visible points have negative z, and a larger z means closer to the viewer. That is why the test uses >=. Note the bias added to the sample's depth, set to 0.025 in the example. It isn't always necessary, but it lets you tweak how the effect looks. Depending on the complexity of the scene, it also removes acne artifacts in occluded areas.
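Here is that single comparison as a tiny C++ sketch (the function name is hypothetical). It assumes OpenGL's view-space convention with negative z in front of the camera. It shows which depth pairs count as occluded and how bias filters out nearly coincident depths.

```cpp
#include <cassert>

// Returns 1.0 if the visible surface stored in the G-buffer (sampleDepth) is
// in front of the kernel sample (sampleZ) by at least bias, otherwise 0.0.
// Both values are view-space z coordinates (negative in front of the camera).
double sampleOcclusion(double sampleDepth, double sampleZ, double bias)
{
    assert(bias >= 0.0);
    return sampleDepth >= sampleZ + bias ? 1.0 : 0.0;
}
```

For a sample at z = -5.0, a surface at -4.9 occludes it, and a surface at -5.5 does not. A surface at -4.99 would occlude it with bias 0.0 but is ignored with bias 0.025, which is what suppresses the acne.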

We're not done yet, since this implementation still produces a noticeable artifact. It shows up when the fragment being tested lies close to the edge of a surface. In that case, the depth comparison also picks up surfaces that lie far behind the tested one. These samples wrongly increase the occlusion factor and produce dark halos around the edges of objects. The artifact is fixed with an additional range check (image by John Chapman):

The range check ensures that a sample contributes to the occlusion factor only if its depth value lies within the sampling radius:

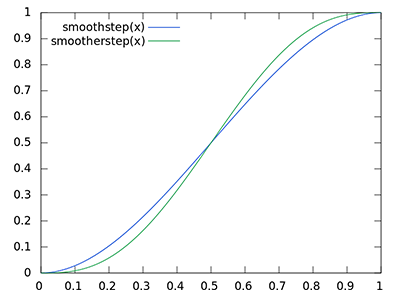

```glsl
float rangeCheck = smoothstep(0.0, 1.0, radius / abs(fragPos.z - sampleDepth));
occlusion       += (sampleDepth >= sample.z + bias ? 1.0 : 0.0) * rangeCheck;
```

Here we use the GLSL smoothstep() function, which smoothly interpolates its third parameter between the first and the second. It returns 0.0 if the third parameter is less than or equal to the first, and 1.0 if it is greater than or equal to the second. If the depth difference is within radius, the ratio is at least 1.0 and the sample contributes fully. As the difference grows beyond radius, the contribution falls smoothly toward 0.0 along this curve:

A hard cutoff that simply discards samples whose depth difference exceeds radius would produce sharp, visible edges where the range check kicks in.
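The range-check curve is easy to tabulate on the CPU. The sketch below reimplements GLSL's smoothstep in C++: clamp to [0, 1], then the Hermite polynomial t * t * (3 - 2t), as defined by the GLSL specification. It then evaluates the range check for a few depth differences, assuming radius = 0.5 as in the lesson. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Same definition as GLSL smoothstep(edge0, edge1, x).
double smoothstep(double edge0, double edge1, double x)
{
    assert(edge0 < edge1);
    double t = std::min(std::max((x - edge0) / (edge1 - edge0), 0.0), 1.0);
    return t * t * (3.0 - 2.0 * t);
}

// Weight of one sample, given the fragment's depth and the depth of the
// surface found at the sample position (both view-space z).
double rangeCheck(double radius, double fragZ, double sampleDepth)
{
    return smoothstep(0.0, 1.0, radius / std::fabs(fragZ - sampleDepth));
}
```

With radius = 0.5, a surface 0.25 units from the fragment counts fully (1.0). At 1.0 units, i.e. twice the radius, it counts half (0.5). At 5.0 units it contributes only 0.028.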

As a final step, we normalize the occlusion factor by the kernel size and output the result. We also subtract it from 1.0, so that the result can be used directly to scale the ambient lighting component:

```glsl
} // end of the kernel loop
occlusion = 1.0 - (occlusion / kernelSize);
FragColor = occlusion;
```

For the familiar scene with the nanosuit lying on the floor, the SSAO shader produces the following texture:

As you can see, ambient occlusion creates a strong sense of depth. From the shader's output alone, you can already make out the details of the suit. You can also see that it really lies on the floor instead of floating some distance above it.
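Putting the pieces of the loop together, here is a CPU-side C++ sketch of what the shader computes for one fragment. To keep it self-contained, it assumes projection and the G-buffer lookup are already done. For each kernel sample, it receives the sample's view-space z and the depth read from gPosition at the sample's projected position (the SampleResult struct is hypothetical). It then applies the bias test and the range check, normalizes by the kernel size, and inverts the result.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct SampleResult
{
    double sampleZ;      // view-space z of fragPos + TBN * kernel[i] * radius
    double sampleDepth;  // view-space z stored in gPosition at the projected sample
};

double smoothstep(double edge0, double edge1, double x)
{
    double t = std::min(std::max((x - edge0) / (edge1 - edge0), 0.0), 1.0);
    return t * t * (3.0 - 2.0 * t);
}

// Value written by the SSAO shader for one fragment: 1.0 means unoccluded.
double ambientFactor(double fragZ, const std::vector<SampleResult>& samples,
                     double radius, double bias)
{
    assert(!samples.empty());
    double occlusion = 0.0;
    for (const SampleResult& s : samples)
    {
        double range = smoothstep(0.0, 1.0, radius / std::fabs(fragZ - s.sampleDepth));
        occlusion += (s.sampleDepth >= s.sampleZ + bias ? 1.0 : 0.0) * range;
    }
    return 1.0 - occlusion / samples.size();
}
```

In the test data below (fragment at z = -5.0, radius 0.5, bias 0.025), the first sample is fully occluded. The second has its surface behind it, the third is rejected by bias, and the fourth is occluded by a surface 4.5 units away. The range check almost cancels that last one, giving a result of about 0.741.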

Still, the result is far from perfect: the noise pattern introduced by the rotation-vector texture is clearly visible. To smooth out the SSAO result, we apply a blur filter.

Ambient occlusion blur

Between the SSAO pass and the lighting pass, we blur the texture that stores the occlusion factors. For this, we create yet another framebuffer:

```cpp
unsigned int ssaoBlurFBO, ssaoColorBufferBlur;
glGenFramebuffers(1, &ssaoBlurFBO);
glBindFramebuffer(GL_FRAMEBUFFER, ssaoBlurFBO);
glGenTextures(1, &ssaoColorBufferBlur);
glBindTexture(GL_TEXTURE_2D, ssaoColorBufferBlur);
glTexImage2D(GL_TEXTURE_2D, 0, GL_RED, SCR_WIDTH, SCR_HEIGHT, 0, GL_RED, GL_FLOAT, NULL);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, ssaoColorBufferBlur, 0);
```

Because the noise texture tiles over the screen, the randomness has a well-defined, repeating structure. We can use this to our advantage in a simple blur shader:

```glsl
#version 330 core
out float FragColor;

in vec2 TexCoords;

uniform sampler2D ssaoInput;

void main()
{
    vec2 texelSize = 1.0 / vec2(textureSize(ssaoInput, 0));
    float result = 0.0;
    // average a 4x4 block of texels (offsets -2..1), matching the 4x4 noise tile
    for (int x = -2; x < 2; ++x)
    {
        for (int y = -2; y < 2; ++y)
        {
            vec2 offset = vec2(float(x), float(y)) * texelSize;
            result += texture(ssaoInput, TexCoords + offset).r;
        }
    }
    FragColor = result / (4.0 * 4.0);
}
```

The shader simply visits the SSAO texels around the current one with offsets from -2 to 1 in each direction. This is a 4x4 block, matching the size of the noise texture. Each step is exactly one texel. To compute it, we use the textureSize() function, which returns an ivec2 with the dimensions of the given texture level. The shader just averages the results, which gives a quick and fairly effective blur:

We now have a texture holding the ambient occlusion of every fragment on the screen, and everything is ready for the final compositing stage!
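Why does such a small box blur work so well here? The rotation vectors repeat every 4 pixels, so averaging exactly a 4x4 block covers each noise texel once. Any pattern that repeats with a period of 4 pixels therefore averages out to a constant. The C++ sketch below emulates the blur shader on a single-channel image stored row by row (the layout and function name are hypothetical). Out-of-range coordinates wrap around as with GL_REPEAT, the default wrap mode, which the SSAO textures above don't override. Real SSAO output is only roughly periodic, since the geometry varies, so in practice the blur removes most but not all of the pattern.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Emulates the SSAO blur shader: each output texel is the average of the
// 4x4 block of input texels at offsets -2..1 in x and y.
// Coordinates outside the image wrap around like GL_REPEAT.
std::vector<float> blurSsao(const std::vector<float>& input, int width, int height)
{
    assert(width > 0 && height > 0);
    assert(input.size() == static_cast<std::size_t>(width) * height);
    std::vector<float> output(input.size());
    for (int y = 0; y < height; ++y)
    {
        for (int x = 0; x < width; ++x)
        {
            float result = 0.0f;
            for (int dx = -2; dx < 2; ++dx)
            {
                for (int dy = -2; dy < 2; ++dy)
                {
                    int sx = ((x + dx) % width + width) % width;
                    int sy = ((y + dy) % height + height) % height;
                    result += input[sy * width + sx];
                }
            }
            output[y * width + x] = result / (4.0f * 4.0f);
        }
    }
    return output;
}
```

On an 8x8 image whose values repeat every 4 pixels, every output texel equals the mean of one 4x4 tile.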

Applying ambient occlusion

Applying the occlusion factors in the final lighting calculation is surprisingly simple. For each fragment, we just multiply the light's ambient component by the occlusion factor from the prepared texture. We can take the Blinn-Phong deferred lighting shader from the deferred shading lesson and tweak it a bit:

```glsl
#version 330 core
out vec4 FragColor;

in vec2 TexCoords;

uniform sampler2D gPosition;
uniform sampler2D gNormal;
uniform sampler2D gAlbedo;
uniform sampler2D ssao;

struct Light {
    vec3 Position;  // must be supplied in view space
    vec3 Color;

    float Linear;
    float Quadratic;
    float Radius;
};
uniform Light light;

void main()
{
    // retrieve data from the G-buffer
    vec3 FragPos = texture(gPosition, TexCoords).rgb;
    vec3 Normal = texture(gNormal, TexCoords).rgb;
    vec3 Diffuse = texture(gAlbedo, TexCoords).rgb;
    float AmbientOcclusion = texture(ssao, TexCoords).r;

    // Blinn-Phong lighting, computed in view space
    // ambient term scaled by the occlusion factor
    vec3 ambient = vec3(0.3 * Diffuse * AmbientOcclusion);
    vec3 lighting = ambient;
    vec3 viewDir = normalize(-FragPos); // the viewer is at (0, 0, 0) in view space
    // diffuse
    vec3 lightDir = normalize(light.Position - FragPos);
    vec3 diffuse = max(dot(Normal, lightDir), 0.0) * Diffuse * light.Color;
    // specular
    vec3 halfwayDir = normalize(lightDir + viewDir);
    float spec = pow(max(dot(Normal, halfwayDir), 0.0), 8.0);
    vec3 specular = light.Color * spec;
    // attenuation
    float dist = length(light.Position - FragPos);
    float attenuation = 1.0 / (1.0 + light.Linear * dist + light.Quadratic * dist * dist);
    diffuse *= attenuation;
    specular *= attenuation;
    lighting += diffuse + specular;

    FragColor = vec4(lighting, 1.0);
}
```

There are only two major changes: the lighting is now computed in view space, and the ambient component is multiplied by AmbientOcclusion. Here is an example scene with a single blue point light:

The full source code is here.
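To make explicit which term the occlusion factor touches, here is a scalar (single color channel) C++ sketch of the lighting shader's final combination. The dot-product inputs and the attenuation constants used in the test are hypothetical. The occlusion factor only scales the 0.3 * albedo ambient term. The attenuated diffuse and specular terms are unchanged.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// One color channel of the lighting pass above.
// nDotL = dot(Normal, lightDir), nDotH = dot(Normal, halfwayDir).
double shadeChannel(double albedo, double lightColor, double ambientOcclusion,
                    double nDotL, double nDotH, double dist,
                    double linear, double quadratic)
{
    assert(ambientOcclusion >= 0.0 && ambientOcclusion <= 1.0);
    double ambient  = 0.3 * albedo * ambientOcclusion;  // the only term using AO
    double diffuse  = std::max(nDotL, 0.0) * albedo * lightColor;
    double specular = lightColor * std::pow(std::max(nDotH, 0.0), 8.0);
    double attenuation = 1.0 / (1.0 + linear * dist + quadratic * dist * dist);
    return ambient + (diffuse + specular) * attenuation;
}
```

A fragment facing away from the light receives only the occlusion-scaled ambient term. Going from AO = 0 to AO = 1 always adds exactly 0.3 * albedo.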

The look of SSAO depends heavily on parameters such as kernelSize, radius and bias, and fine-tuning them is often left to the artist for each particular location or scene. There is no single "best" or universal combination: some scenes work well with a small sampling radius, while others benefit from a larger radius and more samples. The example uses 64 samples, which is frankly more than needed. You can always edit the code and see what happens with fewer samples.

Besides these uniforms, which tune the effect, you can also explicitly control its strength. To do so, raise the occlusion factor to a power set by another uniform:

```glsl
occlusion = 1.0 - (occlusion / kernelSize);
FragColor = pow(occlusion, power);
```

I recommend spending some time playing with these settings. It gives a better feel for how each of them changes the final image.
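The occlusion factor lies in [0, 1]. Raising it to a power greater than 1 therefore darkens partially occluded areas, while unoccluded (1.0) and fully occluded (0.0) fragments stay unchanged. A power below 1 weakens the effect. Here is the same expression as a tiny C++ sketch (the function name is hypothetical):

```cpp
#include <cassert>
#include <cmath>

// occlusion: accumulated, range-checked count of occluded samples
// kernelSize: number of kernel samples; power: strength control
double ssaoOutput(double occlusion, int kernelSize, double power)
{
    assert(kernelSize > 0);
    double ambient = 1.0 - occlusion / kernelSize;
    return std::pow(ambient, power);
}
```

With half of 64 samples occluded, power = 2 turns the factor 0.5 into 0.25, while power = 0.5 raises it to about 0.71.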

To sum up: the visual effect of SSAO is rather subtle, but in scenes with well-placed lighting it undeniably adds a noticeable touch of realism. It is definitely a valuable tool to have in your arsenal.

Additional resources

- SSAO Tutorial: an excellent tutorial by John Chapman, on which the code of this lesson is based.

- Know your SSAO artifacts: a very valuable article that clearly shows the most pressing SSAO quality problems as well as ways to fix them. Recommended reading.

- SSAO With Depth Reconstruction: a follow-up to OGLDev's main SSAO tutorial. It covers the commonly used technique of reconstructing fragment positions from the depth value. This approach matters because it saves a significant amount of memory: positions no longer need to be stored in the G-buffer. The technique is broadly applicable, not only to SSAO.

PS: We have a Telegram group for coordinating translations. If you seriously want to help with translating, you are welcome!

Source: https://habr.com/ru/post/421385/
