What is an “intuitive interface” in chatbots, virtual assistants, avatars and social robots?

While we are preparing a detailed technical article for you, let's talk about business and trends in the field of smart systems.

Work with big data and “smart systems” is entering our lives very quickly. And, as with any trend, a “bottleneck” is immediately apparent here: the interface. No matter how “smart” the system is, whatever data it works with and however well it works, the result of its work must be understandable to a person, and working with such a system should be convenient for the user. As digital transformation, Big Data and IoT systems are adopted, businesses will explicitly require an ergonomic and maximally intuitive interface for human interaction with these systems. The problem of high-quality interface solutions will become more and more acute, especially where the system is used not by a “specially trained professional” but by an ordinary user.

Then we asked ourselves: what is a “high-quality interface solution”? We began to look for the answer in specific cases, and logic prompted us to look more closely at cases from the recent international conference CES 2018. Here they are:


Yes, this is that same video of the conversation with LG Cloi (but that is far from the only thing that makes the video interesting).

Here are some more examples:

Virtual assistants once again dominate CES 2018

Honda Brings Robotic Devices to CES 2018

There are a lot of cases. First of all, they point to a huge variety of interface solutions, which is rather difficult to put in order. Sometimes it is not easy to find the business component in such solutions: what specific business problems (client tasks) they should solve, and what functionality that should translate into.

Let's look at several points in the report of the well-known analyst firm Gartner, “Top Trends in the Gartner Hype Cycle for Emerging Technologies, 2018”. At the very peak of expectations we see virtual assistants and smart robots. It is also worth paying attention to the report “Gartner Market Guide for Conversational Platforms, 20 June 2018”.

A document from PwC gave us a good example of how to systematize what is happening on the market: “The Relevance and Emotional Lace”, PwC Digital Services, Holobotics Practice.

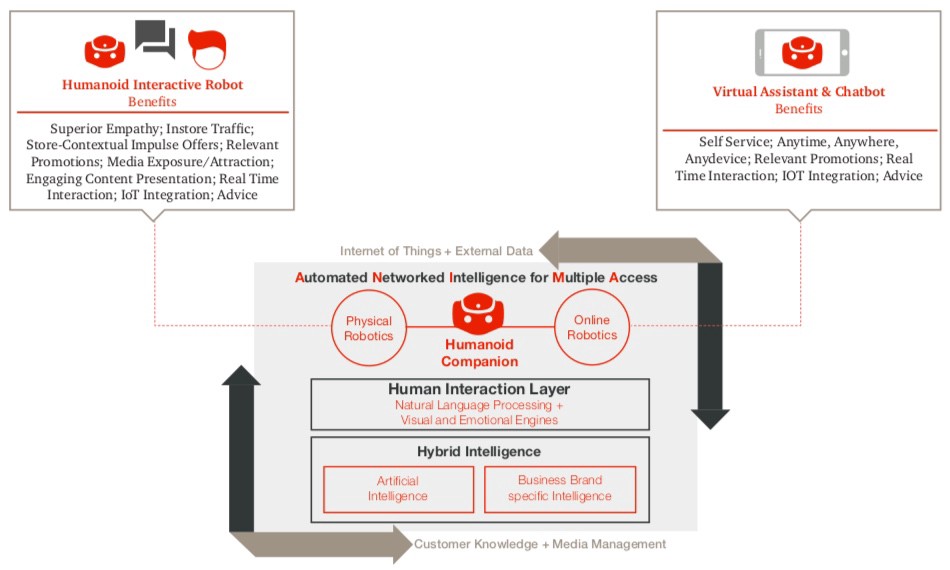

The analysis combines chatbots, virtual assistants and humanoid interactive robots into one type of interface solution: the Humanoid Companion. It then divides this solution into 2 subtypes, Physical Robotics and Online Robotics. Note that these market subsegments, which solve essentially the same task of creating a high-quality and ergonomic interface solution, are currently noticeably separated: they are often handled by different specialized manufacturers and suppliers, covered by different analysts, and so on. The breakdown into subtypes in the PwC document is based on the criterion of “what hardware the solution is built on”: a physical robot, a smart speaker, a computer.

Automated Network Intelligence for Multiple Access. Credit: PwC. Available here.

We propose introducing another criterion which, in our opinion, gives a clearer picture and a more orderly systematization of this type of solution. This criterion is the interaction modality: which modalities are included in the interface solution. Let's consider it in more detail.

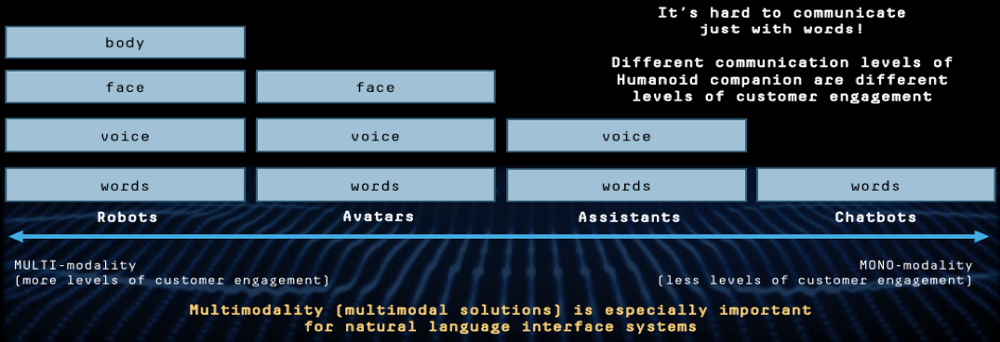

There are 4 main levels / modalities in which interaction that is comfortable and ergonomic for a person takes place: words, voice, face, body. When communicating with each other, we use some of these modalities or all of them at once. This is what we now see in interface systems: a variety of solutions aimed at the same task but differing in which modalities they include.

Credit: Neurodata Lab.

Here are some examples (the companies are taken from Forrester's “The Top 10 Chatbots For Enterprise Customer Service” review):

Chatbot - such solutions use only one modality: words, i.e. text interaction.

Assistant - a virtual assistant adds a second modality: voice, i.e. voice interaction:

- Sometimes it is just a talking chatbot (IPsoft)

- But first of all, these are solutions that can conduct a full-fledged dialogue (Nuance)

Avatar - these are more complex and rarer solutions; for now they can be seen mainly in HR:

- Andi the Interview Coach - Multi-Modal Skype Bot, an interesting solution from Botanic Technologies

- An HR solution from a Russian startup (Vera Robot Recruiter); this is how it looks in action:

There are also some, let's say, intermediate solutions that use different modalities depending on the side of the interaction (for example, the person writes while the assistant speaks and moves, and so on). Some curious examples:

Robot - our beloved humanoid robots are multimodal communicative agents that work directly across all 4 modalities: words, voice, face and body.

Vector by Anki. Credit: Anki.
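The modality criterion described above can be sketched as a small lookup. This is only an illustration under stated assumptions, not a formal taxonomy: the names `Modality`, `SOLUTION_TYPES` and `classify` are hypothetical, the avatar row (words, voice and face) is our reading of the progression rather than something the text spells out, and any combination that matches no row is simply labelled "intermediate".

```python
from enum import Flag, auto


class Modality(Flag):
    """The four interaction modalities named in the article."""
    WORDS = auto()
    VOICE = auto()
    FACE = auto()
    BODY = auto()


# Modality sets per solution type. Chatbot, assistant and robot follow the
# text; the avatar row (words + voice + face) is an assumption.
SOLUTION_TYPES = {
    "chatbot": Modality.WORDS,
    "assistant": Modality.WORDS | Modality.VOICE,
    "avatar": Modality.WORDS | Modality.VOICE | Modality.FACE,
    "robot": Modality.WORDS | Modality.VOICE | Modality.FACE | Modality.BODY,
}


def classify(modalities: Modality) -> str:
    """Return the solution type whose modality set matches exactly.

    Any other combination is reported as "intermediate" (a simplification).
    """
    for name, required in SOLUTION_TYPES.items():
        if modalities == required:
            return name
    return "intermediate"


if __name__ == "__main__":
    print(classify(Modality.WORDS))
    print(classify(Modality.WORDS | Modality.VOICE))
    print(classify(Modality.WORDS | Modality.BODY))
```

A real product may also use different modalities on each side of the dialogue, which a single set per solution does not capture.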

What is especially important: adding new modalities on the one hand opens up completely new opportunities for the system, and on the other hand requires additional technologies. These are new, advanced AI technologies, in particular emotional artificial intelligence, that work together with Natural Language Processing and are focused on interface solutions of this type.

If the article interested you and you would like to receive our new publications directly or get access to a demo stand showing our Emotion AI products at work, you can contact the author of the article:

Olga Serdiukova

Chief Marketing Officer and Partner Development Director

Neurodata Lab LLC ⬝ Email ⬝ LinkedIn

Source: https://habr.com/ru/post/421037/
