KDD 2018, fourth day, stands Nobel laureate

The second day of the main KDD program. There is again a lot of interesting things under the cut: from machine learning on Pinterest to different ways to get to the water pipes. Including the performance of the Nobel laureate in economics - a story about how NASA works with telemetry, and many graph embeddings :)

Market design and computerized marketplace

Not bad performance of the Nobel laureate who worked with Shapley on markets. The market is an artificial thing that people invent. There are so-called commodity markets, when you buy a certain product and you do not care from whom, it is only important at what price (for example, the stock market). And there are matching markets when price is not the only factor (and sometimes there is none at all).

For example, the distribution of children by school. Previously, in the US, the scheme worked like this: parents write out a list of schools by priorities (1, 2, 3, etc.), schools first consider those who have listed them as number 1, sorted by their school criteria and take as much as they can . For those who missed, we take the second school and repeat the procedure. From the point of view of game theory, the scheme is very bad: parents have to behave “strategically”, it is inappropriate to honestly say their preferences - if you don’t get into school 1, by the second round, school 2 may already be filled and you don’t get into it even if your stats are higher than those accepted in the first round. In practice, disrespect for the theory of games results in corruption and internal agreements between parents and schools. Mathematicians suggested another algorithm - “pending adoption”. The basic idea is that the school does not immediately agree, but simply keeps a “ranked list of candidates” “in memory”, and if someone goes beyond the tail of the tail, they immediately receive a refusal. In this case, there is a dominant strategy for parents: first we go to school 1, if at some moment we get rejected, then we go to school 2 and we are not afraid to lose anything - the chances of getting to school 2 are the same as if we went to school right away This scheme was implemented “in production”, though. The results of the A / B test was not told.

')

Another example is kidney transplantation. Unlike many other organs, it is possible to live with one kidney, so often a situation arises that someone is willing to give a kidney to another person, but not abstract, but concrete (in view of personal relationships). However, the likelihood that the donor and the recipient are compatible is very small, and you have to wait for another body. There is an alternative - the exchange of kidneys. If two donor-recipient pairs are incompatible inside but compatible between pairs, then an exchange can be made: 4 simultaneous extraction / implantation operations. For this, the system is already running. And if there is a “free” organ that is not tied to a specific pair, then it can give rise to a whole chain of exchanges (in practice there were chains up to 30 transplantations).

There are a lot of similar markets now: from Uber to the online advertising market, and everything changes very quickly due to computerization. Among other things, “privacy” is changing a lot: as an example, the speaker pointed out a study of one student who showed that in the United States after the elections, the number of visits to Thanksgiving Day has decreased due to inter-state trips with different political views. The study was conducted on an anonymized dataset of the coordinates of the phones, but the author rather easily singled out the phone’s “home” deanonymized dataset.

Separately, the speaker went through technological unemployment. Yes, unmanned cars will deprive many of them (in the US, 6% of jobs are at risk), but they will create new jobs (for auto mechanics). Of course, an elderly driver will not be able to retrain, and for him it will be a strong blow. At such times, you need to concentrate not on how to prevent changes (it will not work), but on how to help people get through them as painlessly as possible. In the middle of the last century, many people lost their jobs during the mechanization of agriculture, but we are glad that half of the population now does not have to go to work in the field? Unfortunately, this is only talk, the speaker didn’t offer any options to mitigate the blow for those on whom technological unemployment is heading ...

And yes, again about faireness. It is impossible to make so that the distribution of the forecast model was the same in all groups, the model will lose its meaning. What can be done, in theory, so that the distribution of ERRORS of the first and second kind is the same for all groups? This already looks much more sensible, but how to achieve this in practice is not clear. Gave a link to an interesting article on legal practice - in the United States, the judge decides whether to release bail based on ML prediction .

Recommenders I

He got confused in the schedule and came to the wrong speech, but still in the topic - the first block on recommendation systems.

Leveraging Meta-path based Context mechanism

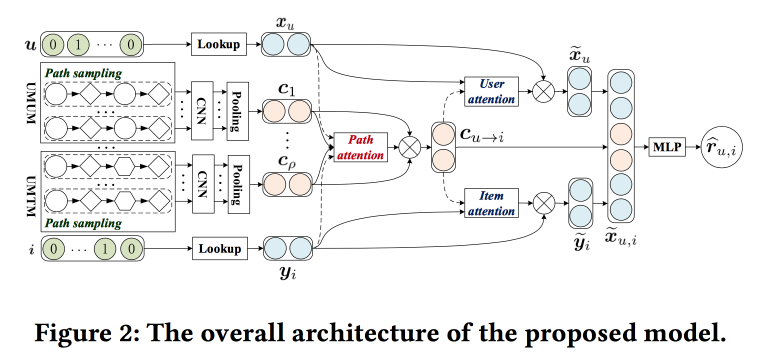

The guys are trying to improve the recommendations by analyzing the paths in the graph. The idea is quite simple. There is a “classic” neural network recommender with embeddings for objects and users and a fully connected part from above. There are recommendations on the graph, including with neural network wraps. Let's try to combine it all in one mechanism. Let's start by building a “meta graph” that unites users, movies and attributes (actor / director / genre, etc.), sample a number of paths on the random walk-om box, feed a convolutional network at the input, add the user's embeddings to the side and object, and above we will hang attention (here is a little tricky, with its own characteristics for different branches). To get the final answer, we will install a perceptron with two hidden layers from above.

Consumer Internet Applications

During the break between the presentations, I move to the speech I initially wanted: here are invited speakers from LinkedIn, Pinterest and Amazon. All girls and all heads of DS-departments.

Neraline Contextual Recommentations for Active Communities LinkedIn

The point is to stimulate the development of communities and their activation in LinkedIn. Half missed the development, the last recommendation: to exploit local patterns. For example, in India, students often after graduation try to contact graduates of the same university from previous courses with a decorated career. LinkedIn takes this into account when building and when issuing recommendations.

But simply creating a community is not enough, it is necessary that there was an activity: users published content, received and gave feedback. Show the correlation of the received feedback with the number of publications in the future. Show how the information cascades spread over the graph. But what if some node is not involved in the cascade? Send notification!

Then there were quite a lot of calls with yesterday's story about working with notifications and tape. Here, they also use the multipurpose optimization approach “maximize one of the metrics while keeping the others within certain limits”. In order to control the load, we introduced our system “Air Trafic Control”, which limits the load on notifications to the user (they were able to reduce formal replies and complaints by 20% without dropping engagement). ATC decides whether the user can push or not, and another push is preparing this push called Concourse, which is streaming (like us, on Samza !). It was about her many told yesterday. Concourse has an offline partner named Beehive, but gradually more and more goes into streaming.

We noted a few more points:

- Important dedupilation, and quality, given the presence of many channels and content.

- It is important to have a platform. Moreover, they have a dedicated team of the platform, and programmers work there.

Pinterest Approach to Machine learning

Now the representative of Pinterest speaks and talks about two big tasks where ML - tape (homefeed) and search are used. The speaker immediately says that the final product is the result of the work not only of the data scientist, but also of the ML engineers, and programmers — all of them have been assigned to people.

The tape (the situation when there is no user intention) is built according to the following model:

- We understand the user - we use information from the profile, the graph, interaction with the pins (what I saw that I drank), we build embeddings according to the behavior and attributes.

- We understand the content - we look at it in all aspects: visual, textual, who is the author, which boards it falls on, who reacts. It is very important to remember that people in one picture often see different things: someone has a blue accent in design, someone has a fireplace, and someone has a kitchen.

- Putting it all together - a three-step procedure: we generate candidates (recommendations + subscriptions), personalize (using the ranking model), blend with policies and business rules.

For recommendations, use a random walk by user-board-pin graph, implement PinSage, which was spoken about yesterday . Personalization has evolved from sorting by time, through a linear model and GBDT to a neural network (since 2017). When collecting the final list, it is important not to forget about the business rules: freshness, variety, additional filters. We started with heuristics, now they are moving towards the optimization model of the context as a whole relative to the goals.

In the search situation (when the intention is there), they move a little differently: they try to better understand the intention. To do this, use the techniques of query understanding and query expansion, and the expansion is made not just by autocompletion, but through beautiful visual navigation. Use different techniques for working with pictures and texts. We started in 2014 still without deep learning, launched Visual Search with deep learning in 2015, added object detection with semantic analysis and search in 2016, recently launched the Lens service - you point the smartphone camera at an object and get pins. In deep learning, multi task is actively used: there is a common block that builds embedding of a picture. and on top of other networks to solve different problems.

In addition to these tasks, ML is used a lot more where: notifications / advertising / spam / forecasting, etc.

A little about the lessons learned:

- We must remember about the bass, one of the most dangerous "rich get richer" (the tendency of machine learning to pour traffic into the already popular objects).

- Be sure to test and monitor: the introduction of the grid at first strongly collapsed all the indicators, then it turned out that because of a bug, the distribution of features drifted long ago and voids appeared online.

- The infrastructure and the platform are very important, special emphasis on convenience and parallelization of experiments, but we must also be able to cut off experiments offline.

- Metrics and understanding: offline does not guarantee online, but we make tools for interpreting models.

- Building a sustainable ecosystem: about the garbage filter and clickbate, be sure to add negative feedback to the MD and the model.

- Don't forget that there is a layer for embedding business rules.

Broad Knowledge Graph by Amazon

Now comes the girl from Amazon.

There are knowledge graphs — entity nodes, edge attributes, etc. — that are built automatically, for example, by Wikipedia. They help to solve many problems. We would like to get a similar thing for products, but there are a lot of problems with it: there is no structured data at the entrance, the products are dynamic, there are many different aspects that do not fall on the knowledge graph model (arguably, in my opinion, rather “do not fall without serious complication of the structure "), There are a lot of verticals and" not named entities ". When the concept was “sold” to the management and received good, the developers said that it was “a project for a hundred years,” and finally managed in 15 person-months.

We started with extracting entities from the Amazon catalog: there is no structure here, although it is crowdsourced and dirty. Next hooked up OpenTag (explained in more detail yesterday) for word processing. And the third component was Ceres - a tool for parsing from the web, taking into account the DOM tree. The idea is that by annotating one of the pages of the site, you can easily parse the rest - after all, all generated by the template (but there are many nuances). To do this, they used the Vertex markup system (bought by Amazon in 2011) - they make markup, use the xpath set to isolate attributes, and determine which ones are applicable to a specific page using logistic regression. To merge information from different sites, use random forest. They also use active learning, complex pages are sent to manual layout. In the end, they make supervised knowledge cleaning - a simple classifier, for example, a brand / not a brand.

Next, a little for life. They identify two types of targets. Roofshots are the short-term goals that we achieve and we are moving the product, and Moonshots, which we are expanding and holding global leadership.

Embeddings and Representations

After lunch, I went to the section on how to build embeddings, mainly for graphs.

Finding Similar Exercises with a Unified Semantic Representation

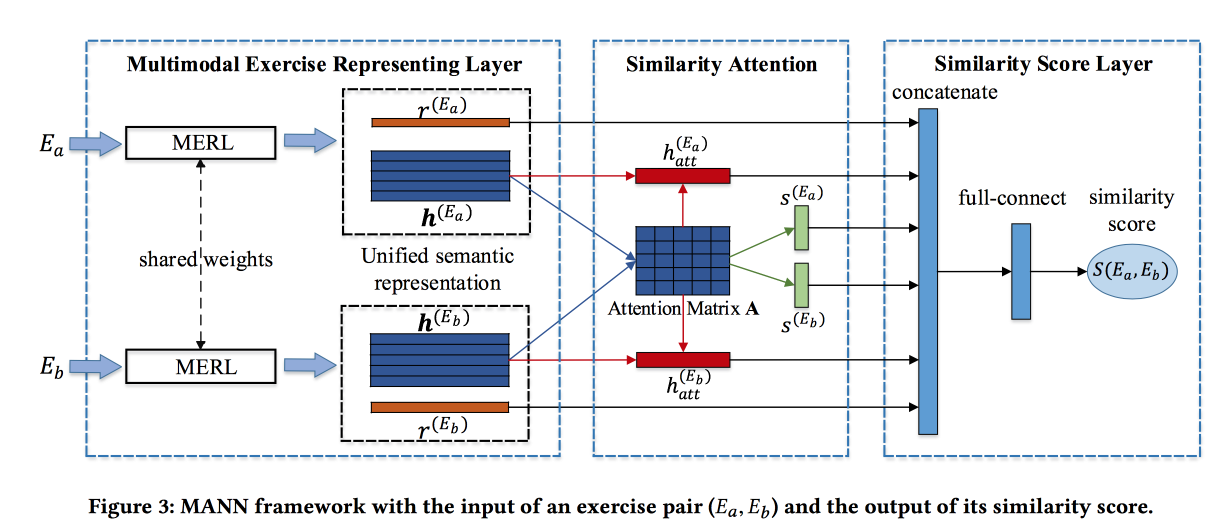

The guys solve the problem of finding similar tasks in some Chinese online learning system. Tasks are described by text, pictures, and a set of related concepts. Developer input - Blend information from these sources. For pictures they make a rollout, for concepts, embeddings are trained, for words too. Word embedings are transmitted to Attention-based LSTM along with information about concepts and pictures. Get some representation of the assignment.

The block described above is turned into a Siamese network, in which attention is also added at the output of the similarity score.

They teach on a marked dataset of 100 thousand exercises and 400 thousand pairs (only 1.5 million exercises). Add hard negative, sampling exercises with the same concepts. The attention matrices can then be used to interpret similarity.

Arbitrary-Order Proximity Preserved Network Embedding

The guys are building a very interesting variant of embeddings for graphs. First, methods based on walks and on the basis of neighbors are criticized for focusing on the “proximity” of a certain level (corresponding to the length of the walk). They also offer a method that takes into account the proximity of the desired order, and with controlled weights.

The idea is very simple. Let's take a polynomial function and apply to the adjacency matrix of the graph, and factor the result by SVD. In this case, the degree of a particular member of a polynomial is the level of proximity, and the weight of this member is the effect of this level on the result. Naturally, this wild idea is not realizable: after building the adjacency matrix into a power, it becomes compacted, does not fit into the memory, and figures factor this.

Without mathematics, it's a mess, because if a polynomial function is applied to the result AFTER decomposition, we get exactly the same thing as if we applied the decomposition to a large matrix. Not really, really. We consider SVD approximately and leave only the uppermost eigenvalues, but after applying a polynomial, the order of the eigenvalues can change, so you need to take numbers with a margin.

The algorithm impresses with its simplicity and shows stunning results in the problem of link prediction.

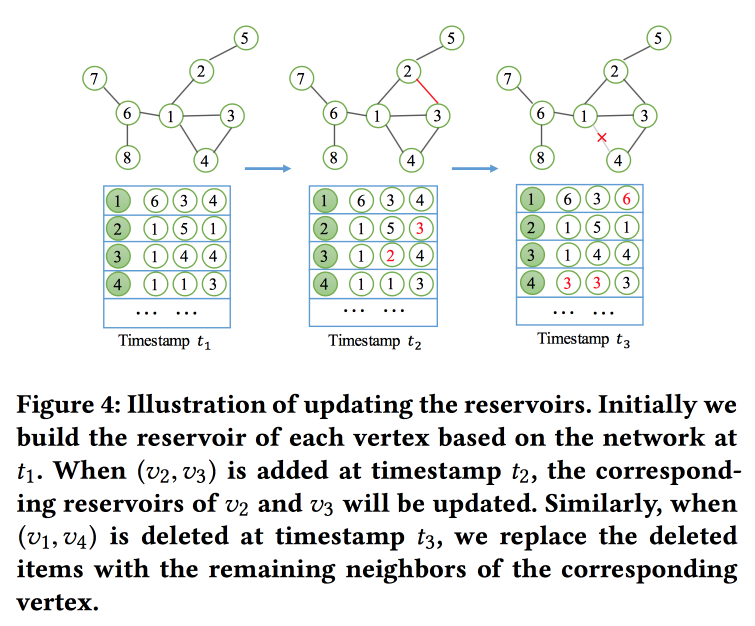

NetWalk: A Flexible Deep Embedding Approach for Dynamic Networks

As the name implies, we will build graph embedings based on walks. But it is not easy, but in streaming mode, since we are solving the problem of finding anomalies in dynamic networks (the work on this topic was yesterday). To quickly read and update embeddings, the concept of a “ reservoir ” is used, in which the count's sample lies and is stochastically updated as changes arrive.

For training, they formulate a rather complicated task with several goals, the main ones being the proximity of embeddings for the nodes in one way and the minimum error when the network is restored by the autoencoder.

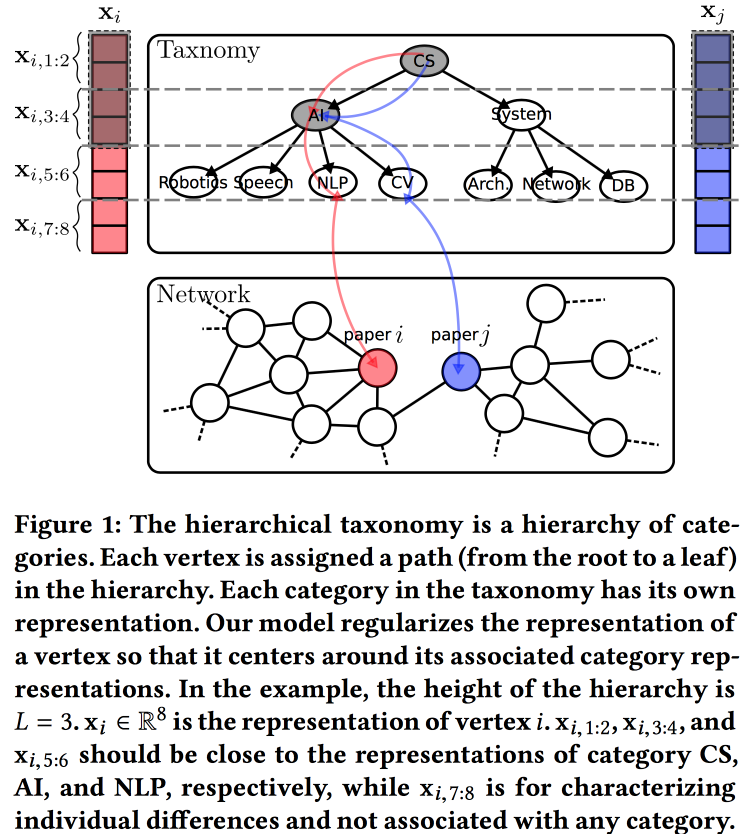

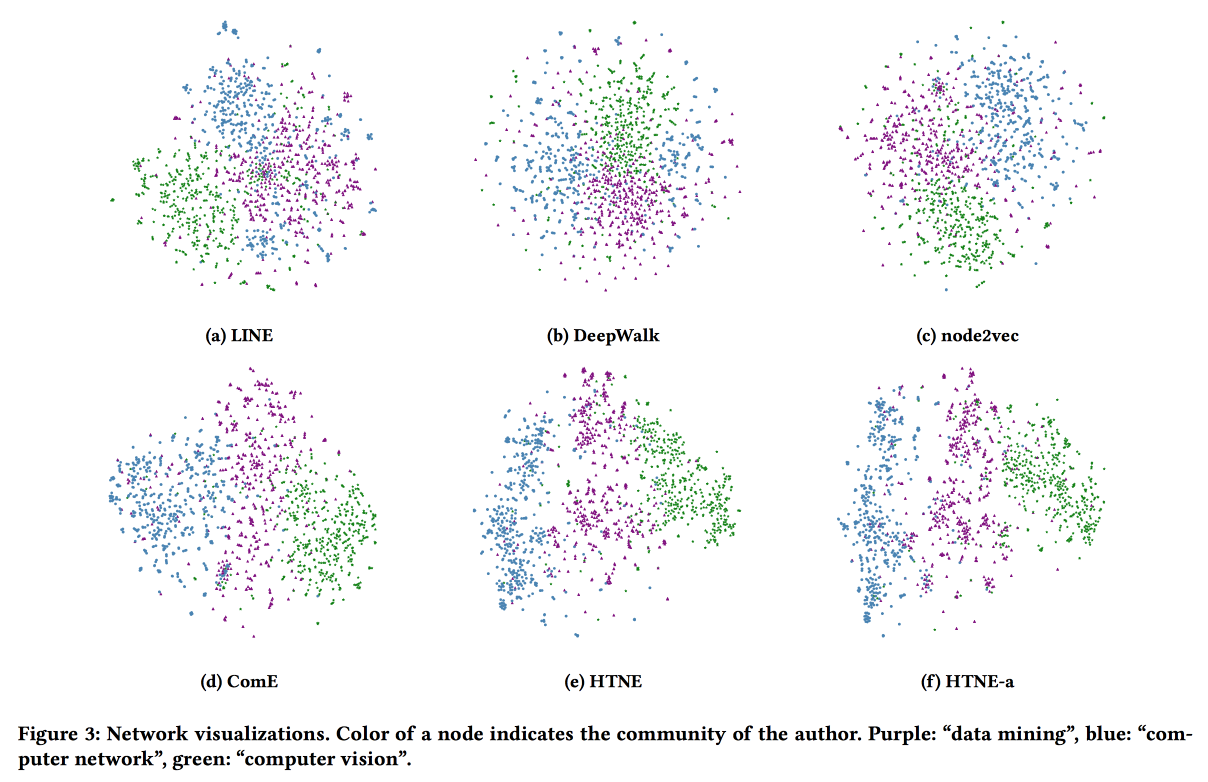

Hierarchical Taxonomy Aware Network Embedding

Another way to build embeddings for the graph, this time based on a probabilistic generating model. The quality of embeddings is improved by using information from a hierarchical taxonomy (for example, areas of knowledge for citation networks or product categories for products in e-tail). We generate the generation process according to some “tops”, some of which are tied to nodes in the taxonomy, and some - to a specific node.

We associate with the taxonomy parameters an a priori normal distribution with zero mean, the parameters of a particular vertex in taxonomy are the normal distribution with an average equal to the taxonomy parameter, and the free parameters of the vertex are with the normal distribution with zero mean and infinite variance. The vertex environment is generated using the Bernoulli distribution, where the probability of success is proportional to the proximity of the parameters of the nodes. We optimize this whole machine with the EM algorithm .

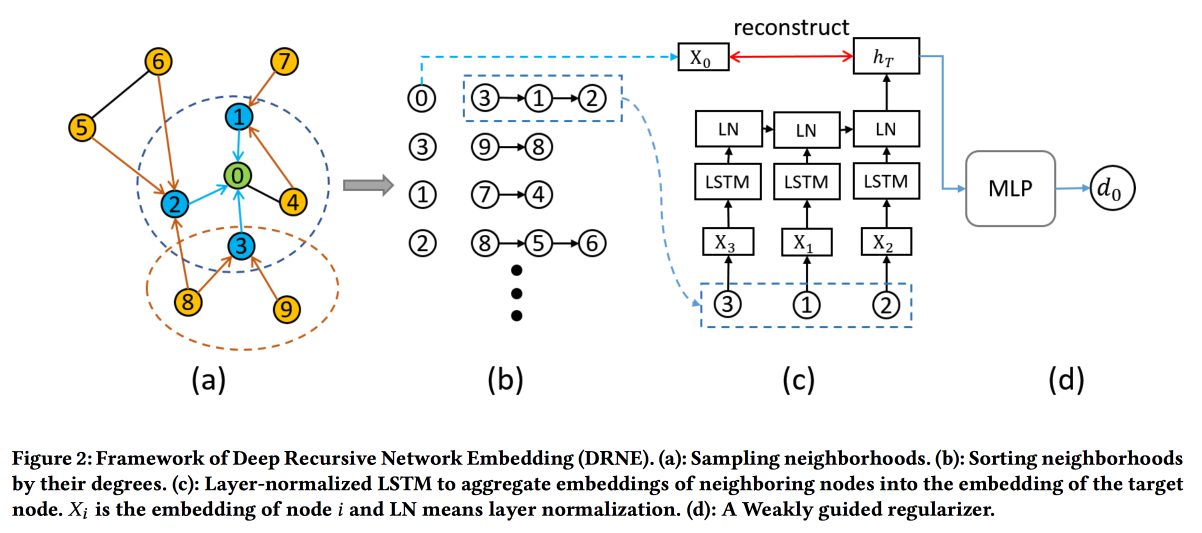

Deep Recursive Network Embedding with Regular Equivalence

Common methods for building embeddings do not work for all tasks. For example, consider the task "the role of the node." To determine the role, it is not the specific neighbors (which are usually viewed) that are important, but the structure of the graph in the neighborhood of the vertex and some patterns in it. At the same time, it is very difficult algorithmically to find these patterns (regular equivalence) algorithmically, but for large graphs it is unrealistic.

So let's go the other way . For each node, we calculate the parameters associated with its graph: degree, density, different centralities, etc. On them, embeddings cannot be built, but recursion can be used, since the presence of the same pattern implies that the attributes of the neighbors of two nodes with the same role should be similar. So, you can stack more layers.

, base line- DeepWalk node2wek .

Embedding Temporal Network via Neighborhood Formation

. : , . , — .

Hawkes Process , . HP . attention . log likelihood . .

Safety

. , . , ML , , .

Using Machine Learning to Assess the Risk of and Prevent Water Main Breaks

: , , — . , . , ( - , ), 1-2 % . , .

data miner- Data Science for Social Good . , , :

, . : , GBDT. -1 % .

base line-: « » , , « , » . ML, , .

27 32- , , , ( , — ). , $1,2 .

, , , 1940-, , ( ) .

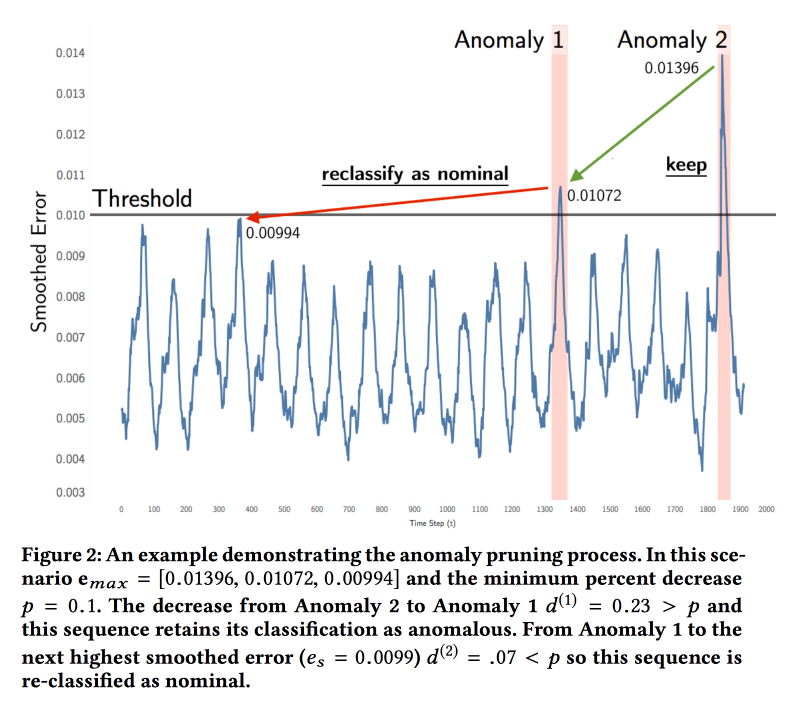

Detecting Spacecraft Anomalies Using LSTMs and Nonparametric Dynamic Thresholding

NASA ( ). — . , . , .

ML . LSTM , . ( , ). , , . , . , .

: soil moisture active passive Curiosity c Mars Science Laboratory. 122 , 80 %. , , . , , .

Explaining Aviation Safety Incidents Using Deep Temporal Multiple Instance Learning

, , . Safety Incidents, , . , . .

, - , . «», .. , . , , . , , .

GRU , Multiple Instances Learning . , «» — , . « , , — » ( = ). max pooling .

cross entropy loss . base line MI-SVM ADOPT.

ActiveRemediation: The Search for Lead Pipes in Flint, Michigan

, , .

. 120 . , 2013 , : . , 2014-. 2015- — . , . , …

— , . , . .

. «», . : , , , . , , — , …

6 . , 20 %. data scientist-.

, 19 , , , . , « ». , , XGBoost - . ( 7 % , ).

, -, , , — . « » .

, , 16 % 3 %. , , — Excel .

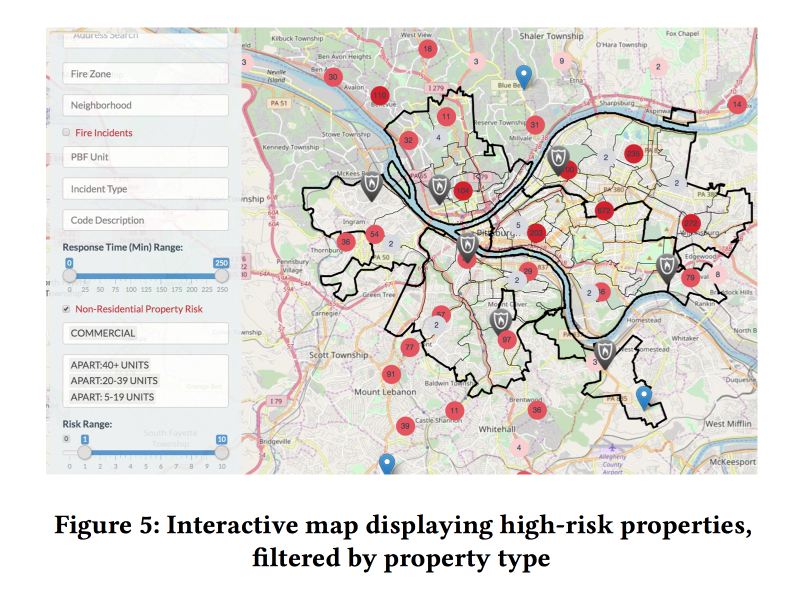

A Dynamic Pipeline for Spatio-Temporal Fire Risk Prediction

— . , , , 2018-. . , , .

, . - -, . , .

, , , .., , . .

XGBoost-: . , , .

( , /) , , . , , .

. (, ). — ( Fairness, ).

Source: https://habr.com/ru/post/420997/

All Articles